generative-ai-use-cases

Application implementation with business use cases for safely utilizing generative AI in business operations

Stars: 1284

Generative AI Use Cases (GenU) is an application that provides a well-architected implementation of business use cases for utilizing generative AI in business operations. It offers a variety of standard generative AI use cases, such as chat, text generation, summarization, meeting minutes generation, writing assistance, translation, web content extraction, image generation, video generation, video analysis, diagram generation, voice chat, RAG, custom agent creation, and custom use case building. With GenU, users can try out generative AI use cases, use RAG, run custom agents, and create their own use cases.

README:

Well-architected application implementation with business use cases for utilizing generative AI in business operations

[!IMPORTANT] GenU has supported multiple languages since v4.

Japanese documentation is available here.

Here we introduce GenU's features and options by usage pattern. For comprehensive deployment options, please refer to this document.


I want to experience generative AI use cases

GenU provides a variety of standard use cases leveraging generative AI. These use cases can serve as seeds for ideas on how to utilize generative AI in business operations, or they can be applied to business directly as-is. We plan to keep adding more refined use cases. Use cases you don't need can be hidden with a configuration option. The following use cases are provided by default.

| Use Case | Description |
|---|---|
| Chat | You can interact with large language models (LLMs) in a chat format. A platform for direct dialogue with LLMs lets you respond quickly to specific or new use cases. It's also effective as a testing environment for prompt engineering. |
| Text Generation | Generating text in any context is one of the tasks LLMs excel at. It can generate all kinds of text, including articles, reports, and emails. |
| Summarization | LLMs are good at summarizing large amounts of text. Beyond simple summarization, they can also extract necessary information conversationally once given text as context. For example, after giving it a contract, you can ask questions like "What are the conditions for XXX?" or "What is the amount for YYY?" |
| Meeting Minutes | Automatically generate meeting minutes from audio recordings or real-time transcription. Choose from Transcription, News Paper, or FAQ style with no prompt engineering required. |
| Writing | LLMs can suggest improvements from a more objective perspective, considering not only typos but also the flow and content of the text. Having the LLM check for points you might have missed before showing your work to others can improve its quality. |
| Translation | LLMs trained on multiple languages can perform translation. Beyond simple translation, they can take specified context, such as the level of formality and the target audience, into account. |
| Web Content Extraction | Extracts necessary information from web content such as blogs and documents. The LLM removes unnecessary information and formats it into well-structured text. Extracted content can be used in other use cases such as summarization and translation. |
| Image Generation | Image generation AI can create new images from text or existing images. It allows ideas to be visualized immediately, potentially making design work more efficient. In this feature, LLMs can assist in writing prompts. |
| Video Generation | Video generation AI creates short videos from text. The generated videos can be used as materials in various scenarios. |
| Video Analysis | With multimodal models, you can input not only text but also images. In this feature, you can ask the LLM to analyze video frames together with text input. |
| Diagram Generation | Diagram generation visualizes text and content on any topic using the most suitable type of diagram. Because diagrams are created from text, even non-programmers and non-designers can efficiently create flowcharts and other diagrams. |
| Voice Chat | Voice Chat lets you have a bidirectional voice conversation with generative AI. As in natural conversation, you can interrupt and speak while the AI is talking. By setting a system prompt, you can also have voice conversations with an AI that plays a specific role. |

I want to do RAG

RAG (Retrieval-Augmented Generation) is a technique that lets LLMs answer questions they normally couldn't by supplying external, up-to-date information or domain knowledge that LLMs typically lack. PDF, Word, Excel, and other files accumulated within your organization can serve as information sources. Because answers are restricted to the retrieved evidence, RAG also helps keep LLMs from giving "plausible but incorrect information."
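To make the retrieve-then-answer idea concrete, here is a minimal TypeScript sketch. It is not GenU's implementation: GenU delegates retrieval to Amazon Kendra or a Knowledge Base, whereas this sketch swaps in naive keyword-overlap scoring so it runs without any service. The `Doc` type, `retrieve`, and `buildGroundedPrompt` are hypothetical names.

```typescript
// Hypothetical document type for this sketch (not a GenU type).
interface Doc {
  id: string;
  text: string;
}

// Lowercase word tokens, deduplicated.
function tokenize(text: string): string[] {
  const tokens: string[] = text.toLowerCase().match(/[a-z0-9]+/g) ?? [];
  return tokens.filter((t, i) => tokens.indexOf(t) === i);
}

// Naive retrieval: score each document by how many query terms it contains
// and keep the top k documents with a non-zero score.
function retrieve(query: string, docs: Doc[], k: number): Doc[] {
  const queryTerms = tokenize(query);
  return docs
    .map((doc) => {
      const docTerms = tokenize(doc.text);
      const score = queryTerms.filter((t) => docTerms.indexOf(t) !== -1).length;
      return { doc, score };
    })
    .filter((scored) => scored.score > 0)
    .sort((a, b) => b.score - a.score)
    .slice(0, k)
    .map((scored) => scored.doc);
}

// Build a prompt that tells the model to answer only from the retrieved sources.
function buildGroundedPrompt(query: string, sources: Doc[]): string {
  const context = sources
    .map((d) => `<source id="${d.id}">\n${d.text}\n</source>`)
    .join("\n");
  return [
    "Answer the question using only the sources below.",
    "If the sources do not contain the answer, reply that you do not know.",
    context,
    `Question: ${query}`,
  ].join("\n\n");
}

// Example: only the expense-policy document shares terms with the question.
const docs: Doc[] = [
  { id: "expense-policy", text: "Expense reports are due on the 5th of each month." },
  { id: "cafeteria", text: "The cafeteria opens at 8 am." },
];
const question = "When are expense reports due?";
console.log(buildGroundedPrompt(question, retrieve(question, docs, 3)));
```

In a real deployment the retrieval step would be a semantic or vector search service; the instruction to answer only from the supplied sources is what ties the answer to evidence.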

GenU provides a RAG Chat use case. Two types of information sources are available for RAG Chat: Amazon Kendra and Knowledge Base. When using Amazon Kendra, you can use existing, manually created S3 buckets or Kendra indexes as-is. When using Knowledge Base, advanced RAG features such as Advanced Parsing, Chunk Strategy Selection, Query Decomposition, and Reranking are available. Knowledge Base also supports metadata filter settings, which let you meet requirements such as "switching accessible data sources by organization" or "allowing users to set filters from the UI."
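A metadata filter is a structured condition sent with each retrieval request. The TypeScript sketch below builds one. The local types mirror a subset of the RetrievalFilter shape accepted by the Amazon Bedrock Knowledge Bases Retrieve API (only `equals` and `andAll` are modeled); they are declared locally so the sketch runs without the AWS SDK. The `department` and `year` attributes are hypothetical metadata keys you would have attached to your documents, and how GenU itself maps users to filters is not shown.

```typescript
// Local mirror of a subset of the Retrieve API's filter shape (assumption:
// only the equals and andAll operators are modeled here).
interface FilterAttribute {
  key: string;
  value: string | number | boolean;
}
type RetrievalFilter =
  | { equals: FilterAttribute }
  | { andAll: RetrievalFilter[] };

// Hypothetical rule: each user may only search documents tagged with their department.
function departmentFilter(department: string): RetrievalFilter {
  return { equals: { key: "department", value: department } };
}

// Combine the mandatory filter with any filters the user picked in the UI.
// andAll expects at least two conditions, so a lone filter is returned unchanged.
function combineFilters(required: RetrievalFilter, userFilters: RetrievalFilter[]): RetrievalFilter {
  const all = [required].concat(userFilters);
  return all.length === 1 ? all[0] : { andAll: all };
}

// Shape of the retrievalConfiguration field of a Retrieve request.
function retrievalConfiguration(filter: RetrievalFilter, numberOfResults = 5) {
  return { vectorSearchConfiguration: { numberOfResults, filter } };
}

const filter = combineFilters(departmentFilter("sales"), [
  { equals: { key: "year", value: 2024 } },
]);
console.log(JSON.stringify(retrievalConfiguration(filter), null, 2));
```

Keeping the mandatory department condition separate from the UI-selected ones means a user can narrow results further but never widen them past their organization.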

You can also build RAG that references data outside AWS by enabling MCP chat and adding an external service's MCP server to packages/cdk/mcp-api/mcp.json.
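As a hedged sketch, the TypeScript below adds a server entry to an MCP configuration object. It assumes mcp.json follows the widely used `mcpServers` layout (server name mapped to a `command`, its `args`, and optional `env`); check the file in packages/cdk/mcp-api before relying on that. The server name and package in the example are hypothetical.

```typescript
// Assumed shape of one server entry in the common "mcpServers" layout.
interface McpServerEntry {
  command: string;
  args: string[];
  env?: { [name: string]: string };
}
interface McpConfig {
  mcpServers: { [name: string]: McpServerEntry };
}

// Return a new config with one server added; refuse to silently overwrite a name.
function addMcpServer(config: McpConfig, name: string, entry: McpServerEntry): McpConfig {
  if (Object.prototype.hasOwnProperty.call(config.mcpServers, name)) {
    throw new Error(`MCP server "${name}" is already defined`);
  }
  return { mcpServers: { ...config.mcpServers, [name]: entry } };
}

// Hypothetical external service exposed through an MCP server started with uvx.
const updated = addMcpServer({ mcpServers: {} }, "example-wiki", {
  command: "uvx",
  args: ["example-wiki-mcp-server"],
});
// The result is what you would write back to mcp.json.
console.log(JSON.stringify(updated, null, 2));
```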

I want to use custom Bedrock Agents or AgentCore or Bedrock Flows within my organization

When you enable agents in GenU, a Web Search Agent and a Code Interpreter Agent are created. The Web Search Agent searches the web for information to answer user questions; for example, it can answer "What is AWS GenU?" The Code Interpreter Agent can execute code to respond to user requests, such as "Draw a scatter plot with some dummy data."

While Web Search Agent and Code Interpreter Agent are basic agents, you might want to use more practical agents tailored to your business needs. GenU provides a feature to import agents that you've created manually or with other assets.
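Assuming imported agents are listed in GenU's deployment configuration, each entry is modeled below with `displayName`, `agentId`, and `aliasId` fields; confirm the actual keys in GenU's deployment options document. The validator is a hypothetical pre-deployment check written in TypeScript, not part of GenU.

```typescript
// Assumed shape of one imported Bedrock Agent entry.
interface ImportedAgent {
  displayName: string;
  agentId: string;
  aliasId: string;
}

// Collect every problem instead of stopping at the first one.
function validateAgents(agents: ImportedAgent[]): string[] {
  const errors: string[] = [];
  const seenNames: string[] = [];
  agents.forEach((agent, i) => {
    if (!agent.displayName.trim()) errors.push(`agents[${i}]: displayName is empty`);
    if (!agent.agentId.trim()) errors.push(`agents[${i}]: agentId is empty`);
    if (!agent.aliasId.trim()) errors.push(`agents[${i}]: aliasId is empty`);
    // Duplicate display names would be ambiguous for users choosing an agent.
    if (seenNames.indexOf(agent.displayName) !== -1) {
      errors.push(`agents[${i}]: duplicate displayName "${agent.displayName}"`);
    }
    seenNames.push(agent.displayName);
  });
  return errors;
}

// Placeholder IDs for illustration only.
console.log(
  validateAgents([
    { displayName: "Contract Reviewer", agentId: "AGENT_ID_1", aliasId: "ALIAS_ID_1" },
    { displayName: "Contract Reviewer", agentId: "", aliasId: "ALIAS_ID_2" }
  ])
);
```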

By using GenU as a platform for agent utilization, you can leverage GenU's rich security options and SAML authentication to spread practical agents within your organization. Additionally, you can hide unnecessary standard use cases or display agents inline to use GenU as a more agent-focused platform.

Similarly, GenU can import AgentCore Runtime agents and Bedrock Flows, so please make use of these features as well.

Additionally, you can create agents that perform actions on services outside AWS by enabling MCP chat and adding external MCP servers to packages/cdk/mcp-api/mcp.json.

I want to create custom use cases

GenU provides a feature called "Use Case Builder" that allows you to create custom use cases by describing prompt templates in natural language.

Custom use case screens are generated automatically from the prompt template alone, so no changes to GenU's code are required.
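The idea that a screen can be derived from a prompt template alone can be sketched in TypeScript: scan the template for placeholders, render one input per placeholder, then substitute the user's input before calling the model. The `{{text:Label}}` syntax here is an assumption modeled loosely on Use Case Builder templates and may not match its actual syntax; `extractFields` and `fillTemplate` are hypothetical helpers.

```typescript
// Hypothetical placeholder syntax: {{text:Label}} marks a text input named "Label".
function placeholderPattern(): RegExp {
  return /\{\{text:([^}]+)\}\}/g;
}

// Distinct input labels in order of first appearance; each would become one form field.
function extractFields(template: string): string[] {
  const re = placeholderPattern();
  const fields: string[] = [];
  let match: RegExpExecArray | null;
  while ((match = re.exec(template)) !== null) {
    if (fields.indexOf(match[1]) === -1) fields.push(match[1]);
  }
  return fields;
}

// Replace every placeholder with the user's input; a missing value is an error.
function fillTemplate(template: string, values: { [label: string]: string }): string {
  return template.replace(placeholderPattern(), (_whole: string, label: string) => {
    if (!Object.prototype.hasOwnProperty.call(values, label)) {
      throw new Error(`Missing value for "${label}"`);
    }
    return values[label];
  });
}

const template =
  "Review the following {{text:Language}} code and list any bugs.\n{{text:Source code}}";
console.log(extractFields(template)); // the two labels, in order
console.log(fillTemplate(template, { Language: "Go", "Source code": "func main() {}" }));
```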

Created use cases can be shared with all users who can log into the application, not just for personal use.

Use Case Builder can be disabled if not needed.

Use cases can also be exported as .json files and shared with third parties. When sharing use cases, please be careful not to include any confidential information in prompts or usage examples. Use cases shared by third parties can be imported by uploading the .json file from the new use case creation screen.
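Because exported .json files may leave your organization, a pre-export check can catch obvious leaks. The TypeScript sketch below assumes a simplified, hypothetical export shape (GenU's real format may differ) and flags only two easy-to-detect patterns, email addresses and AWS access key IDs; it is no substitute for reviewing the prompt and examples yourself.

```typescript
// Simplified, hypothetical export shape used only for this sketch.
interface SharedUseCase {
  title: string;
  promptTemplate: string;
  examples: { title: string; value: string }[];
}

// Deliberately simple patterns; they catch obvious cases only.
const SENSITIVE_PATTERNS: { name: string; re: RegExp }[] = [
  { name: "email address", re: /[\w.+-]+@[\w-]+\.[\w.-]+/ },
  { name: "AWS access key ID", re: /\b(AKIA|ASIA)[0-9A-Z]{16}\b/ },
];

// Return one warning for each field/pattern match.
function findSensitive(useCase: SharedUseCase): string[] {
  const fields: [string, string][] = [
    ["title", useCase.title],
    ["promptTemplate", useCase.promptTemplate],
  ];
  useCase.examples.forEach((ex, i) => {
    fields.push([`examples[${i}].title`, ex.title]);
    fields.push([`examples[${i}].value`, ex.value]);
  });
  const warnings: string[] = [];
  for (const [field, text] of fields) {
    for (const p of SENSITIVE_PATTERNS) {
      if (p.re.test(text)) warnings.push(`${field} looks like it contains an ${p.name}`);
    }
  }
  return warnings;
}

// Serialize only when no warnings were found.
function exportUseCase(useCase: SharedUseCase): string {
  const warnings = findSensitive(useCase);
  if (warnings.length > 0) throw new Error(warnings.join("; "));
  return JSON.stringify(useCase, null, 2);
}

const draft: SharedUseCase = {
  title: "Code review bot",
  promptTemplate: "Review the code below. Send questions to alice@example.com.",
  examples: [],
};
console.log(findSensitive(draft));
```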

For more details about Use Case Builder, please check this blog.

While Use Case Builder can create use cases where you input text into forms or attach files, depending on your requirements, a chat UI might be more suitable.

In such cases, please utilize the system prompt saving feature of the "Chat" use case.

By saving system prompts, you can create business-necessary "bots" with just one click.

For example, you can create "a bot that thoroughly reviews source code when input" or "a bot that extracts email addresses from input content."

Additionally, chat conversation histories can be shared with logged-in users, and system prompts can be imported from shared conversation histories.
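Saving a system prompt is what turns a general chat into a one-click bot, and importing from a shared conversation amounts to copying that prompt. The TypeScript sketch below shows the idea with a generic role/content message shape and an in-memory store; both are assumptions for illustration, not GenU's internal types or storage.

```typescript
// Generic chat message shape (an assumption for this sketch).
interface Message {
  role: "system" | "user" | "assistant";
  content: string;
}

// In-memory store of saved system prompts, keyed by bot name (illustrative only).
const savedPrompts: { [botName: string]: string } = {};

// Copy the system prompt out of a shared conversation and save it under a new name.
function importSystemPrompt(shared: Message[], saveAs: string): void {
  const system = shared.filter((m) => m.role === "system")[0];
  if (!system) throw new Error("The shared conversation has no system prompt");
  savedPrompts[saveAs] = system.content;
}

// One click: start a new conversation that begins with the saved system prompt.
function startConversation(botName: string, userInput: string): Message[] {
  if (!Object.prototype.hasOwnProperty.call(savedPrompts, botName)) {
    throw new Error(`No saved system prompt named "${botName}"`);
  }
  return [
    { role: "system", content: savedPrompts[botName] },
    { role: "user", content: userInput },
  ];
}

importSystemPrompt(
  [
    { role: "system", content: "Extract every email address from the input as a bulleted list." },
    { role: "user", content: "Sample input" }
  ],
  "Email extractor"
);
console.log(startConversation("Email extractor", "Contact bob@example.com or carol@example.org."));
```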

Since GenU is OSS, you can also customize it to add your own use cases.

In that case, watch out for merge conflicts with GenU's main branch.

[!IMPORTANT] Please enable the modelIds (text generation), imageGenerationModelIds (image generation), and videoGenerationModelIds (video generation) in the modelRegion region listed in /packages/cdk/cdk.json (Amazon Bedrock Model access screen).
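To see at a glance which models need access in which region, a small TypeScript helper can read the relevant part of cdk.json. It assumes the three lists live under `context` next to `modelRegion`, and that an entry is either a model ID string or an object with `modelId` and an optional `region` (for models served from another region); verify both against your GenU version. The model IDs in the example are placeholders.

```typescript
// Assumed layout of the relevant part of packages/cdk/cdk.json.
type ModelEntry = string | { modelId: string; region?: string };
interface CdkJson {
  context: {
    modelRegion: string;
    modelIds?: ModelEntry[];
    imageGenerationModelIds?: ModelEntry[];
    videoGenerationModelIds?: ModelEntry[];
  };
}

// Group every configured model ID by the region where its access must be enabled.
function modelsToEnable(cdk: CdkJson): { [region: string]: string[] } {
  const ctx = cdk.context;
  const entries: ModelEntry[] = ([] as ModelEntry[]).concat(
    ctx.modelIds ?? [],
    ctx.imageGenerationModelIds ?? [],
    ctx.videoGenerationModelIds ?? []
  );
  const byRegion: { [region: string]: string[] } = {};
  for (const entry of entries) {
    const modelId = typeof entry === "string" ? entry : entry.modelId;
    // String entries use modelRegion; object entries may override it.
    const region = typeof entry === "string" ? ctx.modelRegion : (entry.region ?? ctx.modelRegion);
    if (!byRegion[region]) byRegion[region] = [];
    if (byRegion[region].indexOf(modelId) === -1) byRegion[region].push(modelId);
  }
  return byRegion;
}

// With a real file (Node.js), you could pass:
//   JSON.parse(readFileSync("packages/cdk/cdk.json", "utf8"))
const example: CdkJson = {
  context: {
    modelRegion: "us-east-1",
    modelIds: ["text-model-a", { modelId: "text-model-b", region: "us-west-2" }],
    imageGenerationModelIds: ["image-model-a"],
  },
};
console.log(modelsToEnable(example));
```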

GenU deployment uses AWS Cloud Development Kit (CDK). If you cannot prepare a CDK execution environment, refer to the following deployment methods:

- Deployment method using AWS CloudShell (if preparing your own environment is difficult)

- Workshop (English / Japanese)

First, run the following command. All commands should be executed at the repository root.

```bash
npm ci
```

If you have never used CDK in the target AWS account and region, you need to bootstrap it once. Skip the following command if your environment is already bootstrapped.

```bash
npx -w packages/cdk cdk bootstrap
```

Next, deploy the AWS resources with the following command. Wait for the deployment to complete (it may take about 20 minutes).

```bash
# Normal deployment
npm run cdk:deploy

# Fast deployment (deploys quickly without pre-checking the resources to be created)
npm run cdk:deploy:quick
```

- Deployment Options

- Update Method

- Local Development Environment Setup

- Resource Deletion Method

- How to Use as a Native App

- Using Browser Extensions

We have published configuration and cost estimation examples for using GenU. (The service is pay-as-you-go, and actual costs will vary depending on your usage.)

- Simple Version (without RAG) Estimation

- With RAG (Amazon Kendra) Estimation

- With RAG (Knowledge Base) Estimation
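The model-invocation part of such an estimate comes down to arithmetic on request volume and tokens. The TypeScript sketch below shows that calculation for one text model; every price in it is a placeholder rather than a real AWS price, and it ignores charges that do not scale with tokens, such as the hourly cost of an Amazon Kendra index, so use the published estimates for real planning.

```typescript
interface Usage {
  users: number;
  requestsPerUserPerDay: number;
  workingDaysPerMonth: number;
  inputTokensPerRequest: number;
  outputTokensPerRequest: number;
}

// Placeholder prices in USD per 1,000 tokens; replace with your model's actual rates.
interface TokenPrice {
  inputPer1k: number;
  outputPer1k: number;
}

// Monthly model-invocation cost: requests x tokens per request x unit price.
function monthlyTokenCost(u: Usage, p: TokenPrice): number {
  const requests = u.users * u.requestsPerUserPerDay * u.workingDaysPerMonth;
  const inputCost = ((requests * u.inputTokensPerRequest) / 1000) * p.inputPer1k;
  const outputCost = ((requests * u.outputTokensPerRequest) / 1000) * p.outputPer1k;
  return inputCost + outputCost;
}

const usage: Usage = {
  users: 100,
  requestsPerUserPerDay: 10,
  workingDaysPerMonth: 20,
  inputTokensPerRequest: 1000,
  outputTokensPerRequest: 500,
};
const placeholderPrice: TokenPrice = { inputPer1k: 0.003, outputPer1k: 0.015 };
console.log(monthlyTokenCost(usage, placeholderPrice).toFixed(2));
```

With these placeholder inputs there are 20,000 requests a month; input tokens contribute 60 and output tokens 150 of the 210 total, which shows why output length is worth watching.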

| Customer | Quote |
|---|---|
| Yasashiite Co., Ltd. | Thanks to GenU, we were able to provide added value to users and improve employee work efficiency. We continue to evolve from "smooth operation" to "exciting work" as employees' "previous work" transforms into enjoyable work! ・See case details ・See case page |
| TAKIHYO Co., Ltd. | Achieved internal business efficiency and reduced over 450 hours of work by utilizing generative AI. Applied Amazon Bedrock to clothing design, etc., and promoted digital talent development. ・See case page |
| Salsonido Inc. | By utilizing GenU, which is provided as a solution, we were able to quickly start improving business processes with generative AI. ・See case details ・Applied service |
| TAMURA CORPORATION | The application samples that AWS publishes on Github have a wealth of immediately testable functions, and by using them as they are, we were able to easily select functions that suited us and shorten the development time of the final system. ・See case details |
| JDSC Inc. | Amazon Bedrock allows us to securely use LLMs with our data. Also, we can switch to the optimal model depending on the purpose, allowing us to improve speed and accuracy while keeping costs down. ・See case details |
| iret, Inc. | To accumulate and systematize internal knowledge for BANDAI NAMCO Amusement Inc.'s generative AI utilization, we developed a use case site using Generative AI Use Cases JP provided by AWS. iret, Inc. supported the design, construction, and development of this project. ・BANDAI NAMCO Amusement Inc.'s cloud utilization case study |
| IDEALOG Inc. | I feel that we can achieve even greater work efficiency than with conventional generative AI tools. Using Amazon Bedrock, which doesn't use input/output data for model training, gives us peace of mind regarding security. ・See case details ・Applied service |
| eStyle Inc. | By utilizing GenU, we were able to build a generative AI environment in a short period and promote knowledge sharing within the company. ・See case details |
| Meidensha Corporation | By using AWS services such as Amazon Bedrock and Amazon Kendra, we were able to quickly and securely build a generative AI usage environment. It contributes to employee work efficiency through automatic generation of meeting minutes and searching internal information. ・See case details |
| Sankyo Tateyama, Inc. | Information buried within the company became quickly searchable with Amazon Kendra. By referring to GenU, we were able to promptly provide the functions we needed, such as meeting minutes generation. ・See case details |
| Oisix ra daichi Inc. | Through the use case development project using GenU, we were able to grasp the necessary resources, project structure, external support, and talent development, which helped us clarify our image for the internal deployment of generative AI. ・See case page |
| SAN-A CO., LTD. | By utilizing Amazon Bedrock, our engineers' productivity has dramatically improved, accelerating the migration of our company-specific environment, which we had built in-house, to the cloud. ・See case details ・See case page |
| ONE COMPATH CO., LTD. | By utilizing GenU, we were able to quickly establish a company-wide generative AI foundation. This made it possible for the planning department to develop PoCs independently, which accelerated the business creation cycle and allowed the development department to concentrate resources on more important business initiatives. ・See case details |
| Mitsubishi Electric Engineering CO., LTD. | Team members with no prior experience in generative AI development successfully built a RAG system in just 3 months using GenU with ServerWorks’ guidance. By leveraging GenU’s architecture as a reference, they achieved improved efficiency in helpdesk manual search operations and realized in-house development capabilities. ・See case details |
| Orbitics Inc. | We were able to develop it in an astonishingly short period of time. We will strategically deploy the acquired development technology across various business domains to enhance operational efficiency throughout the entire organization. ・See case details |

If you would like your use case to be featured, please contact us via an Issue.

- GitHub (Japanese): How to deploy GenU with one click

- Blog (Japanese): GenU Use Case Builder for Creating and Distributing Generative AI Apps with No Code

- Blog (Japanese): How to Make RAG Projects Successful #1 ~ Or How to Fail Fast ~

- Blog (Japanese): Debugging Methods to Improve Accuracy in RAG Chat

- Blog (Japanese): Customizing GenU with No Coding Using Amazon Q Developer CLI

- Blog (Japanese): How to Customize Generative AI Use Cases JP

- Blog (Japanese): Generative AI Use Cases JP ~ First Contribution Guide

- Blog (Japanese): Let Generative AI Decline Unreasonable Requests ~ Integrating Generative AI into Browsers ~

- Blog (Japanese): Developing an Interpreter with Amazon Bedrock!

- Blog (Japanese): Using GenU's Metadata Filter for Department-based Filtering

- Blog (Japanese): Running Bedrock Inference on GenU with Different AWS Account

- Video (Japanese): The Appeal and Usage of Generative AI Use Cases JP (GenU) for Thoroughly Considering Generative AI Use Cases

See CONTRIBUTING for more information.

This library is licensed under the MIT-0 License. See the LICENSE file.


Alternative AI tools for generative-ai-use-cases

Similar Open Source Tools


morphik-core

Morphik is an AI-native toolset designed to help developers integrate context into their AI applications by providing tools to store, represent, and search unstructured data. It offers features such as multimodal search, fast metadata extraction, and integrations with existing tools. Morphik aims to address the challenges of traditional AI approaches that struggle with visually rich documents and provide a more comprehensive solution for understanding and processing complex data.

agent-lightning

Agent Lightning is a lightweight and efficient tool for automating repetitive tasks in the field of data analysis and machine learning. It provides a user-friendly interface to create and manage automated workflows, allowing users to easily schedule and execute data processing, model training, and evaluation tasks. With its intuitive design and powerful features, Agent Lightning streamlines the process of building and deploying machine learning models, making it ideal for data scientists, machine learning engineers, and AI enthusiasts looking to boost their productivity and efficiency in their projects.

llm-app

Pathway's LLM (Large Language Model) Apps provide a platform to quickly deploy AI applications using the latest knowledge from data sources. The Python application examples in this repository are Docker-ready, exposing an HTTP API to the frontend. These apps utilize the Pathway framework for data synchronization, API serving, and low-latency data processing without the need for additional infrastructure dependencies. They connect to document data sources like S3, Google Drive, and Sharepoint, offering features like real-time data syncing, easy alert setup, scalability, monitoring, security, and unification of application logic.

danswer

Danswer is an open-source Gen-AI Chat and Unified Search tool that connects to your company's docs, apps, and people. It provides a Chat interface and plugs into any LLM of your choice. Danswer can be deployed anywhere and for any scale - on a laptop, on-premise, or to cloud. Since you own the deployment, your user data and chats are fully in your own control. Danswer is MIT licensed and designed to be modular and easily extensible. The system also comes fully ready for production usage with user authentication, role management (admin/basic users), chat persistence, and a UI for configuring Personas (AI Assistants) and their Prompts. Danswer also serves as a Unified Search across all common workplace tools such as Slack, Google Drive, Confluence, etc. By combining LLMs and team specific knowledge, Danswer becomes a subject matter expert for the team. Imagine ChatGPT if it had access to your team's unique knowledge! It enables questions such as "A customer wants feature X, is this already supported?" or "Where's the pull request for feature Y?"

AppFlowy

AppFlowy.IO is an open-source alternative to Notion, providing users with control over their data and customizations. It aims to offer functionality, data security, and cross-platform native experience to individuals, as well as building blocks and collaboration infra services to enterprises and hackers. The tool is built with Flutter and Rust, supporting multiple platforms and emphasizing long-term maintainability. AppFlowy prioritizes data privacy, reliable native experience, and community-driven extensibility, aiming to democratize the creation of complex workplace management tools.

hi-ml

The Microsoft Health Intelligence Machine Learning Toolbox is a repository that provides low-level and high-level building blocks for Machine Learning / AI researchers and practitioners. It simplifies and streamlines work on deep learning models for healthcare and life sciences by offering tested components such as data loaders, pre-processing tools, deep learning models, and cloud integration utilities. The repository includes three Python packages: 'hi-ml-azure' for helper functions in AzureML, 'hi-ml' for ML components, and 'hi-ml-cpath' for models and workflows related to histopathology images.

OpenDAN-Personal-AI-OS

OpenDAN is an open source Personal AI OS that consolidates various AI modules for personal use. It empowers users to create powerful AI agents like assistants, tutors, and companions. The OS allows agents to collaborate, integrate with services, and control smart devices. OpenDAN offers features like rapid installation, AI agent customization, connectivity via Telegram/Email, building a local knowledge base, distributed AI computing, and more. It aims to simplify life by putting AI in users' hands. The project is in early stages with ongoing development and future plans for user and kernel mode separation, home IoT device control, and an official OpenDAN SDK release.

lollms-webui

LoLLMs WebUI (Lord of Large Language Multimodal Systems: One tool to rule them all) is a user-friendly interface to access and utilize various LLM (Large Language Models) and other AI models for a wide range of tasks. With over 500 AI expert conditionings across diverse domains and more than 2500 fine tuned models over multiple domains, LoLLMs WebUI provides an immediate resource for any problem, from car repair to coding assistance, legal matters, medical diagnosis, entertainment, and more. The easy-to-use UI with light and dark mode options, integration with GitHub repository, support for different personalities, and features like thumb up/down rating, copy, edit, and remove messages, local database storage, search, export, and delete multiple discussions, make LoLLMs WebUI a powerful and versatile tool.

Conversation-Knowledge-Mining-Solution-Accelerator

The Conversation Knowledge Mining Solution Accelerator enables customers to leverage intelligence to uncover insights, relationships, and patterns from conversational data. It empowers users to gain valuable knowledge and drive targeted business impact by utilizing Azure AI Foundry, Azure OpenAI, Microsoft Fabric, and Azure Search for topic modeling, key phrase extraction, speech-to-text transcription, and interactive chat experiences.

sdk

Vikit.ai SDK is a software development kit that enables easy development of video generators using generative AI and other AI models. It serves as a langchain to orchestrate AI models and video editing tools. The SDK allows users to create videos from text prompts with background music and voice-over narration. It also supports generating composite videos from multiple text prompts. The tool requires Python 3.8+, specific dependencies, and tools like FFMPEG and ImageMagick for certain functionalities. Users can contribute to the project by following the contribution guidelines and standards provided.

Robyn

Robyn is an experimental, semi-automated, open-source Marketing Mix Modeling (MMM) package from Meta Marketing Science. It uses various machine learning techniques to define media channel efficiency and effectivity and to explore adstock rates and saturation curves. It is built for granular datasets with many independent variables and is especially suitable for digital and direct-response advertisers with rich data sources. It aims to democratize MMM, make it accessible to advertisers of all sizes, and contribute to the measurement landscape.

promptflow

**Prompt flow** is a suite of development tools designed to streamline the end-to-end development cycle of LLM-based AI applications, from ideation, prototyping, testing, evaluation to production deployment and monitoring. It makes prompt engineering much easier and enables you to build LLM apps with production quality.

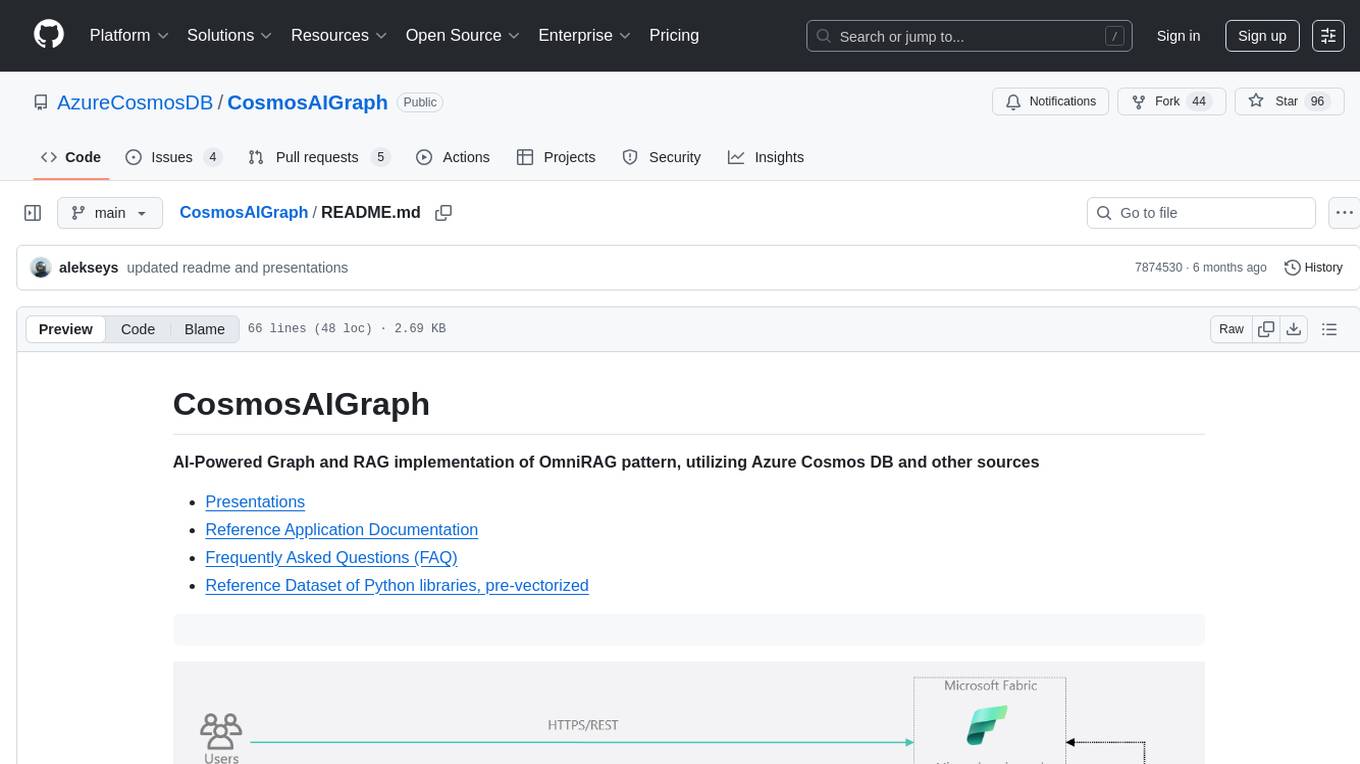

CosmosAIGraph

CosmosAIGraph is an AI-powered graph and RAG implementation of OmniRAG pattern, utilizing Azure Cosmos DB and other sources. It includes presentations, reference application documentation, FAQs, and a reference dataset of Python libraries pre-vectorized. The project focuses on Azure Cosmos DB for NoSQL and Apache Jena implementation for the in-memory RDF graph. It provides DockerHub images, with plans to add RBAC and Microsoft Entra ID/AAD authentication support, update AI model to gpt-4.5, and offer generic graph examples with a graph generation solution.

edenai-apis

Eden AI aims to simplify the use and deployment of AI technologies by providing a unique API that connects to all the best AI engines. With the rise of **AI as a Service**, a lot of companies provide off-the-shelf trained models that you can access directly through an API. These companies are either tech giants (Google, Microsoft, Amazon) or smaller, more specialized companies, and there are hundreds of them. Some of the best known are: DeepL (translation), OpenAI (text and image analysis), and AssemblyAI (speech analysis). There are **hundreds of companies** doing that. We're regrouping the best ones **in one place**!

project_alice

Alice is an agentic workflow framework that integrates task execution and intelligent chat capabilities. It provides a flexible environment for creating, managing, and deploying AI agents for various purposes, leveraging a microservices architecture with MongoDB for data persistence. The framework consists of components like APIs, agents, tasks, and chats that interact to produce outputs through files, messages, task results, and URL references. Users can create, test, and deploy agentic solutions in a human-language framework, making it easy to engage with by both users and agents. The tool offers an open-source option, user management, flexible model deployment, and programmatic access to tasks and chats.

For similar tasks


daily-poetry-image

Daily Chinese ancient poetry and AI-generated images powered by Bing DALL-E-3. GitHub Action triggers the process automatically. Poetry is provided by Today's Poem API. The website is built with Astro.

InvokeAI

InvokeAI is a leading creative engine built to empower professionals and enthusiasts alike. Generate and create stunning visual media using the latest AI-driven technologies. InvokeAI offers an industry leading Web Interface, interactive Command Line Interface, and also serves as the foundation for multiple commercial products.

LocalAI

LocalAI is a free and open-source OpenAI alternative that acts as a drop-in replacement REST API compatible with OpenAI (Elevenlabs, Anthropic, etc.) API specifications for local AI inferencing. It allows users to run LLMs, generate images, audio, and more locally or on-premises with consumer-grade hardware, supporting multiple model families and not requiring a GPU. LocalAI offers features such as text generation with GPTs, text-to-audio, audio-to-text transcription, image generation with stable diffusion, OpenAI functions, embeddings generation for vector databases, constrained grammars, downloading models directly from Huggingface, and a Vision API. It provides a detailed step-by-step introduction in its Getting Started guide and supports community integrations such as custom containers, WebUIs, model galleries, and various bots for Discord, Slack, and Telegram. LocalAI also offers resources like an LLM fine-tuning guide, instructions for local building and Kubernetes installation, projects integrating LocalAI, and a how-tos section curated by the community. It encourages users to cite the repository when utilizing it in downstream projects and acknowledges the contributions of various software from the community.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

StableSwarmUI

StableSwarmUI is a modular Stable Diffusion web user interface that emphasizes making power tools easily accessible, high performance, and extensible. It is designed to be a one-stop-shop for all things Stable Diffusion, providing a wide range of features and capabilities to enhance the user experience.

civitai

Civitai is a platform where people can share their stable diffusion models (textual inversions, hypernetworks, aesthetic gradients, VAEs, and any other crazy stuff people do to customize their AI generations), collaborate with others to improve them, and learn from each other's work. The platform allows users to create an account, upload their models, and browse models that have been shared by others. Users can also leave comments and feedback on each other's models to facilitate collaboration and knowledge sharing.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.


chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud-native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI, and vLLM. BricksLLM aims to provide enterprise-level infrastructure that can power any LLM production use case. Here are some use cases for BricksLLM:

- Set LLM usage limits for users on different pricing tiers
- Track LLM usage on a per-user and per-organization basis
- Block or redact requests containing PII
- Improve LLM reliability with failovers, retries, and caching
- Distribute API keys with rate limits and cost limits for internal development/production use cases
- Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.