ImTip

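ImTip smart desktop assistant: only 832 KB, offering input-tracking prompts, super hotkeys, and an AI assistant, so you can quickly connect all kinds of desktop applications to large AI models.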

Stars: 2310

ImTip is a lightweight desktop assistant that provides super hotkeys, input method status prompts, and a custom AI assistant. It displays concise icons at the input cursor to show various input method and keyboard states, and lets users customize the appearance scheme. With ImTip, users can keep track of the input method state without cluttering the screen with the input method's built-in status bar. The tool supports visual editing of the status prompt appearance and programmable extensions for super hotkeys. ImTip has low CPU usage and offers an adjustable tracking speed to tune CPU consumption. It supports a wide range of input methods and languages, making it a versatile tool for improving typing efficiency and accuracy.

README:

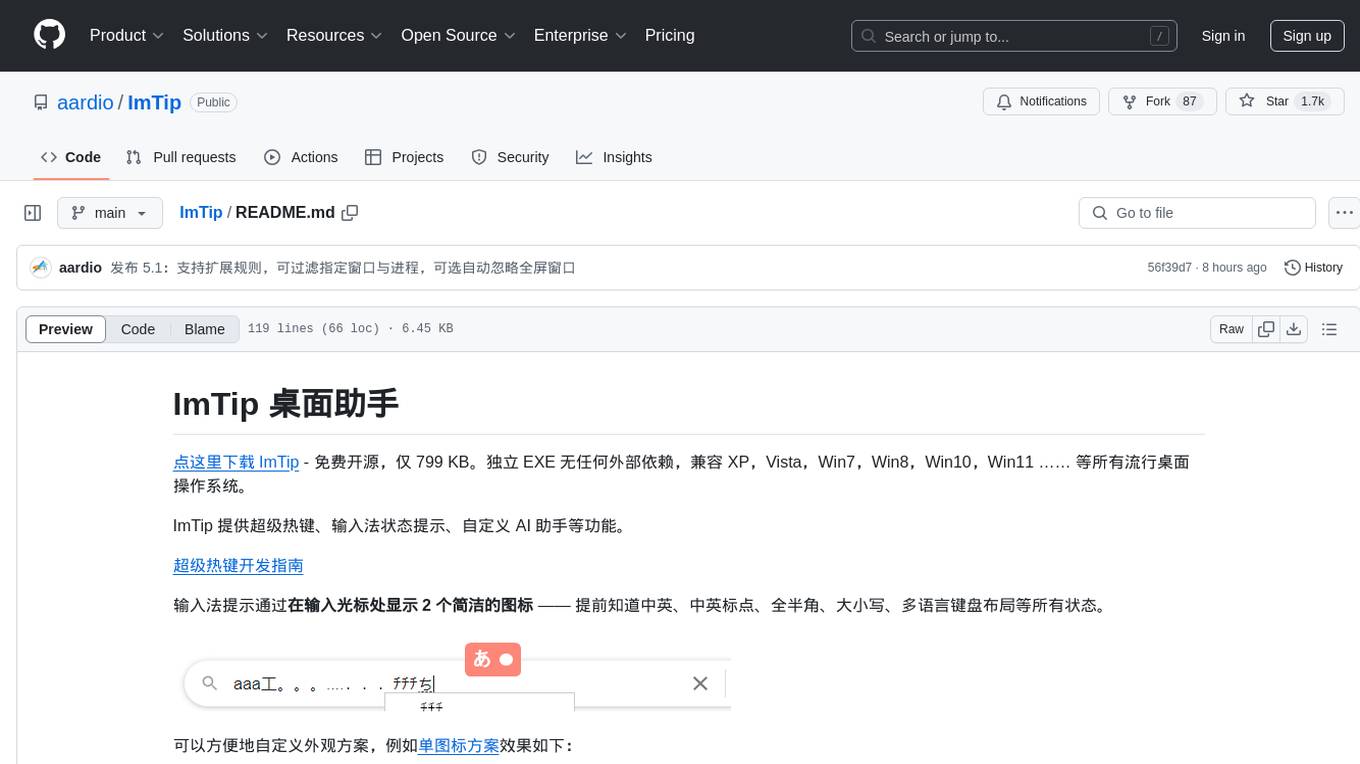

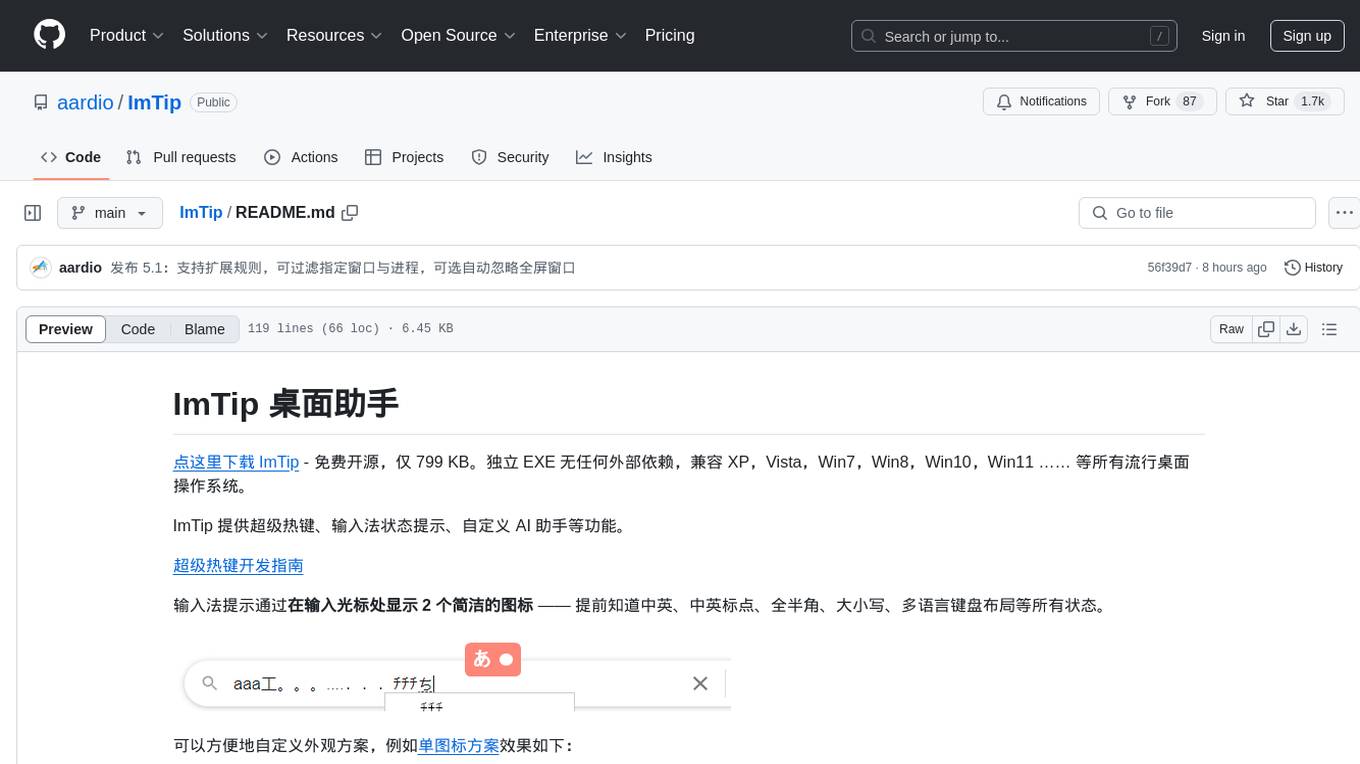

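Download ImTip: free and open source, only 860 KB. A standalone EXE with no external dependencies, compatible with XP, Vista, Win7, Win8, Win10, Win11 …

ImTip provides input-tracking prompts, super hotkeys (quickly connect all kinds of desktop applications to AI), a custom AI assistant, and more.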

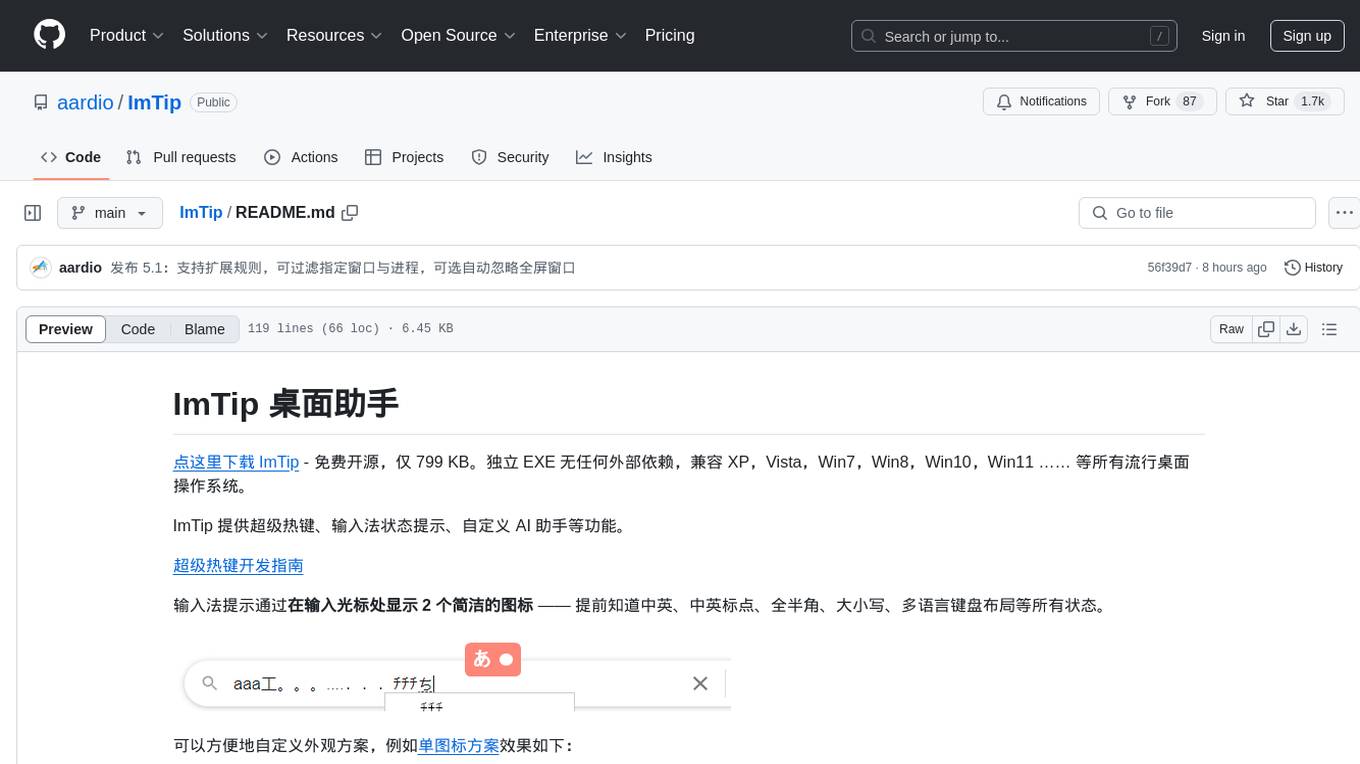

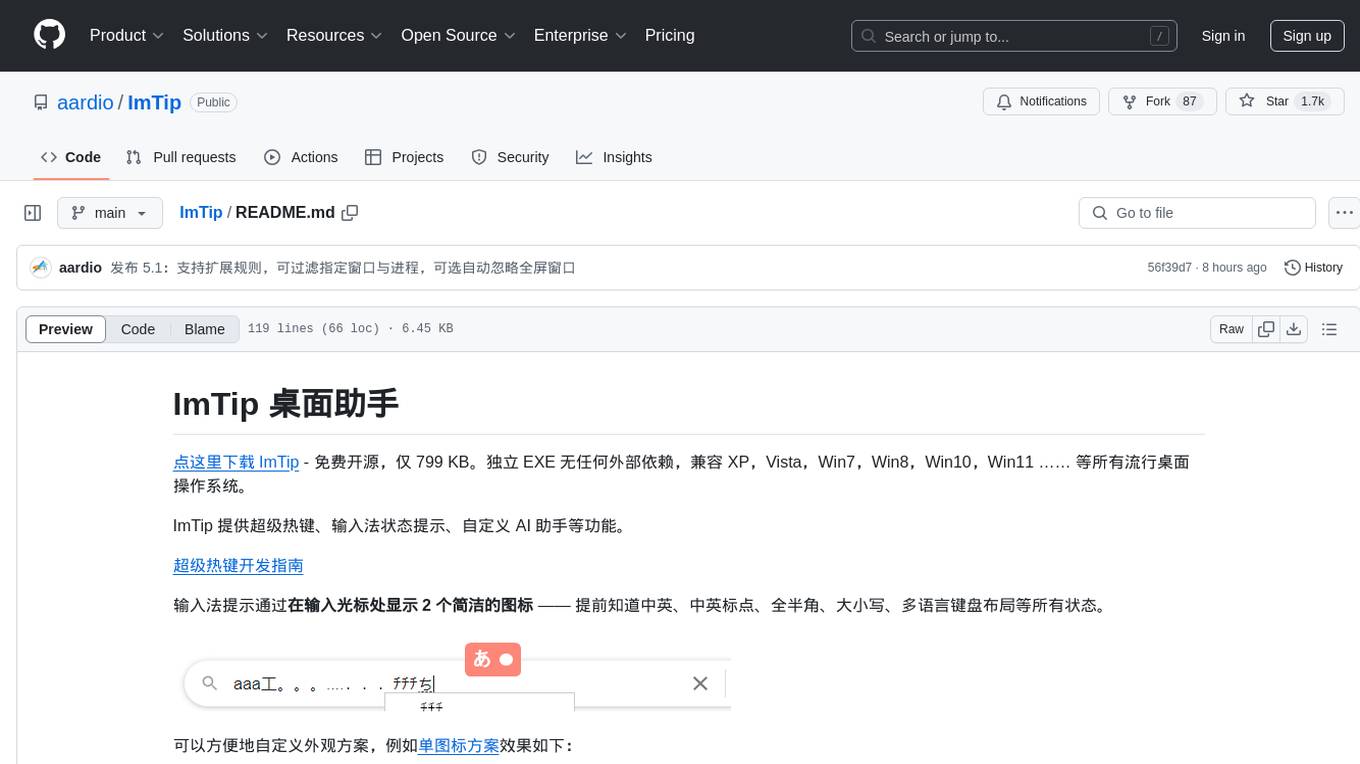

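Input-tracking prompts display 2 concise icons at the input cursor, so you know every state in advance: Chinese/English mode, Chinese/English punctuation, full-width/half-width, caps lock, multilingual keyboard layout, and more.

Appearance schemes are easy to customize; for example, a single-icon scheme looks like this:

No more pressing the wrong key! Keep your thinking and typing uninterrupted, without glancing down at the taskbar or doing anything else to check the input state.

- Not just the Chinese/English state: with fewer icons to watch, you see more of the common input method and keyboard states.

- Not shown only once when you switch input methods: whenever you move to a new input position, the input method state is shown promptly, and the display duration, style, and appearance are all customizable.

With ImTip you can turn off the input method's built-in status bar for a cleaner screen, and never need to check the bottom-right corner again!

In theory every input method is supported: the built-in Microsoft Pinyin and Microsoft Wubi, Xiaoxiao Input, Sogou Input, Baidu Input, QQ Input, Google Input, Xiaohe Input, Shouxin Input … The Japanese, Korean, and Spanish input methods I tested also work with ImTip.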

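ImTip supports visual editing of the status prompt appearance:

Drag an appearance scheme directly onto ImTip.exe or into the appearance settings window to import it quickly.

You can also copy and paste scheme configuration code directly through the clipboard.

ImTip's CPU usage is extremely low, and you can adjust it with the "Tracking detection speed" setting:

There is a slight delay by default. This is a deliberate optimization (not a passive lag). You can raise the "Tracking detection speed" for a smoother experience, and the extra resource usage is still negligible.

ImTip provides programmably extensible "super hotkeys".

The following hotkeys are provided by default: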

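- Ctrl+@ AI English-Chinese dictionary / AI English-Chinese translation

- Ctrl+# Quick word lookup (bubble tooltip)

- Ctrl+$ Open the tool for Chinese uppercase financial numerals, uppercase dates and times, and math calculations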

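More examples:

Super hotkey calling a large AI model to write aardio code automatically (aardio now has a similar built-in AI assistant on the F1 key):

Super hotkey calling a large AI model to write code in PowerShell

Super hotkey calling a large AI model to continue and complete text in Notepad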

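The AI English-Chinese dictionary requires the `string.words` and `table.coca2000` extension libraries, and the read-aloud feature of AI translation requires the `web.edgeTextToSpeech` extension library.

- In aardio, first click "Tools » Extension Libraries"

- Search for the keyword "英语" ("English") and check the `string.words` and `table.coca2000` extension libraries it finds

- Then click the "Install" button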

If aardio is already running and the current thread has called `import ide`, the extension libraries can be installed automatically.

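ImTip provides a simple, customizable AI desktop assistant that quickly turns a large-model API into a usable desktop assistant. The AI assistant already supports rendering math formulas, code highlighting, one-click sharing via long screenshots, automatically reading documents from the web, and more.

You can define multiple AI assistant configurations and switch between large models at any time within the same conversation. Newer versions of ImTip include translation, dictionary, and other AI assistants by default.

ImTip also supports quickly calling large AI model APIs from super hotkeys, or automatically opening the AI chat window. The steps to enable this are as follows:

The ImTip tray menu provides shortcuts for enabling system input methods, switching Shuangpin (double pinyin) schemes, and more.

ImTip shortcuts:

Hold Shift and click the tray icon to open the AI assistant.

Hold Ctrl and click the tray icon to enable/disable input-tracking prompts.

Common input method shortcuts: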

Shift: toggle Chinese/English input;

Ctrl+.: toggle Chinese/English punctuation;

Shift+Space: toggle full-width/half-width;

Alt+Shift: switch language.

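Some third-party input methods install a "Chinese (US) keyboard", which can cause unnecessary glitches. This keyboard is in fact deprecated in Win10, so it is recommended to remove it or change it to the "English (US) keyboard". On Win7/Win10/Win11 you can fix the problem by disabling and re-enabling the "English keyboard" once from the ImTip tray menu.

ImTip starts with normal privileges by default. Only when ImTip.exe is started with administrator privileges does it work in other windows that run with administrator privileges. After starting with administrator privileges, re-check "Start at boot" to have ImTip start with administrator privileges at boot (the permission prompt will no longer appear; note that this setting can only be cleared when ImTip is likewise started with administrator privileges).

ImTip uses several different interfaces to obtain the input position, but for the few application windows that no interface supports, it falls back to the mouse pointer position.

In the settings window, check "Enable java.accessBridge extension" to automatically support JetBrains IDEs and other Java application windows. It is enabled with one click, with no other manual configuration required.

If "Enable java.accessBridge extension" unchecks itself and "java.accessBridge extension not enabled" is shown, check whether the system can connect to the internet (this feature downloads the aardio extension library java.accessBridge). Alternatively, download the latest aardio yourself and run the following code in aardio to enable JAB (Java Access Bridge):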

import java.accessBridge;

print( java.accessBridge.switch(true) );

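For windows that none of the above methods support, see: Setting compatible window class names

WeChat 4.0 now fully supports ImTip with no extra settings.

ImTip shows the input state only when it detects an input box. Even if "Show only after switching input target or state" is unchecked, windows where no input target can be detected still do not show the input state (unless a compatible window class name has been set for that window).

See: Principles and rules of input method and keyboard state detection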

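- Most mainstream input methods support ImTip.

- All of Microsoft's built-in input methods fully support ImTip.

- Xiaoxiao Input fully supports ImTip. If you run into problems, use the open-source tool IMY to uninstall and reinstall Xiaoxiao Input once.

- Weasel (小狼毫) supports ImTip if you install the latest nightly build; its floating input method tooltip can be enabled or disabled from the ImTip tray menu.

- WeChat Input, Shouxin Input, and iFlytek Input require checking "Quirks mode". Each of these three input methods uses a different "Quirks mode", so it is best not to install these problematic input methods together; after installing or removing any of them, re-check "Quirks mode" once to update the configuration. While "Quirks mode" is checked, other (normally behaving) input methods are not supported.

- Xiaohe Input gets its state mixed up after toggling full-width/half-width in English mode; pressing Shift once to toggle Chinese/English mode restores it. Input methods built on Duoduo (多多) may all have similar problems.

- A few old input methods can also scramble the state of other input methods. Uninstall the problematic input method, then switch to or reopen the window to restore normal behavior.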

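- ImTip.exe *.aardio loads a configuration scheme; you can also simply drag the configuration file onto ImTip.exe.

- ImTip.exe with no arguments opens the configuration window if ImTip is already running; double-clicking ImTip.exe does the same.

- ImTip.exe /chat <config name> /q <question to send immediately> starts an AI chat assistant session window. Both the config name and the /q parameter can be omitted. aardio provides the process.imTip library for conveniently launching the ImTip chat assistant; see: Super hotkeys - automatically calling the AI chat window.

- ImTip.exe /sys starts without showing the main window. This is the parameter set when "Start at boot" is checked.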

First exit ImTip, then press Win+R to open the "Run" dialog and type:

cmd /c rd /s /q %localappdata%\aardio\std\ImTip

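Press Enter to run it; this deletes the configuration directory (including the super hotkey configuration).

Running ImTip again automatically resets it to the latest default configuration.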

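The animations on this page were mostly recorded with Gif123, a free, open-source, minimalist screen recorder with a download size of only 820 KB.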

Alternative AI tools for ImTip

Similar Open Source Tools

forksilly.doc

ForkSilly.doc is a repository mainly for storing documentation of ForkSilly, an Android project developed using React Native/Expo. It is suitable for users with experience in SillyTavern. The project is self-shared and may not accept feature requests. It is designed for pure text cards, illustration cards, and Stable Diffusion text-to-image. It is compatible with SillyTavern V2 character cards, world books, regex, presets, and chat records, which users can import and export at any time. The tool supports various customization options such as chat font, background image, and quick toggling of preset entries. It also allows the use of various OpenAI-compatible APIs and provides built-in storage management features. Users can use text-to-image functionality and access free text-to-image services like pollinations.ai. Additionally, it supports Stable Diffusion text-to-image features and integration with SiliconFlow and Gemini embedding models. The tool does not support TTS or connecting to NAI.

chatgpt-webui

ChatGPT WebUI is a user-friendly web graphical interface for various LLMs like ChatGPT, providing simplified features such as core ChatGPT conversation and document retrieval dialogues. It has been optimized for better RAG retrieval accuracy and supports various search engines. Users can deploy local language models easily and interact with different LLMs like GPT-4, Azure OpenAI, and more. The tool offers powerful functionalities like GPT4 API configuration, system prompt setup for role-playing, and basic conversation features. It also provides a history of conversations, customization options, and a seamless user experience with themes, dark mode, and PWA installation support.

wealth-tracker

Wealth Tracker is a personal finance management tool designed to help users track their income, expenses, and investments in one place. With intuitive features and customizable categories, users can easily monitor their financial health and make informed decisions. The tool provides detailed reports and visualizations to analyze spending patterns and set financial goals. Whether you are budgeting, saving for a big purchase, or planning for retirement, Wealth Tracker offers a comprehensive solution to manage your money effectively.

fit-framework

FIT Framework is a Java enterprise AI development framework that provides a multi-language function engine (FIT), a flow orchestration engine (WaterFlow), and a Java ecosystem alternative solution (FEL). It runs in native/Spring dual mode, supports plug-and-play and intelligent deployment, seamlessly unifying large models and business systems. FIT Core offers language-agnostic computation base with plugin hot-swapping and intelligent deployment. WaterFlow Engine breaks the dimensional barrier of BPM and reactive programming, enabling graphical orchestration and declarative API-driven logic composition. FEL revolutionizes LangChain for the Java ecosystem, encapsulating large models, knowledge bases, and toolchains to integrate AI capabilities into Java technology stack seamlessly. The framework emphasizes engineering practices with intelligent conventions to reduce boilerplate code and offers flexibility for deep customization in complex scenarios.

AivisSpeech

AivisSpeech is a Japanese text-to-speech software based on the VOICEVOX editor UI. It incorporates the AivisSpeech Engine for generating emotionally rich voices easily. It supports AIVMX format voice synthesis model files and specific model architectures like Style-Bert-VITS2. Users can download AivisSpeech and AivisSpeech Engine for Windows and macOS PCs, with minimum memory requirements specified. The development follows the latest version of VOICEVOX, focusing on minimal modifications, rebranding only where necessary, and avoiding refactoring. The project does not update documentation, maintain test code, or refactor unused features to prevent conflicts with VOICEVOX.

JittorLLMs

JittorLLMs is a large model inference library that allows running large models on machines with low hardware requirements. It significantly reduces hardware configuration demands, enabling deployment on ordinary machines with 2GB of memory. It supports various large models and provides a unified environment configuration for users. Users can easily migrate models without modifying any code by installing Jittor version of torch (JTorch). The framework offers fast model loading speed, optimized computation performance, and portability across different computing devices and environments.

Tianji

Tianji is a free, non-commercial artificial intelligence system developed by SocialAI for tasks involving worldly wisdom, such as etiquette, hospitality, gifting, wishes, communication, awkwardness resolution, and conflict handling. It includes four main technical routes: pure prompt, Agent architecture, knowledge base, and model training. Users can find corresponding source code for these routes in the tianji directory to replicate their own vertical domain AI applications. The project aims to accelerate the penetration of AI into various fields and enhance AI's core competencies.

oba-live-tool

The oba live tool is a small tool for Douyin small shops and Kuaishou Baiying live broadcasts. It features multiple account management, intelligent message assistant, automatic product explanation, AI automatic reply, and AI intelligent assistant. The tool requires Windows 10 or above, Chrome or Edge browser, and a valid account for Douyin small shops or Kuaishou Baiying. Users can download the tool from the Releases page, connect to the control panel, set API keys for AI functions, and configure auto-reply prompts. The tool is licensed under the MIT license.

hugging-llm

HuggingLLM is a project that aims to introduce ChatGPT to a wider audience, particularly those interested in using the technology to create new products or applications. The project focuses on providing practical guidance on how to use ChatGPT-related APIs to create new features and applications. It also includes detailed background information and system design introductions for relevant tasks, as well as example code and implementation processes. The project is designed for individuals with some programming experience who are interested in using ChatGPT for practical applications, and it encourages users to experiment and create their own applications and demos.

HiveChat

HiveChat is an AI chat application designed for small and medium teams. It supports various models such as DeepSeek, OpenAI, Claude, and Gemini. The tool lets a single administrator configure different AI models for the entire team to use. It supports features like email or Feishu login, LaTeX and Markdown rendering, DeepSeek mind map display, image understanding, AI agents, cloud data storage, and integration with multiple large model service providers. Users can engage in conversations by logging in, while administrators can configure AI service providers, manage users, and control account registration. The technology stack includes Next.js, Tailwindcss, Auth.js, PostgreSQL, Drizzle ORM, and Ant Design.

Mirror-Flowers

Mirror Flowers is an out-of-the-box code security auditing tool that integrates local static scanning (line-level taint tracking + AST) with AI verification to help quickly discover and locate high-risk issues, providing repair suggestions. It supports multiple languages such as PHP, Python, JavaScript/TypeScript, and Java. The tool offers both single-file and project modes, with features like concurrent acceleration, integrated UI for visual results, and compatibility with multiple OpenAI interface providers. Users can configure the tool through environment variables or API, and can utilize it through a web UI or HTTP API for tasks like single-file auditing or project auditing.

wechat-bot

WeChat Bot is a simple and easy-to-use WeChat robot based on chatgpt and wechaty. It can help you automatically reply to WeChat messages or manage WeChat groups/friends. The tool requires configuration of AI services such as Xunfei, Kimi, or ChatGPT. Users can customize the tool to automatically reply to group or private chat messages based on predefined conditions. The tool supports running in Docker for easy deployment and provides a convenient way to interact with various AI services for WeChat automation.

new-api

New API is an open-source project based on One API with additional features and improvements. It offers a new UI interface, supports Midjourney-Proxy(Plus) interface, online recharge functionality, model-based charging, channel weight randomization, data dashboard, token-controlled models, Telegram authorization login, Suno API support, Rerank model integration, and various third-party models. Users can customize models, retry channels, and configure caching settings. The deployment can be done using Docker with SQLite or MySQL databases. The project provides documentation for Midjourney and Suno interfaces, and it is suitable for AI enthusiasts and developers looking to enhance AI capabilities.

app-platform

AppPlatform is an advanced engineering platform for large-model applications that aims to simplify AI application development through integrated declarative programming and low-code configuration tools. This project provides a powerful and scalable environment for software engineers and product managers to support the full-cycle development of AI applications from concept to deployment. The backend module is based on the FIT framework and uses a plugin-based development approach, including application management and feature extension modules. The frontend module is developed with the React framework, focusing on core modules such as application development, application marketplace, intelligent forms, and plugin management. Key features include a low-code graphical interface, powerful operators and a scheduling platform, and sharing and collaboration capabilities. The project also provides detailed instructions for setting up and running both backend and frontend environments for development and testing.

AI-automatically-generates-novels

AI Novel Writing Assistant is an intelligent productivity tool for novel writing based on AI plus prompts. It has been used by hundreds of studios and individual authors to generate novels quickly and in batches. By using AI to raise writing efficiency and offering comprehensive prompt management, it achieves a 20-fold efficiency improvement in intelligent book disassembly, title and synopsis generation, text polishing, and Shift+L quick term insertion, making writing easier and more professional. It has been upgraded to v5.2. The tool supports mind-map construction of outlines and chapters, AI self-optimization of novels, writing knowledge base management, Shift+L quick term insertion in the text input field, integration with any mainstream large model, custom skin colors, prompt import and export, large-text memory, right-click polishing, expansion, and de-AI-flavoring of outlines, chapters, and text, management of multiple novel prompt libraries, and book disassembly.

For similar tasks

Open-LLM-VTuber

Open-LLM-VTuber is a voice-interactive AI companion supporting real-time voice conversations and featuring a Live2D avatar. It can run offline on Windows, macOS, and Linux, offering web and desktop client modes. Users can customize appearance and persona, with rich LLM inference, text-to-speech, and speech recognition support. The project is highly customizable, extensible, and actively developed with exciting features planned. It provides privacy with offline mode, persistent chat logs, and various interaction features like voice interruption, touch feedback, Live2D expressions, pet mode, and more.

VideoChat

VideoChat is a real-time voice interaction digital human tool that supports end-to-end voice solutions (GLM-4-Voice - THG) and cascade solutions (ASR-LLM-TTS-THG). Users can customize appearance and voice, support voice cloning, and achieve low first-packet delay of 3s. The tool offers various modules such as ASR, LLM, MLLM, TTS, and THG for different functionalities. It requires specific hardware and software configurations for local deployment, and provides options for weight downloads and customization of digital human appearance and voice. The tool also addresses known issues related to resource availability, video streaming optimization, and model loading.

pixel-banner

Pixel Banner is a powerful Obsidian plugin that enhances note-taking by creating visually stunning headers with customizable banner images. It offers AI-generated banners, professional banner images from a store, local image support, and direct URL banners. Users can customize banner placement, appearance, display modes, and add decorative icons. The plugin provides efficient workflow with quick banner selection, command integration, and custom field names. It also offers smart organization features like folder-specific settings and image shuffling. Premium features include a token-based system for AI banners, banner history, and prompt inspiration. Enhance your Obsidian experience with beautiful, intelligent banners that make your notes visually distinctive and organized.

For similar jobs

J.A.R.V.I.S.

J.A.R.V.I.S.1.0 is an advanced virtual assistant tool designed to assist users in various tasks. It provides a wide range of functionalities including voice commands, task automation, information retrieval, and communication management. With its intuitive interface and powerful capabilities, J.A.R.V.I.S.1.0 aims to enhance productivity and streamline daily activities for users.

onyx

Onyx is an open-source Gen-AI and Enterprise Search tool that serves as an AI Assistant connected to company documents, apps, and people. It provides a chat interface, can be deployed anywhere, and offers features like user authentication, role management, chat persistence, and UI for configuring AI Assistants. Onyx acts as an Enterprise Search tool across various workplace platforms, enabling users to access team-specific knowledge and perform tasks like document search, AI answers for natural language queries, and integration with common workplace tools like Slack, Google Drive, Confluence, etc.

local-chat

LocalChat is a simple, easy-to-set-up, and open-source local AI chat tool that allows users to interact with generative language models on their own computers without transmitting data to a cloud server. It provides a chat-like interface for users to experience ChatGPT-like behavior locally, ensuring GDPR compliance and data privacy. Users can download LocalChat for macOS, Windows, or Linux to chat with open-weight generative language models.

Riona-AI-Agent

Riona-AI-Agent is a versatile AI chatbot designed to assist users in various tasks. It utilizes natural language processing and machine learning algorithms to understand user queries and provide accurate responses. The chatbot can be integrated into websites, applications, and messaging platforms to enhance user experience and streamline communication. With its customizable features and easy deployment, Riona-AI-Agent is suitable for businesses, developers, and individuals looking to automate customer support, provide information, and engage with users in a conversational manner.

duckduckgo-ai-chat

This repository contains a chatbot tool powered by AI technology. The chatbot is designed to interact with users in a conversational manner, providing information and assistance on various topics. Users can engage with the chatbot to ask questions, seek recommendations, or simply have a casual conversation. The AI technology behind the chatbot enables it to understand natural language inputs and provide relevant responses, making the interaction more intuitive and engaging. The tool is versatile and can be customized for different use cases, such as customer support, information retrieval, or entertainment purposes. Overall, the chatbot offers a user-friendly and interactive experience, leveraging AI to enhance communication and engagement.

blurt

Blurt is a Gnome shell extension that enables accurate speech-to-text input in Linux. It is based on the command line utility NoteWhispers and supports Gnome shell version 48. Users can transcribe speech using a local whisper.cpp installation or a whisper.cpp server. The extension allows for easy setup, start/stop of speech-to-text input with key bindings or icon click, and provides visual indicators during operation. It offers convenience by enabling speech input into any window that allows text input, with the transcribed text sent to the clipboard for easy pasting.

suna

Kortix is an open-source platform designed to build, manage, and train AI agents for various tasks. It allows users to create autonomous agents, from general-purpose assistants to specialized automation tools. The platform offers capabilities such as browser automation, file management, web intelligence, system operations, API integrations, and agent building tools. Users can create custom agents tailored to specific domains, workflows, or business needs, enabling tasks like research & analysis, browser automation, file & document management, data processing & analysis, and system administration.