STMP

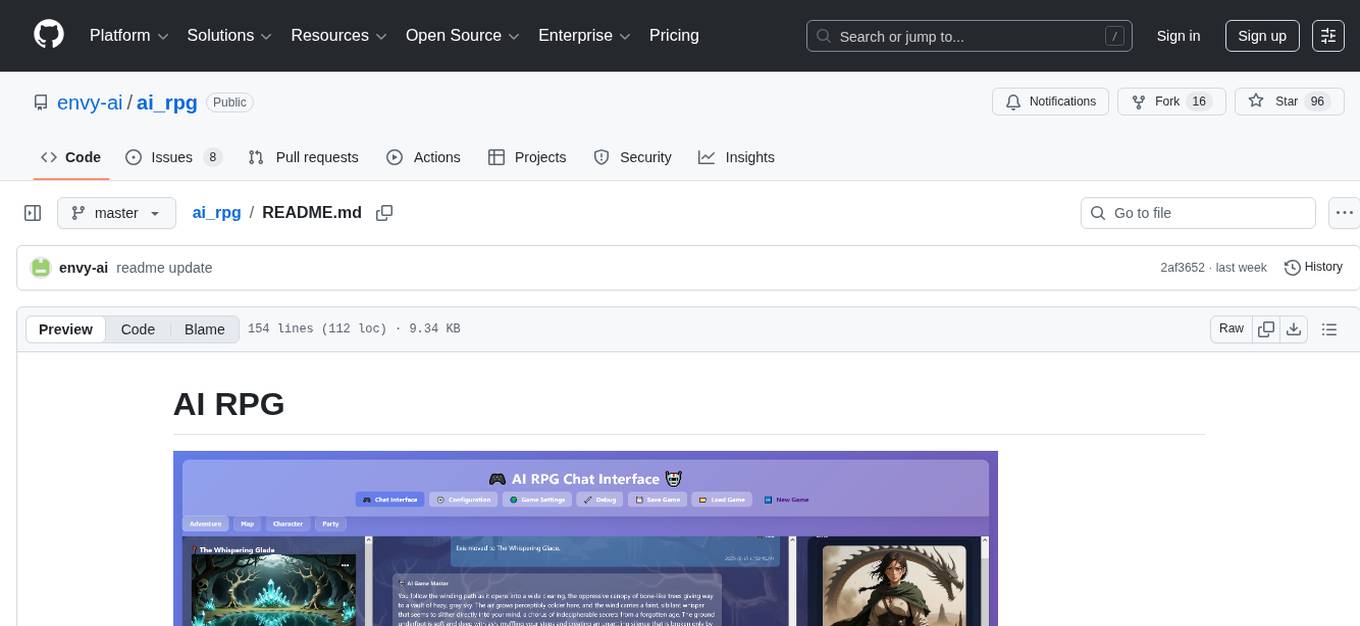

SillyTavern MultiPlayer is an LLM chat interface, created by RossAscends, that allows multiple users to chat together with each other and an AI.

Stars: 93

SillyTavern MultiPlayer (STMP) is an LLM chat interface that enables multiple users to chat with an AI. It features a sidebar chat for users, tools for the Host to manage the AI's behavior and moderate users. Users can change display names, chat in different windows, and the Host can control AI settings. STMP supports Text Completions, Chat Completions, and HordeAI. Users can add/edit APIs, manage past chats, view user lists, and control delays. Hosts have access to various controls, including AI configuration, adding presets, and managing characters. Planned features include smarter retry logic, host controls enhancements, and quality of life improvements like user list fading and highlighting exact usernames in AI responses.

README:

SillyTavern MultiPlayer is an LLM chat interface that allows multiple users to chat together with one or more AI characters. It also includes a sidebar chat for users only, and many tools for the Host to control the behavior of the AI and to moderate users.

Created by RossAscends

If this software brings you and your friends joy, donations to Ross can be made via:

| Ko-fi | Patreon |
|---|---|

For tech support or to contact RossAscends directly, join the SillyTavern Discord.

- Make sure Node.js is installed on your system and has access through your firewall.

- Clone this GitHub repo into a new folder, NOT the main SillyTavern folder. (`git clone https://github.com/RossAscends/STMP/` in a command line, or use a GitHub GUI like GitHub Desktop)

- Run `STMP.bat` to install the required Node modules and start the server.

- On the first run, the server will create an empty `secrets.json` and a default `config.json`, as well as the `/api-presets/` and `/chats/` folders.

- Open `http://localhost:8181/` in your web browser.

(instructions coming soon)

You can use Horde as an anonymous user, but that generally leads to slower response times.

To use your Horde API key in STMP, add it to `secrets.json` like this (server should run at least once):

    {
      // some content
      "horde_key": "YourKeyHere",
      // some other content
    }

Don't have one? Registering a HordeAI account is free and easy.
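The fallback between a saved key and anonymous access can be sketched as follows. This is a minimal illustration, not STMP's actual code: `resolveHordeKey` is a hypothetical helper, and the anonymous key value is an assumption about the Horde's public anonymous access rather than something stated in this README.

```javascript
// ASSUMPTION: the Horde accepts this well-known key for anonymous (slower) access.
const ANONYMOUS_HORDE_KEY = "0000000000";

// Hypothetical helper: pick the key to send, given the parsed contents of secrets.json.
function resolveHordeKey(secrets) {
  const key =
    secrets && typeof secrets.horde_key === "string"
      ? secrets.horde_key.trim()
      : "";
  // Fall back to anonymous access when no usable key has been saved.
  return key.length > 0 ? key : ANONYMOUS_HORDE_KEY;
}

console.log(resolveHordeKey({ horde_key: "YourKeyHere" })); // YourKeyHere
console.log(resolveHordeKey({})); // 0000000000
```

The function takes an already-parsed object so that the sketch stays independent of how the server actually loads `secrets.json`.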

This must be done AFTER completing all installation steps above.

- Make sure your STMP server is running.

- Run `Remote-Link.cmd` to download `cloudflared.exe` (only one time, 57MB).

- The Cloudflared server will auto-start and generate a random tunnel URL for your STMP server.

- Copy the URL displayed in the middle of the large box in the center of the console window.

- DO NOT CLOSE THE CLOUDFLARE CONSOLE WINDOW

- Share the generated cloudflared URL with the guest users.

- Users will be able to connect directly and securely to your PC by opening the URL in their browser.

- Users can change their display name at any time using either of the inputs at the top of the respective chat displays.

- You can have a different name for the User Chat and AI Chat.

- Usernames are stored in browser localStorage.

- Chatting can be done in either chat window by typing into the appropriate box and then either pressing the Send button (✏️) or pressing Enter.

- `Shift+Enter` can be used to add newlines to the input.

- Markdown formatting is respected.

- Some limited HTML styling is also possible inside user messages.

- Users with the Host role can hover over any chat message in either chat to see editing and deletion buttons.
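As an illustration of keeping a separate display name per chat in browser storage, here is a rough sketch. The storage keys and function names are hypothetical (STMP's real key names are not given here), and a small in-memory stand-in replaces `localStorage` so the example also runs outside a browser.

```javascript
// In-memory stand-in exposing the same getItem/setItem calls as window.localStorage.
function createMemoryStorage() {
  const data = new Map();
  return {
    getItem: (key) => (data.has(key) ? data.get(key) : null),
    setItem: (key, value) => {
      data.set(key, String(value));
    },
  };
}

// HYPOTHETICAL key names, one per chat window.
const NAME_KEYS = { userChat: "userChatName", aiChat: "aiChatName" };

function saveDisplayName(storage, chat, name) {
  if (!Object.prototype.hasOwnProperty.call(NAME_KEYS, chat)) {
    throw new Error(`Unknown chat: ${chat}`);
  }
  const trimmed = name.trim();
  if (trimmed.length === 0) throw new Error("Display name cannot be empty");
  storage.setItem(NAME_KEYS[chat], trimmed);
  return trimmed;
}

function loadDisplayName(storage, chat, fallback = "Guest") {
  const stored = storage.getItem(NAME_KEYS[chat]);
  return stored === null ? fallback : stored;
}

// Usage: different names for the User Chat and the AI Chat.
const demoStorage = createMemoryStorage();
saveDisplayName(demoStorage, "userChat", "Alice");
saveDisplayName(demoStorage, "aiChat", "Sir Alice the Bold");
console.log(loadDisplayName(demoStorage, "userChat"), "/", loadDisplayName(demoStorage, "aiChat"));
```

In a browser, `window.localStorage` could be passed in place of the stand-in, since it offers the same `getItem` and `setItem` methods.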

The host will see the following controls:

AI Config Section

- `Mode` can be clicked to switch between TC/CC mode and HordeAI mode.

  - 📑 = Completions

  - 🧟 = Horde

- `Context` defines how long your API prompt should be. Longer = more chat history sent, but slower processing times.

- `Response` defines how many tokens long the AI response can be.

- `Streaming` toggles streamed responses on or off.

- `AutoAI` toggles whether the AI should respond to every user input, or only on command from the Host.

- `Instruct` sets the type of instruct sequences to use when crafting the API prompt.

- `Sampler` sets the hyperparameter preset, which affects the response style.

- `API` selector to choose which LLM API to use, and an `Edit` button to change its configuration.

- `Models` allows selection of models served by the connected API.
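To make the trade-off between `Context` and `Response` concrete, the sketch below trims chat history to a token budget. It is only an illustration under stated assumptions: the four-characters-per-token estimate is a crude placeholder for a real tokenizer, and reserving the `Response` size out of the `Context` size is an assumption about how the two settings interact, not documented STMP behavior.

```javascript
// ASSUMPTION: roughly 4 characters per token; a real tokenizer is model-specific.
function estimateTokens(text) {
  return Math.ceil(text.length / 4);
}

// Keep the newest messages that fit into (contextSize - responseSize) tokens.
function trimHistory(messages, contextSize, responseSize) {
  let budget = contextSize - responseSize;
  const kept = [];
  // Walk backwards so the most recent messages are kept first.
  for (let i = messages.length - 1; i >= 0; i--) {
    const cost = estimateTokens(messages[i]);
    if (cost > budget) break;
    budget -= cost;
    kept.unshift(messages[i]);
  }
  return kept;
}

const demoHistory = ["a".repeat(40), "b".repeat(40), "c".repeat(40)];
console.log(trimHistory(demoHistory, 30, 10).length); // 2
```

A larger `Context` value lets more of the older messages survive the trim, at the cost of a longer prompt for the API to process.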

Insertions Section

- `System Prompt` defines what will be placed at the very top of the prompt.

- `Author Note (D4)` defines what will be inserted as a system message at Depth 4 in the prompt.

- `Final Instruction (D1, "JB")` defines what to send as a system message at Depth 1 in the prompt.
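The three insertion points can be pictured with a small sketch. It assumes that "Depth N" means the inserted message sits N chat-history messages from the end of the prompt; that reading, along with the `buildPrompt` and `insertAtDepth` names and the message shape, is an illustration rather than STMP's actual implementation.

```javascript
// Insert `entry` so that `depth` messages remain after it (depth 0 = very end).
function insertAtDepth(messages, entry, depth) {
  const index = Math.max(0, messages.length - depth);
  return [...messages.slice(0, index), entry, ...messages.slice(index)];
}

// HYPOTHETICAL prompt builder combining the three Insertions fields with the chat history.
function buildPrompt({ systemPrompt, authorNote, finalInstruction, history }) {
  let messages = history.map((m) => ({ ...m }));
  // Deeper insertion first, so the shallower one does not shift its position.
  if (authorNote) {
    messages = insertAtDepth(messages, { role: "system", content: authorNote }, 4);
  }
  if (finalInstruction) {
    messages = insertAtDepth(messages, { role: "system", content: finalInstruction }, 1);
  }
  if (systemPrompt) {
    messages = [{ role: "system", content: systemPrompt }, ...messages];
  }
  return messages;
}

const demoPrompt = buildPrompt({
  systemPrompt: "SYS",
  authorNote: "NOTE",
  finalInstruction: "FINAL",
  history: ["h0", "h1", "h2", "h3", "h4", "h5"].map((c) => ({ role: "user", content: c })),
});
console.log(demoPrompt.map((m) => m.content).join(" > "));
// SYS > h0 > h1 > NOTE > h2 > h3 > h4 > FINAL > h5
```

When the history is shorter than the requested depth, the entry simply lands at the start of the history, directly after the system prompt.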

Currently STMP supports Text Completions (TC), Chat Completions (CC), and HordeAI.

STMP has been tested with the following APIs:

- TabbyAPI

- YALS

- KoboldCPP

- Aphrodite

- Oobabooga Textgeneration Webui (OpenAI compatible mode)

- OpenRouter is supported in CC mode.

- OpenAI official API

- Anthropic's Claude

Other LLM backends that provide an OpenAI-compatible API should work with STMP.

- Select `Add new API` from the `API` selector to open the API Editing panel.

- A new panel will be displayed with new inputs:

  a. `Name` - the label you want to remember the API as

  b. `Endpoint URL` - this is the base server URL for the LLM API. If the usual URL does not work, try adding `v1/` to the end.

  c. `Key` - If your API requires a key, put it in here.

  d. `Endpoint Type` - select from Text Completions or Chat Completions as appropriate for the API endpoint.

  e. `Claude` - select this if the API is based on Anthropic's Claude model, because it needs special prompt formatting.

  f. `Close` - will cancel the API editing/creating process and return you to the main AI Config panel.

  g. `Test` - sends a simple test message to the API to get a response. This may not work on all APIs.

  h. `Save` - confirms the API addition/edit and saves it to the database.

  i. `Delete` - removes the API from the database.

- When all of the fields are filled out, press `Save` to return to the main Control panel display.
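The fields in the API editing panel map naturally onto a small record, and the `v1/` tip can be expressed as a list of URLs to try. The sketch below is illustrative only: the field names, the `"TC"`/`"CC"` values, and both helper functions are hypothetical and do not describe how STMP stores APIs in its database.

```javascript
// HYPOTHETICAL record mirroring the panel inputs; Key is optional, Claude defaults to off.
function makeApiEntry({ name, endpoint, key = "", type, claude = false }) {
  if (!name || !endpoint) throw new Error("Name and Endpoint URL are required");
  if (type !== "TC" && type !== "CC") {
    throw new Error("Endpoint Type must be TC (Text Completions) or CC (Chat Completions)");
  }
  return { name, endpoint, key, type, claude };
}

// The base URL first, then the same URL with "v1/" appended, as the panel text suggests trying.
function candidateEndpoints(baseUrl) {
  const base = baseUrl.endsWith("/") ? baseUrl : baseUrl + "/";
  return base.endsWith("/v1/") ? [base] : [base, base + "v1/"];
}

const demoEntry = makeApiEntry({
  name: "Local backend",
  endpoint: "http://localhost:5000", // placeholder address for illustration
  type: "TC",
});
console.log(candidateEndpoints(demoEntry.endpoint));
// [ 'http://localhost:5000/', 'http://localhost:5000/v1/' ]
```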

- If you want to add more presets for Instruct formats or hyperparameter Samplers, put the JSON file into the appropriate folder:

  - Samplers go in `/public/api-presets/`

  - Instruct formats go in `/public/instructFormats/`

- It's highly recommended to review the structure of the default STMP preset files.

- SillyTavern preset files will not work.

- A list of past AI Chats, click one to load it.

- Information listed with each past session item:

- AI characters in the chat history

- Number of messages in AI Chat

- Timestamp for the last message

- The list is ordered in reverse chronological order (newest chats first).
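The ordering and per-item summary of the past chats list could look roughly like this. The session record shape (`characters`, `messageCount`, `lastMessageAt`) is a hypothetical stand-in for whatever STMP actually stores.

```javascript
// Sort a copy of the sessions so the most recent last-message timestamp comes first.
function sortNewestFirst(sessions) {
  return [...sessions].sort(
    (a, b) => new Date(b.lastMessageAt).getTime() - new Date(a.lastMessageAt).getTime()
  );
}

// One-line summary with the three pieces of information shown per past session.
function describeSession(session) {
  return `${session.characters.join(", ")} | ${session.messageCount} messages | ${session.lastMessageAt}`;
}

const demoSessions = [
  { characters: ["Aria"], messageCount: 12, lastMessageAt: "2024-01-05T10:00:00Z" },
  { characters: ["Aria", "Bex"], messageCount: 40, lastMessageAt: "2024-03-01T18:30:00Z" },
];
for (const session of sortNewestFirst(demoSessions)) {
  console.log(describeSession(session));
}
```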

- Place any SillyTavern compatible character card into the `/characters/` folder and restart the server.

- Drag-drop a v2 card spec character card over the chat to import.

- Characters can be added, removed, edited, or swapped out inside the 📜 panel.

- Changing the character roster does not require resetting the chat.

- Hosts will see a (🧠) next to Character Selectors. This will open a popup with the character definitions.

- STMP handles three types of character definitions:

  - `Name` - What is displayed as the character's name in the chat.

  - `Description` - What goes into the prompt at either the top, or at D4 if the 'D4 Char Defs' box is checked.

  - `First Message` - What gets auto-inserted into a new chat with that character.

- `Embedded Lorebook` is currently not used by STMP, but is visible for user reference.

- (👁️) at the top of the character definition panel will show Legacy Fields.

- Legacy Fields are read-only. STMP does not use them.

- Legacy fields = Personality, Example Messages, and Scenario.

- `Save` will update the character definitions.

- `Close` will close the popup with no changes.

Why are Personality, Scenario, and Example Messages considered 'Legacy'?

- Personality and Scenario are outdated distinctions without a meaningful purpose, and should be incorporated into the Description.

- Example Message can also be incorporated into the Description. We recommend doing so in the form of AliChat.

- (🎨) (all users) Three inputs for Hue, Saturation, and Lightness to define the base color for STMP UI theming.

- (🎶) (all users) is a toggle that will play/stop a looping background audio track ("Cup of COhee" by Deffcolony). This helps mobile users keep their websocket connection active when they minimize the app.

- (🤐) is a toggle to completely disable guest inputs for both chats.

- (🖌️) is a toggle to allow or deny markdown image display.

- (📢) lets the Host send a large notification to all connected users.
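For the Hue, Saturation, and Lightness inputs of the (🎨) control, the sketch below shows one way a base color and a few derived shades could be turned into CSS color strings. The function names and the fixed lightness offsets are assumptions made for illustration; how STMP derives its theme from the base color is not described here.

```javascript
function clamp(value, min, max) {
  return Math.min(max, Math.max(min, value));
}

// Build a CSS hsl() string, wrapping hue into 0..359 and clamping the percentages.
function baseColor(hue, saturation, lightness) {
  const h = ((hue % 360) + 360) % 360;
  const s = clamp(saturation, 0, 100);
  const l = clamp(lightness, 0, 100);
  return `hsl(${h}, ${s}%, ${l}%)`;
}

// HYPOTHETICAL palette: shift lightness around the base value for darker/lighter accents.
function themePalette(hue, saturation, lightness) {
  return {
    base: baseColor(hue, saturation, lightness),
    darker: baseColor(hue, saturation, lightness - 15),
    lighter: baseColor(hue, saturation, lightness + 15),
  };
}

console.log(themePalette(200, 50, 40));
// { base: 'hsl(200, 50%, 40%)', darker: 'hsl(200, 50%, 25%)', lighter: 'hsl(200, 50%, 55%)' }
```

Working in HSL keeps the three inputs independent: changing only the hue recolors the whole palette without altering its contrast.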

- The right side of the screen contains two user lists, one for each chat.

- Users with the Host role will have a 👑 next to their name.

- The AI Characters will have a 🤖 next to their names in the AI Chat User List.

- (🖼️) toggles the chat windows between three modes: maximize AI chat >> maximize User Chat >> return to normal dual display.

- (🔃) forces a page refresh.

- (▶️/⏸️) allows for manual disconnect/reconnect to the server.

- (🔑) opens a text box for input of the Host key in order to gain the Host role.

- Once a valid key has been entered, the page will automatically refresh to show the host controls.

- The Host key can be found in the server console at startup.

- After the user enters the key and presses Enter, their page will refresh and they will see the Host controls.

- (⛔) clears the saved Usernames and UniqueID from localStorage.

- If you are not the primary Host you will lose any roles you were given.

- You will be asked to register a new username next time you sign in on the same browser.

- (🗑️) Host only, clears either chat.

- Clearing the AI Chat will automatically create a new chat with the selected Character.

- (🧹) All users, visually clears the chat to reduce UI lag, but does not actually destroy anything.

- (⏳) Sets the chat 'cooldown' for regular members.

- During the delay period the (✏️) for that chat will become (🚫), and no input will be possible.

- (🤖) Manually triggering an AI response without user Input

- (✂️) Deleting the last message in the AI Chat

- (🔄) Retry, i.e. Remove the last chat message and prompt the AI character to give a new response.
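The difference between the current Retry behavior (remove the last chat message) and a retry that removes only the last AI response can be sketched as below. The `entity` field on each message is a hypothetical piece of metadata introduced for this illustration; it is not a documented part of STMP's message format.

```javascript
// Current behavior as described: drop whatever the last message is.
function removeLastMessage(messages) {
  return messages.slice(0, -1);
}

// Entity-aware variant: drop only the most recent message written by the AI.
// ASSUMPTION: each message carries an `entity` field of "user" or "AI".
function removeLastAiResponse(messages) {
  for (let i = messages.length - 1; i >= 0; i--) {
    if (messages[i].entity === "AI") {
      return [...messages.slice(0, i), ...messages.slice(i + 1)];
    }
  }
  return [...messages]; // no AI message found, nothing to retry
}

const demoChat = [
  { entity: "user", content: "Hello" },
  { entity: "AI", content: "Greetings!" },
  { entity: "user", content: "Wait, one more thing" },
];
console.log(removeLastMessage(demoChat).map((m) => m.content)); // [ 'Hello', 'Greetings!' ]
console.log(removeLastAiResponse(demoChat).map((m) => m.content)); // [ 'Hello', 'Wait, one more thing' ]
```

With several users typing at once, the last message in the chat is often a user message, which is where the two approaches diverge.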

- Smarter retry logic (add entity metadata to each chat message; only remove the last AI response)

- Toggle for locking AI chat for users? (this is already kind of done with AutoResponse off)

- Turn-based Mode with Drag-sort list to set input Order

- Ability to rename chats.

- ability for Host to edit a User's role from the UI

- ability to change the max length of chat inputs (currently 1000 characters)

- make control for AI replying every X messages

- make control for host, to autoclear chat every X messages

- disallow names that are only spaces, punctuations, or non ASCII (non-Latin?) characters

- require at least 3? A-Za-z characters

- disallow registering of names that are already in the DB

- character creation in-app

- create instruct preset types in-app

- I/O for full app setup presets (includes: single API without key, )

- basic use of character-embedded lorebooks

- make control for guests to clear DISPLAY of either chat (without affecting the chat database) to prevent browser lag

- auto-parse reasoning into a collapsible container

- highlight exact username matches in AI response with their color

- fade out users in user chat list who haven't chatted in X minutes (add a css class with opacity 50%)

- fade out users in ai chat list who are no longer connected, or are faded out in user list (same)

- show which users in the User Chat are using which name in the AI Chat

- add a link to the User message that the AI is responding to at the top of each AI message.

- When an AI response is triggered by UserX's input, UserX sees that response highlighted in the chat

- add external folder selector to source characters from.

- Multiple AI characters active at once (group chats)

- export chats as text, JSON, or SillyTavern-compatible JSONL?

- UI themes

- Bridge extension for SillyTavern to enable intra-server communication?

- send images from local drive to user-only chat
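The name-related items in the list above (at least three A-Za-z characters, no duplicates of names already in the DB) could be checked along these lines. This is a sketch of a planned feature, not existing STMP code: the function name, the return shape, and the case-insensitive duplicate comparison are all assumptions.

```javascript
// HYPOTHETICAL validator for new display names.
function validateName(name, existingNames = []) {
  const trimmed = name.trim();
  // Count plain Latin letters; names made only of spaces or punctuation have none.
  const letterCount = (trimmed.match(/[A-Za-z]/g) || []).length;
  if (letterCount < 3) {
    return { ok: false, reason: "Name needs at least 3 A-Za-z characters" };
  }
  // ASSUMPTION: duplicates are detected case-insensitively.
  const taken = existingNames.some((n) => n.toLowerCase() === trimmed.toLowerCase());
  if (taken) {
    return { ok: false, reason: "Name is already registered" };
  }
  return { ok: true, name: trimmed };
}

console.log(validateName("   "));          // rejected: no letters
console.log(validateName("Bob"));          // accepted
console.log(validateName("bob", ["Bob"])); // rejected: already registered
```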


Alternative AI tools for STMP

Similar Open Source Tools


roam-extension-live-ai-assistant

Live AI is an AI Assistant tailor-made for Roam, providing access to the latest LLMs directly in Roam blocks. Users can interact with AI to extend their thinking, explore their graph, and chat with structured responses. The tool leverages Roam's features to write prompts, query graph parts, and chat with content. Users can dictate, translate, transform, and enrich content easily. Live AI supports various tasks like audio and video analysis, PDF reading, image generation, and web search. The tool offers features like Chat panel, Live AI context menu, and Ask Your Graph agent for versatile usage. Users can control privacy levels, compare AI models, create custom prompts, and apply styles for response formatting. Security concerns are addressed by allowing users to control data sent to LLMs.

vigenair

ViGenAiR is a tool that harnesses the power of Generative AI models on Google Cloud Platform to automatically transform long-form Video Ads into shorter variants, targeting different audiences. It generates video, image, and text assets for Demand Gen and YouTube video campaigns. Users can steer the model towards generating desired videos, conduct A/B testing, and benefit from various creative features. The tool offers benefits like diverse inventory, compelling video ads, creative excellence, user control, and performance insights. ViGenAiR works by analyzing video content, splitting it into coherent segments, and generating variants following Google's best practices for effective ads.

Discord-AI-Selfbot

Discord-AI-Selfbot is a Python-based Discord selfbot that uses the `discord.py-self` library to automatically respond to messages mentioning its trigger word using Groq API's Llama-3 model. It functions as a normal Discord bot on a real Discord account, enabling interactions in DMs, servers, and group chats without needing to invite a bot. The selfbot comes with features like custom AI instructions, free LLM model usage, mention and reply recognition, message handling, channel-specific responses, and a psychoanalysis command to analyze user messages for insights on personality.

aiyabot

AIYA is a Discord bot interface for Stable Diffusion, offering features like live preview, negative prompts, model swapping, image generation, image captioning, image resizing, and more. It supports various options and bonus features to enhance user experience. Users can set per-channel defaults, view stats, manage queues, upscale images, and perform various commands on images. AIYA requires setup with AUTOMATIC1111's Stable Diffusion AI Web UI or SD.Next, and can be deployed using Docker with additional configuration options. Credits go to AUTOMATIC1111, vladmandic, harubaru, and various contributors for their contributions to AIYA's development.

blurt

Blurt is a Gnome shell extension that enables accurate speech-to-text input in Linux. It is based on the command line utility NoteWhispers and supports Gnome shell version 48. Users can transcribe speech using a local whisper.cpp installation or a whisper.cpp server. The extension allows for easy setup, start/stop of speech-to-text input with key bindings or icon click, and provides visual indicators during operation. It offers convenience by enabling speech input into any window that allows text input, with the transcribed text sent to the clipboard for easy pasting.

node_characterai

Node.js client for the unofficial Character AI API, an awesome website which brings characters to life with AI! This repository is inspired by RichardDorian's unofficial node API. Though, I found it hard to use and it was not really stable and archived. So I remade it in javascript. This project is not affiliated with Character AI in any way! It is a community project. The purpose of this project is to bring and build projects powered by Character AI. If you like this project, please check their website.

ChatGPT-Telegram-Bot

The ChatGPT Telegram Bot is a powerful Telegram bot that utilizes various GPT models, including GPT3.5, GPT4, GPT4 Turbo, GPT4 Vision, DALL·E 3, Groq Mixtral-8x7b/LLaMA2-70b, and Claude2.1/Claude3 opus/sonnet API. It enables users to engage in efficient conversations and information searches on Telegram. The bot supports multiple AI models, online search with DuckDuckGo and Google, user-friendly interface, efficient message processing, document interaction, Markdown rendering, and convenient deployment options like Zeabur, Replit, and Docker. Users can set environment variables for configuration and deployment. The bot also provides Q&A functionality, supports model switching, and can be deployed in group chats with whitelisting. The project is open source under GPLv3 license.

ai_rpg

AI RPG is a Node.js application that transforms an OpenAI-compatible language model into a solo tabletop game master. It tracks players, locations, regions, and items; renders structured prompts with Nunjucks; and supports ComfyUI image pipeline. The tool offers a rich region and location generation system, NPC and item tracking, party management, levels, classes, stats, skills, abilities, and more. It allows users to create their own world settings, offers detailed NPC memory system, skill checks with real RNG, modifiable needs system, and under-the-hood disposition system. The tool also provides event processing, context compression, image uploading, and support for loading SillyTavern lorebooks.

gpt-subtrans

GPT-Subtrans is an open-source subtitle translator that utilizes large language models (LLMs) as translation services. It supports translation between any language pairs that the language model supports. Note that GPT-Subtrans requires an active internet connection, as subtitles are sent to the provider's servers for translation, and their privacy policy applies.

smartcat

Smartcat is a CLI interface that brings language models into the Unix ecosystem, allowing power users to leverage the capabilities of LLMs in their daily workflows. It features a minimalist design, seamless integration with terminal and editor workflows, and customizable prompts for specific tasks. Smartcat currently supports OpenAI, Mistral AI, and Anthropic APIs, providing access to a range of language models. With its ability to manipulate file and text streams, integrate with editors, and offer configurable settings, Smartcat empowers users to automate tasks, enhance code quality, and explore creative possibilities.

CLI

Bito CLI provides a command line interface to the Bito AI chat functionality, allowing users to interact with the AI through commands. It supports complex automation and workflows, with features like long prompts and slash commands. Users can install Bito CLI on Mac, Linux, and Windows systems using various methods. The tool also offers configuration options for AI model type, access key management, and output language customization. Bito CLI is designed to enhance user experience in querying AI models and automating tasks through the command line interface.

jaison-core

J.A.I.son is a Python project designed for generating responses using various components and applications. It requires specific plugins like STT, T2T, TTSG, and TTSC to function properly. Users can customize responses, voice, and configurations. The project provides a Discord bot, Twitch events and chat integration, and VTube Studio Animation Hotkeyer. It also offers features for managing conversation history, training AI models, and monitoring conversations.

llm-subtrans

LLM-Subtrans is an open source subtitle translator that utilizes LLMs as a translation service. It supports translating subtitles between any language pairs supported by the language model. The application offers multiple subtitle formats support through a pluggable system, including .srt, .ssa/.ass, and .vtt files. Users can choose to use the packaged release for easy usage or install from source for more control over the setup. The tool requires an active internet connection as subtitles are sent to translation service providers' servers for translation.

lovelaice

Lovelaice is an AI-powered assistant for your terminal and editor. It can run bash commands, search the Internet, answer general and technical questions, complete text files, chat casually, execute code in various languages, and more. Lovelaice is configurable with API keys and LLM models, and can be used for a wide range of tasks requiring bash commands or coding assistance. It is designed to be versatile, interactive, and helpful for daily tasks and projects.

aider-composer

Aider Composer is a VSCode extension that integrates Aider into your development workflow. It allows users to easily add and remove files, toggle between read-only and editable modes, review code changes, use different chat modes, and reference files in the chat. The extension supports multiple models, code generation, code snippets, and settings customization. It has limitations such as lack of support for multiple workspaces, Git repository features, linting, testing, voice features, in-chat commands, and configuration options.


For similar jobs


telegram-summary-bot

Telegram group summary bot is a tool designed to help users manage large group chats on Telegram by summarizing and searching messages. It allows users to easily read and search through messages in groups with high message volume. The bot stores chat history in a database and provides features such as summarizing messages, searching for specific words, answering questions based on group chat, and checking bot status. Users can deploy their own instance of the bot to avoid limitations on message history and interactions with other bots. The tool is free to use and integrates with services like Cloudflare Workers and AI Gateway for enhanced functionality.