Best AI tools for understanding

20 - AI Tool Sites

AI Terms and Conditions Reader

The AI Terms and Conditions Reader is an AI tool designed to analyze and summarize terms and conditions from various websites. It aims to provide users with a clear understanding of the legal agreements they agree to online. The tool reviews and highlights key points, potential concerns, and notable features of the terms of service and privacy policies. Users can access detailed insights on data retention, data sharing practices, user rights, and potential implications for privacy and legal recourse.

Symanto

Symanto describes itself as a global leader in Human AI, specializing in human language understanding and generation. The company's proprietary platform integrates with common LLMs and works across industries and languages. Symanto's technology aims to let computers connect with people like friends, fostering trust-filled interactions full of emotion, empathy, and understanding. The company's clients span the automotive, consulting, healthcare, consumer, and sports industries, among others.

Swimm

Swimm is an AI-powered code understanding tool designed to help developers maintain and modernize legacy codebases efficiently. It provides contextual answers to complex coding questions, captures and utilizes developer knowledge, and offers static analysis of codebases to enhance documentation and understanding. Swimm aims to streamline the software development lifecycle by preserving vital codebase knowledge and improving developer productivity and code quality.

MiniGPT-4

MiniGPT-4 is an AI model that connects a frozen vision encoder to a frozen large language model (LLM) through a single trainable projection layer to enhance vision-language understanding. It can generate detailed image descriptions, create websites from handwritten drafts, write stories and poems inspired by images, suggest solutions to problems shown in images, and teach users how to cook based on food photos. Because only the projection layer is trained, MiniGPT-4 is computationally efficient to train and easy to use, which makes it practical for a wide range of applications.

IndieFeel

IndieFeel is a website that provides interpretations of songs, movies, and poems. It uses large language models to generate these interpretations, which can be helpful for understanding the meaning of a work of art or getting a different perspective on it. The website is still in beta, but it already has a number of interpretations available, and the quality of the interpretations is generally good.

Amori

Amori is a Stanford-founded AI dating app with the mission to foster meaningful connections through voice. By learning about you from your conversations, Amori helps you navigate your relationships and connect with others on a deeper level.

Dreamora

Dreamora is an AI-powered dream interpretation application that provides accurate and comprehensive interpretations of dreams. It utilizes advanced artificial intelligence techniques and draws upon the knowledge of renowned dream interpreters like Ibn Sirin and Al-Nabulsi. By simply entering your dream into the application, you can receive a free and instant interpretation within seconds. Dreamora's interpretations consider all aspects of your dream, including the location, characters, and emotions, to offer the most precise results possible.

expert.ai

expert.ai is an AI platform that offers natural language technologies and responsible AI integrations across industries such as insurance, banking, and publishing. The platform helps streamline operations, extract critical data, drive revelations, ensure compliance, and analyze complex documents. It provides solutions for insurers, pharmaceutical companies, publishers, and financial services companies, leveraging a hybrid AI approach and a purpose-built natural language workflow. expert.ai's Green Glass Approach focuses on transparent, sustainable, practical, and human-centered AI solutions.

Image Describer

Image Describer is an AI-powered image description generator: users upload an image, select a use case, add any extra information, and receive a detailed description of the image's content. It can summarize what the picture shows and describe the physical objects, emotions, and atmosphere within it. The tool also offers text-to-speech output to help visually impaired people understand image content.

NSFW AI Images Generator

The NSFW AI Images Generator is an AI tool that specializes in crafting dream female portraits and generating NSFW AI chat conversations. It offers users the ability to create ideal beauty images and interact with an AI girlfriend for companionship. The tool aims to provide users with a unique and personalized experience through AI-generated content.

Base64.ai

Base64.ai is an automated document processing API that offers a no-code AI solution for understanding documents, photos, and videos. It provides a wide range of AI document processing features and solutions for various industries. With more than 400 integrations, Base64.ai aims for fast, secure, and accurate data extraction, and it is certified for ISO, HIPAA, SOC 2 Type 1 & 2, and GDPR compliance. The platform lets users add new document types, integrations, and business rules to tailor the AI to their specific needs. Base64.ai also offers PII redaction and human-in-the-loop verification, and it is accessible via API, RPA systems, scanners, web, and mobile apps.

Insurance Policy AI

This application utilizes AI technology to simplify the complex process of understanding health insurance policies. Unlike other apps that focus on insurance search and comparison, this app specializes in deciphering the intricate language found in policies. It provides instant access to policy analysis with a one-time payment, empowering users to gain clarity and make informed decisions regarding their health insurance coverage.

Visual Computing & Artificial Intelligence Lab at TUM

The Visual Computing & Artificial Intelligence Lab at TUM is a research group working at the intersection of computer vision, computer graphics, and artificial intelligence. Its mission is to obtain highly realistic digital replicas of the real world, including detailed 3D geometry, surface textures, and material definitions for both static and dynamic scenes. The lab builds heavily on advances in modern machine learning and develops novel methods for learning strong priors that drive 3D reconstruction techniques. Its ultimate goal is holographic representations that are visually indistinguishable from the real world, ideally captured with a simple webcam or mobile phone. The lab considers this a critical component of immersive augmented and virtual reality applications and expects it to have a substantial positive impact on modern digital societies.

Papertalk.io

Papertalk.io is a research tool that helps users find, understand, and apply research papers. It uses AI to generate clear, concise explanations of papers, and it provides a chatbot to answer any questions users may have. Papertalk.io is designed to make research more accessible and approachable for everyone, regardless of their academic background.

Picovoice

Picovoice is an on-device Voice AI and local LLM platform designed for enterprises. It offers a range of voice AI and LLM solutions, including speech-to-text, noise suppression, speaker recognition, speech-to-index, wake word detection, and more. Picovoice empowers developers to build virtual assistants and AI-powered products with compliance, reliability, and scalability in mind. The platform allows enterprises to process data locally without relying on third-party remote servers, ensuring data privacy and security. With a focus on cutting-edge AI technology, Picovoice enables users to stay ahead of the curve and adapt quickly to changing customer needs.

Abridge

Abridge is a generative AI tool for clinical conversations in healthcare. It turns patient-clinician interactions into structured clinical note drafts in real time. The platform saves clinicians time, produces clinically accurate summaries across specialties, and supports multiple languages. Abridge is integrated directly inside Epic, so clinicians can use it within existing workflows to improve efficiency and patient care.

Twelve Labs

Twelve Labs offers a multimodal AI platform that provides APIs for searching, classifying, and generating videos. Its AI models can understand the content of videos, including objects, actions, and speech, and can be used to create applications such as video search engines, video recommendation systems, and video editing tools. The platform is designed to be easy to use and can be integrated with a variety of programming languages and frameworks.

InstantPersonas

InstantPersonas is an AI-powered tool that allows users to generate detailed user personas in seconds. It helps marketers and business owners understand their audience better by providing real-time insights into the thoughts of their audience. With InstantPersonas, users can create persona-driven content that resonates with their target audience, ultimately improving their content creation process and marketing strategies. The tool offers industry-leading AI capabilities at an affordable price, making it a valuable asset for businesses looking to enhance their marketing efforts.

Soulreply

Soulreply is an AI-powered mental health assistant designed to provide support and guidance to individuals seeking help with their mental well-being. The platform offers personalized recommendations, resources, and tools to help users manage stress, anxiety, and other mental health challenges. By leveraging artificial intelligence and natural language processing, Soulreply aims to create a safe and supportive space for users to explore their emotions and improve their overall mental wellness.

Ask a Philosopher

Ask a Philosopher is a website where users can submit questions to be answered by a philosopher. The platform allows individuals to seek philosophical insights and perspectives on various topics. Users can engage in thoughtful discussions and gain a deeper understanding of complex issues through the responses provided by the philosophers. The website aims to promote critical thinking and intellectual exploration by facilitating dialogue between users and experts in philosophy.

20 - Open Source AI Tools

Awesome-LLMs-for-Video-Understanding

Awesome-LLMs-for-Video-Understanding is a repository dedicated to exploring Video Understanding with Large Language Models. It provides a comprehensive survey of the field, covering models, pretraining, instruction tuning, and hybrid methods. The repository also includes information on tasks, datasets, and benchmarks related to video understanding. Contributors are encouraged to add new papers, projects, and materials to enhance the repository.

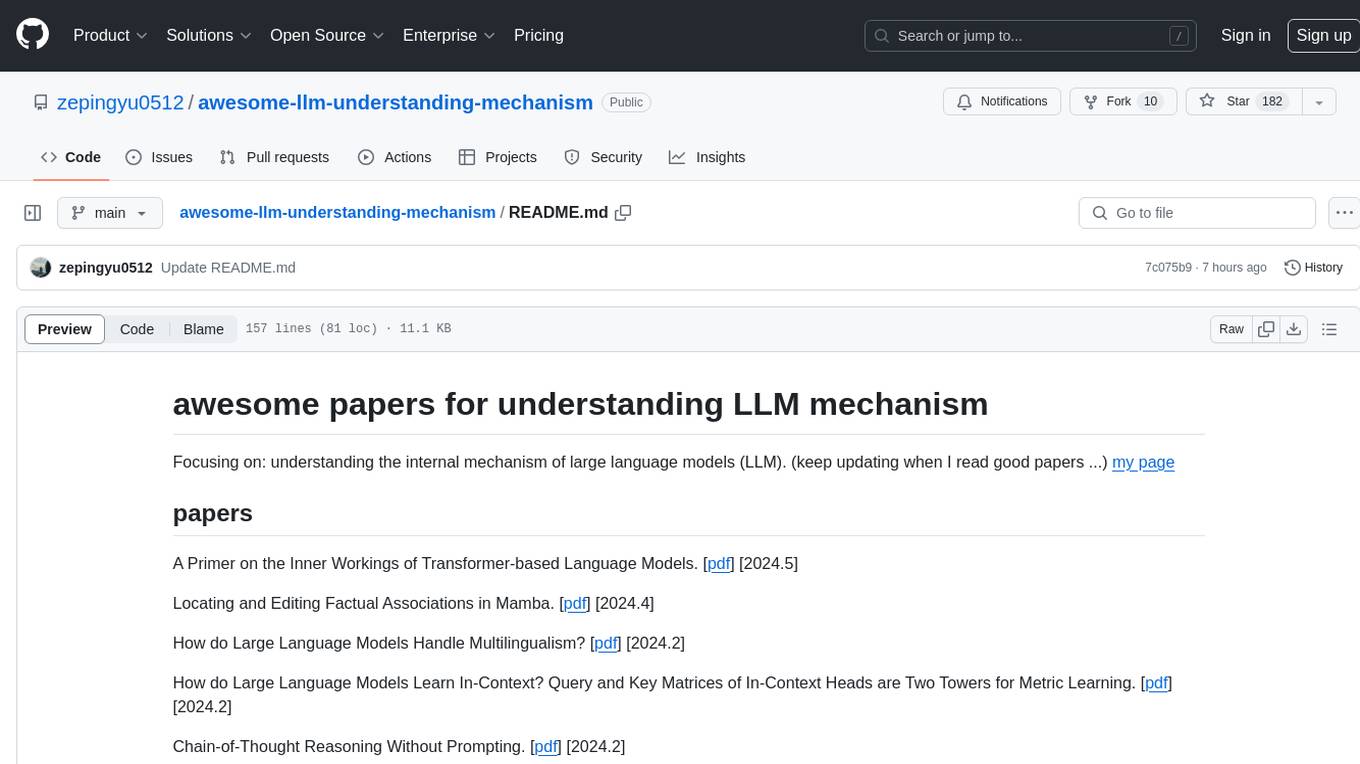

awesome-llm-understanding-mechanism

This repository collects papers on understanding the internal mechanisms of large language models (LLMs). It includes research on topics such as how LLMs handle multilingualism, how they learn in context, and how they store and recall factual associations. Through this curated list of papers and surveys, the repository aims to provide insight into the inner workings of transformer-based language models.

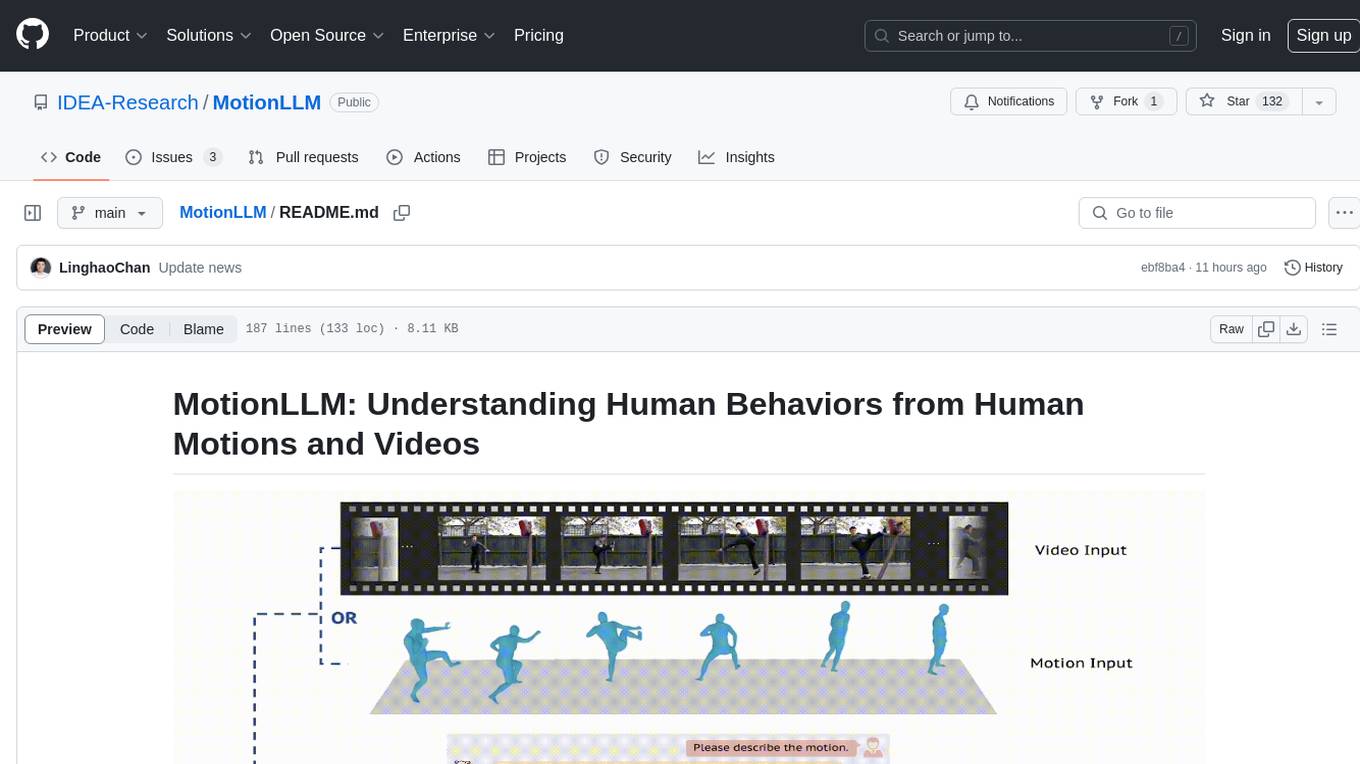

MotionLLM

MotionLLM is a framework for human behavior understanding that leverages Large Language Models (LLMs) to jointly model videos and motion sequences. It provides a unified training strategy, dataset MoVid, and MoVid-Bench for evaluating human behavior comprehension. The framework excels in captioning, spatial-temporal comprehension, and reasoning abilities.

shell-ai

Shell-AI (`shai`) is a CLI utility that enables users to input commands in natural language and receive single-line command suggestions. It leverages natural language understanding and interactive CLI tools to enhance command line interactions. Users can describe tasks in plain English and receive corresponding command suggestions, making it easier to execute commands efficiently. Shell-AI supports cross-platform usage and is compatible with Azure OpenAI deployments, offering a user-friendly and efficient way to interact with the command line.

ShapeLLM

ShapeLLM is presented as the first 3D multimodal large language model designed for embodied interaction, exploring universal 3D object understanding from 3D point clouds and language. It supports single-view colored point cloud input and introduces 3D MM-Vet, a robust 3D question-answering benchmark that includes several variants. Its point encoder, ReCon++, achieves state-of-the-art performance across a range of representation learning tasks. The repository covers training, zero-shot understanding, visual grounding, few-shot learning, and zero-shot evaluation on 3D MM-Vet.

AiLearning-Theory-Applying

This repository provides a comprehensive guide to understanding and applying artificial intelligence (AI) theory, including basic knowledge, machine learning, deep learning, and natural language processing (BERT). It features detailed explanations, annotated code, and datasets to help users grasp the concepts and implement them in practice. The repository is continuously updated to ensure the latest information and best practices are covered.

DAMO-ConvAI

DAMO-ConvAI is the official repository for Alibaba DAMO Conversational AI. It contains the codebase for various conversational AI models and tools developed by Alibaba Research. These models and tools cover a wide range of tasks, including natural language understanding, natural language generation, dialogue management, and knowledge graph construction. DAMO-ConvAI is released under the MIT license and is available for use by researchers and developers in the field of conversational AI.

LLMBook-zh.github.io

This book aims to give readers a comprehensive understanding of large language model technology, including its basic principles, key technologies, and application prospects. It is intended to help readers keep exploring and improving LLM technology through research and practice, and to contribute to the development of artificial intelligence.

GPT4Point

GPT4Point is a unified framework for point-language understanding and generation. It aligns 3D point clouds with language, providing a comprehensive solution for tasks such as 3D captioning and controlled 3D generation. The project includes an automated point-language dataset annotation engine, a novel object-level point cloud benchmark, and a 3D multi-modality model. Users can train and evaluate models using the provided code and datasets, with a focus on improving models' understanding capabilities and facilitating the generation of 3D objects.

Groma

Groma is a grounded multimodal assistant that excels at region understanding and visual grounding. It can take user-defined regions as input and generate contextually grounded long-form responses. Groma introduces a distinctive paradigm for multimodal large language models that relies on visual tokenization for localization, and it achieves state-of-the-art performance on referring expression comprehension benchmarks. The repository provides pretrained model weights and instructions for data preparation, training, inference, and evaluation. Its training pipeline spans detection pretraining, alignment pretraining, and instruction finetuning for instruction following, and users can customize training by starting from intermediate checkpoints.

MiniCPM-V

MiniCPM-V is a series of end-side (on-device) multimodal LLMs designed for vision-language understanding. The models take image and text inputs and produce high-quality text outputs. The series includes MiniCPM-Llama3-V 2.5, an 8B-parameter model reported to surpass some proprietary models, and MiniCPM-V 2.0, a lighter 2B-parameter model. The models support more than 30 languages, deploy efficiently on end-side devices, process high-resolution images efficiently, and have strong OCR capabilities. They achieve state-of-the-art results on various benchmarks and show reduced hallucination in text generation.

VideoLLaMA2

VideoLLaMA 2 is a project focused on advancing spatial-temporal modeling and audio understanding in video LLMs. It provides tools for multiple-choice video QA, open-ended video QA, and video captioning. The project offers a model zoo with different visual encoder and language decoder configurations. It includes training and evaluation guides, inference support for both video and image inputs, and a demo setup for running a video-based large language model web demonstration.

Awesome-Tabular-LLMs

This repository is a collection of papers on Tabular Large Language Models (LLMs) specialized for processing tabular data. It includes surveys, models, and applications related to table understanding tasks such as Table Question Answering, Table-to-Text, Text-to-SQL, and more. The repository categorizes the papers based on key ideas and provides insights into the advancements in using LLMs for processing diverse tables and fulfilling various tabular tasks based on natural language instructions.

ragflow

RAGFlow is an open-source Retrieval-Augmented Generation (RAG) engine that combines deep document understanding with Large Language Models (LLMs) to provide accurate question-answering capabilities. It offers a streamlined RAG workflow for businesses of all sizes, enabling them to extract knowledge from unstructured data in various formats, including Word documents, slides, Excel files, images, and more. RAGFlow's key features include deep document understanding, template-based chunking, grounded citations with reduced hallucinations, compatibility with heterogeneous data sources, and an automated and effortless RAG workflow. It supports multiple recall paired with fused re-ranking, configurable LLMs and embedding models, and intuitive APIs for seamless integration with business applications.
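"Multiple recall paired with fused re-ranking" refers to gathering candidate passages through several retrieval paths (for example keyword search and vector search) and merging them into a single ranking. The sketch below illustrates the idea with reciprocal rank fusion, a generic fusion technique chosen here for illustration; it is an assumption, not RAGFlow's own re-ranking code, and the function name and document IDs are hypothetical.

```python
from collections import defaultdict


def reciprocal_rank_fusion(ranked_lists, k=60):
    """Fuse several ranked lists of document IDs into one ranking.

    Hypothetical helper (not part of RAGFlow). Each document scores
    sum(1 / (k + rank)) over every list it appears in, with rank starting
    at 1; k=60 is the constant commonly used for reciprocal rank fusion.
    """
    scores = defaultdict(float)
    for ranking in ranked_lists:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] += 1.0 / (k + rank)
    # Highest fused score first; ties broken by document ID for determinism.
    return sorted(scores, key=lambda d: (-scores[d], d))


# Hypothetical results from two recall paths over the same corpus.
keyword_hits = ["doc3", "doc1", "doc7"]
vector_hits = ["doc1", "doc5", "doc3"]

print(reciprocal_rank_fusion([keyword_hits, vector_hits]))
# prints ['doc1', 'doc3', 'doc5', 'doc7']
```

Documents found by both recall paths (`doc1`, `doc3`) rise to the top because their per-list scores add up, while a document found by only one path can still appear lower in the fused list. Rank-based fusion like this avoids having to normalize raw keyword and vector scores onto a common scale.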

EmoLLM

EmoLLM is a series of large language models for mental health counseling, instruction-fine-tuned from base `LLM`s to support the full counseling chain of **understanding, supporting, and helping users**. The repository lists the `LLM` fine-tuning configurations it has open-sourced.

aws-healthcare-lifescience-ai-ml-sample-notebooks

The AWS Healthcare and Life Sciences (HCLS) AI/ML Immersion Day workshops give customers hands-on experience with AWS AI/ML services, a deep understanding of how those services work, and best practices for applying AI/ML in HCLS applications. The workshops cater to all levels, from machine learning experts to developers and managers, and cover topics such as training, testing, MLOps, deployment practices, and the software development life cycle in the context of AI/ML. The repository contains notebooks that can be used in AWS Instructor-Led Labs or self-paced labs, offering a comprehensive learning experience for integrating AI/ML into applications.

QA-Pilot

QA-Pilot is an interactive chat project that uses online or local LLMs to help users quickly understand and navigate GitHub code repositories. Users can chat with public GitHub repositories (which the tool retrieves with git clone), store chat history, configure settings easily, manage multiple chat sessions, and quickly find sessions with a search function. The tool integrates with `codegraph` to view Python files and supports LLM providers such as ollama, openai, mistralai, and localai. The project is continuously updated with new features and improvements, such as migrating from `flask` to `fastapi`, adding `localai` API support, and upgrading dependencies like `langchain` and `Streamlit` to improve performance.

M.I.L.E.S

M.I.L.E.S. (Machine Intelligent Language Enabled System) is a voice assistant powered by GPT-4 Turbo that aims to go beyond the capabilities of existing assistants. It uses this language understanding to give accurate, efficient responses to user queries. It integrates with smart home devices and Spotify and provides real-time weather information. M.I.L.E.S. also has persistent memory, a built-in calculator, and multitasking abilities, and its realistic voice, accurate wake word detection, and internet browsing capabilities enhance the user experience. It prioritizes user privacy by processing data locally, encrypting sensitive information, and adhering to strict data retention policies.

SQLAgent

DataAgent is a multi-agent system for data analysis. It can interpret data development and data analysis requirements, explore the underlying data, and generate SQL and Python code for tasks such as data querying, data visualization, and machine learning.

responsible-ai-toolbox

Responsible AI Toolbox is a suite of tools providing model and data exploration and assessment interfaces and libraries for understanding AI systems. It empowers developers and stakeholders to develop and monitor AI responsibly and to take better data-driven actions. The toolbox includes visualization widgets for model assessment, error analysis, interpretability, and fairness assessment, as well as a mitigations library. It also offers a JupyterLab extension for managing machine learning experiments and a library for measuring gender bias in NLP datasets.

20 - OpenAI GPTs

Toxic Relationship Guide

An empathetic expert on toxic relationships, offering understanding and guidance.

Concept Explainer

A facilitator for understanding concepts using a simplified Concept Attainment Method.

Borrower's Defense Assistant

Assistance in understanding and filling out the Borrower's Defense to Repayment Form provided by the United States Department of Education.

Fourth Turning Explorer

Your go-to for understanding how current events align with generational cycles.

fox8 botnet paper

A helpful guide for understanding the paper "Anatomy of an AI-powered malicious social botnet".

AI fact-checking paper

A helpful guide for understanding the paper "Artificial intelligence is ineffective and potentially harmful for fact checking".

Getting along with Chinese

Guides non-Chinese people in understanding Chinese cultural norms and behaviors.

Intelligent Experiences Mentor

Your guide in understanding and applying the Intelligent Experiences Manifesto.