Best AI tools for running errands

20 - AI tool Sites

Copilot

Copilot is an AI-powered bike light and camera designed to keep cyclists safer on their rides. It uses artificial intelligence to understand when a vehicle is approaching or overtaking, and provides audible and visual alerts to the cyclist. Copilot also has a predictive risk estimation feature that tracks driver behavior to help cyclists ride smarter and prevent crashes.

Run Recommender

The Run Recommender is a web-based tool that helps runners find the right pair of running shoes. It uses a smart algorithm to suggest options based on your input, giving you a starting point for your search. Simply input your shoe width, age, weight, and other details, and the Run Recommender will generate a list of shoes that might suit your running style and body. You can also provide information about your running experience, distance, and frequency to further refine its suggestions. Once you have a list of potential shoes, you can click on each one to learn more about its features, benefits, and price. You can also search for the shoe on Amazon to find the best deals.

Ollama

Ollama is a platform that allows users to run large language models, such as Llama 2 and Code Llama, locally. This enables users to customize and create their own models, and to get up and running with large language models quickly and easily. Ollama is available for macOS, Linux, and Windows.
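As a rough illustration of what running a model locally looks like in practice, the sketch below sends a prompt to an Ollama server over its local HTTP API. It assumes the server is listening on its default address (`http://localhost:11434`) and that a model tagged `llama2` has already been pulled; the helper names `build_generate_request` and `generate` are made up for this example.

```python
import json
import urllib.request

# Assumption: Ollama is serving on its default local address.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_generate_request(model: str, prompt: str) -> urllib.request.Request:
    """Build a non-streaming POST request for the /api/generate endpoint."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(model: str, prompt: str) -> str:
    """Send the prompt to the local server and return the generated text."""
    with urllib.request.urlopen(build_generate_request(model, prompt)) as resp:
        return json.loads(resp.read())["response"]
```

With a server running, calling `generate("llama2", "Why is the sky blue?")` should return the model's reply as a string; nothing leaves the local machine.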

Cascadeur

Cascadeur is a standalone 3D software that lets you create keyframe animation, as well as clean up and edit any imported ones. Thanks to its AI-assisted and physics tools you can dramatically speed up the animation process and get high quality results. It works with .FBX, .DAE and .USD files making it easy to integrate into any animation workflow.

Gooey.AI

Gooey.AI is a platform that provides access to a variety of AI models and tools, making it easy for users to build and deploy AI solutions. The platform offers a no-code interface, making it accessible to users of all skill levels. Gooey.AI also provides a community of users who share workflows and examples, making it easy to get started with AI development.

Obviously AI

Obviously AI is a no-code AI tool that allows users to build and deploy machine learning models without writing any code. It is designed to be easy to use, even for those with no data science experience. Obviously AI offers a variety of features, including model building, model deployment, model monitoring, and integration with other tools. It also provides expert support from a dedicated data scientist.

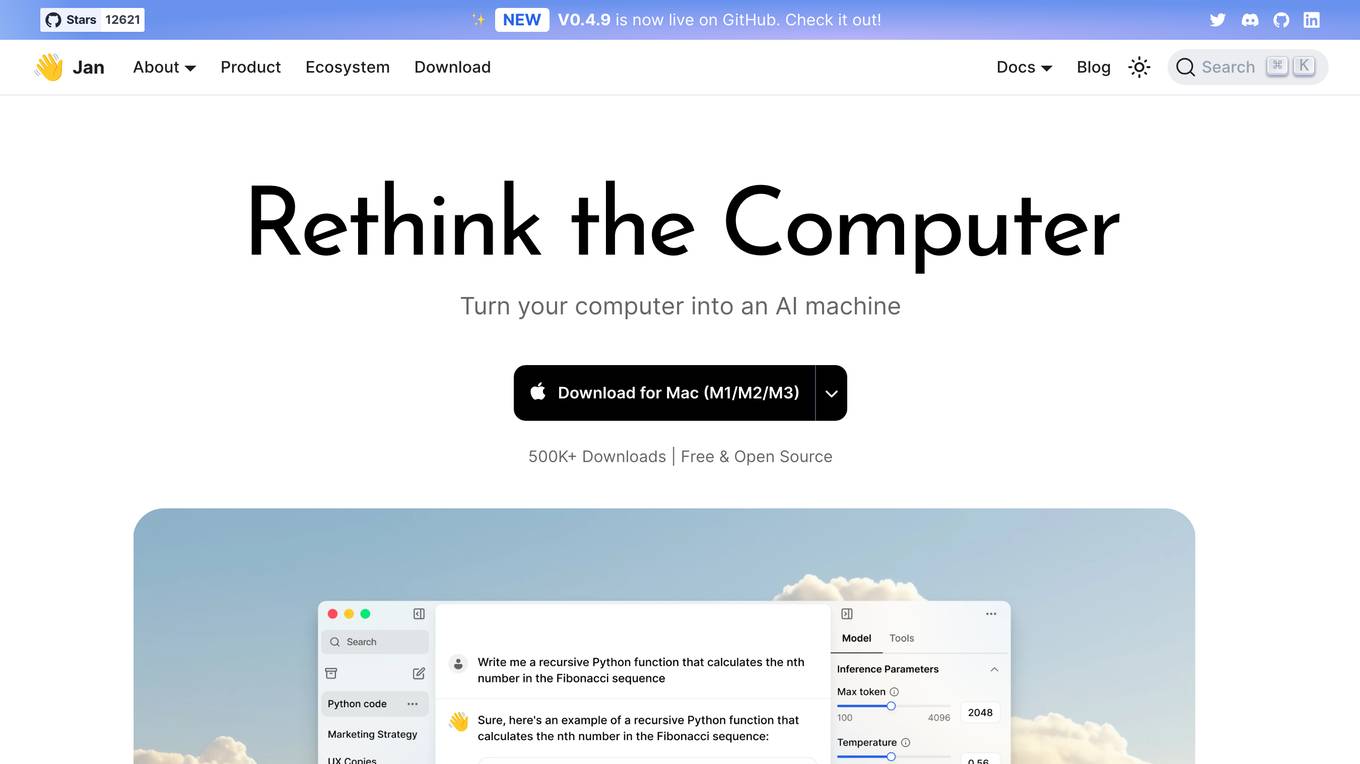

Jan

Jan is an open-source, local-first AI platform that allows users to run AI models locally or connect to remote APIs. It features a user-friendly interface, a library of popular AI models, and the ability to customize the experience with extensions. Jan is designed to be accessible to everyone, regardless of their technical expertise.

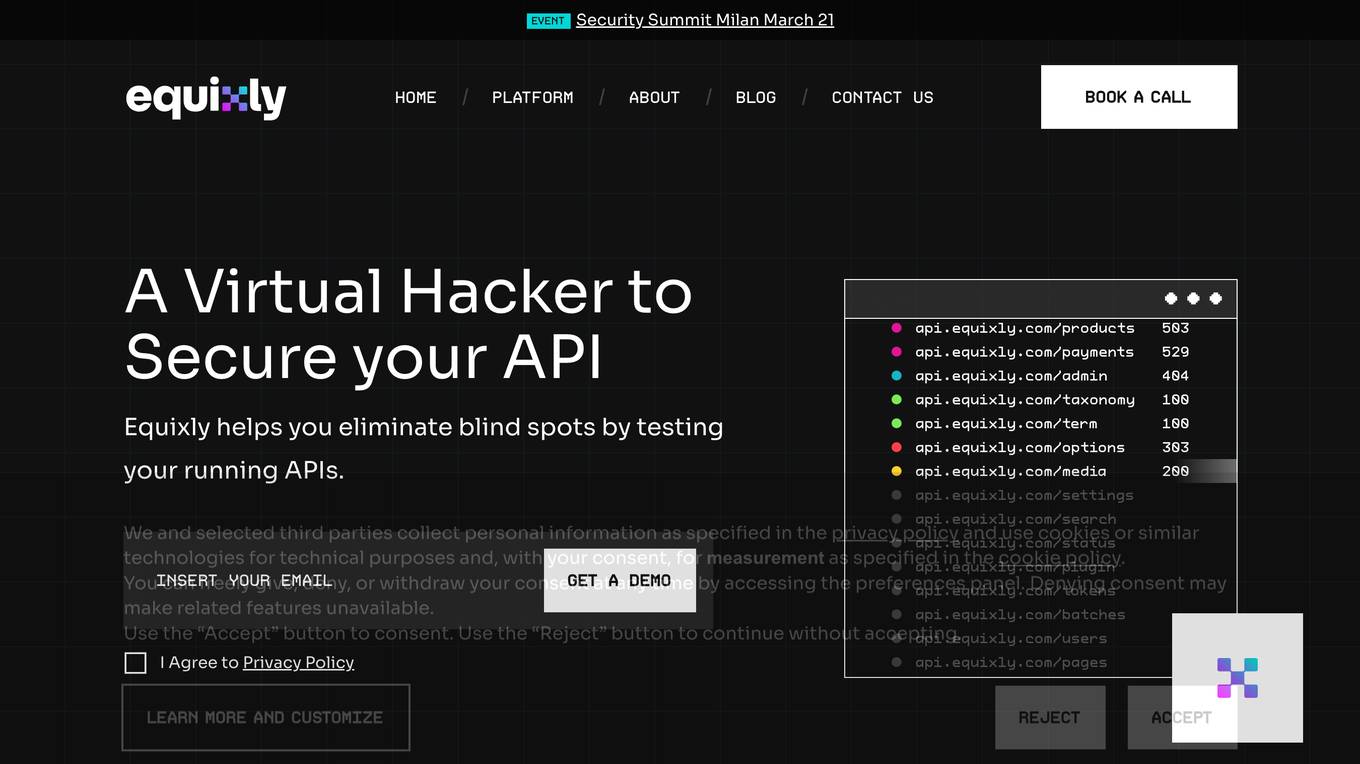

Equixly

Equixly is an AI-powered virtual hacker tool designed to secure APIs by identifying and fixing vulnerabilities in real-time. It helps users eliminate blind spots by continuously scanning APIs for flaws, making integration less time-consuming and enabling faster release of secure code. Equixly offers scalable API penetration testing, rapid remediation, and attack simulation based on OWASP Top 10 API risks. It provides inventory mapping of API landscapes, simplifies compliance tracking, and minimizes data exposure. Equixly is a comprehensive solution for enhancing API security and ensuring regulatory compliance.

Milo

Milo is an AI-powered co-pilot for parents, designed to help them manage the chaos of family life. It uses GPT-4, the latest in large-language models, to sort and organize information, send reminders, and provide updates. Milo is designed to be accurate and solve complex problems, and it learns and gets better based on user feedback. It can be used to manage tasks such as adding items to a grocery list, getting updates on the week's schedule, and sending screenshots of birthday invitations.

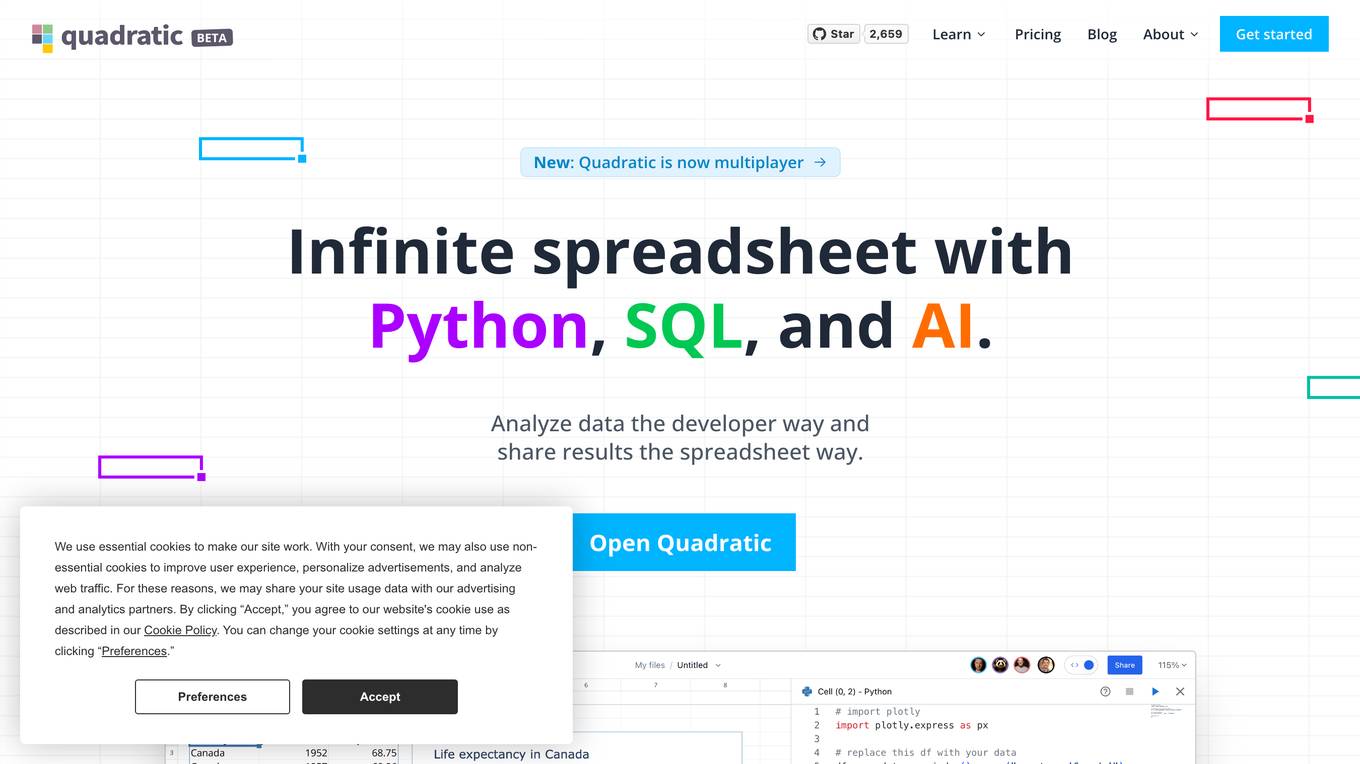

Quadratic

Quadratic is an infinite spreadsheet with Python, SQL, and AI. It combines the familiarity of a spreadsheet with the power of code, allowing users to analyze data, write code, and create visualizations in a single environment. With built-in Python library support, users can bring open source tools directly to their spreadsheets. Quadratic also features real-time collaboration, allowing multiple users to work on the same spreadsheet simultaneously. Additionally, Quadratic is built for speed and performance, utilizing WebAssembly and WebGL to deliver a smooth and responsive experience.

Momen

Momen is a no-code application development platform that allows users to build custom apps without writing code. It offers a drag-and-drop interface, pre-built components, and integrations with third-party services. Momen is suitable for building a wide range of applications, including websites, mobile apps, and business process automation tools.

Betafi

Betafi is a cloud-based user research and product feedback platform that helps businesses capture, organize, and share customer feedback from various sources, including user interviews, usability testing, and product demos. It offers features such as timestamped note-taking, automatic transcription and translation, video clipping, and integrations with popular collaboration tools like Miro, Figma, and Notion. Betafi enables teams to gather qualitative and quantitative feedback from users, synthesize insights, and make data-driven decisions to improve their products and services.

Lamini

Lamini is an enterprise-level LLM platform that offers precise recall with Memory Tuning, enabling teams to achieve over 95% accuracy even with large amounts of specific data. It guarantees JSON output and delivers massive throughput for inference. Lamini is designed to be deployed anywhere, including air-gapped environments, and supports training and inference on Nvidia or AMD GPUs. The platform is known for its factual LLMs and reengineered decoder that ensures 100% schema accuracy in the JSON output.

Endurance

Endurance is a platform that connects runners, swimmers, and cyclists with group training opportunities. Users can create their own teams or join existing ones in their neighborhood. The platform provides tools for creating and sharing structured workouts, tracking progress, and communicating with team members. Endurance also offers accident insurance for team subscribers.

Maige

Maige is an open-source infrastructure for running natural language workflows on your codebase. It allows you to connect your repo, write rules for what should happen when issues and PRs are opened, and then watch it run. Maige can label, assign, comment, review code, and even run simple code snippets. It is an AI with access to GitHub, so it can do anything you could do in the UI.

GPUX

GPUX is a cloud platform that provides access to GPUs for running AI workloads. It offers a variety of features to make it easy to deploy and run AI models, including a user-friendly interface, pre-built templates, and support for a variety of programming languages. GPUX is also committed to providing a sustainable and ethical platform, and it has partnered with organizations such as the Climate Leadership Council to reduce its carbon footprint.

Lemon Squeezy

Lemon Squeezy is an all-in-one platform for running your SaaS business. It provides a range of features to help you with payments, subscriptions, global tax compliance, fraud prevention, multi-currency support, failed payment recovery, PayPal integration, and more. Lemon Squeezy is designed to make running your software business easy and efficient.

GPT Prompt Tuner

GPT Prompt Tuner is an AI tool designed to enhance ChatGPT prompts by generating variations and running conversations in parallel. It allows users to delve into the field of 'Prompt Engineering' and potentially earn up to $335k/year. The tool offers a fully customizable experience, enabling users to input their own prompts or let the AI generate them. With flexible plans and a user-friendly interface, GPT Prompt Tuner aims to streamline the process of interacting with ChatGPT for improved communication outcomes.

Anycores

Anycores is an AI tool designed to optimize the performance of deep neural networks and reduce the cost of running AI models in the cloud. It offers automated solutions for tuning and inference consultation, a zoo of optimized networks, and a platform for reducing AI model cost. Anycores focuses on faster execution, claiming inference-time reductions of more than 10x, and on footprint reduction during model deployment. It is device agnostic, supporting Nvidia and AMD GPUs; Intel, ARM, and AMD CPUs; servers; and edge devices. The tool aims to provide highly optimized, low-footprint networks tailored to specific deployment scenarios.

Modal

Modal is a high-performance cloud platform for developers, particularly AI, data, and ML teams. It provides a serverless platform for running generative AI models, large-scale batch jobs, job queues, and more. Modal allows users to bring their own code and leverage the platform's infrastructure to run their applications. Key features include fast cold boots, autoscaling, custom container images, hardware specifications, network volumes, key-value stores, queues, job scheduling, web endpoints, observability, and security. Modal is designed for large-scale workloads and offers pay-as-you-go pricing. It is suitable for various use cases, including generative AI, AI inference, fine-tuning, and batch processing.

20 - Open Source AI Tools

OpenCatEsp32

OpenCatEsp32 is the OpenCat code running on BiBoard, a high-performance ESP32 quadruped robot development board. The board is mainly designed for developers and engineers working on multi-degree-of-freedom (MDOF) multi-legged robots with up to 12 servos.

jetson-generative-ai-playground

This repo hosts tutorial documentation for running generative AI models on NVIDIA Jetson devices. The documentation is auto-generated and hosted on GitHub Pages using their CI/CD feature to automatically generate/update the HTML documentation site upon new commits.

ipex-llm

IPEX-LLM is a PyTorch library for running Large Language Models (LLMs) on Intel CPUs and GPUs with very low latency. It provides seamless integration with various LLM frameworks and tools, including llama.cpp, ollama, Text-Generation-WebUI, HuggingFace transformers, and more. IPEX-LLM has been optimized and verified on over 50 LLM models, including LLaMA, Mistral, Mixtral, Gemma, LLaVA, Whisper, ChatGLM, Baichuan, Qwen, and RWKV. It supports a range of low-bit inference formats, including INT4, FP8, FP4, INT8, INT2, FP16, and BF16, as well as finetuning capabilities for LoRA, QLoRA, DPO, QA-LoRA, and ReLoRA. IPEX-LLM is actively maintained and updated with new features and optimizations, making it a valuable tool for researchers, developers, and anyone interested in exploring and utilizing LLMs.

LLocalSearch

LLocalSearch is a completely locally running search aggregator using LLM Agents. The user can ask a question and the system will use a chain of LLMs to find the answer. The user can see the progress of the agents and the final answer. No OpenAI or Google API keys are needed.

spandrel

Spandrel is a library for loading and running pre-trained PyTorch models. It automatically detects the model architecture and hyperparameters from model files, and provides a unified interface for running models.

exllamav2

ExLlamaV2 is an inference library for running local LLMs on modern consumer GPUs. It is a faster, better, and more versatile codebase than its predecessor, ExLlamaV1, with support for a new quant format called EXL2. EXL2 is based on the same optimization method as GPTQ and supports 2, 3, 4, 5, 6, and 8-bit quantization. It allows for mixing quantization levels within a model to achieve any average bitrate between 2 and 8 bits per weight. ExLlamaV2 can be installed from source, from a release with prebuilt extension, or from PyPI. It supports integration with TabbyAPI, ExUI, text-generation-webui, and lollms-webui. Key features of ExLlamaV2 include:

- Faster and better kernels
- Cleaner and more versatile codebase
- Support for EXL2 quantization format
- Integration with various web UIs and APIs
- Community support on Discord
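The idea of mixing quantization levels to reach a target average bitrate comes down to a weighted mean, which a short sketch can make concrete. The function below is illustrative arithmetic only, not part of the ExLlamaV2 API; the layer sizes are hypothetical, and the calculation ignores any per-group metadata a real quant format stores.

```python
def average_bits_per_weight(layers):
    """Return the weighted mean bit width over (num_weights, bits) pairs."""
    total_weights = sum(n for n, _ in layers)
    if total_weights == 0:
        raise ValueError("layers must contain at least one weight")
    total_bits = sum(n * bits for n, bits in layers)
    return total_bits / total_weights


# Hypothetical model: three quarters of the weights at 4 bits, one quarter at 6 bits.
layers = [(3_000_000, 4), (1_000_000, 6)]
print(average_bits_per_weight(layers))  # 4.5
```

Shifting more weights to the higher-precision group moves the average smoothly between the two bit widths, which is how a non-integer average such as 4.5 bits per weight can arise from integer quantization levels.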

skypilot

SkyPilot is a framework for running LLMs, AI, and batch jobs on any cloud, offering maximum cost savings, highest GPU availability, and managed execution.

SkyPilot abstracts away cloud infra burdens:

- Launch jobs & clusters on any cloud
- Easy scale-out: queue and run many jobs, automatically managed
- Easy access to object stores (S3, GCS, R2)

SkyPilot maximizes GPU availability for your jobs:

- Provision in all zones/regions/clouds you have access to (the _Sky_), with automatic failover

SkyPilot cuts your cloud costs:

- Managed Spot: 3-6x cost savings using spot VMs, with auto-recovery from preemptions
- Optimizer: 2x cost savings by auto-picking the cheapest VM/zone/region/cloud
- Autostop: hands-free cleanup of idle clusters

SkyPilot supports your existing GPU, TPU, and CPU workloads, with no code changes.
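To make the "auto-picking the cheapest VM/zone/region/cloud" idea concrete, here is a toy sketch of choosing the lowest-priced offer from a list. It is not SkyPilot's API or its actual optimizer; the `Offer` type, the `cheapest` function, the cloud and region names, and all prices are hypothetical placeholders.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Offer:
    cloud: str
    region: str
    hourly_usd: float
    spot: bool


def cheapest(offers, allow_spot=True):
    """Return the lowest-priced offer, optionally excluding spot instances."""
    candidates = [o for o in offers if allow_spot or not o.spot]
    if not candidates:
        raise ValueError("no offers match the constraints")
    return min(candidates, key=lambda o: o.hourly_usd)


# Made-up prices for illustration only.
offers = [
    Offer("cloud-a", "region-1", 3.00, spot=False),
    Offer("cloud-b", "region-2", 2.40, spot=False),
    Offer("cloud-a", "region-1", 0.90, spot=True),
]

best = cheapest(offers)
on_demand = cheapest(offers, allow_spot=False)
print(best.cloud, best.region, best.hourly_usd)
print(round(on_demand.hourly_usd / best.hourly_usd, 2))  # spot savings factor
```

A real scheduler would also have to weigh availability, preemption risk, and data locality; this sketch only shows the price comparison step.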

cria

Cria is a Python library designed for running Large Language Models with minimal configuration. It provides an easy and concise way to interact with LLMs, offering advanced features such as custom models, streams, message history management, and running multiple models in parallel. Cria simplifies the process of using LLMs by providing a straightforward API that requires only a few lines of code to get started. It also handles model installation automatically, making it efficient and user-friendly for various natural language processing tasks.

mlx-vlm

MLX-VLM is a package designed for running Vision LLMs on Mac systems using MLX. It provides a convenient way to install and run language models that handle vision tasks, simplifying the process for users interested in leveraging MLX for vision-related projects.

beta9

Beta9 is an open-source platform for running scalable serverless GPU workloads across cloud providers. It allows users to scale out workloads to thousands of GPU or CPU containers, achieve ultrafast cold-start for custom ML models, automatically scale to zero to pay for only what is used, utilize flexible distributed storage, distribute workloads across multiple cloud providers, and easily deploy task queues and functions using simple Python abstractions. The platform is designed for launching remote serverless containers quickly, featuring a custom, lazy loading image format backed by S3/FUSE, a fast redis-based container scheduling engine, content-addressed storage for caching images and files, and a custom runc container runtime.

ai-lab-recipes

This repository contains recipes for building and running containerized AI and LLM applications with Podman. It provides model servers that serve machine-learning models via an API, allowing developers to quickly prototype new AI applications locally. The recipes include components like model servers and AI applications for tasks such as chat, summarization, object detection, etc. Images for sample applications and models are available in `quay.io`, and bootable containers for AI training on Linux OS are enabled.

aiarena-web

aiarena-web is a website designed for running the aiarena.net infrastructure. It consists of different modules such as core functionality, web API endpoints, frontend templates, and a module for linking users to their Patreon accounts. The website serves as a platform for obtaining new matches, reporting results, featuring match replays, and connecting with Patreon supporters. The project is licensed under GPLv3 (2019).

SiLLM

SiLLM is a toolkit that simplifies the process of training and running Large Language Models (LLMs) on Apple Silicon by leveraging the MLX framework. It provides features such as LLM loading, LoRA training, DPO training, a web app for a seamless chat experience, an API server with OpenAI compatible chat endpoints, and command-line interface (CLI) scripts for chat, server, LoRA fine-tuning, DPO fine-tuning, conversion, and quantization.

dstack

Dstack is an open-source orchestration engine for running AI workloads in any cloud. It supports a wide range of cloud providers (such as AWS, GCP, Azure, Lambda, TensorDock, Vast.ai, CUDO, RunPod, etc.) as well as on-premises infrastructure. With Dstack, you can easily set up and manage dev environments, tasks, services, and pools for your AI workloads.

FedML

FedML is a unified and scalable machine learning library for running training and deployment anywhere at any scale. It is highly integrated with FEDML Nexus AI, a next-gen cloud service for LLMs & Generative AI. FEDML Nexus AI provides holistic support of three interconnected AI infrastructure layers: user-friendly MLOps, a well-managed scheduler, and high-performance ML libraries for running any AI jobs across GPU Clouds.

DistiLlama

DistiLlama is a Chrome extension that leverages a locally running Large Language Model (LLM) to perform various tasks, including text summarization, chat, and document analysis. It utilizes Ollama as the locally running LLM instance and LangChain for text summarization. DistiLlama provides a user-friendly interface for interacting with the LLM, allowing users to summarize web pages, chat with documents (including PDFs), and engage in text-based conversations. The extension is easy to install and use, requiring only the installation of Ollama and a few simple steps to set up the environment. DistiLlama offers a range of customization options, including the choice of LLM model and the ability to configure the summarization chain. It also supports multimodal capabilities, allowing users to interact with the LLM through text, voice, and images. DistiLlama is a valuable tool for researchers, students, and professionals who seek to leverage the power of LLMs for various tasks without compromising data privacy.

CGraph

CGraph is a cross-platform **D**irected **A**cyclic **G**raph framework written in pure C++ with no third-party dependencies. With it, you can **build your own operators simply and describe any running schedule** you need, such as dependencies, parallelism, aggregation, and so on. Some useful tools and plugins are also provided to improve your project. Tutorials and contact information are available in the repository. Please **get in touch with us for free** if you need to know more about this repository.

spellbook-docker

The Spellbook Docker Compose repository contains the Docker Compose files for running the Spellbook AI Assistant stack. It requires ExLlama and a Nvidia Ampere or better GPU for real-time results. The repository provides instructions for installing Docker, building and starting containers with or without GPU, additional workers, Nvidia driver installation, port forwarding, and fresh installation steps. Users can follow the detailed guidelines to set up the Spellbook framework on Ubuntu 22, enabling them to run the UI, middleware, and additional workers for resource access.

codebox-api

CodeBox is a cloud infrastructure tool designed for running Python code in an isolated environment. It also offers simple file input/output capabilities and will soon support vector database operations. Users can install CodeBox using pip and utilize it by setting up an API key. The tool allows users to execute Python code snippets and interact with the isolated environment. CodeBox is currently in early development stages and requires manual handling for certain operations like refunds and cancellations. The tool is open for contributions through issue reporting and pull requests. It is licensed under MIT, and the maintainers can be reached by email at [email protected].

flux-aio

Flux All-In-One is a lightweight distribution optimized for running the GitOps Toolkit controllers as a single deployable unit on Kubernetes clusters. It is designed for bare clusters, edge clusters, clusters with restricted communication, clusters with egress via proxies, and serverless clusters. The distribution follows semver versioning and provides documentation for specifications, installation, upgrade, OCI sync configuration, Git sync configuration, and multi-tenancy configuration. Users can deploy Flux using Timoni CLI and a Timoni Bundle file, fine-tune installation options, sync from public Git repositories, bootstrap repositories, and uninstall Flux without affecting reconciled workloads.

20 - OpenAI Gpts

Running Habit Architect

I'm a running coach that helps you become addicted to running in 2-3 weeks by building your personalized plan.

Pace Assistant

Provides running splits for Strava Routes, accounting for distance and elevation changes

AgencyAi

If you are running an agency, use this AI. It answers based on books and podcasts I have used over the years, as well as articles I have written about agencies.

Painting Auto Agent - saysay.ai

Auto painting agent running with LLMermaid. Type "continue" to continue tasks.

Mythological

A helpful assistant for D&D DMs running Dungeons & Dragons campaigns. Create towns, shops, characters, monsters, items, plots, encounters and more! Built for Dungeon Masters building DnD settings.

Dr. Business

An online business expert offering guidance for creating and running digital ventures.

Adept Online Business Builder

A guide for aspiring online entrepreneurs, offering practical advice on setting up and running a business. Please note: The product is independently developed and not affiliated, endorsed, or sponsored by OpenAI.

Live Dwell

I teach Home Economics and help with Cooking, Cleaning, and Running a Household.

AIProductGPT: Add AI to your Product and get a PRD

With simple prompts, AIProductGPT instantly crafts detailed AI-powered requirements (PRD) and mocks so that your team can hit the ground running.

EOS Personal Growth Navigator

Your go-to assistant for integrating EOS (Entrepreneurial Operating System) principles into personal life. Offers expert guidance on utilizing the core EOS tools to drive personal growth and provides structured support for running your own personal annual, quarterly, and weekly L10 meetings.

Xアカウント分析GPTs

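Designed to provide expert advice on running an X (formerly Twitter) account. It analyzes the user's X account data to improve engagement, identify the topics followers are most interested in, and pinpoint the best times to post, supporting the creation of an effective social media strategy. Specifically, based on X analytics data provided by the user, it analyzes the characteristics of tweets that earned high engagement, areas for improvement in tweets with low engagement, and tweets with high impressions.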

Swiss Solopreneur Pilot

Comprehensive guide for Swiss solopreneurship, offering formal, well-researched advice.

Marathon Prep Coach

Designs training programs for runners preparing for marathons and other races, tailored to fitness levels.