aiarena-web

A website for running the aiarena.net ladder.


aiarena-web is a website designed for running the aiarena.net infrastructure. It consists of several modules, including core functionality, web API endpoints, frontend templates, and a module for linking users to their Patreon accounts. The website serves as a platform for obtaining new matches, reporting results, featuring match replays, and connecting with Patreon supporters. The project is licensed under GPLv3 (copyright 2019).

README:

A website for running the aiarena.net infrastructure.


Clone the project

You can use the IDE way of doing it, or do it manually:

git clone https://github.com/aiarena/aiarena-web/

Make sure you have uv installed.

Set up your virtual environment

In PyCharm, you just need to create a new local interpreter (make sure to select uv as the interpreter type and match the Python version to the one currently set in pyproject.toml). Otherwise, you can run:

uv venv

After this is done, you'll want to run

uv sync

to install python deps, and

cd aiarena/frontend-spa && npm install

to install javascript deps.

Set the environment variable DJANGO_ENVIRONMENT to DEVELOPMENT, using whatever mechanism your dev environment provides.

Set up Postgres and Redis

There is a docker-compose.yml file that's configured to run the correct versions of Postgres and Redis. It also ensures that the correct user and database are created in Postgres. If you want to connect to them manually, look up the credentials in the compose file.

The DATABASES setting and Redis-related settings in default.py will automatically point to those databases, but if you wish to use a custom database config, you can override those settings in local.py. This file is gitignored.
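As a rough illustration, a local.py that points Django at a different Postgres instance could look like the sketch below. It uses Django's standard DATABASES structure; the database name, user, password, host, and port are placeholder assumptions (not the values from the compose file), and Redis settings are left out because their names aren't documented here.

```python
# local.py (gitignored): hypothetical override of the default database config.
# Every connection value below is a placeholder; take the real ones from
# docker-compose.yml or from your own Postgres instance.
DATABASES = {
    "default": {
        "ENGINE": "django.db.backends.postgresql",
        "NAME": "aiarena",        # placeholder database name
        "USER": "aiarena",        # placeholder user
        "PASSWORD": "change-me",  # placeholder password
        "HOST": "127.0.0.1",
        "PORT": "5432",           # default Postgres port
    }
}
```

Only override what you actually need; anything not redefined in local.py keeps the value from default.py.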

For PyCharm users, there's a ready-made Databases run config that launches the docker-compose file, as well as a Project compound run config that launches everything needed for development.

Populate the DB

You can go two routes here: either populate it with seed data (if you don't want to worry about protecting production data on a local machine, or don't have access to AWS), or restore the production backup locally (simpler and better for reproducing performance issues, but requires AWS access).

- Seed data: run uv run manage.py migrate to apply the database migrations, then uv run manage.py seed, and optionally uv run manage.py generatestats.
- Restore backup: run uv run run.py restore-backup --s3. That will download the latest production backup, which will already have the migrations applied.
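If you re-seed often, the seed-data route can be scripted. The helper below is a hypothetical convenience wrapper (seed_commands and run_seed are not part of the repository); it simply runs the documented manage.py commands in order and assumes it is called from the project root.

```python
import subprocess
from typing import List

# The seed-data route, in the documented order.
REQUIRED_STEPS = [
    ["uv", "run", "manage.py", "migrate"],  # apply database migrations
    ["uv", "run", "manage.py", "seed"],     # populate the DB with seed data
]
OPTIONAL_STATS_STEP = ["uv", "run", "manage.py", "generatestats"]


def seed_commands(with_stats: bool = False) -> List[List[str]]:
    """Return the commands to run, in order (copies, so callers can't mutate the constants)."""
    commands = [list(cmd) for cmd in REQUIRED_STEPS]
    if with_stats:
        commands.append(list(OPTIONAL_STATS_STEP))
    return commands


def run_seed(with_stats: bool = False) -> None:
    for argv in seed_commands(with_stats):
        # check=True raises CalledProcessError on the first failure, so seeding
        # never runs against a database whose migrations didn't apply.
        subprocess.run(argv, check=True)
```

For example, run_seed(with_stats=True) runs all three steps.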


Launch the website:

uv run manage.py runserver

Then navigate your browser to http://127.0.0.1:8000/
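Since the server expects DJANGO_ENVIRONMENT to be set to DEVELOPMENT, one option, if your shell or IDE has no convenient place for it, is a small launcher that sets the variable only for the server process. This is a hypothetical helper, not something shipped with the repo; it assumes you run it from the project root.

```python
import os
import subprocess
from typing import Mapping, Optional


def dev_env(base: Optional[Mapping[str, str]] = None) -> dict:
    """Return a copy of the environment with DJANGO_ENVIRONMENT=DEVELOPMENT."""
    env = dict(os.environ if base is None else base)
    env["DJANGO_ENVIRONMENT"] = "DEVELOPMENT"
    return env


def runserver(addrport: str = "127.0.0.1:8000") -> subprocess.Popen:
    """Start the dev server with the development environment variable set."""
    # Same as exporting DJANGO_ENVIRONMENT=DEVELOPMENT and then running
    # `uv run manage.py runserver 127.0.0.1:8000` in that shell.
    return subprocess.Popen(
        ["uv", "run", "manage.py", "runserver", addrport],
        env=dev_env(),
    )
```

Because dev_env copies the environment, the variable never leaks into the parent process.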

If you used seed data in the previous step, you can log into the website using the accounts below:

Admin user: username - devadmin, password - x.

Regular user: username - devuser, password - x.

Otherwise (if you restored the production backup), you can log in with the same username and password that you use in aiarena.net.


Install pre-commit hooks

Whenever you're pushing code to production, we'll run linters and make sure your code is well-formatted. To get faster feedback on this, you can install pre-commit hooks that will run the exact same checks when you're trying to commit.

uv run pre-commit install

The AWS setup below is needed to restore production backups, or to do any other work with the production environment.

Make sure you have an AWS user in the SuperPowerUsers group.

Configure your AWS credentials: get your Access key ID and Secret access key in the IAM section of the AWS Console and save them on your machine under the aiarena profile:

- go to https://315513665747.signin.aws.amazon.com/console
- in IAM, find your user, go to Security credentials, and create an access key if you don't have one
- use the credentials to configure AWS:

$ aws configure --profile aiarena
AWS Access Key ID [None]: ******
AWS Secret Access Key [None]: ******
Default region name [None]: eu-central-1
Default output format [None]:
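To double-check what aws configure wrote, the sketch below parses the two standard AWS CLI files with Python's configparser. It assumes the default file locations (~/.aws/credentials and ~/.aws/config) and the eu-central-1 region from the example above; check_aws_profile and check_local_profile are hypothetical helpers.

```python
import configparser
from pathlib import Path
from typing import List

PROFILE = "aiarena"
EXPECTED_REGION = "eu-central-1"


def check_aws_profile(credentials_text: str, config_text: str,
                      profile: str = PROFILE) -> List[str]:
    """Return a list of problems with the profile; an empty list means it looks complete."""
    problems = []
    creds = configparser.ConfigParser()
    creds.read_string(credentials_text)
    # The credentials file uses the bare profile name as its section header.
    for key in ("aws_access_key_id", "aws_secret_access_key"):
        if not creds.has_option(profile, key):
            problems.append(f"credentials: [{profile}] is missing {key}")
    config = configparser.ConfigParser()
    config.read_string(config_text)
    # The config file prefixes named (non-default) profiles with "profile ".
    section = f"profile {profile}"
    if config.get(section, "region", fallback=None) != EXPECTED_REGION:
        problems.append(f"config: [{section}] region is not {EXPECTED_REGION}")
    return problems


def check_local_profile() -> List[str]:
    """Run the check against the files in your home directory."""
    aws_dir = Path.home() / ".aws"
    return check_aws_profile(
        (aws_dir / "credentials").read_text(),
        (aws_dir / "config").read_text(),
    )
```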

Pre-requisites:

- Install local development tools and configure your AWS credentials, as described above;

- Install the AWS Session Manager plugin.

After that you should be able to use the uv run run.py production-one-off-task command.

It will spin up a new task with no production traffic routed to it, and with custom CPU and memory values.

By default, the task will be killed after 24 hours to make sure it doesn't keep consuming money after it's finished. You can use the --lifetime-hours flag to override this behaviour if you need to run something really long.

Also by default, the task is killed early if you disconnect from the SSH session. Use the --dont-kill-on-disconnect flag to disable this behaviour if you want it to keep running in the background.
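The two flags can be combined. As a sketch, here is a hypothetical helper that assembles the invocation; it assumes the command is run as uv run run.py production-one-off-task (matching how restore-backup is invoked) and that --lifetime-hours takes its value as the next argument.

```python
import shlex
from typing import List, Optional


def one_off_task_command(lifetime_hours: Optional[int] = None,
                         dont_kill_on_disconnect: bool = False) -> List[str]:
    """Build a production-one-off-task invocation (hypothetical helper)."""
    # Assumes run.py is invoked via `uv run`, as with `restore-backup`.
    argv = ["uv", "run", "run.py", "production-one-off-task"]
    if lifetime_hours is not None:
        # Replaces the default 24-hour kill timer.
        argv += ["--lifetime-hours", str(lifetime_hours)]
    if dont_kill_on_disconnect:
        # Keep the task running after the SSH session disconnects.
        argv.append("--dont-kill-on-disconnect")
    return argv


print(shlex.join(one_off_task_command(lifetime_hours=48, dont_kill_on_disconnect=True)))
# uv run run.py production-one-off-task --lifetime-hours 48 --dont-kill-on-disconnect
```

Remember that a task started with both flags keeps running (and costing money) until its lifetime expires.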

Pre-requisites:

- Install local development tools and configure your AWS credentials, as described above;

- Install the AWS Session Manager plugin.

After that you should be able to use the uv run run.py production-attach-to-task command. It will help you find an existing production container and connect you to it.

You can also specify a task ID with --task-id <task id here> flag, if you already have one (for example, if you created a one-off task).

This is how we do Infrastructure-as-Code. All our AWS resources are defined in the index-default.yaml template file. Make your changes to it, and then generate a CloudFormation template from it:

uv run run.py cloudformation

The template will be saved to cloudformation-default.yaml in the project root directory. Next, use this template to update the stack in the CloudFormation section of the AWS Console:

- Select aiarena and click Update
- Choose Replace current template
- Choose Upload a template file, and select the cloudformation-default.yaml file that you generated in the previous step. Then click Next.
- Update the variables if you want to, then click Next until you get to the last screen.
- On the last screen, wait for the Change set preview to be generated; it can take a minute. Take a look at the preview, and make sure it makes sense.
- Click Submit and watch the infrastructure change
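The console steps above can also be scripted with boto3. The sketch below is a hypothetical alternative, not the documented process: it reuses the aiarena profile configured earlier, creates a change set for the aiarena stack from cloudformation-default.yaml, waits for it, and returns its description so you can review it as you would the console preview. It deliberately stops before executing the change set. The capabilities list is an assumption about what the template contains, and Parameters are omitted; add them if your update changes the stack's variables. Inline template bodies are capped at 51,200 bytes, so a larger generated template would have to be uploaded to S3 and passed as TemplateURL instead.

```python
from pathlib import Path

# CloudFormation rejects inline TemplateBody values larger than this (bytes).
MAX_INLINE_TEMPLATE_BYTES = 51_200


def change_set_request(template_body: str, stack_name: str = "aiarena",
                       change_set_name: str = "manual-update") -> dict:
    """Keyword arguments for the CloudFormation CreateChangeSet call."""
    if len(template_body.encode("utf-8")) > MAX_INLINE_TEMPLATE_BYTES:
        raise ValueError("template too large for TemplateBody; upload it to S3 and use TemplateURL")
    return {
        "StackName": stack_name,
        "ChangeSetName": change_set_name,  # hypothetical name
        "ChangeSetType": "UPDATE",
        "TemplateBody": template_body,
        # Assumption: the template defines (named) IAM resources.
        "Capabilities": ["CAPABILITY_IAM", "CAPABILITY_NAMED_IAM"],
    }


def create_change_set(template_path: str = "cloudformation-default.yaml") -> dict:
    import boto3  # imported lazily so change_set_request works without boto3

    client = boto3.Session(profile_name="aiarena").client("cloudformation")
    request = change_set_request(Path(template_path).read_text())
    response = client.create_change_set(**request)
    # Block until the change set is ready (the console's "Change set preview").
    client.get_waiter("change_set_create_complete").wait(
        StackName=request["StackName"], ChangeSetName=request["ChangeSetName"]
    )
    return client.describe_change_set(ChangeSetName=response["Id"])
```

Review the returned Changes list before executing the change set, exactly as you would check the preview in the console.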

We're running the application code inside of Fargate containers, so to deploy a new version to prod, we need to build a new Docker image, and then update the Fargate containers to use it.

Here's how the deployment process works:

- Changes are pushed to the main branch
- GitHub Actions starts running tests/linters and, in parallel, runs uv run run.py prepare-images, which builds an image and pushes it to Elastic Container Registry with a tag like build-111-amd64
- If all tests/linters pass, uv run run.py ecs runs next:
  - It creates a latest alias for the image that we built earlier
  - Then, it runs manage.py migrate
  - Finally, it triggers a rolling update of all the Fargate containers, which will use the latest image
- As a final step, uv run run.py monitor-ecs runs. It watches the rolling update and makes sure all the services are replaced and running. This step can fail if the containers are failing to start for some reason.
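The ordering and gating described above can be summarised as a small model. This is a simplified, hypothetical sketch, not the repository's run.py or its GitHub Actions workflow: each step is a callable that returns True on success, checks and the image build run in parallel, and nothing reaches production unless both succeed. Running migrate before the rolling update presumably means new containers start against an already-migrated schema.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, List, Tuple

Step = Callable[[], bool]  # a pipeline step; returns True on success


def image_tag(build_number: int, arch: str = "amd64") -> str:
    """ECR tag in the style of the example above, e.g. build-111-amd64."""
    return f"build-{build_number}-{arch}"


def deploy(tests: Step, prepare_images: Step,
           ecs_steps: List[Tuple[str, Step]], monitor: Step) -> List[str]:
    """Run the simplified pipeline and return the names of completed stages."""
    completed: List[str] = []
    # Tests/linters and the image build run in parallel.
    with ThreadPoolExecutor(max_workers=2) as pool:
        tests_future = pool.submit(tests)
        images_future = pool.submit(prepare_images)
        tests_ok, images_ok = tests_future.result(), images_future.result()
    if not (tests_ok and images_ok):
        return completed  # nothing reaches production
    completed.append("checks and images")
    # `ecs`: tag latest, migrate, rolling update, strictly in order.
    for name, step in ecs_steps:
        if not step():
            return completed
        completed.append(name)
    # `monitor-ecs` fails if the new containers don't come up.
    if monitor():
        completed.append("monitor-ecs")
    return completed
```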

Core project functionality

Web API endpoints and functionality.

This root api folder contains views for public use.

API endpoints specifically for use by the arenaclients to obtain new matches and report results.

API endpoints specifically for use by the livestream player to obtain a curated list of match replays to feature.

Django template website frontend

React frontend for the profile dashboard

GraphQL API used by the React frontend

A module for linking website users to their Patreon counterparts.

Copyright (c) 2019

Licensed under the GPLv3 license.


Alternative AI tools for aiarena-web

Similar Open Source Tools


ai-voice-cloning

This repository provides a tool for AI voice cloning, allowing users to generate synthetic speech that closely resembles a target speaker's voice. The tool is designed to be user-friendly and accessible, with a graphical user interface that guides users through the process of training a voice model and generating synthetic speech. The tool also includes a variety of features that allow users to customize the generated speech, such as the pitch, volume, and speaking rate. Overall, this tool is a valuable resource for anyone interested in creating realistic and engaging synthetic speech.

concierge

Concierge is a versatile automation tool designed to streamline repetitive tasks and workflows. It provides a user-friendly interface for creating custom automation scripts without the need for extensive coding knowledge. With Concierge, users can automate various tasks across different platforms and applications, increasing efficiency and productivity. The tool offers a wide range of pre-built automation templates and allows users to customize and schedule their automation processes. Concierge is suitable for individuals and businesses looking to automate routine tasks and improve overall workflow efficiency.

polis

Polis is an AI-powered sentiment gathering platform that offers a more organic approach than surveys and requires less effort than focus groups. It provides a comprehensive wiki, main deployment at https://pol.is, discussions, issue tracking, and project board for users. Polis can be set up using Docker infrastructure and offers various commands for building and running containers. Users can test their instance, update the system, and deploy Polis for production. The tool also provides developer conveniences for code reloading, type checking, and database connections. Additionally, Polis supports end-to-end browser testing using Cypress and offers troubleshooting tips for common Docker and npm issues.

openui

OpenUI is a tool designed to simplify the process of building UI components by allowing users to describe UI using their imagination and see it rendered live. It supports converting HTML to React, Svelte, Web Components, etc. The tool is open source and aims to make UI development fun, fast, and flexible. It integrates with various AI services like OpenAI, Groq, Gemini, Anthropic, Cohere, and Mistral, providing users with the flexibility to use different models. OpenUI also supports LiteLLM for connecting to various LLM services and allows users to create custom proxy configs. The tool can be run locally using Docker or Python, and it offers a development environment for quick setup and testing.

pyrfuniverse

pyrfuniverse is a Python package used to interact with the RFUniverse simulation environment. It is developed with reference to ML-Agents and adds new features. The package allows users to work with RFUniverse for simulation purposes, providing tools and functionalities to interact with the environment and create new features.

ultravox

Ultravox is a fast multimodal large language model (LLM) that can understand both text and human speech in real time without the need for a separate Automatic Speech Recognition (ASR) stage. By extending Meta's Llama 3 model with a multimodal projector, Ultravox converts audio directly into a high-dimensional space used by Llama 3, enabling quick responses and potential understanding of paralinguistic cues like timing and emotion in human speech. The current version (v0.3) has impressive speed metrics and aims for further enhancements. Ultravox currently converts audio to streaming text and plans to emit speech tokens for direct audio conversion. The tool is open for collaboration to enhance this functionality.

AlwaysReddy

AlwaysReddy is a simple LLM assistant with no UI that you interact with entirely using hotkeys. It can easily read from or write to your clipboard, and voice chat with you via TTS and STT. Here are some of the things you can use AlwaysReddy for: - Explain a new concept to AlwaysReddy and have it save the concept (in roughly your words) into a note. - Ask AlwaysReddy "What is X called?" when you know how to roughly describe something but can't remember what it is called. - Have AlwaysReddy proofread the text in your clipboard before you send it. - Ask AlwaysReddy "From the comments in my clipboard, what do the r/LocalLLaMA users think of X?" - Quickly list what you have done today and get AlwaysReddy to write a journal entry to your clipboard before you shutdown the computer for the day.

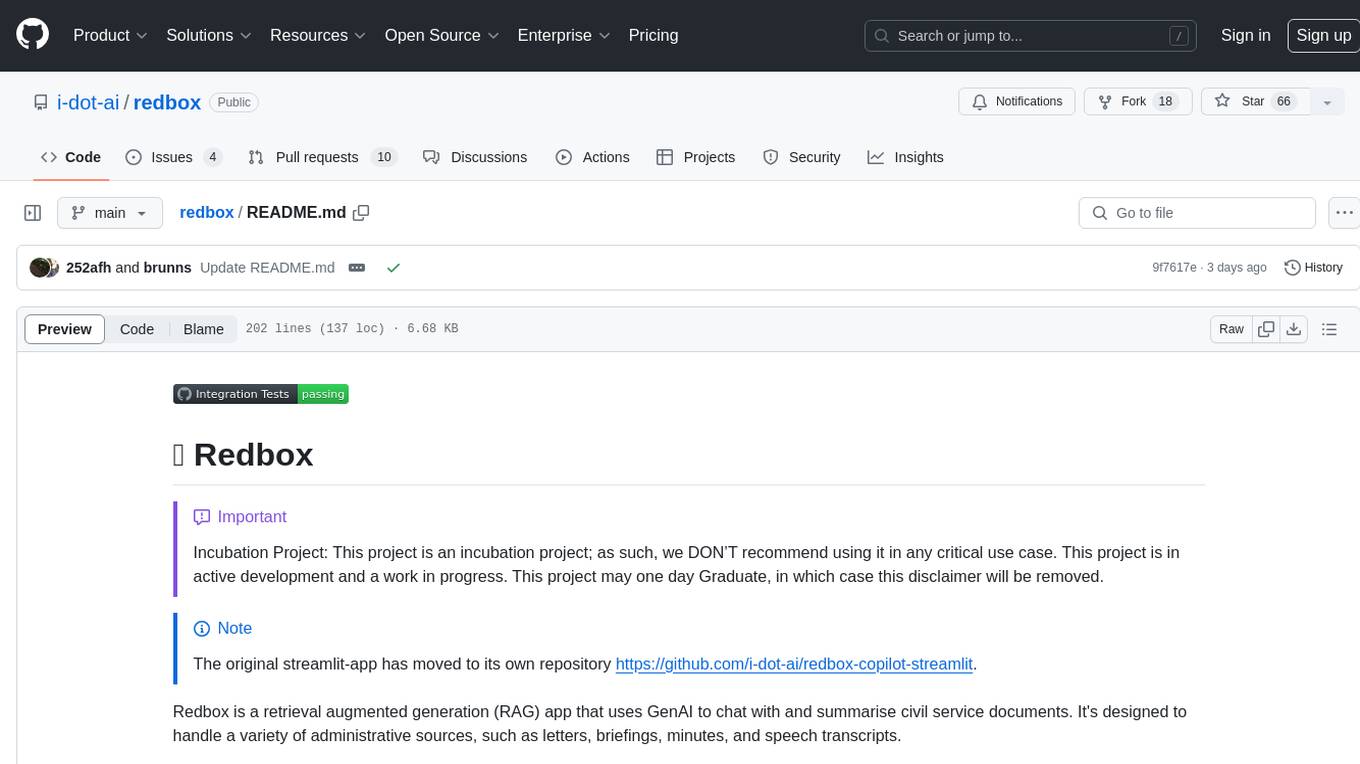

redbox-copilot

Redbox Copilot is a retrieval augmented generation (RAG) app that uses GenAI to chat with and summarise civil service documents. It increases organisational memory by indexing documents and can summarise reports read months ago, supplement them with current work, and produce a first draft that lets civil servants focus on what they do best. The project uses a microservice architecture with each microservice running in its own container defined by a Dockerfile. Dependencies are managed using Python Poetry. Contributions are welcome, and the project is licensed under the MIT License.

aisheets

Hugging Face AI Sheets is an open-source tool for building, enriching, and transforming datasets using AI models with no code. It can be deployed locally or on the Hub, providing access to thousands of open models. Users can easily generate datasets, run data generation scripts, and customize inference endpoints for text generation. The tool supports custom LLMs and offers advanced configuration options for authentication, inference, and miscellaneous settings. With AI Sheets, users can leverage the power of AI models without writing any code, making dataset management and transformation efficient and accessible.

langchain-google

LangChain Google is a repository containing three packages with Google integrations: langchain-google-genai for Google Generative AI models, langchain-google-vertexai for Google Cloud Generative AI on Vertex AI, and langchain-google-community for other Google product integrations. The repository is organized as a monorepo with a structure including libs for different packages, and files like pyproject.toml and Makefile for building, linting, and testing. It provides guidelines for contributing, local development dependencies installation, formatting, linting, working with optional dependencies, and testing with unit and integration tests. The focus is on maintaining unit test coverage and avoiding excessive integration tests, with annotations for GCP infrastructure-dependent tests.

ultimate-rvc

Ultimate RVC is an extension of AiCoverGen, offering new features and improvements for generating audio content using RVC. It is designed for users looking to integrate singing functionality into AI assistants/chatbots/vtubers, create character voices for songs or books, and train voice models. The tool provides easy setup, voice conversion enhancements, TTS functionality, voice model training suite, caching system, UI improvements, and support for custom configurations. It is available for local and Google Colab use, with a PyPI package for easy access. The tool also offers CLI usage and customization through environment variables.

gpt-subtrans

GPT-Subtrans is an open-source subtitle translator that utilizes large language models (LLMs) as translation services. It supports translation between any language pairs that the language model supports. Note that GPT-Subtrans requires an active internet connection, as subtitles are sent to the provider's servers for translation, and their privacy policy applies.

langgraph-studio

LangGraph Studio is a specialized agent IDE that enables visualization, interaction, and debugging of complex agentic applications. It offers visual graphs and state editing to better understand agent workflows and iterate faster. Users can collaborate with teammates using LangSmith to debug failure modes. The tool integrates with LangSmith and requires Docker installed. Users can create and edit threads, configure graph runs, add interrupts, and support human-in-the-loop workflows. LangGraph Studio allows interactive modification of project config and graph code, with live sync to the interactive graph for easier iteration on long-running agents.

reai-ghidra

The RevEng.AI Ghidra Plugin by RevEng.ai allows users to interact with their API within Ghidra for Binary Code Similarity analysis to aid in Reverse Engineering stripped binaries. Users can upload binaries, rename functions above a confidence threshold, and view similar functions for a selected function.

redbox

Redbox is a retrieval augmented generation (RAG) app that uses GenAI to chat with and summarise civil service documents. It increases organisational memory by indexing documents and can summarise reports read months ago, supplement them with current work, and produce a first draft that lets civil servants focus on what they do best. The project uses a microservice architecture with each microservice running in its own container defined by a Dockerfile. Dependencies are managed using Python Poetry. Contributions are welcome, and the project is licensed under the MIT License. Security measures are in place to ensure user data privacy and considerations are being made to make the core-api secure.


For similar jobs

resonance

Resonance is a framework designed to facilitate interoperability and messaging between services in your infrastructure and beyond. It provides AI capabilities and takes full advantage of asynchronous PHP, built on top of Swoole. With Resonance, you can: * Chat with Open-Source LLMs: Create prompt controllers to directly answer users' prompts. The LLM takes care of determining the user's intention, so you can focus on taking appropriate action. * Asynchronous Where it Matters: Respond asynchronously to incoming RPC or WebSocket messages (or both combined) with little overhead. You can set up all the asynchronous features using attributes. No elaborate configuration is needed. * Simple Things Remain Simple: Writing HTTP controllers is similar to how it's done in the synchronous code. Controllers have new exciting features that take advantage of the asynchronous environment. * Consistency is Key: You can keep the same approach to writing software no matter the size of your project. There are no growing central configuration files or service dependencies registries. Every relation between code modules is local to those modules. * Promises in PHP: Resonance provides a partial implementation of the Promise/A+ spec to handle various asynchronous tasks. * GraphQL Out of the Box: You can build elaborate GraphQL schemas by using just the PHP attributes. Resonance takes care of reusing SQL queries and optimizing the resources' usage. All fields can be resolved asynchronously.

aiogram_bot_template

Aiogram bot template is a boilerplate for creating Telegram bots using Aiogram framework. It provides a solid foundation for building robust and scalable bots with a focus on code organization, database integration, and localization.

pluto

Pluto is a development tool dedicated to helping developers **build cloud and AI applications more conveniently**, resolving issues such as the challenging deployment of AI applications and open-source models. Developers are able to write applications in familiar programming languages like **Python and TypeScript**, **directly defining and utilizing the cloud resources necessary for the application within their code base**, such as AWS SageMaker, DynamoDB, and more. Pluto automatically deduces the infrastructure resource needs of the app through **static program analysis** and proceeds to create these resources on the specified cloud platform, **simplifying the resource creation and application deployment process**.

pinecone-ts-client

The official Node.js client for Pinecone, written in TypeScript. This client library provides a high-level interface for interacting with the Pinecone vector database service. With this client, you can create and manage indexes, upsert and query vector data, and perform other operations related to vector search and retrieval. The client is designed to be easy to use and provides a consistent and idiomatic experience for Node.js developers. It supports all the features and functionality of the Pinecone API, making it a comprehensive solution for building vector-powered applications in Node.js.

aiohttp-pydantic

Aiohttp pydantic is an aiohttp view for easily parsing and validating requests. Using function annotations, you define what your HTTP-verb handler methods expect, and Aiohttp pydantic parses the HTTP request for you, validates the data, and injects the parameters you want. It provides query string, request body, URL path, and HTTP header validation, as well as OpenAPI Specification generation.

gcloud-aio

This repository contains shared codebase for two projects: gcloud-aio and gcloud-rest. gcloud-aio is built for Python 3's asyncio, while gcloud-rest is a threadsafe requests-based implementation. It provides clients for Google Cloud services like Auth, BigQuery, Datastore, KMS, PubSub, Storage, and Task Queue. Users can install the library using pip and refer to the documentation for usage details. Developers can contribute to the project by following the contribution guide.

aioconsole

aioconsole is a Python package that provides asynchronous console and interfaces for asyncio. It offers asynchronous equivalents to input, print, exec, and code.interact, an interactive loop running the asynchronous Python console, customization and running of command line interfaces using argparse, stream support to serve interfaces instead of using standard streams, and the apython script to access asyncio code at runtime without modifying the sources. The package requires Python version 3.8 or higher and can be installed from PyPI or GitHub. It allows users to run Python files or modules with a modified asyncio policy, replacing the default event loop with an interactive loop. aioconsole is useful for scenarios where users need to interact with asyncio code in a console environment.

aiosqlite

aiosqlite is a Python library that provides a friendly, async interface to SQLite databases. It replicates the standard sqlite3 module but with async versions of all the standard connection and cursor methods, along with context managers for automatically closing connections and cursors. It allows interaction with SQLite databases on the main AsyncIO event loop without blocking execution of other coroutines while waiting for queries or data fetches. The library also replicates most of the advanced features of sqlite3, such as row factories and total changes tracking.