Best AI tools for< Extract Data From Web >

20 - AI tool Sites

Tablize

Tablize is a powerful data extraction tool that helps you turn unstructured data into structured, tabular format. With Tablize, you can easily extract data from PDFs, images, and websites, and export it to Excel, CSV, or JSON. Tablize uses artificial intelligence to automate the data extraction process, making it fast and easy to get the data you need.

Webscrape AI

Webscrape AI is a no-code web scraping tool that allows users to collect data from websites without writing any code. It is easy to use, accurate, and affordable, making it a great option for businesses of all sizes. With Webscrape AI, you can automate your data collection process and free up your time to focus on other tasks.

ScrapeComfort

ScrapeComfort is an AI-driven web scraping tool that offers an effortless and intuitive data mining solution. It leverages AI technology to extract data from websites without the need for complex coding or technical expertise. Users can easily input URLs, download data, set up extractors, and save extracted data for immediate use. The tool is designed to cater to various needs such as data analytics, market investigation, and lead acquisition, making it a versatile solution for businesses and individuals looking to streamline their data collection process.

GetOData

GetOData is a powerful web scraping API and Chrome extension that offers AI-based data extraction tools for small-scale scraping projects. It allows users to extract data from websites without getting blocked, bypassing captchas and other anti-bot mechanisms. The API is built by data extraction experts and provides features like choosing the output format, setting proxy locations, executing JavaScript, taking screenshots, and more. GetOData offers simplified pricing options for freelancers, startups, and businesses, with competitive rates and high success rates. Users can sign up for a free demo to experience the capabilities of GetOData API.

Simplescraper

Simplescraper is a web scraping tool that allows users to extract data from any website in seconds. It offers the ability to download data instantly, scrape at scale in the cloud, or create APIs without the need for coding. The tool is designed for developers and no-coders, making web scraping simple and efficient. Simplescraper AI Enhance provides a new way to pull insights from web data, allowing users to summarize, analyze, format, and understand extracted data using AI technology.

UseScraper

UseScraper is a web crawler and scraper API that allows users to extract data from websites for research, analysis, and AI applications. It offers features such as full browser rendering, markdown conversion, and automatic proxies to prevent rate limiting. UseScraper is designed to be fast, easy to use, and cost-effective, with plans starting at $0 per month.

Hexowatch

Hexowatch is an AI-powered website monitoring and archiving tool that helps businesses track changes to any website, including visual, content, source code, technology, availability, or price changes. It provides detailed change reports, archives snapshots of pages, and offers side-by-side comparisons and diff reports to highlight changes. Hexowatch also allows users to access monitored data fields as a downloadable CSV file, Google Sheet, RSS feed, or sync any update via Zapier to over 2000 different applications.

Axiom.ai

Axiom.ai is a no-code browser automation tool that allows users to automate website actions and repetitive tasks on any website or web app. It is a Chrome Extension that is simple to install and free to try. Once installed, users can pin Axiom to the Chrome Toolbar and click on the icon to open and close. Users can build custom bots or use templates to automate actions like clicking, typing, and scraping data from websites. Axiom.ai can be integrated with Zapier to trigger automations based on external events.

PromptLoop

PromptLoop is an AI-powered tool that integrates with Excel and Google Sheets to enhance market research and data analysis. It offers custom AI models tailored to specific needs, enabling users to extract insights from complex information. With PromptLoop, users can leverage advanced AI capabilities for tasks such as web research, content analysis, and data labeling, streamlining workflows and improving efficiency.

Browse AI

Browse AI is a web data extraction and monitoring platform that makes it easy, affordable, and reliable for anyone to collect data from the web at scale. It was founded in 2020 with the mission of making the web more accessible and useful for everyone.

PandasAI

PandasAI is an open-source AI tool designed for conversational data analysis. It allows users to ask questions in natural language to their enterprise data and receive real-time data insights. The tool is integrated with various data sources and offers enhanced analytics, actionable insights, detailed reports, and visual data representation. PandasAI aims to democratize data analysis for better decision-making, offering enterprise solutions for stable and scalable internal data analysis. Users can also fine-tune models, ingest universal data, structure data automatically, augment datasets, extract data from websites, and forecast trends using AI.

Floneum

Floneum is a versatile AI-powered tool designed for language-related tasks. It allows users to build workflows using large language models through a user-friendly drag-and-drop interface. Additionally, Floneum supports the secure extension of functionalities with WebAssembly plugins, enabling users to write plugins in various languages like Rust, C, Java, or Go. With 41 built-in plugins, Floneum offers a range of features to enhance text processing, search engine operations, file handling, Python script execution, browser automation, and more.

Octoparse

Octoparse is an AI web scraping tool that offers a no-coding solution for turning web pages into structured data with just a few clicks. It provides users with the ability to build reliable web scrapers without any coding knowledge, thanks to its intuitive workflow designer. With features like AI assistance, automation, and template libraries, Octoparse is a powerful tool for data extraction and analysis across various industries.

Apify

Apify is a full-stack web scraping and data extraction platform that offers a wide range of tools and services for developers to build, deploy, and publish data extraction and web automation tools. The platform provides pre-built web scraping tools called Actors, which allow users to easily extract data from popular websites. Apify also offers professional services for custom web scraping solutions and integrations with various apps and services. With features like serverless programs, anti-blocking proxies, and storage for results, Apify aims to simplify the process of web scraping and data extraction for users.

FetchFox

FetchFox is an AI-powered web scraping tool that allows users to extract data from any website by providing a prompt in plain English. It runs as a Chrome Extension and can bypass anti-scraping measures on sites like LinkedIn and Facebook. FetchFox is designed to quickly gather data for tasks such as lead generation, research data assembly, and market segment analysis.

Browse AI

Browse AI is an AI-powered data extraction platform that allows users to scrape and monitor data from any website without the need for coding. It offers managed services for stress-free data extraction, turning any website into an API within minutes. Users can monitor websites for changes and access a complete suite of features for data extraction. Browse AI caters to various industries such as E-Commerce, Real Estate, Recruitment, and Investors & VCs, providing valuable insights and data-driven decisions. The platform ensures best-in-class data reliability through automated site layout monitoring, human behavior emulation, and location-based data extraction.

Parsio

Parsio is an AI-powered document parser that can extract structured data from PDFs, emails, and other documents. It uses natural language processing to understand the context of the document and identify the relevant data points. Parsio can be used to automate a variety of tasks, such as extracting data from invoices, receipts, and emails.

Reworkd

Reworkd is a web data extraction tool that uses AI to generate and repair web extractors on the fly. It allows users to retrieve data from hundreds of websites without the need for developers. Reworkd is used by businesses in a variety of industries, including manufacturing, e-commerce, recruiting, lead generation, and real estate.

Airparser

Airparser is an AI-powered email and document parser tool that revolutionizes data extraction by utilizing the GPT parser engine. It allows users to automate the extraction of structured data from various sources such as emails, PDFs, documents, and handwritten texts. With features like automatic extraction, export to multiple platforms, and support for multiple languages, Airparser simplifies data extraction processes for individuals and businesses. The tool ensures data security and offers seamless integration with other applications through APIs and webhooks.

Pentest Copilot

Pentest Copilot by BugBase is an ultimate ethical hacking assistant that guides users through each step of the hacking journey, from analyzing web apps to root shells. It eliminates redundant research, automates payload and command generation, and provides intelligent contextual analysis to save time. The application excels at data extraction, privilege escalation, lateral movement, and leaving no trace behind. With features like secure VPN integration, total control over sessions, parallel command processing, and flexibility to choose between local or cloud execution, Pentest Copilot offers a seamless and efficient hacking experience without the need for Kali Linux installation.

20 - Open Source AI Tools

Scrapegraph-ai

ScrapeGraphAI is a web scraping Python library that utilizes LLM and direct graph logic to create scraping pipelines for websites and local documents. It offers various standard scraping pipelines like SmartScraperGraph, SearchGraph, SpeechGraph, and ScriptCreatorGraph. Users can extract information by specifying prompts and input sources. The library supports different LLM APIs such as OpenAI, Groq, Azure, and Gemini, as well as local models using Ollama. ScrapeGraphAI is designed for data exploration and research purposes, providing a versatile tool for extracting information from web pages and generating outputs like Python scripts, audio summaries, and search results.

Scrapegraph-ai

ScrapeGraphAI is a Python library that uses Large Language Models (LLMs) and direct graph logic to create web scraping pipelines for websites, documents, and XML files. It allows users to extract specific information from web pages by providing a prompt describing the desired data. ScrapeGraphAI supports various LLMs, including Ollama, OpenAI, Gemini, and Docker, enabling users to choose the most suitable model for their needs. The library provides a user-friendly interface through its `SmartScraper` class, which simplifies the process of building and executing scraping pipelines. ScrapeGraphAI is open-source and available on GitHub, with extensive documentation and examples to guide users. It is particularly useful for researchers and data scientists who need to extract structured data from web pages for analysis and exploration.

crawl4ai

Crawl4AI is a powerful and free web crawling service that extracts valuable data from websites and provides LLM-friendly output formats. It supports crawling multiple URLs simultaneously, replaces media tags with ALT, and is completely free to use and open-source. Users can integrate Crawl4AI into Python projects as a library or run it as a standalone local server. The tool allows users to crawl and extract data from specified URLs using different providers and models, with options to include raw HTML content, force fresh crawls, and extract meaningful text blocks. Configuration settings can be adjusted in the `crawler/config.py` file to customize providers, API keys, chunk processing, and word thresholds. Contributions to Crawl4AI are welcome from the open-source community to enhance its value for AI enthusiasts and developers.

CyberScraper-2077

CyberScraper 2077 is an advanced web scraping tool powered by AI, designed to extract data from websites with precision and style. It offers a user-friendly interface, supports multiple data export formats, operates in stealth mode to avoid detection, and promises lightning-fast scraping. The tool respects ethical scraping practices, including robots.txt and site policies. With upcoming features like proxy support and page navigation, CyberScraper 2077 is a futuristic solution for data extraction in the digital realm.

Scrapegraph-demo

ScrapeGraphAI is a web scraping Python library that utilizes LangChain, LLM, and direct graph logic to create scraping pipelines. Users can specify the information they want to extract, and the library will handle the extraction process. This repository contains an official demo/trial for the ScrapeGraphAI library, showcasing its capabilities in web scraping tasks. The tool is designed to simplify the process of extracting data from websites by providing a user-friendly interface and powerful scraping functionalities.

aio-scrapy

Aio-scrapy is an asyncio-based web crawling and web scraping framework inspired by Scrapy. It supports distributed crawling/scraping, implements compatibility with scrapyd, and provides options for using redis queue and rabbitmq queue. The framework is designed for fast extraction of structured data from websites. Aio-scrapy requires Python 3.9+ and is compatible with Linux, Windows, macOS, and BSD systems.

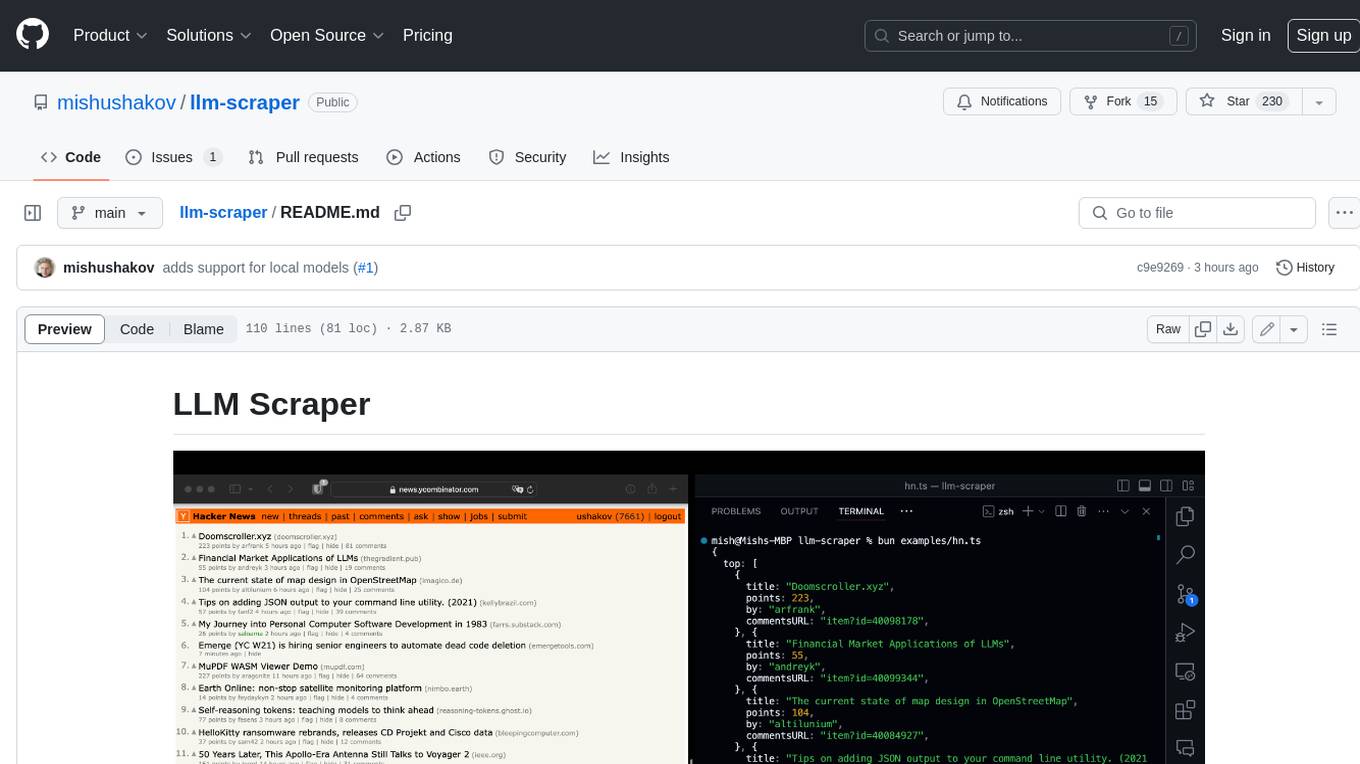

llm-scraper

LLM Scraper is a TypeScript library that allows you to convert any webpages into structured data using LLMs. It supports Local (GGUF), OpenAI, Groq chat models, and schemas defined with Zod. With full type-safety in TypeScript and based on the Playwright framework, it offers streaming when crawling multiple pages and supports four input modes: html, markdown, text, and image.

free-for-life

A massive list including a huge amount of products and services that are completely free! ⭐ Star on GitHub • 🤝 Contribute # Table of Contents * APIs, Data & ML * Artificial Intelligence * BaaS * Code Editors * Code Generation * DNS * Databases * Design & UI * Domains * Email * Font * For Students * Forms * Linux Distributions * Messaging & Streaming * PaaS * Payments & Billing * SSL

GenAI_Agents

GenAI Agents is a comprehensive repository for developing and implementing Generative AI (GenAI) agents, ranging from simple conversational bots to complex multi-agent systems. It serves as a valuable resource for learning, building, and sharing GenAI agents, offering tutorials, implementations, and a platform for showcasing innovative agent creations. The repository covers a wide range of agent architectures and applications, providing step-by-step tutorials, ready-to-use implementations, and regular updates on advancements in GenAI technology.

thepipe

The Pipe is a multimodal-first tool for feeding files and web pages into vision-language models such as GPT-4V. It is best for LLM and RAG applications that require a deep understanding of tricky data sources. The Pipe is available as a hosted API at thepi.pe, or it can be set up locally.

skyvern

Skyvern automates browser-based workflows using LLMs and computer vision. It provides a simple API endpoint to fully automate manual workflows, replacing brittle or unreliable automation solutions. Traditional approaches to browser automations required writing custom scripts for websites, often relying on DOM parsing and XPath-based interactions which would break whenever the website layouts changed. Instead of only relying on code-defined XPath interactions, Skyvern adds computer vision and LLMs to the mix to parse items in the viewport in real-time, create a plan for interaction and interact with them. This approach gives us a few advantages: 1. Skyvern can operate on websites it’s never seen before, as it’s able to map visual elements to actions necessary to complete a workflow, without any customized code 2. Skyvern is resistant to website layout changes, as there are no pre-determined XPaths or other selectors our system is looking for while trying to navigate 3. Skyvern leverages LLMs to reason through interactions to ensure we can cover complex situations. Examples include: 1. If you wanted to get an auto insurance quote from Geico, the answer to a common question “Were you eligible to drive at 18?” could be inferred from the driver receiving their license at age 16 2. If you were doing competitor analysis, it’s understanding that an Arnold Palmer 22 oz can at 7/11 is almost definitely the same product as a 23 oz can at Gopuff (even though the sizes are slightly different, which could be a rounding error!) Want to see examples of Skyvern in action? Jump to #real-world-examples-of- skyvern

spider

Spider is a high-performance web crawler and indexer designed to handle data curation workloads efficiently. It offers features such as concurrency, streaming, decentralization, headless Chrome rendering, HTTP proxies, cron jobs, subscriptions, smart mode, blacklisting, whitelisting, budgeting depth, dynamic AI prompt scripting, CSS scraping, and more. Users can easily get started with the Spider Cloud hosted service or set up local installations with spider-cli. The tool supports integration with Node.js and Python for additional flexibility. With a focus on speed and scalability, Spider is ideal for extracting and organizing data from the web.

awesome-generative-ai

A curated list of Generative AI projects, tools, artworks, and models

firecrawl

Firecrawl is an API service that takes a URL, crawls it, and converts it into clean markdown. It crawls all accessible subpages and provides clean markdown for each, without requiring a sitemap. The API is easy to use and can be self-hosted. It also integrates with Langchain and Llama Index. The Python SDK makes it easy to crawl and scrape websites in Python code.

ai-audio-startups

The 'ai-audio-startups' repository is a community list of startups working with AI for audio and music tech. It includes a comprehensive collection of tools and platforms that leverage artificial intelligence to enhance various aspects of music creation, production, source separation, analysis, recommendation, health & wellbeing, radio/podcast, hearing, sound detection, speech transcription, synthesis, enhancement, and manipulation. The repository serves as a valuable resource for individuals interested in exploring innovative AI applications in the audio and music industry.

20 - OpenAI Gpts

Spreadsheet Composer

Magically turning text from emails, lists and website content into spreadsheet tables

Regex Wizard

Generate and explain regex patterns from your description, it support English and Chinese.

QCM

ce GPT va recevoir des images dans lesquelles il y a des questions QCM codingame ou Problem Solving sur les sujets : Java, Hibernate, Angular, Spring Boot, SQL. Il doit extraire le texte depuis l'image et répondre au question QCM le plus rapidement possible.

PDF Ninja

I extract data and tables from PDFs to CSV, focusing on data privacy and precision.

Property Manager Document Assistant

Provides analysis and data extraction of Property Management documents and contracts for managers

Fill PDF Forms

Fill legal forms & complex PDF documents easily! Upload a file, provide data sources and I'll handle the rest.

Email Thread GPT

I'm EmailThreadAnalyzer, here to help you with your email thread analysis.