Best AI tools for Deploy Data Applications

20 - AI tool Sites

Streamlit

Streamlit is a web application framework that allows users to create interactive web applications effortlessly using Python. It enables data scientists and developers to build and deploy data-driven applications quickly and easily. With Streamlit, users can create interactive visualizations, dashboards, and machine learning models without the need for extensive web development knowledge. The platform provides a simple and intuitive way to turn data scripts into shareable web apps, making it ideal for prototyping, showcasing projects, and sharing insights with others.

Streamlit

Streamlit is a web application framework that allows data scientists and machine learning engineers to create interactive web applications quickly and easily. It simplifies the process of building and sharing data-focused applications by providing a simple Python script that can be turned into a shareable web app with just a few lines of code. With Streamlit, users can create interactive visualizations, dashboards, and machine learning models without the need for web development expertise.

SID

SID is a data ingestion, storage, and retrieval pipeline that provides real-time context for AI applications. It connects to various data sources, handles authentication and permission flows, and keeps information up-to-date. SID's API allows developers to retrieve the right piece of data for a given task, enabling them to build AI apps that are fast, accurate, and scalable. With SID, developers can focus on building their products and leave the data management to SID.

Amazon Web Services (AWS)

Amazon Web Services (AWS) is a comprehensive, evolving cloud computing platform from Amazon. It provides a broad set of global compute, storage, database, analytics, application, and deployment services that help organizations move faster, lower IT costs, and scale applications. With AWS, you can use as many or as few of its services as you need, and scale up or down as required with only a few minutes' notice. AWS has a global network of regions and availability zones, so you can deploy your applications and data in the locations that are optimal for you.

Unified DevOps platform to build AI applications

This is a unified DevOps platform to build AI applications. It provides a comprehensive set of tools and services to help developers build, deploy, and manage AI applications. The platform includes a variety of features such as a code editor, a debugger, a profiler, and a deployment manager. It also provides access to a variety of AI services, such as natural language processing, machine learning, and computer vision.

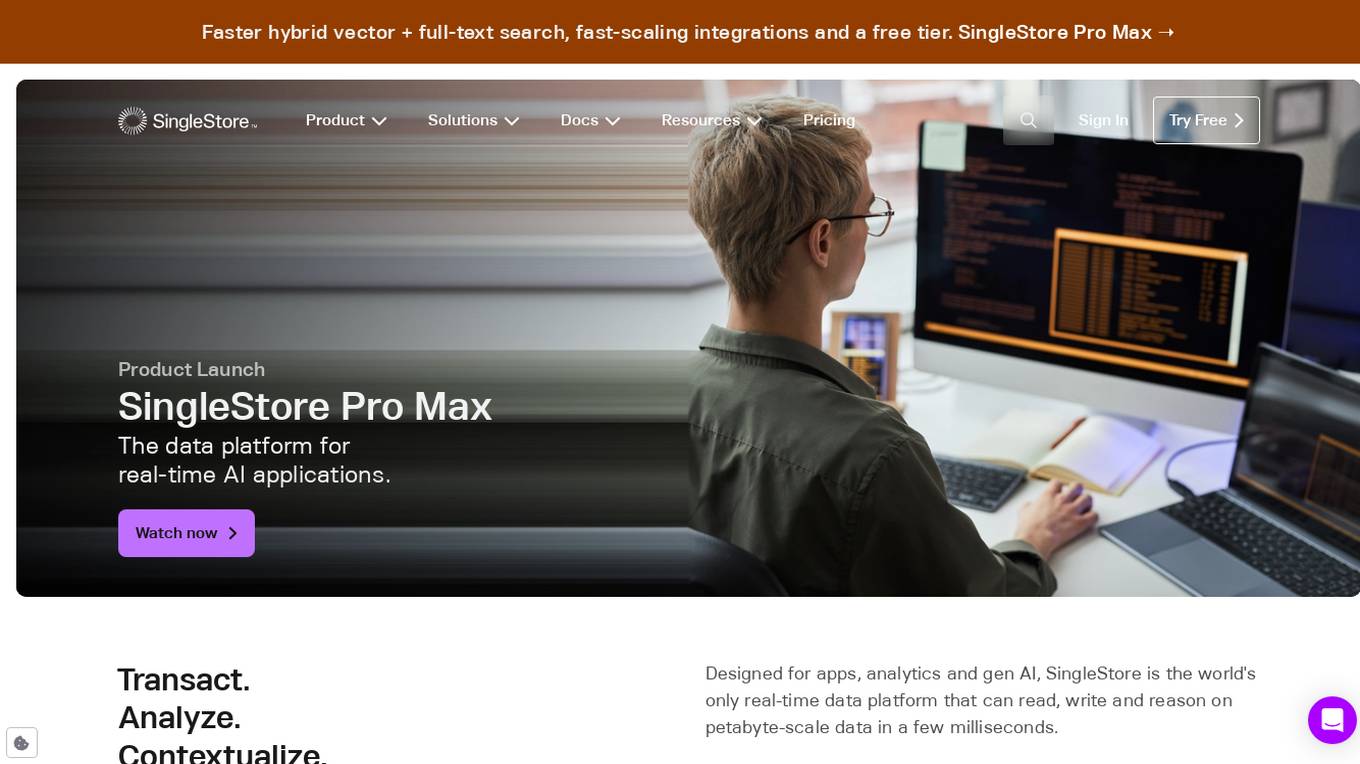

SingleStore

SingleStore is a real-time data platform designed for apps, analytics, and gen AI. It offers faster hybrid vector + full-text search, fast-scaling integrations, and a free tier. SingleStore can read, write, and reason on petabyte-scale data in milliseconds. It supports streaming ingestion, high concurrency, first-class vector support, record lookups, and more.
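To make the idea of hybrid vector + full-text search concrete, here is a minimal pure-Python sketch. It is not SingleStore's API or query syntax; the function names, the `alpha` weighting, and the sample documents are all assumptions chosen for illustration. It blends a cosine similarity score over embeddings with a simple keyword-match score.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def keyword_score(query, text):
    """Fraction of query terms that appear as whole words in the text."""
    terms = query.lower().split()
    words = set(text.lower().split())
    return sum(t in words for t in terms) / len(terms) if terms else 0.0


def hybrid_search(query, query_vec, docs, alpha=0.5, top_k=2):
    """Rank docs by a weighted blend of vector and keyword scores.

    alpha is a hypothetical tuning knob: 1.0 means vector-only,
    0.0 means keyword-only.
    """
    scored = []
    for doc in docs:
        score = (alpha * cosine(query_vec, doc["vec"])
                 + (1 - alpha) * keyword_score(query, doc["text"]))
        scored.append((score, doc["id"]))
    scored.sort(reverse=True)
    return [doc_id for _, doc_id in scored[:top_k]]


# Toy corpus with made-up two-dimensional embeddings.
DOCS = [
    {"id": "a", "text": "streaming ingestion of sensor events", "vec": [1.0, 0.0]},
    {"id": "b", "text": "vector search over product reviews", "vec": [0.0, 1.0]},
    {"id": "c", "text": "full-text search and vector search combined", "vec": [0.6, 0.8]},
]

results = hybrid_search("vector search", [0.0, 1.0], DOCS)
print(results)
```

A production system would compute both scores inside the database with proper indexes; the sketch only shows how the two signals can be combined into one ranking.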

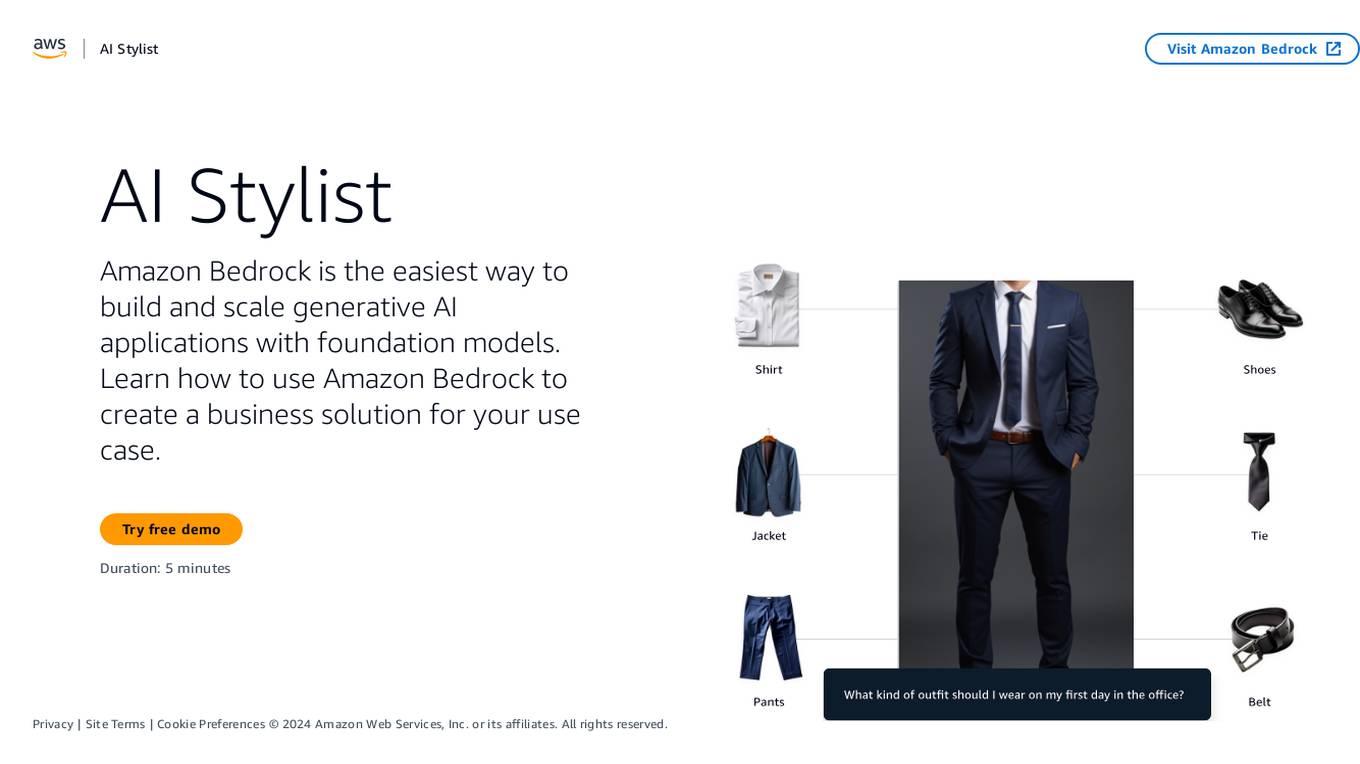

Amazon Bedrock

Amazon Bedrock is a fully managed AWS service for building generative AI applications. It gives developers access to foundation models from Amazon and other model providers through a single API, and it takes care of the infrastructure and operations so developers can focus on their applications. Bedrock also offers tools for customizing models with a developer's own data and for connecting models to company data sources.

Helix AI

Helix AI is a private GenAI platform that enables users to build AI applications using open source models. The platform offers tools for RAG (Retrieval-Augmented Generation) and fine-tuning, allowing deployment on-premises or in a Virtual Private Cloud (VPC). Users can access curated models, utilize Helix API tools to connect internal and external APIs, embed Helix Assistants into websites/apps for chatbot functionality, write AI application logic in natural language, and benefit from the innovative RAG system for Q&A generation. Additionally, users can fine-tune models for domain-specific needs and deploy securely on Kubernetes or Docker in any cloud environment. Helix Cloud offers free and premium tiers with GPU priority, catering to individuals, students, educators, and companies of varying sizes.
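The RAG (Retrieval-Augmented Generation) pattern mentioned above can be sketched in a few lines of plain Python. This is not Helix's implementation or API; the function names and sample passages are hypothetical, and the retrieval step uses naive word overlap where a real system would typically use embeddings. The sketch shows the two core steps: pick the most relevant passage, then place it in the prompt sent to the model.

```python
def tokenize(text):
    """Lowercase words with basic punctuation stripped."""
    return {w.strip(".,?!").lower() for w in text.split()}


def retrieve(question, passages, top_k=1):
    """Return the top_k passages sharing the most words with the question."""
    q = tokenize(question)
    ranked = sorted(passages, key=lambda p: len(q & tokenize(p)), reverse=True)
    return ranked[:top_k]


def build_prompt(question, passages):
    """Assemble a prompt that grounds the model in the retrieved context."""
    context = "\n".join(f"- {p}" for p in retrieve(question, passages))
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"


# Made-up knowledge base for illustration.
PASSAGES = [
    "Refunds are processed within five business days.",
    "Support is available by email on weekdays.",
]

prompt = build_prompt("How long do refunds take?", PASSAGES)
print(prompt)
```

The resulting prompt would then be passed to whichever language model the application uses; that call is left out because it depends on the chosen model and platform.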

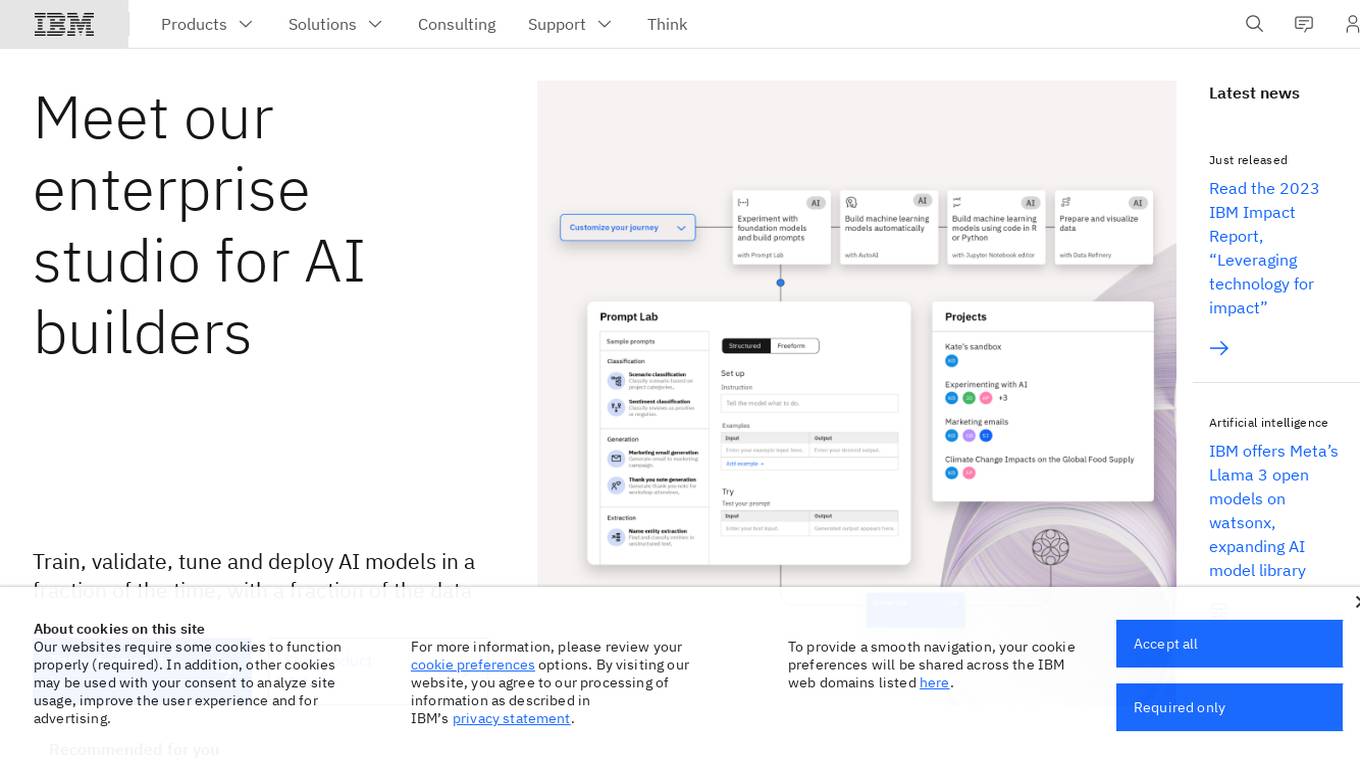

IBM Watsonx

IBM Watsonx is an enterprise studio for AI builders. It provides a platform to train, validate, tune, and deploy AI models quickly and efficiently. With Watsonx, users can access a library of pre-trained AI models, build their own models, and deploy them to the cloud or on-premises. Watsonx also offers a range of tools and services to help users manage and monitor their AI models.

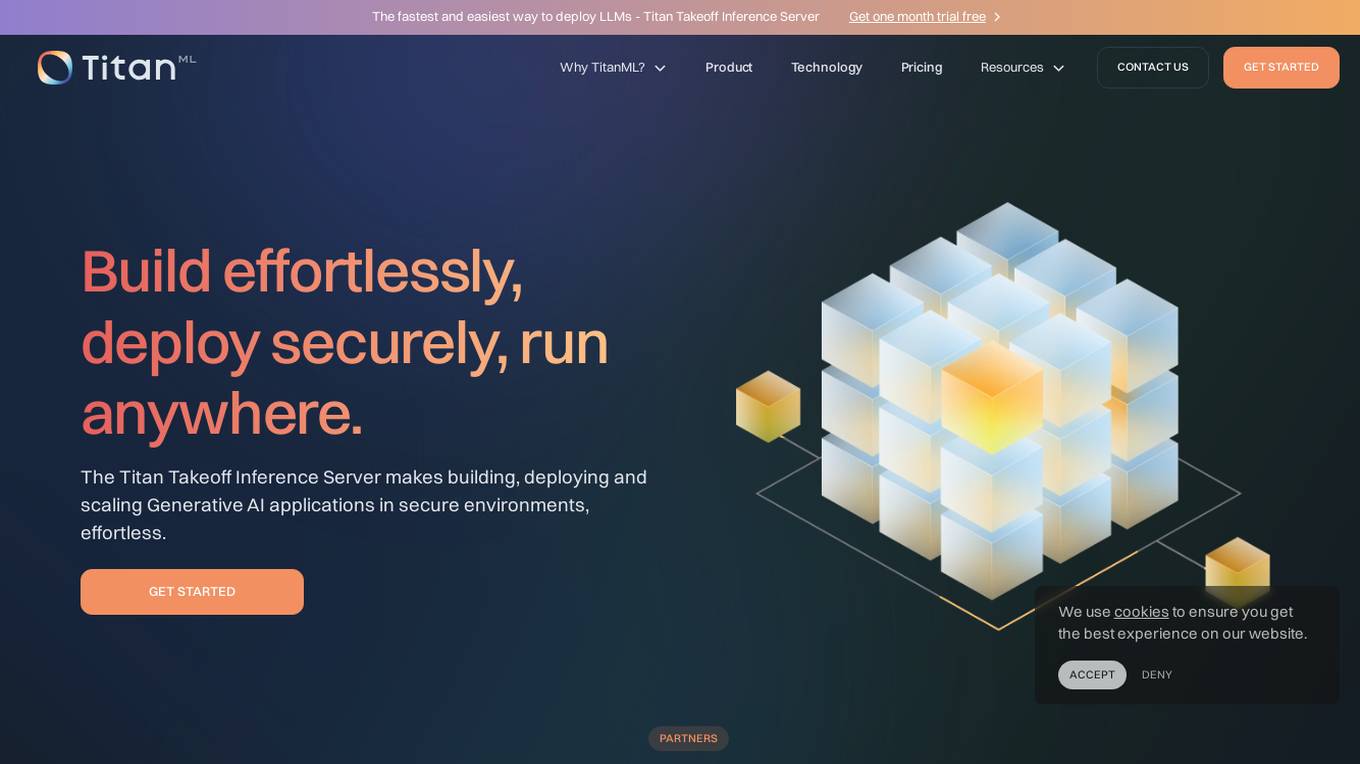

TitanML

TitanML is a platform that provides tools and services for deploying and scaling Generative AI applications. Their flagship product, the Titan Takeoff Inference Server, helps machine learning engineers build, deploy, and run Generative AI models in secure environments. TitanML's platform is designed to make it easy for businesses to adopt and use Generative AI, without having to worry about the underlying infrastructure. With TitanML, businesses can focus on building great products and solving real business problems.

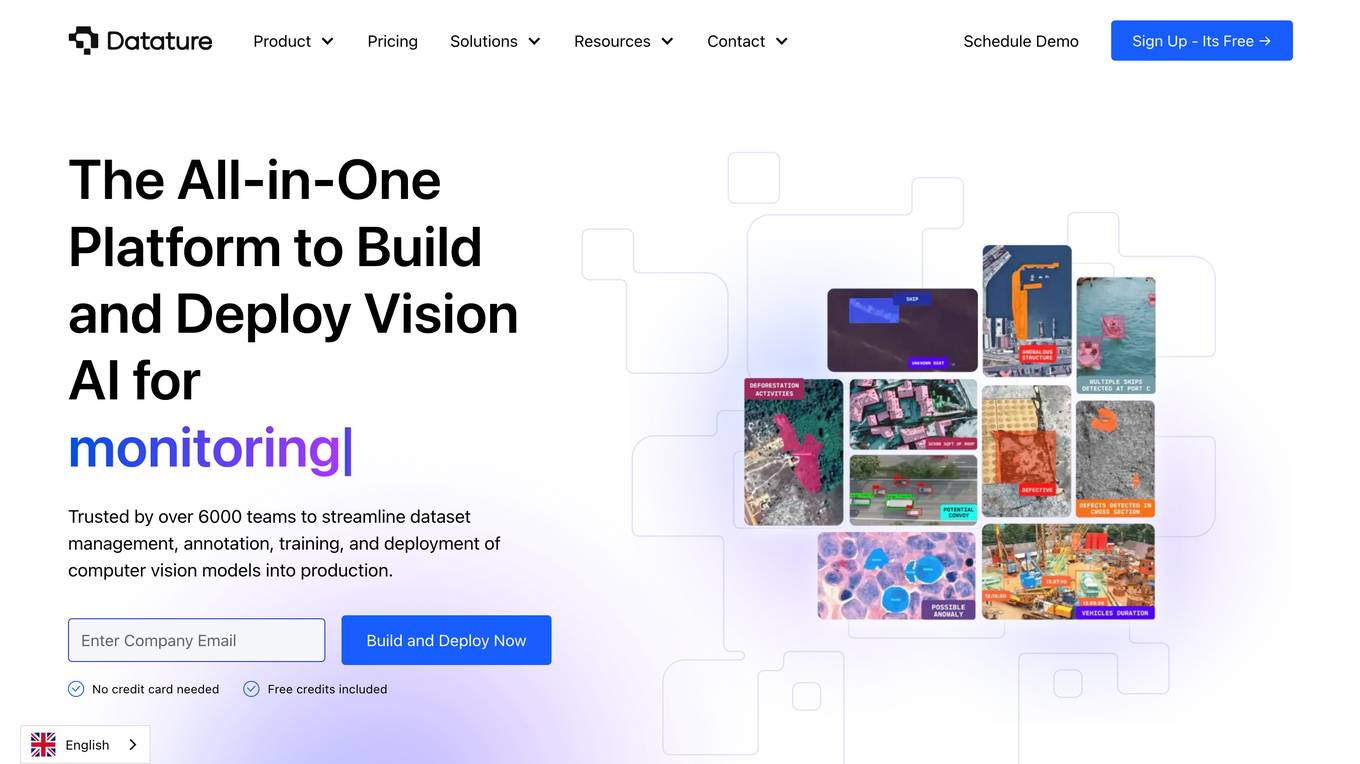

Datature

Datature is an all-in-one platform for building and deploying computer vision models. It provides tools for data management, annotation, training, and deployment, making it easy to develop and implement computer vision solutions. Datature is used by a variety of industries, including healthcare, retail, manufacturing, and agriculture.

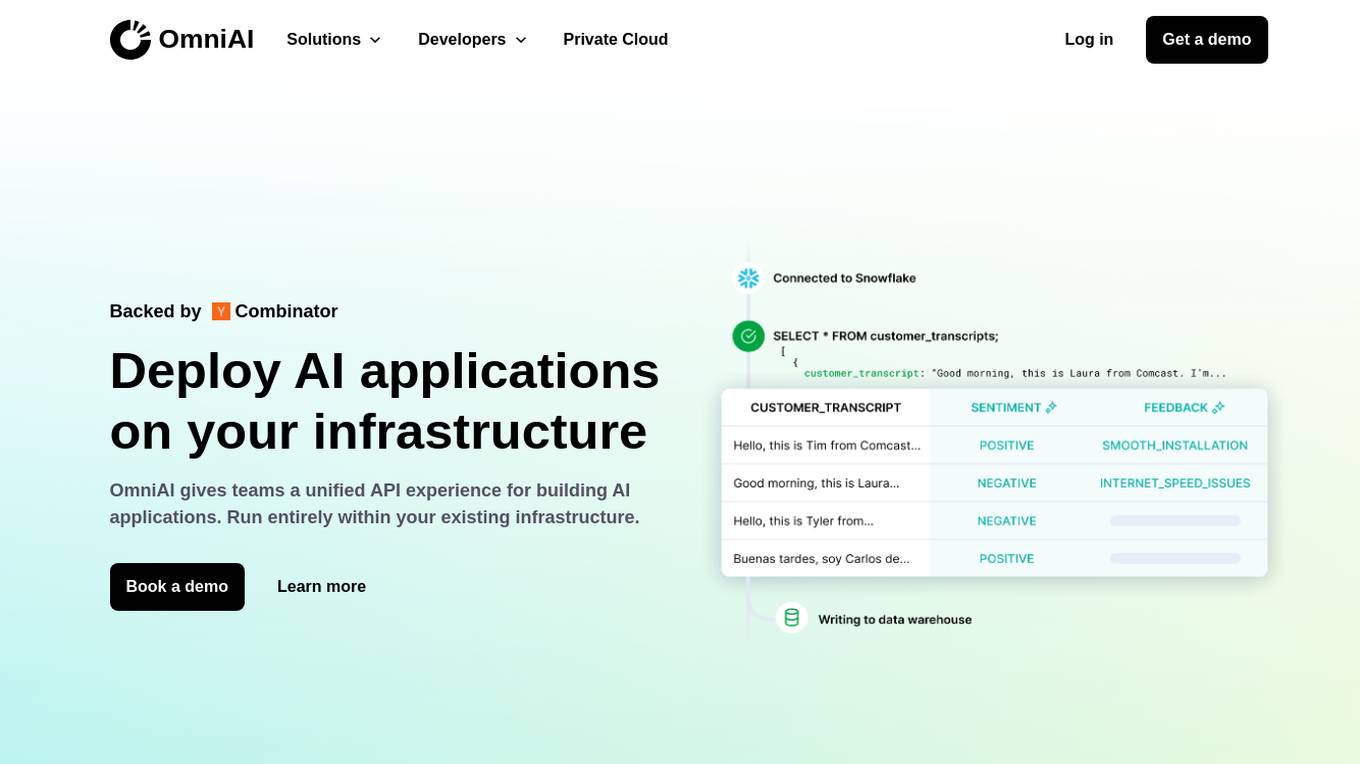

OmniAI

OmniAI is an AI tool that allows teams to deploy AI applications on their existing infrastructure. It provides a unified API experience for building AI applications and offers a wide selection of industry-leading models. With models such as Llama 3, Claude 3, Mistral Large, and Amazon Titan, OmniAI covers tasks such as natural language understanding and generation, with attention to safety, ethical behavior, and context retention. It also enables users to deploy and query the latest AI models quickly and easily within their virtual private cloud environment.

BentoML

BentoML is a platform for software engineers to build, ship, and scale AI products. It provides a unified AI application framework that makes it easy to manage and version models, create service APIs, and build and run AI applications anywhere. BentoML is used by over 1000 organizations and has a global community of over 3000 members.

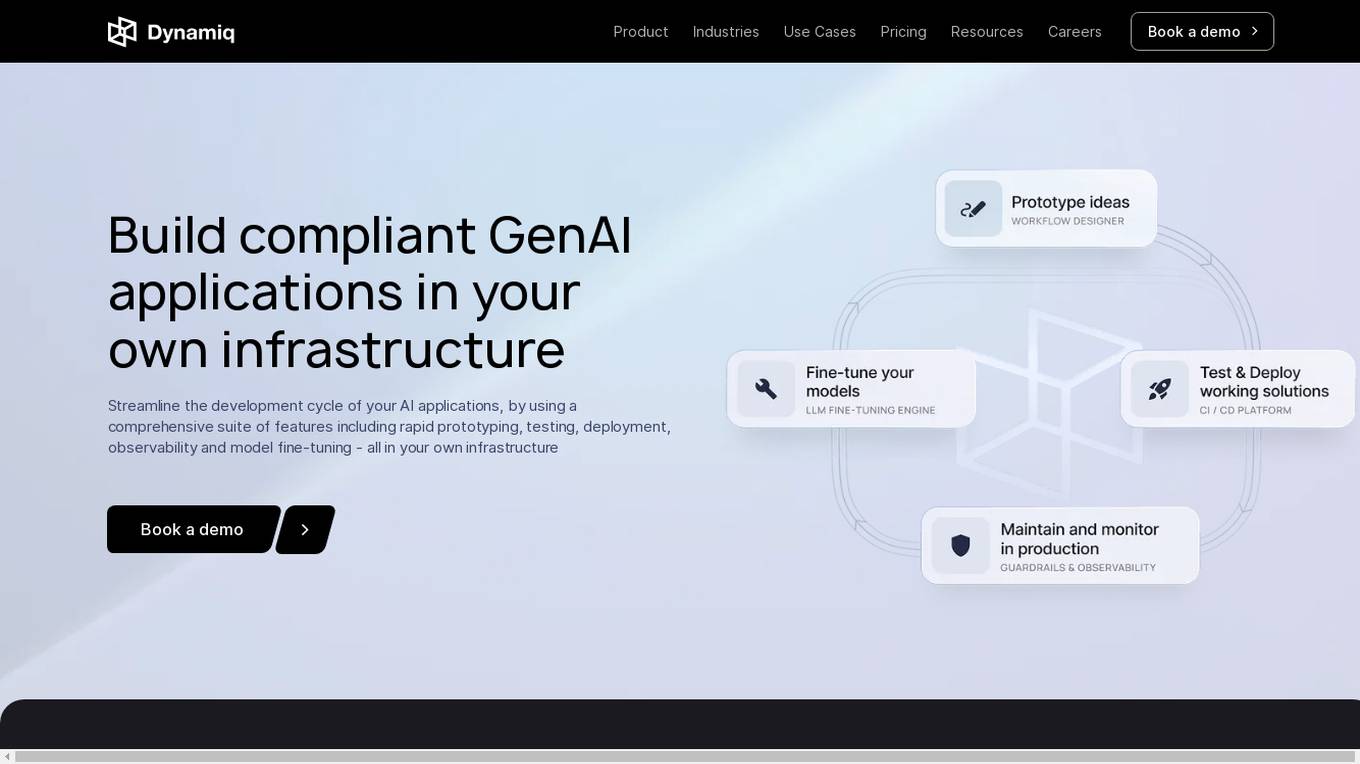

Dynamiq

Dynamiq is an operating platform for GenAI applications that enables users to build compliant GenAI applications in their own infrastructure. It offers a comprehensive suite of features including rapid prototyping, testing, deployment, observability, and model fine-tuning. The platform helps streamline the development cycle of AI applications and provides tools for workflow automations, knowledge base management, and collaboration. Dynamiq is designed to optimize productivity, reduce AI adoption costs, and empower organizations to establish AI ahead of schedule.

Myple

Myple is an AI application that enables users to build, scale, and secure AI applications with ease. It provides production-ready AI solutions tailored to individual needs, offering a seamless user experience. With support for multiple languages and frameworks, Myple simplifies the integration of AI through open-source SDKs. The platform features a clean interface, keyboard shortcuts for efficient navigation, and templates to kickstart AI projects. Additionally, Myple offers AI-powered tools like RAG chatbot for documentation, Gmail agent for email notifications, and AskFeynman for physics-related queries. Users can connect their favorite tools and services effortlessly, without any coding. Joining the beta program grants early access to new features and issue resolution prioritization.

LangChain

LangChain is a framework for developing applications powered by large language models (LLMs). It simplifies every stage of the LLM application lifecycle, including development, productionization, and deployment. LangChain consists of open-source libraries such as langchain-core, langchain-community, and partner packages. It also includes LangGraph for building stateful agents and LangSmith for debugging and monitoring LLM applications.

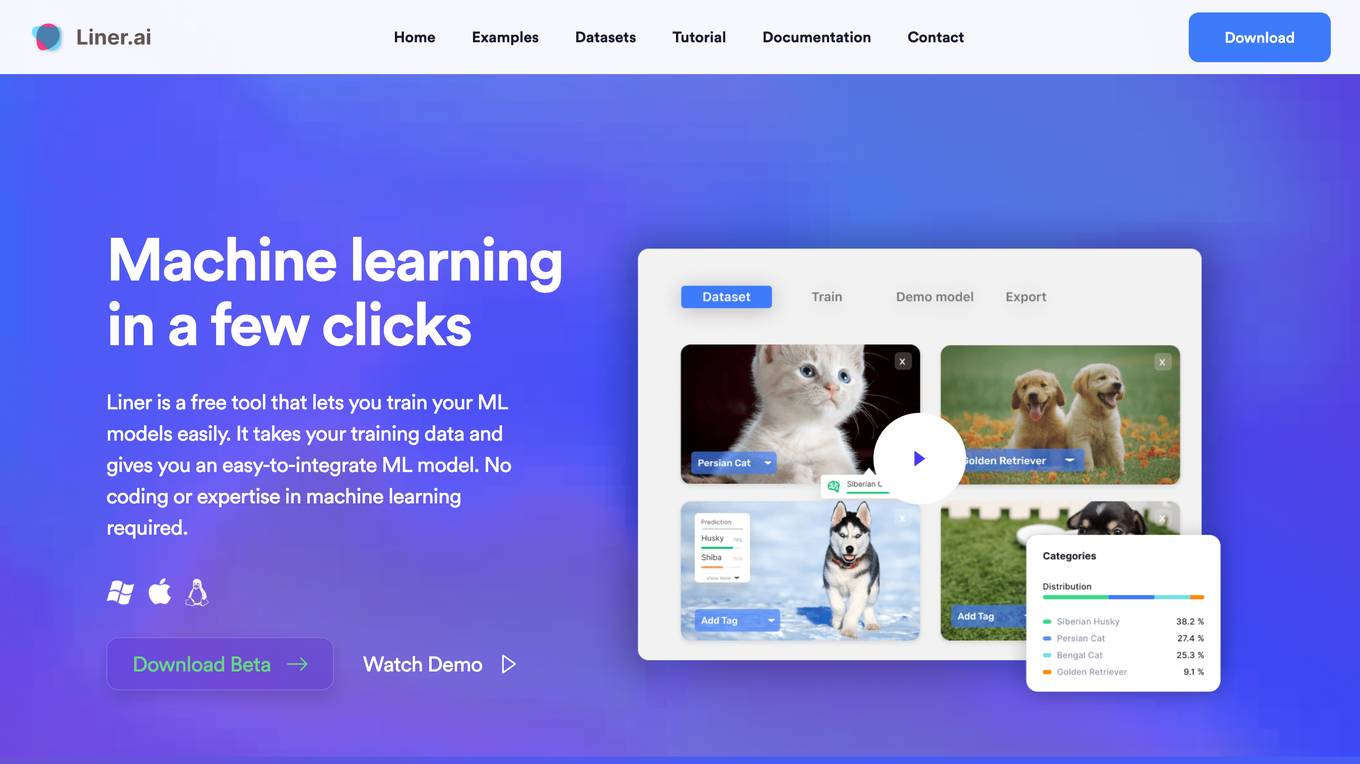

Liner.ai

Liner is a free and easy-to-use tool that allows users to train machine learning models without writing any code. It provides a user-friendly interface that guides users through the process of importing data, selecting a model, and training the model. Liner also offers a variety of pre-trained models that can be used for common tasks such as image classification, text classification, and object detection. With Liner, users can quickly and easily create and deploy machine learning applications without the need for specialized knowledge or expertise.

API Fabric

API Fabric is an AI API Generator that allows users to easily create and deploy APIs for their applications. With a user-friendly interface, API Fabric simplifies the process of generating APIs by providing pre-built templates and customization options. Users can quickly integrate AI capabilities into their projects without the need for extensive coding knowledge. The platform supports various AI models and algorithms, making it versatile for different use cases. API Fabric streamlines the API development process, saving time and effort for developers.

Vellum AI

Vellum AI is an AI platform that supports using Microsoft Azure hosted OpenAI models. It offers tools for prompt engineering, semantic search, prompt chaining, evaluations, and monitoring. Vellum enables users to build AI systems with features like workflow automation, document analysis, fine-tuning, Q&A over documents, intent classification, summarization, vector search, chatbots, blog generation, sentiment analysis, and more. The platform is backed by top VCs and founders of well-known companies, providing a complete solution for building LLM-powered applications.

JFrog ML

JFrog ML is an AI platform designed to streamline AI development from prototype to production. It offers a unified MLOps platform to build, train, deploy, and manage AI workflows at scale. With features like Feature Store, LLMOps, and model monitoring, JFrog ML empowers AI teams to collaborate efficiently and optimize AI & ML models in production.

1 - Open Source AI Tools

SQLAgent

DataAgent is a multi-agent system for data analysis, capable of understanding data development and data analysis requirements, understanding data, and generating SQL and Python code for tasks such as data query, data visualization, and machine learning.

20 - OpenAI GPTs

Data Engineer Consultant

Guides in data engineering tasks with a focus on practical solutions.

Streamlit Assistant

This GPT can read all of the Streamlit documentation and helps you with Streamlit.

Cloudwise Consultant

Expert in cloud-native solutions, provides tailored tech advice and cost estimates.

TensorFlow Oracle

I'm an expert in TensorFlow, providing detailed, accurate guidance for all skill levels.

TonyAIDeveloperResume

Chat with my resume to see if I am a good fit for your AI related job.

Personality AI Creator

I will create a quality data set for a personality AI. Just dive into each module by saying its name, and do so for all the modules. If you find it useful, share it with your friends.