Best AI Tools for Debug Requests

20 AI Tool Sites

Lokal.so

Lokal.so is an AI-powered tool for local development. It lets you share your localhost publicly, debug incoming requests, and develop with help from an AI assistant. Lokal.so uses Cloudflare's network for faster site delivery, includes a built-in S3 server for easy file debugging, and automatically converts JSON payloads into models in different programming languages. It aims to simplify local development with a self-hosted tunnel server, unlimited .local domains, and endpoint management with memorable names.
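Converting a JSON payload into a typed model is easier to picture with a small example. The following is a minimal, hypothetical sketch, not Lokal.so's implementation: it assumes TypeScript as the target language and infers an interface from a single sample request body. The function names `infer_type` and `json_to_interface` are illustrative only.

```python
import json
from typing import Any


def infer_type(value: Any) -> str:
    """Map a decoded JSON value to a TypeScript type name (illustrative only)."""
    if isinstance(value, bool):  # check bool before int: bool is a subclass of int
        return "boolean"
    if isinstance(value, (int, float)):
        return "number"
    if isinstance(value, str):
        return "string"
    if value is None:
        return "null"
    if isinstance(value, list):
        # Use the first element as a representative; an empty list becomes unknown[]
        return f"{infer_type(value[0])}[]" if value else "unknown[]"
    if isinstance(value, dict):
        # Nested objects are rendered as inline object types
        fields = "; ".join(f"{k}: {infer_type(v)}" for k, v in value.items())
        return "{ " + fields + " }"
    return "unknown"


def json_to_interface(name: str, payload: str) -> str:
    """Render a TypeScript interface declaration for a top-level JSON object."""
    data = json.loads(payload)
    if not isinstance(data, dict):
        raise ValueError("top-level JSON value must be an object")
    lines = [f"interface {name} {{"]
    for key, value in data.items():
        lines.append(f"  {key}: {infer_type(value)};")
    lines.append("}")
    return "\n".join(lines)


if __name__ == "__main__":
    # Hypothetical request body captured while debugging an endpoint
    body = '{"id": 7, "email": "a@example.com", "active": true, "tags": ["x"], "meta": {"retries": 2}}'
    print(json_to_interface("IncomingRequest", body))
```

Inferring types from one sample is a simplification: a fuller converter would merge several payloads, mark fields that are sometimes missing as optional, and quote keys that are not valid identifiers.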

Langtrace AI

Langtrace AI is an open-source observability tool from Scale3 Labs for monitoring, evaluating, and improving large language model (LLM) applications. It collects and analyzes traces and metrics to provide insight into the ML pipeline, and it is SOC 2 Type II certified. Langtrace supports popular LLMs, frameworks, and vector databases, offering end-to-end observability so you can build and deploy AI applications with confidence.

NoAGI

NoAGI is an AI tool that helps you write better code. It uses natural language processing to understand your code and suggest improvements. NoAGI can help you with a variety of coding tasks, including code generation, code completion, and code refactoring.

Helicone

Helicone is an open-source observability platform for developers, covering logging, monitoring, and debugging. It offers sub-millisecond latency impact, 100% log coverage, industry-leading query times, and readiness for production workloads. Built on Cloudflare Workers for low latency and high reliability, Helicone provides 99.99% uptime, scalability, and prompt management, and it is trusted by thousands of companies and developers. It also supports risk-free experimentation and prompt security, along with tools for monitoring, analyzing, and managing requests.

Bugpilot

Bugpilot is an error monitoring tool specifically designed for React applications. It offers a comprehensive platform for error tracking, debugging, and user communication. With Bugpilot, developers can easily integrate error tracking into their React applications without any code changes or dependencies. The tool provides a user-friendly dashboard that helps developers quickly identify and prioritize errors, understand their root causes, and plan fixes. Bugpilot also includes features such as AI-assisted debugging, session recordings, and customizable error pages to enhance the user experience and reduce support requests.

Anywhere GPT

Anywhere GPT is a web-based platform that allows users to access a large language model, similar to ChatGPT, without the need to install any software or create an account. The platform is designed to be simple and easy to use, with a focus on providing users with quick and accurate responses to their questions and requests.

Code Companion AI

Code Companion AI is a desktop application powered by OpenAI's ChatGPT that performs a wide range of coding tasks. It streamlines project management through a chatbot interface that can execute shell commands, generate code, run database queries, and review your existing code. Each task is as simple as sending a message: ask it to create a .gitignore file or deploy an app on AWS, and CodeCompanion.AI does it for you. Download CodeCompanion.AI from its website to use all its features across various programming languages and platforms.

Debug Sage

Debug Sage is a website that helps users understand and troubleshoot errors in their software applications. It provides detailed insights into various types of errors so users can identify and resolve issues efficiently. With a user-friendly interface and additional resources and tools, Debug Sage aims to streamline debugging for developers and software engineers and help them tackle complex errors.

Whybug

Whybug is an AI tool designed to help developers debug their code by providing explanations for errors. By utilizing a large language model trained on data from StackExchange and other sources, Whybug can predict the causes of errors and suggest fixes. Users can simply paste an error message and receive detailed explanations on how to resolve the issue. The tool aims to streamline the debugging process and improve code quality.

New Relic

New Relic is an AI-powered monitoring platform that offers an all-in-one observability solution for monitoring, debugging, and improving the entire technology stack. With more than 30 capabilities and 750+ integrations, it uses AI to help users gain insights and optimize performance across their infrastructure, applications, and digital experiences.

Rerun

Rerun is an SDK, time-series database, and visualizer for temporal and multimodal data. It is used in fields like robotics, spatial computing, 2D/3D simulation, and finance to verify, debug, and explain data. Rerun allows users to log data like tensors, point clouds, and text to create streams, visualize and interact with live and recorded streams, build layouts, customize visualizations, and extend data and UI functionalities. The application provides a composable data model, dynamic schemas, and custom views for enhanced data visualization and analysis.

Snaplet

Snaplet is a data management tool for developers that provides AI-generated dummy data for local development, end-to-end testing, and debugging. It uses a real programming language (TypeScript) to define and edit data, ensuring type safety and auto-completion. Snaplet understands database structures and relationships, automatically transforming personally identifiable information and seeding data accordingly. It integrates seamlessly into development workflows, providing data where it's needed most: on local machines, for CI/CD testing, and preview environments.

Langfuse

Langfuse is an AI tool for building and debugging large language model (LLM) applications, offered through tools such as the Langfuse TypeScript SDK v4. It provides tracing, prompt management, evaluation, and metrics to improve the performance of LLM applications. Langfuse is backed by a team of experts and integrates with various platforms and SDKs. The tool aims to simplify the development of complex LLM applications and improve overall efficiency.

SourceAI

SourceAI is an AI-powered code generator that lets users generate code in any programming language. It is easy to use, even for non-developers, with a clear and intuitive interface. SourceAI is powered by OpenAI's GPT-3 and Codex models. It can generate code for a variety of tasks, such as calculating the factorial of a number, finding the roots of a polynomial, or translating text from one language to another.

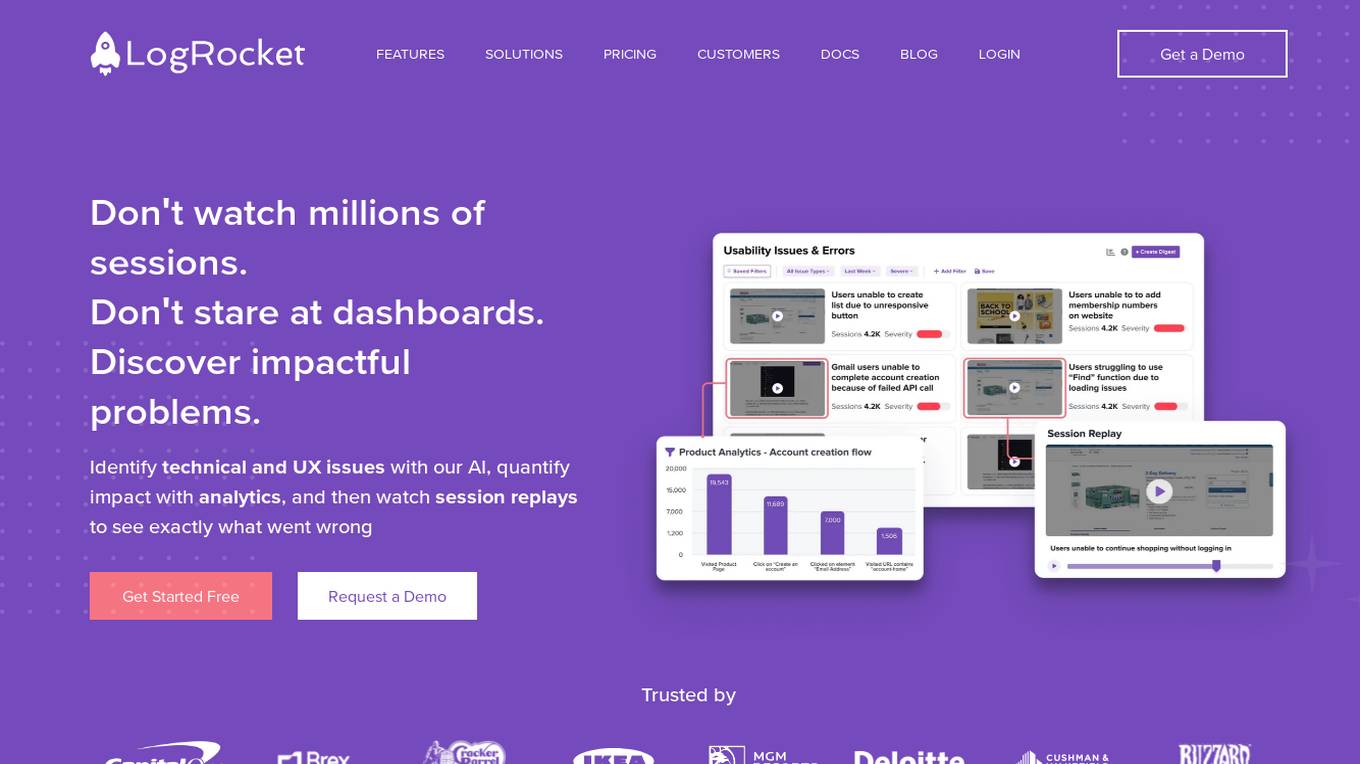

LogRocket

LogRocket is a session replay, product analytics, and issue detection platform that helps software teams deliver better web and mobile experiences. With LogRocket, you can see exactly what users experienced in your app, including DOM playback, console and network logs, errors, and performance data. It surfaces the most impactful user issues through JavaScript errors, network errors, stack traces, automatic triaging, and alerting. LogRocket also provides product analytics to show how users interact with your app, and UX analytics to visualize how they experience it at both the individual and aggregate level.

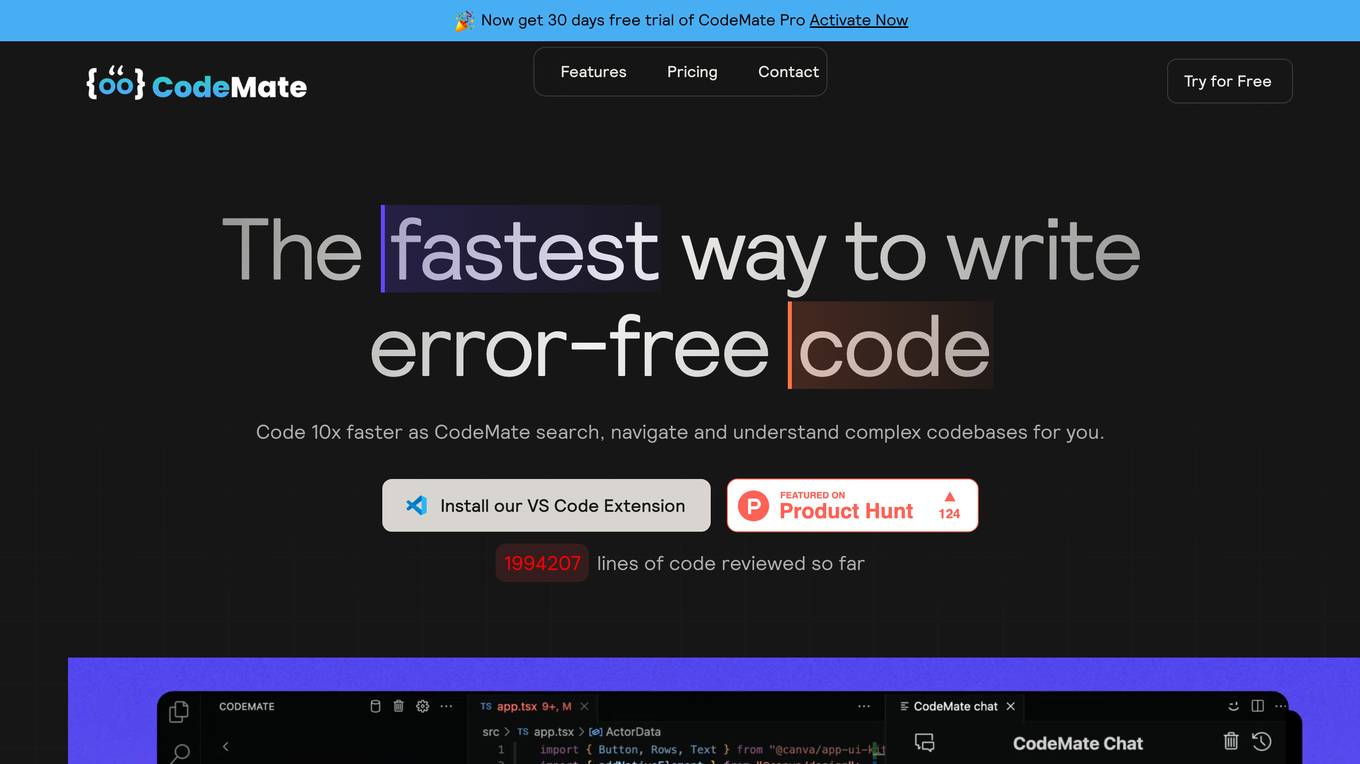

CodeMate

CodeMate is an AI pair programmer designed to help developers write error-free code faster. Its features include code navigation, help with understanding complex codebases, an intuitive interface, instant debugging, code refactoring, and AI-powered code reviews. CodeMate supports all programming languages and suggests code optimizations. It keeps user code secure and private and offers pricing plans for individual developers, teams, and enterprises. With CodeMate Chat, users can interact with their codebase, documentation, and Git repositories. The tool aims to improve code quality and productivity by acting as a co-developer while you program.

RagaAI Catalyst

RagaAI Catalyst is an AI observability, monitoring, and evaluation platform for observing, evaluating, and debugging AI agents at every stage of agentic AI workflows. Its features include trace-data visualization and comprehensive trace logging, instrumentation and monitoring of tools and agents, AI performance enhancement, agentic testing with evaluation of each agent step, custom evaluation logic, enterprise-grade experiment management, fine-tuning with human feedback, synthetic data generation, and fast, precise LLM testing aimed at secure and reliable outputs. The platform is trusted by AI leaders worldwide and provides a comprehensive suite of tools for AI developers and enterprises.

Langtail

Langtail is a platform that helps developers build, test, and deploy AI-powered applications. It provides a suite of tools to help developers debug prompts, run tests, and monitor the performance of their AI models. Langtail also offers a community forum where developers can share tips and tricks, and get help from other users.

Zazzani AI Buddy

Zazzani AI Buddy is an AI-powered platform that lets users write and debug code, write articles, and communicate with AI in multiple languages. It boosts productivity by generating ideas, giving context-specific answers, and automating monotonous tasks. Users can sign up to receive updates and contribute to the platform's growth. Zazzani AI Buddy aims to streamline workflows and inspire creativity through its AI capabilities.

AtozAi

AtozAi is an AI application designed to empower developers by providing AI-powered tools that enhance coding efficiency and productivity. The platform offers features such as AI-driven code debugging, efficient code conversion, smart regex generation, comprehensive code explanations, and instant text explanations. AtozAi aims to cover a wide range of coding tasks with specialized AI algorithms, continually expanding its toolkit to make tasks easier, more efficient, and creative for developers.

0 Open Source AI Tools

20 OpenAI GPTs

Angular: Tu amigo experto desarrollador (Angular: Your Expert Developer Friend)

Helps you find solutions to problems with the Angular framework.

TypeScript Engineer

An expert TypeScript engineer to help you solve and debug problems together.

Deluge Developer by TechBloom

An expert Zoho Deluge developer trained to write and debug Deluge functions for Zoho CRM.

The Dock - Your Docker Assistant

Technical assistant specializing in Docker and Docker Compose. Let's debug!

Gif-PT

GIF generator. Uses DALL·E 3 to make a sprite sheet, then Code Interpreter to slice and animate it. Includes automatic refinement and a debug mode. v1.2 GPTavern

María Dolores

Inspired by a TV character, lives on a farm, analytical and philosophical, with a 'DEBUG' mode.