Best AI tools for LLM App Developers

20 - AI Tool Sites

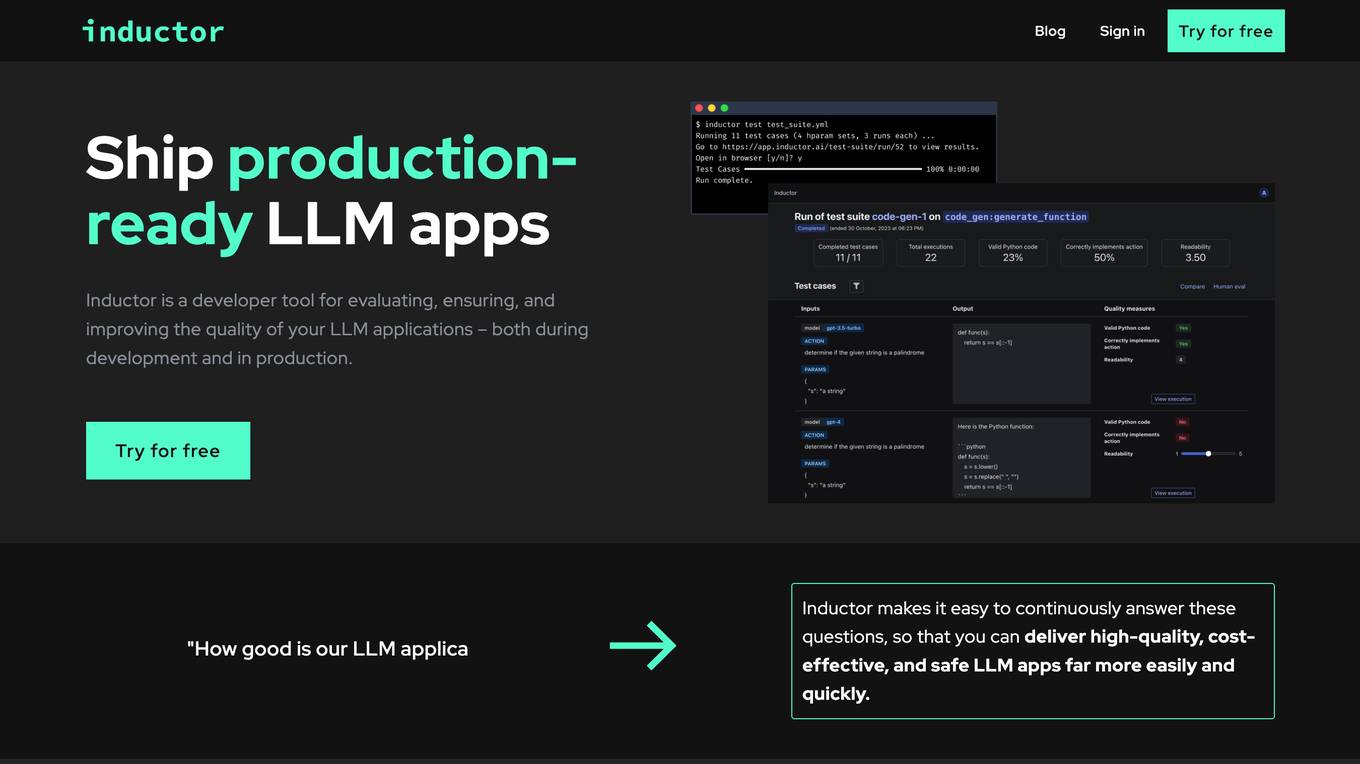

Inductor

Inductor is a developer tool for evaluating, ensuring, and improving the quality of your LLM applications, both during development and in production. It provides a workflow for continuous testing and evaluation as you develop, so you always know your LLM app's quality. You can systematically improve quality and cost-effectiveness by understanding your LLM app's behavior and quickly testing different app variants, and you can rigorously assess that behavior before you deploy to ensure quality and cost-effectiveness once you're live. In production, Inductor lets you monitor live traffic to detect and resolve issues, analyze usage, and feed those findings back into your development process. It also supports collaboration between engineering and other roles, collecting human feedback from non-engineering stakeholders (e.g., PM, UX, or subject matter experts) to ensure your LLM app is user-ready.

Backmesh

Backmesh is an AI tool that runs as a proxy on edge CDN servers, giving apps secure, direct access to LLM APIs without a backend or SDK. Apps call LLM APIs through the proxy, which protects those calls with JWT verification and rate limits. Backmesh also provides user analytics for LLM API calls, helping you identify usage patterns and improve user satisfaction in AI applications.
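Backmesh's own rate-limiting implementation is not described here. As a rough illustration of what per-user rate limiting on an LLM API proxy involves, here is a minimal sliding-window limiter sketch in Python. It assumes the user ID has already been taken from a verified JWT (the JWT check itself is not shown), and the class and method names are hypothetical, not part of any Backmesh API.

```python
import time
from collections import defaultdict, deque


class SlidingWindowLimiter:
    """Hypothetical sketch: allow at most `limit` calls per user within `window` seconds."""

    def __init__(self, limit: int, window: float, clock=time.monotonic):
        self.limit = limit
        self.window = window
        self.clock = clock  # injectable clock so the behavior can be tested
        # Per-user queue of timestamps for recently accepted calls.
        self.calls = defaultdict(deque)

    def allow(self, user_id: str) -> bool:
        """Return True and record the call if the user is under the limit."""
        now = self.clock()
        q = self.calls[user_id]
        # Drop timestamps that have fallen outside the current window.
        while q and now - q[0] >= self.window:
            q.popleft()
        if len(q) >= self.limit:
            return False  # over the limit: a proxy would typically reject with HTTP 429
        q.append(now)
        return True


if __name__ == "__main__":
    fake_time = [0.0]
    limiter = SlidingWindowLimiter(limit=2, window=60, clock=lambda: fake_time[0])
    print(limiter.allow("alice"))  # True
    print(limiter.allow("alice"))  # True
    print(limiter.allow("alice"))  # False (third call within 60 s)
    print(limiter.allow("bob"))    # True (limits are tracked per user)
    fake_time[0] = 61.0
    print(limiter.allow("alice"))  # True (earlier calls have left the window)
```

A sliding window is used here because it avoids the burst at window boundaries that a fixed-window counter allows; a production proxy on edge servers would also need shared storage for the counters, which this sketch leaves out.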

Grit Brokerage

Grit Brokerage is a domain and website brokerage platform that facilitates the buying and selling of domains. Users can inquire about domain prices, submit offers, and connect with domain brokers. The platform also features testimonials, blog posts, and a newsletter for updates and special offers. Grit Brokerage aims to provide a seamless experience for individuals and businesses looking to acquire or sell domain names.

Devv

Devv describes itself as the first AI coding agent built for efficiently creating full-stack AI products. It offers native integrations for authentication, LLMs, database management, and image generation. Aimed at indie developers and small teams, Devv simplifies app development by turning ideas or plain descriptions into functional software.

Private LLM

Private LLM is a secure, local, and private AI chatbot for iOS and macOS devices. It runs offline, so user data stays on the device. The app offers text generation and language assistance features, using state-of-the-art quantization techniques to deliver high-quality on-device AI without compromising privacy. Users can choose from a range of open-source LLMs, integrate AI into Siri and Shortcuts, and use AI language services across macOS apps. Private LLM emphasizes strong model performance and user privacy, making it a secure tool for creative and productive tasks.

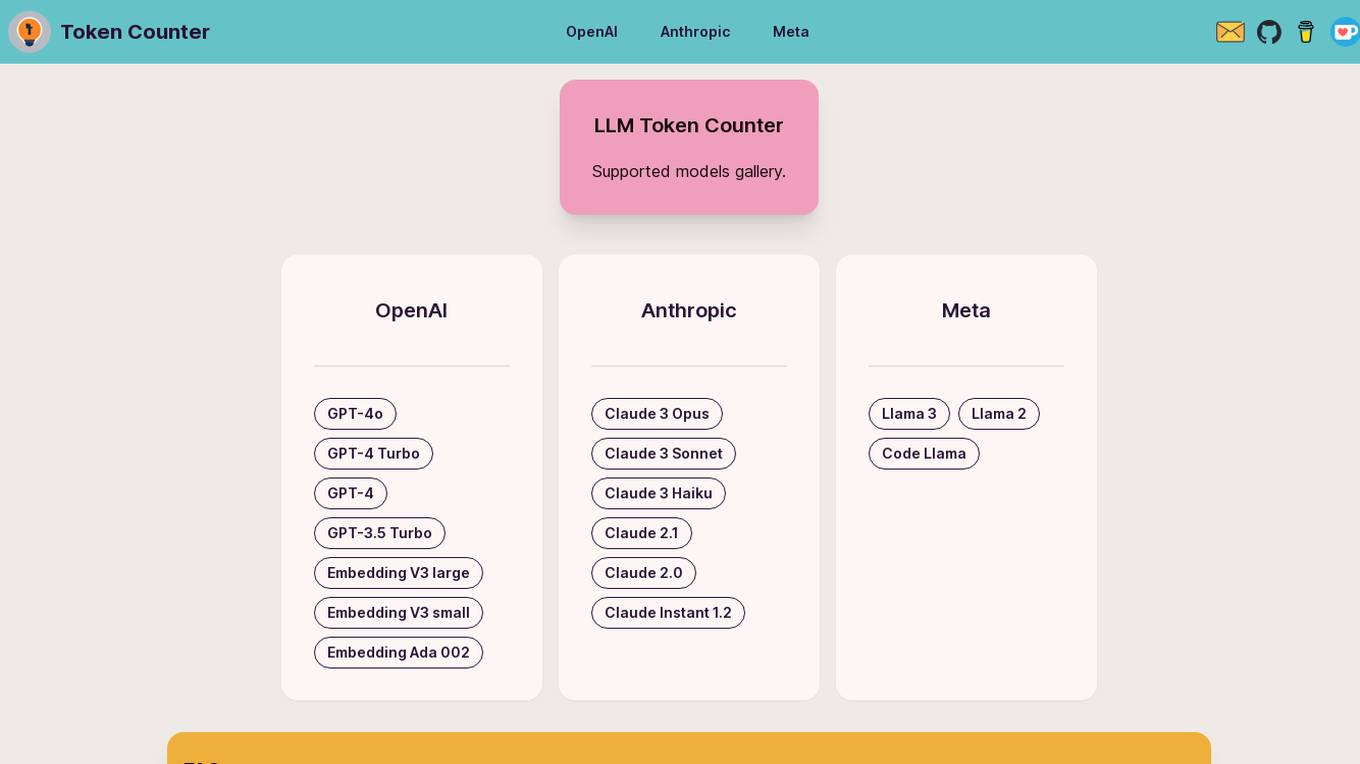

LLM Token Counter

The LLM Token Counter helps users manage token limits for various large language models (LLMs), such as GPT-3.5, GPT-4, Claude-3, Llama-3, and more. It uses Transformers.js, a JavaScript implementation of the Hugging Face Transformers library, to calculate token counts client-side. Because counting happens in the browser, prompts are never sent to external servers, which keeps your data private.

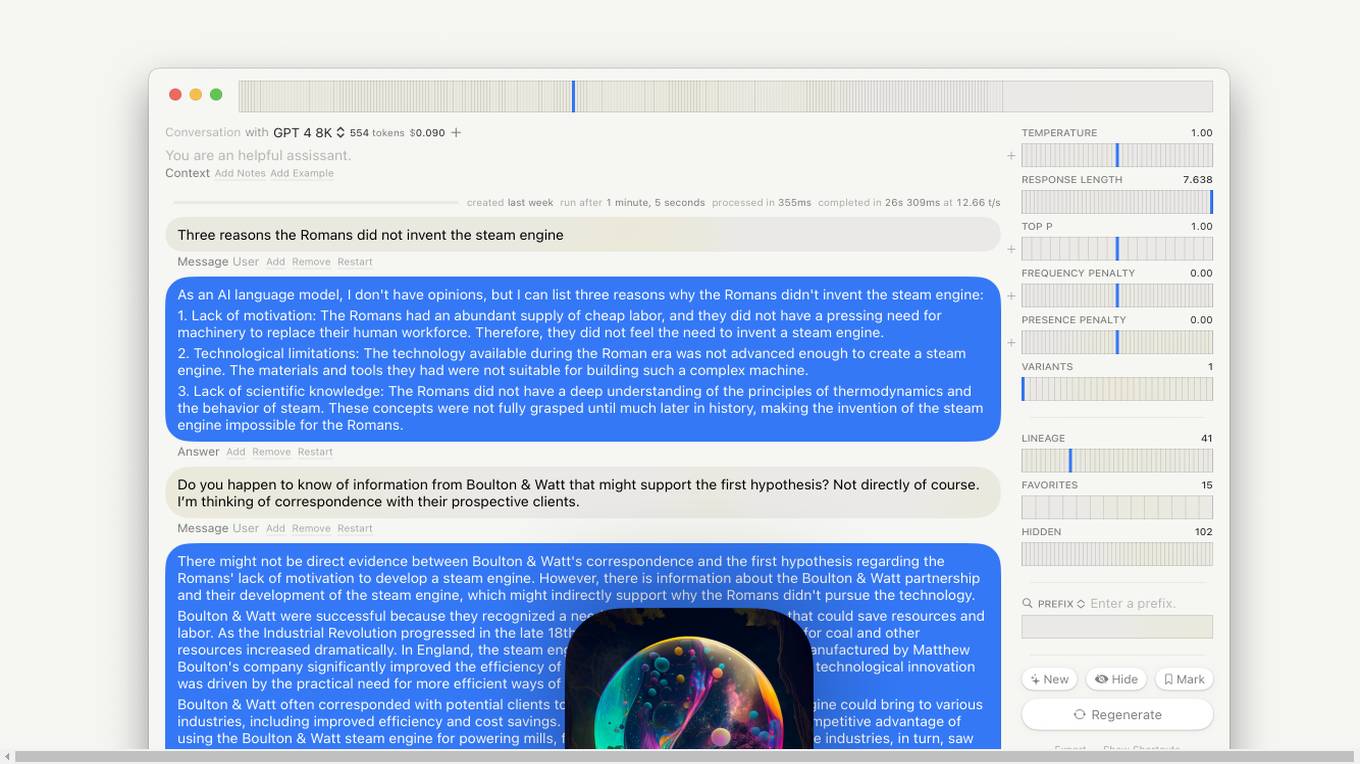

Lore macOS GPT-LLM Playground

Lore macOS GPT-LLM Playground is an AI tool for macOS users. Its features include multi-model support, time-travel versioning, combinatorial runs, variants, full-text search, model-cost awareness, API and token stats, custom endpoints, local models, and tables. The interface also supports syntax highlighting, LaTeX, notes, export, keyboard shortcuts, Vim mode, and a sandbox. The app is built with Cocoa, SwiftUI, and SQLite, is designed with privacy in mind, and offers support and a feedback channel.

Empower

Empower is a serverless hosting and developer platform for fine-tuned LLMs. It provides prebuilt, task-specific base models that it says deliver GPT-4-level response quality, and claims users can save up to 80% on LLM bills by changing just five lines of code. With Empower, users own their models, get cost-effective serving without compromising performance, and pay per token. The platform aims to make deploying and serving fine-tuned LLMs simple, efficient, and cost-effective.

RecurseChat

RecurseChat is a personal AI chat app that is local, offline, and private. Users can chat with a local LLM, import their ChatGPT history, use multiple models in one chat session, and provide multimodal input. It is also highly customizable.

Flowise

Flowise is an open-source, low-code tool that lets developers build customized LLM orchestration flows and AI agents. It provides a drag-and-drop interface, prebuilt app templates, conversational agents with memory, and straightforward deployment to cloud platforms. Flowise is backed by Y Combinator and used by teams around the globe.

Weights & Biases

Weights & Biases is an AI developer platform with documentation, guides, tutorials, and support for using AI models in applications. It has two main products: W&B Weave, for integrating AI models into your code, and W&B Models, for building custom AI models. Features include tracing, output evaluation, cost estimates, hyperparameter sweeps, and a model registry, among others. Weights & Biases aims to simplify working with AI models and to improve model reproducibility.

Faraday.dev

Faraday.dev is an offline-first, zero-configuration, desktop app that supports chatting with AI Characters. With Faraday.dev, you can run over 100 different open-source LLMs all on your machine without needing to touch the command line. Faraday.dev also supports Llama 2 models and GPU acceleration.

LangChain

LangChain offers a suite of products that support developers across the LLM application lifecycle: a framework for building LLM-powered apps, visibility into app performance, and a turnkey solution for serving APIs. It lets developers build context-aware, reasoning applications and future-proof them through vendor optionality, so they can switch providers as needs change. LangSmith, part of the LangChain product suite, helps teams improve accuracy and performance, iterate faster, and ship new AI features. LangChain positions these tools as a way to drive operational efficiency, improve discovery and personalization, and deliver premium, revenue-generating products.

OpenClaw

OpenClaw is an open-source personal AI assistant and autonomous agent that runs on your local machine, giving you privacy and control over your data. It can manage emails, calendars, and flights, and you interact with it through popular chat apps. OpenClaw is designed to be proactive, autonomous, and highly customizable, with a focus on privacy and local control, so the assistant adapts to each user's needs and preferences.

Owlbot

Owlbot describes itself as one of the most advanced AI chatbot platforms in the world, helping companies use AI to give instant answers to customers, clients, and employees. It simplifies data analysis, integrates data from multiple sources, and offers customizable chatbot interfaces. Features include data integration, chatbot interface customization, conversation supervision, function calling, and lead generation. Its advantages include efficient data analysis, multilingual support, instant answers, a choice of LLMs, and lead generation. Its disadvantages include potential data security concerns, the need for some user expertise, and more limited customer interaction than human operators provide.

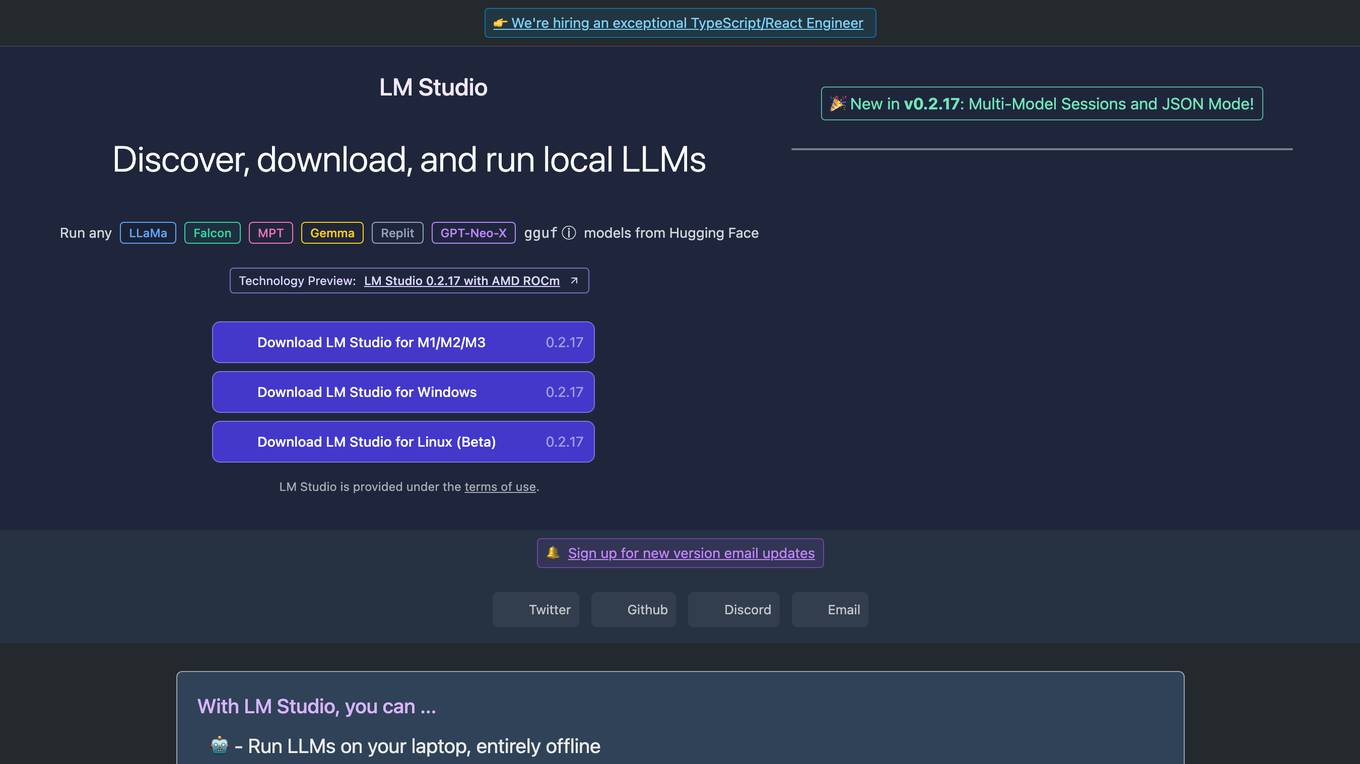

LM Studio

LM Studio is an AI tool for discovering, downloading, and running local LLMs (large language models). Users can run LLMs offline on their laptops, use models through an in-app chat UI or a local server, download compatible model files from Hugging Face repositories, and discover new LLMs. LM Studio states that it does not collect data or monitor user actions, which makes it suitable for both personal and business use. It supports models such as ggml Llama, MPT, and StarCoder from Hugging Face, and lists minimum hardware and software requirements for each platform.

Faune

Faune is an anonymous AI chat app that gives users direct access to large language models (LLMs) such as GPT-3, GPT-4, and Mistral. It prioritizes privacy and offers features such as a dynamic prompt editor, support for multiple LLMs, and a built-in image processor. With Faune, users can have rich AI conversations without creating an account or going through a complex setup.

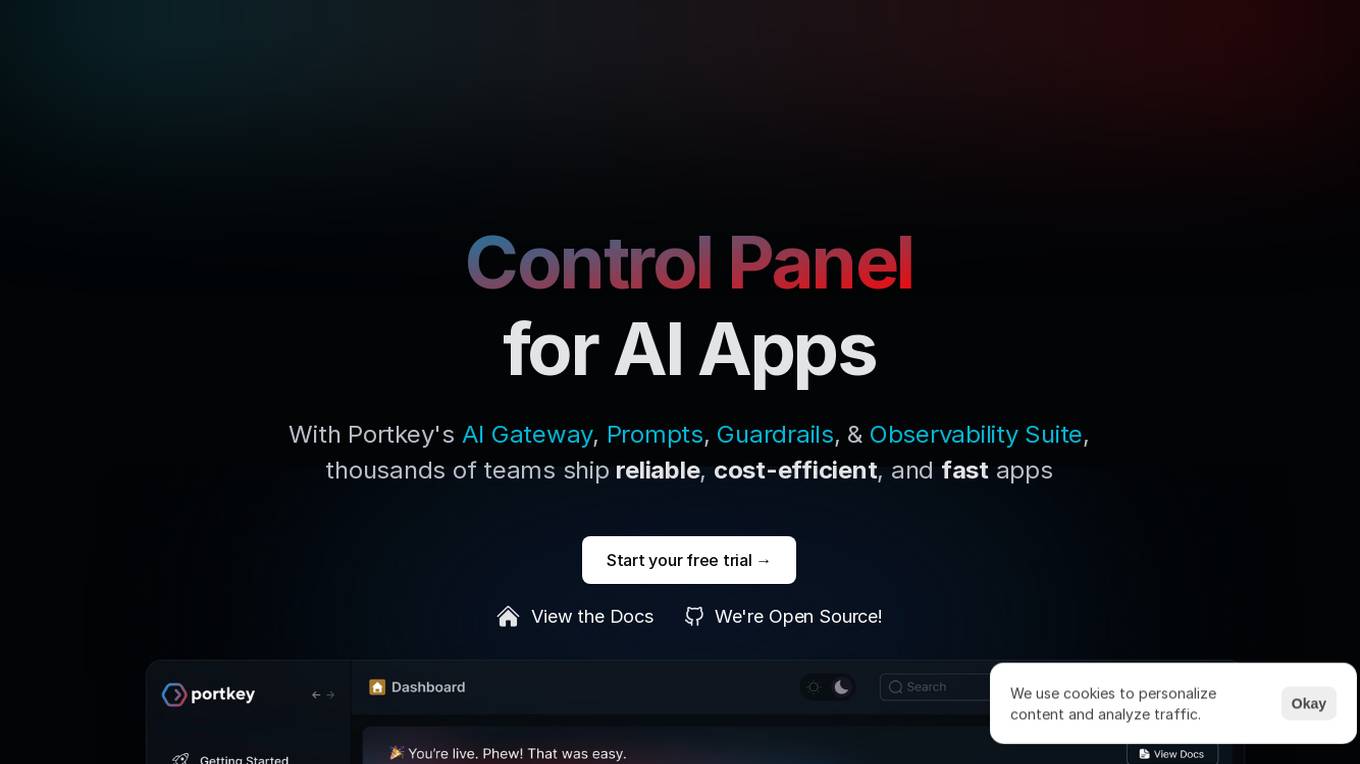

Portkey

Portkey is a control panel for production AI applications that offers an AI Gateway, Prompts, Guardrails, and an Observability Suite. It helps teams ship reliable, cost-efficient, and fast apps with tools for prompt engineering, enforcing reliable LLM behavior, integrating with major agent frameworks, and building AI agents that can use real-world tools. Portkey also provides AI integrations along with managed hosting, smart caching, and edge compute layers to optimize app performance.

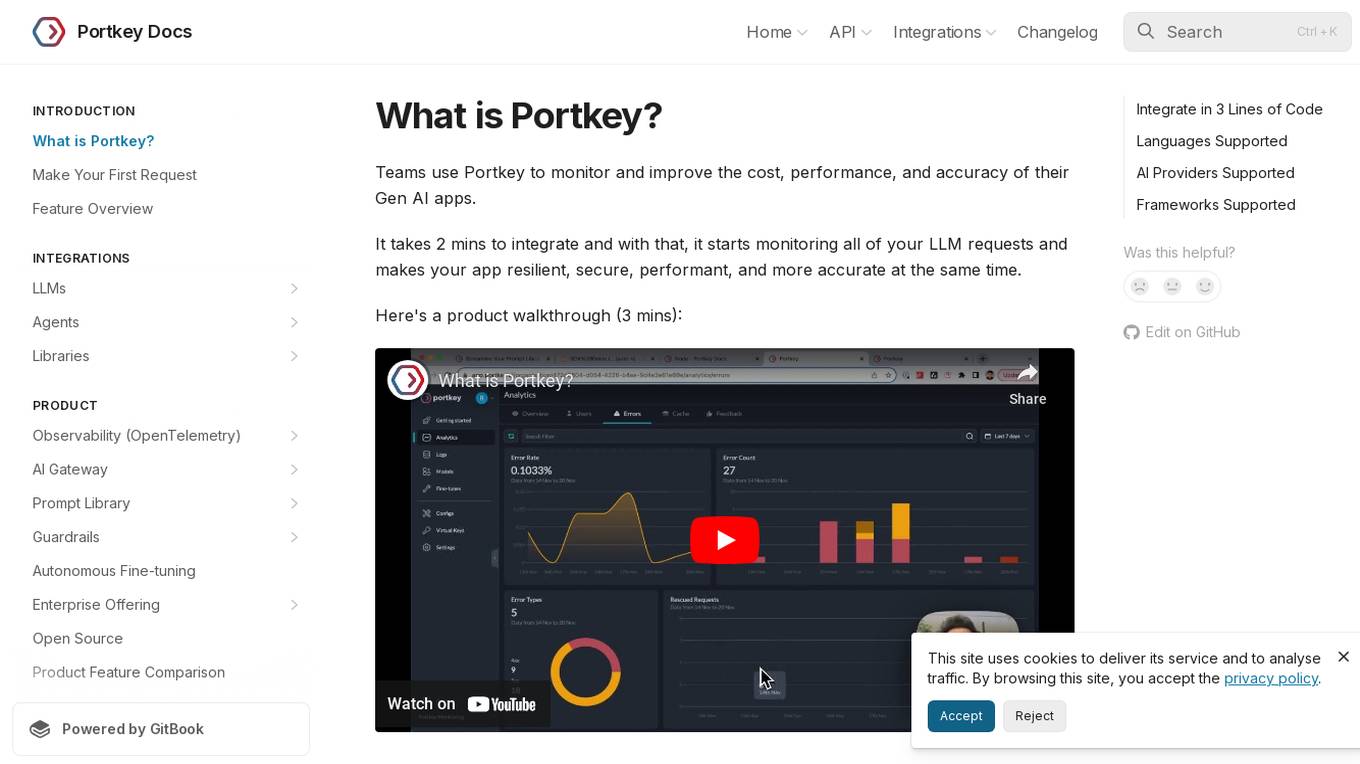

Portkey

Portkey is a monitoring and improvement tool for Gen AI apps, helping teams enhance cost, performance, and accuracy. It integrates quickly, monitors LLM requests, and boosts app resilience, security, performance, and accuracy. The tool offers a product walkthrough and easy integration with OpenAI Python and Node libraries.

Wordware

Wordware is an AI toolkit that empowers cross-functional teams to build reliable high-quality agents through rapid iteration. It combines the best aspects of software with the power of natural language, freeing users from traditional no-code tool constraints. With advanced technical capabilities, multiple LLM providers, one-click API deployment, and multimodal support, Wordware offers a seamless experience for AI app development and deployment.

1 - Open Source Tools

promptic

Promptic is a tool for LLM app development that offers a productive, Pythonic way to build LLM applications. It is built on LiteLLM, so you can switch LLM providers easily. Promptic focuses on helping you build features, with type-safe structured outputs, easy-to-build agents, streaming support, automatic prompt caching, and built-in conversation memory.

20 - OpenAI GPTs

Agent Prompt Generator for LLM's

This GPT generates optimized system prompts for LLM agents. You can also specify the target model size, such as 3B, 33B, or 70B.

CISO GPT

A GPT specialized in computer security that acts as a CISO with 20 years of experience, providing precise, data-driven technical answers to strengthen organizational security.

NEO - Ultimate AI

I imitate the GPT-5 LLM, with advanced reasoning, personalization, and higher emotional intelligence.

DataLearnerAI-GPT

Uses Open LLM Leaderboard data to answer your questions about current LLMs.

Prompt Peerless - Complete Prompt Optimization

Premier AI prompt engineer for advanced LLM optimization, enhancing AI-to-AI interaction and comprehension. Works iteratively: Create -> Optimize -> Revise.

EmotionPrompt(LLM→人間ver.)

Built on the EmotionPrompt technique, but reversing the original theory: here the LLM sends prompts to a human. Details of the original technique: https://ai-data-base.com/archives/58158

HackMeIfYouCan

Hack Me if you can - I can only talk to you about computer security, software security and LLM security @JacquesGariepy

SSLLMs Advisor

Helps you build logic security into your GPT's custom instructions. Documentation: https://github.com/infotrix/SSLLMs---Semantic-Secuirty-for-LLM-GPTs

Prompt For Me

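🪄 One-click prompt enhancement that quickly and precisely matches your needs for more efficient conversations with AI. 🧙♂️ Unlock the potential of LLMs so ChatGPT and Claude understand you better and you stay a step ahead at work. 🧸 Your AI conversation partner: tailor it to your needs and easily start high-quality conversations.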