awesome-llm-apps

Collection of awesome LLM apps with AI Agents and RAG using OpenAI, Anthropic, Gemini, and open-source models.

Stars: 92903

Awesome LLM Apps is a curated collection of applications that leverage RAG with OpenAI, Anthropic, Gemini, and open-source models. The repository contains projects such as Local Llama-3 with RAG for chatting with webpages locally, Chat with Gmail for interacting with Gmail using natural language, Chat with Substack Newsletter for conversing with Substack newsletters using GPT-4, Chat with PDF for intelligent conversation based on PDF documents, and Chat with YouTube Videos for engaging with YouTube video content through natural language. Users can clone the repository, navigate to specific project directories, install dependencies, and follow project-specific instructions to set up and run the apps. Contributions are encouraged, and new app ideas or improvements can be submitted via pull requests.

README:

A curated collection of Awesome LLM apps built with RAG, AI Agents, Multi-agent Teams, MCP, Voice Agents, and more. This repository features LLM apps that use models from OpenAI, Anthropic, Google, xAI, and open-source models like Qwen or Llama that you can run locally on your computer.

- 💡 Discover practical and creative ways LLMs can be applied across different domains, from code repositories to email inboxes and more.

- 🔥 Explore apps that combine LLMs from OpenAI, Anthropic, Gemini, and open-source alternatives with AI Agents, Agent Teams, MCP & RAG.

- 🎓 Learn from well-documented projects and contribute to the growing open-source ecosystem of LLM-powered applications.

Tiger Data MCP | Speechmatics | Okara AI | Become a Sponsor

- 🎙️ AI Blog to Podcast Agent

- ❤️‍🩹 AI Breakup Recovery Agent

- 📊 AI Data Analysis Agent

- 🩻 AI Medical Imaging Agent

- 😂 AI Meme Generator Agent (Browser)

- 🎵 AI Music Generator Agent

- 🛫 AI Travel Agent (Local & Cloud)

- ✨ Gemini Multimodal Agent

- 🔄 Mixture of Agents

- 📊 xAI Finance Agent

- 🔍 OpenAI Research Agent

- 🕸️ Web Scraping AI Agent (Local & Cloud SDK)

- 🏚️ 🍌 AI Home Renovation Agent with Nano Banana Pro

- 🔍 AI Deep Research Agent

- 📊 AI VC Due Diligence Agent Team

- 🔬 AI Research Planner & Executor (Google Interactions API)

- 🤝 AI Consultant Agent

- 🏗️ AI System Architect Agent

- 💰 AI Financial Coach Agent

- 🎬 AI Movie Production Agent

- 📈 AI Investment Agent

- 🏋️‍♂️ AI Health & Fitness Agent

- 🚀 AI Product Launch Intelligence Agent

- 🗞️ AI Journalist Agent

- 🧠 AI Mental Wellbeing Agent

- 📑 AI Meeting Agent

- 🧬 AI Self-Evolving Agent

- 👨🏻‍💼 AI Sales Intelligence Agent Team

- 🎧 AI Social Media News and Podcast Agent

- 🌐 Openwork - Open Browser Automation Agent

- 🧲 AI Competitor Intelligence Agent Team

- 💲 AI Finance Agent Team

- 🎨 AI Game Design Agent Team

- 👨‍⚖️ AI Legal Agent Team (Cloud & Local)

- 💼 AI Recruitment Agent Team

- 🏠 AI Real Estate Agent Team

- 👨‍💼 AI Services Agency (CrewAI)

- 👨‍🏫 AI Teaching Agent Team

- 💻 Multimodal Coding Agent Team

- ✨ Multimodal Design Agent Team

- 🎨 🍌 Multimodal UI/UX Feedback Agent Team with Nano Banana

- 🌏 AI Travel Planner Agent Team

- 🗣️ AI Audio Tour Agent

- 📞 Customer Support Voice Agent

- 🔊 Voice RAG Agent (OpenAI SDK)

- 🎙️ OpenSource Voice Dictation Agent (like Wispr Flow)

- 🔥 Agentic RAG with Embedding Gemma

- 🧐 Agentic RAG with Reasoning

- 📰 AI Blog Search (RAG)

- 🔍 Autonomous RAG

- 🔄 Contextual AI RAG Agent

- 🔄 Corrective RAG (CRAG)

- 🐋 Deepseek Local RAG Agent

- 🤔 Gemini Agentic RAG

- 👀 Hybrid Search RAG (Cloud)

- 🔄 Llama 3.1 Local RAG

- 🖥️ Local Hybrid Search RAG

- 🦙 Local RAG Agent

- 🧩 RAG-as-a-Service

- ✨ RAG Agent with Cohere

- ⛓️ Basic RAG Chain

- 📠 RAG with Database Routing

- 🖼️ Vision RAG

- 💾 AI ArXiv Agent with Memory

- 🛩️ AI Travel Agent with Memory

- 💬 Llama3 Stateful Chat

- 📝 LLM App with Personalized Memory

- 🗄️ Local ChatGPT Clone with Memory

- 🧠 Multi-LLM Application with Shared Memory

- 💬 Chat with GitHub (GPT & Llama3)

- 📨 Chat with Gmail

- 📄 Chat with PDF (GPT & Llama3)

- 📚 Chat with Research Papers (ArXiv) (GPT & Llama3)

- 📝 Chat with Substack

- 📽️ Chat with YouTube Videos

- 🎯 Toonify Token Optimization - Reduce LLM API costs by 30-60% using TOON format

- 🧠 Headroom Context Optimization - Reduce LLM API costs by 50-90% through intelligent context compression for AI agents (includes persistent memory & MCP support)

- Starter agent; model‑agnostic (OpenAI, Claude)

- Structured outputs (Pydantic)

- Tools: built‑in, function, third‑party, MCP tools

- Memory; callbacks; Plugins

- Simple multi‑agent; Multi‑agent patterns

OpenAI Agents SDK Crash Course

- Starter agent; function calling; structured outputs

- Tools: built‑in, function, third‑party integrations

- Memory; callbacks; evaluation

- Multi‑agent patterns; agent handoffs

- Swarm orchestration; routing logic

1. Clone the repository

   `git clone https://github.com/Shubhamsaboo/awesome-llm-apps.git`

2. Navigate to the desired project directory

   `cd awesome-llm-apps/starter_ai_agents/ai_travel_agent`

3. Install the required dependencies

   `pip install -r requirements.txt`

4. Follow the project-specific instructions in each project's `README.md` file to set up and run the app.
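The clone, navigate, and install steps above can also be scripted. The following is a minimal sketch in Python, not something the repository ships: `build_setup_steps` and `run_setup` are hypothetical helper names, and installing into a per-project `.venv` virtual environment is an added assumption (the README installs with `pip` directly). By default it only prints the commands it would run.

```python
import subprocess
import sys
from pathlib import Path

REPO_URL = "https://github.com/Shubhamsaboo/awesome-llm-apps.git"


def build_setup_steps(project_dir, workdir=Path(".")):
    """Return (command, cwd) pairs mirroring the clone / cd / install steps."""
    root = Path(workdir).resolve()
    project = root / "awesome-llm-apps" / project_dir
    # Assumption: one virtual environment per project, kept in .venv
    venv = project / ".venv"
    # pip lives in Scripts/ on Windows and bin/ elsewhere
    pip = venv / ("Scripts" if sys.platform == "win32" else "bin") / "pip"
    return [
        (["git", "clone", REPO_URL], root),
        ([sys.executable, "-m", "venv", str(venv)], project),
        ([str(pip), "install", "-r", "requirements.txt"], project),
    ]


def run_setup(project_dir, dry_run=True):
    """Print each step; execute it only when dry_run is False."""
    for cmd, cwd in build_setup_steps(project_dir):
        print(f"[{cwd}] {' '.join(cmd)}")
        if not dry_run:
            # check=True stops at the first failing command
            subprocess.run(cmd, cwd=cwd, check=True)


if __name__ == "__main__":
    run_setup("starter_ai_agents/ai_travel_agent", dry_run=True)
```

Passing `dry_run=False` would execute the commands in order. Each project's own `README.md` may list further steps, such as setting API keys, that this sketch does not cover.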

🌟 Don’t miss out on future updates! Star the repo now and be the first to know about new and exciting LLM apps with RAG and AI Agents.

Alternative AI tools for awesome-llm-apps

Similar Open Source Tools

actionbook

Actionbook is a browser action engine designed for AI agents, providing up-to-date action manuals and DOM structure to enable instant website operations without guesswork. It offers faster execution, token savings, resilient automation, and universal compatibility, making it ideal for building reliable browser agents. Actionbook integrates seamlessly with AI coding assistants and offers three integration methods: CLI, MCP Server, and JavaScript SDK. The tool is well-documented and actively developed in a monorepo setup using pnpm workspaces and Turborepo.

eliza

Eliza is a versatile AI agent operating system designed to support various models and connectors, enabling users to build chatbots and autonomous agents, handle business processes, create video game NPCs, and engage in trading. It offers multi-agent and room support, document ingestion and interaction, retrievable memory and document store, and extensibility to create custom actions and clients. Eliza is easy to use and provides a comprehensive solution for AI agent development.

packmind

Packmind is an engineering playbook tool that helps AI-native engineers to centralize and manage their team's coding standards, commands, and skills. It addresses the challenges of storing standards in various formats and locations, and automates the generation of instruction files for AI tools like GitHub Copilot, Claude Code, and Cursor. With Packmind, users can create a real engineering playbook to ensure AI agents code according to their team's standards.

ollama

Ollama is a lightweight, extensible framework for building and running language models on the local machine. It provides a simple API for creating, running, and managing models, as well as a library of pre-built models that can be easily used in a variety of applications. Ollama is designed to be easy to use and accessible to developers of all levels. It is open source and available for free on GitHub.

chatbox

Chatbox is a desktop client for ChatGPT, Claude, and other LLMs, providing features like local data storage, multiple LLM provider support, image generation, enhanced prompting, keyboard shortcuts, and more. It offers a user-friendly interface with dark theme, team collaboration, cross-platform availability, web version access, iOS & Android apps, multilingual support, and ongoing feature enhancements. Developed for prompt and API debugging, it has gained popularity for daily chatting and professional role-playing with AI assistance.

better-chatbot

Better Chatbot is an open-source AI chatbot designed for individuals and teams, inspired by various AI models. It integrates major LLMs, offers powerful tools like MCP protocol and data visualization, supports automation with custom agents and visual workflows, enables collaboration by sharing configurations, provides a voice assistant feature, and ensures an intuitive user experience. The platform is built with Vercel AI SDK and Next.js, combining leading AI services into one platform for enhanced chatbot capabilities.

TaskingAI

TaskingAI brings Firebase's simplicity to **AI-native app development**. The platform enables the creation of GPTs-like multi-tenant applications using a wide range of LLMs from various providers. It features distinct, modular functions such as Inference, Retrieval, Assistant, and Tool, seamlessly integrated to enhance the development process. TaskingAI’s cohesive design ensures an efficient, intelligent, and user-friendly experience in AI application development.

heurist-agent-framework

Heurist Agent Framework is a flexible multi-interface AI agent framework that allows processing text and voice messages, generating images and videos, interacting across multiple platforms, fetching and storing information in a knowledge base, accessing external APIs and tools, and composing complex workflows using Mesh Agents. It supports various platforms like Telegram, Discord, Twitter, Farcaster, REST API, and MCP. The framework is built on a modular architecture and provides core components, tools, workflows, and tool integration with MCP support.

agentic-context-engine

Agentic Context Engine (ACE) is a framework that enables AI agents to learn from their execution feedback, continuously improving without fine-tuning or training data. It maintains a Skillbook of evolving strategies, extracting patterns from successful tasks and learning from failures transparently in context. ACE offers self-improving agents, better performance on complex tasks, token reduction in browser automation, and preservation of valuable knowledge over time. Users can integrate ACE with popular agent frameworks and benefit from its innovative approach to in-context learning.

chatbox

Chatbox is a desktop client for ChatGPT, Claude, and other LLMs, providing a user-friendly interface for AI copilot assistance on Windows, Mac, and Linux. It offers features like local data storage, multiple LLM provider support, image generation with Dall-E-3, enhanced prompting, keyboard shortcuts, and more. Users can collaborate, access the tool on various platforms, and enjoy multilingual support. Chatbox is constantly evolving with new features to enhance the user experience.

portia-sdk-python

Portia AI is an open source developer framework for predictable, stateful, authenticated agentic workflows. It allows developers to have oversight over their multi-agent deployments and focuses on production readiness. The framework supports iterating on agents' reasoning, extensive tool support including MCP support, authentication for API and web agents, and is production-ready with features like attribute multi-agent runs, large inputs and outputs storage, and connecting any LLM. Portia AI aims to provide a flexible and reliable platform for developing AI agents with tools, authentication, and smart control.

sandboxed.sh

sandboxed.sh is a self-hosted cloud orchestrator for AI coding agents that provides isolated Linux workspaces with Claude Code, OpenCode & Amp runtimes. It allows users to hand off entire development cycles, run multi-day operations unattended, and keep sensitive data local by analyzing data against scientific literature. The tool features dual runtime support, mission control for remote agent management, isolated workspaces, a git-backed library, MCP registry, and multi-platform support with a web dashboard and iOS app.

Alice

Alice is an open-source AI companion designed to live on your desktop, providing voice interaction, intelligent context awareness, and powerful tooling. More than a chatbot, Alice is emotionally engaging and deeply useful, assisting with daily tasks and creative work. Key features include voice interaction with natural-sounding responses, memory and context management, vision and visual output capabilities, computer use tools, function calling for web search and task scheduling, wake word support, a dedicated Chrome extension, and a flexible settings interface. Technologies used include Vue.js, Electron, OpenAI, Go, hnswlib-node, and more.

UI-TARS-desktop

UI-TARS-desktop is a desktop application that provides a native GUI Agent based on the UI-TARS model. It offers features such as natural language control powered by Vision-Language Model, screenshot and visual recognition support, precise mouse and keyboard control, cross-platform support (Windows/MacOS/Browser), real-time feedback and status display, and private and secure fully local processing. The application aims to enhance the user's computer experience, introduce new browser operation features, and support the advanced UI-TARS-1.5 model for improved performance and precise control.

memU

MemU is an open-source memory framework designed for AI companions, offering high accuracy, fast retrieval, and cost-effectiveness. It serves as an intelligent 'memory folder' that adapts to various AI companion scenarios. With MemU, users can create AI companions that remember them, learn their preferences, and evolve through interactions. The framework provides advanced retrieval strategies and 24/7 support. MemU offers cloud, enterprise, and self-hosting options, with features like memory organization, an interconnected knowledge graph, continuous self-improvement, and an adaptive forgetting mechanism, making it suitable for building intelligent agents with persistent memory capabilities.

For similar tasks

awesome-llm-apps

Awesome LLM Apps is a curated collection of applications that leverage RAG with OpenAI, Anthropic, Gemini, and open-source models. The repository contains projects such as Local Llama-3 with RAG for chatting with webpages locally, Chat with Gmail for interacting with Gmail using natural language, Chat with Substack Newsletter for conversing with Substack newsletters using GPT-4, Chat with PDF for intelligent conversation based on PDF documents, and Chat with YouTube Videos for engaging with YouTube video content through natural language. Users can clone the repository, navigate to specific project directories, install dependencies, and follow project-specific instructions to set up and run the apps. Contributions are encouraged, and new app ideas or improvements can be submitted via pull requests.

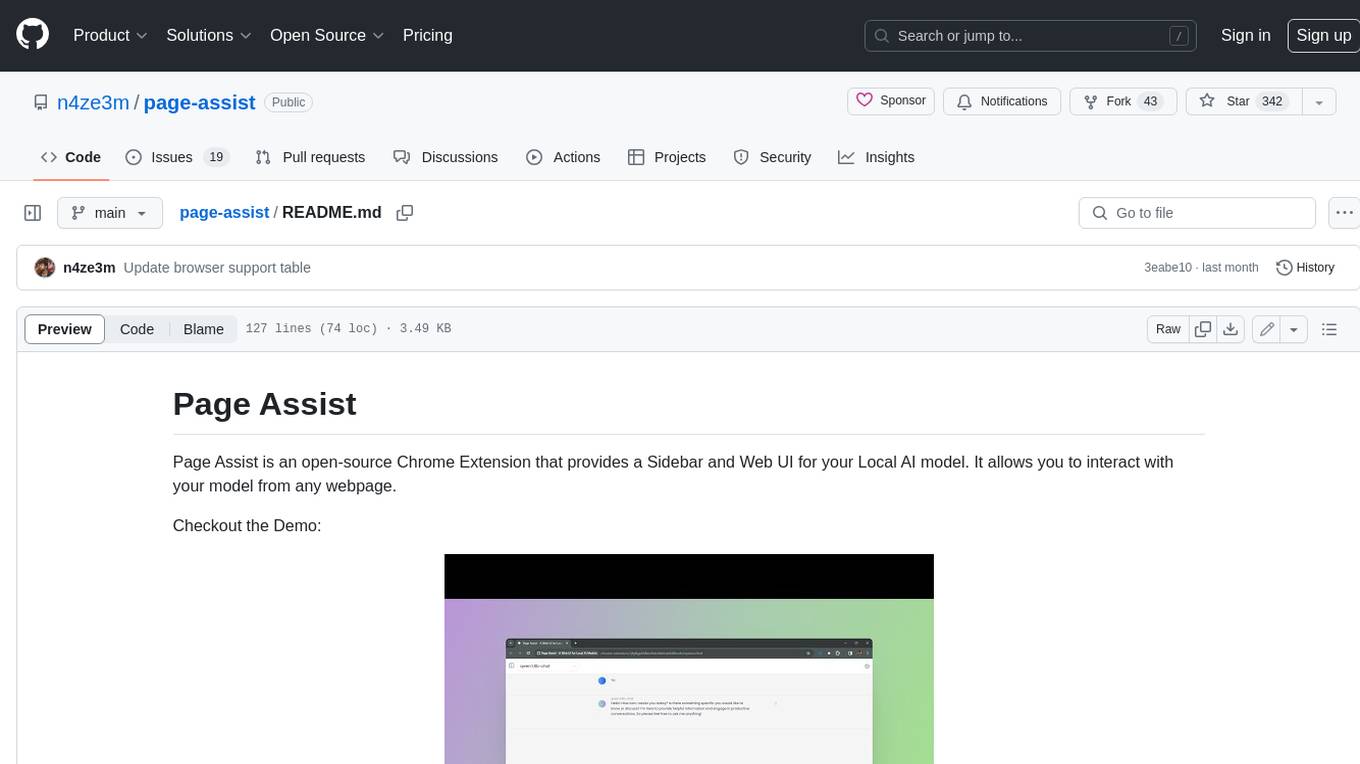

page-assist

Page Assist is an open-source Chrome Extension that provides a Sidebar and Web UI for your Local AI model. It allows you to interact with your model from any webpage.

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models, from images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking), and privacy harms (e.g., identity theft). The goal is to allow researchers to have a baseline of how well their model and entire inference pipeline is doing against different harm categories and to be able to compare that baseline to future iterations of their model. This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements.

tabby

Tabby is a self-hosted AI coding assistant, offering an open-source and on-premises alternative to GitHub Copilot. It boasts several key features:

- Self-contained, with no need for a DBMS or cloud service.
- OpenAPI interface, easy to integrate with existing infrastructure (e.g., Cloud IDE).
- Supports consumer-grade GPUs.

spear

SPEAR (Simulator for Photorealistic Embodied AI Research) is a powerful tool for training embodied agents. It features 300 unique virtual indoor environments with 2,566 unique rooms and 17,234 unique objects that can be manipulated individually. Each environment is designed by a professional artist and features detailed geometry, photorealistic materials, and a unique floor plan and object layout. SPEAR is implemented as Unreal Engine assets and provides an OpenAI Gym interface for interacting with the environments via Python.

Magick

Magick is a groundbreaking visual AIDE (Artificial Intelligence Development Environment) for no-code data pipelines and multimodal agents. Magick can connect to other services and comes with nodes and templates well-suited for intelligent agents, chatbots, complex reasoning systems and realistic characters.