teaching-boyfriend-llm


Stars: 524

The 'teaching-boyfriend-llm' repository contains study notes on large language models (LLMs), written with the goal of advancing towards AGI (Artificial General Intelligence). The notes are a collaborative effort to understand and implement LLM technology.

README:

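This is a systematic collection of study materials on large language models (LLMs), designed to help beginners understand the core principles and frontier techniques of LLMs from scratch.

- 📚 Systematic and comprehensive - covers the full body of knowledge from fundamentals to advanced topics

- 🎯 Step by step - organized in date order, with a clear learning path

- 💡 In-depth yet accessible - complex concepts explained in plain language

- 🔥 Up to date - includes analyses of recent work such as DeepSeek, Qwen, and GPT-o3

- 🛠️ Theory plus practice - explanations of principles paired with code implementations

- 🎓 Developers who want to get started in the LLM field

- 💼 Engineers preparing to move into AI/LLM work

- 📖 Students who want to study large models systematically

- 🔬 Researchers who need to get up to speed on frontier techniques quickly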

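🔖 Difficulty: ⭐⭐ | Recommended priority: essential

| Document | Key topics |
|---|---|
| 0514 LLM Training Pipeline and Tokenizer | The complete LLM training pipeline; tokenizer principles and implementation |
| 0515 Self Attention and KV Cache | How self-attention works; speeding up inference with the KV cache |
| 0516 Positional Encoding | Absolute positional encoding, relative positional encoding, RoPE |
| 1013 Positional Encoding | Positional encoding, advanced treatment |
| 0518 Normalize and Decoding Methods | LayerNorm, RMSNorm, decoding strategies |
| 0520 LLaMA3 | The LLaMA3 model architecture in detail |
| 0607 Learning Rate | Learning rate scheduling, warmup, cosine decay |
| Pre-training | The complete pre-training pipeline for large models |
| Why Large Models Are All Decoder-only | Analysis of the advantages of the decoder-only architecture |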

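🔖 Difficulty: ⭐⭐⭐ | Recommended priority: essential

| Document | Key topics |
|---|---|
| 0522 PEFT: Parameter-Efficient Fine-Tuning | PEFT overview; comparison of efficient fine-tuning methods |
| 0601 Instruction Tuning | Instruction tuning principles and practice |
| 0605 Instruction Tuning Datasets | How to build high-quality instruction datasets |
| 0613 LoRA | Low-Rank Adaptation: principles and implementation |
| 0618 AdaLoRA | Adaptive parameter allocation for LoRA |
| 0622 Quantization | Model quantization: INT8/INT4 |
| 0623 QLoRA | Quantization combined with LoRA |
| 0704 PTQ | Post-Training Quantization |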

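🔖 Difficulty: ⭐⭐⭐⭐ | Recommended priority: essential for advanced learners

| Document | Key topics |
|---|---|
| 0720 Reinforcement Learning 1 - MDPs and the Bellman Equation | Markov decision processes, the Bellman equation |
| 0723 Reinforcement Learning 2 - Policy Iteration | Policy iteration and value iteration |
| 0806 Reinforcement Learning 3 - Monte Carlo Methods | MC methods, TD methods |

| Document | Key topics |
|---|---|
| 0816 DPO | Direct Preference Optimization |
| 0819 PPO | Proximal Policy Optimization |
| 25-0316 The Evolution of PPO | From Policy Gradient to PPO |
| 25-0321 RLHF | The complete RLHF pipeline in detail |
| 25-0401 DPO | DPO, advanced treatment |
| 25-0401 GRPO | Group Relative Policy Optimization |
| 25-0401 DAPO | Decoupled Clip and Dynamic Sampling Policy Optimization |
| GFPO | Group Filtered Policy Optimization |
| GSPO | From token-level to sequence-level optimization |
| SAPO | Self-Alignment Policy Optimization |
| Entropy Mechanisms in Reinforcement Learning for Large Models | The role of entropy regularization in RLHF |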

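🔖 Difficulty: ⭐⭐⭐ | Recommended priority: essential for applications

| Document | Key topics |
|---|---|
| 0524 Introduction to RAG | RAG basic concepts and architecture |
| 0526 RAG from Scratch - LangChain (1) | Implementing RAG from scratch with LangChain |
| 0528 RAG from Scratch - LangChain (2) | RAG implementation, continued |
| 0530 RAG from Scratch - LangChain (3) | Advanced RAG techniques |
| 0715 GraphRAG | RAG enhanced with graph structure |
| 25-0302 GraphRAG | GraphRAG in depth |
| 25-0421 Agentic RAG | An architecture combining agents and RAG |
| 25-0421 Agentic RAG Case Studies | Agentic RAG in practice |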

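🔖 Difficulty: ⭐⭐⭐⭐ | Recommended priority: frontier direction

| Document | Key topics |
|---|---|
| 1117 Introduction to Agents | Agent basic concepts and architecture |
| 25-0307 Agent Overview | The landscape of agent technology |
| 25-0507 Function Call | The function calling mechanism |
| 25-0501 MCP | Model Context Protocol |

| Document | Key topics |
|---|---|
| 1220 Agent Planning1 - Basic Methods | Basic planning methods |
| 1223 Agent Planning2 - Planning | Advanced planning strategies |
| 25-0107 Agent Planning3 - Reflection | The reflection mechanism |

| Document | Key topics |
|---|---|
| 1230 Agent Memory | Agent memory mechanisms |
| 25-0121 Memory-based Agent (1) | Memory-driven agents |
| 25-0127 Memory-based Agent (2) | Memory agents, advanced |
| Engram | The Engram memory architecture |

| Document | Key topics |
|---|---|
| 25-0326 Alibaba Cloud Bailian Intelligent Shopping-Guide Agent | Agent development in practice |
| 25-0502 Failed Multi-Agent Systems | Lessons learned from multi-agent systems |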

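🔖 Difficulty: ⭐⭐ | Recommended priority: essential for applications

| Document | Key topics |
|---|---|
| 1104 LangChain Introduction and Model Components | LangChain basics and architecture |
| 1110 LangChain2 - Prompt Engineering | Prompt management in LangChain |
| 1111 LangChain3 - Model Invocation and Output Parsing | LLM invocation and output parsers |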

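🔖 Difficulty: ⭐⭐⭐⭐ | Recommended priority: essential for engineering

| Document | Key topics |
|---|---|
| 0728 Distributed Training 1 - Data Parallelism | How DP and DDP work |
| 0730 Distributed Training 2 - DDP | PyTorch DDP implementation details |
| 0803 Accelerate | Using HuggingFace Accelerate |
| 0808 DeepSpeed | DeepSpeed ZeRO optimization |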

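🔖 Difficulty: ⭐⭐⭐⭐ | Recommended priority: essential for engineering

| Document | Key topics |
|---|---|
| 0709 Flash Attention - Principles | Flash Attention principles in detail |
| 0710 Flash Attention - Code | Flash Attention code implementation |
| PageAttention | How vLLM's PagedAttention works |

| Document | Key topics |
|---|---|
| 0813 Introduction to vLLM | vLLM, a high-performance inference framework |
| Continuous Batching | The continuous batching technique |
| Prefill and Decode | Separating prefill from decode |
| DistServe: Disaggregating Prefill and Decode | Distributed inference optimization |
| SARATHI Chunked Prefill | The chunked prefill technique |

| Document | Key topics |
|---|---|
| Speculative Decoding | Speeding up inference with speculative decoding |
| Medusa | Multi-head speculative decoding |
| Why Inference Uses Left Padding | The rationale for left padding |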

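🔖 Difficulty: ⭐⭐⭐⭐ | Recommended priority: advanced

| Document | Key topics |
|---|---|
| 1010 Long Context2 - Interpolation | Extending context length through positional encoding interpolation |
| 1016 Long Context3 - Context Window Splitting | Processing long text in chunks |
| 1022 Long Context4 - Prompt Compression (1) | Prompt compression |
| 1025 Long Context4 - Prompt Compression (2) | Advanced compression techniques |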

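🔖 Difficulty: ⭐⭐⭐ | Recommended priority: essential for applications

| Document | Key topics |
|---|---|
| 1124 Embedding Model (1) | How embedding models work |
| 1206 Embedding Model (2) | Embedding models, advanced |
| 1203 Vector Indexes | FAISS, vector databases |
| 1212 Rerank | Reranking models |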

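🔖 Difficulty: ⭐⭐⭐⭐ | Recommended priority: staying current

| Document | Key topics |
|---|---|
| 0829 LLaMA 3.1 Technical Report | LLaMA 3.1 in detail |
| 0918 LLaMA 3 Post-training | LLaMA 3 post-training techniques |

| Document | Key topics |
|---|---|
| 25-0203 DeepSeek R1 Technical Report | An in-depth reading of DeepSeek R1 |
| 25-0216 DeepSeek V3 Technical Report | A close reading of DeepSeek V3 |
| 25-0220 20 Questions on DeepSeek R1 | Q&A on R1 techniques |
| Restructuring Residual Connections: DeepSeek mHC | In-depth analysis of the mHC architecture |

| Document | Key topics |
|---|---|
| 25-0304 Qwen2.5 Series | Qwen2.5 technical analysis |
| Qwen3-VL Technical Report | The Qwen3 vision-language model |
| Qwen3-VL Core Techniques | Analysis of Qwen3-VL's core techniques |

| Document | Key topics |
|---|---|
| 25-0418 GPT-o3 | GPT-o3 technical analysis |
| Kimi K2 | Analysis of the Kimi K2 model |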

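🔖 Difficulty: ⭐⭐ | Recommended priority: essential for applications

| Document | Key topics |
|---|---|
| 0827 How to Write Elegant Prompts | Prompt best practices |
| Chain of Draft | Chain of Draft (CoD) prompting |
| Stop Overthinking | Avoiding excessive reasoning |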

🔖 Difficulty: ⭐⭐⭐⭐ | Recommended priority: learn as needed

| Document | Key topics |
|---|---|
| 0630 MoE | How Mixture of Experts works |

| Document | Key topics |
|---|---|
| 0611 MOCO | MOCO contrastive learning |
| Rereading the Classics: MOCO | MOCO in depth |

| Document | Key topics |
|---|---|
| 25-0228 Deep Research | Deep Research methodology |
| Deep Research | Deep research techniques |

| Document | Key topics |
|---|---|
| 0718 XGBoost | The XGBoost algorithm in detail |
| 25-0222 NSA | Native Sparse Attention |

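Contributions are welcome! You can take part in the following ways:

- 🐛 Report issues - found an error in the documents or have a suggestion? Please open an Issue

- 📝 Improve the documents - add content, fix errors, improve the wording

- 🌟 Star the project - if this project helps you, please give it a Star ⭐

- Fork this repository

- Create your branch (`git checkout -b feature/AmazingFeature`)

- Commit your changes (`git commit -m 'Add some AmazingFeature'`)

- Push to the branch (`git push origin feature/AmazingFeature`)

- Open a Pull Request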

If you find this project helpful, please give it a Star ⭐️ to show your support!

Made with ❤️ by zhushiyun88

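You are welcome to join my Knowledge Planet (知识星球) community, which offers the following resources:

✅ 1. Systematic, covering the whole job-search path. The community provides a complete job-search solution for large-model roles, progressing step by step from building knowledge and study plans to interview preparation and hands-on projects. It is especially suited to career changers and people with a weaker background, and it has helped 1000+ people land algorithm roles, with a verifiable track record!

✅ 2. 350,000 characters of large-model notes plus actionable study plans. The notes are extremely detailed (350,000 characters!), continuously updated, and exportable to PDF for offline study. Better still, two study plans are provided, a "fast-track" version and a "steady" version, each requiring only 4 hours a day, so study stays efficient and low-pressure.

✅ 3. A conversational, easy-to-memorize interview question bank. It collects more than 300 real interview questions from major tech companies. The answers drop complex formulas and diagrams in favor of conversational wording that is easier to understand and recite. This is very friendly to people who get nervous in interviews or find it hard to express technical ideas!

✅ 4. Hands-on projects + résumé guidance + word-for-word interview scripts. Beyond enterprise-grade Agent project code, it teaches you how to write your résumé and how to present your projects, and it even provides word-for-word interview scripts. Guidance at this level of detail is rare on the market and covers every corner of the job search.

✅ 5. Closing the information gap and answering real questions. It covers the most common job-search pain points: getting projects done quickly, going deep on fine-tuning, answering scenario questions, choosing between offers, and so on. These are the problems that people who have been through the process understand best, and the answers can save you many detours.

✅ 6. A strong guest team, with unlimited questions. Mentors come from top universities and companies such as Tongji University, Peking University, Beihang University, and ByteDance, covering large models, search/ads/recommendation, AI infrastructure (AInfra), CV, and other directions, and they include core contributors to GitHub projects with over 10,000 stars! More importantly, you can ask an unlimited number of questions, which makes it excellent value.

If you are anxious about how to move into an algorithm role or how to study large models systematically, or you simply want a high-quality community with real-time Q&A, scan the QR code to give it a try. If it is not a good fit, you can get a no-questions-asked refund within three days (72 h). Sincerely recommended to everyone working hard to move forward!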

Alternative AI tools for teaching-boyfriend-llm

Similar Open Source Tools

ai-app

The 'ai-app' repository is a comprehensive collection of tools and resources related to artificial intelligence, focusing on topics such as server environment setup, PyCharm and Anaconda installation, large model deployment and training, Transformer principles, RAG technology, vector databases, AI image, voice, and music generation, and AI Agent frameworks. It also includes practical guides and tutorials on implementing various AI applications. The repository serves as a valuable resource for individuals interested in exploring different aspects of AI technology.

adata

AData is a free and open-source A-share database that focuses on transaction-related data. It provides comprehensive data on stocks, including basic information, market data, and sentiment analysis. AData is designed to be easy to use and integrate with other applications, making it a valuable tool for quantitative trading and AI training.

Chinese-LLaMA-Alpaca

This project open sources the **Chinese LLaMA model and the Alpaca large model fine-tuned with instructions**, to further promote the open research of large models in the Chinese NLP community. These models **extend the Chinese vocabulary based on the original LLaMA** and use Chinese data for secondary pre-training, further enhancing the basic Chinese semantic understanding ability. At the same time, the Chinese Alpaca model further uses Chinese instruction data for fine-tuning, significantly improving the model's understanding and execution of instructions.

yudao-boot-mini

yudao-boot-mini is an open-source project focused on developing a rapid development platform for developers in China. It includes features like system functions, infrastructure, member center, data reports, workflow, mall system, WeChat official account, CRM, ERP, etc. The project is based on Spring Boot with Java backend and Vue for frontend. It offers various functionalities such as user management, role management, menu management, department management, workflow management, payment system, code generation, API documentation, database documentation, file service, WebSocket integration, message queue, Java monitoring, and more. The project is licensed under the MIT License, allowing both individuals and enterprises to use it freely without restrictions.

ruoyi-vue-pro

The ruoyi-vue-pro repository is an open-source project that provides a comprehensive development platform with various functionalities such as system features, infrastructure, member center, data reports, workflow, payment system, mall system, ERP system, CRM system, and AI big model. It is built using Java backend with Spring Boot framework and Vue frontend with different versions like Vue3 with element-plus, Vue3 with vben(ant-design-vue), and Vue2 with element-ui. The project aims to offer a fast development platform for developers and enterprises, supporting features like dynamic menu loading, button-level access control, SaaS multi-tenancy, code generator, real-time communication, integration with third-party services like WeChat, Alipay, and cloud services, and more.

yudao-cloud

Yudao-cloud is an open-source project designed to provide a fast development platform for developers in China. It includes various system functions, infrastructure, member center, data reports, workflow, mall system, WeChat public account, CRM, ERP, etc. The project is based on Java backend with Spring Boot and Spring Cloud Alibaba microservices architecture. It supports multiple databases, message queues, authentication systems, dynamic menu loading, SaaS multi-tenant system, code generator, real-time communication, integration with third-party services like WeChat, Alipay, and more. The project is well-documented and follows the Alibaba Java development guidelines, ensuring clean code and architecture.

yudao-ui-admin-vue3

The yudao-ui-admin-vue3 repository is an open-source project focused on building a fast development platform for developers in China. It utilizes Vue3 and Element Plus to provide features such as configurable themes, internationalization, dynamic route permission generation, common component encapsulation, and rich examples. The project supports the latest front-end technologies like Vue3 and Vite4, and also includes tools like TypeScript, pinia, vueuse, vue-i18n, vue-router, unocss, iconify, and wangeditor. It offers a range of development tools and features for system functions, infrastructure, workflow management, payment systems, member centers, data reporting, e-commerce systems, WeChat public accounts, ERP systems, and CRM systems.

Chinese-LLaMA-Alpaca-3

Chinese-LLaMA-Alpaca-3 is a project based on Meta's latest release of the new generation open-source large model Llama-3. It is the third phase of the Chinese-LLaMA-Alpaca open-source large model series projects (Phase 1, Phase 2). This project open-sources the Chinese Llama-3 base model and the Chinese Llama-3-Instruct instruction fine-tuned large model. These models incrementally pre-train with a large amount of Chinese data on the basis of the original Llama-3 and further fine-tune using selected instruction data, enhancing Chinese basic semantics and instruction understanding capabilities. Compared to the second-generation related models, significant performance improvements have been achieved.

Chinese-LLaMA-Alpaca-2

Chinese-LLaMA-Alpaca-2 is the second phase of the Chinese-LLaMA-Alpaca open-source project. It is built on Meta's Llama-2 model and has been further trained on a large dataset of Chinese text. Key features:

- It offers models with up to 13 billion parameters.

- It is trained on a large dataset of Chinese text.

- It can be used for a variety of natural language processing tasks, including text generation, question answering, and machine translation.

- It is open-source and available for anyone to use.

PaddleScience

PaddleScience is a scientific computing suite developed based on the deep learning framework PaddlePaddle. It utilizes the learning ability of deep neural networks and the automatic (higher-order) differentiation mechanism of PaddlePaddle to solve problems in physics, chemistry, meteorology, and other fields. It supports three solving methods: physics mechanism-driven, data-driven, and mathematical fusion, and provides basic APIs and detailed documentation for users to use and further develop.

sanic-web

Sanic-Web is a lightweight, end-to-end, and easily customizable large model application project built on technologies such as Dify, Ollama & Vllm, Sanic, and Text2SQL. It provides a one-stop solution for developing large model applications, supporting graphical data-driven Q&A using ECharts, handling table-based Q&A with CSV files, and integrating with third-party RAG systems for general knowledge Q&A. As a lightweight framework, Sanic-Web enables rapid iteration and extension to facilitate the quick implementation of large model projects.

indie-hacker-tools-plus

Indie Hacker Tools Plus is a curated repository of essential tools and technology stacks for independent developers. The repository aims to help developers enhance efficiency, save costs, and mitigate risks by using popular and validated tools. It provides a collection of tools recognized by the industry to empower developers with the most refined technical support. Developers can contribute by submitting articles, software, or resources through issues or pull requests.

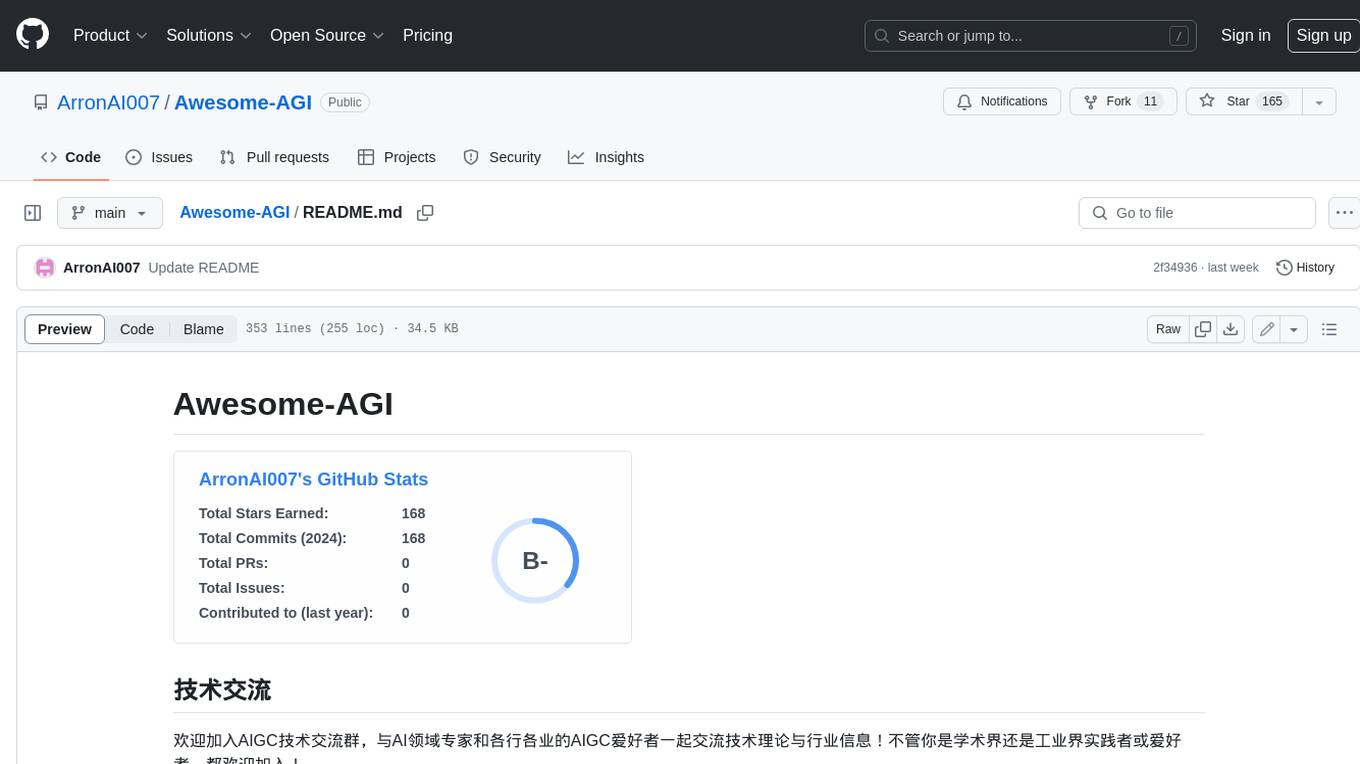

Awesome-AGI

Awesome-AGI is a curated list of resources related to Artificial General Intelligence (AGI), including models, pipelines, applications, and concepts. It provides a comprehensive overview of the current state of AGI research and development, covering various aspects such as model training, fine-tuning, deployment, and applications in different domains. The repository also includes resources on prompt engineering, RLHF, LLM vocabulary expansion, long text generation, hallucination mitigation, controllability and safety, and text detection. It serves as a valuable resource for researchers, practitioners, and anyone interested in the field of AGI.

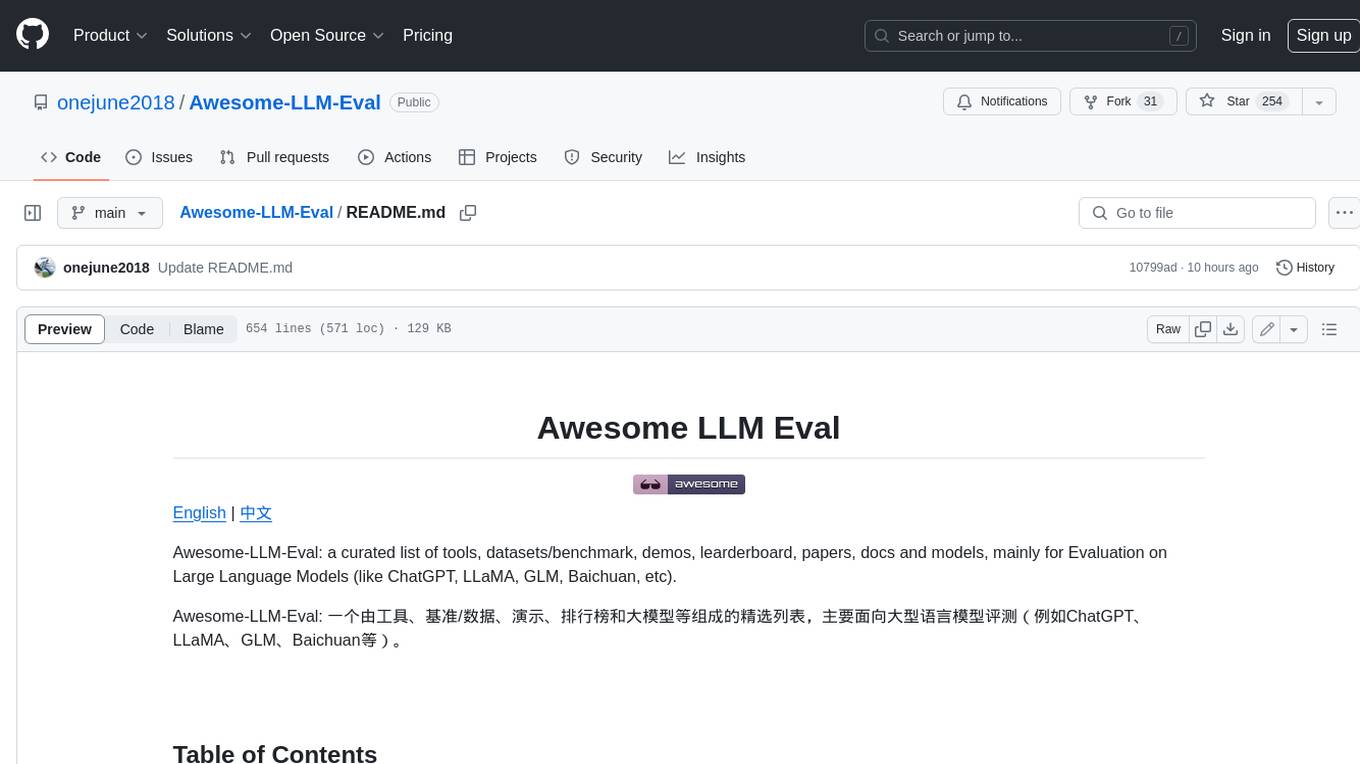

Awesome-LLM-Eval

Awesome-LLM-Eval: a curated list of tools, benchmarks, demos, papers for Large Language Models (like ChatGPT, LLaMA, GLM, Baichuan, etc) Evaluation on Language capabilities, Knowledge, Reasoning, Fairness and Safety.

For similar tasks

Azure-Analytics-and-AI-Engagement

The Azure-Analytics-and-AI-Engagement repository provides packaged Industry Scenario DREAM Demos with ARM templates (containing a demo web application, Power BI reports, Synapse resources, AML Notebooks, etc.) that can be deployed in a customer's subscription using the CAPE tool within a few hours. Partners can also deploy DREAM Demos in their own subscriptions using DPoC.

sorrentum

Sorrentum is an open-source project that aims to combine open-source development, startups, and brilliant students to build machine learning, AI, and Web3 / DeFi protocols geared towards finance and economics. The project provides opportunities for internships, research assistantships, and development grants, as well as the chance to work on cutting-edge problems, learn about startups, write academic papers, and get internships and full-time positions at companies working on Sorrentum applications.

tidb

TiDB is an open-source distributed SQL database that supports Hybrid Transactional and Analytical Processing (HTAP) workloads. It is MySQL compatible and features horizontal scalability, strong consistency, and high availability.

zep-python

Zep is an open-source platform for building and deploying large language model (LLM) applications. It provides a suite of tools and services that make it easy to integrate LLMs into your applications, including chat history memory, embedding, vector search, and data enrichment. Zep is designed to be scalable, reliable, and easy to use, making it a great choice for developers who want to build LLM-powered applications quickly and easily.

telemetry-airflow

This repository codifies the Airflow cluster that is deployed at workflow.telemetry.mozilla.org (behind SSO) and commonly referred to as "WTMO" or simply "Airflow". Some links relevant to users and developers of WTMO:

- The `dags` directory in this repository contains some custom DAG definitions

- Many of the DAGs registered with WTMO don't live in this repository, but are instead generated from ETL task definitions in bigquery-etl

- The Data SRE team maintains a WTMO Developer Guide (behind SSO)

mojo

Mojo is a new programming language that bridges the gap between research and production by combining Python syntax and ecosystem with systems programming and metaprogramming features. Mojo is still young, but it is designed to become a superset of Python over time.

pandas-ai

PandasAI is a Python library that makes it easy to ask questions to your data in natural language. It helps you to explore, clean, and analyze your data using generative AI.

databend

Databend is an open-source cloud data warehouse that serves as a cost-effective alternative to Snowflake. With its focus on fast query execution and data ingestion, it's designed for complex analysis of the world's largest datasets.

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models. From images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking), and privacy harms (e.g., identity theft). The goal is to allow researchers to have a baseline of how well their model and entire inference pipeline is doing against different harm categories and to be able to compare that baseline to future iterations of their model. This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements.

tabby

Tabby is a self-hosted AI coding assistant, offering an open-source and on-premises alternative to GitHub Copilot. It boasts several key features:

- Self-contained, with no need for a DBMS or cloud service.

- OpenAPI interface, easy to integrate with existing infrastructure (e.g. Cloud IDE).

- Supports consumer-grade GPUs.

spear

SPEAR (Simulator for Photorealistic Embodied AI Research) is a powerful tool for training embodied agents. It features 300 unique virtual indoor environments with 2,566 unique rooms and 17,234 unique objects that can be manipulated individually. Each environment is designed by a professional artist and features detailed geometry, photorealistic materials, and a unique floor plan and object layout. SPEAR is implemented as Unreal Engine assets and provides an OpenAI Gym interface for interacting with the environments via Python.

Magick

Magick is a groundbreaking visual AIDE (Artificial Intelligence Development Environment) for no-code data pipelines and multimodal agents. Magick can connect to other services and comes with nodes and templates well-suited for intelligent agents, chatbots, complex reasoning systems and realistic characters.