awesome-vla-for-ad

🌐 Vision-Language-Action Models for Autonomous Driving: Past, Present, and Future

Stars: 287

README:

Autonomous driving has long relied on modular "Perception-Decision-Action" pipelines, whose hand-crafted interfaces and rule-based components often struggle in complex, dynamic, or long-tailed scenarios. Their cascaded structure also amplifies upstream perception errors, undermining downstream planning and control.

This survey reviews vision-action (VA) models and vision-language-action (VLA) models for autonomous driving. We trace the evolution from early VA approaches to modern VLA frameworks, and organize existing methods into two principal paradigms:

- End-to-End VLA, which integrates perception, reasoning, and planning within a single model.

- Dual-System VLA, which separates slow deliberation (via VLMs) from fast, safety-critical execution (via planners).
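As a rough illustration of the Dual-System idea above, the sketch below runs a slow "deliberation" step only every few ticks while a fast planner issues a command on every tick. It is a toy under stated assumptions, not a method from the survey: `Guidance`, `run_dual_system`, `toy_vlm`, `toy_planner`, and the `slow_every` parameter are all hypothetical names, and the "VLM" is a stand-in function rather than a real model.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional


@dataclass
class Guidance:
    """High-level advice from the slow system (hypothetical structure)."""
    target_speed: float  # metres per second


def run_dual_system(
    observations: List[float],
    slow_vlm: Callable[[float], Guidance],
    fast_planner: Callable[[float, Optional[Guidance]], float],
    slow_every: int = 5,
) -> List[float]:
    """Call the fast planner on every tick and the slow system every `slow_every` ticks."""
    guidance: Optional[Guidance] = None
    commands: List[float] = []
    for tick, obs in enumerate(observations):
        if tick % slow_every == 0:
            # Slow deliberation: refreshed only occasionally.
            guidance = slow_vlm(obs)
        # Fast execution: runs on every tick with the latest guidance.
        commands.append(fast_planner(obs, guidance))
    return commands


# Toy stand-ins (assumptions for illustration, not real models).
def toy_vlm(obs: float) -> Guidance:
    # Treat the observation as the distance to an obstacle in metres.
    return Guidance(target_speed=10.0 if obs > 20.0 else 3.0)


def toy_planner(obs: float, guidance: Optional[Guidance]) -> float:
    # Safety override: stop when the obstacle is very close, whatever the guidance says.
    if obs < 5.0:
        return 0.0
    return guidance.target_speed if guidance else 0.0


if __name__ == "__main__":
    print(run_dual_system([50.0, 30.0, 4.0, 25.0], toy_vlm, toy_planner, slow_every=2))
```

The point of the split is that the fast path can override stale guidance (the stop at 4 m here) without waiting for the slow system to respond.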


For more details, kindly refer to our 📚 Paper, 🌐 Project Page, and 🤗 HuggingFace Leaderboard.

If you find this work helpful for your research, please kindly consider citing our paper:

@article{survey_vla4ad,

title = {Vision-Language-Action Models for Autonomous Driving: Past, Present, and Future},

author = {Tianshuai Hu and Xiaolu Liu and Song Wang and Yiyao Zhu and Ao Liang and Lingdong Kong and Guoyang Zhao and Zeying Gong and Jun Cen and Zhiyu Huang and Xiaoshuai Hao and Linfeng Li and Hang Song and Xiangtai Li and Jun Ma and Shaojie Shen and Jianke Zhu and Dacheng Tao and Ziwei Liu and Junwei Liang},

journal = {arXiv preprint arXiv:2512.16760},

year = {2025},

}

- 1. Vision-Action Models

- 2. Vision-Language-Action Models

- 3. Datasets & Benchmarks

- 4. Applications

- 5. Other Resources

⏲️ In chronological order, from the earliest to the latest.



Similar Open Source Tools

Awesome-RL-for-LRMs

This repository contains a collection of awesome resources for reinforcement learning in language models. It includes tutorials, code implementations, research papers, and tools to help researchers and practitioners explore and apply reinforcement learning techniques in natural language processing tasks. Whether you are a beginner or an expert in the field, this repository aims to provide valuable insights and guidance to enhance your understanding and implementation of reinforcement learning in language models.

Awesome-Interpretability-in-Large-Language-Models

This repository is a collection of resources focused on interpretability in large language models (LLMs). It aims to help beginners get started in the area and keep researchers updated on the latest progress. It includes libraries, blogs, tutorials, forums, tools, programs, papers, and more related to interpretability in LLMs.

Steel-LLM

Steel-LLM is a project to pre-train a Chinese large language model from scratch on over 1T of data, reaching a parameter size of around 1B, similar to TinyLlama. The project aims to share the entire process, including data collection, data processing, pre-training framework selection, and model design, and to open-source all the code, so that the work can be reproduced even with limited resources. The name 'Steel' is inspired by the band 万能青年旅店 (Omnipotent Youth Society) and signifies the desire to create a strong model despite limited conditions. The project involves continuous data collection of various cultural elements, trivia, lyrics, niche literature, and personal secrets to train the LLM. The ultimate aim is to fill the model with diverse data and leave room for individual input, fostering collaboration among users.

oumi

Oumi is an open-source platform for building state-of-the-art foundation models, offering tools for data preparation, training, evaluation, and deployment. It supports training and fine-tuning models with various parameters, working with text and multimodal models, synthesizing and curating training data, deploying models efficiently, evaluating models comprehensively, and running on different platforms. Oumi provides a consistent API, reliability, and flexibility for research purposes.

LLMs

LLMs is a Chinese large language model technology stack for practical use. It includes a high-availability code framework for pre-training, SFT, and DPO preference alignment. The repository covers pre-training data cleaning, a high-concurrency framework, SFT dataset cleaning, data quality improvement, and security alignment work for Chinese large language models. It also provides open-source SFT dataset construction, pre-training from scratch, and various tools and frameworks for data cleaning, quality optimization, and task alignment.

cgft-llm

The cgft-llm repository is a collection of video tutorials and documentation for implementing large models. It provides guidance on topics such as fine-tuning llama3 with llama-factory, lightweight deployment and quantization using llama.cpp, speech generation with ChatTTS, introduction to Ollama for large model deployment, deployment tools for vllm and paged attention, and implementing RAG with llama-index. Users can find detailed code documentation and video tutorials for each project in the repository.

Hands-On-Large-Language-Models-CN

Hands-On Large Language Models CN(ZH) is a Chinese version of the book 'Hands-On Large Language Models' by Jay Alammar and Maarten Grootendorst. It provides detailed code annotations and additional insights, offers Notebook versions suitable for Chinese network environments, utilizes openbayes for free GPU access, allows convenient environment setup with vscode, and includes accompanying Chinese language videos on platforms like Bilibili and YouTube. The book covers various chapters on topics like Tokens and Embeddings, Transformer LLMs, Text Classification, Text Clustering, Prompt Engineering, Text Generation, Semantic Search, Multimodal LLMs, Text Embedding Models, Fine-tuning Models, and more.

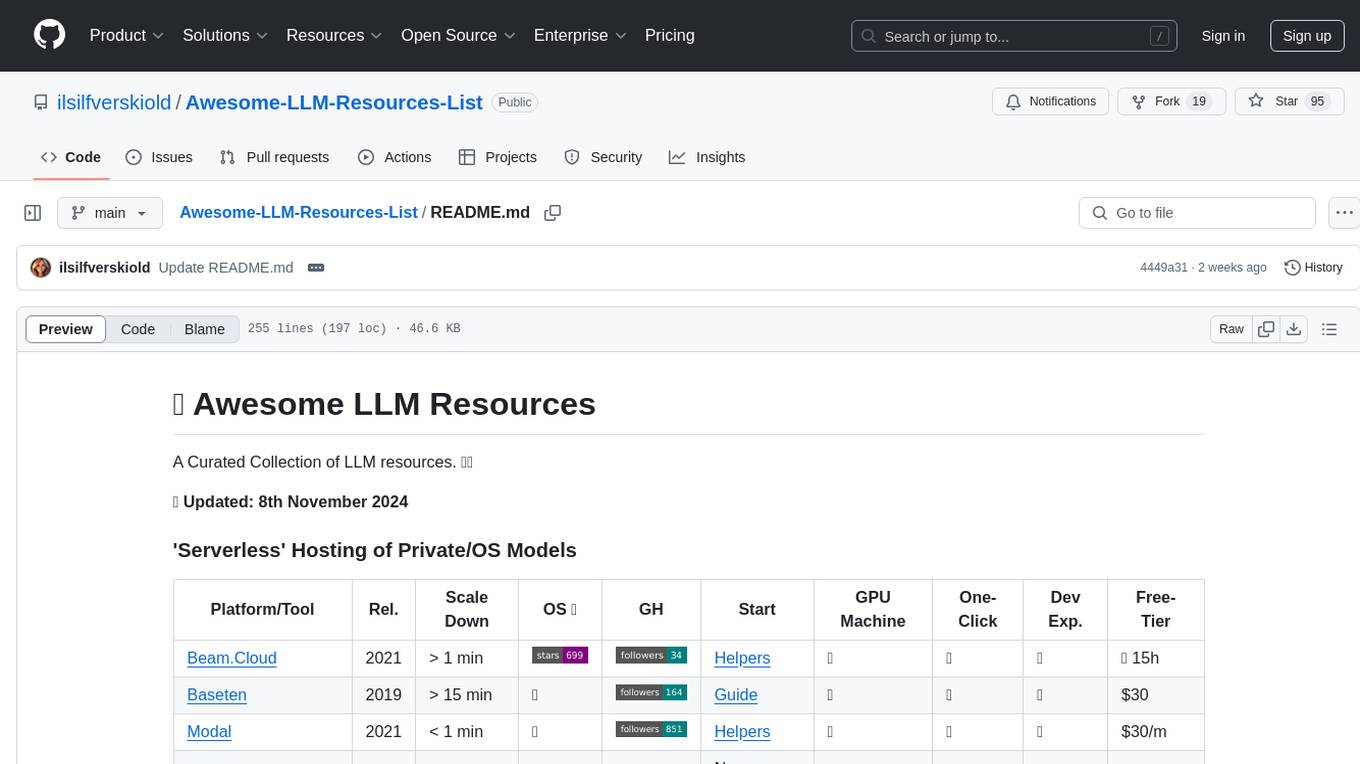

Awesome-LLM-Resources-List

Awesome LLM Resources is a curated collection of resources for Large Language Models (LLMs) covering various aspects such as serverless hosting, accessing off-the-shelf models via API, local inference, LLM serving frameworks, open-source LLM web chat UIs, renting GPUs for fine-tuning, fine-tuning with no-code UI, fine-tuning frameworks, OS agentic/AI workflow, AI agents, co-pilots, voice API, open-source TTS models, OS RAG frameworks, research papers on chain-of-thought prompting, CoT implementations, CoT fine-tuned models & datasets, and more.

phoenix

Phoenix is a tool that provides MLOps and LLMOps insights at lightning speed with zero-config observability. It offers a notebook-first experience for monitoring models and LLM Applications by providing LLM Traces, LLM Evals, Embedding Analysis, RAG Analysis, and Structured Data Analysis. Users can trace through the execution of LLM Applications, evaluate generative models, explore embedding point-clouds, visualize generative application's search and retrieval process, and statistically analyze structured data. Phoenix is designed to help users troubleshoot problems related to retrieval, tool execution, relevance, toxicity, drift, and performance degradation.

InternVL

InternVL scales up the ViT to _**6B parameters**_ and aligns it with LLM. It is a vision-language foundation model that can perform various tasks, including:

**Visual Perception**

- Linear-Probe Image Classification
- Semantic Segmentation
- Zero-Shot Image Classification
- Multilingual Zero-Shot Image Classification
- Zero-Shot Video Classification

**Cross-Modal Retrieval**

- English Zero-Shot Image-Text Retrieval
- Chinese Zero-Shot Image-Text Retrieval
- Multilingual Zero-Shot Image-Text Retrieval on XTD

**Multimodal Dialogue**

- Zero-Shot Image Captioning
- Multimodal Benchmarks with Frozen LLM
- Multimodal Benchmarks with Trainable LLM
- Tiny LVLM

InternVL has been shown to achieve state-of-the-art results on a variety of benchmarks. For example, on the MMMU image classification benchmark, InternVL achieves a top-1 accuracy of 51.6%, which is higher than GPT-4V and Gemini Pro. On the DocVQA question answering benchmark, InternVL achieves a score of 82.2%, which is also higher than GPT-4V and Gemini Pro. InternVL is open-sourced and available on Hugging Face. It can be used for a variety of applications, including image classification, object detection, semantic segmentation, image captioning, and question answering.

TigerBot

TigerBot is a cutting-edge foundation for your very own LLM, providing a world-class large model for innovative Chinese-style contributions. It offers various upgrades and features, such as search mode enhancements, support for large context lengths, and the ability to play text-based games. TigerBot is suitable for prompt-based game engine development, interactive game design, and real-time feedback for playable games.

go-cyber

Cyber is a superintelligence protocol that aims to create a decentralized and censorship-resistant internet. It uses a novel consensus mechanism called CometBFT and a knowledge graph to store and process information. Cyber is designed to be scalable, secure, and efficient, and it has the potential to revolutionize the way we interact with the internet.

VoiceBench

VoiceBench is a repository containing code and data for benchmarking LLM-Based Voice Assistants. It includes a leaderboard with rankings of various voice assistant models based on different evaluation metrics. The repository provides setup instructions, datasets, evaluation procedures, and a curated list of awesome voice assistants. Users can submit new voice assistant results through the issue tracker for updates on the ranking list.

Chinese-Mixtral-8x7B

Chinese-Mixtral-8x7B is an open-source project that extends Mistral's Mixtral-8x7B model with an expanded Chinese vocabulary and incremental pre-training, aiming to advance research on MoE models in the Chinese natural language processing community. The expanded vocabulary significantly improves the model's encoding and decoding efficiency for Chinese, and incremental pre-training on a large-scale open-source corpus gives the model strong Chinese generation and comprehension capabilities. The project releases the model with the expanded Chinese vocabulary together with the incremental pre-training code.