verbis

A privacy-first fully local assistant for MacOS with SaaS connectors

Stars: 74

Verbis AI is a secure and fully local AI assistant for MacOS that indexes data from various SaaS applications securely on the user's system. It provides a single interface powered by GenAI models to query and manage information. Users can connect Verbis to apps like Google Drive, Outlook, Gmail, and Slack, and use it as a chatbot to search across their data without data leaving their device. The tool is powered by Ollama and Weaviate, utilizing models like Mistral 7B, ms-marco-MiniLM-L-12-v2, and nomic-embed-text. Verbis AI requires Apple Silicon Mac (m1+) and has minimal system resource utilization requirements.

README:

Verbis AI is a secure and fully local AI assistant for MacOS. By connecting to your various SaaS applications, Verbis AI indexes all your data securely and locally on your system. Verbis provides a single interface to query and manage your information with the power of GenAI models.

- Download and install Verbis

- Connect Verbis to your data sources (Google Drive, Outlook, Gmail, Slack etc)

- Use Verbis as a chatbot to search across your data. Your data never leaves your device.

Verbis downloads and locally indexes documents from third-party services authenticated via OAuth, called “apps”. To manage your apps:

- Click the gear icon on the top right of the Verbis window.

- A list of apps will appear, along with information on synchronized documents.

- To add a new app, select the app from the app catalog and click the “Connect” button.

- Your last active browser window should navigate to an OAuth consent screen.

- After completing the OAuth consent flow, the application will automatically begin syncing documents locally.

- If an application is not supported, you may click the “Request” button to notify our team of your request for future support.

Verbis AI is powered by Ollama and Weaviate, and we use the following models:

Mistral 7B, ms-marco-MiniLM-L-12-v2, and nomic-embed-text.

- Apple Silicon Mac (m1+): Macbook, Mac mini, Mac Pro, Mac Studio

- Disk: 6 GB for model weights, approximately 1-4 GB depending on connector configuration and synced data.

- All data is stored under ~/.verbis

- Memory: Approximately 1.2 GB for models and 200MB to 2 GB for indexes

- Models are unloaded from memory after 20 minutes of inactivity

- Compute: Depends on chipset. Very low CPU requirements during syncing, sharp spikes in GPU utilization during inference for 1-8 seconds

- Network: Up to 10 documents may be downloaded concurrently from each connector at peak network bandwidth during syncing

The Verbis AI team ([email protected])

- Sahil Kumar ([email protected])

- Alex Mavrogiannis ([email protected])

Verbis receives data from SaaS apps, sends telemetry data to Posthog. Your data never leaves your system. Telemetry can be disabled via the settings page. Our full privacy policy is available here

Downloaded to the local host running Verbis AI using OAuth credentials, and never shared with other third parties

Model weights for the following models are fetched from either the Ollama Library and Huggingface during initialization:

- Mistral 7B v0.3

Telemetry is an opt-out feature, but we encourage users to keep telemetry enabled to help the team improve Verbis. When telemetry is enabled, the following events will be reported to eu.posthog.com via an HTTP POST call:

- Application started

- Chipset

- MacOS version

- memory size

- Time to boot

- IP Address

- Connector sync complete

- Connector ID

- Connector type

- Number of synced documents

- Number of synced chunks

- Number of errors

- Sync error message

- Sync duration

- IP Address

- Prompt

- Duration of each prompt processing phase

- Number of search results

- Number of reranked results

To develop and build verbis, the following tools are needed on your local machine:

- Go 1.22 or later (

brew install go) - Python & utilities (

make builder-env) - NVM with node v21.6.2 or later

- A copy of

.build.envcontaining API keys and other variables required for the build process - A copy of

dist/credentials.json, used for Google OAuth credentials

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for verbis

Similar Open Source Tools

verbis

Verbis AI is a secure and fully local AI assistant for MacOS that indexes data from various SaaS applications securely on the user's system. It provides a single interface powered by GenAI models to query and manage information. Users can connect Verbis to apps like Google Drive, Outlook, Gmail, and Slack, and use it as a chatbot to search across their data without data leaving their device. The tool is powered by Ollama and Weaviate, utilizing models like Mistral 7B, ms-marco-MiniLM-L-12-v2, and nomic-embed-text. Verbis AI requires Apple Silicon Mac (m1+) and has minimal system resource utilization requirements.

mem0-chrome-extension

Mem0 Chrome Extension is a tool that enhances AI interactions by providing a universal memory layer across various AI assistants. It allows users to seamlessly share context, automatically capture relevant information, and retrieve memories intelligently. The extension offers features like one-click sync with existing ChatGPT memories and a memory dashboard for easy management. Users can install the extension in Google Chrome, sign in with Google, and start using it with supported AI assistants. Mem0 is free to use with no usage limits or ads, and it prioritizes privacy and data security by sending messages to the Mem0 API for memory extraction and retrieval.

super-agent-party

A 3D AI desktop companion with endless possibilities! This repository provides a platform for enhancing the LLM API without code modification, supporting seamless integration of various functionalities such as knowledge bases, real-time networking, multimodal capabilities, automation, and deep thinking control. It offers one-click deployment to multiple terminals, ecological tool interconnection, standardized interface opening, and compatibility across all platforms. Users can deploy the tool on Windows, macOS, Linux, or Docker, and access features like intelligent agent deployment, VRM desktop pets, Tavern character cards, QQ bot deployment, and developer-friendly interfaces. The tool supports multi-service providers, extensive tool integration, and ComfyUI workflows. Hardware requirements are minimal, making it suitable for various deployment scenarios.

Customer-Service-Conversational-Insights-with-Azure-OpenAI-Services

This solution accelerator is built on Azure Cognitive Search Service and Azure OpenAI Service to synthesize post-contact center transcripts for intelligent contact center scenarios. It converts raw transcripts into customer call summaries to extract insights around product and service performance. Key features include conversation summarization, key phrase extraction, speech-to-text transcription, sensitive information extraction, sentiment analysis, and opinion mining. The tool enables data professionals to quickly analyze call logs for improvement in contact center operations.

qdrant

Qdrant is a vector similarity search engine and vector database. It is written in Rust, which makes it fast and reliable even under high load. Qdrant can be used for a variety of applications, including: * Semantic search * Image search * Product recommendations * Chatbots * Anomaly detection Qdrant offers a variety of features, including: * Payload storage and filtering * Hybrid search with sparse vectors * Vector quantization and on-disk storage * Distributed deployment * Highlighted features such as query planning, payload indexes, SIMD hardware acceleration, async I/O, and write-ahead logging Qdrant is available as a fully managed cloud service or as an open-source software that can be deployed on-premises.

csghub-server

CSGHub Server is a part of the open source and reliable large model assets management platform - CSGHub. It focuses on management of models, datasets, and other LLM assets through REST API. Key features include creation and management of users and organizations, auto-tagging of model and dataset labels, search functionality, online preview of dataset files, content moderation for text and image, download of individual files, tracking of model and dataset activity data. The tool is extensible and customizable, supporting different git servers, flexible LFS storage system configuration, and content moderation options. The roadmap includes support for more Git servers, Git LFS, dataset online viewer, model/dataset auto-tag, S3 protocol support, model format conversion, and model one-click deploy. The project is licensed under Apache 2.0 and welcomes contributions.

AntSK

AntSK is an AI knowledge base/agent built with .Net8+Blazor+SemanticKernel. It features a semantic kernel for accurate natural language processing, a memory kernel for continuous learning and knowledge storage, a knowledge base for importing and querying knowledge from various document formats, a text-to-image generator integrated with StableDiffusion, GPTs generation for creating personalized GPT models, API interfaces for integrating AntSK into other applications, an open API plugin system for extending functionality, a .Net plugin system for integrating business functions, real-time information retrieval from the internet, model management for adapting and managing different models from different vendors, support for domestic models and databases for operation in a trusted environment, and planned model fine-tuning based on llamafactory.

PrivateDocBot

PrivateDocBot is a local LLM-powered chatbot designed for secure document interactions. It seamlessly merges Chainlit user-friendly interface with localized language models, tailored for sensitive data. The project streamlines data access by deciphering intricate user guides and extracting vital insights from complex PDF reports. Equipped with advanced technology, it offers an engaging conversational experience, redefining data interaction and empowering users with control.

Eppie-App

Eppie-App is a mobile application designed to help users manage their daily tasks and improve productivity. The app offers features such as task organization, reminders, and goal setting to assist users in staying organized and on track with their responsibilities. With a user-friendly interface and customizable options, Eppie-App aims to simplify task management and enhance efficiency in users' daily lives.

vector-vein

VectorVein is a no-code AI workflow software inspired by LangChain and langflow, aiming to combine the powerful capabilities of large language models and enable users to achieve intelligent and automated daily workflows through simple drag-and-drop actions. Users can create powerful workflows without the need for programming, automating all tasks with ease. The software allows users to define inputs, outputs, and processing methods to create customized workflow processes for various tasks such as translation, mind mapping, summarizing web articles, and automatic categorization of customer reviews.

coral-cloud

Coral Cloud Resorts is a sample hospitality application that showcases Data Cloud, Agents, and Prompts. It provides highly personalized guest experiences through smart automation, content generation, and summarization. The app requires licenses for Data Cloud, Agents, Prompt Builder, and Einstein for Sales. Users can activate features, deploy metadata, assign permission sets, import sample data, and troubleshoot common issues. Additionally, the repository offers integration with modern web development tools like Prettier, ESLint, and pre-commit hooks for code formatting and linting.

ComfyUI-Tara-LLM-Integration

Tara is a powerful node for ComfyUI that integrates Large Language Models (LLMs) to enhance and automate workflow processes. With Tara, you can create complex, intelligent workflows that refine and generate content, manage API keys, and seamlessly integrate various LLMs into your projects. It comprises nodes for handling OpenAI-compatible APIs, saving and loading API keys, composing multiple texts, and using predefined templates for OpenAI and Groq. Tara supports OpenAI and Grok models with plans to expand support to together.ai and Replicate. Users can install Tara via Git URL or ComfyUI Manager and utilize it for tasks like input guidance, saving and loading API keys, and generating text suitable for chaining in workflows.

bionic-gpt

BionicGPT is an on-premise replacement for ChatGPT, offering the advantages of Generative AI while maintaining strict data confidentiality. BionicGPT can run on your laptop or scale into the data center.

lluminous

lluminous is a fast and light open chat UI that supports multiple providers such as OpenAI, Anthropic, and Groq models. Users can easily plug in their API keys locally to access various models for tasks like multimodal input, image generation, multi-shot prompting, pre-filled responses, and more. The tool ensures privacy by storing all conversation history and keys locally on the user's device. Coming soon features include memory tool, file ingestion/embedding, embeddings-based web search, and prompt templates.

airbyte-platform

Airbyte is an open-source data integration platform that makes it easy to move data from any source to any destination. With Airbyte, you can build and manage data pipelines without writing any code. Airbyte provides a library of pre-built connectors that make it easy to connect to popular data sources and destinations. You can also create your own connectors using Airbyte's low-code Connector Development Kit (CDK). Airbyte is used by data engineers and analysts at companies of all sizes to move data for a variety of purposes, including data warehousing, data analysis, and machine learning.

azure-search-openai-demo

This sample demonstrates a few approaches for creating ChatGPT-like experiences over your own data using the Retrieval Augmented Generation pattern. It uses Azure OpenAI Service to access a GPT model (gpt-35-turbo), and Azure AI Search for data indexing and retrieval. The repo includes sample data so it's ready to try end to end. In this sample application we use a fictitious company called Contoso Electronics, and the experience allows its employees to ask questions about the benefits, internal policies, as well as job descriptions and roles.

For similar tasks

verbis

Verbis AI is a secure and fully local AI assistant for MacOS that indexes data from various SaaS applications securely on the user's system. It provides a single interface powered by GenAI models to query and manage information. Users can connect Verbis to apps like Google Drive, Outlook, Gmail, and Slack, and use it as a chatbot to search across their data without data leaving their device. The tool is powered by Ollama and Weaviate, utilizing models like Mistral 7B, ms-marco-MiniLM-L-12-v2, and nomic-embed-text. Verbis AI requires Apple Silicon Mac (m1+) and has minimal system resource utilization requirements.

redbox-copilot

Redbox Copilot is a retrieval augmented generation (RAG) app that uses GenAI to chat with and summarise civil service documents. It increases organisational memory by indexing documents and can summarise reports read months ago, supplement them with current work, and produce a first draft that lets civil servants focus on what they do best. The project uses a microservice architecture with each microservice running in its own container defined by a Dockerfile. Dependencies are managed using Python Poetry. Contributions are welcome, and the project is licensed under the MIT License.

fastRAG

fastRAG is a research framework designed to build and explore efficient retrieval-augmented generative models. It incorporates state-of-the-art Large Language Models (LLMs) and Information Retrieval to empower researchers and developers with a comprehensive tool-set for advancing retrieval augmented generation. The framework is optimized for Intel hardware, customizable, and includes key features such as optimized RAG pipelines, efficient components, and RAG-efficient components like ColBERT and Fusion-in-Decoder (FiD). fastRAG supports various unique components and backends for running LLMs, making it a versatile tool for research and development in the field of retrieval-augmented generation.

llm-rag-workshop

The LLM RAG Workshop repository provides a workshop on using Large Language Models (LLMs) and Retrieval-Augmented Generation (RAG) to generate and understand text in a human-like manner. It includes instructions on setting up the environment, indexing Zoomcamp FAQ documents, creating a Q&A system, and using OpenAI for generation based on retrieved information. The repository focuses on enhancing language model responses with retrieved information from external sources, such as document databases or search engines, to improve factual accuracy and relevance of generated text.

local-genAI-search

Local-GenAI Search is a local generative search engine powered by the Llama3 model, allowing users to ask questions about their local files and receive concise answers with relevant document references. It utilizes MS MARCO embeddings for semantic search and can run locally on a 32GB laptop or computer. The tool can be used to index local documents, search for information, and provide generative search services through a user interface.

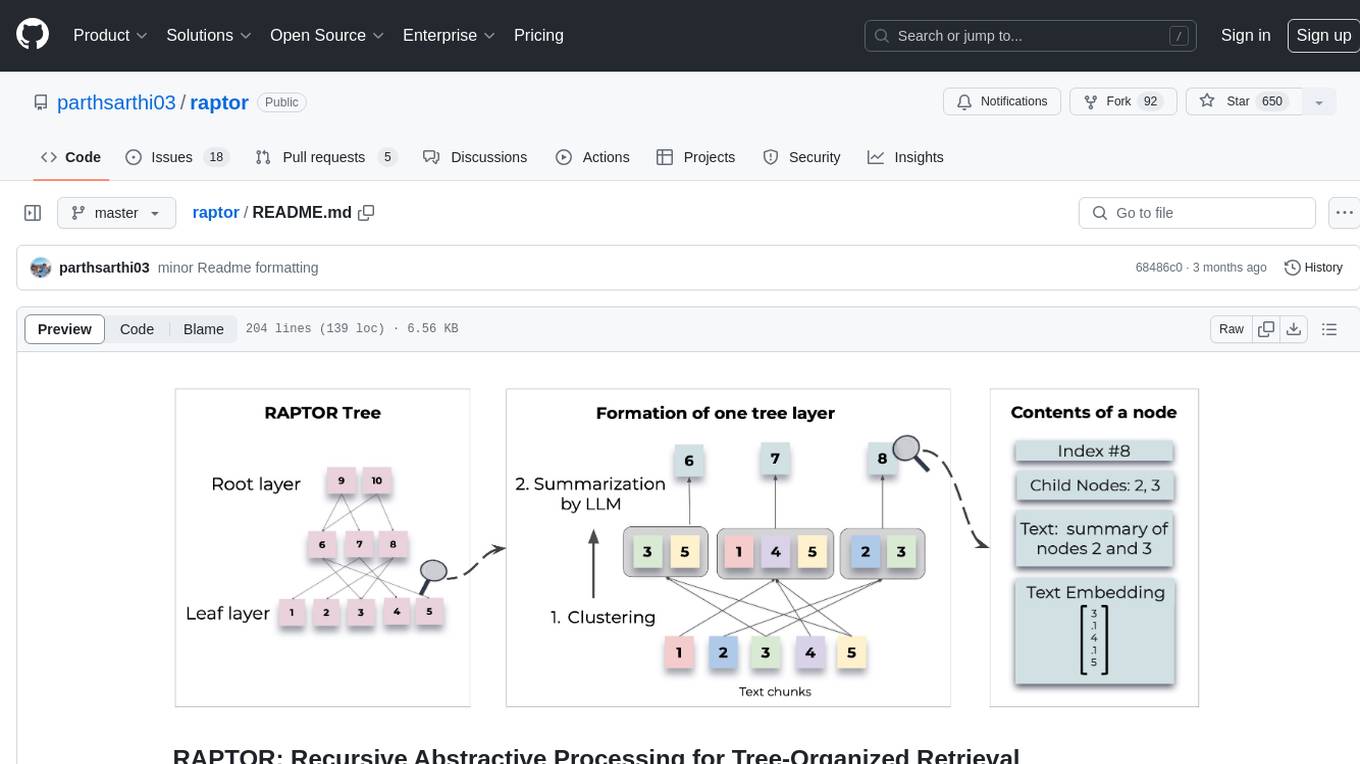

raptor

RAPTOR introduces a novel approach to retrieval-augmented language models by constructing a recursive tree structure from documents. This allows for more efficient and context-aware information retrieval across large texts, addressing common limitations in traditional language models. Users can add documents to the tree, answer questions based on indexed documents, save and load the tree, and extend RAPTOR with custom summarization, question-answering, and embedding models. The tool is designed to be flexible and customizable for various NLP tasks.

redbox

Redbox is a retrieval augmented generation (RAG) app that uses GenAI to chat with and summarise civil service documents. It increases organisational memory by indexing documents and can summarise reports read months ago, supplement them with current work, and produce a first draft that lets civil servants focus on what they do best. The project uses a microservice architecture with each microservice running in its own container defined by a Dockerfile. Dependencies are managed using Python Poetry. Contributions are welcome, and the project is licensed under the MIT License. Security measures are in place to ensure user data privacy and considerations are being made to make the core-api secure.

memfree

MemFree is an open-source hybrid AI search engine that allows users to simultaneously search their personal knowledge base (bookmarks, notes, documents, etc.) and the Internet. It features a self-hosted super fast serverless vector database, local embedding and rerank service, one-click Chrome bookmarks index, and full code open source. Users can contribute by opening issues for bugs or making pull requests for new features or improvements.

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models. From images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking), and privacy harms (e.g., identity theft). The goal is to allow researchers to have a baseline of how well their model and entire inference pipeline is doing against different harm categories and to be able to compare that baseline to future iterations of their model. This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements.

tabby

Tabby is a self-hosted AI coding assistant, offering an open-source and on-premises alternative to GitHub Copilot. It boasts several key features: * Self-contained, with no need for a DBMS or cloud service. * OpenAPI interface, easy to integrate with existing infrastructure (e.g Cloud IDE). * Supports consumer-grade GPUs.

spear

SPEAR (Simulator for Photorealistic Embodied AI Research) is a powerful tool for training embodied agents. It features 300 unique virtual indoor environments with 2,566 unique rooms and 17,234 unique objects that can be manipulated individually. Each environment is designed by a professional artist and features detailed geometry, photorealistic materials, and a unique floor plan and object layout. SPEAR is implemented as Unreal Engine assets and provides an OpenAI Gym interface for interacting with the environments via Python.

Magick

Magick is a groundbreaking visual AIDE (Artificial Intelligence Development Environment) for no-code data pipelines and multimodal agents. Magick can connect to other services and comes with nodes and templates well-suited for intelligent agents, chatbots, complex reasoning systems and realistic characters.