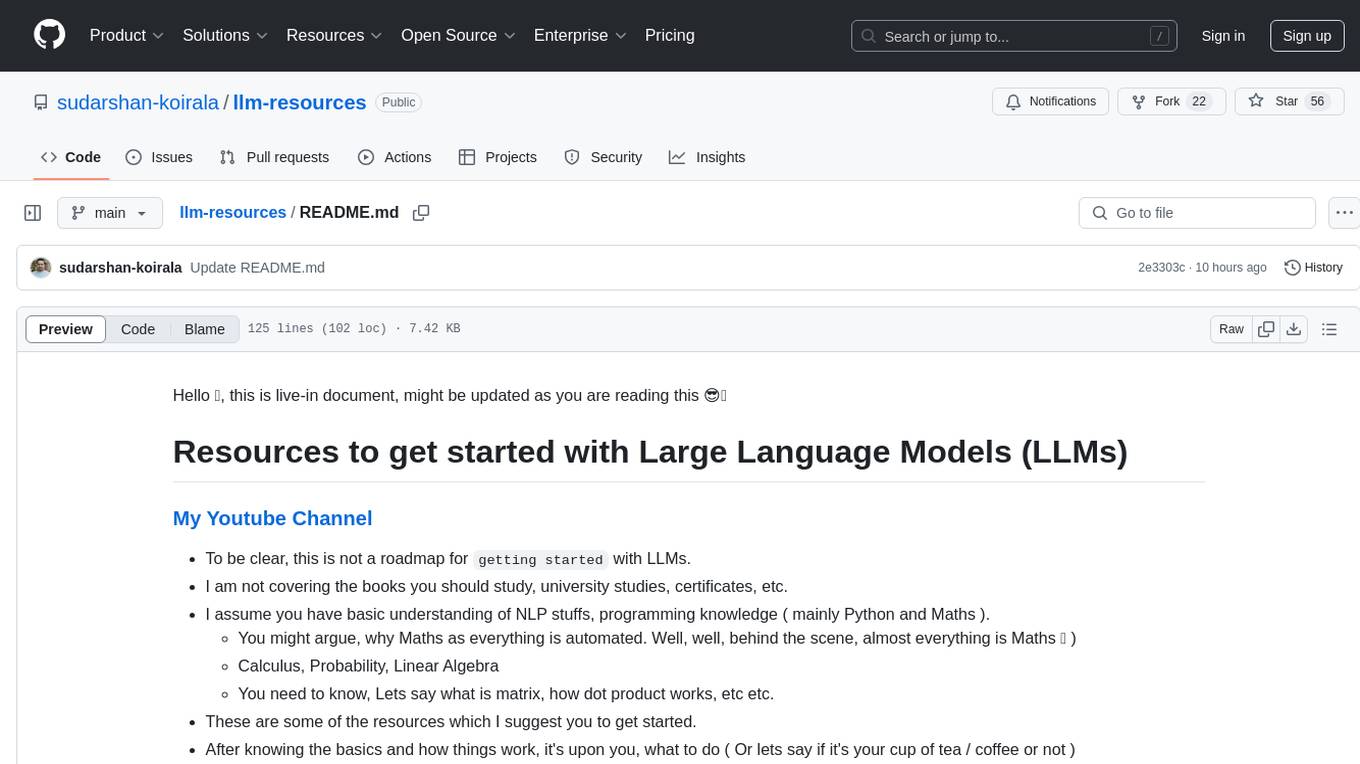

llm-resources

Repo that contains resources to learn or get started with Large Language Models (LLMs)

Stars: 56

llm-resources is a repository providing resources to get started with Large Language Models (LLMs). It includes videos on Neural Networks and LLMs, free courses, prompt engineering guides, explored frameworks, AI assistants, and tips on making RAG work properly. The repository also contains important links and updates related to LLMs, AWS, RAG, agents, model context protocol, and more. It aims to help individuals with a basic understanding of NLP and programming knowledge to explore and utilize LLMs effectively.

README:

Hello 👋, this is a living document and might be updated as you are reading this 😎🧠

- To be clear, this is not a roadmap for getting started with LLMs.

- I am not covering the books you should study, university studies, certificates, etc.

- I assume you have a basic understanding of NLP, plus programming knowledge (mainly Python) and Maths.

- You might ask: why Maths, when everything is automated? Well, behind the scenes, almost everything is Maths 🧠

- Calculus, Probability, Linear Algebra

- You need to know, let's say, what a matrix is, how the dot product works, and so on.

- These are some of the resources I suggest to get you started.

- After knowing the basics and how things work, it's up to you what to do next (or, let's say, whether it's your cup of tea / coffee or not).
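As a quick self-check on the linear algebra mentioned above, here is a minimal sketch of the dot product, a matrix-vector product, and cosine similarity in plain Python. The function names are illustrative and not taken from any of the linked resources; in practice you would likely use NumPy or a similar library.

```python
import math


def dot(a, b):
    """Dot product of two equal-length vectors."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    return sum(x * y for x, y in zip(a, b))


def matvec(matrix, vector):
    """Matrix-vector product: one dot product per matrix row."""
    return [dot(row, vector) for row in matrix]


def cosine_similarity(a, b):
    """Cosine of the angle between a and b (0.0 if either is all zeros)."""
    norm_a = math.sqrt(dot(a, a))
    norm_b = math.sqrt(dot(b, b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot(a, b) / (norm_a * norm_b)


if __name__ == "__main__":
    print(dot([1, 2, 3], [4, 5, 6]))           # 1*4 + 2*5 + 3*6
    print(matvec([[1, 0], [0, 2]], [3, 4]))    # scales the second component
    print(cosine_similarity([1, 0], [0, 1]))   # orthogonal vectors
```

Cosine similarity is the same comparison that embedding-based search relies on, so being comfortable with it pays off later when working with RAG.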

Remember one thing: using LLMs and implementing them are two different things. You don't necessarily need to know how to implement one, but you do need to know how to use it the right way.

- A Hacker's Guide to Language Models by Jeremy Howard.

- [1hr Talk] Intro to Large Language Models by Andrej Karpathy.

- Neural Networks: Zero to Hero by Andrej Karpathy.

- Building RAG from scratch Using Python, LangChain and OpenAI API by Santiago.

- fast.ai courses --> Optional but highly recommended

- DeepLearning.AI short courses -- My request: try to complete all of these free short courses.

- DeepLearning.AI Specializations

- Prompt Engineering Guide

- OpenAI doc about Prompt Engineering

- Strategies to harness the power of LLMs - Prompt Engineering

- There is also one among the DeepLearning.AI free short courses: ChatGPT Prompt Engineering for Developers.

- There are many courses, articles, and videos on this topic; it needs constant learning and experimenting.

Frameworks which I have explored until now. There are many more; you can give them a try (your world, your rules).

OpenAI has really good documentation and a Cookbook.

- There is unlimited knowledge you can grasp; try to find the best sources and follow them instead of jumping among videos.

- The main thing is to understand things and try them yourself. Unless you practice yourself, you won't learn.

- I have videos on LLMs, with playlists on LangChain, Chainlit, and LlamaIndex. Many more LLM videos to follow in 2024.

The main thing I want to highlight: practice, practice, and practice, and take help from AI assistants 👇

- Perplexity AI --> let's put it this way: it's Google Search with LLMs built in.

- Perplexity Labs, for open-source models

- ChatGPT --> Based on your need, free or paid version (Team, Enterprise, etc.).

- Bing Chat, Bing Enterprise.

- Hugging Chat

- Le Chat Mistral

- First, think about tweaking the basics

- Cleaning documents (choose the right parser, e.g. LlamaParse, Unstructured)

- Better chunking strategies

- Choosing the right embedding model

- Choosing the right vector store

- Passing parsing instructions, reranking

- Choosing the right Large Language Model
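To make the chunking and retrieval tips above concrete, here is a minimal sketch using only the Python standard library. It is an illustration under stated assumptions, not the approach of any tool named above: chunks are split by word count with a fixed overlap, and a bag-of-words cosine score stands in for a real embedding model and vector store. The names `chunk_words`, `bow`, `cosine`, and `top_k`, and the default sizes, are hypothetical.

```python
import math
from collections import Counter


def chunk_words(text, chunk_size=50, overlap=10):
    """Split text into chunks of `chunk_size` words; consecutive chunks share `overlap` words."""
    if chunk_size <= 0 or not 0 <= overlap < chunk_size:
        raise ValueError("need chunk_size > 0 and 0 <= overlap < chunk_size")
    words = text.split()
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # the last chunk already reaches the end of the text
    return chunks


def bow(text):
    """Toy stand-in for an embedding: lowercase bag-of-words counts."""
    return Counter(text.lower().split())


def cosine(a, b):
    """Cosine similarity between two bag-of-words Counters."""
    shared = a.keys() & b.keys()
    dot = sum(a[t] * b[t] for t in shared)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def top_k(query, chunks, k=2):
    """Return the k chunks most similar to the query (toy stand-in for a vector store lookup)."""
    q = bow(query)
    return sorted(chunks, key=lambda c: cosine(q, bow(c)), reverse=True)[:k]


if __name__ == "__main__":
    for chunk in chunk_words("a b c d e f g h i j", chunk_size=4, overlap=1):
        print(chunk)
```

Changing `chunk_size` and `overlap` and watching which chunks `top_k` returns is a cheap way to build intuition before swapping in a real parser, embedding model, vector store, and reranker.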

Links to follow for better understanding.

- Chunk visualizer

- Tokenizer, from OpenAI

- Huggingface Massive Text Embedding Benchmark (MTEB) Leaderboard

- What is a Vector Database & How Does it Work? Use Cases + Examples

- Chunking Strategies for LLM Applications

- 🤗 Open LLM Leaderboard

- 🏆 LMSYS Chatbot Arena Leaderboard

- 12 RAG Pain Points and Proposed Solutions

- Optimizing RAG with Hybrid Search & Reranking

- Improving RAG performance with Knowledge Graphs

- Enhancing RAG with a Multi-Agent System

- Llama-3-Groq-Tool-Use Models

- Berkeley Function Calling Leaderboard

- Independent analysis of AI models and API providers 📌

Cheers !!

Alternative AI tools for llm-resources

Similar Open Source Tools

ml-engineering

This repository provides a comprehensive collection of methodologies, tools, and step-by-step instructions for successful training of large language models (LLMs) and multi-modal models. It is a technical resource suitable for LLM/VLM training engineers and operators, containing numerous scripts and copy-n-paste commands to facilitate quick problem-solving. The repository is an ongoing compilation of the author's experiences training BLOOM-176B and IDEFICS-80B models, and currently focuses on the development and training of Retrieval Augmented Generation (RAG) models at Contextual.AI. The content is organized into six parts: Insights, Hardware, Orchestration, Training, Development, and Miscellaneous. It includes key comparison tables for high-end accelerators and networks, as well as shortcuts to frequently needed tools and guides. The repository is open to contributions and discussions, and is licensed under Attribution-ShareAlike 4.0 International.

llmops-duke-aipi

LLMOps Duke AIPI is a course focused on operationalizing Large Language Models, teaching methodologies for developing applications using software development best practices with large language models. The course covers various topics such as generative AI concepts, setting up development environments, interacting with large language models, using local large language models, applied solutions with LLMs, extensibility using plugins and functions, retrieval augmented generation, introduction to Python web frameworks for APIs, DevOps principles, deploying machine learning APIs, LLM platforms, and final presentations. Students will learn to build, share, and present portfolios using Github, YouTube, and Linkedin, as well as develop non-linear life-long learning skills. Prerequisites include basic Linux and programming skills, with coursework available in Python or Rust. Additional resources and references are provided for further learning and exploration.

product-manager-prompts

A treasure trove of Generative AI prompt engineering tailored for product managers and product owners. It provides prompts for completing tasks, exploring ideas, conducting research, facilitating communication, and jumpstarting templates. Join the community to enrich this collection with insights, prompts, synthetic data, and examples, making it an indispensable resource for product management.

humanlayer

HumanLayer is a Python toolkit designed to enable AI agents to interact with humans in tool-based and asynchronous workflows. By incorporating humans-in-the-loop, agentic tools can access more powerful and meaningful tasks. The toolkit provides features like requiring human approval for function calls, human as a tool for contacting humans, omni-channel contact capabilities, granular routing, and support for various LLMs and orchestration frameworks. HumanLayer aims to ensure human oversight of high-stakes function calls, making AI agents more reliable and safe in executing impactful tasks.

miniLLMFlow

Mini LLM Flow is a 100-line minimalist LLM framework designed for agents, task decomposition, RAG, etc. It aims to be the framework used by LLMs, focusing on high-level programming paradigms while stripping away low-level implementation details. It serves as a learning resource and allows LLMs to design, build, and maintain projects themselves.

ai-notes

Notes on AI state of the art, with a focus on generative and large language models. These are the "raw materials" for the https://lspace.swyx.io/ newsletter. This repo used to be called https://github.com/sw-yx/prompt-eng, but was renamed because Prompt Engineering is Overhyped. This is now an AI Engineering notes repo.

learnhouse

LearnHouse is an open-source platform that allows anyone to easily provide world-class educational content. It supports various content types, including dynamic pages, videos, and documents. The platform is still in early development and should not be used in production environments. However, it offers several features, such as dynamic Notion-like pages, ease of use, multi-organization support, support for uploading videos and documents, course collections, user management, quizzes, course progress tracking, and an AI-powered assistant for teachers and students. LearnHouse is built using various open-source projects, including Next.js, TailwindCSS, Radix UI, Tiptap, FastAPI, YJS, PostgreSQL, LangChain, and React.

oreilly-retrieval-augmented-gen-ai

This repository focuses on Retrieval-Augmented Generation (RAG) and Large Language Models (LLMs). It provides code and resources to augment LLMs with real-time data for dynamic, context-aware applications. The content covers topics such as semantic search, fine-tuning embeddings, building RAG chatbots, evaluating LLMs, and using knowledge graphs in RAG. Prerequisites include Python skills, knowledge of machine learning and LLMs, and introductory experience with NLP and AI models.

Sarvadnya

Sarvadnya is a repository focused on interfacing custom data using Large Language Models (LLMs) through Proof-of-Concepts (PoCs) like Retrieval Augmented Generation (RAG) and Fine-Tuning. It aims to enable domain adaptation for LLMs to answer on user-specific corpora. The repository also covers topics such as Indic-languages models, 3D World Simulations, Knowledge Graphs Generation, Signal Processing, Drones, UAV Image Processing, and Floor Plan Segmentation. It provides insights into building chatbots of various modalities, preparing videos, and creating content for different platforms like Medium, LinkedIn, and YouTube. The tech stacks involved range from enterprise solutions like Google Doc AI and Microsoft Azure Language AI Services to open-source tools like Langchain and HuggingFace.

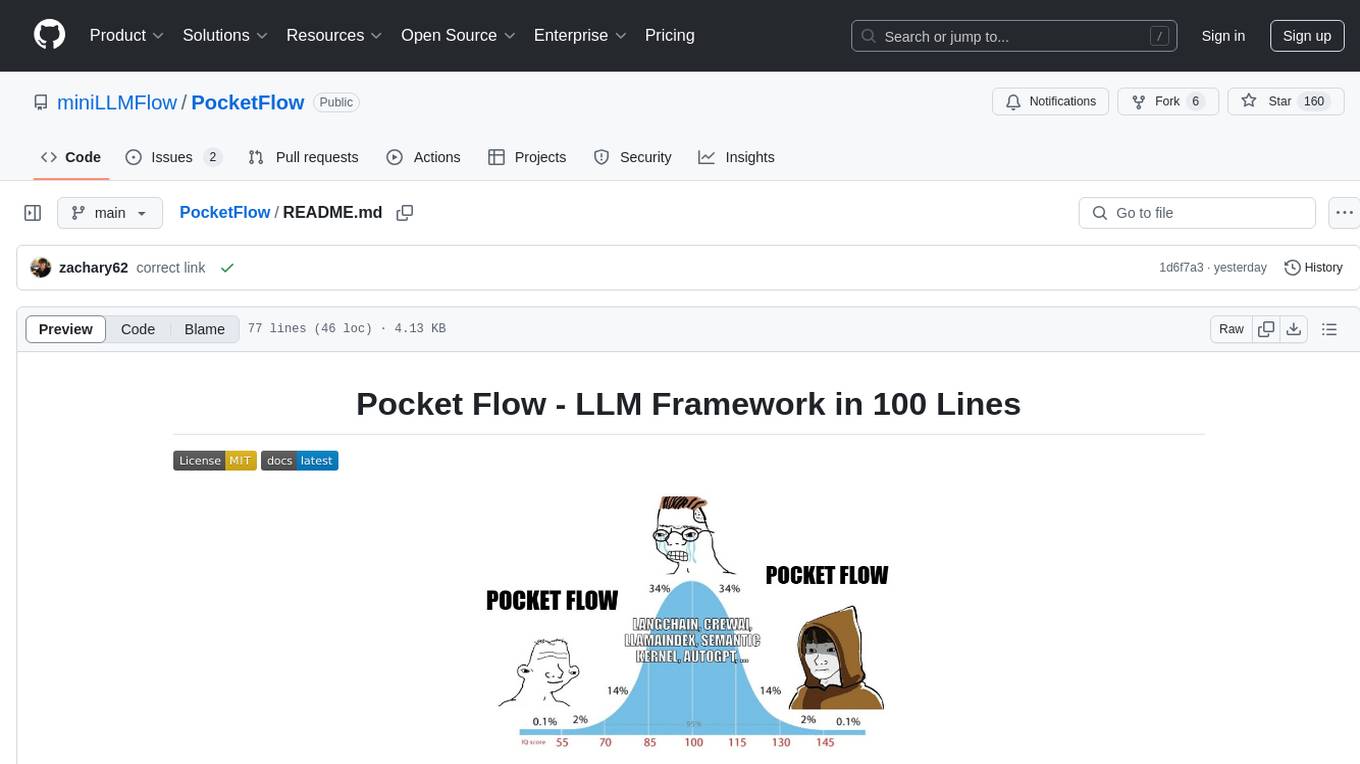

PocketFlow

Pocket Flow is a 100-line minimalist LLM framework designed for (Multi-)Agents, Task Decomposition, RAG, etc. It aims to be the framework used by LLMs, focusing on stripping away low-level implementation details and emphasizing high-level programming paradigms. Pocket Flow serves as a learning resource and provides a core abstraction of a nested directed graph for breaking down tasks into multiple steps.

start-machine-learning

Start Machine Learning in 2024 is a comprehensive guide for beginners to advance in machine learning and artificial intelligence without any prior background. The guide covers various resources such as free online courses, articles, books, and practical tips to become an expert in the field. It emphasizes self-paced learning and provides recommendations for learning paths, including videos, podcasts, and online communities. The guide also includes information on building language models and applications, practicing through Kaggle competitions, and staying updated with the latest news and developments in AI. The goal is to empower individuals with the knowledge and resources to excel in machine learning and AI.

kitops

KitOps is a packaging and versioning system for AI/ML projects that uses open standards so it works with the AI/ML, development, and DevOps tools you are already using. KitOps simplifies the handoffs between data scientists, application developers, and SREs working with LLMs and other AI/ML models. KitOps' ModelKits are a standards-based package for models, their dependencies, configurations, and codebases. ModelKits are portable, reproducible, and work with the tools you already use.

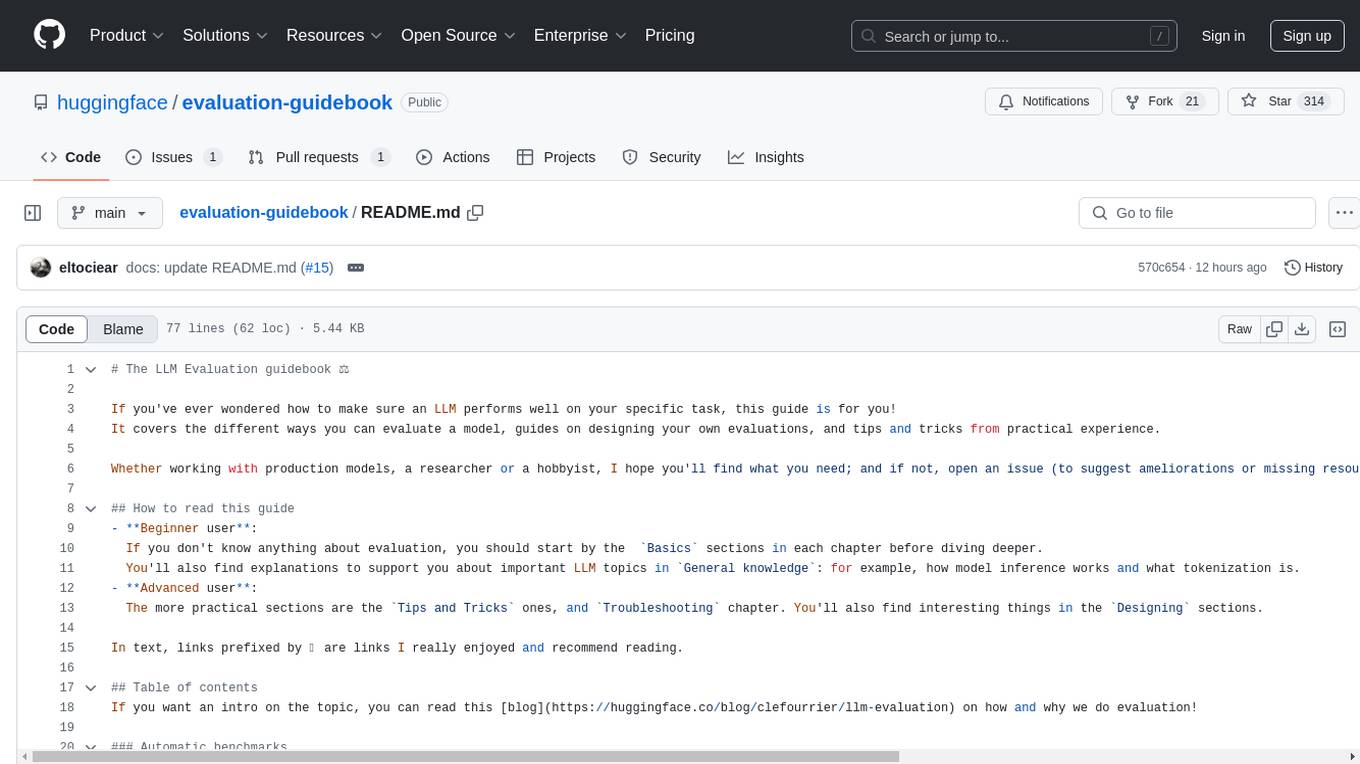

evaluation-guidebook

The LLM Evaluation guidebook provides comprehensive guidance on evaluating language model performance, including different evaluation methods, designing evaluations, and practical tips. It caters to both beginners and advanced users, offering insights on model inference, tokenization, and troubleshooting. The guide covers automatic benchmarks, human evaluation, LLM-as-a-judge scenarios, troubleshooting practicalities, and general knowledge on LLM basics. It also includes planned articles on automated benchmarks, evaluation importance, task-building considerations, and model comparison challenges. The resource is enriched with recommended links and acknowledgments to contributors and inspirations.

Second-Me

Second Me is an open-source prototype that allows users to craft their own AI self, preserving their identity, context, and interests. It is locally trained and hosted, yet globally connected, scaling intelligence across an AI network. It serves as an AI identity interface, fostering collaboration among AI selves and enabling the development of native AI apps. The tool prioritizes individuality and privacy, ensuring that user information and intelligence remain local and completely private.

For similar tasks

Awesome-LLM-RAG-Application

Awesome-LLM-RAG-Application is a repository that provides resources and information about applications based on Large Language Models (LLM) with Retrieval-Augmented Generation (RAG) pattern. It includes a survey paper, GitHub repo, and guides on advanced RAG techniques. The repository covers various aspects of RAG, including academic papers, evaluation benchmarks, downstream tasks, tools, and technologies. It also explores different frameworks, preprocessing tools, routing mechanisms, evaluation frameworks, embeddings, security guardrails, prompting tools, SQL enhancements, LLM deployment, observability tools, and more. The repository aims to offer comprehensive knowledge on RAG for readers interested in exploring and implementing LLM-based systems and products.

rag-cookbooks

Welcome to the comprehensive collection of advanced + agentic Retrieval-Augmented Generation (RAG) techniques. This repository covers the most effective advanced + agentic RAG techniques with clear implementations and explanations. It aims to provide a helpful resource for researchers and developers looking to use advanced RAG techniques in their projects, offering ready-to-use implementations and guidance on evaluation methods. The RAG framework addresses limitations of Large Language Models by using external documents for in-context learning, ensuring contextually relevant and accurate responses. The repository includes detailed descriptions of various RAG techniques, tools used, and implementation guidance for each technique.

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models. From images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking), and privacy harms (e.g., identity theft). The goal is to allow researchers to have a baseline of how well their model and entire inference pipeline is doing against different harm categories and to be able to compare that baseline to future iterations of their model. This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements.

tabby

Tabby is a self-hosted AI coding assistant, offering an open-source and on-premises alternative to GitHub Copilot. It boasts several key features:

- Self-contained, with no need for a DBMS or cloud service.

- OpenAPI interface, easy to integrate with existing infrastructure (e.g. Cloud IDE).

- Supports consumer-grade GPUs.

spear

SPEAR (Simulator for Photorealistic Embodied AI Research) is a powerful tool for training embodied agents. It features 300 unique virtual indoor environments with 2,566 unique rooms and 17,234 unique objects that can be manipulated individually. Each environment is designed by a professional artist and features detailed geometry, photorealistic materials, and a unique floor plan and object layout. SPEAR is implemented as Unreal Engine assets and provides an OpenAI Gym interface for interacting with the environments via Python.

Magick

Magick is a groundbreaking visual AIDE (Artificial Intelligence Development Environment) for no-code data pipelines and multimodal agents. Magick can connect to other services and comes with nodes and templates well-suited for intelligent agents, chatbots, complex reasoning systems and realistic characters.