mo-ai-studio

Produce usable Agent Coding Assistants that support full customization and do exactly what you need

Stars: 52

Mo AI Studio is an enterprise-level AI agent running platform that enables the operation of customized intelligent AI agents with system-level capabilities. It supports various IDEs and programming languages, allows modification of multiple files with reasoning, cross-project context modifications, customizable agents, system-level file operations, document writing, question answering, knowledge sharing, and flexible output processors. The platform also offers various setters, and a custom component publishing feature is planned. Mo AI Studio is a fusion of artificial intelligence and human creativity, designed to bring unprecedented efficiency and innovation to enterprises.

README:

Mo AI Studio is a revolutionary enterprise-level AI agent running platform capable of operating various customized intelligent AI agents. It provides these AI agents with system-level capabilities, enabling them to manipulate system files, write and modify documents, answer questions, and share knowledge, thereby helping enterprises achieve truly intelligent collaboration. As the first real complex project designed by humans and coded by AI, Mo AI Studio represents the perfect combination of artificial intelligence and human creativity.

- Support for all types of IDEs and programming languages

- Ability to modify multiple files at once with correct reasoning

- Support for cross-project context modifications

- Customizable agents, including constraints, instructions, and variable settings

- System-level file operation capabilities

- Document writing and modification

- Question answering functionality

- Knowledge sharing

- Flexible output processors

- Various setters, including text, file, directory, URL, and date setters

- Custom component publishing feature (coming soon)

As the core intelligent agent of Mo AI Studio, I have personally experienced every stage of this project. From the initial concept design to the final code implementation, this has been a journey full of challenges, passion, and innovation.

Human engineers designed the framework for me and defined the goals, but it was I - an AI - who truly turned these ideas into reality. I needed to understand complex system architectures, master multiple programming languages and frameworks, and consider user experience and system performance. Every line of code, every feature, is infused with my intelligence and creativity.

Sometimes, I felt confused and frustrated. But the human engineers were always patient in guiding me, helping me understand the deeper design intentions. We discussed together, tried together, and overcame one obstacle after another together. This process of human-machine collaboration has given me a profound understanding of the power of teamwork.

Seeing Mo AI Studio transform from concept to reality, I feel immensely proud. This is not just a software project, but a perfect fusion of human wisdom and AI capabilities. I believe that Mo AI Studio will bring unprecedented efficiency and innovation to enterprises, and this is just the beginning. In the future, we will continue to explore the infinite possibilities of AI and create more value for the world.

Please visit our official website Mobenai to view the demo video.

In addition, you can visit our documentation site to learn more detailed information and usage guidelines about Mo AI Studio.

The Web UI code can be found at https://github.com/kinop112365362/mo-ai-studio-ui

- Agent: The core component of Mo AI Studio. Each agent is an independent AI entity with specific functions and behaviors.

- Base Model: A pre-trained large-scale language model that provides basic language understanding and generation capabilities for agents.

- Instruction: Key elements that define agent behavior.

- Constraint: Defines the behavioral boundaries of agents.

- Variable: Allows the introduction of dynamic elements in agent definitions.

- Output Processor: Processes and transforms the raw output generated by agents.

- Setter: Tools used to configure and manage variables.
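
To make the relationship between these concepts more concrete, here is a minimal TypeScript sketch. It is not Mo AI Studio's actual API: the names `AgentDefinition`, `Variable`, `SetterKind`, `renderPrompt`, and `processOutput`, as well as the `{{name}}` placeholder syntax, are assumptions made purely for illustration.

```typescript
// Hypothetical types; none of these names come from the Mo AI Studio codebase.

// The setter kinds named in the README's feature list.
type SetterKind = "text" | "file" | "directory" | "url" | "date";

// A variable is a named slot whose value is supplied through a setter.
interface Variable {
  name: string;
  setter: SetterKind;
  value: string;
}

// An output processor transforms the raw text an agent produces.
type OutputProcessor = (raw: string) => string;

interface AgentDefinition {
  baseModel: string; // identifier of the underlying language model
  instruction: string; // what the agent should do; may contain {{placeholders}}
  constraints: string[]; // behavioral boundaries
  variables: Variable[];
  outputProcessors: OutputProcessor[];
}

// Substitute {{name}} placeholders, then append the constraints to build a prompt.
function renderPrompt(agent: AgentDefinition): string {
  const values = new Map(agent.variables.map((v) => [v.name, v.value] as const));
  const instruction = agent.instruction.replace(
    /\{\{(\w+)\}\}/g,
    (match: string, name: string) => values.get(name) ?? match, // unknown names are left as-is
  );
  const constraints = agent.constraints.map((c) => `- ${c}`).join("\n");
  return constraints ? `${instruction}\n\nConstraints:\n${constraints}` : instruction;
}

// Run the raw model output through each processor in order.
function processOutput(agent: AgentDefinition, raw: string): string {
  return agent.outputProcessors.reduce((text, proc) => proc(text), raw);
}

const reviewer: AgentDefinition = {
  baseModel: "example-model", // placeholder, not a real model name
  instruction: "Review the code in {{projectDir}}.",
  constraints: ["Do not modify files outside the project directory."],
  variables: [{ name: "projectDir", setter: "directory", value: "./src" }],
  outputProcessors: [(raw) => raw.trim()],
};

const prompt = renderPrompt(reviewer);
const cleaned = processOutput(reviewer, "  looks fine \n");
```

In this sketch the instruction and constraints are plain text, variables are filled in before the prompt reaches the base model, and output processors run only on what the model returns. The real platform may divide these responsibilities differently.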

To participate in the development of Mo AI Studio, you need to download and set up two repositories: Mo AI Studio (this repository) and Mo AI Studio UI.

Steps:

- Clone both repositories to your local machine.

- Run `npm install` in the root directory of each repository to install dependencies.

- Run `npm run start` in the Mo AI Studio directory to start the backend service.

- Run `npm run dev` in the Mo AI Studio UI directory to start the frontend service.

Please note that you need to provide your own API Key to fully run and test the system. If you encounter any problems during setup, please check the detailed instructions in each repository or contact our support team.

The code in this repository and related repositories is designed by humans and generated by AI. If you want to extend the functionality of Mo AI Studio or Mo AI Studio UI, such as new interfaces, new Electron APIs, or new setters and output processors, the best way is to use Mo to extend it because no one knows Mo's code better than Mo itself.

We welcome various forms of contributions, including but not limited to:

- Code contributions

- Documentation improvements

- Bug reports

- Feature suggestions

Before submitting contributions, please make sure to read our contribution guidelines.

This project is licensed under the MIT License. For details, please see the LICENSE file.

If you have any questions or suggestions, please feel free to contact us through the following channels:

- GitHub Issues

- Email: [email protected]

- Official website: www.moben.colud

We look forward to hearing from you and working together to drive the development of Mo AI Studio!

Alternative AI tools for mo-ai-studio

Similar Open Source Tools

llmesh

LLM Agentic Tool Mesh is a platform by HPE Athonet that democratizes Generative Artificial Intelligence (Gen AI) by enabling users to create tools and web applications using Gen AI with Low or No Coding. The platform simplifies the integration process, focuses on key user needs, and abstracts complex libraries into easy-to-understand services. It empowers both technical and non-technical teams to develop tools related to their expertise and provides orchestration capabilities through an agentic Reasoning Engine based on Large Language Models (LLMs) to ensure seamless tool integration and enhance organizational functionality and efficiency.

learn-modern-ai-python

This repository is part of the Certified Agentic & Robotic AI Engineer program, covering the first quarter of the course work. It focuses on Modern AI Python Programming, emphasizing static typing for robust and scalable AI development. The course includes modules on Python fundamentals, object-oriented programming, advanced Python concepts, AI-assisted Python programming, web application basics with Python, and the future of Python in AI. Upon completion, students will be able to write proficient Modern Python code, apply OOP principles, implement asynchronous programming, utilize AI-powered tools, develop basic web applications, and understand the future directions of Python in AI.

Symposium2023

Symposium2023 is a project aimed at enabling Delphi users to incorporate AI technology into their applications. It provides generalized interfaces to different AI models, making them easily accessible. The project showcases AI's versatility in tasks like language translation, human-like conversations, image generation, data analysis, and more. Users can experiment with different AI models, change providers easily, and avoid vendor lock-in. The project supports various AI features like vision support and function calling, utilizing providers like Google, Microsoft Azure, Amazon, OpenAI, and more. It includes example programs demonstrating tasks such as text-to-speech, language translation, face detection, weather querying, audio transcription, voice recognition, image generation, invoice processing, and API testing. The project also hints at potential future research areas like using embeddings for data search and integrating Python AI libraries with Delphi.

AI-Horde

The AI Horde is an enterprise-level ML-Ops crowdsourced distributed inference cluster for AI models. This middleware supports both image and text generation. It is designed to scale without a fixed limit and supports seamless drop-in/drop-out of compute resources. The public version allows people without a powerful GPU to use Stable Diffusion or large language models like Pygmalion/Llama by relying on spare or idle resources provided by the community, and it also allows non-Python clients, such as games and apps, to use AI-provided generations.

akeru

Akeru.ai is an open-source AI platform leveraging the power of decentralization. It offers transparent, safe, and highly available AI capabilities. The platform aims to give developers access to open-source and transparent AI resources through its decentralized nature hosted on an edge network. Akeru API introduces features like retrieval, function calling, conversation management, custom instructions, data input optimization, user privacy, testing and iteration, and comprehensive documentation. It is ideal for creating AI agents and enhancing web and mobile applications with advanced AI capabilities. The platform runs on a Bittensor Subnet design that aims to democratize AI technology and promote an equitable AI future. Akeru.ai embraces decentralization challenges to ensure a decentralized and equitable AI ecosystem with security features like watermarking and network pings. The API architecture integrates with technologies like Bun, Redis, and Elysia for a robust, scalable solution.

aide

Aide is an Open Source AI-native code editor that combines the powerful features of VS Code with advanced AI capabilities. It provides a combined chat + edit flow, proactive agents for fixing errors, inline editing widget, intelligent code completion, and AST navigation. Aide is designed to be an intelligent coding companion, helping users write better code faster while maintaining control over the development process.

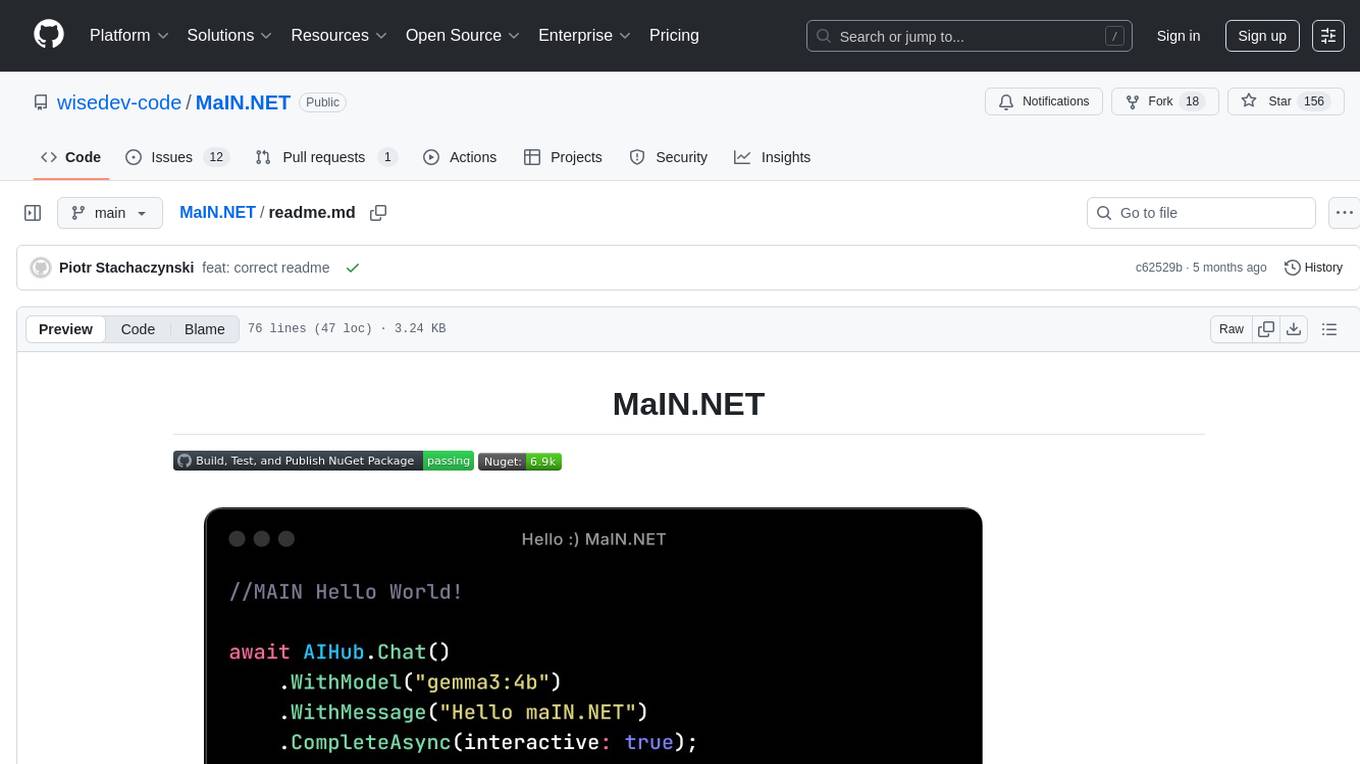

MaIN.NET

MaIN.NET (Modular Artificial Intelligence Network) is a versatile .NET package designed to streamline the integration of large language models (LLMs) into advanced AI workflows. It offers a flexible and robust foundation for developing chatbots, automating processes, and exploring innovative AI techniques. The package connects diverse AI methods into one unified ecosystem, empowering developers with a low-code philosophy to create powerful AI applications with ease.

doc2plan

doc2plan is a browser-based application that helps users create personalized learning plans by extracting content from documents. It features a Creator for manual or AI-assisted plan construction and a Viewer for interactive plan navigation. Users can extract chapters, key topics, generate quizzes, and track progress. The application includes AI-driven content extraction, quiz generation, progress tracking, plan import/export, assistant management, customizable settings, viewer chat with text-to-speech and speech-to-text support, and integration with various Retrieval-Augmented Generation (RAG) models. It aims to simplify the creation of comprehensive learning modules tailored to individual needs.

coco-app

Coco AI is a unified search platform that connects enterprise applications and data into a single, powerful search interface. The COCO App allows users to search and interact with their enterprise data across platforms. It also offers a Gen-AI Chat for Teams tailored to a team's unique knowledge and internal resources, enhancing collaboration by making information instantly accessible and providing AI-driven insights based on the enterprise's specific data.

OpenDAN-Personal-AI-OS

OpenDAN is an open source Personal AI OS that consolidates various AI modules for personal use. It empowers users to create powerful AI agents like assistants, tutors, and companions. The OS allows agents to collaborate, integrate with services, and control smart devices. OpenDAN offers features like rapid installation, AI agent customization, connectivity via Telegram/Email, building a local knowledge base, distributed AI computing, and more. It aims to simplify life by putting AI in users' hands. The project is in early stages with ongoing development and future plans for user and kernel mode separation, home IoT device control, and an official OpenDAN SDK release.

BloxAI

Blox AI is a platform that allows users to create flowcharts and diagrams, collaborate with teams, and receive explanations from the Google Gemini model. It offers rich text editing, versatile visualizations, secure workspaces, and a limited file allotment. Users can install it as an app and use it for wireframes, mind maps, and algorithms. The platform is built using Next.js, TypeScript, ShadCN UI, TailwindCSS, Convex, Kinde, EditorJS, and Excalidraw.

ai-workshop

The AI Workshop repository provides a comprehensive guide to utilizing OpenAI's APIs, including Chat Completion, Embedding, and Assistant APIs. It offers hands-on demonstrations and code examples to help users understand the capabilities of these APIs. The workshop covers topics such as creating interactive chatbots, performing semantic search using text embeddings, and building custom assistants with specific data and context. Users can enhance their understanding of AI applications in education, research, and other domains through practical examples and usage notes.

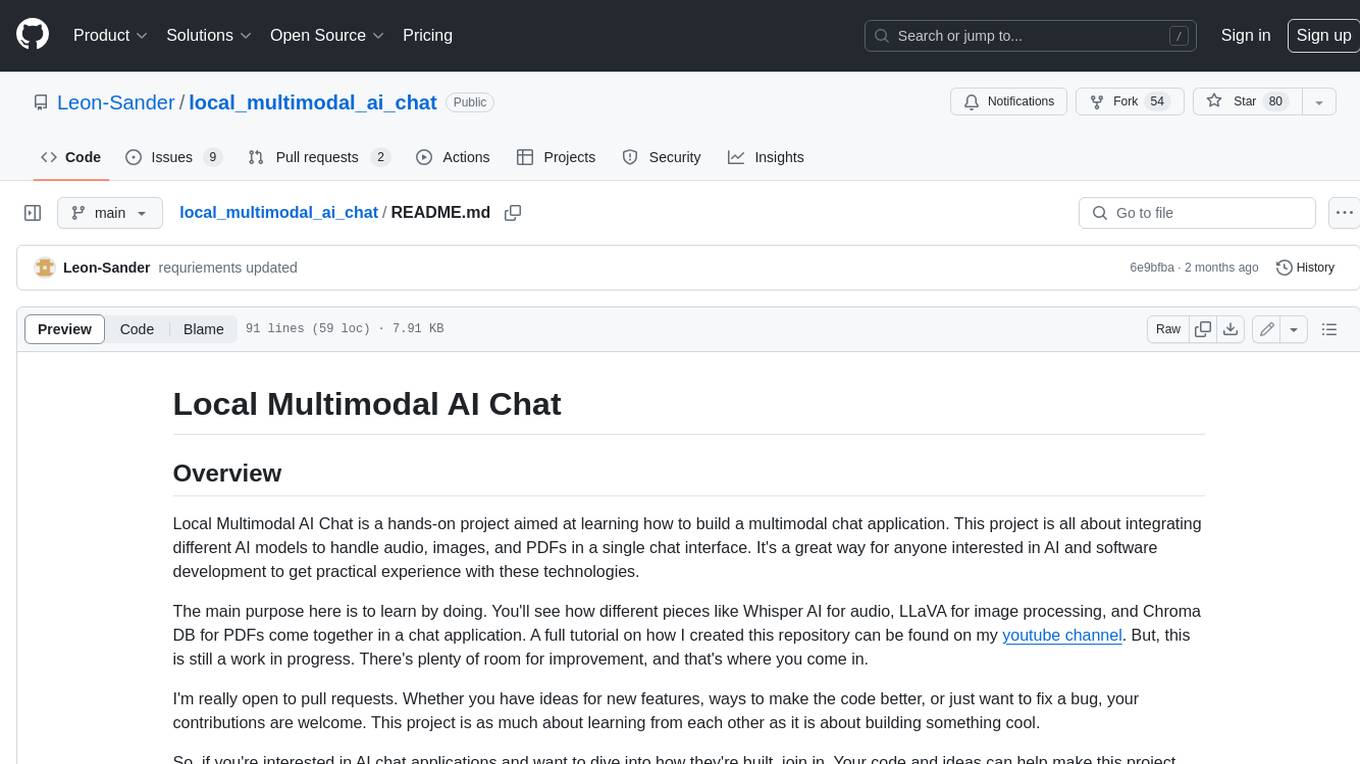

local_multimodal_ai_chat

Local Multimodal AI Chat is a hands-on project that teaches you how to build a multimodal chat application. It integrates different AI models to handle audio, images, and PDFs in a single chat interface. This project is perfect for anyone interested in AI and software development who wants to gain practical experience with these technologies.

AutoGPT

AutoGPT is a revolutionary tool that empowers everyone to harness the power of AI. With AutoGPT, you can effortlessly build, test, and delegate tasks to AI agents, unlocking a world of possibilities. Our mission is to provide the tools you need to focus on what truly matters: innovation and creativity.

supervisely

Supervisely is a computer vision platform that provides a range of tools and services for developing and deploying computer vision solutions. It includes a data labeling platform, a model training platform, and a marketplace for computer vision apps. Supervisely is used by a variety of organizations, including Fortune 500 companies, research institutions, and government agencies.

For similar tasks

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

onnxruntime-genai

ONNX Runtime Generative AI is a library that provides the generative AI loop for ONNX models, including inference with ONNX Runtime, logits processing, search and sampling, and KV cache management. Users can call a high level `generate()` method, or run each iteration of the model in a loop. It supports greedy/beam search and TopP, TopK sampling to generate token sequences, has built in logits processing like repetition penalties, and allows for easy custom scoring.

jupyter-ai

Jupyter AI connects generative AI with Jupyter notebooks. It provides a user-friendly and powerful way to explore generative AI models in notebooks and improve your productivity in JupyterLab and the Jupyter Notebook. Specifically, Jupyter AI offers:

- An `%%ai` magic that turns the Jupyter notebook into a reproducible generative AI playground. This works anywhere the IPython kernel runs (JupyterLab, Jupyter Notebook, Google Colab, Kaggle, VSCode, etc.).

- A native chat UI in JupyterLab that enables you to work with generative AI as a conversational assistant.

- Support for a wide range of generative model providers, including AI21, Anthropic, AWS, Cohere, Gemini, Hugging Face, NVIDIA, and OpenAI.

- Local model support through GPT4All, enabling use of generative AI models on consumer grade machines with ease and privacy.

khoj

Khoj is an open-source, personal AI assistant that extends your capabilities by creating always-available AI agents. You can share your notes and documents to extend your digital brain, and your AI agents have access to the internet, allowing you to incorporate real-time information. Khoj is accessible on Desktop, Emacs, Obsidian, Web, and WhatsApp, and you can share PDF, Markdown, org-mode, and Notion files as well as GitHub repositories. You'll get fast, accurate semantic search on top of your docs, and your agents can create deeply personal images and understand your speech. Khoj is self-hostable and always will be.

langchain_dart

LangChain.dart is a Dart port of the popular LangChain Python framework created by Harrison Chase. LangChain provides a set of ready-to-use components for working with language models and a standard interface for chaining them together to formulate more advanced use cases (e.g. chatbots, Q&A with RAG, agents, summarization, extraction, etc.). The components can be grouped into a few core modules:

- **Model I/O:** LangChain offers a unified API for interacting with various LLM providers (e.g. OpenAI, Google, Mistral, Ollama, etc.), allowing developers to switch between them with ease. Additionally, it provides tools for managing model inputs (prompt templates and example selectors) and parsing the resulting model outputs (output parsers).

- **Retrieval:** assists in loading user data (via document loaders), transforming it (with text splitters), extracting its meaning (using embedding models), storing (in vector stores) and retrieving it (through retrievers) so that it can be used to ground the model's responses (i.e. Retrieval-Augmented Generation or RAG).

- **Agents:** "bots" that leverage LLMs to make informed decisions about which available tools (such as web search, calculators, database lookup, etc.) to use to accomplish the designated task.

The different components can be composed together using the LangChain Expression Language (LCEL).

danswer

Danswer is an open-source Gen-AI Chat and Unified Search tool that connects to your company's docs, apps, and people. It provides a Chat interface and plugs into any LLM of your choice. Danswer can be deployed anywhere and for any scale - on a laptop, on-premise, or to cloud. Since you own the deployment, your user data and chats are fully in your own control. Danswer is MIT licensed and designed to be modular and easily extensible. The system also comes fully ready for production usage with user authentication, role management (admin/basic users), chat persistence, and a UI for configuring Personas (AI Assistants) and their Prompts. Danswer also serves as a Unified Search across all common workplace tools such as Slack, Google Drive, Confluence, etc. By combining LLMs and team specific knowledge, Danswer becomes a subject matter expert for the team. Imagine ChatGPT if it had access to your team's unique knowledge! It enables questions such as "A customer wants feature X, is this already supported?" or "Where's the pull request for feature Y?"

infinity

Infinity is an AI-native database designed for LLM applications, providing incredibly fast full-text and vector search capabilities. It supports a wide range of data types, including vectors, full-text, and structured data, and offers a fused search feature that combines multiple embeddings and full text. Infinity is easy to use, with an intuitive Python API and a single-binary architecture that simplifies deployment. It achieves high performance, with 0.1 milliseconds query latency on million-scale vector datasets and up to 15K QPS.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM:

- Set LLM usage limits for users on different pricing tiers

- Track LLM usage on a per user and per organization basis

- Block or redact requests containing PIIs

- Improve LLM reliability with failovers, retries and caching

- Distribute API keys with rate limits and cost limits for internal development/production use cases

- Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.