llm_benchmark

Stars: 514

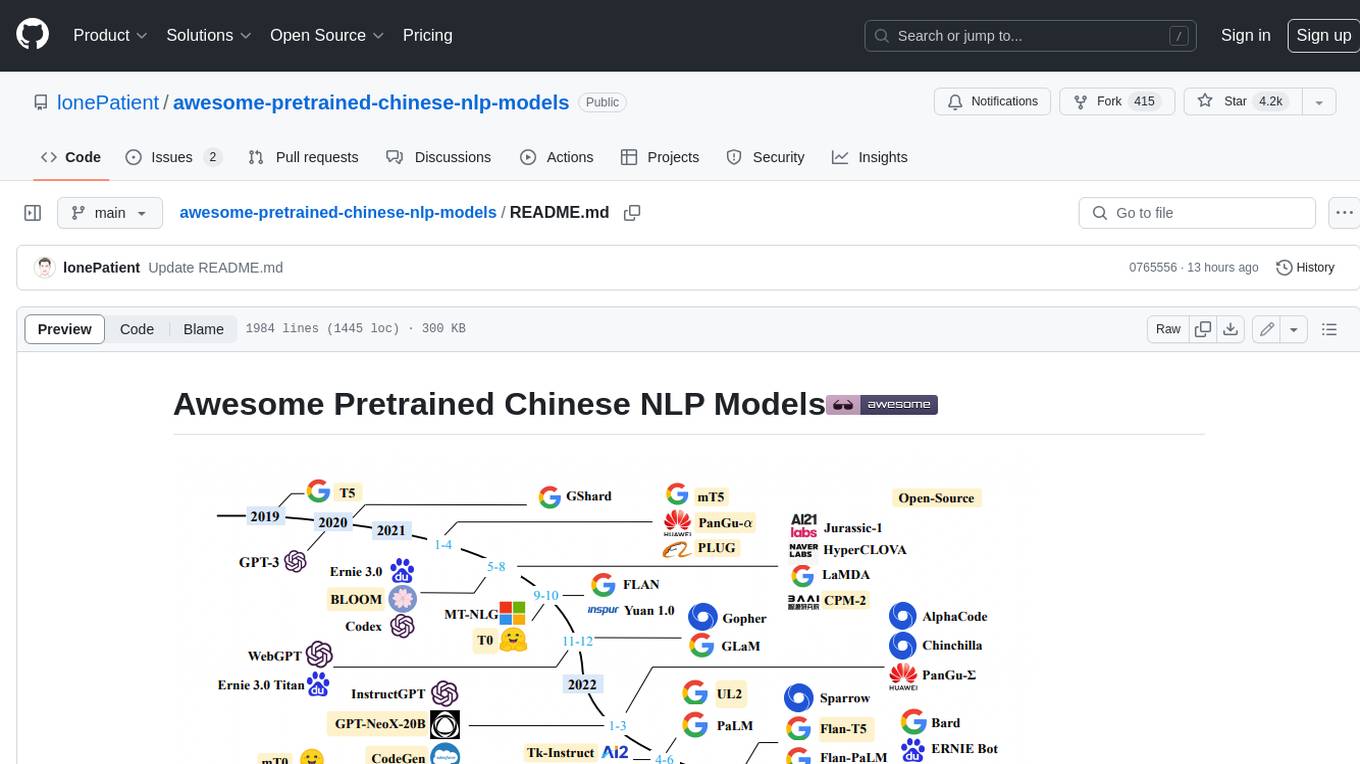

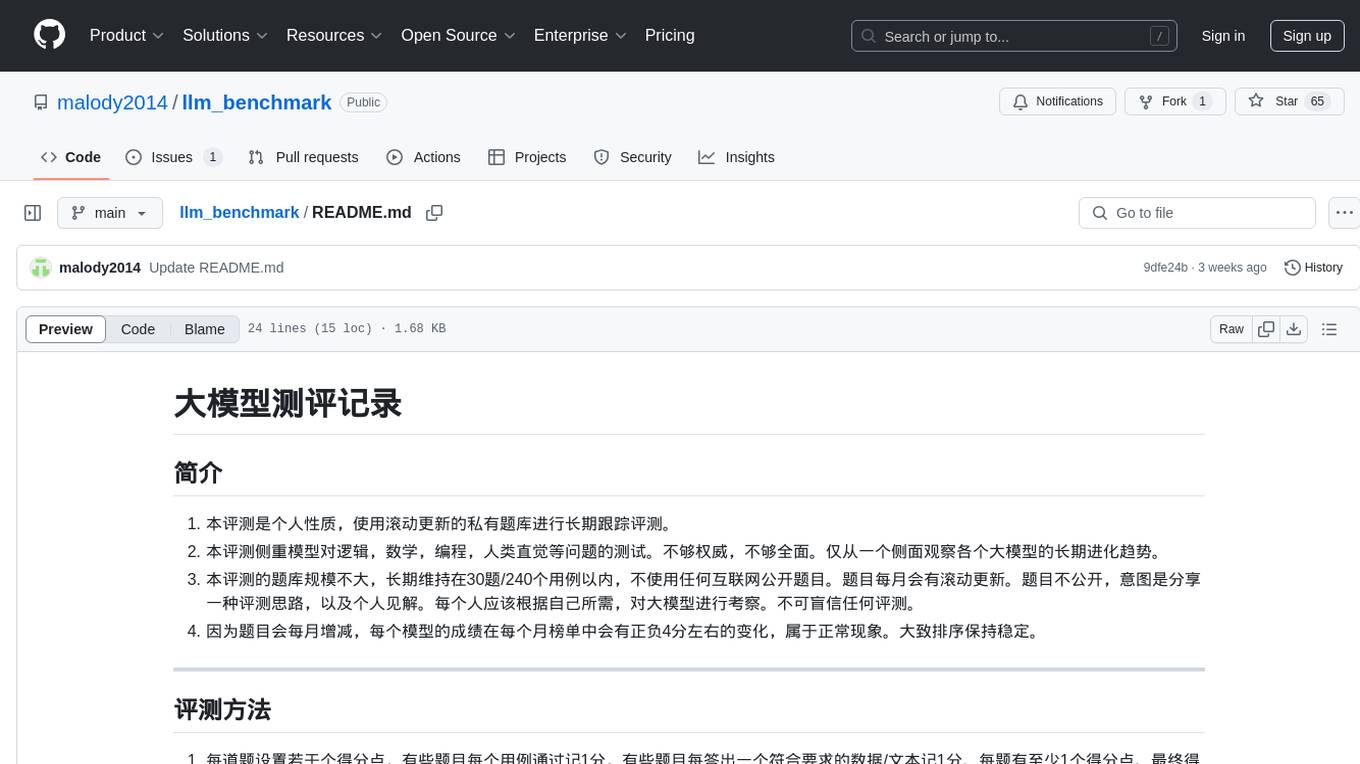

The 'llm_benchmark' repository is a personal evaluation project that tracks large models over time using a private, rolling question bank. It tests logic, mathematics, programming, and human intuition. The evaluation does not claim to be authoritative or comprehensive; it observes the long-term evolution of different large models from one angle. The question bank is small, kept within 30 questions/240 test cases, and uses no questions that are publicly available on the internet. Questions are rotated monthly and are not published; the intent is to share an evaluation approach and personal insights. Users should assess large models based on their own needs and not blindly trust any evaluation. Because the questions change, a model's score may shift by around +/-4 points from month to month, but the overall ranking remains stable.

README:

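- This is a personal evaluation that uses a private, rolling question bank for long-term tracking.

- It focuses on logic, mathematics, programming, and human-intuition problems. It is neither authoritative nor comprehensive; it only observes the long-term evolution of large models from one angle.

- The question bank is small, kept within 30 questions/240 test cases, and uses no questions that are publicly available on the internet. Questions are rotated every month. The questions are not published; the intent is to share an evaluation approach and personal views. Everyone should examine large models according to their own needs and should not blindly trust any evaluation.

- Because questions are added and removed every month, each model's score shifts by about ±4 points between monthly leaderboards, which is normal. The overall ranking stays roughly stable.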

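- Each question has several scoring points. For some questions, each passing test case earns 1 point; for others, each correct data item or piece of text earns 1 point. Every question has at least 1 scoring point. The final score for a question is the points earned divided by the total number of scoring points, multiplied by 10 (so each question is worth at most 10 points).

- The derivation must be correct for every question; a guessed answer earns nothing. Some questions have extra requirements: superfluous answers are penalized, to discourage enumeration.

- Answers must fully comply with the question's requirements. If a question explicitly forbids explanations, programming, and so on, and the answer contains an explanation or code, it scores 0 even if it is correct.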

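- All evaluations use the official API or are relayed through OpenRouter. If the vendor explicitly recommends a temperature, that value is used; otherwise the default temperature of 0.1 is used. Reasoning models are limited to a thinking length of 30K and an output length of 10K; for models where the two cannot be set separately, the total output is set to 40K. Non-reasoning models use an output length of 10K. If a model's supported MaxToken is below these limits, the model's own limit is used. All other parameters are left at their defaults. Some models that do not provide an API are tested through the official web chat. Each question is run 3 times and the highest score is taken.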
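The scoring rule described above (points earned divided by total scoring points, times 10, best of 3 runs) can be sketched as follows. This is a minimal illustration, not the author's actual scoring script; the function names are hypothetical, and how penalties for superfluous answers are applied is not specified in the README, so `points_earned` is assumed to be the count after any deductions.

```python
from typing import Sequence


def question_score(points_earned: float, total_points: int) -> float:
    """Score one run of one question on a 0-10 scale."""
    if total_points < 1:
        raise ValueError("every question has at least 1 scoring point")
    if points_earned > total_points:
        raise ValueError("cannot earn more than the available points")
    # Assumption: deductions never push a question below zero.
    return max(points_earned, 0) / total_points * 10


def best_of_runs(run_points: Sequence[float], total_points: int) -> float:
    """Each question is run several times (3 in the README); keep the best run."""
    return max(question_score(p, total_points) for p in run_points)


# A question with 8 scoring points, attempted 3 times.
print(best_of_runs([3, 6, 5], total_points=8))  # 7.5
```

Summing `best_of_runs` over all questions would give a raw total in which each question contributes at most 10 points.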

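Q2. Text summarization: read a text containing misleading information, extract the correct information, and output it in the required format

Q4. Rubik's Cube rotation: turn the cube according to the rules and determine the resulting colors

Q11. Island area: given a map in character form, compute the area of the islands

Q16. Plugin calling: given plugin descriptions, output the correct plugin call and parameters based on the text

Q20. Board game simulation: given the rules of a board game, determine each player's final state

Q22. Chained calculation: apply successive mathematical transformations to a number as instructed

Q24. Number pattern: given 2 examples, find the number transformation rule

Q27. Travel planning: given candidate destinations and costs, find the optimal solution under constraints

Q28. Symbol definition: redefine the meaning of mathematical symbols and evaluate an expression

Q29. Symbol recovery: the meanings of mathematical symbols are shuffled; given expressions, deduce each symbol's original meaning

Q30. Diary organization: read a long text and reorganize it under given conditions and multiple requirements; tests instruction following

Q31. Chessboard pattern: find the vertex of the largest-area isosceles triangle passing through 2 given points on a board

Q32. Sexagenary-cycle year: with some of the Heavenly Stems removed, determine the stem-branch name of a historical year

Q34. Subway transfer: given 13 lines and 160 stations, find the shortest transfer route from A to B

Q35. Jigsaw puzzle: given shape descriptions of 7 pieces, assemble the specified figure

Q37. Projection: given the projection views of a 3D object, find the volume of the corresponding cubes

Q38. Function intersection: given several functions, find all intersection points

Q39. Train ticketing: multiple trains and multiple passengers buying and refunding tickets; determine the final ticket sales state

Q40. Code tracing: given 100 lines of algorithm code and an input, derive the output by hand

Q41. Interleaved text reading: find the answer in several interleaved, mixed passages

Q42. Long-text summary: extract key data from a text and output a core summary

Q43. Target number: combine the given numbers with mathematical operations to reach the target number

Q44. Tool combination: given several tools, use them to produce the specified output

Q45. Programming: write Python code under complex constraints

Q46. Letter combination: find the words present in a sequence of letters

Q47. Advanced maze: a maze with false entrances and exits and complex paths

Q48. Character processing: process and count a text at the character level according to rules

Q49. Laser layout: place lasers in a 10x10 space so that the given constraints are satisfied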

| Model | Raw score | Raw median | Best score | Median score | Median gap | Change | Price (CNY/M tokens) | Avg. length (characters) | Usage cost (CNY) | Avg. time (s) | Release date |
|---|---|---|---|---|---|---|---|---|---|---|---|
| GPT-5(high) | 247.101 | 235.49 | 88.25 | 84.10 | 4.70% | - | ¥72.00 | 10115 | ¥41.18 | 308 | 25-08-07 |
| GPT-5 Mini(high) | 228.345 | 218.72 | 81.55 | 78.12 | 4.21% | - | ¥14.40 | 8227 | ¥8.24 | 172 | 25-08-07 |
| Grok4 | 221.408 | 209.41 | 79.07 | 74.79 | 5.42% | +35.2% | ¥108.00 | 1638 | ¥41.99 | 207 | 25-07-10 |
| Grok4 Fast(Think) | 206.669 | 189.24 | 73.81 | 67.59 | 8.43% | - | ¥3.60 | 587 | ¥1.55 | 67 | 25-09-19 |
| DeepSeek V3.1 Terminus(Think) | 197.008 | 161.07 | 70.36 | 57.52 | 18.24% | +2.8% | ¥12.00 | 28049 | ¥4.86 | 693 | 25-09-22 |
| DeepSeek V3.1(Think) | 191.582 | 151.57 | 68.42 | 54.13 | 20.88% | +14% | ¥12.00 | 33053 | ¥5.20 | 672 | 25-08-19 |
| gpt-oss-120b(high) | 177.739 | 149.82 | 63.48 | 53.51 | 15.71% | - | ¥3.60 | 16083 | ¥0.60 | 46 | 25-08-05 |
| Claude Sonnet 4(Think) | 177.66 | 134.50 | 63.45 | 48.04 | 24.29% | +16.2% | ¥108.00 | 8758 | ¥29.27 | 143 | 25-05-23 |
| Qwen3-235B-A22B-2507(Think) | 172.546 | 148.71 | 61.62 | 53.11 | 13.82% | +14.5% | ¥20.00 | 34589 | ¥11.29 | 426 | 25-07-25 |
| Gemini 2.5 Pro | 171.315 | 144.61 | 61.18 | 51.65 | 15.59% | +2% | ¥72.00 | 10136 | ¥22.43 | 90 | 25-06-05 |
| Doubao-Seed-1.6(Think) | 170.341 | 137.99 | 60.84 | 49.28 | 18.99% | - | ¥8.00 | 14708 | ¥2.48 | 262 | 25-06-11 |
| Qwen3-Max | 167.517 | 146.80 | 59.83 | 52.43 | 12.37% | +5.2% | ¥24.00 | 7894 | ¥2.92 | 144 | 25-09-24 |
| Gemini 2.5 Flash 0925(Think) | 163.574 | 125.06 | 58.42 | 44.67 | 23.54% | +26% | ¥18.00 | 9822 | ¥4.82 | 47 | 25-09-25 |
| Doubao-Seed-1.6-thinking-0715 | 162.945 | 138.07 | 58.19 | 49.31 | 15.27% | - | ¥8.00 | 21375 | ¥3.64 | 336 | 25-07-14 |
| Qwen3-Max-Preview | 159.149 | 118.52 | 56.84 | 42.33 | 25.53% | - | ¥24.00 | 5934 | ¥2.64 | 100 | 25-09-05 |
| Seed-OSS-36B | 156.288 | 120.89 | 55.82 | 43.17 | 22.65% | - | ¥4.00 | 25168 | ¥2.08 | 712 | 25-08-21 |
| Qwen3-Next-80B-A3B(Think) | 155.449 | 122.04 | 55.52 | 43.59 | 21.49% | - | ¥10.00 | 47809 | ¥7.85 | 420 | 25-09-12 |
| Qwen3-235B-A22B-2507 | 154.753 | 120.62 | 55.27 | 43.08 | 22.06% | +54% | ¥8.00 | 7671 | ¥1.11 | 224 | 25-07-21 |
| kimi-k2-0905-preview | 150.416 | 112.04 | 53.72 | 40.01 | 25.52% | +71% | ¥16.00 | 6832 | ¥1.83 | 261 | 25-09-05 |
| GLM-4.5(Think) | 148.449 | 105.30 | 53.02 | 37.61 | 29.07% | - | ¥8.00 | 29780 | ¥3.78 | 558 | 25-07-28 |
| Qwen3-30B-A3B-2507(Think) | 148.374 | 130.24 | 52.99 | 46.52 | 12.22% | +14.7% | ¥7.50 | 30570 | ¥3.37 | 156 | 25-07-29 |
| Qwen3-Next-80B-A3B | 146.955 | 107.57 | 52.48 | 38.42 | 26.80% | - | ¥4.00 | 9342 | ¥0.49 | 101 | 25-09-12 |
| Hunyuan T1 0711 | 143.833 | 113.60 | 51.37 | 40.57 | 21.02% | +29.9% | ¥4.00 | 28108 | ¥2.28 | 350 | 25-07-11 |
| GPT-5 Chat | 139.465 | 103.08 | 49.81 | 36.82 | 26.09% | - | ¥72.00 | 3117 | ¥3.93 | 17 | 25-08-07 |
| GLM-4.5-Air(Think) | 138.316 | 118.10 | 49.40 | 42.18 | 14.62% | - | ¥2.00 | 31147 | ¥0.98 | 280 | 25-07-28 |
| Claude Opus 4 | 136.415 | 112.65 | 48.72 | 40.23 | 17.42% | - | ¥540.00 | 1931 | ¥28.53 | 52 | 25-05-23 |
| gpt-oss-20b(high) | 136.033 | 107.12 | 48.58 | 38.26 | 21.25% | - | ¥1.44 | 26775 | ¥0.40 | 38 | 25-08-05 |
| Gemini 2.5 Flash(Think) | 129.735 | 89.43 | 46.33 | 31.94 | 31.06% | +1.9% | ¥18.00 | 13417 | ¥6.55 | 55 | 25-05-20 |
| Step-3 | 129.33 | 103.68 | 46.19 | 37.03 | 19.83% | - | ¥8.00 | 27091 | ¥3.43 | 777 | 25-07-31 |
| Qwen3-32B(Think) | 125.06 | 75.03 | 44.66 | 26.80 | 40.00% | - | ¥20.00 | 26689 | ¥10.26 | 558 | 25-04-29 |
| DeepSeek V3.1(Terminus) | 122.346 | 93.73 | 43.70 | 33.48 | 23.39% | +1.2% | ¥12.00 | 4290 | ¥0.87 | 114 | 25-09-22 |
| DeepSeek V3.1 | 120.888 | 97.88 | 43.17 | 34.96 | 19.04% | - | ¥12.00 | 4606 | ¥0.93 | 127 | 25-08-19 |
| SenseNova-V6.5-Pro | 120.123 | 86.03 | 42.90 | 30.73 | 28.38% | +12.9% | ¥9.00 | 29352 | ¥5.07 | 749 | 25-07-26 |
| MiniMax-M1 | 106.752 | 79.19 | 38.13 | 28.28 | 25.82% | - | ¥8.00 | 33678 | ¥4.35 | 1411 | 25-06-17 |
| GLM-4.5 | 106.198 | 85.05 | 37.93 | 30.38 | 19.91% | - | ¥8.00 | 6196 | ¥0.87 | 114 | 25-07-28 |
| Claude Sonnet 4 | 104.219 | 81.71 | 37.22 | 29.18 | 21.60% | +33.3% | ¥108.00 | 1107 | ¥2.92 | 17 | 25-05-23 |
| 讯飞星火X1 0725 | 102.652 | 79.25 | 36.66 | 28.30 | 22.80% | +91.1% | ¥12.00 | 15552 | ¥3.63 | 350 | 25-07-25 |
| 文心一言X1.1 | 100.114 | 85.70 | 35.76 | 30.61 | 14.39% | +9.9% | ¥4.00 | 13445 | ¥1.04 | 499 | 25-09-08 |
| Gemini 2.5 Flash 0925 | 93.017 | 70.91 | 33.22 | 25.33 | 23.76% | +19% | ¥18.00 | 7954 | ¥1.57 | 19 | 25-09-25 |
| Qwen3-30B-A3B-2507 | 92.68 | 74.23 | 33.10 | 26.51 | 19.90% | - | ¥3.00 | 8277 | ¥0.46 | 55 | 25-07-29 |
| ERNIE-4.5 Turbo | 90.888 | 68.33 | 32.46 | 24.40 | 24.82% | +55.6% | ¥3.20 | 3989 | ¥0.23 | 86 | 25-04-24 |
| Grok4 Fast | 83.152 | 65.22 | 29.70 | 23.29 | 21.56% | - | ¥3.60 | 2630 | ¥0.12 | 8 | 25-09-19 |
| Ling-flash-2.0 | 82.167 | 70.35 | 29.35 | 25.12 | 14.39% | - | ¥4.00 | 9624 | ¥0.70 | 165 | 25-09-18 |
| GLM-4.5-Air | 81.382 | 56.07 | 29.07 | 20.03 | 31.10% | - | ¥2.00 | 6833 | ¥0.22 | 65 | 25-07-28 |
| Gemini 2.5 Flash | 77.897 | 58.54 | 27.82 | 20.91 | 24.85% | -9.4% | ¥18.00 | 6947 | ¥1.47 | 21 | 25-05-20 |
| Llama4 Maverick | 77.151 | 67.01 | 27.55 | 23.93 | 13.15% | - | - | 2795 | - | 19 | 25-04-05 |
| Hunyuan TurboS 0716 | 75.177 | 59.20 | 26.85 | 21.14 | 21.25% | +29.9% | ¥2.00 | 7866 | ¥0.27 | 125 | 25-07-16 |
| ERNIE-4.5-300B-A47B | 72.995 | 55.82 | 26.07 | 19.94 | 23.52% | - | ¥3.20 | 3516 | ¥0.19 | 66 | 25-06-29 |
| Doubao-Seed-1.6 | 71.403 | 47.80 | 25.50 | 17.07 | 33.06% | +5.3% | ¥8.00 | 1269 | ¥0.20 | 29 | 25-06-11 |
| Qwen3-32B | 64.083 | 56.48 | 22.89 | 20.17 | 11.87% | - | ¥8.00 | 1253 | ¥0.19 | 25 | 25-04-29 |
| Magistral Medium 2506(Think) | 61.007 | 26.93 | 21.79 | 9.62 | 55.86% | - | ¥36.00 | 20468 | ¥10.92 | 288 | 25-06-08 |
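The README does not spell out how the normalized columns are derived, but the figures in the table appear consistent with a simple rescaling. The sketch below assumes a maximum raw score of 280 (28 listed questions at 10 points each) and a gap measured relative to the best raw score. Both are inferences from the table, not statements from the author, and `MAX_RAW`, `normalize`, and `median_gap` are hypothetical names.

```python
# Assumption: 28 questions x 10 points each; inferred from the table, not documented.
MAX_RAW = 28 * 10


def normalize(raw: float) -> float:
    """Rescale a raw total to a 0-100 scale."""
    return round(raw / MAX_RAW * 100, 2)


def median_gap(raw_best: float, raw_median: float) -> float:
    """Relative drop from the best-of-3 total to the median total, in percent."""
    return round((raw_best - raw_median) / raw_best * 100, 2)


# Checked against the GPT-5(high) row: 247.101 raw, 235.49 raw median.
print(normalize(247.101))           # 88.25
print(normalize(235.49))            # 84.1
print(median_gap(247.101, 235.49))  # 4.7
```

A small gap suggests a model answers consistently across repeated runs, while a large gap suggests its best run is not representative.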

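- Models that do not support adjusting the temperature: GPT-5(high), GPT-5 Mini(high), 文心一言X1.1

- Models using the recommended temperature of 0.3: GLM-4.5(Think), GLM-4.5-Air(Think)

- Models using the recommended temperature of 0.6: Qwen3-235B-A22B-2507(Think), kimi-k2-0905-preview, Qwen3-Next-80B-A3B(Think)

- Models using the recommended temperature of 0.7: Qwen3-30B-A3B-2507(Think), Step-3, Qwen3-Next-80B-A3B

- Models using the recommended temperature of 1.0: Doubao-Seed-1.6-thinking-0715, MiniMax-M1

- Models using the recommended temperature of 1.1: Seed-OSS-36B

- The overall leaderboard is updated in real time as new models are tested

- Scores are archived on the 25th of every month

- Detailed reviews of each model are first published on the author's Zhihu account (知乎主页) and on the WeChat official account 大模型观测员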
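The sampling settings described in the README (recommended temperature where one exists, otherwise 0.1; 30K thinking plus 10K output for reasoning models, 40K combined when they cannot be set separately, 10K for non-reasoning models, all capped by the model's own limit) can be sketched as a small lookup. This is an illustration under assumptions: "K" is taken as 1,000 tokens, the cap is applied to each budget independently, only a few models from the notes above are listed, and `Settings` and `settings_for` are hypothetical names rather than part of any real client library.

```python
from dataclasses import dataclass
from typing import Optional

DEFAULT_TEMPERATURE = 0.1
THINK_LIMIT = 30_000   # assumption: "30K" means 30,000 tokens
OUTPUT_LIMIT = 10_000  # assumption: "10K" means 10,000 tokens

# A subset of the per-model notes above.
RECOMMENDED_TEMPERATURE = {
    "GLM-4.5(Think)": 0.3,
    "kimi-k2-0905-preview": 0.6,
    "Step-3": 0.7,
    "MiniMax-M1": 1.0,
    "Seed-OSS-36B": 1.1,
}
NO_TEMPERATURE = {"GPT-5(high)", "GPT-5 Mini(high)", "文心一言X1.1"}


@dataclass
class Settings:
    temperature: Optional[float]    # None: the model does not accept a temperature
    thinking_tokens: Optional[int]  # None: no separate thinking budget
    output_tokens: int


def settings_for(model: str, reasoning: bool, separate_budgets: bool = True,
                 model_max_tokens: Optional[int] = None) -> Settings:
    if model in NO_TEMPERATURE:
        temperature = None
    else:
        temperature = RECOMMENDED_TEMPERATURE.get(model, DEFAULT_TEMPERATURE)

    if reasoning and separate_budgets:
        thinking, output = THINK_LIMIT, OUTPUT_LIMIT
    elif reasoning:
        thinking, output = None, THINK_LIMIT + OUTPUT_LIMIT
    else:
        thinking, output = None, OUTPUT_LIMIT

    # If the model supports fewer tokens than the target, use the model's limit.
    if model_max_tokens is not None:
        output = min(output, model_max_tokens)
        if thinking is not None:
            thinking = min(thinking, model_max_tokens)
    return Settings(temperature, thinking, output)


print(settings_for("Seed-OSS-36B", reasoning=True))
```

Keeping the rules in one function makes it easy to see which models deviate from the default temperature and why.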

Alternative AI tools for llm_benchmark

Similar Open Source Tools

Chinese-LLaMA-Alpaca-3

Chinese-LLaMA-Alpaca-3 is a project based on Llama-3, Meta's new-generation open-source large model. It is the third phase of the Chinese-LLaMA-Alpaca open-source large model series (after Phase 1 and Phase 2). The project open-sources the Chinese Llama-3 base model and the Chinese Llama-3-Instruct instruction fine-tuned model. These models are incrementally pre-trained on a large amount of Chinese data on top of the original Llama-3 and then fine-tuned on selected instruction data, which strengthens basic Chinese semantics and instruction understanding. Compared with the related second-generation models, they achieve significant performance improvements.

adata

AData is a free and open-source A-share database that focuses on transaction-related data. It provides comprehensive data on stocks, including basic information, market data, and sentiment analysis. AData is designed to be easy to use and integrate with other applications, making it a valuable tool for quantitative trading and AI training.

BlossomLM

BlossomLM is a series of open-source conversational large language models. This project aims to provide a high-quality general-purpose SFT dataset in both Chinese and English, making fine-tuning accessible while also providing pre-trained model weights. **Hint**: BlossomLM is a personal non-commercial project.

tokencost

Tokencost is a client-side tool for calculating the USD cost of using major Large Language Model (LLM) APIs by estimating the cost of prompts and completions. It helps track the latest price changes of major LLM providers, accurately count prompt tokens before sending OpenAI requests, and get the cost of a prompt or completion with a single function. Users can calculate prompt and completion costs for OpenAI requests, count tokens in prompts formatted as message lists or plain strings, and refer to a cost table with updated prices for various LLM models. The tool also supports callback handlers for LLM wrapper/framework libraries like LlamaIndex and Langchain.

Chinese-LLaMA-Alpaca-2

Chinese-LLaMA-Alpaca-2 is the second phase of the Chinese-LLaMA-Alpaca open-source project. It builds on Meta's Llama-2 and continues training on a large corpus of Chinese text, releasing both Chinese-LLaMA-2 base models and instruction-tuned Chinese-Alpaca-2 models. The models can be used for a variety of natural language processing tasks, including text generation, question answering, and machine translation, and they are open-source and available for anyone to use.

sanic-web

Sanic-Web is a lightweight, end-to-end, and easily customizable large model application project built on technologies such as Dify, Ollama & Vllm, Sanic, and Text2SQL. It provides a one-stop solution for developing large model applications, supporting graphical data-driven Q&A using ECharts, handling table-based Q&A with CSV files, and integrating with third-party RAG systems for general knowledge Q&A. As a lightweight framework, Sanic-Web enables rapid iteration and extension to facilitate the quick implementation of large model projects.

yudao-ui-admin-vue3

The yudao-ui-admin-vue3 repository is an open-source project focused on building a fast development platform for developers in China. It utilizes Vue3 and Element Plus to provide features such as configurable themes, internationalization, dynamic route permission generation, common component encapsulation, and rich examples. The project supports the latest front-end technologies like Vue3 and Vite4, and also includes tools like TypeScript, pinia, vueuse, vue-i18n, vue-router, unocss, iconify, and wangeditor. It offers a range of development tools and features for system functions, infrastructure, workflow management, payment systems, member centers, data reporting, e-commerce systems, WeChat public accounts, ERP systems, and CRM systems.

teaching-boyfriend-llm

The 'teaching-boyfriend-llm' repository contains study notes on LLM (Large Language Models) for the purpose of advancing towards AGI (Artificial General Intelligence). The notes are a collaborative effort towards understanding and implementing LLM technology.

indie-hacker-tools-plus

Indie Hacker Tools Plus is a curated repository of essential tools and technology stacks for independent developers. The repository aims to help developers enhance efficiency, save costs, and mitigate risks by using popular and validated tools. It provides a collection of tools recognized by the industry to empower developers with the most refined technical support. Developers can contribute by submitting articles, software, or resources through issues or pull requests.

carrot

The 'carrot' repository on GitHub provides a list of free and user-friendly ChatGPT mirror sites for easy access. The repository includes sponsored sites offering various GPT models and services. Users can find and share sites, report errors, and access stable and recommended sites for ChatGPT usage. The repository also includes a detailed list of ChatGPT sites, their features, and accessibility options, making it a valuable resource for ChatGPT users seeking free and unlimited GPT services.

Chinese-LLaMA-Alpaca

This project open sources the **Chinese LLaMA model and the Alpaca large model fine-tuned with instructions**, to further promote the open research of large models in the Chinese NLP community. These models **extend the Chinese vocabulary based on the original LLaMA** and use Chinese data for secondary pre-training, further enhancing the basic Chinese semantic understanding ability. At the same time, the Chinese Alpaca model further uses Chinese instruction data for fine-tuning, significantly improving the model's understanding and execution of instructions.

yudao-cloud

Yudao-cloud is an open-source project designed to provide a fast development platform for developers in China. It includes various system functions, infrastructure, member center, data reports, workflow, mall system, WeChat public account, CRM, ERP, etc. The project is based on Java backend with Spring Boot and Spring Cloud Alibaba microservices architecture. It supports multiple databases, message queues, authentication systems, dynamic menu loading, SaaS multi-tenant system, code generator, real-time communication, integration with third-party services like WeChat, Alipay, and more. The project is well-documented and follows the Alibaba Java development guidelines, ensuring clean code and architecture.

Awesome-AGI

Awesome-AGI is a curated list of resources related to Artificial General Intelligence (AGI), including models, pipelines, applications, and concepts. It provides a comprehensive overview of the current state of AGI research and development, covering various aspects such as model training, fine-tuning, deployment, and applications in different domains. The repository also includes resources on prompt engineering, RLHF, LLM vocabulary expansion, long text generation, hallucination mitigation, controllability and safety, and text detection. It serves as a valuable resource for researchers, practitioners, and anyone interested in the field of AGI.

ruoyi-vue-pro

The ruoyi-vue-pro repository is an open-source project that provides a comprehensive development platform with various functionalities such as system features, infrastructure, member center, data reports, workflow, payment system, mall system, ERP system, CRM system, and AI big model. It is built using Java backend with Spring Boot framework and Vue frontend with different versions like Vue3 with element-plus, Vue3 with vben(ant-design-vue), and Vue2 with element-ui. The project aims to offer a fast development platform for developers and enterprises, supporting features like dynamic menu loading, button-level access control, SaaS multi-tenancy, code generator, real-time communication, integration with third-party services like WeChat, Alipay, and cloud services, and more.

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models. From images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking), and privacy harms (e.g., identity theft). The goal is to allow researchers to have a baseline of how well their model and entire inference pipeline is doing against different harm categories and to be able to compare that baseline to future iterations of their model. This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements.

tabby

Tabby is a self-hosted AI coding assistant, offering an open-source and on-premises alternative to GitHub Copilot. It boasts several key features: it is self-contained, with no need for a DBMS or cloud service; it exposes an OpenAPI interface that is easy to integrate with existing infrastructure (e.g. Cloud IDE); and it supports consumer-grade GPUs.

spear

SPEAR (Simulator for Photorealistic Embodied AI Research) is a powerful tool for training embodied agents. It features 300 unique virtual indoor environments with 2,566 unique rooms and 17,234 unique objects that can be manipulated individually. Each environment is designed by a professional artist and features detailed geometry, photorealistic materials, and a unique floor plan and object layout. SPEAR is implemented as Unreal Engine assets and provides an OpenAI Gym interface for interacting with the environments via Python.

Magick

Magick is a groundbreaking visual AIDE (Artificial Intelligence Development Environment) for no-code data pipelines and multimodal agents. Magick can connect to other services and comes with nodes and templates well-suited for intelligent agents, chatbots, complex reasoning systems and realistic characters.