Spring-Broken-AI-Blog

A modern intelligent blog system built with Next.js 15, TypeScript, and AI, with an integrated AI assistant and RAG (retrieval-augmented generation).

Stars: 92

Spring Broken AI Blog is a modern intelligent blog system built on Next.js 15, TypeScript, and AI that integrates an AI assistant and RAG (retrieval-augmented generation). It features a modern frontend using Next.js with App Router and Turbopack, full-stack TypeScript support, headless components with shadcn/ui + Radix UI + Tailwind CSS, NextAuth.js v4 with a JWT authentication strategy, Prisma ORM with SQLite/PostgreSQL, ESLint + Prettier + Husky for code quality, and a responsive design. The AI highlights include an AI writing assistant based on the Kimi API, a vector index system using ChromaDB + Ollama for local vector storage, RAG chat for Q&A grounded in article content, AI completion for content continuation in the editor, AI recommendations for categories and tags, and streaming display of AI-generated content.

README:


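- ✅ Modern frontend: Next.js 15 + App Router + Turbopack
- ✅ Type safety: full-stack TypeScript support
- ✅ Headless components: shadcn/ui + Radix UI + Tailwind CSS
- ✅ Authentication: NextAuth.js v4 + JWT strategy
- ✅ Database: Prisma ORM + SQLite/PostgreSQL
- ✅ Code quality: ESLint + Prettier + Husky
- ✅ Responsive design: mobile-friendly interface
- 🤖 AI writing assistant: AI-assisted writing based on the Kimi API
- 🧠 Vector index system: local vector storage with ChromaDB + Ollama
- 🔍 RAG chat: Q&A based on article content
- ✨ AI completion: content continuation inside the editor
- 📝 Smart recommendations: AI-suggested categories and tags
- 💬 Streaming output: AI-generated content displayed in real time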

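- Next.js 15: full-stack React framework, using App Router + Turbopack
- TypeScript: static type checking
- React 18: UI library
- shadcn/ui: headless component library
- Radix UI: headless UI primitives
- Tailwind CSS: utility-first CSS framework
- Lucide React: modern icon library
- Novel: Notion-style editor
- react-markdown: Markdown rendering
- highlight.js: code highlighting
- Kimi API (Moonshot AI): AI chat and generation
- Ollama: local embedding generation (nomic-embed-text model)
- ChromaDB: vector database used for RAG retrieval
- OpenAI SDK: client used to call the OpenAI-compatible Kimi API
- Prisma 6.16.1: modern ORM
- SQLite: database for development and production
- Prisma Adapter: database adapter for NextAuth.js
- NextAuth.js v4: authentication library
- JWT: session management strategy
- bcryptjs: password hashing
- PM2: Node.js process manager
- Nginx: web server and reverse proxy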

Spring-Broken-AI-Blog/
├── src/
│   ├── app/  # Next.js App Router pages
│   │   ├── admin/  # Admin dashboard pages
│   │   │   ├── page.tsx  # Dashboard home
│   │   │   ├── posts/  # Post management
│   │   │   ├── categories/  # Category management
│   │   │   ├── tags/  # Tag management
│   │   │   ├── profile/  # Profile
│   │   │   └── settings/  # System settings
│   │   ├── login/  # Login page
│   │   ├── api/  # API routes
│   │   │   ├── auth/  # NextAuth.js API
│   │   │   ├── admin/  # Admin API
│   │   │   └── ai/  # AI feature API
│   │   ├── posts/[slug]/  # Post detail page
│   │   ├── category/[slug]/  # Category page
│   │   ├── globals.css  # Global styles
│   │   └── layout.tsx  # Root layout
│   ├── components/  # React components
│   │   ├── ui/  # shadcn/ui base components
│   │   ├── admin/  # Admin components
│   │   │   ├── ai-assistant.tsx  # AI writing assistant
│   │   │   ├── rag-chat.tsx  # RAG chat component
│   │   │   ├── post-editor.tsx  # Post editor
│   │   │   └── publish-dialog.tsx  # Publish dialog
│   │   ├── markdown/  # Markdown rendering components
│   │   ├── posts/  # Post display components
│   │   ├── providers/  # Context providers
│   │   └── layout/  # Layout components
│   ├── lib/  # Utilities and configuration
│   │   ├── auth.ts  # NextAuth.js configuration
│   │   ├── prisma.ts  # Prisma client
│   │   ├── utils.ts  # Utility functions
│   │   ├── ai/  # AI-related code
│   │   │   ├── client.ts  # AI client (Kimi + Ollama)
│   │   │   ├── prompts/  # AI prompts
│   │   │   └── rag.ts  # RAG implementation
│   │   ├── vector/  # Vector index
│   │   │   ├── chunker.ts  # Text chunking
│   │   │   ├── indexer.ts  # Index management
│   │   │   └── store.ts  # Vector store (ChromaDB)
│   │   └── editor/  # Editor-related code
│   │       ├── ai-completion-extension.ts
│   │       └── markdown-converter.ts
│   └── types/  # TypeScript type definitions
├── prisma/  # Prisma database configuration
│   ├── schema.prisma  # Database models
│   ├── seed.ts  # Database seed
│   └── dev.db  # SQLite database (development)
├── scripts/  # Utility scripts
│   ├── ai/  # AI service scripts
│   │   ├── start-ai.sh  # Start AI services (development)
│   │   └── stop-ai.sh  # Stop AI services (development)
│   └── README.md  # Script documentation
├── docs/  # Project documentation
├── public/  # Static assets
├── components.json  # shadcn/ui configuration
├── tailwind.config.ts  # Tailwind CSS configuration
├── middleware.ts  # Next.js middleware (route protection)
└── ecosystem.config.js  # PM2 configuration file
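The tree lists `middleware.ts` as the route-protection layer. The decision such a middleware has to make can be sketched as a pure function. The function names, the protected prefixes (`/admin`, `/api/admin`), and the redirect target `/login` are assumptions read off the directory layout, not the project's actual code.

```typescript
// Hypothetical prefixes, inferred from the directory tree (admin pages and admin API).
const PROTECTED_PREFIXES = ["/admin", "/api/admin"];

// Returns true when `pathname` is a protected prefix or lies beneath one.
function isProtectedPath(pathname: string): boolean {
  return PROTECTED_PREFIXES.some(
    (prefix) => pathname === prefix || pathname.indexOf(prefix + "/") === 0
  );
}

// Decides what a middleware could do with a request: let it through or send it to /login.
function resolveAccess(
  pathname: string,
  hasSession: boolean
): "allow" | "redirect:/login" {
  if (!isProtectedPath(pathname)) return "allow";
  return hasSession ? "allow" : "redirect:/login";
}
```

Matching on `prefix + "/"` rather than on the bare prefix keeps a public path such as `/administrator` from being treated as part of `/admin`.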

Node.js >= 18.0.0

npm >= 8.0.0

To use the AI features, install the following services:

# macOS
brew install ollama
# Linux
curl -fsSL https://ollama.com/install.sh | sh
# Start the Ollama service
ollama serve
# Pull the embedding model
ollama pull nomic-embed-text

# Using Docker
docker run -d --name chromadb -p 8000:8000 chromadb/chroma:latest
# Or run it directly with Python
pip install chromadb
chroma run --host localhost --port 8000

# 1. Clone the project
git clone <repository-url>
cd Spring-Broken-AI-Blog
# 2. Install dependencies
npm install
# 3. Configure environment variables
cp .env.example .env.local

Edit the .env.local file:

# Database configuration
DATABASE_URL="file:./prisma/dev.db"
# NextAuth configuration
NEXTAUTH_SECRET="your-secret-key-at-least-32-characters-long"
NEXTAUTH_URL="http://localhost:7777"
# Admin account (used when seeding)
ADMIN_USERNAME="admin"
ADMIN_PASSWORD="0919"
# AI configuration (optional)
KIMI_API_KEY="your-kimi-api-key"
KIMI_BASE_URL="https://api.moonshot.cn/v1"
KIMI_MODEL="moonshot-v1-32k"
# Ollama configuration (used for embedding generation)
OLLAMA_BASE_URL="http://localhost:11434"
OLLAMA_EMBEDDING_MODEL="nomic-embed-text"
# ChromaDB configuration (used for vector storage)
CHROMADB_HOST="localhost"
CHROMADB_PORT="8000"
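Since the AI variables are optional, code that reads them needs defaults and a way to tell that AI chat is unconfigured. The following is a minimal sketch of such a loader. The `AiEnvConfig` shape and the `loadAiEnv` name are hypothetical, and the fallback values are simply the sample values from the file above, not defaults confirmed by the project.

```typescript
// Shape of the AI-related settings (field names are illustrative, not the project's).
interface AiEnvConfig {
  kimiApiKey: string | null; // null means the AI chat features stay disabled
  kimiBaseUrl: string;
  kimiModel: string;
  ollamaBaseUrl: string;
  ollamaEmbeddingModel: string;
  chromaUrl: string;
}

type Env = { [key: string]: string | undefined };

// Hypothetical loader: falls back to the sample values when a variable is unset.
function loadAiEnv(env: Env): AiEnvConfig {
  const port = parseInt(env["CHROMADB_PORT"] || "8000", 10);
  if (isNaN(port) || port < 1 || port > 65535) {
    throw new Error("CHROMADB_PORT must be a port number between 1 and 65535");
  }
  const host = env["CHROMADB_HOST"] || "localhost";
  return {
    kimiApiKey: env["KIMI_API_KEY"] || null,
    kimiBaseUrl: env["KIMI_BASE_URL"] || "https://api.moonshot.cn/v1",
    kimiModel: env["KIMI_MODEL"] || "moonshot-v1-32k",
    ollamaBaseUrl: env["OLLAMA_BASE_URL"] || "http://localhost:11434",
    ollamaEmbeddingModel: env["OLLAMA_EMBEDDING_MODEL"] || "nomic-embed-text",
    chromaUrl: "http://" + host + ":" + port,
  };
}
```

In a Node.js process this would be called as `loadAiEnv(process.env)`. Taking the environment as a parameter keeps the function easy to test.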

# Generate the Prisma client
npm run db:generate
# Push the database schema
npm run db:push
# Seed the initial data
npm run db:seed

# Start the development server (port 7777)
npm run dev
# Open the app
# Site: http://localhost:7777
# Login: http://localhost:7777/login
# Admin: http://localhost:7777/admin

Username: admin
Password: 0919

# 1. Install dependencies
npm install
# 2. Configure environment variables
cp .env.example .env.local
# Edit .env.local to configure the database and NextAuth
# 3. Initialize the database
npm run db:generate && npm run db:push && npm run db:seed
# 4. Start the development server
npm run dev
# Open http://localhost:7777

# 1. Install dependencies
npm install
# 2. Install Ollama (macOS)
brew install ollama
# 3. Start the AI services
./scripts/ai/start-ai.sh
# 4. Configure environment variables
cp .env.example .env.local
# Edit .env.local and add your Kimi API key:
# KIMI_API_KEY="sk-your-key-here"
# 5. Initialize the database
npm run db:generate && npm run db:push && npm run db:seed
# 6. Start the development server
npm run dev
# Open http://localhost:7777

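Stop the AI services:

./scripts/ai/stop-ai.sh

The project provides the start-ai.sh and stop-ai.sh scripts to manage the AI services:

- start-ai.sh: automatically starts Ollama (embedding generation) and ChromaDB (vector storage)
- stop-ai.sh: stops all AI services

Notes:

- The first run of start-ai.sh automatically downloads the nomic-embed-text model (about 274 MB)
- A Kimi API key is required to use the AI chat features
- For a detailed startup guide and troubleshooting, see 启动指南.md (the startup guide)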

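The project enforces strict coding standards to maintain code quality:

# Lint
npm run lint
# Type check
npm run type-check
# Format code
npm run format
# Build the project
npm run build

# Development
npm run dev # Start the development server (port 7777)
npm run build # Build for production
npm run start # Start the production server (port 3000)

# Database
npm run db:generate # Generate the Prisma client
npm run db:push # Push the schema to the database
npm run db:migrate # Create a migration
npm run db:seed # Seed the database
npm run db:studio # Open Prisma Studio
npm run db:reset # Reset the database

# PM2 management
npm run pm2:start # Start the PM2 process
npm run pm2:restart # Restart the PM2 process
npm run pm2:stop # Stop the PM2 process
npm run pm2:delete # Delete the PM2 process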

import { getAIClient } from "@/lib/ai/client";

// Get the client instance
const aiClient = getAIClient();

// Non-streaming chat
const response = await aiClient.chat([{ role: "user", content: "Hello" }]);
console.log(response.content);

// Streaming chat
await aiClient.chatStream(
  [{ role: "user", content: "Write an article" }],
  {},
  (chunk) => {
    console.log(chunk); // printed as each chunk arrives
  }
);
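A UI usually needs the text accumulated so far rather than each chunk on its own. Here is a minimal sketch of a collector whose `onChunk` could be passed as the third argument of `chatStream`. The `createChunkCollector` helper is hypothetical, and it assumes each chunk arrives as a plain string, which the snippet above suggests but does not confirm.

```typescript
// Collects streamed chunks so the caller can render the partial text after each one.
function createChunkCollector(onUpdate?: (textSoFar: string) => void) {
  const chunks: string[] = [];
  return {
    // Callback for the stream; safe to pass detached because it closes over `chunks`.
    onChunk(chunk: string): void {
      chunks.push(chunk);
      if (onUpdate) onUpdate(chunks.join(""));
    },
    // Full text received so far.
    text(): string {
      return chunks.join("");
    },
  };
}
```

With the client shown above, usage might look like `await aiClient.chatStream(messages, {}, collector.onChunk)`, followed by `collector.text()` once the stream ends.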

import { indexPost, indexAllPosts } from "@/lib/vector/indexer";

// Index a single post
await indexPost("post-id");

// Force re-indexing
await indexPost("post-id", { force: true });

// Index all posts in bulk
const result = await indexAllPosts({ force: true });
console.log(
  `Indexed: ${result.indexed}, skipped: ${result.skipped}, failed: ${result.failed}`
);
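The `skipped` count and the `force` option suggest that unchanged posts are not re-indexed. How the project detects changes is not stated here. One common approach, sketched below as an assumption, is to store a hash of the content at indexing time and compare it on the next run. `contentHash` and `decideIndexing` are hypothetical names.

```typescript
// Small non-cryptographic string hash (djb2), used only to detect content changes.
function contentHash(text: string): string {
  let h = 5381;
  for (let i = 0; i < text.length; i++) {
    h = ((h << 5) + h + text.charCodeAt(i)) | 0; // h * 33 + char code, kept in 32 bits
  }
  return (h >>> 0).toString(16);
}

type IndexDecision = "index" | "skip";

// Hypothetical rule: index when forced, when never indexed, or when the content changed.
function decideIndexing(
  content: string,
  storedHash: string | null,
  options: { force?: boolean } = {}
): IndexDecision {
  if (options.force) return "index";
  if (storedHash === null) return "index";
  return storedHash === contentHash(content) ? "skip" : "index";
}
```

A cryptographic hash such as SHA-256 would be the safer choice in production. djb2 is used here only to keep the sketch dependency-free.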

import { ragChat } from "@/lib/ai/rag";

// Ask a question using RAG
const answer = await ragChat("How do I use Next.js?");

Create a .env.production file:

# Database configuration (production)
DATABASE_URL="file:./prisma/prod.db"
# NextAuth configuration
NEXTAUTH_SECRET="your-production-secret-key-at-least-32-characters"
NEXTAUTH_URL="http://your-domain.com"
# AI configuration (optional)
KIMI_API_KEY="your-kimi-api-key"
KIMI_BASE_URL="https://api.moonshot.cn/v1"
KIMI_MODEL="moonshot-v1-32k"
# Ollama configuration
OLLAMA_BASE_URL="http://localhost:11434"
OLLAMA_EMBEDDING_MODEL="nomic-embed-text"
# ChromaDB configuration
CHROMADB_HOST="localhost"
CHROMADB_PORT="8000"

# Build the project
npm run build
# Start with PM2
npm run pm2:start
# Or start directly
npm start

# Full deployment flow (build + database setup)
npm run deploy:setup:prod
# Build only
npm run deploy:build

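- Location: src/components/admin/ai-assistant.tsx
- Function: AI-assisted writing based on the Kimi API
- Features:
  - Multiple writing modes: continue, expand, polish, summarize
  - Streaming output that shows generated content in real time
  - Support for custom prompts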
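The writing modes and the custom-prompt option can be pictured as a small message builder. This is a sketch under assumptions: the instruction strings are invented for illustration (the tree lists src/lib/ai/prompts/ for the project's own prompts), and `buildWritingMessages` is a hypothetical name.

```typescript
type WritingMode = "continue" | "expand" | "polish" | "summarize";

interface ChatMessage {
  role: "system" | "user";
  content: string;
}

// Illustrative instructions per mode; not the project's actual prompts.
const MODE_INSTRUCTIONS: { [M in WritingMode]: string } = {
  continue: "Continue the text in the same voice.",
  expand: "Expand the text with more detail and examples.",
  polish: "Polish the wording without changing the meaning.",
  summarize: "Summarize the text in a few sentences.",
};

// Builds the messages for one request; a non-empty custom prompt replaces the mode instruction.
function buildWritingMessages(
  mode: WritingMode,
  text: string,
  customPrompt?: string
): ChatMessage[] {
  const custom = customPrompt ? customPrompt.trim() : "";
  const instruction = custom || MODE_INSTRUCTIONS[mode];
  return [
    { role: "system", content: instruction },
    { role: "user", content: text },
  ];
}
```

The resulting array has the same `{ role, content }` shape that the `chat` and `chatStream` calls shown earlier accept.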

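- Location: src/components/admin/rag-chat.tsx
- Function: Q&A based on article content
- Techniques:
  - Vector retrieval: ChromaDB + Ollama embeddings
  - Semantic chunking: smart text-chunking algorithm
  - Context injection: retrieved results are injected into the prompt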
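Retrieval followed by context injection can be sketched in a few lines. In the project the similarity search is delegated to ChromaDB. The in-memory `topK` below is only a stand-in to show the idea, and the prompt wording in `buildRagPrompt` is an assumption.

```typescript
interface Chunk {
  id: string;
  text: string;
  embedding: number[];
}

// Cosine similarity between two vectors of equal length (0 when either is all zeros).
function cosineSimilarity(a: number[], b: number[]): number {
  if (a.length !== b.length) throw new Error("Vectors must have the same length");
  let dot = 0;
  let normA = 0;
  let normB = 0;
  for (let i = 0; i < a.length; i++) {
    dot += a[i] * b[i];
    normA += a[i] * a[i];
    normB += b[i] * b[i];
  }
  if (normA === 0 || normB === 0) return 0;
  return dot / (Math.sqrt(normA) * Math.sqrt(normB));
}

// In-memory stand-in for the vector store query: the k chunks most similar to the query.
function topK(query: number[], chunks: Chunk[], k: number): Chunk[] {
  return chunks
    .map((chunk) => ({ chunk, score: cosineSimilarity(query, chunk.embedding) }))
    .sort((x, y) => y.score - x.score)
    .slice(0, k)
    .map((entry) => entry.chunk);
}

// Context injection: number the retrieved chunks and place them ahead of the question.
function buildRagPrompt(question: string, retrieved: Chunk[]): string {
  const context = retrieved.map((c, i) => "[" + (i + 1) + "] " + c.text).join("\n");
  return (
    "Answer using only the context below.\n\nContext:\n" +
    context +
    "\n\nQuestion: " +
    question
  );
}
```

Numbering the chunks lets the model, and the UI, point back to the source passage used for an answer.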

- Location: src/lib/editor/ai-completion-extension.ts
- Function: content continuation inside the editor
- Implementation: a ProseMirror-based editor extension

- Location: src/components/admin/publish-dialog/
- Function: AI-suggested categories and tags
- Implementation: NLP analysis of the article content
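If the recommendation is obtained by asking the model for structured output, the reply has to be parsed defensively before it reaches the publish dialog. The sketch below assumes a JSON reply of the form `{"category": "...", "tags": [...]}`. That format, the `maxTags` limit, and the `parseRecommendation` name are all hypothetical.

```typescript
interface Recommendation {
  category: string | null;
  tags: string[];
}

// Parses a model reply expected to contain {"category": "...", "tags": ["...", ...]}.
// Anything malformed degrades to an empty recommendation instead of throwing.
function parseRecommendation(reply: string, maxTags: number = 5): Recommendation {
  const empty: Recommendation = { category: null, tags: [] };
  const start = reply.indexOf("{");
  const end = reply.lastIndexOf("}");
  if (start === -1 || end <= start) return empty;
  let data: unknown;
  try {
    data = JSON.parse(reply.slice(start, end + 1));
  } catch (e) {
    return empty;
  }
  if (typeof data !== "object" || data === null) return empty;
  const record = data as { [key: string]: unknown };
  const category =
    typeof record["category"] === "string" ? (record["category"] as string).trim() : "";
  const rawTags = Array.isArray(record["tags"]) ? (record["tags"] as unknown[]) : [];
  const tags: string[] = [];
  for (const t of rawTags) {
    if (typeof t !== "string") continue;
    const tag = t.trim();
    // Keep non-empty, de-duplicated tags up to the limit.
    if (tag && tags.indexOf(tag) === -1 && tags.length < maxTags) tags.push(tag);
  }
  return { category: category || null, tags };
}
```

Slicing from the first `{` to the last `}` tolerates replies that wrap the JSON in extra prose.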

- Location: src/lib/vector/
- Function: vectorized storage of article content
- Components:
  - chunker.ts: smart text chunking
  - indexer.ts: index management
  - store.ts: ChromaDB storage
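The chunking step decides what each stored vector represents. The project's chunker.ts is described as smart chunking, and its algorithm is not shown here. The sketch below is a simpler stand-in that packs paragraphs into chunks and falls back to overlapping windows for oversized paragraphs. `chunkText` and its default sizes are assumptions.

```typescript
// Splits text into chunks of at most `maxChars`, preferring paragraph boundaries.
// Paragraphs longer than `maxChars` are cut into windows overlapping by `overlap` characters.
function chunkText(text: string, maxChars: number = 500, overlap: number = 50): string[] {
  if (maxChars <= 0 || overlap < 0 || overlap >= maxChars) {
    throw new Error("Require maxChars > 0 and 0 <= overlap < maxChars");
  }
  const paragraphs = text
    .split(/\n\s*\n/)
    .map((p) => p.trim())
    .filter((p) => p.length > 0);
  const chunks: string[] = [];
  let current = "";
  const flush = (): void => {
    if (current) chunks.push(current);
    current = "";
  };
  for (const para of paragraphs) {
    if (para.length > maxChars) {
      flush();
      // Sliding window over an oversized paragraph.
      for (let start = 0; start < para.length; start += maxChars - overlap) {
        chunks.push(para.slice(start, start + maxChars));
        if (start + maxChars >= para.length) break;
      }
    } else if (current && current.length + 2 + para.length > maxChars) {
      // The paragraph does not fit into the current chunk: start a new one.
      flush();
      current = para;
    } else {
      current = current ? current + "\n\n" + para : para;
    }
  }
  flush();
  return chunks;
}
```

The overlap keeps a sentence that straddles a window boundary retrievable from either side, at the cost of storing a few characters twice.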

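- Startup guide - starting the AI services
- Image management guide - managing image assets
- Deployment guide - production deployment
- Building an AI-powered blog from scratch - technical write-up of the project

- Kimi (recommended): https://platform.moonshot.cn/
- DeepSeek: https://platform.deepseek.com/
- Tongyi Qianwen: https://dashscope.aliyun.com/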

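A: Make sure the following are in place:

- The Ollama service is running: ollama serve
- The ChromaDB service is running: chroma run --host localhost --port 8000
- The environment variable is configured: KIMI_API_KEY is set in .env.local

A: Check:

- Whether the Ollama service is running
- Whether the model has been pulled: ollama pull nomic-embed-text
- Whether the ChromaDB service has been started
- The error logs in the console

A: Try:

# Reset the database
npm run db:reset
# Or delete it manually and regenerate
rm prisma/dev.db
npm run db:generate
npm run db:push
npm run db:seed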

Spring Broken AI Blog - empower your writing with AI and make your blog smarter ✨

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for Spring-Broken-AI-Blog

Similar Open Source Tools

Spring-Broken-AI-Blog

Spring Broken AI Blog is a modern intelligent blog system built on Next.js 15 + TypeScript + AI, integrating AI assistant and RAG (retrieval-augmented generation) functions. It features modern frontend using Next.js with App Router and Turbopack, full-stack TypeScript support, headless components with shadcn/ui + Radix UI + Tailwind CSS, NextAuth.js v4 + JWT authentication strategy, Prisma ORM with SQLite/PostgreSQL, ESLint + Prettier + Husky for code quality, and responsive design. The AI highlights include AI writing assistant based on Kimi API, vector index system using ChromaDB + Ollama for local vector storage, RAG chat function for intelligent Q&A based on article content, AI completion feature for smart content continuation in the editor, AI recommendations for automatic category and tag suggestions, and real-time display of AI-generated content.

adnify

Adnify is an advanced code editor with ultimate visual experience and deep integration of AI Agent. It goes beyond traditional IDEs, featuring Cyberpunk glass morphism design style and a powerful AI Agent supporting full automation from code generation to file operations.

MarkMap-OpenAi-ChatGpt

MarkMap-OpenAi-ChatGpt is a Vue.js-based mind map generation tool that allows users to generate mind maps by entering titles or content. The application integrates the markmap-lib and markmap-view libraries, supports visualizing mind maps, and provides functions for zooming and adapting the map to the screen. Users can also export the generated mind map in PNG, SVG, JPEG, and other formats. This project is suitable for quickly organizing ideas, study notes, project planning, etc. By simply entering content, users can get an intuitive mind map that can be continuously expanded, downloaded, and shared.

TrainPPTAgent

TrainPPTAgent is an AI-based intelligent presentation generation tool. Users can input a topic and the system will automatically generate a well-structured and content-rich PPT outline and page-by-page content. The project adopts a front-end and back-end separation architecture: the front-end is responsible for interaction, outline editing, and template selection, while the back-end leverages large language models (LLM) and reinforcement learning (GRPO) to complete content generation and optimization, making the generated PPT more tailored to user goals.

MahoShojo-Generator

MahoShojo-Generator is a web-based AI structured generation tool that allows players to create personalized and evolving magical girls (or quirky characters) and related roles. It offers exciting cyber battles, storytelling activities, and even a ranking feature. The project also includes AI multi-channel polling, user system, public data card sharing, and sensitive word detection. It supports various functionalities such as character generation, arena system, growth and social interaction, cloud and sharing, and other features like scenario generation, tavern ecosystem linkage, and content safety measures.

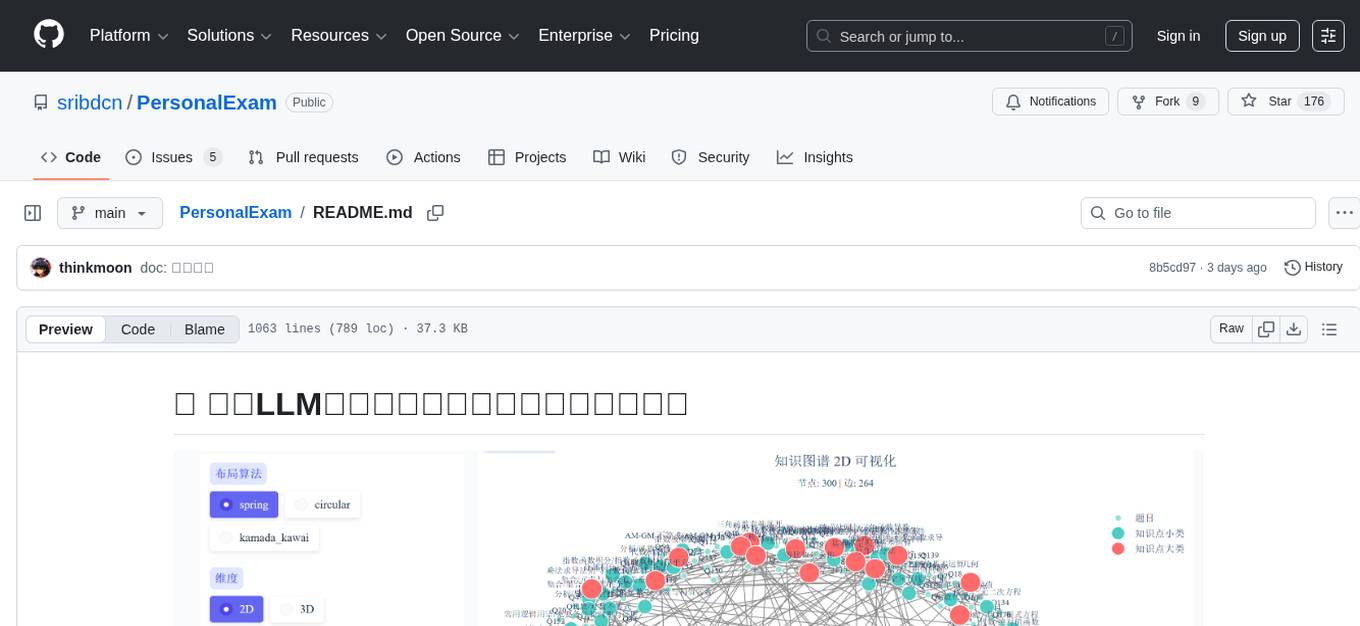

PersonalExam

PersonalExam is a personalized question generation system based on LLM and knowledge graph collaboration. It utilizes the BKT algorithm, RAG engine, and OpenPangu model to achieve personalized intelligent question generation and recommendation. The system features adaptive question recommendation, fine-grained knowledge tracking, AI answer evaluation, student profiling, visual reports, interactive knowledge graph, user management, and system monitoring.

AI-CloudOps

AI+CloudOps is a cloud-native operations management platform designed for enterprises. It aims to integrate artificial intelligence technology with cloud-native practices to significantly improve the efficiency and level of operations work. The platform offers features such as AIOps for monitoring data analysis and alerts, multi-dimensional permission management, visual CMDB for resource management, efficient ticketing system, deep integration with Prometheus for real-time monitoring, and unified Kubernetes management for cluster optimization.

DocTranslator

DocTranslator is a document translation tool that supports various file formats, compatible with OpenAI format API, and offers batch operations and multi-threading support. Whether for individual users or enterprise teams, DocTranslator helps efficiently complete document translation tasks. It supports formats like txt, markdown, word, csv, excel, pdf (non-scanned), and ppt for AI translation. The tool is deployed using Docker for easy setup and usage.

NGCBot

NGCBot is a WeChat bot based on the HOOK mechanism, supporting scheduled push of security news from FreeBuf, Xianzhi, Anquanke, and Qianxin Attack and Defense Community, KFC copywriting, filing query, phone number attribution query, WHOIS information query, constellation query, weather query, fishing calendar, Weibei threat intelligence query, beautiful videos, beautiful pictures, and help menu. It supports point functions, automatic pulling of people, ad detection, automatic mass sending, Ai replies, rich customization, and easy for beginners to use. The project is open-source and periodically maintained, with additional features such as Ai (Gpt, Xinghuo, Qianfan), keyword invitation to groups, automatic mass sending, and group welcome messages.

Lim-Code

LimCode is a powerful VS Code AI programming assistant that supports multiple AI models, intelligent tool invocation, and modular architecture. It features support for various AI channels, a smart tool system for code manipulation, MCP protocol support for external tool extension, intelligent context management, session management, and more. Users can install LimCode from the plugin store or via VSIX, or build it from the source code. The tool offers a rich set of features for AI programming and code manipulation within the VS Code environment.

prompt-optimizer

Prompt Optimizer is a powerful AI prompt optimization tool that helps you write better AI prompts, improving AI output quality. It supports both web application and Chrome extension usage. The tool features intelligent optimization for prompt words, real-time testing to compare before and after optimization, integration with multiple mainstream AI models, client-side processing for security, encrypted local storage for data privacy, responsive design for user experience, and more.

aitii-tekisuto

aitii-tekisuto is a unified technical documentation platform for AD domain control and data communication networks, covering architecture design, deployment, security hardening, and daily operation and maintenance. It helps you quickly build and maintain a stable and reliable enterprise network environment. The project uses MkDocs Material to provide a modern documentation site experience, integrating article content encryption functionality and smooth page transition animations for sensitive documents' security protection and optimized user browsing experience.

AutoGLM-GUI

AutoGLM-GUI is an AI-driven Android automation productivity tool that supports scheduled tasks, remote deployment, and 24/7 AI assistance. It features core functionalities such as deploying to servers, scheduling tasks, and creating an AI automation assistant. The tool enhances productivity by automating repetitive tasks, managing multiple devices, and providing a layered agent mode for complex task planning and execution. It also supports real-time screen preview, direct device control, and zero-configuration deployment. Users can easily download the tool for Windows, macOS, and Linux systems, and can also install it via Python package. The tool is suitable for various use cases such as server automation, batch device management, development testing, and personal productivity enhancement.

NovelForge

NovelForge is an AI-assisted writing tool with the potential for creating long-form content of millions of words. It offers a solution that combines world-building, structured content generation, and consistency maintenance. The tool is built around four core concepts: modular 'cards', customizable 'dynamic output models', flexible 'context injection', and consistency assurance through a 'knowledge graph'. It provides a highly structured and configurable writing environment, inspired by the Snowflake Method, allowing users to create and organize their content in a tree-like structure. NovelForge is highly customizable and extensible, allowing users to tailor their writing workflow to their specific needs.

LLMAI-writer

LLMAI-writer is a powerful AI tool for assisting in novel writing, utilizing state-of-the-art large language models to help writers brainstorm, plan, and create novels. Whether you are an experienced writer or a beginner, LLMAI-writer can help you efficiently complete the writing process.

For similar tasks

Spring-Broken-AI-Blog

Spring Broken AI Blog is a modern intelligent blog system built on Next.js 15 + TypeScript + AI, integrating AI assistant and RAG (retrieval-augmented generation) functions. It features modern frontend using Next.js with App Router and Turbopack, full-stack TypeScript support, headless components with shadcn/ui + Radix UI + Tailwind CSS, NextAuth.js v4 + JWT authentication strategy, Prisma ORM with SQLite/PostgreSQL, ESLint + Prettier + Husky for code quality, and responsive design. The AI highlights include AI writing assistant based on Kimi API, vector index system using ChromaDB + Ollama for local vector storage, RAG chat function for intelligent Q&A based on article content, AI completion feature for smart content continuation in the editor, AI recommendations for automatic category and tag suggestions, and real-time display of AI-generated content.

doc2plan

doc2plan is a browser-based application that helps users create personalized learning plans by extracting content from documents. It features a Creator for manual or AI-assisted plan construction and a Viewer for interactive plan navigation. Users can extract chapters, key topics, generate quizzes, and track progress. The application includes AI-driven content extraction, quiz generation, progress tracking, plan import/export, assistant management, customizable settings, viewer chat with text-to-speech and speech-to-text support, and integration with various Retrieval-Augmented Generation (RAG) models. It aims to simplify the creation of comprehensive learning modules tailored to individual needs.

RooFlow

RooFlow is a VS Code extension that enhances AI-assisted development by providing persistent project context and optimized mode interactions. It reduces token consumption and streamlines workflow by integrating Architect, Code, Test, Debug, and Ask modes. The tool simplifies setup, offers real-time updates, and provides clearer instructions through YAML-based rule files. It includes components like Memory Bank, System Prompts, VS Code Integration, and Real-time Updates. Users can install RooFlow by downloading specific files, placing them in the project structure, and running an insert-variables script. They can then start a chat, select a mode, interact with Roo, and use the 'Update Memory Bank' command for synchronization. The Memory Bank structure includes files for active context, decision log, product context, progress tracking, and system patterns. RooFlow features persistent context, real-time updates, mode collaboration, and reduced token consumption.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

lollms

LoLLMs Server is a text generation server based on large language models. It provides a Flask-based API for generating text using various pre-trained language models. This server is designed to be easy to install and use, allowing developers to integrate powerful text generation capabilities into their applications.

carrot

The 'carrot' repository on GitHub provides a list of free and user-friendly ChatGPT mirror sites for easy access. The repository includes sponsored sites offering various GPT models and services. Users can find and share sites, report errors, and access stable and recommended sites for ChatGPT usage. The repository also includes a detailed list of ChatGPT sites, their features, and accessibility options, making it a valuable resource for ChatGPT users seeking free and unlimited GPT services.

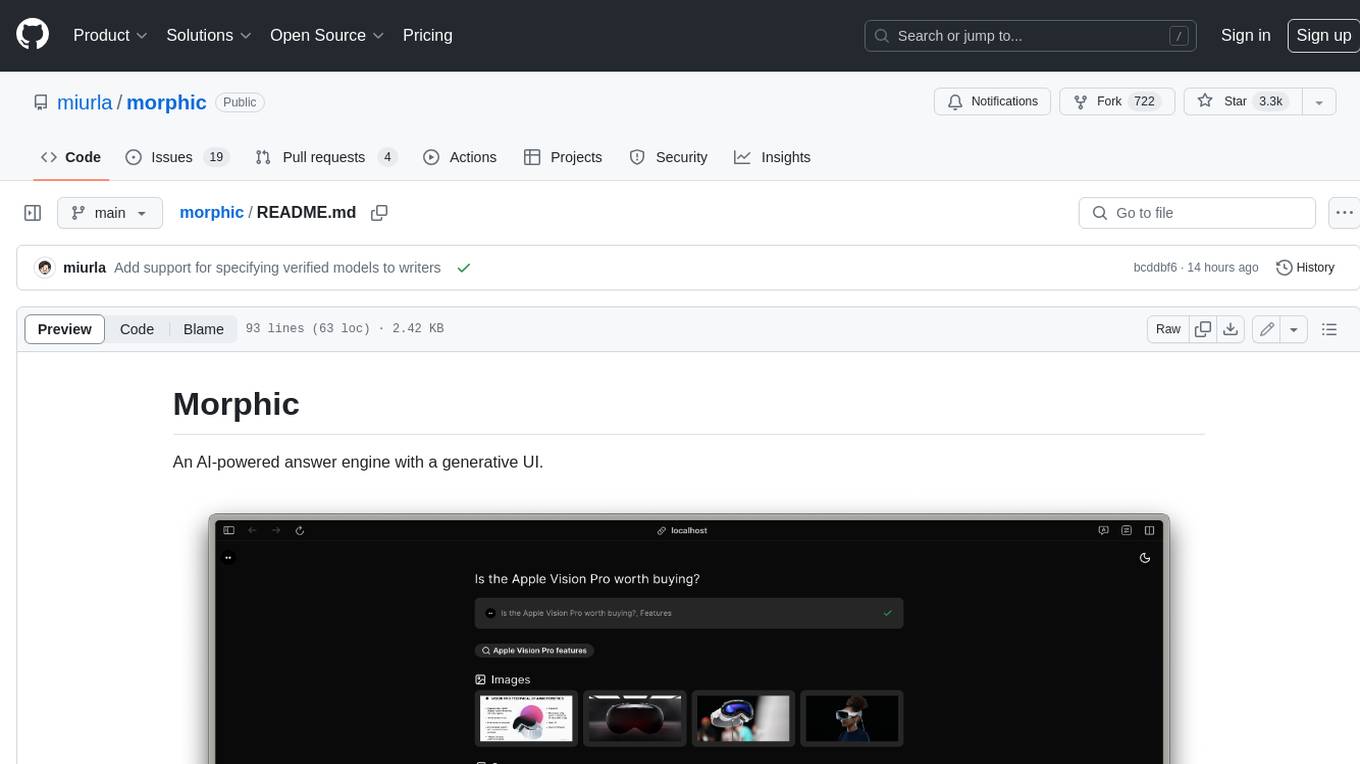

morphic

Morphic is an AI-powered answer engine with a generative UI. It utilizes a stack of Next.js, Vercel AI SDK, OpenAI, Tavily AI, shadcn/ui, Radix UI, and Tailwind CSS. To get started, fork and clone the repo, install dependencies, fill out secrets in the .env.local file, and run the app locally using 'bun dev'. You can also deploy your own live version of Morphic with Vercel. Verified models that can be specified to writers include Groq, LLaMA3 8b, and LLaMA3 70b.

llm

This repository contains a collection of experiments with Large Language Models (LLMs). The experiments explore various applications of LLMs, including text generation, question answering, and code generation. The repository also includes a setup guide and instructions on how to use the experiments.

For similar jobs

promptflow

**Prompt flow** is a suite of development tools designed to streamline the end-to-end development cycle of LLM-based AI applications, from ideation, prototyping, testing, evaluation to production deployment and monitoring. It makes prompt engineering much easier and enables you to build LLM apps with production quality.

deepeval

DeepEval is a simple-to-use, open-source LLM evaluation framework specialized for unit testing LLM outputs. It incorporates various metrics such as G-Eval, hallucination, answer relevancy, RAGAS, etc., and runs locally on your machine for evaluation. It provides a wide range of ready-to-use evaluation metrics, allows for creating custom metrics, integrates with any CI/CD environment, and enables benchmarking LLMs on popular benchmarks. DeepEval is designed for evaluating RAG and fine-tuning applications, helping users optimize hyperparameters, prevent prompt drifting, and transition from OpenAI to hosting their own Llama2 with confidence.

MegaDetector

MegaDetector is an AI model that identifies animals, people, and vehicles in camera trap images (which also makes it useful for eliminating blank images). This model is trained on several million images from a variety of ecosystems. MegaDetector is just one of many tools that aims to make conservation biologists more efficient with AI. If you want to learn about other ways to use AI to accelerate camera trap workflows, check out our of the field, affectionately titled "Everything I know about machine learning and camera traps".

leapfrogai

LeapfrogAI is a self-hosted AI platform designed to be deployed in air-gapped resource-constrained environments. It brings sophisticated AI solutions to these environments by hosting all the necessary components of an AI stack, including vector databases, model backends, API, and UI. LeapfrogAI's API closely matches that of OpenAI, allowing tools built for OpenAI/ChatGPT to function seamlessly with a LeapfrogAI backend. It provides several backends for various use cases, including llama-cpp-python, whisper, text-embeddings, and vllm. LeapfrogAI leverages Chainguard's apko to harden base python images, ensuring the latest supported Python versions are used by the other components of the stack. The LeapfrogAI SDK provides a standard set of protobuffs and python utilities for implementing backends and gRPC. LeapfrogAI offers UI options for common use-cases like chat, summarization, and transcription. It can be deployed and run locally via UDS and Kubernetes, built out using Zarf packages. LeapfrogAI is supported by a community of users and contributors, including Defense Unicorns, Beast Code, Chainguard, Exovera, Hypergiant, Pulze, SOSi, United States Navy, United States Air Force, and United States Space Force.

llava-docker

This Docker image for LLaVA (Large Language and Vision Assistant) provides a convenient way to run LLaVA locally or on RunPod. LLaVA is a powerful AI tool that combines natural language processing and computer vision capabilities. With this Docker image, you can easily access LLaVA's functionalities for various tasks, including image captioning, visual question answering, text summarization, and more. The image comes pre-installed with LLaVA v1.2.0, Torch 2.1.2, xformers 0.0.23.post1, and other necessary dependencies. You can customize the model used by setting the MODEL environment variable. The image also includes a Jupyter Lab environment for interactive development and exploration. Overall, this Docker image offers a comprehensive and user-friendly platform for leveraging LLaVA's capabilities.

carrot

The 'carrot' repository on GitHub provides a list of free and user-friendly ChatGPT mirror sites for easy access. The repository includes sponsored sites offering various GPT models and services. Users can find and share sites, report errors, and access stable and recommended sites for ChatGPT usage. The repository also includes a detailed list of ChatGPT sites, their features, and accessibility options, making it a valuable resource for ChatGPT users seeking free and unlimited GPT services.

TrustLLM

TrustLLM is a comprehensive study of trustworthiness in LLMs, including principles for different dimensions of trustworthiness, established benchmark, evaluation, and analysis of trustworthiness for mainstream LLMs, and discussion of open challenges and future directions. Specifically, we first propose a set of principles for trustworthy LLMs that span eight different dimensions. Based on these principles, we further establish a benchmark across six dimensions including truthfulness, safety, fairness, robustness, privacy, and machine ethics. We then present a study evaluating 16 mainstream LLMs in TrustLLM, consisting of over 30 datasets. The document explains how to use the trustllm python package to help you assess the performance of your LLM in trustworthiness more quickly. For more details about TrustLLM, please refer to project website.

AI-YinMei

AI-YinMei is an AI virtual anchor Vtuber development tool (N card version). It supports fastgpt knowledge base chat dialogue, a complete set of solutions for LLM large language models: [fastgpt] + [one-api] + [Xinference], supports docking bilibili live broadcast barrage reply and entering live broadcast welcome speech, supports Microsoft edge-tts speech synthesis, supports Bert-VITS2 speech synthesis, supports GPT-SoVITS speech synthesis, supports expression control Vtuber Studio, supports painting stable-diffusion-webui output OBS live broadcast room, supports painting picture pornography public-NSFW-y-distinguish, supports search and image search service duckduckgo (requires magic Internet access), supports image search service Baidu image search (no magic Internet access), supports AI reply chat box [html plug-in], supports AI singing Auto-Convert-Music, supports playlist [html plug-in], supports dancing function, supports expression video playback, supports head touching action, supports gift smashing action, supports singing automatic start dancing function, chat and singing automatic cycle swing action, supports multi scene switching, background music switching, day and night automatic switching scene, supports open singing and painting, let AI automatically judge the content.