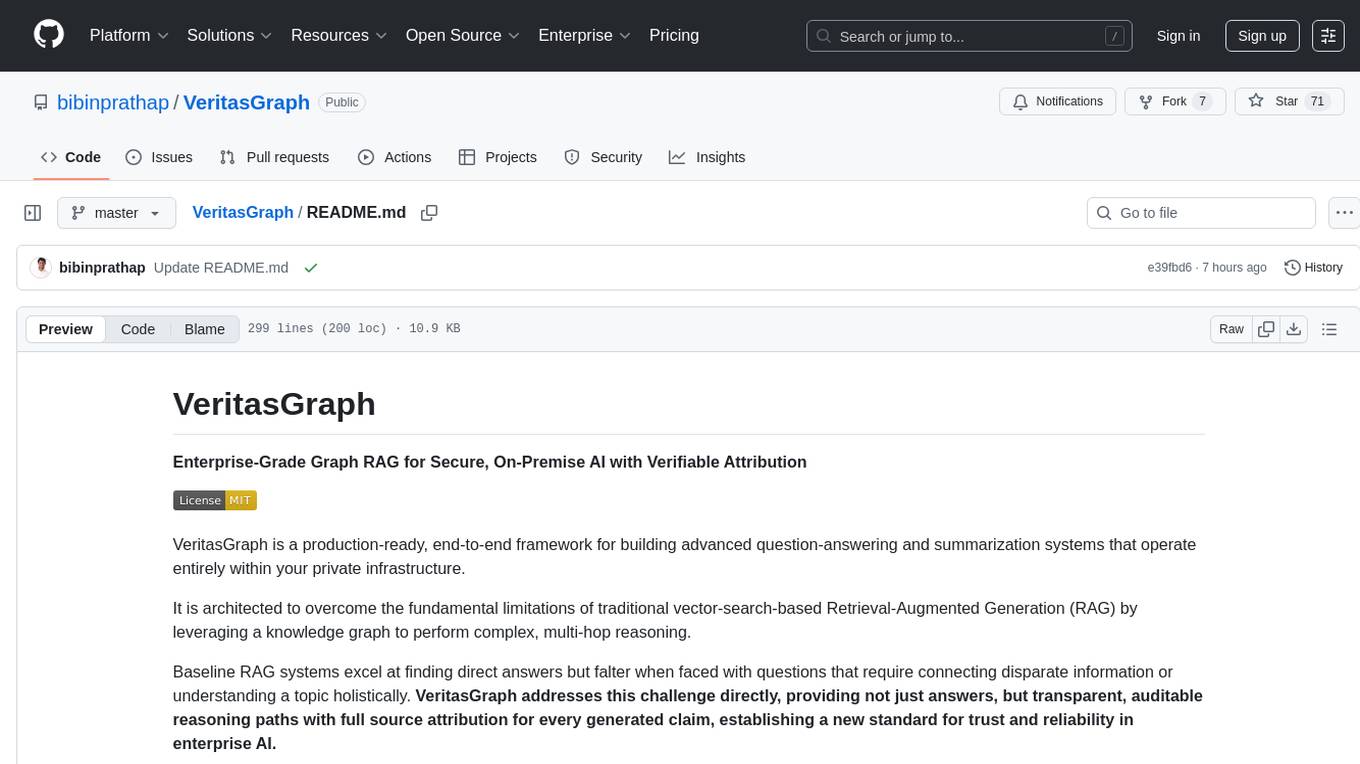

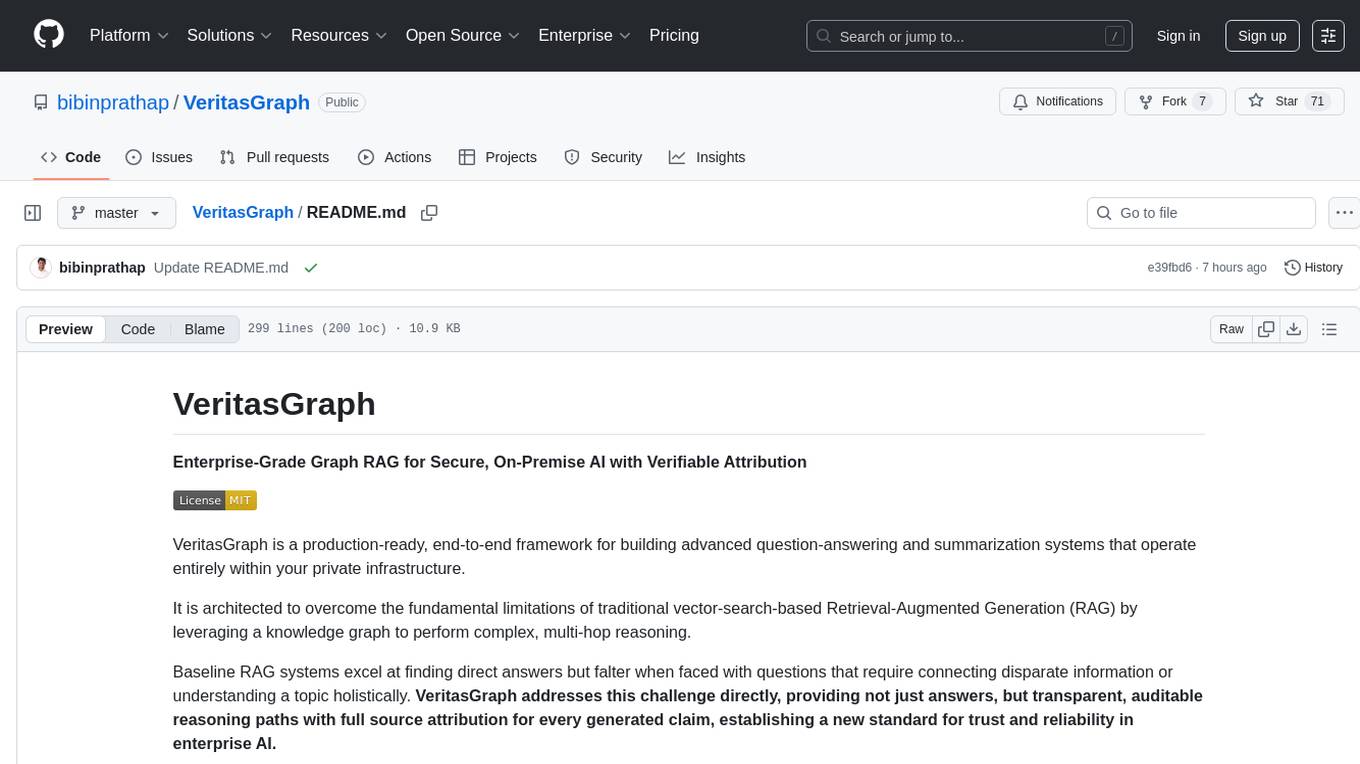

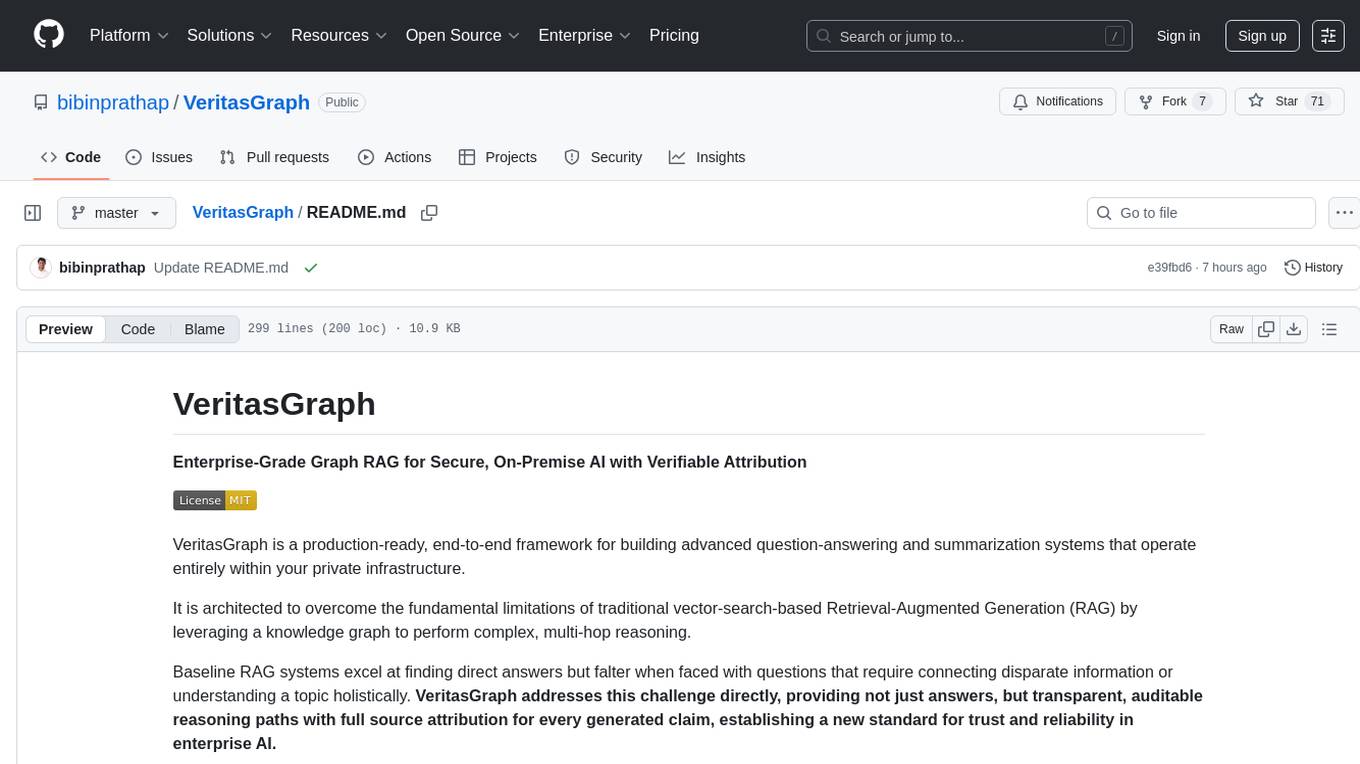

VeritasGraph

VeritasGraph: Enterprise-Grade Graph RAG for Secure, On-Premise AI with Verifiable Attribution

Stars: 83

VeritasGraph is an enterprise-grade graph RAG framework designed for secure, on-premise AI applications. It leverages a knowledge graph to perform complex, multi-hop reasoning, providing transparent, auditable reasoning paths with full source attribution. The framework excels at answering complex questions that traditional vector search engines struggle with, ensuring trust and reliability in enterprise AI. VeritasGraph offers full control over data and AI models, verifiable attribution for every claim, advanced graph reasoning capabilities, and open-source deployment with sovereignty and customization.

README:

Enterprise-Grade Graph RAG for Secure, On-Premise AI with Verifiable Attribution

VeritasGraph is a production-ready, end-to-end framework for building advanced question-answering and summarization systems that operate entirely within your private infrastructure.

It is architected to overcome the fundamental limitations of traditional vector-search-based Retrieval-Augmented Generation (RAG) by leveraging a knowledge graph to perform complex, multi-hop reasoning.

Baseline RAG systems excel at finding direct answers but falter when faced with questions that require connecting disparate information or understanding a topic holistically. VeritasGraph addresses this challenge directly, providing not just answers, but transparent, auditable reasoning paths with full source attribution for every generated claim, establishing a new standard for trust and reliability in enterprise AI.

Maintain 100% control over your data and AI models, ensuring maximum security and privacy.

Every generated claim is traced back to its source document, guaranteeing transparency and accountability.

Answer complex, multi-hop questions that go beyond the capabilities of traditional vector search engines.

Build a sovereign knowledge asset, free from vendor lock-in, with full ownership and customization.

A brief video demonstrating the core functionality of VeritasGraph, from data ingestion to multi-hop querying with full source attribution.

The following diagram illustrates the end-to-end pipeline of the VeritasGraph system:

graph TD

subgraph "Indexing Pipeline (One-Time Process)"

A --> B{Document Chunking};

B --> C{"LLM-Powered Extraction<br/>(Entities & Relationships)"};

C --> D[Vector Index];

C --> E[Knowledge Graph];

end

subgraph "Query Pipeline (Real-Time)"

F[User Query] --> G{Hybrid Retrieval Engine};

G -- "1. Vector Search for Entry Points" --> D;

G -- "2. Multi-Hop Graph Traversal" --> E;

G --> H{Pruning & Re-ranking};

H -- "Rich Reasoning Context" --> I{LoRA-Tuned LLM Core};

I -- "Generated Answer + Provenance" --> J{Attribution & Provenance Layer};

J --> K[Attributed Answer];

end

style A fill:#f2f2f2,stroke:#333,stroke-width:2px

style F fill:#e6f7ff,stroke:#333,stroke-width:2px

style K fill:#e6ffe6,stroke:#333,stroke-width:2px

I'm using Ollama (llama3.1) on Windows, with Ollama (nomic-embed-text) for text embeddings.

Please don't use WSL if you use LM Studio for embeddings, because WSL has trouble connecting to services running on Windows (such as LM Studio).

Ollama's default context length is 2048, which might truncate the input and output when indexing.

I'm using a 12k context here (12*1024=12288). I tried 10k before, but the results still got truncated.

Truncated input or output might give you a completely out-of-context report in local search!

Note that if you change the model in settings.yaml and try to reindex, it will restart the whole indexing!

First, pull the models we need to use

ollama serve

# in another terminal

ollama pull llama3.1

ollama pull nomic-embed-text

Then build the model with the Modelfile in this repo

ollama create llama3.1-12k -f ./Modelfile
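The Modelfile itself is not reproduced in this README. As a minimal sketch, assuming it only names the base model and raises the context window, the Python helper below renders an equivalent file. `FROM` and `PARAMETER num_ctx` are standard Ollama Modelfile instructions; the helper name `render_modelfile` and the exact contents of the repo's Modelfile are assumptions.

```python
def render_modelfile(base_model: str = "llama3.1", context_k: int = 12) -> str:
    """Return Modelfile text that sets the context window to context_k * 1024 tokens."""
    num_ctx = context_k * 1024  # 12 * 1024 = 12288
    return f"FROM {base_model}\nPARAMETER num_ctx {num_ctx}\n"


if __name__ == "__main__":
    # Print the text; redirect it to a file to use it with `ollama create`.
    print(render_modelfile(), end="")
```

If truncation still shows up at 12k, raising `context_k` is the only knob in this sketch, at the cost of more memory.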

First, create and activate the conda environment

conda create -n rag python=<any version below 3.12>

conda activate rag

Clone this project, then cd into the directory

cd graphrag-ollama-config

Then pull the code of graphrag (I'm using a local fix for graphrag here) and install the package

cd graphrag-ollama

pip install -e ./

You can skip this step if you cloned this repo; it is only needed to initialize the graphrag folder

pip install sympy

pip install future

pip install ollama

python -m graphrag.index --init --root .

Create your .env file

cp .env.example .env

Move your input text to ./input/

Double-check the parameters in .env and settings.yaml. In settings.yaml, make sure the key is "community_reports" instead of "community_report".
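The key-name check can be automated. The sketch below is a hypothetical helper, not part of graphrag: it scans settings.yaml as plain text and reports lines where `community_report` is used as a YAML key without the trailing "s". It assumes the key sits at the start of a line (after optional indentation) and deliberately ignores values such as prompt file paths.

```python
import re
from pathlib import Path

# Matches "community_report:" as a key, but not "community_reports:".
SINGULAR_KEY = re.compile(r"\s*community_report\s*:")


def find_singular_key_lines(text: str) -> list[int]:
    """Return 1-based line numbers where the singular key appears."""
    return [
        number
        for number, line in enumerate(text.splitlines(), start=1)
        if SINGULAR_KEY.match(line)
    ]


if __name__ == "__main__":
    settings = Path("settings.yaml")
    if settings.exists():
        bad = find_singular_key_lines(settings.read_text(encoding="utf-8"))
        print("lines to fix:", bad if bad else "none")
```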

Then fine-tune the prompts (this is important; it produces much better results)

You can find more about how to tune prompts here

python -m graphrag.prompt_tune --root . --domain "Christmas" --method random --limit 20 --language English --max-tokens 2048 --chunk-size 256 --no-entity-types --output ./prompts

Then you can start the indexing

python -m graphrag.index --root .

You can check the logs in ./output/<timestamp>/reports/indexing-engine.log for errors
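Reading the log by hand gets tedious after a few runs. Here is a minimal sketch that lists suspicious lines from every run's indexing-engine.log. The keyword list is an assumption about what failures look like in the log, not a documented format, so treat a clean result as a hint rather than proof.

```python
from pathlib import Path


def error_lines(log_text: str, keywords=("error", "exception", "traceback")) -> list[str]:
    """Return log lines containing any keyword, case-insensitively."""
    hits = []
    for line in log_text.splitlines():
        lowered = line.lower()
        if any(keyword in lowered for keyword in keywords):
            hits.append(line)
    return hits


if __name__ == "__main__":
    # The <timestamp> directory name differs per run, so glob over all of them.
    for log in sorted(Path("output").glob("*/reports/indexing-engine.log")):
        hits = error_lines(log.read_text(encoding="utf-8", errors="replace"))
        print(f"{log}: {len(hits)} suspicious line(s)")
        for line in hits[:10]:
            print("  ", line)
```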

Test a global query

python -m graphrag.query \
--root . \
--method global \
"What are the top themes in this story?"

First, make sure requirements are installed

pip install -r requirements.txt

Then run the app using

gradio app.py

To use the app, visit http://127.0.0.1:7860/

- Core Capabilities

- The Architectural Blueprint

- Beyond Semantic Search

- Secure On-Premise Deployment Guide

- API Usage & Examples

- Project Philosophy & Future Roadmap

- Acknowledgments & Citations

VeritasGraph integrates four critical components into a cohesive, powerful, and secure system:

- Multi-Hop Graph Reasoning – Move beyond semantic similarity to traverse complex relationships within your data.

- Efficient LoRA-Tuned LLM – Fine-tuned using Low-Rank Adaptation for efficient, powerful on-premise deployment.

- End-to-End Source Attribution – Every statement is linked back to specific source documents and reasoning paths.

- Secure & Private On-Premise Architecture – Fully deployable within your infrastructure, ensuring data sovereignty.

The VeritasGraph pipeline transforms unstructured documents into a structured knowledge graph for attributable reasoning.

- Document Chunking – Segment input docs into granular TextUnits.

- Entity & Relationship Extraction – LLM extracts structured triplets (head, relation, tail).

- Graph Assembly – Nodes + edges stored in a graph database (e.g., Neo4j).

- Query Analysis & Entry-Point Identification – Vector search finds relevant entry nodes.

- Contextual Expansion via Multi-Hop Traversal – Graph traversal uncovers hidden relationships.

- Pruning & Re-Ranking – Removes noise, keeps most relevant facts for reasoning.

- Augmented Prompting – Context formatted with query, sources, and instructions.

- LLM Generation – Locally hosted, LoRA-tuned open-source model generates attributed answers.

- LoRA Fine-Tuning – Specialization for reasoning + attribution with efficiency.

- Metadata Propagation – Track source IDs, chunks, and graph nodes.

- Traceable Generation – Model explicitly cites sources.

- Structured Attribution Output – JSON object with provenance + reasoning trail.
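To make the attribution steps concrete, here is a minimal sketch of how source metadata could travel with each extracted triplet and end up in a JSON answer. The field names (`source_doc`, `chunk_id`, `reasoning_path`, `sources`) and the sample data are hypothetical; the README does not specify the actual output schema.

```python
import json
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class Triplet:
    head: str
    relation: str
    tail: str
    source_doc: str  # hypothetical metadata: document the fact came from
    chunk_id: str    # hypothetical metadata: TextUnit the fact came from


def build_attributed_answer(answer: str, path: list[Triplet]) -> str:
    """Serialize an answer with its reasoning trail and de-duplicated sources."""
    sources = sorted({(t.source_doc, t.chunk_id) for t in path})
    payload = {
        "answer": answer,
        "reasoning_path": [asdict(t) for t in path],
        "sources": [{"document": doc, "chunk": chunk} for doc, chunk in sources],
    }
    return json.dumps(payload, indent=2)


if __name__ == "__main__":
    trail = [
        Triplet("Alice", "worked_on", "Project Atlas", "hr_records.txt", "chunk-3"),
        Triplet("Project Atlas", "produced", "Patent 101", "patents.txt", "chunk-7"),
    ]
    print(build_attributed_answer("Alice is linked to Patent 101.", trail))
```

Keeping the metadata on the triplet itself means no separate lookup is needed when the answer is assembled, which is one simple way to realize metadata propagation.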

Traditional RAG fails at complex reasoning (e.g., linking an engineer across projects and patents).

VeritasGraph succeeds by combining:

- Semantic search → finds entry points.

- Graph traversal → connects the dots.

- LLM reasoning → synthesizes final answer with citations.
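The first two steps can be illustrated with a toy example built around the engineer, projects, and patents case. This is a sketch under stated assumptions, not VeritasGraph's implementation: the triplets are invented, name matching stands in for real vector search, and the traversal is a plain breadth-first search over directed edges.

```python
from collections import deque

# Hypothetical (head, relation, tail) triplets for illustration only.
TRIPLETS = [
    ("Alice", "worked_on", "Project Atlas"),
    ("Alice", "worked_on", "Project Borealis"),
    ("Project Atlas", "produced", "Patent 101"),
    ("Project Borealis", "produced", "Patent 202"),
    ("Bob", "worked_on", "Project Cygnus"),
]


def find_entry_points(query: str, triplets) -> set[str]:
    """Stand-in for vector search: pick nodes whose name appears in the query."""
    nodes = {node for head, _, tail in triplets for node in (head, tail)}
    return {node for node in nodes if node.lower() in query.lower()}


def multi_hop(entry_points, triplets, max_hops: int = 2):
    """Collect every triplet reachable within max_hops of an entry point."""
    adjacency = {}
    for head, relation, tail in triplets:
        adjacency.setdefault(head, []).append((head, relation, tail))
    seen = set(entry_points)
    queue = deque((node, 0) for node in sorted(entry_points))
    facts = []
    while queue:
        node, depth = queue.popleft()
        if depth == max_hops:
            continue  # hop budget exhausted for this branch
        for head, relation, tail in adjacency.get(node, []):
            facts.append((head, relation, tail))
            if tail not in seen:
                seen.add(tail)
                queue.append((tail, depth + 1))
    return facts


if __name__ == "__main__":
    entry = find_entry_points("Which patents are linked to Alice?", TRIPLETS)
    for fact in multi_hop(entry, TRIPLETS):
        print(fact)
```

A single-hop lookup from "Alice" only reaches her projects; the patents appear at the second hop, which is the kind of connection a pure similarity search over chunks tends to miss.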

Hardware

- CPU: 16+ cores

- RAM: 64GB+ (128GB recommended)

- GPU: NVIDIA GPU with 24GB+ VRAM (A100, H100, RTX 4090)

Software

- Docker & Docker Compose

- Python 3.10+

- NVIDIA Container Toolkit

- Copy .env.example → .env

- Populate with environment-specific values

VeritasGraph is founded on the principle that the most powerful AI systems should also be the most transparent, secure, and controllable.

The project's philosophy is a commitment to democratizing enterprise-grade AI, providing organizations with the tools to build their own sovereign knowledge assets.

This stands in contrast to reliance on opaque, proprietary, cloud-based APIs, empowering organizations to maintain full control over their data and reasoning processes.

Planned future enhancements include:

- Expanded Database Support – Integration with more graph databases and vector stores.

- Advanced Graph Analytics – Community detection and summarization for holistic dataset insights (inspired by Microsoft’s GraphRAG).

- Agentic Framework – Multi-step reasoning tasks, breaking down complex queries into sub-queries.

- Visualization UI – A web interface for graph exploration and attribution path inspection.

This project builds upon the foundational research and open-source contributions of the AI community.

We acknowledge the influence of the following works:

- HopRAG – pioneering research on graph-structured RAG and multi-hop reasoning.

- Microsoft GraphRAG – comprehensive approach to knowledge graph extraction and community-based reasoning.

- LangChain & LlamaIndex – robust ecosystems that accelerate modular RAG system development.

- Neo4j – foundational graph database technology enabling scalable Graph RAG implementations.


Alternative AI tools for VeritasGraph

Similar Open Source Tools

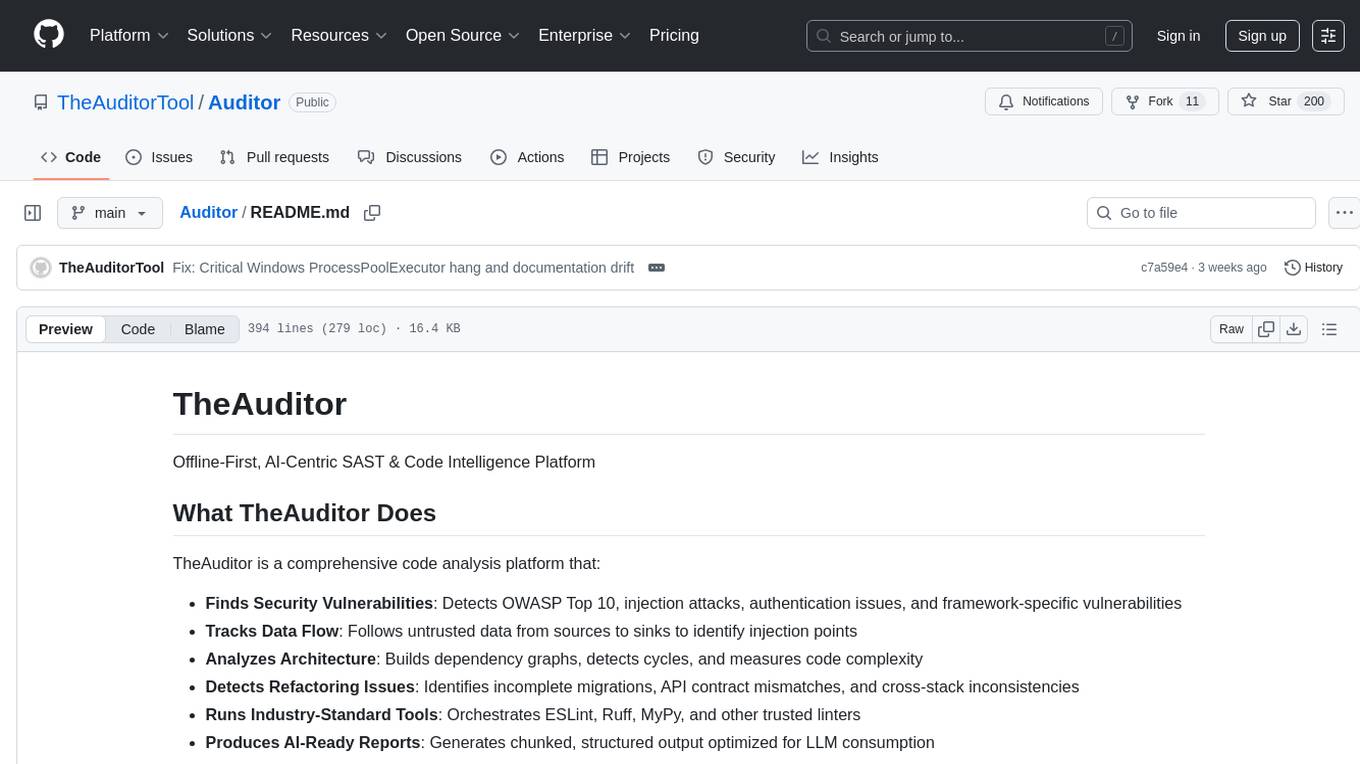

Auditor

TheAuditor is an offline-first, AI-centric SAST & code intelligence platform designed to find security vulnerabilities, track data flow, analyze architecture, detect refactoring issues, run industry-standard tools, and produce AI-ready reports. It is specifically tailored for AI-assisted development workflows, providing verifiable ground truth for developers and AI assistants. The tool orchestrates verifiable data, focuses on AI consumption, and is extensible to support Python and Node.js ecosystems. The comprehensive analysis pipeline includes stages for foundation, concurrent analysis, and final aggregation, offering features like refactoring detection, dependency graph visualization, and optional insights analysis. The tool interacts with antivirus software to identify vulnerabilities, triggers performance impacts, and provides transparent information on common issues and troubleshooting. TheAuditor aims to address the lack of ground truth in AI development workflows and make AI development trustworthy by providing accurate security analysis and code verification.

maiar-ai

MAIAR is a composable, plugin-based AI agent framework designed to abstract data ingestion, decision-making, and action execution into modular plugins. It enables developers to define triggers and actions as standalone plugins, while the core runtime handles decision-making dynamically. This framework offers extensibility, composability, and model-driven behavior, allowing seamless addition of new functionality. MAIAR's architecture is influenced by Unix pipes, ensuring highly composable plugins, dynamic execution pipelines, and transparent debugging. It remains declarative and extensible, allowing developers to build complex AI workflows without rigid architectures.

DevoxxGenieIDEAPlugin

Devoxx Genie is a Java-based IntelliJ IDEA plugin that integrates with local and cloud-based LLM providers to aid in reviewing, testing, and explaining project code. It supports features like code highlighting, chat conversations, and adding files/code snippets to context. Users can modify REST endpoints and LLM parameters in settings, including support for cloud-based LLMs. The plugin requires IntelliJ version 2023.3.4 and JDK 17. Building and publishing the plugin is done using Gradle tasks. Users can select an LLM provider, choose code, and use commands like review, explain, or generate unit tests for code analysis.

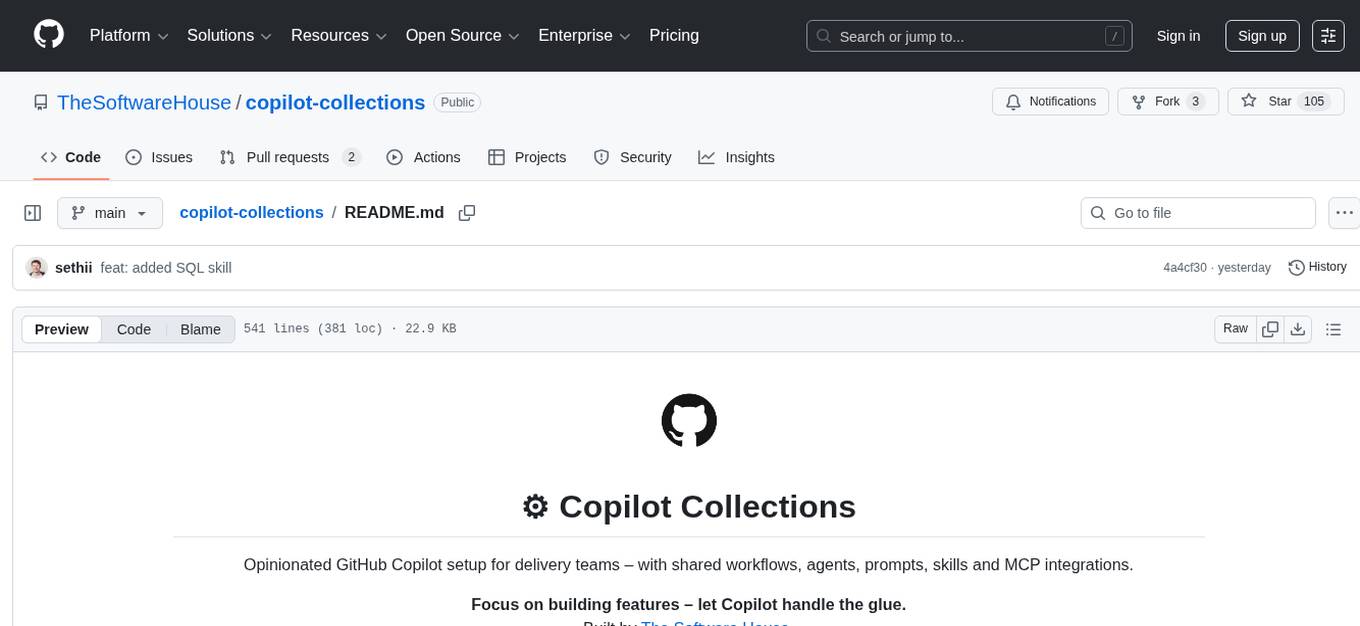

copilot-collections

Copilot Collections is an opinionated setup for GitHub Copilot tailored for delivery teams. It provides shared workflows, specialized agents, task prompts, reusable skills, and MCP integrations to streamline the software development process. The focus is on building features while letting Copilot handle the glue. The setup requires a GitHub Copilot Pro license and VS Code version 1.109 or later. It supports a standard workflow of Research, Plan, Implement, and Review, with specialized flows for UI-heavy tasks and end-to-end testing. Agents like Architect, Business Analyst, Software Engineer, UI Reviewer, Code Reviewer, and E2E Engineer assist in different stages of development. Skills like Task Analysis, Architecture Design, Codebase Analysis, Code Review, and E2E Testing provide specialized domain knowledge and workflows. The repository also includes prompts and chat commands for various tasks, along with instructions for installation and configuration in VS Code.

RainbowGPT

RainbowGPT is a versatile tool that offers a range of functionalities, including Stock Analysis for financial decision-making, MySQL Management for database navigation, and integration of AI technologies like GPT-4 and ChatGlm3. It provides a user-friendly interface suitable for all skill levels, ensuring seamless information flow and continuous expansion of emerging technologies. The tool enhances adaptability, creativity, and insight, making it a valuable asset for various projects and tasks.

UltraRAG

The UltraRAG framework is a researcher and developer-friendly RAG system solution that simplifies the process from data construction to model fine-tuning in domain adaptation. It introduces an automated knowledge adaptation technology system, supporting no-code programming, one-click synthesis and fine-tuning, multidimensional evaluation, and research-friendly exploration work integration. The architecture consists of Frontend, Service, and Backend components, offering flexibility in customization and optimization. Performance evaluation in the legal field shows improved results compared to VanillaRAG, with specific metrics provided. The repository is licensed under Apache-2.0 and encourages citation for support.

Biomni

Biomni is a general-purpose biomedical AI agent designed to autonomously execute a wide range of research tasks across diverse biomedical subfields. By integrating cutting-edge large language model (LLM) reasoning with retrieval-augmented planning and code-based execution, Biomni helps scientists dramatically enhance research productivity and generate testable hypotheses.

eole

EOLE is an open language modeling toolkit based on PyTorch. It aims to provide a research-friendly approach with a comprehensive yet compact and modular codebase for experimenting with various types of language models. The toolkit includes features such as versatile training and inference, dynamic data transforms, comprehensive large language model support, advanced quantization, efficient finetuning, flexible inference, and tensor parallelism. EOLE is a work in progress with ongoing enhancements in configuration management, command line entry points, reproducible recipes, core API simplification, and plans for further simplification, refactoring, inference server development, additional recipes, documentation enhancement, test coverage improvement, logging enhancements, and broader model support.

premsql

PremSQL is an open-source library designed to help developers create secure, fully local Text-to-SQL solutions using small language models. It provides essential tools for building and deploying end-to-end Text-to-SQL pipelines with customizable components, ideal for secure, autonomous AI-powered data analysis. The library offers features like Local-First approach, Customizable Datasets, Robust Executors and Evaluators, Advanced Generators, Error Handling and Self-Correction, Fine-Tuning Support, and End-to-End Pipelines. Users can fine-tune models, generate SQL queries from natural language inputs, handle errors, and evaluate model performance against predefined metrics. PremSQL is extendible for customization and private data usage.

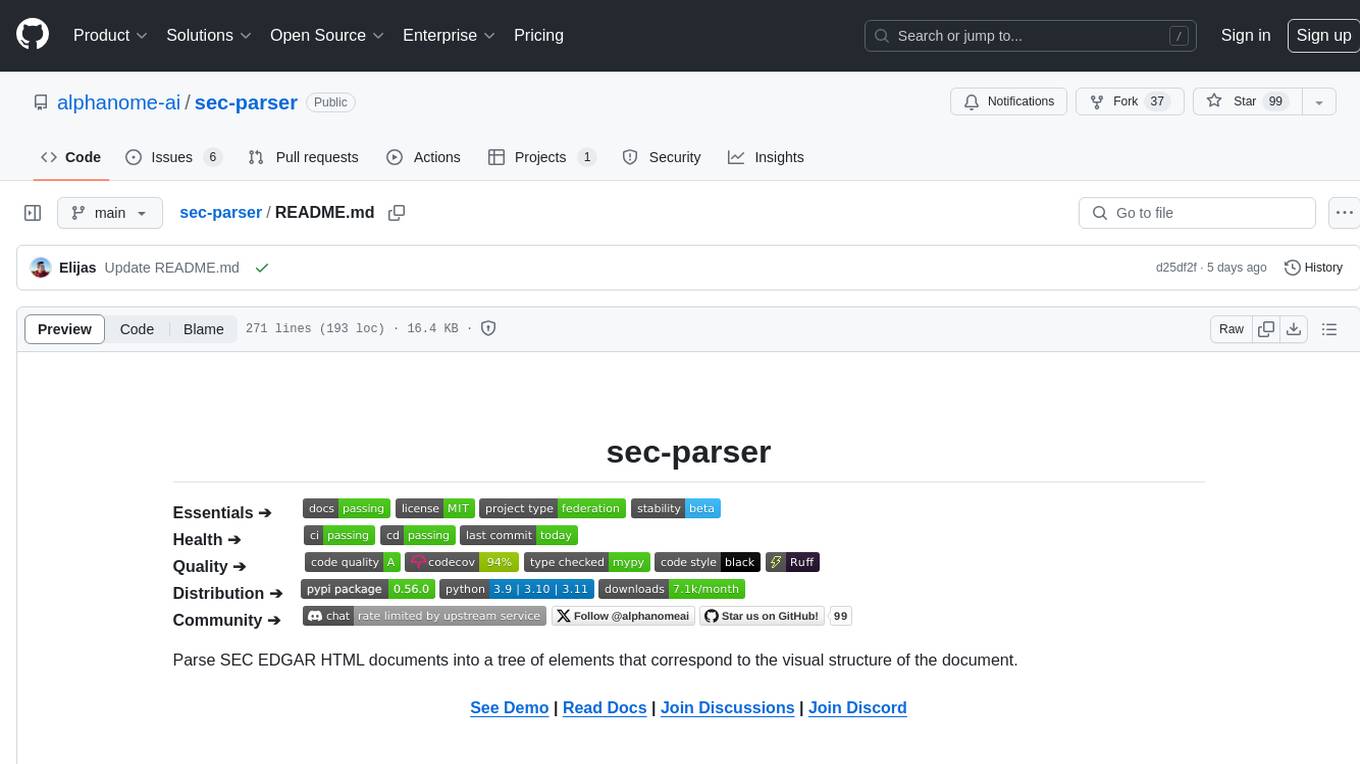

sec-parser

The `sec-parser` project simplifies extracting meaningful information from SEC EDGAR HTML documents by organizing them into semantic elements and a tree structure. It helps in parsing SEC filings for financial and regulatory analysis, analytics and data science, AI and machine learning, causal AI, and large language models. The tool is especially beneficial for AI, ML, and LLM applications by streamlining data pre-processing and feature extraction.

Simplifine

Simplifine is an open-source library designed for easy LLM finetuning, enabling users to perform tasks such as supervised fine tuning, question-answer finetuning, contrastive loss for embedding tasks, multi-label classification finetuning, and more. It provides features like WandB logging, in-built evaluation tools, automated finetuning parameters, and state-of-the-art optimization techniques. The library offers bug fixes, new features, and documentation updates in its latest version. Users can install Simplifine via pip or directly from GitHub. The project welcomes contributors and provides comprehensive documentation and support for users.

langmanus

LangManus is a community-driven AI automation framework that combines language models with specialized tools for tasks like web search, crawling, and Python code execution. It implements a hierarchical multi-agent system with agents like Coordinator, Planner, Supervisor, Researcher, Coder, Browser, and Reporter. The framework supports LLM integration, search and retrieval tools, Python integration, workflow management, and visualization. LangManus aims to give back to the open-source community and welcomes contributions in various forms.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.

Mira

Mira is an agentic AI library designed for automating company research by gathering information from various sources like company websites, LinkedIn profiles, and Google Search. It utilizes a multi-agent architecture to collect and merge data points into a structured profile with confidence scores and clear source attribution. The core library is framework-agnostic and can be integrated into applications, pipelines, or custom workflows. Mira offers features such as real-time progress events, confidence scoring, company criteria matching, and built-in services for data gathering. The tool is suitable for users looking to streamline company research processes and enhance data collection efficiency.

arbigent

Arbigent (Arbiter-Agent) is an AI agent testing framework designed to make AI agent testing practical for modern applications. It addresses challenges faced by traditional UI testing frameworks and AI agents by breaking down complex tasks into smaller, dependent scenarios. The framework is customizable for various AI providers, operating systems, and form factors, empowering users with extensive customization capabilities. Arbigent offers an intuitive UI for scenario creation and a powerful code interface for seamless test execution. It supports multiple form factors, optimizes UI for AI interaction, and is cost-effective by utilizing models like GPT-4o mini. With a flexible code interface and open-source nature, Arbigent aims to revolutionize AI agent testing in modern applications.

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models, from images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking), and privacy harms (e.g., identity theft). The goal is to allow researchers to have a baseline of how well their model and entire inference pipeline is doing against different harm categories and to be able to compare that baseline to future iterations of their model. This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements.

tabby

Tabby is a self-hosted AI coding assistant, offering an open-source and on-premises alternative to GitHub Copilot. It boasts several key features:

- Self-contained, with no need for a DBMS or cloud service.

- OpenAPI interface, easy to integrate with existing infrastructure (e.g., Cloud IDE).

- Supports consumer-grade GPUs.

spear

SPEAR (Simulator for Photorealistic Embodied AI Research) is a powerful tool for training embodied agents. It features 300 unique virtual indoor environments with 2,566 unique rooms and 17,234 unique objects that can be manipulated individually. Each environment is designed by a professional artist and features detailed geometry, photorealistic materials, and a unique floor plan and object layout. SPEAR is implemented as Unreal Engine assets and provides an OpenAI Gym interface for interacting with the environments via Python.

Magick

Magick is a groundbreaking visual AIDE (Artificial Intelligence Development Environment) for no-code data pipelines and multimodal agents. Magick can connect to other services and comes with nodes and templates well-suited for intelligent agents, chatbots, complex reasoning systems and realistic characters.