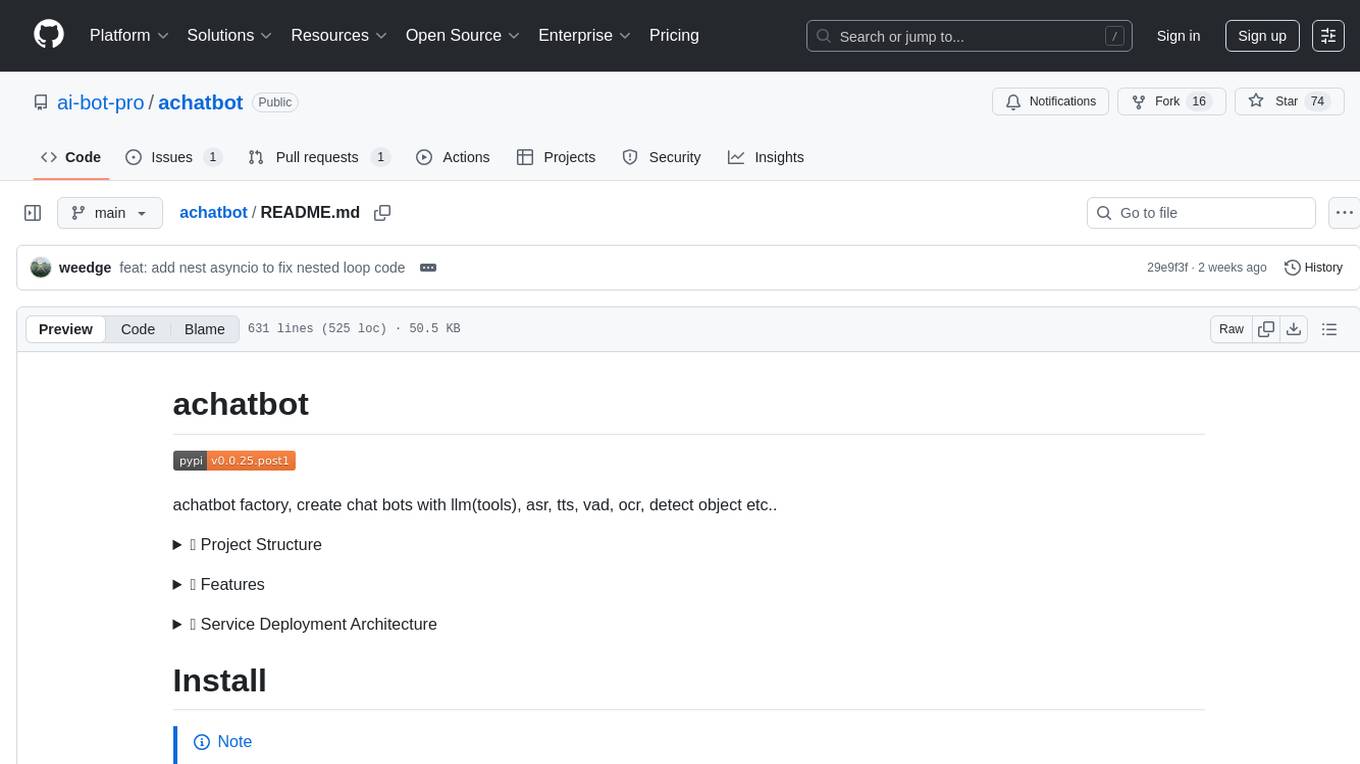

achatbot

An open-source chat bot architecture for voice/vision (and multimodal) assistants that can run locally (CPU/GPU bound) or remotely (I/O bound).

Stars: 75

achatbot is a factory tool that allows users to create chat bots with various functionalities such as llm (large language models), asr (automatic speech recognition), tts (text-to-speech), vad (voice activity detection), ocr (optical character recognition), and object detection. The tool provides a structured project with features like chat bots for cmd, grpc, and http servers. It supports various chat bot processors, transport connectors, and AI modules for different tasks. Users can run chat bots locally or deploy them on cloud services like Vercel, Cloudflare, AWS Lambda, or Docker. The tool also includes UI components for easy deployment and service architecture diagrams for reference.

README:

achatbot factory: create chat bots with llm (tools), asr, tts, vad, ocr, object detection, etc.

🌿 Features

- demo

- podcast: AI Podcast: https://podcast-997.pages.dev/ :)

# need GOOGLE_API_KEY in environment variables
# default language: English

# website
python -m demo.content_parser_tts instruct-content-tts \
  "https://en.wikipedia.org/wiki/Large_language_model"

python -m demo.content_parser_tts instruct-content-tts \
  --role-tts-voices zh-CN-YunjianNeural \
  --role-tts-voices zh-CN-XiaoxiaoNeural \
  --language zh \
  "https://en.wikipedia.org/wiki/Large_language_model"

# pdf
# https://web.stanford.edu/~jurafsky/slp3/ed3bookaug20_2024.pdf (600 pages is ok~ :))
python -m demo.content_parser_tts instruct-content-tts \
  "/Users/wuyong/Desktop/Speech and Language Processing.pdf"

python -m demo.content_parser_tts instruct-content-tts \
  --role-tts-voices zh-CN-YunjianNeural \
  --role-tts-voices zh-CN-XiaoxiaoNeural \
  --language zh \
  "/Users/wuyong/Desktop/Speech and Language Processing.pdf"

- cmd chat bots:

- local-terminal-chat(be/fe)

- remote-queue-chat(be/fe)

- grpc-terminal-chat(be/fe)

- grpc-speaker

- http fastapi_daily_bot_serve (with chat bots pipeline)

- bots with config see notebooks:

- supported transport connectors:

- [x] pipe (UNIX socket)

- [x] grpc

- [x] queue (redis)

- [ ] websocket

- [ ] TCP/IP socket

- chat bot processors:

- aggregators (llm user and assistant messages)

- ai_frameworks

- [x] langchain: RAG

- [ ] llamaindex: RAG

- [ ] autogen: multi-agent

- realtime voice inference (RTVI)

- transport:

- WebRTC: (daily, livekit KISS)

- [x] Websocket server

- ai processors: llm, tts, asr, etc.

- llm_processor:

- [x] openai (uses the openai sdk)

- [x] google gemini (uses the google-generativeai sdk)

- [x] litellm (uses the openai input/output format proxy sdk)

- core module:

- local llm:

- [x] llama-cpp (supports text and vision with function-call models)

- [x] transformers (manual, pipeline) (supports text; vision, vision+image; speech, voice; vision+voice)

- [x] llm_transformers_manual_vision_llama

- [x] llm_transformers_manual_vision_molmo

- [x] llm_transformers_manual_vision_qwen

- [x] llm_transformers_manual_vision_deepseek

- [x] llm_transformers_manual_vision_janus_flow

- [x] llm_transformers_manual_image_janus_flow

- [x] llm_transformers_manual_vision_janus

- [x] llm_transformers_manual_image_janus

- [x] llm_transformers_manual_speech_llasa

- [x] llm_transformers_manual_speech_step

- [x] llm_transformers_manual_voice_glm

- [x] llm_transformers_manual_vision_voice_minicpmo, llm_transformers_manual_voice_minicpmo, llm_transformers_manual_audio_minicpmo, llm_transformers_manual_text_speech_minicpmo, llm_transformers_manual_instruct_speech_minicpmo, llm_transformers_manual_vision_minicpmo

- [x] llm_transformers_manual_qwen2_5omni, llm_transformers_manual_qwen2_5omni_audio_asr, llm_transformers_manual_qwen2_5omni_vision, llm_transformers_manual_qwen2_5omni_speech, llm_transformers_manual_qwen2_5omni_vision_voice, llm_transformers_manual_qwen2_5omni_text_voice, llm_transformers_manual_qwen2_5omni_audio_voice

- [x] llm_transformers_manual_kimi_voice, llm_transformers_manual_kimi_audio_asr, llm_transformers_manual_kimi_text_voice

- [x] llm_transformers_manual_vita_text, llm_transformers_manual_vita_audio_asr, llm_transformers_manual_vita_tts, llm_transformers_manual_vita_text_voice, llm_transformers_manual_vita_voice

- [x] llm_transformers_manual_phi4_vision_speech, llm_transformers_manual_phi4_audio_asr, llm_transformers_manual_phi4_audio_translation, llm_transformers_manual_phi4_vision, llm_transformers_manual_phi4_audio_chat

- [x] llm_transformers_manual_qwen3omni, llm_transformers_manual_qwen3omni_vision_voice

- remote api llm: personal-ai (like the openai api, other ai providers)

- AI modules:

- functions:

- [x] search: search, search1, serper

- [x] weather: openweathermap

- speech:

- [x] asr:

- [x] whisper_asr, whisper_timestamped_asr, whisper_faster_asr, whisper_transformers_asr, whisper_mlx_asr

- [x] whisper_groq_asr

- [x] sense_voice_asr

- [x] minicpmo_asr (whisper)

- [x] qwen2_5omni_asr (whisper)

- [x] kimi_asr (whisper)

- [x] vita_asr (sensevoice-small)

- [x] phi4_asr (conformer)

- [x] audio_stream: daily_room_audio_stream(in/out), pyaudio_stream(in/out)

- [x] detector: porcupine_wakeword, pyannote_vad, webrtc_vad, silero_vad, webrtc_silero_vad, fsmn_vad

- [x] player: stream_player

- [x] recorder: rms_recorder, wakeword_rms_recorder, vad_recorder, wakeword_vad_recorder

- [x] tts:

- [x] tts_edge

- [x] tts_g

- [x] tts_coqui

- [x] tts_chat

- [x] tts_cosy_voice, tts_cosy_voice2

- [x] tts_f5

- [x] tts_openvoicev2

- [x] tts_kokoro, tts_onnx_kokoro

- [x] tts_fishspeech

- [x] tts_llasa

- [x] tts_minicpmo

- [x] tts_zonos

- [x] tts_step

- [x] tts_spark

- [x] tts_orpheus

- [x] tts_mega3

- [x] tts_vita

- [x] vad_analyzer:

- [x] daily_webrtc_vad_analyzer

- [x] silero_vad_analyzer


- vision

- [x] OCR(Optical Character Recognition):

- [x] Detector:

- [x] YOLO (You Only Look Once)

- [ ] RT-DETR v2 (RealTime End-to-End Object Detection with Transformers)

- gen modules config (*.yaml, local/test/prod) from env with a .env file; you can also use HfArgumentParser with this module's args to parse args for local cmds

- deploy to cloud ☁️ serverless:

- Vercel (frontend UI pages)

- Cloudflare (frontend UI pages), personal AI workers

- fastapi-daily-chat-bot on cerebrium (provider aws)

- fastapi-daily-chat-bot on leptonai

- fastapi-daily-chat-bot on modal

- AWS Lambda + API Gateway

- docker -> k8s/k3s

- etc...
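The recorder modules listed under AI modules (rms_recorder, vad_recorder and their wake-word variants) decide when an utterance has ended before it is handed to ASR. Below is a minimal sketch of an RMS-energy recorder, assuming 16-bit little-endian mono PCM frames; the threshold and silence-frame count are illustrative values, and this is not achatbot's rms_recorder implementation.

```python
import math
import struct


def frame_rms(frame: bytes) -> float:
    """Root-mean-square energy of one frame of 16-bit little-endian mono PCM."""
    n = len(frame) // 2
    if n == 0:
        return 0.0
    samples = struct.unpack(f"<{n}h", frame[: n * 2])
    return math.sqrt(sum(s * s for s in samples) / n)


def record_until_silence(frames, threshold=500.0, silence_frames=3):
    """Start collecting at the first loud frame; stop after `silence_frames` quiet frames in a row.

    threshold and silence_frames are illustrative defaults (assumption), not achatbot settings.
    The trailing quiet frames are kept so the utterance is not clipped.
    """
    recorded = []
    speaking = False
    quiet = 0
    for frame in frames:
        loud = frame_rms(frame) >= threshold
        if not speaking:
            if loud:  # speech starts: begin recording
                speaking = True
                recorded.append(frame)
            continue
        recorded.append(frame)
        quiet = 0 if loud else quiet + 1
        if quiet >= silence_frames:  # enough silence: utterance is over
            break
    return b"".join(recorded)
```

A VAD-based recorder keeps the same loop shape and only swaps the `frame_rms(...) >= threshold` test for a voice-activity model's speech/non-speech decision.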

🌻 Service Deployment Architecture

- [x] ui/web-client-ui: deploy it to Cloudflare Pages with Vite, access https://chat-client-weedge.pages.dev/

- [x] ui/educator-client: deploy it to Cloudflare Pages with Vite, access https://educator-client.pages.dev/

- [x] chat-bot-rtvi-web-sandbox: use this web sandbox to test config and actions with DailyRTVIGeneralBot

- [x] vite-react-rtvi-web-voice: RTVI web voice chat bots with different CCTV roles, etc.; you can DIY your own role by changing the system prompt with DailyRTVIGeneralBot; deploy it to Cloudflare Pages with Vite, access https://role-chat.pages.dev/

- [x] vite-react-web-vision: deploy it to Cloudflare Pages with Vite, access https://vision-weedge.pages.dev/

- [x] nextjs-react-web-storytelling: deploy it to a Cloudflare Pages worker with Next.js, access https://storytelling.pages.dev/

- [x] websocket-demo: websocket audio chat bot demo

- [x] deploy/modal(KISS) 👍🏻

- [x] deploy/leptonai(KISS)👍🏻

- [x] deploy/cerebrium/fastapi-daily-chat-bot :)

- [x] deploy/aws/fastapi-daily-chat-bot :|

- [x] deploy/docker/fastapi-daily-chat-bot 🏃

[!NOTE]

- python --version >= 3.10 (uses asyncio tasks)

- if you install achatbot[tts_openvoicev2], you also need to install melo-tts: pip install git+https://github.com/myshell-ai/MeloTTS.git

[!TIP] use uv + pip to install the required dependencies faster, e.g.:

uv pip install achatbot
uv pip install "achatbot[fastapi_bot_server]"

Install from PyPI:

python3 -m venv .venv_achatbot

source .venv_achatbot/bin/activate

pip install achatbot

# optional-dependencies e.g.

pip install "achatbot[fastapi_bot_server]"

Install from source:

git clone --recursive https://github.com/ai-bot-pro/chat-bot.git

cd chat-bot

python3 -m venv .venv_achatbot

source .venv_achatbot/bin/activate

bash scripts/pypi_achatbot.sh dev

# optional-dependencies e.g.

pip install "dist/achatbot-${version}-py3-none-any.whl[fastapi_bot_server]"

| Chat Bot | optional-dependencies | Colab | Device | Pipeline Desc |
|---|---|---|---|---|
| daily_bot, livekit_bot, agora_bot | e.g.: daily_room_audio_stream / livekit_room_audio_stream, sense_voice_asr, groq / together api llm (text), tts_edge | | CPU (free, 2 cores) | e.g.: daily / livekit room in stream -> silero (vad) -> sense_voice (asr) -> groq / together (llm) -> edge (tts) -> daily / livekit room out stream |
| generate_audio2audio | remote_queue_chat_bot_be_worker | | T4 (free) | e.g.: pyaudio in stream -> silero (vad) -> sense_voice (asr) -> qwen (llm) -> cosy_voice (tts) -> pyaudio out stream |
| daily_describe_vision_tools_bot, livekit_describe_vision_tools_bot, agora_describe_vision_tools_bot | e.g.: daily_room_audio_stream / livekit_room_audio_stream, deepgram_asr, google_gemini, tts_edge | | CPU (free, 2 cores) | e.g.: daily / livekit room in stream -> silero (vad) -> deepgram (asr) -> google gemini -> edge (tts) -> daily / livekit room out stream |
| daily_describe_vision_bot, livekit_describe_vision_bot, agora_describe_vision_bot | e.g.: daily_room_audio_stream / livekit_room_audio_stream, sense_voice_asr, llm_transformers_manual_vision_qwen, tts_edge | achatbot_vision_qwen_vl.ipynb, achatbot_vision_janus.ipynb, achatbot_vision_minicpmo.ipynb, achatbot_kimivl.ipynb, achatbot_phi4_multimodal.ipynb | Qwen2-VL-2B-Instruct: T4 (free); Qwen2-VL-7B-Instruct: L4; Llama-3.2-11B-Vision-Instruct: L4; allenai/Molmo-7B-D-0924: A100 | e.g.: daily / livekit room in stream -> silero (vad) -> sense_voice (asr) -> qwen-vl (llm) -> edge (tts) -> daily / livekit room out stream |
| daily_chat_vision_bot, livekit_chat_vision_bot, agora_chat_vision_bot | e.g.: daily_room_audio_stream / livekit_room_audio_stream, sense_voice_asr, llm_transformers_manual_vision_qwen, tts_edge | | Qwen2-VL-2B-Instruct: T4 (free); Qwen2-VL-7B-Instruct: L4; Llama-3.2-11B-Vision-Instruct: L4; allenai/Molmo-7B-D-0924: A100 | e.g.: daily / livekit room in stream -> silero (vad) -> sense_voice (asr) -> llm answer guide qwen-vl (llm) -> edge (tts) -> daily / livekit room out stream |
| daily_chat_tools_vision_bot, livekit_chat_tools_vision_bot, agora_chat_tools_vision_bot | e.g.: daily_room_audio_stream / livekit_room_audio_stream, sense_voice_asr, groq api llm (text), tools: llm_transformers_manual_vision_qwen, tts_edge | | Qwen2-VL-2B-Instruct: T4 (free); Qwen2-VL-7B-Instruct: L4; Llama-3.2-11B-Vision-Instruct: L4; allenai/Molmo-7B-D-0924: A100 | e.g.: daily / livekit room in stream -> silero (vad) -> sense_voice (asr) -> llm with tools qwen-vl -> edge (tts) -> daily / livekit room out stream |
| daily_annotate_vision_bot, livekit_annotate_vision_bot, agora_annotate_vision_bot | e.g.: daily_room_audio_stream / livekit_room_audio_stream, vision_yolo_detector, tts_edge | | T4 (free) | e.g.: daily / livekit room in stream -> vision_yolo_detector -> edge (tts) -> daily / livekit room out stream |
| daily_detect_vision_bot, livekit_detect_vision_bot, agora_detect_vision_bot | e.g.: daily_room_audio_stream / livekit_room_audio_stream, vision_yolo_detector, tts_edge | | T4 (free) | e.g.: daily / livekit room in stream -> vision_yolo_detector -> edge (tts) -> daily / livekit room out stream |
| daily_ocr_vision_bot, livekit_ocr_vision_bot, agora_ocr_vision_bot | e.g.: daily_room_audio_stream / livekit_room_audio_stream, sense_voice_asr, vision_transformers_got_ocr, tts_edge | | T4 (free) | e.g.: daily / livekit room in stream -> silero (vad) -> sense_voice (asr) -> vision_transformers_got_ocr -> edge (tts) -> daily / livekit room out stream |
| daily_month_narration_bot | e.g.: daily_room_audio_stream, groq / together api llm (text), hf_sd, together api (image), tts_edge | | when using an SD model with diffusers: T4 (free) cpu+cuda (slow), L4 cpu+cuda, A100 all cuda | e.g.: daily room in stream -> together (llm) -> hf sd gen image model -> edge (tts) -> daily room out stream |
| daily_storytelling_bot | e.g.: daily_room_audio_stream, groq / together api llm (text), hf_sd, together api (image), tts_edge | | cpu (2 cores); when using an SD model with diffusers: T4 (free) cpu+cuda (slow), L4 cpu+cuda, A100 all cuda | e.g.: daily room in stream -> together (llm) -> hf sd gen image model -> edge (tts) -> daily room out stream |
| websocket_server_bot, fastapi_websocket_server_bot | e.g.: websocket_server, sense_voice_asr, groq / together api llm (text), tts_edge | | cpu (2 cores) | e.g.: websocket protocol in stream -> silero (vad) -> sense_voice (asr) -> together (llm) -> edge (tts) -> websocket protocol out stream |
| daily_natural_conversation_bot | e.g.: daily_room_audio_stream, sense_voice_asr, groq / together api llm (NLP task), gemini-1.5-flash (chat), tts_edge | | cpu (2 cores) | e.g.: daily room in stream -> together (llm NLP task) -> gemini-1.5-flash model (chat) -> edge (tts) -> daily room out stream |
| fastapi_websocket_moshi_bot | e.g.: websocket_server, moshi opus stream voice llm | | L4/A100 | websocket protocol in stream -> silero (vad) -> moshi opus stream voice llm -> websocket protocol out stream |
| daily_asr_glm_voice_bot, daily_glm_voice_bot | e.g.: daily_room_audio_stream, glm voice llm | | T4/L4/A100 | e.g.: daily room in stream -> glm4-voice -> daily room out stream |
| daily_freeze_omni_voice_bot | e.g.: daily_room_audio_stream, freezeOmni voice llm | | L4/A100 | e.g.: daily room in stream -> freezeOmni-voice -> daily room out stream |
| daily_asr_minicpmo_voice_bot, daily_minicpmo_voice_bot, daily_minicpmo_vision_voice_bot | e.g.: daily_room_audio_stream, minicpmo llm | | T4: MiniCPM-o-2_6-int4; L4/A100: MiniCPM-o-2_6 | e.g.: daily room in stream -> minicpmo -> daily room out stream |
| livekit_asr_qwen2_5omni_voice_bot, livekit_qwen2_5omni_voice_bot, livekit_qwen2_5omni_vision_voice_bot | e.g.: livekit_room_audio_stream, qwen2.5omni llm | | A100 | e.g.: livekit room in stream -> qwen2.5omni -> livekit room out stream |
| livekit_asr_kimi_voice_bot, livekit_kimi_voice_bot | e.g.: livekit_room_audio_stream, kimi audio llm | | A100 | e.g.: livekit room in stream -> Kimi-Audio -> livekit room out stream |
| livekit_asr_vita_voice_bot, livekit_vita_voice_bot | e.g.: livekit_room_audio_stream, vita audio llm | | L4/A100 | e.g.: livekit room in stream -> VITA-Audio -> livekit room out stream |
| daily_phi4_voice_bot, daily_phi4_vision_speech_bot | e.g.: daily_room_audio_stream, phi4-multimodal llm | | L4/A100 | e.g.: daily room in stream -> phi4-multimodal -> edge (tts) -> daily room out stream |
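Each Pipeline Desc entry above is a chain of processors (in stream -> vad -> asr -> llm -> tts -> out stream). A minimal asyncio sketch of that chaining, assuming each stage is an async function connected to the next one by a queue; vad, asr, llm and tts here are hypothetical string-transforming stubs, not achatbot's processors or pipeline API.

```python
import asyncio


# Hypothetical stub stages; real ones would wrap silero, sense_voice, an LLM and edge-tts.
async def vad(audio: str) -> str:
    return audio.strip()  # pretend to trim leading/trailing silence


async def asr(audio: str) -> str:
    return f"text({audio})"


async def llm(text: str) -> str:
    return f"reply({text})"


async def tts(text: str) -> str:
    return f"audio({text})"


async def stage(fn, inbox: asyncio.Queue, outbox: asyncio.Queue) -> None:
    """Take items from inbox, transform them with fn, pass them on; None marks end of stream."""
    while True:
        item = await inbox.get()
        if item is None:
            await outbox.put(None)  # propagate end-of-stream to the next stage
            return
        await outbox.put(await fn(item))


async def run_pipeline(inputs, stages=(vad, asr, llm, tts)):
    """Wire the stages with one queue per hop, feed inputs, and collect the final outputs."""
    queues = [asyncio.Queue() for _ in range(len(stages) + 1)]
    tasks = [
        asyncio.create_task(stage(fn, queues[i], queues[i + 1]))
        for i, fn in enumerate(stages)
    ]
    for item in inputs:
        await queues[0].put(item)
    await queues[0].put(None)
    outputs = []
    while (item := await queues[-1].get()) is not None:
        outputs.append(item)
    await asyncio.gather(*tasks)
    return outputs
```

A queue per hop lets every stage run concurrently at its own pace, which is what a streaming voice bot needs: the tts stage can speak the first reply while asr is still transcribing the next utterance.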

🌑 Run local chat bots

[!NOTE]

- to run from the src code, replace achatbot with src in the module path; you don't need to set ACHATBOT_PKG=1, e.g.:

TQDM_DISABLE=True \
python -m src.cmd.local-terminal-chat.generate_audio2audio > log/std_out.log

- PyAudio needs python3-pyaudio, e.g. on Ubuntu: apt-get install python3-pyaudio; on macOS: brew install portaudio; see: https://pypi.org/project/PyAudio/

- llm: llama-cpp-python is installed from the CPU pre-built wheel by default; to use another backend (e.g. CUDA), see: https://github.com/abetlen/llama-cpp-python#installation-configuration

- installing pydub requires ffmpeg; see: https://www.ffmpeg.org/download.html

- run pip install "achatbot[local_terminal_chat_bot]" to install the dependencies for the local terminal chat bot;

- create the achatbot data dir in the $HOME dir: mkdir -p ~/.achatbot/{log,config,models,records,videos};

- cp .env.example .env, then check .env and add key/value env params;

- select a model ckpt to download:

- vad model ckpt (default vad model: silero vad)

# vad pyannote segmentation ckpt
huggingface-cli download pyannote/segmentation-3.0 --local-dir ~/.achatbot/models/pyannote/segmentation-3.0 --local-dir-use-symlinks False

- asr model ckpt (default whisper ckpt model: base size)

# asr openai whisper ckpt
wget https://openaipublic.azureedge.net/main/whisper/models/ed3a0b6b1c0edf879ad9b11b1af5a0e6ab5db9205f891f668f8b0e6c6326e34e/base.pt -O ~/.achatbot/models/base.pt
# asr hf openai whisper ckpt for the transformers pipeline to load
huggingface-cli download openai/whisper-base --local-dir ~/.achatbot/models/openai/whisper-base --local-dir-use-symlinks False
# asr hf faster whisper (CTranslate2)
huggingface-cli download Systran/faster-whisper-base --local-dir ~/.achatbot/models/Systran/faster-whisper-base --local-dir-use-symlinks False
# asr SenseVoice ckpt
huggingface-cli download FunAudioLLM/SenseVoiceSmall --local-dir ~/.achatbot/models/FunAudioLLM/SenseVoiceSmall --local-dir-use-symlinks False

- llm model ckpt (default llamacpp ckpt (ggml) model: qwen-2 instruct 1.5B size)

# llm llamacpp Qwen2-Instruct
huggingface-cli download Qwen/Qwen2-1.5B-Instruct-GGUF qwen2-1_5b-instruct-q8_0.gguf --local-dir ~/.achatbot/models --local-dir-use-symlinks False
# llm llamacpp Qwen1.5-chat
huggingface-cli download Qwen/Qwen1.5-7B-Chat-GGUF qwen1_5-7b-chat-q8_0.gguf --local-dir ~/.achatbot/models --local-dir-use-symlinks False
# llm llamacpp phi-3-mini-4k-instruct
huggingface-cli download microsoft/Phi-3-mini-4k-instruct-gguf Phi-3-mini-4k-instruct-q4.gguf --local-dir ~/.achatbot/models --local-dir-use-symlinks False

- tts model ckpt

# tts chatTTS
huggingface-cli download 2Noise/ChatTTS --local-dir ~/.achatbot/models/2Noise/ChatTTS --local-dir-use-symlinks False
# tts coquiTTS
huggingface-cli download coqui/XTTS-v2 --local-dir ~/.achatbot/models/coqui/XTTS-v2 --local-dir-use-symlinks False
# tts cosy voice
git lfs install
git clone https://www.modelscope.cn/iic/CosyVoice-300M.git ~/.achatbot/models/CosyVoice-300M
git clone https://www.modelscope.cn/iic/CosyVoice-300M-SFT.git ~/.achatbot/models/CosyVoice-300M-SFT
git clone https://www.modelscope.cn/iic/CosyVoice-300M-Instruct.git ~/.achatbot/models/CosyVoice-300M-Instruct
#git clone https://www.modelscope.cn/iic/CosyVoice-ttsfrd.git ~/.achatbot/models/CosyVoice-ttsfrd

- run the local terminal chat bot with env, e.g.:

- use the default env params to run the local chat bot

ACHATBOT_PKG=1 TQDM_DISABLE=True \
python -m achatbot.cmd.local-terminal-chat.generate_audio2audio > ~/.achatbot/log/std_out.log
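The setup steps above (create ~/.achatbot, fill in .env, run with ACHATBOT_PKG=1 TQDM_DISABLE=True) can also be scripted. A minimal sketch, assuming a .env file made of plain KEY=VALUE lines; create_data_dir, load_dotenv_file and run_env are hypothetical helpers written for illustration, not part of achatbot.

```python
import os
from pathlib import Path

# Sub-directories from: mkdir -p ~/.achatbot/{log,config,models,records,videos}
DATA_SUBDIRS = ("log", "config", "models", "records", "videos")


def create_data_dir(home=None) -> Path:
    """Create <home>/.achatbot and its sub-directories; safe to re-run, like mkdir -p."""
    root = Path(home if home is not None else Path.home()) / ".achatbot"
    for name in DATA_SUBDIRS:
        (root / name).mkdir(parents=True, exist_ok=True)
    return root


def load_dotenv_file(path) -> dict:
    """Parse KEY=VALUE lines, skipping blanks and # comments.

    Simplified (hypothetical) parser: it only strips surrounding quotes and
    does not handle escapes, multi-line values or `export` prefixes.
    """
    env = {}
    for raw in Path(path).read_text().splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, value = line.split("=", 1)
        env[key.strip()] = value.strip().strip("\"'")
    return env


def run_env(dotenv: dict) -> dict:
    """Current environment + .env values + the two flags used in the run command above."""
    env = {**os.environ, **dotenv}
    env.update({"ACHATBOT_PKG": "1", "TQDM_DISABLE": "True"})
    return env
```

To launch the bot with that environment, pass `run_env(load_dotenv_file(".env"))` as the `env` argument of `subprocess.run([sys.executable, "-m", "achatbot.cmd.local-terminal-chat.generate_audio2audio"], env=...)`.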

🌒 Run remote http fastapi daily chat bots

- run pip install "achatbot[fastapi_daily_bot_server]" to install the dependencies for the http fastapi daily chat bot;

- run the cmd below to start the http server; see the api docs at http://0.0.0.0:4321/docs

ACHATBOT_PKG=1 python -m achatbot.cmd.http.server.fastapi_daily_bot_serve

- run a chat bot processor, e.g.

- run a daily langchain rag bot api, with ui/educator-client

[!NOTE] you need to process youtube audio and save it to a local file with pytube: run pip install "achatbot[pytube,deep_translator]" to install the dependencies, transcribe/translate the audio to text, then chunk it into a vector store and run the langchain rag bot api. Run the data process:

ACHATBOT_PKG=1 python -m achatbot.cmd.bots.rag.data_process.youtube_audio_transcribe_to_tidb

or download the processed data from the hf dataset weege007/youtube_videos, then chunk it into a vector store.

curl -XPOST "http://0.0.0.0:4321/bot_join/chat-bot/DailyLangchainRAGBot" \
  -H "Content-Type: application/json" \
  -d $'{"config":{"llm":{"model":"llama-3.1-70b-versatile","messages":[{"role":"system","content":""}],"language":"zh"},"tts":{"tag":"cartesia_tts_processor","args":{"voice_id":"eda5bbff-1ff1-4886-8ef1-4e69a77640a0","language":"zh"}},"asr":{"tag":"deepgram_asr_processor","args":{"language":"zh","model":"nova-2"}}}}' | jq .

- run a simple daily chat bot api, with ui/web-client-ui (default language: zh)

curl -XPOST "http://0.0.0.0:4321/bot_join/DailyBot" \
  -H "Content-Type: application/json" \
  -d '{}' | jq .
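The same two bot_join calls can be made from Python with only the standard library. A minimal sketch that builds the requests from the curl examples above (same URL paths, header and JSON body) without sending them; bot_join_request is a hypothetical helper, and since the response format is not documented here, the sketch does not parse it.

```python
import json
import urllib.request
from typing import Optional

BASE_URL = "http://0.0.0.0:4321"  # address of fastapi_daily_bot_serve from the step above


def bot_join_request(bot_path: str, config: Optional[dict] = None) -> urllib.request.Request:
    """Build (without sending) the POST /bot_join/<bot_path> request used by the curl examples."""
    payload = {"config": config} if config else {}
    return urllib.request.Request(
        f"{BASE_URL}/bot_join/{bot_path}",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


# Same config as the DailyLangchainRAGBot curl example.
rag_config = {
    "llm": {
        "model": "llama-3.1-70b-versatile",
        "messages": [{"role": "system", "content": ""}],
        "language": "zh",
    },
    "tts": {
        "tag": "cartesia_tts_processor",
        "args": {"voice_id": "eda5bbff-1ff1-4886-8ef1-4e69a77640a0", "language": "zh"},
    },
    "asr": {"tag": "deepgram_asr_processor", "args": {"language": "zh", "model": "nova-2"}},
}

rag_req = bot_join_request("chat-bot/DailyLangchainRAGBot", rag_config)
simple_req = bot_join_request("DailyBot")  # body is {} as in the DailyBot curl example

# To actually send one (the http server must be running):
# with urllib.request.urlopen(simple_req) as resp:
#     print(resp.status, resp.read().decode())
```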

🌓 Run remote rpc chat bot worker

- run pip install "achatbot[remote_rpc_chat_bot_be_worker]" to install the dependencies for the rpc chat bot BE worker, e.g.:

- use the default env params to run the rpc chat bot BE worker

ACHATBOT_PKG=1 RUN_OP=be TQDM_DISABLE=True \

TTS_TAG=tts_edge \

python -m achatbot.cmd.grpc.terminal-chat.generate_audio2audio > ~/.achatbot/log/be_std_out.log

- run pip install "achatbot[remote_rpc_chat_bot_fe]" to install the dependencies for the rpc chat bot FE:

ACHATBOT_PKG=1 RUN_OP=fe \

TTS_TAG=tts_edge \

python -m achatbot.cmd.grpc.terminal-chat.generate_audio2audio > ~/.achatbot/log/fe_std_out.log
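The BE and FE commands above run the same module and differ mainly in RUN_OP. A minimal sketch of how a single entry point can switch roles on that variable; run_backend and run_frontend are hypothetical placeholders, and this is not the actual code of achatbot.cmd.grpc.terminal-chat.generate_audio2audio.

```python
import os


def run_backend() -> str:
    # Hypothetical placeholder: the BE worker would load ASR/LLM/TTS and serve gRPC calls.
    return "be: serving asr -> llm -> tts"


def run_frontend() -> str:
    # Hypothetical placeholder: the FE would record audio, call the BE, and play the reply.
    return "fe: recording, sending audio, playing reply"


ROLES = {"be": run_backend, "fe": run_frontend}


def main(env=None) -> str:
    """Pick the process role from RUN_OP (case-insensitive); fail fast on anything else."""
    env = os.environ if env is None else env
    op = env.get("RUN_OP", "").lower()
    if op not in ROLES:
        raise SystemExit(f"RUN_OP must be one of {sorted(ROLES)}, got {op!r}")
    return ROLES[op]()
```

Keeping one entry point means BE and FE share the same config parsing (for example TTS_TAG, which both commands set so the FE player matches the BE's audio output).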

🌔 Run remote queue chat bot worker

- run pip install "achatbot[remote_queue_chat_bot_be_worker]" to install the dependencies for the queue chat bot worker, e.g.:

- use the default env params to run

ACHATBOT_PKG=1 REDIS_PASSWORD=$redis_pwd RUN_OP=be TQDM_DISABLE=True \
python -m achatbot.cmd.remote-queue-chat.generate_audio2audio > ~/.achatbot/log/be_std_out.log

- sense_voice (asr) -> qwen (llm) -> cosy_voice (tts)

you can log in to redislabs and create a free 30M database; set REDIS_HOST, REDIS_PORT and REDIS_PASSWORD to run, e.g.:

ACHATBOT_PKG=1 RUN_OP=be \
TQDM_DISABLE=True \
REDIS_PASSWORD=$redis_pwd \
REDIS_HOST=redis-14241.c256.us-east-1-2.ec2.redns.redis-cloud.com \
REDIS_PORT=14241 \
ASR_TAG=sense_voice_asr \
ASR_LANG=zh \
ASR_MODEL_NAME_OR_PATH=~/.achatbot/models/FunAudioLLM/SenseVoiceSmall \
N_GPU_LAYERS=33 FLASH_ATTN=1 \
LLM_MODEL_NAME=qwen \
LLM_MODEL_PATH=~/.achatbot/models/qwen1_5-7b-chat-q8_0.gguf \
TTS_TAG=tts_cosy_voice \
python -m achatbot.cmd.remote-queue-chat.generate_audio2audio > ~/.achatbot/log/be_std_out.log

- run pip install "achatbot[remote_queue_chat_bot_fe]" to install the required packages for the queue chat bot frontend, e.g.:

- use the default env params to run (default recorder: vad_recorder)

ACHATBOT_PKG=1 RUN_OP=fe \
REDIS_PASSWORD=$redis_pwd \
REDIS_HOST=redis-14241.c256.us-east-1-2.ec2.redns.redis-cloud.com \
REDIS_PORT=14241 \
python -m achatbot.cmd.remote-queue-chat.generate_audio2audio > ~/.achatbot/log/fe_std_out.log

- with wake word

ACHATBOT_PKG=1 RUN_OP=fe \
REDIS_PASSWORD=$redis_pwd \
REDIS_HOST=redis-14241.c256.us-east-1-2.ec2.redns.redis-cloud.com \
REDIS_PORT=14241 \
RECORDER_TAG=wakeword_rms_recorder \
python -m achatbot.cmd.remote-queue-chat.generate_audio2audio > ~/.achatbot/log/fe_std_out.log

- by default, the pyaudio player stream uses the TTS tag's output sample info (rate, channels, ...), e.g. when the BE uses the tts_cosy_voice output stream info:

ACHATBOT_PKG=1 RUN_OP=fe \
REDIS_PASSWORD=$redis_pwd \
REDIS_HOST=redis-14241.c256.us-east-1-2.ec2.redns.redis-cloud.com \
REDIS_PORT=14241 \
TTS_TAG=tts_cosy_voice \
python -m achatbot.cmd.remote-queue-chat.generate_audio2audio > ~/.achatbot/log/fe_std_out.log

remote_queue_chat_bot_be_worker colab examples:

- sense_voice (asr) -> qwen (llm) -> cosy_voice (tts)
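The queue BE worker and FE never talk directly; they only meet through Redis. A minimal sketch of that request/reply pattern, using in-process queue.Queue objects as stand-ins for Redis lists (a push like LPUSH, a blocking pop like BRPOP); the per-session reply queue and the (session_id, audio) message shape are assumptions, not achatbot's queue protocol.

```python
import queue
import threading


def fake_audio2audio(audio: bytes) -> bytes:
    # Placeholder for the BE chain: sense_voice (asr) -> qwen (llm) -> cosy_voice (tts).
    return b"reply-to:" + audio


def backend_worker(requests, responses):
    """BE loop: block on the shared request queue (like BRPOP) and reply on the session's queue."""
    while True:
        msg = requests.get()
        if msg is None:  # shutdown sentinel
            break
        session_id, audio = msg
        responses[session_id].put(fake_audio2audio(audio))


def frontend_send(requests, responses, session_id, audio, timeout=5.0):
    """FE: register a reply queue, push the recorded audio (like LPUSH), then wait for the reply."""
    reply_q = responses.setdefault(session_id, queue.Queue())
    requests.put((session_id, audio))
    return reply_q.get(timeout=timeout)


# Usage: one BE thread and one FE call sharing the two stand-in "Redis" structures.
requests_q = queue.Queue()
responses = {}
worker = threading.Thread(target=backend_worker, args=(requests_q, responses), daemon=True)
worker.start()
reply = frontend_send(requests_q, responses, "session-1", b"hello")
requests_q.put(None)  # stop the BE loop
worker.join(timeout=5)
```

Because the only shared state is the queue, the BE can run on a GPU host (e.g. the colab example) while the FE runs next to the microphone and speaker, which is why both commands point at the same REDIS_HOST.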

🌕 Run remote grpc tts speaker bot

- run pip install "achatbot[remote_grpc_tts_server]" to install the dependencies for the grpc tts speaker bot server;

ACHATBOT_PKG=1 python -m achatbot.cmd.grpc.speaker.server.serve

- run pip install "achatbot[remote_grpc_tts_client]" to install the dependencies for the grpc tts speaker bot client;

ACHATBOT_PKG=1 TTS_TAG=tts_edge IS_RELOAD=1 python -m achatbot.cmd.grpc.speaker.client

ACHATBOT_PKG=1 TTS_TAG=tts_g IS_RELOAD=1 python -m achatbot.cmd.grpc.speaker.client

ACHATBOT_PKG=1 TTS_TAG=tts_coqui IS_RELOAD=1 python -m achatbot.cmd.grpc.speaker.client

ACHATBOT_PKG=1 TTS_TAG=tts_chat IS_RELOAD=1 python -m achatbot.cmd.grpc.speaker.client

ACHATBOT_PKG=1 TTS_TAG=tts_cosy_voice IS_RELOAD=1 python -m achatbot.cmd.grpc.speaker.client

ACHATBOT_PKG=1 TTS_TAG=tts_fishspeech IS_RELOAD=1 python -m achatbot.cmd.grpc.speaker.client

ACHATBOT_PKG=1 TTS_TAG=tts_f5 IS_RELOAD=1 python -m achatbot.cmd.grpc.speaker.client

ACHATBOT_PKG=1 TTS_TAG=tts_openvoicev2 IS_RELOAD=1 python -m achatbot.cmd.grpc.speaker.client

ACHATBOT_PKG=1 TTS_TAG=tts_kokoro IS_RELOAD=1 python -m achatbot.cmd.grpc.speaker.client

ACHATBOT_PKG=1 TTS_TAG=tts_onnx_kokoro IS_RELOAD=1 KOKORO_ESPEAK_NG_LIB_PATH=/usr/local/lib/libespeak-ng.1.dylib KOKORO_LANGUAGE=cmn python -m achatbot.cmd.grpc.speaker.client

ACHATBOT_PKG=1 TTS_TAG=tts_cosy_voice2 \
COSY_VOICE_MODELS_DIR=./models/FunAudioLLM/CosyVoice2-0.5B \
COSY_VOICE_REFERENCE_AUDIO_PATH=./test/audio_files/asr_example_zh.wav \
IS_RELOAD=1 python -m achatbot.cmd.grpc.speaker.client
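When comparing several TTS backends against one running speaker server, the client commands above can be generated instead of typed one by one. A minimal sketch, assuming the package install (use src.cmd.grpc.speaker.client when running from source); client_invocation and try_tags are hypothetical helpers, and the extra env vars for tts_cosy_voice2 are copied from its command above.

```python
import os
import subprocess
import sys

# Assumption: installed package; switch to "src.cmd.grpc.speaker.client" when running from source.
CLIENT_MODULE = "achatbot.cmd.grpc.speaker.client"

# TTS_TAG values from the commands above that need no extra env vars.
SIMPLE_TTS_TAGS = [
    "tts_edge", "tts_g", "tts_coqui", "tts_chat", "tts_cosy_voice",
    "tts_fishspeech", "tts_f5", "tts_openvoicev2", "tts_kokoro",
]


def client_invocation(tag, extra_env=None):
    """Return (argv, env) that launch the gRPC TTS speaker client for one TTS_TAG."""
    env = {**os.environ, "ACHATBOT_PKG": "1", "TTS_TAG": tag, "IS_RELOAD": "1"}
    env.update(extra_env or {})
    return [sys.executable, "-m", CLIENT_MODULE], env


def try_tags(tags=SIMPLE_TTS_TAGS):
    """Run the client once per tag; the gRPC speaker server must already be running."""
    for tag in tags:
        argv, env = client_invocation(tag)
        result = subprocess.run(argv, env=env, check=False)
        print(f"{tag}: exit code {result.returncode}")


# tts_cosy_voice2 needs its model dir and a reference audio, as in the last command above.
cosy2_argv, cosy2_env = client_invocation("tts_cosy_voice2", {
    "COSY_VOICE_MODELS_DIR": "./models/FunAudioLLM/CosyVoice2-0.5B",
    "COSY_VOICE_REFERENCE_AUDIO_PATH": "./test/audio_files/asr_example_zh.wav",
})
```

Building an env dict and passing it to subprocess.run avoids shell quoting issues and keeps the parent process environment unchanged.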

📹 Multimodal Interaction

- stream-ocr (realtime-object-detection)

- Embodied Intelligence: Robots that touch the world, perceive and move

achatbot is released under the BSD 3 license. (Additional code in this distribution is covered by the MIT and Apache Open Source licenses.) However, you may have other legal obligations that govern your use of content, such as the terms of service for third-party models.


Alternative AI tools for achatbot

Similar Open Source Tools


llama.cpp

The main goal of llama.cpp is to enable LLM inference with minimal setup and state-of-the-art performance on a wide range of hardware - locally and in the cloud. It provides a Plain C/C++ implementation without any dependencies, optimized for Apple silicon via ARM NEON, Accelerate and Metal frameworks, and supports various architectures like AVX, AVX2, AVX512, and AMX. It offers integer quantization for faster inference, custom CUDA kernels for NVIDIA GPUs, Vulkan and SYCL backend support, and CPU+GPU hybrid inference. llama.cpp is the main playground for developing new features for the ggml library, supporting various models and providing tools and infrastructure for LLM deployment.

llama.cpp

llama.cpp is a C++ implementation of LLaMA, a large language model from Meta. It provides a command-line interface for inference and can be used for a variety of tasks, including text generation, translation, and question answering. llama.cpp is highly optimized for performance and can run on a variety of hardware, including CPUs and GPUs.

LLaMA-Factory

LLaMA Factory is a unified framework for fine-tuning 100+ large language models (LLMs) with various methods, including pre-training, supervised fine-tuning, reward modeling, PPO, DPO and ORPO. It features integrated algorithms like GaLore, BAdam, DoRA, LongLoRA, LLaMA Pro, LoRA+, LoftQ and Agent tuning, as well as practical tricks like FlashAttention-2, Unsloth, RoPE scaling, NEFTune and rsLoRA. LLaMA Factory provides experiment monitors like LlamaBoard, TensorBoard, Wandb, MLflow, etc., and supports faster inference with OpenAI-style API, Gradio UI and CLI with vLLM worker. Compared to ChatGLM's P-Tuning, LLaMA Factory's LoRA tuning offers up to 3.7 times faster training speed with a better Rouge score on the advertising text generation task. By leveraging 4-bit quantization technique, LLaMA Factory's QLoRA further improves the efficiency regarding the GPU memory.

swift

SWIFT (Scalable lightWeight Infrastructure for Fine-Tuning) supports training, inference, evaluation and deployment of nearly **200 LLMs and MLLMs** (multimodal large models). Developers can directly apply our framework to their own research and production environments to realize the complete workflow from model training and evaluation to application. In addition to supporting the lightweight training solutions provided by [PEFT](https://github.com/huggingface/peft), we also provide a complete **Adapters library** to support the latest training techniques such as NEFTune, LoRA+, LLaMA-PRO, etc. This adapter library can be used directly in your own custom workflow without our training scripts. To facilitate use by users unfamiliar with deep learning, we provide a Gradio web-ui for controlling training and inference, as well as accompanying deep learning courses and best practices for beginners. Additionally, we are expanding capabilities for other modalities. Currently, we support full-parameter training and LoRA training for AnimateDiff.

VideoLLaMA2

VideoLLaMA 2 is a project focused on advancing spatial-temporal modeling and audio understanding in video-LLMs. It provides tools for multi-choice video QA, open-ended video QA, and video captioning. The project offers model zoo with different configurations for visual encoder and language decoder. It includes training and evaluation guides, as well as inference capabilities for video and image processing. The project also features a demo setup for running a video-based Large Language Model web demonstration.

intel-extension-for-transformers

Intel® Extension for Transformers is an innovative toolkit designed to accelerate GenAI/LLM everywhere with the optimal performance of Transformer-based models on various Intel platforms, including Intel Gaudi2, Intel CPU, and Intel GPU. The toolkit provides the below key features and examples: * Seamless user experience of model compressions on Transformer-based models by extending [Hugging Face transformers](https://github.com/huggingface/transformers) APIs and leveraging [Intel® Neural Compressor](https://github.com/intel/neural-compressor) * Advanced software optimizations and unique compression-aware runtime (released with NeurIPS 2022's paper [Fast Distilbert on CPUs](https://arxiv.org/abs/2211.07715) and [QuaLA-MiniLM: a Quantized Length Adaptive MiniLM](https://arxiv.org/abs/2210.17114), and NeurIPS 2021's paper [Prune Once for All: Sparse Pre-Trained Language Models](https://arxiv.org/abs/2111.05754)) * Optimized Transformer-based model packages such as [Stable Diffusion](examples/huggingface/pytorch/text-to-image/deployment/stable_diffusion), [GPT-J-6B](examples/huggingface/pytorch/text-generation/deployment), [GPT-NEOX](examples/huggingface/pytorch/language-modeling/quantization#2-validated-model-list), [BLOOM-176B](examples/huggingface/pytorch/language-modeling/inference#BLOOM-176B), [T5](examples/huggingface/pytorch/summarization/quantization#2-validated-model-list), [Flan-T5](examples/huggingface/pytorch/summarization/quantization#2-validated-model-list), and end-to-end workflows such as [SetFit-based text classification](docs/tutorials/pytorch/text-classification/SetFit_model_compression_AGNews.ipynb) and [document level sentiment analysis (DLSA)](workflows/dlsa) * [NeuralChat](intel_extension_for_transformers/neural_chat), a customizable chatbot framework to create your own chatbot within minutes by leveraging a rich set of 
[plugins](https://github.com/intel/intel-extension-for-transformers/blob/main/intel_extension_for_transformers/neural_chat/docs/advanced_features.md) such as [Knowledge Retrieval](./intel_extension_for_transformers/neural_chat/pipeline/plugins/retrieval/README.md), [Speech Interaction](./intel_extension_for_transformers/neural_chat/pipeline/plugins/audio/README.md), [Query Caching](./intel_extension_for_transformers/neural_chat/pipeline/plugins/caching/README.md), and [Security Guardrail](./intel_extension_for_transformers/neural_chat/pipeline/plugins/security/README.md). This framework supports Intel Gaudi2/CPU/GPU. * [Inference](https://github.com/intel/neural-speed/tree/main) of Large Language Model (LLM) in pure C/C++ with weight-only quantization kernels for Intel CPU and Intel GPU (TBD), supporting [GPT-NEOX](https://github.com/intel/neural-speed/tree/main/neural_speed/models/gptneox), [LLAMA](https://github.com/intel/neural-speed/tree/main/neural_speed/models/llama), [MPT](https://github.com/intel/neural-speed/tree/main/neural_speed/models/mpt), [FALCON](https://github.com/intel/neural-speed/tree/main/neural_speed/models/falcon), [BLOOM-7B](https://github.com/intel/neural-speed/tree/main/neural_speed/models/bloom), [OPT](https://github.com/intel/neural-speed/tree/main/neural_speed/models/opt), [ChatGLM2-6B](https://github.com/intel/neural-speed/tree/main/neural_speed/models/chatglm), [GPT-J-6B](https://github.com/intel/neural-speed/tree/main/neural_speed/models/gptj), and [Dolly-v2-3B](https://github.com/intel/neural-speed/tree/main/neural_speed/models/gptneox). Support AMX, VNNI, AVX512F and AVX2 instruction set. We've boosted the performance of Intel CPUs, with a particular focus on the 4th generation Intel Xeon Scalable processor, codenamed [Sapphire Rapids](https://www.intel.com/content/www/us/en/products/docs/processors/xeon-accelerated/4th-gen-xeon-scalable-processors.html).

gitmesh

GitMesh is an AI-powered Git collaboration network designed to address contributor dropout in open source projects. It offers real-time branch-level insights, intelligent contributor-task matching, and automated workflows. The platform transforms complex codebases into clear contribution journeys, fostering engagement through gamified rewards and integration with open source support programs. GitMesh's mascot, Meshy/Mesh Wolf, symbolizes agility, resilience, and teamwork, reflecting the platform's ethos of efficiency and power through collaboration.

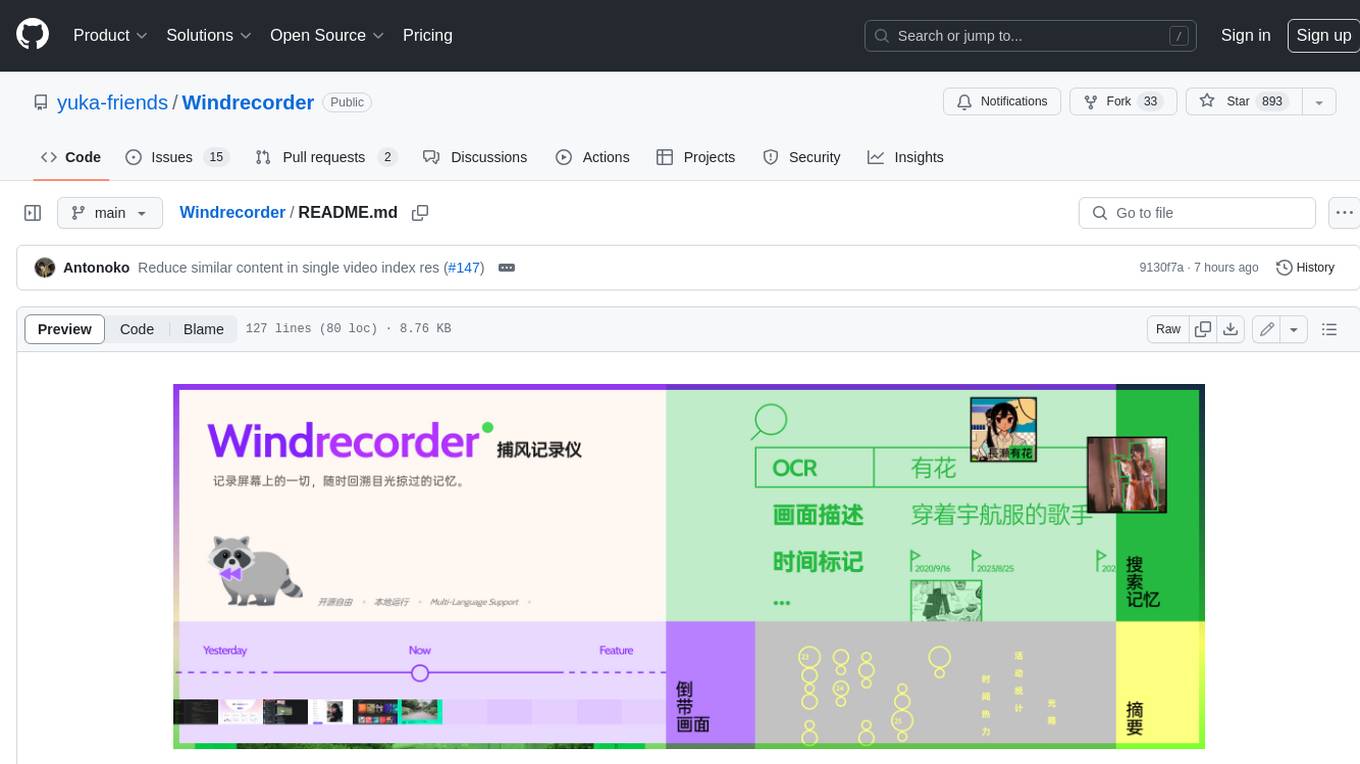

Windrecorder

Windrecorder is an open-source tool that helps you retrieve memory cues by recording everything on your screen. It can search based on OCR text or image descriptions and provides a summary of your activities. All of its capabilities run entirely locally, without the need for an internet connection or uploading any data, giving you complete ownership of your data.

UMOE-Scaling-Unified-Multimodal-LLMs

Uni-MoE is an MoE-based unified multimodal model that can handle diverse modalities including audio, speech, image, text, and video. The project focuses on scaling unified multimodal LLMs with a Mixture of Experts (MoE) framework. It offers enhanced support for training across multiple nodes and GPUs, as well as parallelism at both the expert and modality levels. Training proceeds in three stages: building connectors for multimodal understanding, developing modality-specific experts, and incorporating multiple trained experts into LLMs using the LoRA technique on mixed multimodal data. The repository provides instructions for installation, organizing weights, inference, training, and evaluation on various datasets.
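To make the Mixture of Experts idea concrete: a gate scores every expert for an input, only the top-k experts run, and their outputs are mixed by the renormalized gate weights. The sketch below is a generic top-k MoE layer in plain Python, not Uni-MoE's implementation; `top_k_route`, `moe_forward`, and the toy experts are hypothetical, and the gate weights are fixed here rather than learned.

```python
import math
from typing import Callable, List, Sequence, Tuple

Expert = Callable[[List[float]], List[float]]


def softmax(logits: Sequence[float]) -> List[float]:
    # Subtract the max for numerical stability before exponentiating.
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def top_k_route(gate_logits: Sequence[float], k: int) -> List[Tuple[int, float]]:
    """Return (expert_index, weight) for the k highest-scoring experts."""
    ranked = sorted(range(len(gate_logits)), key=lambda i: gate_logits[i], reverse=True)[:k]
    weights = softmax([gate_logits[i] for i in ranked])  # renormalize over the chosen k
    return list(zip(ranked, weights))


def moe_forward(x: List[float], gate_weights: List[List[float]],
                experts: List[Expert], k: int = 2) -> List[float]:
    # Linear gate: one score per expert (learned in a real model, fixed here).
    gate_logits = [sum(w * v for w, v in zip(row, x)) for row in gate_weights]
    out = [0.0] * len(x)
    for idx, weight in top_k_route(gate_logits, k):
        # Only the selected experts run; their outputs are mixed by gate weight.
        for j, v in enumerate(experts[idx](x)):
            out[j] += weight * v
    return out
```

With three experts and `k=2`, the lowest-scoring expert is never evaluated for that input, which is where MoE models save compute relative to a dense layer of the same total size.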

helicone

Helicone is an open-source observability platform designed for Large Language Models (LLMs). It logs requests to OpenAI in a user-friendly UI; offers caching, rate limits, and retries; tracks costs and latencies; provides a playground for iterating on prompts and chat conversations; supports collaboration; and will soon have APIs for feedback and evaluation. The platform is deployed on Cloudflare and consists of services such as Web (NextJs), Worker (Cloudflare Workers), Jawn (Express), Supabase, and ClickHouse. Users can run Helicone locally by setting up the required services and environment variables. The project welcomes contributions and provides resources for learning, documentation, and integrations.

InternLM

InternLM is a powerful language model series with features such as a 200K context window for long-context tasks; outstanding comprehensive performance in reasoning, math, code, chat experience, instruction following, and creative writing; code interpreter and data analysis capabilities; and stronger tool-use capabilities. It offers models in 7B and 20B sizes, suitable for research and for complex scenarios. The models are recommended for various applications and perform better than previous generations. InternLM models may match or surpass leading models, including other open-source models and ChatGPT. The models have been evaluated on various datasets and have shown superior performance on multiple tasks. Usage requires Python >= 3.8, PyTorch >= 1.12.0, and Transformers >= 4.34. InternLM can be used for tasks like chat, agent applications, fine-tuning, deployment, and long-context inference.

cool-admin-java

Cool-admin-java is an open-source backend permission management system with features like AI coding, flow orchestration, modularity, and plugin support. It is used to quickly build backend applications. The system offers a modern development experience, with one-click generation of everything from API interfaces to frontend pages, drag-and-drop flow orchestration, modular code for easy maintenance, and extensibility through plugins for features like payments, SMS, and email.

GraphGen

GraphGen is a framework for synthetic data generation guided by knowledge graphs. It enhances supervised fine-tuning for large language models (LLMs) by generating synthetic data based on a fine-grained knowledge graph. The tool identifies knowledge gaps in LLMs, prioritizes generating QA pairs that target high-value knowledge, incorporates multi-hop neighborhood sampling, and employs style-controlled generation to diversify QA data. After generating data, users can fine-tune LLMs with LLaMA-Factory or xtuner.
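Multi-hop neighborhood sampling, in general terms, means starting from a seed entity and collecting the nodes reachable within a fixed number of hops, so a generated QA pair can span several linked facts. The following is a generic breadth-first sketch over an adjacency dict, assuming a per-node cap on sampled neighbors; it is not GraphGen's sampler, and `multi_hop_neighborhood` and `subgraph_edges` are hypothetical names.

```python
import random
from collections import deque
from typing import Dict, List, Optional, Set, Tuple


def multi_hop_neighborhood(graph: Dict[str, List[str]], seed: str, max_hops: int,
                           max_neighbors: int = 3,
                           rng: Optional[random.Random] = None) -> Set[str]:
    """Collect nodes within max_hops of seed, sampling at most max_neighbors per expansion."""
    if rng is None:
        rng = random.Random(0)  # fixed seed keeps the sketch reproducible
    visited = {seed}
    frontier = deque([(seed, 0)])
    while frontier:
        node, depth = frontier.popleft()
        if depth == max_hops:
            continue  # do not expand past the hop limit
        candidates = [n for n in graph.get(node, []) if n not in visited]
        if len(candidates) > max_neighbors:
            candidates = rng.sample(candidates, max_neighbors)
        for n in candidates:
            visited.add(n)
            frontier.append((n, depth + 1))
    return visited


def subgraph_edges(graph: Dict[str, List[str]], nodes: Set[str]) -> List[Tuple[str, str]]:
    """Edges whose endpoints both lie in nodes, e.g. to serialize into a QA-generation prompt."""
    return [(u, v) for u in sorted(nodes) for v in graph.get(u, []) if v in nodes]
```

On a toy graph `LLM -> Transformer -> Attention -> Softmax`, a 2-hop neighborhood of `LLM` stops at `Attention`, so any question built from that subgraph links at most two facts away from the seed.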

ScaleLLM

ScaleLLM is a cutting-edge inference system engineered for large language models (LLMs), meticulously designed to meet the demands of production environments. It supports a wide range of popular open-source models, including Llama3, Gemma, Bloom, GPT-NeoX, and more. ScaleLLM is currently under active development. We are fully committed to consistently enhancing its efficiency while also incorporating additional features. Feel free to explore our **_Roadmap_** for more details.

Key Features:

* High Efficiency: Excels in high-performance LLM inference, leveraging state-of-the-art techniques and technologies like Flash Attention, Paged Attention, continuous batching, and more.
* Tensor Parallelism: Utilizes tensor parallelism for efficient model execution.
* OpenAI-compatible API: An efficient Go REST API server that is compatible with the OpenAI API.
* Hugging Face models: Seamless integration with most popular HF models, supporting safetensors.
* Customizable: Offers flexibility for customization to meet your specific needs, and provides an easy way to add new models.
* Production Ready: Engineered with production environments in mind, ScaleLLM is equipped with robust system monitoring and management features to ensure a seamless deployment experience.
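Continuous batching, one of the techniques listed above, refills a batch slot as soon as any sequence finishes instead of waiting for the whole batch to drain. The simulation below contrasts the two policies by reporting the decode step at which each request finishes. It is a simplified illustration, not ScaleLLM's scheduler: it assumes every request is queued at step 0, needs at least one token, and costs one slot per step, ignoring prefill and KV-cache memory. Both function names are hypothetical.

```python
from collections import deque
from typing import Dict, List, Tuple


def continuous_batching(requests: List[Tuple[str, int]], max_batch: int) -> Dict[str, int]:
    """requests: (request_id, tokens_to_generate) in arrival order."""
    waiting = deque(requests)
    running: Dict[str, int] = {}  # request_id -> tokens still to generate
    finished_at: Dict[str, int] = {}
    step = 0
    while waiting or running:
        # Admit waiting requests into any free slots before every decode step.
        while waiting and len(running) < max_batch:
            rid, n = waiting.popleft()
            running[rid] = n
        step += 1
        # One decode step: every running sequence emits one token.
        for rid in list(running):
            running[rid] -= 1
            if running[rid] <= 0:
                del running[rid]
                finished_at[rid] = step
    return finished_at


def static_batching(requests: List[Tuple[str, int]], max_batch: int) -> Dict[str, int]:
    """Baseline: a new batch starts only after the longest sequence in the current one ends."""
    finished_at: Dict[str, int] = {}
    step = 0
    for i in range(0, len(requests), max_batch):
        batch = requests[i:i + max_batch]
        for rid, n in batch:
            finished_at[rid] = step + n
        step += max(n for _, n in batch)
    return finished_at
```

For requests `a` (1 token), `b` (5 tokens), and `c` (2 tokens) with two slots, `c` finishes at step 3 under continuous batching, because it takes over the slot `a` frees after step 1; under static batching it must wait for `b` and finishes at step 7.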

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud-native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI, and vLLM. BricksLLM aims to provide enterprise-level infrastructure that can power any LLM production use case. Here are some use cases for BricksLLM:

* Set LLM usage limits for users on different pricing tiers
* Track LLM usage on a per-user and per-organization basis
* Block or redact requests containing PII
* Improve LLM reliability with failovers, retries, and caching
* Distribute API keys with rate limits and cost limits for internal development/production use cases
* Distribute API keys with rate limits and cost limits for students
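The per-key rate and cost limits described above can be illustrated with a small in-memory check that a gateway would run before forwarding each request. This is a hypothetical sketch, not BricksLLM's code or configuration format: `KeyLimits`, `Gateway`, and `authorize` are invented names, the rate limit uses a simple fixed window, and the caller supplies both the clock value and an estimated request cost.

```python
from dataclasses import dataclass
from typing import Dict, Tuple


@dataclass
class KeyLimits:
    max_requests_per_window: int
    window_seconds: float
    max_cost_usd: float  # lifetime budget for this key


@dataclass
class KeyState:
    window_start: float = 0.0
    requests_in_window: int = 0
    spent_usd: float = 0.0


class Gateway:
    def __init__(self) -> None:
        self.limits: Dict[str, KeyLimits] = {}
        self.state: Dict[str, KeyState] = {}

    def issue_key(self, key: str, limits: KeyLimits) -> None:
        self.limits[key] = limits
        self.state[key] = KeyState()

    def authorize(self, key: str, now: float, estimated_cost_usd: float) -> Tuple[bool, str]:
        limits = self.limits.get(key)
        if limits is None:
            return False, "unknown key"
        st = self.state[key]
        # Fixed window: reset the request counter once the window has elapsed.
        if now - st.window_start >= limits.window_seconds:
            st.window_start = now
            st.requests_in_window = 0
        if st.requests_in_window >= limits.max_requests_per_window:
            return False, "rate limit exceeded"
        if st.spent_usd + estimated_cost_usd > limits.max_cost_usd:
            return False, "cost limit exceeded"
        # Charge the estimate up front so concurrent callers cannot overshoot the budget.
        st.requests_in_window += 1
        st.spent_usd += estimated_cost_usd
        return True, "ok"
```

For a student key issued with `KeyLimits(2, 60.0, 1.0)`, a third request within the same minute is rejected as rate-limited, and once the window resets, a request that would push total spend past $1.00 is rejected as over budget. A real gateway would also reconcile the estimate against the provider's reported token usage after the response arrives.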

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.