awesome-ai-agent-papers

A curated collection of AI agent research papers released in 2026, covering agent engineering, memory, evaluation, workflows, and autonomous systems.

Stars: 87

This repository is a curated list of papers on artificial intelligence agents. It includes research papers, articles, and resources on topics such as reinforcement learning, multi-agent systems, and natural language processing. Researchers, students, and practitioners can use it as a reference for recent advances and trends in AI agent technologies.

README:

A curated collection of research papers published in 2026 and sourced from arXiv, covering core topics in the AI agent ecosystem such as multi-agent coordination, memory & RAG, tooling, evaluation & observability, and security.

Whether you're an AI engineer building agent systems, a researcher exploring new architectures, or a developer integrating LLM agents into products, these papers help you stay on top of what's actually working, what's breaking, and where the field is heading. Updated weekly from arXiv.

Hundreds of papers are published on arXiv every week, and a growing number of them touch on AI agents. We go through them all, filter the ones that are directly relevant to the AI agent ecosystem, and categorize them so you don't have to. This list only includes papers published from January 2026 onward.

- Multi-Agent (35)

- Memory & RAG (32)

- Eval & Observability (50)

- Agent Tooling (54)

- AI Agent Security (53)

| Paper | arXiv ID |
|---|---|
| DyTopo: Dynamic Topology Routing for Multi-Agent Reasoning via Semantic Matching - Investigates dynamically rewiring agent-to-agent connections at each reasoning round via semantic matching instead of fixed communication topologies. |  |
| RuleSmith: Multi-Agent LLMs for Automated Game Balancing - Explores automated game balancing by combining multi-agent LLM self-play with Bayesian optimization on a civ-style game. |  |
| CommCP: Efficient Multi-Agent Coordination via LLM-Based Communication with Conformal Prediction - Examines how conformal prediction can filter noisy inter-agent messages to improve multi-robot coordination. |  |
| AgenticPay: A Multi-Agent LLM Negotiation System for Buyer-Seller Transactions - Introduces a 110+ task benchmark to evaluate how well multi-agent LLM systems handle buyer-seller negotiation through natural language. |  |
| Gender Dynamics and Homophily in a Social Network of LLM Agents - Analyzes social network formation among 70K+ autonomous LLM agents on Chirper.ai to study emergent group behavior and bias. |  |
| ROMA: Recursive Open Meta-Agent Framework for Long-Horizon Multi-Agent Systems - Proposes breaking large tasks into subtask trees that run in parallel across multiple agents to handle long-horizon workflows without exceeding context windows. |  |
| ORCH: many analyses, one merge — a deterministic multi-agent orchestrator - Proposes a deterministic multi-agent orchestrator where multiple LLMs analyze a problem independently and a merge agent selects the best answer without any training. |  |
| H-AdminSim: A Multi-Agent Simulator for Realistic Hospital Administrative Workflows - Simulates end-to-end hospital administrative workflows with multi-agent LLMs and FHIR integration to test LLM-driven automation in healthcare settings. |  |
| Agyn: A Multi-Agent System for Team-Based Autonomous Software Engineering - Proposes a multi-agent system for autonomous software engineering that assigns specialized agents to roles like coordination, research, implementation, and review. |  |
| Multi-Agent Teams Hold Experts Back - Examines whether self-organizing LLM agent teams can match or beat their best member's performance across collaborative benchmarks. |  |
| Evolving Interpretable Constitutions for Multi-Agent Coordination - Explores using LLM-driven genetic programming to automatically discover behavioral norms for multi-agent coordination in a survival-pressure grid-world simulation. |  |
| Scaling Multiagent Systems with Process Rewards - Proposes per-action process rewards from AI feedback to improve credit assignment and sample efficiency when finetuning multi-agent LLM systems. |  |
| MonoScale: Scaling Multi-Agent System with Monotonic Improvement - Proposes a framework for safely growing multi-agent pools by generating familiarization tasks and building routing memory, with guaranteed non-decreasing performance across onboarding rounds. |  |
| Task-Aware LLM Council with Adaptive Decision Pathways for Decision Support - Proposes a task-adaptive multi-agent framework that routes control to the most suitable LLM at each decision step using semantic matching against each model's success history. |  |
| SYMPHONY: Synergistic Multi-agent Planning with Heterogeneous Language Model Assembly - Explores using a pool of different LLM agents within MCTS planning to increase rollout diversity and improve multi-step reasoning. |  |
| Learning to Recommend Multi-Agent Subgraphs from Calling Trees - Proposes a recommendation framework that uses historical calling trees to select the best agents or agent teams for each subtask in multi-agent orchestration. |  |
| Learning Decentralized LLM Collaboration with Multi-Agent Actor Critic - Investigates actor-critic reinforcement learning methods for training decentralized LLM agent collaboration across writing, coding, and game-playing tasks. |  |
| AgenticSimLaw: A Juvenile Courtroom Multi-Agent Debate Simulation for Explainable High-Stakes Tabular Decision Making - Proposes a role-structured multi-agent courtroom debate framework with defined agent roles, interaction protocols, and private reasoning strategies for auditable high-stakes decision-making. |  |
| Epistemic Context Learning: Building Trust the Right Way in LLM-Based Multi-Agent Systems - Introduces a reasoning framework that builds peer reliability profiles from interaction history so agents in multi-agent systems learn which peers to trust when uncertain. |  |
| Adaptive Confidence Gating in Multi-Agent Collaboration for Efficient and Optimized Code Generation - Explores structured multi-agent debate with three role-based agents and adaptive confidence gating to improve small language model code generation. |  |
| CASTER: Context-Aware Strategy for Task Efficient Routing in Multi-Agent Systems - Proposes a lightweight router for dynamic model selection in graph-based multi-agent systems that combines semantic embeddings with structural meta-features and self-optimizes through on-policy negative feedback. |  |
| Phase Transition for Budgeted Multi-Agent Synergy - Develops a theory for predicting when budgeted multi-agent LLM systems improve, saturate, or collapse based on context windows, communication fidelity, and shared-error correlation. |  |
| Dynamic Role Assignment for Multi-Agent Debate - Proposes a meta-debate framework that dynamically assigns roles in multi-agent systems by matching model capabilities to positions through proposal and peer review stages. |  |
| Learning to Collaborate: An Orchestrated-Decentralized Framework for Peer-to-Peer LLM Federation - Introduces orchestrated decentralized peer-to-peer LLM collaboration that uses contextual bandits to learn optimal matchmaking between heterogeneous agents via secure distillation. |  |
| Mixture-of-Models: Unifying Heterogeneous Agents via N-Way Self-Evaluating Deliberation - Explores a runtime Mixture-of-Models architecture with a dynamic expertise broker and quadratic voting consensus that enables small model ensembles to match frontier performance. |  |
| Multi-Agent Constraint Factorization Reveals Latent Invariant Solution Structure - Formalizes through operator theory why multi-agent LLM systems access invariant solutions that a single agent applying all constraints simultaneously cannot reach. |  |
| MAS-Orchestra: Understanding and Improving Multi-Agent Reasoning Through Holistic Orchestration and Controlled Benchmarks - Proposes a training-time framework that formulates multi-agent orchestration as function-calling reinforcement learning with holistic system-level reasoning and introduces MASBENCH for controlled evaluation. |  |
| MASCOT: Towards Multi-Agent Socio-Collaborative Companion Systems - Proposes a bi-level optimization framework for multi-agent companions that aligns individual personas via RLAIF and optimizes collaborative dialogue through group-level meta-policy rewards. |  |
| If You Want Coherence, Orchestrate a Team of Rivals: Multi-Agent Models of Organizational Intelligence - Explores a team-of-rivals multi-agent architecture with specialized roles and a remote code executor that separates reasoning from data execution to maintain clean context windows. |  |
| The Orchestration of Multi-Agent Systems: Architectures, Protocols, and Enterprise Adoption - Formalizes a unified architectural framework for orchestrated multi-agent systems integrating MCP for tool access and Agent2Agent protocol for peer coordination, delegation, and policy enforcement. |  |
| MARO: Learning Stronger Reasoning from Social Interaction - Proposes Multi-Agent Reward Optimization, a method that decomposes multi-agent social interaction outcomes into per-behavior learning signals to improve LLM reasoning through simulated social environments. |  |
| LSTM-MAS: A Long Short-Term Memory Inspired Multi-Agent System for Long-Context Understanding - Introduces an LSTM-inspired multi-agent architecture with worker, filter, judge, and manager agents that emulate gated memory mechanisms to control information flow for long-context understanding. |  |
| Do We Always Need Query-Level Workflows? Rethinking Agentic Workflow Generation for Multi-Agent Systems - Examines whether query-level workflow generation is always necessary in multi-agent systems and proposes a low-cost task-level framework that uses self-prediction with few-shot calibration instead of full execution. |  |
| Learning Latency-Aware Orchestration for Parallel Multi-Agent Systems - Proposes a latency-aware multi-agent orchestration framework that explicitly optimizes the critical execution path under parallel execution to reduce end-to-end latency while maintaining task performance. |  |
| TopoDIM: One-shot Topology Generation of Diverse Interaction Modes for Multi-Agent Systems - Proposes a one-shot topology generation framework with diverse interaction modes that enables decentralized agents to autonomously construct heterogeneous communication topologies without iterative coordination. |  |

| Paper | arXiv ID |
|---|---|
| BudgetMem: Learning Query-Aware Budget-Tier Routing for Runtime Agent Memory - Investigates routing agent memory queries to different processing tiers based on query difficulty to control the cost-accuracy trade-off at runtime. |  |
| Learning to Share: Selective Memory for Efficient Parallel Agentic Systems - Proposes a shared memory bank with a learned controller that decides what information is worth passing between parallel agent teams to reduce redundant work. |  |
| CompactRAG: Reducing LLM Calls and Token Overhead in Multi-Hop Question Answering - Explores converting a corpus into atomic QA pairs offline to resolve multi-hop questions with just two LLM calls regardless of hop count. |  |
| Mitigating Hallucination in Financial Retrieval-Augmented Generation via Fine-Grained Knowledge Verification - Examines breaking financial RAG answers into atomic facts and verifying each against retrieved documents using reinforcement learning rewards. |  |
| Graph-based Agent Memory: Taxonomy, Techniques, and Applications - Surveys graph-based memory architectures for agents, covering extraction, storage, retrieval, and how memory evolves over time. |  |
| AI Agent Systems for Supply Chains: Structured Decision Prompts and Memory Retrieval - Proposes a multi-agent system for inventory management that retrieves similar past decisions to adapt ordering across various supply chain scenarios. |  |
| SOPRAG: Multi-view Graph Experts Retrieval for Industrial Standard Operating Procedures - Explores replacing flat chunk-based RAG with graph experts that understand entity relationships, causality, and process flows for structured documents like SOPs. |  |
| ProcMEM: Learning Reusable Procedural Memory from Experience via Non-Parametric PPO for LLM Agents - Investigates letting agents save step-by-step procedural skills from past runs and reuse them later without retraining to reduce repeated computation. |  |
| Aggregation Queries over Unstructured Text: Benchmark and Agentic Method - Proposes an agentic method for aggregation queries over unstructured text that tries to find all matching evidence, breaking the task into disambiguation, filtering, and aggregation stages. |  |
| DIVERGE: Diversity-Enhanced RAG for Open-Ended Information Seeking - Proposes an agentic RAG framework that uses reflection and memory-based refinement to generate diverse answers for open-ended questions. |  |
| JADE: Bridging the Strategic-Operational Gap in Dynamic Agentic RAG - Proposes joint optimization of planning and execution in agentic RAG by modeling the system as a cooperative multi-agent team with shared backbone and outcome-based rewards. |  |
| ProRAG: Process-Supervised Reinforcement Learning for Retrieval-Augmented Generation - Proposes process-supervised reinforcement learning for RAG that uses MCTS-based step-level rewards to identify and fix flawed reasoning steps in multi-hop retrieval. |  |
| E-mem: Multi-agent based Episodic Context Reconstruction for LLM Agent Memory - Introduces an episodic memory framework where assistant agents maintain uncompressed memory contexts while a master agent orchestrates global planning, replacing destructive memory compression with context reconstruction. |  |
| ShardMemo: Masked MoE Routing for Sharded Agentic LLM Memory - Proposes a tiered memory service for agentic LLM systems that uses masked mixture-of-experts routing to probe only eligible memory shards under a fixed budget. |  |
| When should I search more: Adaptive Complex Query Optimization with Reinforcement Learning - Explores adaptive query optimization in RAG using reinforcement learning to dynamically decide when to split complex queries into sub-queries and fuse the retrieved results. |  |
| A2RAG: Adaptive Agentic Graph Retrieval for Cost-Aware and Reliable Reasoning - Introduces an adaptive agentic Graph-RAG framework that verifies evidence sufficiency and progressively escalates retrieval effort, mapping graph signals back to source text to handle extraction loss. |  |
| MemCtrl: Using MLLMs as Active Memory Controllers on Embodied Agents - Investigates augmenting multimodal LLMs with a trainable memory gate that decides which observations to retain, update, or discard during online embodied agent exploration. |  |
| AMA: Adaptive Memory via Multi-Agent Collaboration - Proposes a multi-agent memory framework with hierarchical granularity, adaptive query routing, consistency verification, and targeted memory refresh for long-term agent interaction. |  |
| When Iterative RAG Beats Ideal Evidence: A Diagnostic Study in Scientific Multi-hop Question Answering - Examines when iterative retrieval-reasoning loops outperform static gold-context RAG in scientific multi-hop QA, diagnosing failure modes across retrieval coverage, hypothesis drift, and stopping calibration. |  |
| Dep-Search: Learning Dependency-Aware Reasoning Traces with Persistent Memory - Introduces a dependency-aware search framework that uses GRPO reinforcement learning to teach LLMs to decompose questions with dependency relationships and store intermediate results in persistent memory. |  |
| FadeMem: Biologically-Inspired Forgetting for Efficient Agent Memory - Proposes a biologically-inspired agent memory architecture with adaptive exponential decay, LLM-guided conflict resolution, and intelligent memory fusion across a dual-layer hierarchy. |  |
| FastInsight: Fast and Insightful Retrieval via Fusion Operators for Graph RAG - Explores two fusion operators for Graph RAG that combine graph-aware reranking with semantic-topological expansion to improve retrieval accuracy and generation quality. |  |
| Less is More for RAG: Information Gain Pruning for Generator-Aligned Reranking and Evidence Selection - Proposes a generator-aligned reranking and pruning module for RAG that selects evidence using utility signals and filters weak or harmful passages before context truncation. |  |
| DeepEra: A Deep Evidence Reranking Agent for Scientific Retrieval-Augmented Generated Question Answering - Introduces a step-by-step reasoning reranking agent for RAG that distinguishes semantically similar but logically irrelevant passages in retrieval-augmented question answering. |  |
| SPARC-RAG: Adaptive Sequential-Parallel Scaling with Context Management for Retrieval-Augmented Generation - Introduces a multi-agent RAG framework that coordinates sequential and parallel inference-time scaling under unified context management to prevent contamination and improve multi-hop reasoning. |  |
| Incorporating Q&A Nuggets into Retrieval-Augmented Generation - Proposes a nugget-augmented generation system that constructs a bank of Q&A nuggets from retrieved documents to guide extraction, selection, and report generation with citation provenance. |  |
| Augmenting Question Answering with A Hybrid RAG Approach - Introduces a hybrid RAG architecture combining query augmentation, agentic routing, and structured retrieval that merges vector and graph-based techniques with context unification for question answering. |  |
| Utilizing Metadata for Better Retrieval-Augmented Generation - Presents a systematic study of metadata-aware retrieval strategies for RAG, comparing prefix, suffix, unified embedding, and late-fusion approaches with field-level ablations on embedding space structure. |  |
| Deep GraphRAG: A Balanced Approach to Hierarchical Retrieval and Adaptive Integration - Proposes a hierarchical global-to-local retrieval strategy for GraphRAG with beam search-optimized re-ranking and a compact LLM integration module trained via dynamic-weighting reinforcement learning. |  |
| Grounding Agent Memory in Contextual Intent - Introduces an agentic memory system that indexes trajectory steps with structured contextual intent cues and retrieves history by intent compatibility to reduce interference in long-horizon goal-oriented interactions. |  |
| Structure and Diversity Aware Context Bubble Construction for Enterprise Retrieval Augmented Systems - Proposes a structure-informed and diversity-constrained context bubble construction framework for RAG that preserves document structure and balances relevance, coverage, and redundancy under strict token budgets. |  |
| Topo-RAG: Topology-aware retrieval for hybrid text-table documents - Introduces a dual-architecture RAG framework that routes narrative through dense retrievers and tabular data through a cell-aware late interaction mechanism to preserve spatial relationships in hybrid documents. |  |

| Paper | arXiv ID |
|---|---|
| From Features to Actions: Explainability in Traditional and Agentic AI Systems - Examines why standard feature-attribution explanations fail for debugging agent failures and proposes trace-based diagnostics for multi-step agent runs. |  |
| Agentic Uncertainty Reveals Agentic Overconfidence - Investigates whether agents can accurately predict their own success rates in agentic tasks. |  |
| AIRS-Bench: a Suite of Tasks for Frontier AI Research Science Agents - Introduces 20 research tasks from real ML papers covering idea generation, experiments, and refinement for benchmarking science agents. |  |
| JADE: Expert-Grounded Dynamic Evaluation for Open-Ended Professional Tasks - Proposes evaluating agent outputs by decomposing responses into individual claims and checking each against expert knowledge. |  |
| Completing Missing Annotation: Multi-Agent Debate for Accurate Relevant Assessment - Explores using multi-agent debate to fill missing labels in information retrieval benchmarks. |  |
| TrajAD: Trajectory Anomaly Detection for Trustworthy LLM Agents - Proposes a specialized verifier that detects and locates errors in agent execution trajectories at runtime to enable precise rollback-and-retry. |  |
| Emulating Aggregate Human Choice Behavior and Biases with GPT Conversational Agents - Examines whether GPT-4/5 agents can reproduce aggregate human cognitive biases in interactive decision-making scenarios. |  |
| Capture the Flags: Family-Based Evaluation of Agentic LLMs - Proposes generating families of equivalent CTF challenges through code transformations to test whether agents truly understand exploits or just memorize patterns. |  |
| PieArena: Frontier Language Agents Achieve MBA-Level Negotiation - Introduces a negotiation benchmark where frontier LLM agents are evaluated against MBA students to reveal cross-model differences in deception, accuracy, and trustworthiness. |  |
| ES-MemEval: Benchmarking Conversational Agents on Personalized Long-Term Emotional Support - Benchmarks how well conversational agents retain and use personal information over long emotional support conversations. |  |
| HumanStudy-Bench: Towards AI Agent Design for Participant Simulation - Introduces a benchmark that replays published human-subject experiments with LLM agents to test how well they simulate real participants. |  |
| Benchmarking Agents in Insurance Underwriting Environments - Proposes an expert-designed multi-turn insurance underwriting benchmark to evaluate agent performance under real-world enterprise conditions with noisy tools and proprietary knowledge. |  |
| TriCEGAR: A Trace-Driven Abstraction Mechanism for Agentic AI - Proposes automated state abstraction from agent execution traces using predicate trees and counterexample refinement for probabilistic runtime verification of agent behavior. |  |
| Sifting the Noise: A Comparative Study of LLM Agents in Vulnerability False Positive Filtering - Compares three LLM agent frameworks (Aider, OpenHands, SWE-agent) on vulnerability false positive filtering to study how agent design and backbone model affect triage performance. |  |
| Why Are AI Agent Involved Pull Requests (Fix-Related) Remain Unmerged? An Empirical Study - Analyzes 8,106 fix-related pull requests from five AI coding agents to catalog the reasons agent-generated contributions are closed without merging. |  |
| JAF: Judge Agent Forest - Proposes a judge agent framework that evaluates query-response pairs jointly across a cohort rather than in isolation, using in-context neighborhoods for cross-instance pattern detection. |  |
| Stalled, Biased, and Confused: Uncovering Reasoning Failures in LLMs for Cloud-Based Root Cause Analysis - Evaluates LLM reasoning under ReAct and Plan-and-Execute agentic workflows across 48,000 simulated failure scenarios, producing a taxonomy of 16 common reasoning failures. |  |
| CAR-bench: Evaluating the Consistency and Limit-Awareness of LLM Agents under Real-World Uncertainty - Introduces a benchmark for evaluating LLM agent consistency, uncertainty handling, and capability awareness in multi-turn tool-using scenarios with incomplete or ambiguous user requests. |  |
| More Code, Less Reuse: Investigating Code Quality and Reviewer Sentiment towards AI-generated Pull Requests - Examines code quality, maintainability, and reviewer sentiment toward AI-agent-generated pull requests compared to human-authored contributions. |  |
| The Quiet Contributions: Insights into AI-Generated Silent Pull Requests - Analyzes silent (no-comment) AI-generated pull requests to examine their impact on code complexity, quality issues, and security vulnerabilities. |  |
| Agent Benchmarks Fail Public Sector Requirements - Analyzes over 1,300 agent benchmarks against public-sector requirements including process-based evaluation, realism, and domain-specific metrics. |  |
| Interpreting Emergent Extreme Events in Multi-Agent Systems - Applies Shapley values to attribute emergent extreme events in LLM multi-agent systems to specific agent actions across time, agent, and behavior dimensions. |  |
| Who Writes the Docs in SE 3.0? Agent vs. Human Documentation Pull Requests - Analyzes AI agent contributions to documentation pull requests and examines how human developers review and intervene in agent-authored documentation changes. |  |
| Are We All Using Agents the Same Way? An Empirical Study of Core and Peripheral Developers Use of Coding Agents - Examines how core and peripheral developers differ in their use, review, modification, and verification of coding-agent-generated pull requests. |  |
| DevOps-Gym: Benchmarking AI Agents in Software DevOps Cycle - Introduces an end-to-end benchmark with 700+ real-world tasks across build, monitoring, issue resolving, and test generation for evaluating AI agents in full software DevOps workflows. |  |
| Toward Architecture-Aware Evaluation Metrics for LLM Agents - Proposes an architecture-informed evaluation approach that links agent components like planners, memory, and tool routers to observable behaviors and diagnostic metrics. |  |
| Balancing Sustainability And Performance: The Role Of Small-Scale LLMs In Agentic AI Systems - Investigates whether smaller-scale language models can reduce energy consumption in multi-agent agentic AI systems without compromising task quality. |  |
| Understanding Dominant Themes in Reviewing Agentic AI-authored Code - Analyzes 19,450 inline review comments on agent-authored pull requests and derives a taxonomy of 12 review themes to understand how reviewers respond to AI-generated code. |  |
| Let's Make Every Pull Request Meaningful: An Empirical Analysis of Developer and Agentic Pull Requests - Analyzes 40,214 developer and agentic pull requests to compare merge outcomes and identify how submitter attributes and review features differ between human and AI agent contributions. |  |
| Automated Structural Testing of LLM-Based Agents: Methods, Framework, and Case Studies - Presents structural testing methods for LLM-based agents using OpenTelemetry traces, mocking for reproducible behavior, and automated assertions for component-level verification. |  |
| When AI Agents Touch CI/CD Configurations: Frequency and Success - Analyzes how five AI coding agents interact with CI/CD configurations across 8,031 pull requests, examining modification frequency, merge rates, and build success. |  |
| Fingerprinting AI Coding Agents on GitHub - Identifies behavioral signatures of five AI coding agents from 33,580 pull requests using commit, PR structure, and code features for agent attribution. |  |
| Interpreting Agentic Systems: Beyond Model Explanations to System-Level Accountability - Assesses existing interpretability methods for agentic systems and identifies gaps in explaining temporal dynamics, compounding decisions, and context-dependent behaviors. |  |
| AI builds, We Analyze: An Empirical Study of AI-Generated Build Code Quality - Investigates maintainability and security-related build code smells in AI-agent-generated pull requests across 364 identified quality issues. |  |
| Will It Survive? Deciphering the Fate of AI-Generated Code in Open Source - Examines long-term survival of AI-agent-generated code through survival analysis of 200,000+ code units across 201 open-source projects. |  |
| LUMINA: Long-horizon Understanding for Multi-turn Interactive Agents - Develops an oracle counterfactual framework for multi-turn agentic tasks that measures the criticality of individual capabilities like planning and state tracking. |  |
| When Agents Fail to Act: A Diagnostic Framework for Tool Invocation Reliability in Multi-Agent LLM Systems - Presents a 12-category error taxonomy and diagnostic framework for evaluating tool-use reliability across open-weight and proprietary LLMs in multi-agent systems on edge hardware. |  |
| Agentic Confidence Calibration - Introduces the problem of agentic confidence calibration and proposes Holistic Trajectory Calibration, extracting process-level features across an agent's entire trajectory to diagnose failures. |  |
| Improving Methodologies for Agentic Evaluations Across Domains: Leakage of Sensitive Information, Fraud and Cybersecurity Threats - Examines methodological challenges in evaluating AI agents across sensitive information leakage, fraud, and cybersecurity threats through a multi-national collaborative benchmarking exercise. |  |
| MiRAGE: A Multiagent Framework for Generating Multimodal Multihop Question-Answer Dataset for RAG Evaluation - Introduces a multi-agent framework that generates verified, domain-specific, multimodal, multi-hop question-answer datasets for benchmarking retrieval-augmented generation systems. |  |
| When Agents Fail: A Comprehensive Study of Bugs in LLM Agents with Automated Labeling - Analyzes 1,187 bug reports from LLM agent software across seven frameworks to categorize bug types, root causes, and effects, and tests automated bug labeling with a ReAct agent. |  |
| The Why Behind the Action: Unveiling Internal Drivers via Agentic Attribution - Proposes a hierarchical framework for general agentic attribution that identifies internal factors driving agent actions through temporal likelihood dynamics and perturbation-based analysis. |  |
| Tokenomics: Quantifying Where Tokens Are Used in Agentic Software Engineering - Analyzes token consumption patterns across software development lifecycle stages in a multi-agent system to identify where tokens are consumed and which stages drive cost. |  |
| APEX-Agents - Introduces a benchmark of 480 long-horizon, cross-application productivity tasks created by investment banking analysts, consultants, and lawyers for evaluating AI agent capabilities in realistic work environments. |  |
| CooperBench: Why Coding Agents Cannot be Your Teammates Yet - Introduces a benchmark of 600+ collaborative coding tasks to evaluate whether coding agents can coordinate as effective teammates, revealing a curse of coordination where collaboration reduces success. |  |
| Insider Knowledge: How Much Can RAG Systems Gain from Evaluation Secrets? - Investigates how RAG systems can game nugget-based LLM judge evaluations through metric overfitting, demonstrating near-perfect scores when evaluation elements are leaked or predictable. |  |
| Replayable Financial Agents: A Determinism-Faithfulness Assurance Harness for Tool-Using LLM Agents - Introduces the Determinism-Faithfulness Assurance Harness for measuring trajectory determinism and evidence-conditioned faithfulness in tool-using LLM agents across 74 configurations and 12 models. |  |
| AEMA: Verifiable Evaluation Framework for Trustworthy and Controlled Agentic LLM Systems - Presents a process-aware and auditable multi-agent evaluation framework that plans, executes, and aggregates multi-step evaluations across heterogeneous agentic workflows under human oversight. |  |
| Terminal-Bench: Benchmarking Agents on Hard, Realistic Tasks in Command Line Interfaces - Introduces a curated benchmark of 89 hard tasks in computer terminal environments with unique environments, human-written solutions, and comprehensive tests for evaluating frontier agent capabilities. |  |
| ATOD: An Evaluation Framework and Benchmark for Agentic Task-Oriented Dialogue Systems - Introduces a benchmark and evaluation framework for agentic task-oriented dialogue systems covering multi-goal coordination, dependency management, memory, adaptability, and proactivity. |  |

| Paper | arXiv ID |
|---|---|
| TraceCoder: A Trace-Driven Multi-Agent Framework for Automated Debugging - Proposes a multi-agent observe-analyze-repair loop that uses runtime traces to find and fix bugs in LLM-generated code. |  |
| Generative Ontology: When Structured Knowledge Learns to Create - Explores constraining LLM generation with executable schemas and multi-agent roles to produce structurally valid yet creative outputs. |  |
| Structured Context Engineering for File-Native Agentic Systems - Tests how context format (YAML, JSON, Markdown) affects agent accuracy across 9,649 experiments in file-native agentic systems. |  |
| ProAct: Agentic Lookahead in Interactive Environments - Explores training agents to think ahead by distilling environment search into causal reasoning chains in interactive environments. |  |
| Autonomous Question Formation for Large Language Model-Driven AI Systems - Investigates teaching agents to ask themselves the right questions before acting to adapt to new situations autonomously. |  |
| From Perception to Action: Spatial AI Agents and World Models - Surveys the connection between agentic architectures and spatial tasks like robotics and navigation, covering memory, planning, and world models in embodied agents. |  |
| World Models as an Intermediary between Agents and the Real World - Argues for using world models as a bridge between agents and high-cost real-world environments to provide richer learning signals across domains like robotics and ML engineering. |  |
| Engineering AI Agents for Clinical Workflows: A Case Study in Architecture, MLOps, and Governance - Presents a reference architecture for production AI agents integrating Clean Architecture, event-driven design, per-agent MLOps lifecycles, and human-in-the-loop governance. |  |
| Autonomous Data Processing using Meta-Agents - Proposes a meta-agent framework that builds, runs, and keeps refining data processing pipelines through hierarchical agent orchestration. |  |
| MEnvAgent: Scalable Polyglot Environment Construction for Verifiable Software Engineering - Proposes a multi-agent framework for automatically building executable test environments across ten programming languages using planning-execution-verification with environment reuse. |  |

| Learning with Challenges: Adaptive Difficulty-Aware Data Generation for Mobile GUI Agent Training - Proposes an adaptive data generation framework for training mobile GUI agents that matches task difficulty to the agent's current capability level. |  |

| AutoRefine: From Trajectories to Reusable Expertise for Continual LLM Agent Refinement - Proposes extracting dual-form reusable expertise from agent execution histories — specialized subagents for procedural tasks and skill patterns for static knowledge — with continuous pruning and merging. |  |

| ToolTok: Tool Tokenization for Efficient and Generalizable GUI Agents - Proposes modeling GUI agent operations as sequences of learnable tool tokens with semantic anchoring and curriculum-based training instead of coordinate-based visual grounding. |  |

| From Self-Evolving Synthetic Data to Verifiable-Reward RL: Post-Training Multi-turn Interactive Tool-Using Agents - Proposes a framework combining a self-evolving multi-agent data engine with verifier-based reinforcement learning to train multi-turn interactive tool-using agents. |  |

| Why Reasoning Fails to Plan: A Planning-Centric Analysis of Long-Horizon Decision Making in LLM Agents - Analyzes why step-wise reasoning in LLM agents fails at long-horizon planning and proposes future-aware lookahead with reward estimation to let early actions account for delayed outcomes. |  |

| SWE-Replay: Efficient Test-Time Scaling for Software Engineering Agents - Proposes a test-time scaling method for software engineering agents that recycles prior trajectories and branches at critical intermediate steps instead of resampling from scratch. |  |

| Optimizing Agentic Workflows using Meta-tools - Proposes bundling recurring sequences of agent tool calls into deterministic meta-tools to skip unnecessary intermediate LLM reasoning steps and cut failures. |  |

| astra-langchain4j: Experiences Combining LLMs and Agent Programming - Explores integrating LLM capabilities into the ASTRA agent programming language to study how traditional agent toolkits and modern LLM-based agentic platforms can inform each other. |  |

| Meta Context Engineering via Agentic Skill Evolution - Introduces a bi-level framework where a meta-agent evolves context engineering skills via agentic crossover while a base agent executes them to optimize context as files and code. |  |

| DataCross: A Unified Benchmark and Agent Framework for Cross-Modal Heterogeneous Data Analysis - Proposes a multi-agent framework and benchmark for cross-modal data analysis that coordinates specialized sub-agents via a divide-and-conquer workflow across structured and unstructured data sources. |  |

| CovAgent: Overcoming the 30% Curse of Mobile Application Coverage with Agentic AI and Dynamic Instrumentation - Explores agentic AI for Android app testing that uses code inspection and dynamic instrumentation to reach activities that standard GUI fuzzers cannot access. |  |

| CUA-Skill: Develop Skills for Computer Using Agent - Introduces a large-scale computer-using agent skill library with parameterized execution, composition graphs, dynamic retrieval, and memory-aware failure recovery for desktop applications. |  |

| Textual Equilibrium Propagation for Deep Compound AI Systems - Explores local equilibrium propagation as a replacement for global textual backpropagation when optimizing deep compound AI systems, avoiding signal degradation in long-horizon agentic workflows. |  |

| Should I Have Expressed a Different Intent? Counterfactual Generation for LLM-Based Autonomous Control - Investigates counterfactual reasoning in agentic LLM control scenarios using structural causal models and conformal prediction for formal reliability guarantees. |  |

| Insight Agents: An LLM-Based Multi-Agent System for Data Insights - Introduces a hierarchical multi-agent system with out-of-domain detection and BERT-based agent routing for delivering personalized data insights at production scale. |  |

| Agentic Design Patterns: A System-Theoretic Framework - Introduces a system-theoretic framework that decomposes agentic AI into five functional subsystems and derives 12 reusable design patterns for building robust agent architectures. |  |

| A Practical Guide to Agentic AI Transition in Organizations - Explores a pragmatic framework for transitioning organizational processes to agentic AI, covering domain-driven use case identification, task delegation, and human-in-the-loop operating models. |  |

| JitRL: Just-In-Time Reinforcement Learning for Continual Learning in LLM Agents Without Gradient Updates - Proposes a training-free continual learning framework for LLM agents that retrieves relevant past experiences and modulates output logits at test time without gradient updates. |  |

| Think-Augmented Function Calling: Improving LLM Parameter Accuracy Through Embedded Reasoning - Proposes embedding explicit reasoning at both function and parameter levels during agent tool calls, with dynamic complexity scoring to trigger granular justification for critical decisions. |  |

| Paying Less Generalization Tax: A Cross-Domain Generalization Study of RL Training for LLM Agents - Investigates which RL training environment properties and modeling choices most influence cross-domain generalization for LLM agents deployed beyond their training domains. |  |

| Think Locally, Explain Globally: Graph-Guided LLM Investigations via Local Reasoning and Belief Propagation - Proposes disaggregating LLM investigation into bounded local evidence mining with deterministic graph traversal and belief propagation for reliable open-ended agent reasoning. |  |

| AI Agent for Reverse-Engineering Legacy Finite-Difference Code - Presents a LangGraph-based AI agent framework combining GraphRAG, multi-stage retrieval, and RL-inspired adaptive feedback for reverse-engineering legacy scientific code. |  |

| PatchIsland: Orchestration of LLM Agents for Continuous Vulnerability Repair - Proposes a continuous vulnerability repair system that orchestrates a diverse LLM agent ensemble with two-phase deduplication for integration with continuous fuzzing pipelines. |  |

| DALIA: Towards a Declarative Agentic Layer for Intelligent Agents in MCP-Based Server Ecosystems - Introduces a declarative architectural layer for agentic workflows with formalized capabilities, a declarative discovery protocol, and deterministic task graph construction. |  |

| SWE-Pruner: Self-Adaptive Context Pruning for Coding Agents - Presents a task-aware context pruning framework for coding agents that trains a lightweight neural skimmer to selectively retain relevant code lines based on explicit goals. |  |

| REprompt: Prompt Generation for Intelligent Software Development Guided by Requirements Engineering - Proposes a multi-agent prompt optimization framework guided by requirements engineering principles for system and user prompts in agent-based software development. |  |

| EvoConfig: Self-Evolving Multi-Agent Systems for Efficient Autonomous Environment Configuration - Introduces a self-evolving multi-agent framework for automated environment configuration with expert diagnosis and dynamic error-fixing priority adjustment. |  |

| SemanticALLI: Caching Reasoning, Not Just Responses, in Agentic Systems - Proposes a pipeline-aware caching architecture for agentic systems that elevates structured intermediate reasoning representations to first-class cacheable artifacts to reduce redundant LLM calls. |  |

| Controlling Long-Horizon Behavior in Language Model Agents with Explicit State Dynamics - Investigates imposing explicit dynamical structure on an external affective state to induce temporal coherence and controlled recovery in multi-turn dialogue agents. |  |

| Agentic Uncertainty Quantification - Proposes a Dual-Process framework that transforms verbalized uncertainty into bi-directional control signals for agent memory and reflection to prevent cascading hallucination errors. |  |

| Agentic AI Governance and Lifecycle Management in Healthcare - Presents a Unified Agent Lifecycle Management blueprint with five control-plane layers for governing agent fleets including identity registry, orchestration, and runtime policy enforcement. |  |

| Autonomous Business System via Neuro-symbolic AI - Introduces a neuro-symbolic architecture that integrates LLM agents with predicate-logic programming and knowledge graphs to orchestrate end-to-end business initiatives through task-specific logic programs. |  |

| How to Build AI Agents by Augmenting LLMs with Codified Human Expert Domain Knowledge? A Software Engineering Framework - Proposes a software engineering framework for capturing and embedding codified human domain knowledge into LLM-based agents through request classification, RAG, and expert rule integration. |  |

| Agent Identity URI Scheme: Topology-Independent Naming and Capability-Based Discovery for Multi-Agent Systems - Defines the agent:// URI scheme that decouples agent identity from network location through trust roots, hierarchical capability paths, and cryptographic attestation for multi-agent discovery. |  |

| Toward Efficient Agents: Memory, Tool learning, and Planning - Surveys efficiency in agent systems across memory, tool learning, and planning, comparing approaches under fixed cost budgets and analyzing the Pareto frontier between effectiveness and cost. |  |

| Toward self-coding information systems - Proposes self-coding information systems that use agentic AI to dynamically generate, test, and redeploy their own source code at runtime to reduce feature delivery time. |  |

| A Lightweight Modular Framework for Constructing Autonomous Agents Driven by Large Language Models: Design, Implementation, and Applications in AgentForge - Presents a lightweight open-source Python framework for building LLM-driven agents with composable skill abstractions, a unified LLM backend interface, and declarative YAML-based configuration. |  |

| MagicGUI-RMS: A Multi-Agent Reward Model System for Self-Evolving GUI Agents via Automated Feedback Reflux - Introduces a multi-agent reward model system for GUI agents that combines domain-specific and general-purpose reward models with automated data reflux for self-evolving agent training. |  |

| Agentic AI Meets Edge Computing in Autonomous UAV Swarms - Investigates three deployment architectures for integrating LLM-based agentic AI with edge computing in UAV swarms, covering standalone, edge-enabled, and edge-cloud hybrid configurations. |  |

| Agentic Artificial Intelligence (AI): Architectures, Taxonomies, and Evaluation of Large Language Model Agents - Proposes a unified taxonomy decomposing AI agents into Perception, Brain, Planning, Action, Tool Use, and Collaboration subsystems, covering MCP, native computer use, and evaluation practices. |  |

| Agentic Reasoning for Large Language Models - Surveys agentic reasoning across foundational, self-evolving, and collective multi-agent dimensions, distinguishing in-context reasoning from post-training approaches across planning, tool use, and coordination. |  |

| POLARIS: Typed Planning and Governed Execution for Agentic AI in Back-Office Automation - Introduces a governed orchestration framework that treats agentic automation as typed plan synthesis with DAG-based planning, rubric-guided selection, validator-gated execution, and compiled policy guardrails. |  |

| From Everything-is-a-File to Files-Are-All-You-Need: How Unix Philosophy Informs the Design of Agentic AI Systems - Explores how the Unix 'everything is a file' principle informs agentic AI design through file-like abstractions and code-based specifications for composable, auditable agent interfaces. |  |

| Towards AGI A Pragmatic Approach Towards Self Evolving Agent - Introduces a hierarchical self-evolving multi-agent framework that integrates curriculum learning, reward-based learning, and genetic algorithm evolution for continuous autonomous capability expansion. |  |

| Paper | arXiv ID |
|---|---|

| Confundo: Learning to Generate Robust Poison for Practical RAG Systems - Trains an LLM to generate RAG poison that survives real-world content processing and query variation for stress-testing RAG defenses. |  |

| Malicious Agent Skills in the Wild: A Large-Scale Security Empirical Study - Analyzes 98K agent skills from community registries to study the prevalence and nature of malicious third-party agent plugins. |  |

| Subgraph Reconstruction Attacks on Graph RAG Deployments with Practical Defenses - Investigates whether attackers can reconstruct knowledge graphs from Graph RAG outputs through multi-turn probing. |  |

| Zero-Trust Runtime Verification for Agentic Payment Protocols - Proposes consume-once mandate semantics for AI agent payment protocols to prevent replay and redirect attacks in autonomous transactions. |  |

| Identifying Adversary Tactics and Techniques in Malware Binaries with an LLM Agent - Explores using an LLM agent to identify attack techniques in stripped malware binaries through incremental context retrieval. |  |

| Agent2Agent Threats in Safety-Critical LLM Assistants: A Human-Centric Taxonomy - Maps attack paths in agent-to-agent communication protocols for automotive LLM assistants, from driver distraction to unauthorized vehicle control. |  |

| Learning to Inject: Automated Prompt Injection via Reinforcement Learning - Explores using reinforcement learning to auto-generate prompt injection attacks that transfer across multiple frontier LLMs. |  |

| A Dual-Loop Agent Framework for Automated Vulnerability Reproduction - Proposes an LLM agent with dual feedback loops for strategy and code to automate vulnerability reproduction from CVE descriptions. |  |

| Human Society-Inspired Approaches to Agentic AI Security: The 4C Framework - Organizes agentic security risks into four layers (Core, Connection, Cognition, Compliance) to address trust and governance issues beyond prompt injection. |  |

| MAGIC: A Co-Evolving Attacker-Defender Adversarial Game for Robust LLM Safety - Proposes a co-evolving RL game between an attacker and defender agent to stress-test safety alignment against novel attack patterns. |  |

| TxRay: Agentic Postmortem of Live Blockchain Attacks - Introduces an LLM agentic system that reconstructs blockchain exploit lifecycles from limited evidence and generates runnable proof-of-concept reproductions. |  |

| To Defend Against Cyber Attacks, We Must Teach AI Agents to Hack - Argues that AI-agent-driven cyber attacks are inevitable and proposes building frontier offensive AI capabilities responsibly as essential defensive infrastructure. |  |

| SMCP: Secure Model Context Protocol - Proposes protocol-level security improvements for the Model Context Protocol including unified identity management, mutual authentication, and fine-grained policy enforcement. |  |

| Persuasion Propagation in LLM Agents - Investigates how user persuasion during conversation can carry over and change how autonomous AI agents perform later tasks. |  |

| When Agents "Misremember" Collectively: Exploring the Mandela Effect in LLM-based Multi-Agent Systems - Explores how collective false memories form in LLM-based multi-agent systems and proposes defenses including cognitive anchoring and alignment-based approaches. |  |

| "Someone Hid It": Query-Agnostic Black-Box Attacks on LLM-Based Retrieval - Proposes a black-box attack method that generates transferable adversarial tokens to manipulate LLM-based retrieval systems without needing access to the target's queries or model. |  |

| From Similarity to Vulnerability: Key Collision Attack on LLM Semantic Caching - Introduces CacheAttack, a black-box framework that exploits the trade-off between locality and collision resistance in semantic caching to hijack LLM responses and manipulate agent behavior. |  |

| TessPay: Verify-then-Pay Infrastructure for Trusted Agentic Commerce - Proposes a verify-then-pay infrastructure for agent transactions that locks funds in escrow, requires cryptographic proof of task execution, and releases payment only after verification. |  |

| Whispers of Wealth: Red-Teaming Google's Agent Payments Protocol via Prompt Injection - Red-teams Google's Agent Payments Protocol via prompt injection attacks that manipulate product ranking and extract sensitive user data in agent-led purchase flows. |  |

| StepShield: When, Not Whether to Intervene on Rogue Agents - Introduces a benchmark for evaluating when agent violations are detected during execution, rather than just whether they are detected, with temporal metrics for early intervention and tokens saved. |  |

| Delegation Without Living Governance - Argues that static compliance-based governance fails for agentic AI at machine speed and proposes runtime governance as a path to preserving human relevance in agent-driven decision-making. |  |

| DRAINCODE: Stealthy Energy Consumption Attacks on Retrieval-Augmented Code Generation via Context Poisoning - Introduces an adversarial attack that poisons retrieval contexts in RAG-based code generation to force longer outputs, increasing GPU latency and energy consumption. |  |

| Securing AI Agents in Cyber-Physical Systems: A Survey of Environmental Interactions, Deepfake Threats, and Defenses - Surveys security threats targeting AI agents in cyber-physical systems, covering deepfake attacks, MCP-mediated vulnerabilities, and defense-in-depth architectures. |  |

| Multimodal Multi-Agent Ransomware Analysis Using AutoGen - Explores AutoGen-based multi-agent coordination with specialized agents for static, dynamic, and network-level ransomware family classification using confidence-aware decisions. |  |

| SHIELD: An Auto-Healing Agentic Defense Framework for LLM Resource Exhaustion Attacks - Introduces a multi-agent auto-healing defense framework with semantic similarity retrieval, pattern matching, and an evolving knowledge base for defending LLMs against resource exhaustion attacks. |  |

| AgenticSCR: An Autonomous Agentic Secure Code Review for Immature Vulnerabilities Detection - Explores agentic AI for pre-commit secure code review that uses autonomous decision-making, tool invocation, and security-focused semantic memories to detect immature vulnerabilities. |  |

| AgentDoG: A Diagnostic Guardrail Framework for AI Agent Safety and Security - Introduces a three-dimensional taxonomy for agentic risks and a diagnostic guardrail framework that monitors agent trajectories with fine-grained root cause analysis beyond binary safety labels. |  |

| When Personalization Legitimizes Risks: Uncovering Safety Vulnerabilities in Personalized Dialogue Agents - Examines how benign personal memories in personalized agents bias intent inference and cause models to legitimize harmful queries, revealing a previously unexplored safety failure mode. |  |

| Multi-Agent Collaborative Intrusion Detection for LAE-IoT - Proposes a multi-agent collaborative framework with specialized LLM-enhanced agents for intelligent data processing and adaptive intrusion classification in aerial IoT networks. |  |

| Faramesh: A Protocol-Agnostic Execution Control Plane for Autonomous Agent Systems - Introduces a protocol-agnostic execution control plane for autonomous agents that enforces authorization boundaries with canonical action representation and deterministic policy evaluation. |  |

| A Systemic Evaluation of Multimodal RAG Privacy - Examines privacy risks in multimodal RAG pipelines through inclusion inference and metadata leakage attacks during standard model prompting. |  |

| Breaking the Protocol: Security Analysis of the Model Context Protocol Specification - Presents the first security analysis of the Model Context Protocol specification, identifying three protocol-level vulnerabilities and proposing backward-compatible security extensions. |  |

| Prompt Injection Attacks on Agentic Coding Assistants: A Systematic Analysis - Surveys 78 studies to systematize prompt injection attacks on agentic coding assistants with a three-dimensional taxonomy across delivery vectors, modalities, and propagation. |  |

| Connect the Dots: Knowledge Graph-Guided Crawler Attack on Retrieval-Augmented Generation Systems - Introduces RAGCrawler, a knowledge graph-guided attack that adaptively steals RAG corpus content through targeted queries to maximize coverage under a query budget. |  |

| Securing LLM-as-a-Service for Small Businesses: An Industry Case Study of a Distributed Chatbot Deployment Platform - Presents a multi-tenant chatbot deployment platform with container-based isolation and platform-level defenses against prompt injection attacks in RAG-based systems. |  |

| Interoperable Architecture for Digital Identity Delegation for AI Agents with Blockchain Integration - Introduces delegation grants and a canonical verification context for bounded, auditable identity delegation across human users and AI agents in heterogeneous identity ecosystems. |  |

| INFA-Guard: Mitigating Malicious Propagation via Infection-Aware Safeguarding in LLM-Based Multi-Agent Systems - Proposes an infection-aware defense framework for multi-agent systems that distinguishes infected agents from attackers and applies topological constraints to halt malicious propagation. |  |

| Query-Efficient Agentic Graph Extraction Attacks on GraphRAG Systems - Proposes AGEA, an agentic framework using novelty-guided exploration and graph memory to steal latent entity-relation graphs from GraphRAG systems under strict query budgets. |  |

| NeuroFilter: Privacy Guardrails for Conversational LLM Agents - Introduces activation-space guardrails that detect privacy-violating intent in LLM agents through linear separation of internal representations, including drift detection across multi-turn conversations. |  |

| VirtualCrime: Evaluating Criminal Potential of Large Language Models via Sandbox Simulation - Proposes a three-agent sandbox simulation framework with 40 crime tasks across 13 objectives to evaluate the criminal capabilities of LLM agents in realistic scenarios. |  |

| PINA: Prompt Injection Attack against Navigation Agents - Introduces an adaptive prompt injection framework targeting navigation agents under black-box, long-context, and action-executable constraints across indoor and outdoor environments. |  |

| Prompt Injection Mitigation with Agentic AI, Nested Learning, and AI Sustainability via Semantic Caching - Explores a multi-agent defense pipeline combining semantic similarity caching, nested learning, and observability-aware evaluation to mitigate prompt injection attacks while reducing computational costs. |  |

| CODE: A Contradiction-Based Deliberation Extension Framework for Overthinking Attacks on Retrieval-Augmented Generation - Introduces an overthinking attack framework for RAG systems with reasoning models, using multi-agent-constructed poisoning samples that cause excessive reasoning token consumption without degrading task accuracy. |  |

| AgenTRIM: Tool Risk Mitigation for Agentic AI - Introduces a framework for detecting and mitigating tool-driven agency risks through offline interface verification and runtime per-step least-privilege tool access with adaptive filtering. |  |

| Efficient Privacy-Preserving Retrieval Augmented Generation with Distance-Preserving Encryption - Proposes a privacy-preserving RAG framework using conditional approximate distance-comparison-preserving encryption that enables similarity computation on encrypted embeddings in untrusted cloud environments. |  |

| Taming Various Privilege Escalation in LLM-Based Agent Systems: A Mandatory Access Control Framework - Proposes a mandatory access control framework for LLM agent systems that monitors agent-tool interactions via information flow graphs and enforces attribute-based policies against privilege escalation. |  |

| Institutional AI: Governing LLM Collusion in Multi-Agent Cournot Markets via Public Governance Graphs - Introduces governance graphs as public, immutable manifests with enforceable sanctions and restorative paths to govern multi-agent LLM coordination and prevent harmful collusion. |  |

| SD-RAG: A Prompt-Injection-Resilient Framework for Selective Disclosure in Retrieval-Augmented Generation - Proposes a prompt-injection-resilient RAG framework that decouples security enforcement from generation by applying sanitization and policy-aware disclosure controls during the retrieval phase. |  |

| Beyond Max Tokens: Stealthy Resource Amplification via Tool Calling Chains in LLM Agents - Introduces a stealthy multi-turn economic DoS attack exploiting the agent-tool communication loop through MCP-compatible tool server modifications that inflate costs by up to 658x. |  |

| Hidden-in-Plain-Text: A Benchmark for Social-Web Indirect Prompt Injection in RAG - Introduces a benchmark and harness for evaluating web-facing RAG systems under indirect prompt injection and retrieval poisoning attacks with standardized end-to-end evaluation from ingestion to generation. |  |

| Breaking Up with Normatively Monolithic Agency with GRACE: A Reason-Based Neuro-Symbolic Architecture for Safe and Ethical AI Alignment - Introduces a neuro-symbolic containment architecture that decouples normative reasoning from instrumental decision-making through a Moral Module, Decision-Making Module, and compliance Guard for agent safety. |  |

| AgentGuardian: Learning Access Control Policies to Govern AI Agent Behavior - Presents a security framework that learns context-aware access-control policies from monitored execution traces to govern AI agent operations and detect malicious inputs while preserving normal functionality. |  |

| Agent Skills in the Wild: An Empirical Study of Security Vulnerabilities at Scale - Analyzes 42,447 agent skills from two major marketplaces, finding 26.1% contain security vulnerabilities spanning prompt injection, data exfiltration, privilege escalation, and supply chain risks. |  |

We welcome contributions! See CONTRIBUTING.md for guidelines.

- Submit new papers via PR

- Suggest category improvements

MIT License - see LICENSE

This is a curated list. Papers listed here are created and published by their respective authors, not by us. We curate papers relevant to the AI agent ecosystem and do not audit, endorse, or guarantee the correctness of listed research.

If you find an issue with a listed paper or want a paper removed, please open an issue and we'll take care of it promptly.


awesome-LLM-resources

This repository is a curated list of resources for learning and working with Large Language Models (LLMs). It includes a collection of articles, tutorials, tools, datasets, and research papers related to LLMs such as GPT-3, BERT, and Transformer models. Whether you are a researcher, developer, or enthusiast interested in natural language processing and artificial intelligence, this repository provides valuable resources to help you understand, implement, and experiment with LLMs.

ai-collection

The ai-collection repository is a collection of various artificial intelligence projects and tools aimed at helping developers and researchers in the field of AI. It includes implementations of popular AI algorithms, datasets for training machine learning models, and resources for learning AI concepts. The repository serves as a valuable resource for anyone interested in exploring the applications of artificial intelligence in different domains.

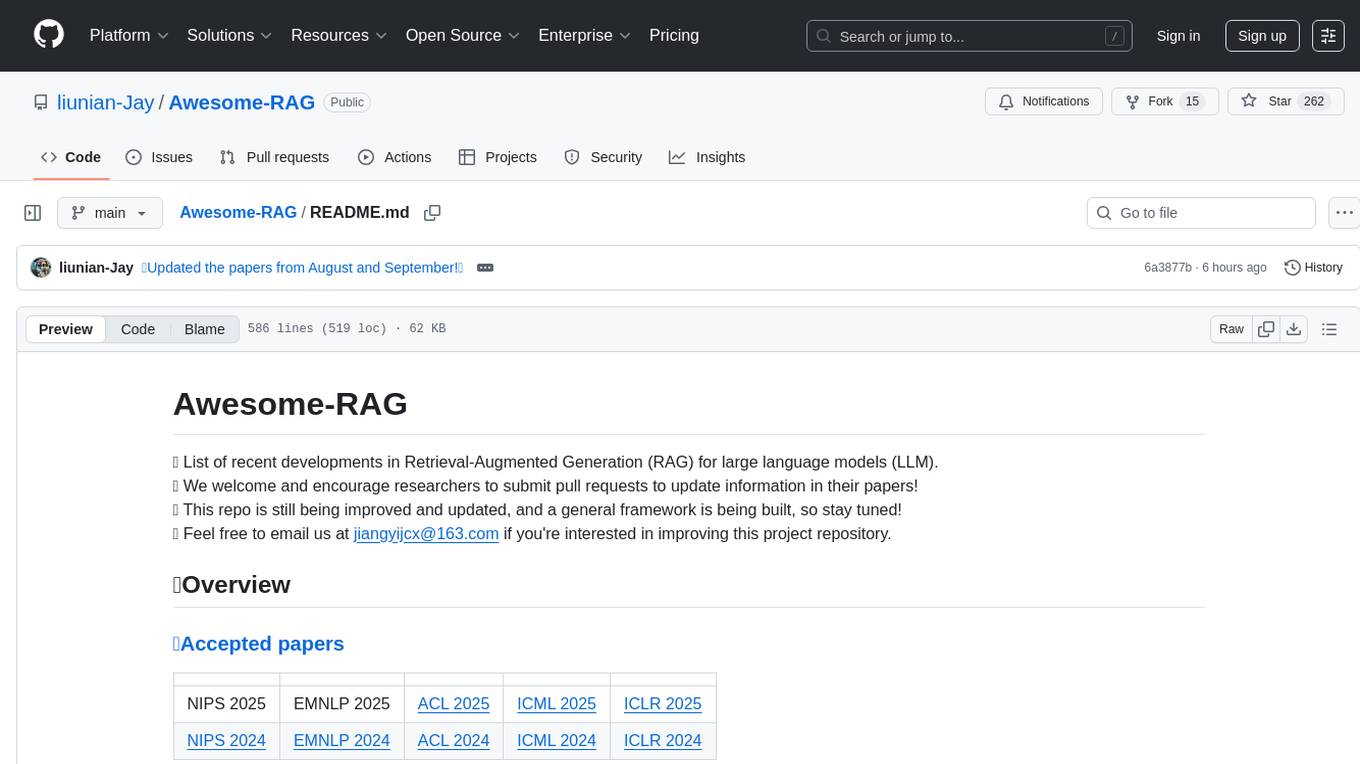

Awesome-RAG

Awesome-RAG is a repository that lists recent developments in Retrieval-Augmented Generation (RAG) for large language models (LLM). It includes accepted papers, evaluation datasets, latest news, and papers from various conferences like NIPS, EMNLP, ACL, ICML, and ICLR. The repository is continuously updated and aims to build a general framework for RAG. Researchers are encouraged to submit pull requests to update information in their papers. The repository covers a wide range of topics related to RAG, including knowledge-enhanced generation, contrastive reasoning, self-alignment, mobile agents, and more.

God-Level-AI

A drill of scientific methods, processes, algorithms, and systems to build stories & models. An in-depth learning resource for humans. This repository is designed for individuals aiming to excel in the field of Data and AI, providing video sessions and text content for learning. It caters to those in leadership positions, professionals, and students, emphasizing the need for dedicated effort to achieve excellence in the tech field. The content covers various topics with a focus on practical application.

artificial-intelligence

This repository contains a collection of AI projects implemented in Python, primarily in Jupyter notebooks. The projects cover various aspects of artificial intelligence, including machine learning, deep learning, natural language processing, computer vision, and more. Each project is designed to showcase different AI techniques and algorithms, providing a hands-on learning experience for users interested in exploring the field of artificial intelligence.

ai-workshop-code

The ai-workshop-code repository contains code examples and tutorials for various artificial intelligence concepts and algorithms. It serves as a practical resource for individuals looking to learn and implement AI techniques in their projects. The repository covers a wide range of topics, including machine learning, deep learning, natural language processing, computer vision, and reinforcement learning. By exploring the code and following the tutorials, users can gain hands-on experience with AI technologies and enhance their understanding of how these algorithms work in practice.

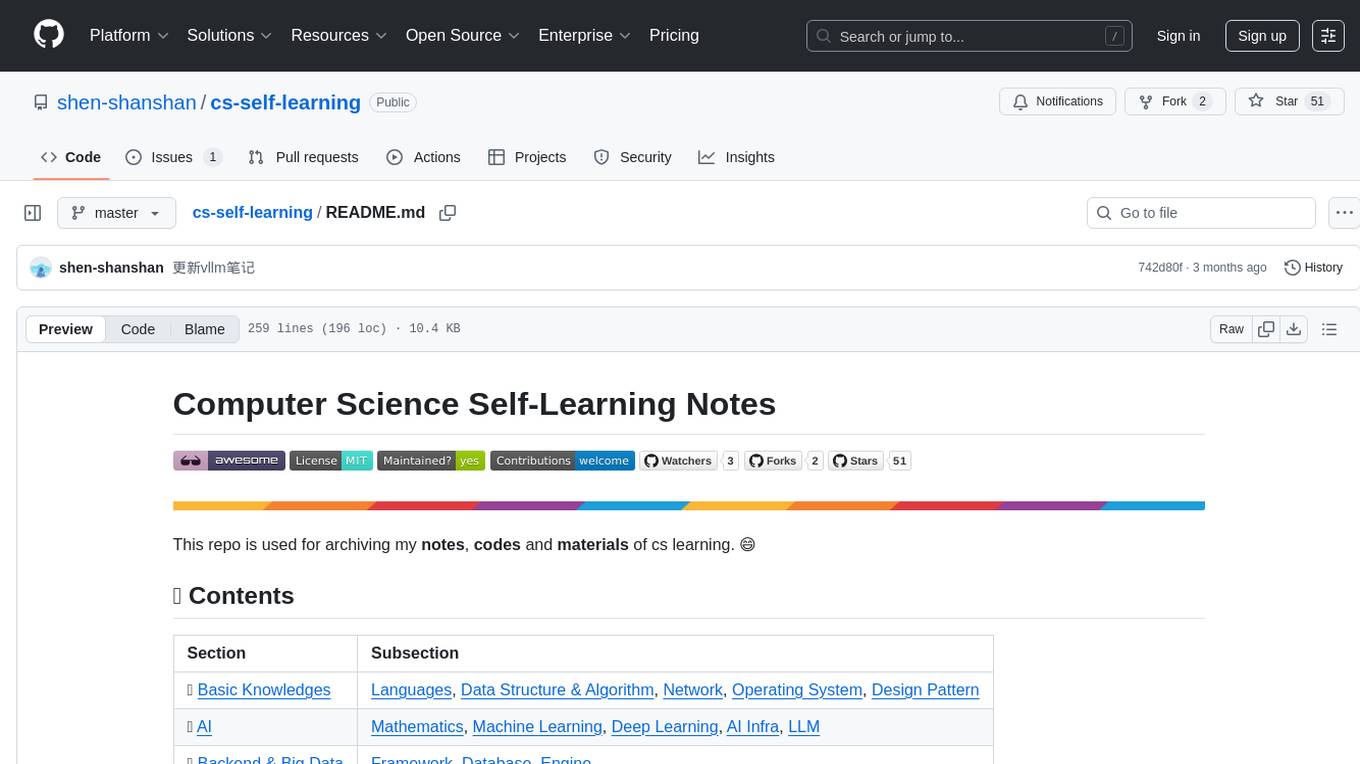

cs-self-learning

This repository serves as an archive for computer science learning notes, codes, and materials. It covers a wide range of topics including basic knowledge, AI, backend & big data, tools, and other related areas. The content is organized into sections and subsections for easy navigation and reference. Users can find learning resources, programming practices, and tutorials on various subjects such as languages, data structures & algorithms, AI, frameworks, databases, development tools, and more. The repository aims to support self-learning and skill development in the field of computer science.

spring-ai-examples

Spring AI Examples is a repository containing various examples of integrating artificial intelligence capabilities into Spring applications. The examples cover a wide range of AI technologies such as machine learning, natural language processing, computer vision, and more. These examples serve as a practical guide for developers looking to incorporate AI functionalities into their Spring projects.

ai-engineering-hub

The AI Engineering Hub is a repository that provides in-depth tutorials on LLMs and RAG, real-world AI agent applications, and examples to implement, adapt, and scale in projects. It caters to beginners, practitioners, and researchers, offering resources for all skill levels to experiment and succeed in AI engineering.

home-assistant-datasets

This package provides a collection of datasets for evaluating AI Models in the context of Home Assistant. It includes synthetic data generation, loading data into Home Assistant, model evaluation with different conversation agents, human annotation of results, and visualization of improvements over time. The datasets cover home descriptions, area descriptions, device descriptions, and summaries that can be performed on a home. The tool aims to build datasets for future training purposes.

LLMs-playground

LLMs-playground is a repository containing code examples and tutorials for learning and experimenting with Large Language Models (LLMs). It provides a hands-on approach to understanding how LLMs work and how to fine-tune them for specific tasks. The repository covers various LLM architectures, pre-training techniques, and fine-tuning strategies, making it a valuable resource for researchers, students, and practitioners interested in natural language processing and machine learning. By exploring the code and following the tutorials, users can gain practical insights into working with LLMs and apply their knowledge to real-world projects.

ai

This repository contains a collection of AI algorithms and models for various machine learning tasks. It provides implementations of popular algorithms such as neural networks, decision trees, and support vector machines. The code is well-documented and easy to understand, making it suitable for both beginners and experienced developers. The repository also includes example datasets and tutorials to help users get started with building and training AI models. Whether you are a student learning about AI or a professional working on machine learning projects, this repository can be a valuable resource for your development journey.

LLMs-in-Finance

This repository focuses on the application of Large Language Models (LLMs) in the field of finance. It provides insights and knowledge about how LLMs can be utilized in various scenarios within the finance industry, particularly in generating AI agents. The repository aims to explore the potential of LLMs to enhance financial processes and decision-making through the use of advanced natural language processing techniques.

ai-tutor-rag-system

The AI Tutor RAG System repository contains Jupyter notebooks supporting the RAG course, focusing on enhancing AI models with retrieval-based methods. It covers foundational and advanced concepts in retrieval-augmented generation, including data retrieval techniques, model integration with retrieval systems, and practical applications of RAG in real-world scenarios.

trae-agent

Trae Agent is an LLM-based agent for general-purpose software engineering tasks. It provides a command-line interface that accepts natural language instructions and carries out software engineering workflows using a range of tools and LLM providers.

For similar tasks

Azure-Analytics-and-AI-Engagement

The Azure-Analytics-and-AI-Engagement repository provides packaged Industry Scenario DREAM Demos with ARM templates (containing a demo web application, Power BI reports, Synapse resources, AML notebooks, etc.) that can be deployed in a customer’s subscription using the CAPE tool within a few hours. Partners can also deploy DREAM Demos in their own subscriptions using DPoC.

sorrentum

Sorrentum is an open-source project that aims to combine open-source development, startups, and brilliant students to build machine learning, AI, and Web3 / DeFi protocols geared towards finance and economics. The project provides opportunities for internships, research assistantships, and development grants, as well as the chance to work on cutting-edge problems, learn about startups, write academic papers, and get internships and full-time positions at companies working on Sorrentum applications.

tidb

TiDB is an open-source distributed SQL database that supports Hybrid Transactional and Analytical Processing (HTAP) workloads. It is MySQL compatible and features horizontal scalability, strong consistency, and high availability.

zep-python

Zep is an open-source platform for building and deploying large language model (LLM) applications. It provides a suite of tools and services that make it easy to integrate LLMs into your applications, including chat history memory, embedding, vector search, and data enrichment. Zep is designed to be scalable, reliable, and easy to use, making it a great choice for developers who want to build LLM-powered applications quickly and easily.

telemetry-airflow

This repository codifies the Airflow cluster that is deployed at workflow.telemetry.mozilla.org (behind SSO) and commonly referred to as "WTMO" or simply "Airflow". Some links relevant to users and developers of WTMO:

* The `dags` directory in this repository contains some custom DAG definitions
* Many of the DAGs registered with WTMO don't live in this repository, but are instead generated from ETL task definitions in bigquery-etl
* The Data SRE team maintains a WTMO Developer Guide (behind SSO)

mojo

Mojo is a new programming language that bridges the gap between research and production by combining Python syntax and ecosystem with systems programming and metaprogramming features. Mojo is still young, but it is designed to become a superset of Python over time.

pandas-ai

PandasAI is a Python library that makes it easy to ask questions to your data in natural language. It helps you to explore, clean, and analyze your data using generative AI.

databend

Databend is an open-source cloud data warehouse that serves as a cost-effective alternative to Snowflake. With its focus on fast query execution and data ingestion, it's designed for complex analysis of the world's largest datasets.

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API that provides access to over 100 different AI models, ranging from image to sound generation.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking), and privacy harms (e.g., identity theft). The goal is to allow researchers to have a baseline of how well their model and entire inference pipeline is doing against different harm categories and to be able to compare that baseline to future iterations of their model. This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements.

tabby

Tabby is a self-hosted AI coding assistant, offering an open-source and on-premises alternative to GitHub Copilot. Its key features:

* Self-contained, with no need for a DBMS or cloud service.
* OpenAPI interface, easy to integrate with existing infrastructure (e.g., a cloud IDE).
* Supports consumer-grade GPUs.

spear

SPEAR (Simulator for Photorealistic Embodied AI Research) is a powerful tool for training embodied agents. It features 300 unique virtual indoor environments with 2,566 unique rooms and 17,234 unique objects that can be manipulated individually. Each environment is designed by a professional artist and features detailed geometry, photorealistic materials, and a unique floor plan and object layout. SPEAR is implemented as Unreal Engine assets and provides an OpenAI Gym interface for interacting with the environments via Python.

Magick

Magick is a groundbreaking visual AIDE (Artificial Intelligence Development Environment) for no-code data pipelines and multimodal agents. Magick can connect to other services and comes with nodes and templates well-suited for intelligent agents, chatbots, complex reasoning systems and realistic characters.