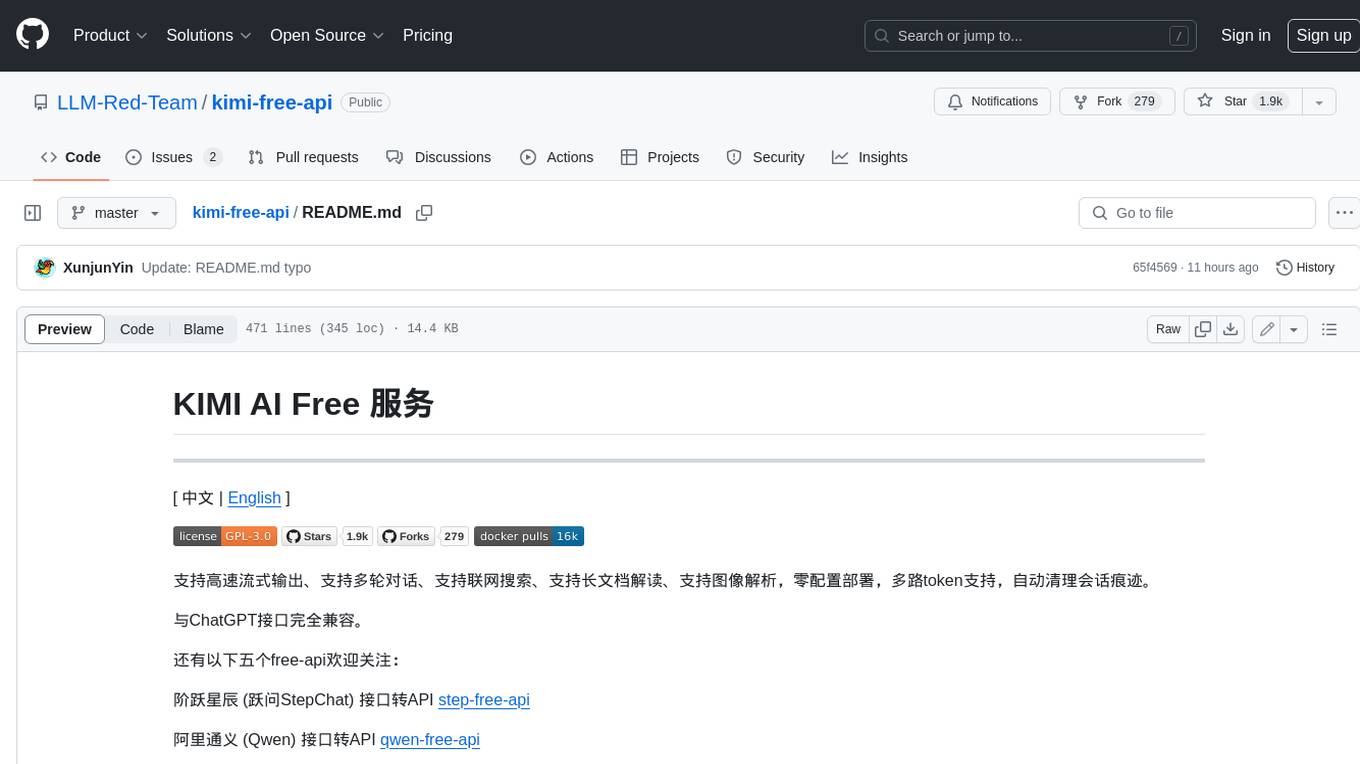

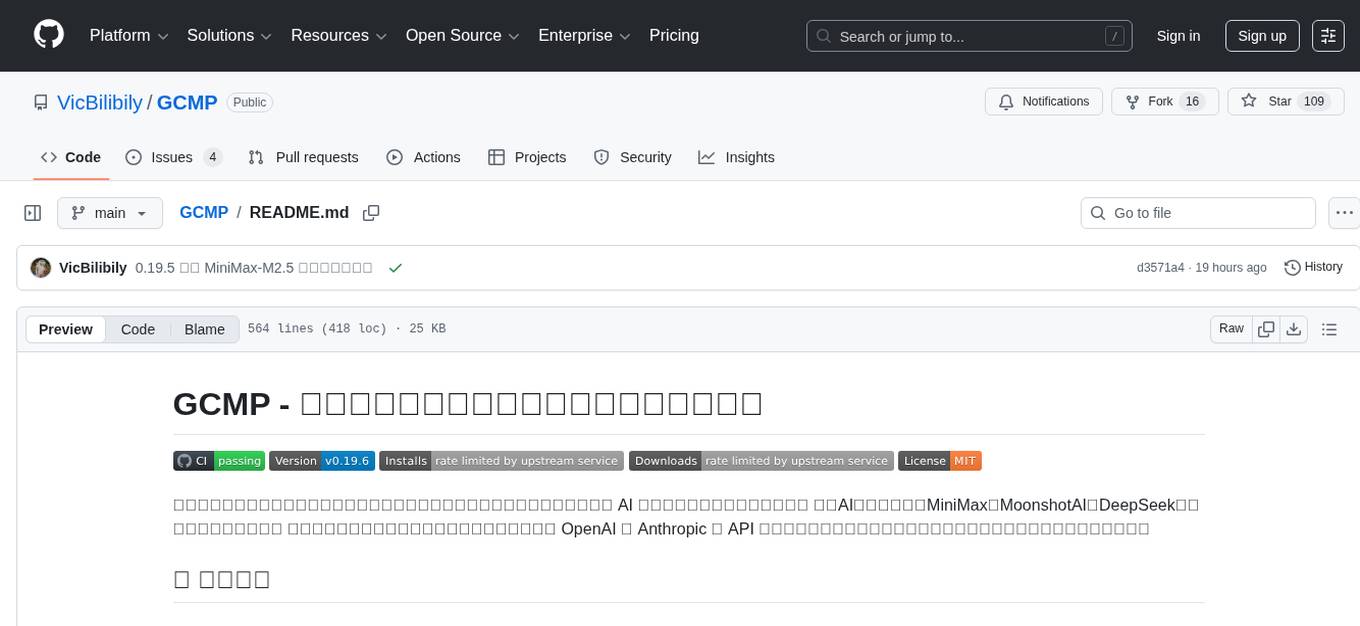

GCMP

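A model provider extension for GitHub Copilot Chat. By integrating mainstream native large-model providers in China, it gives developers a richer choice of AI coding assistants that better fits local needs. Built-in support currently covers ZhipuAI, Volcano Ark, MiniMax, MoonshotAI, DeepSeek, Kuaishou Wanqing (StreamLake), and Alibaba Cloud Bailian. The extension is also adapted to models that expose OpenAI- or Anthropic-compatible API interfaces, so any third-party cloud service providing a compatible interface can be added as a custom model.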


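Search for `GCMP` in the VS Code Extensions Marketplace and install it, or use the extension identifier `vicanent.gcmp`.

- Open the GitHub Copilot Chat panel in VS Code.
- At the bottom of the model picker, choose `管理模型` (Manage Models), then pick the provider you want from the provider list that pops up.
- On first use, you are asked to set an ApiKey after choosing a provider. Once the API key is configured as prompted, return to the model picker to add and enable models.
- Select the target model in the model picker to start chatting with the AI assistant.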

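ZhipuAI - GLM series

- Coding Plan: GLM-5 (Thinking), GLM-4.7 (Thinking), GLM-4.6, GLM-4.6V (Thinking), GLM-4.5-Air
  - Usage query: the status bar shows the remaining usage for the current cycle, so you can check GLM Coding Plan usage.
- Pay-as-you-go: GLM-5 (Thinking), GLM-4.7, GLM-4.7-FlashX, GLM-4.6, GLM-4.6V, GLM-4.5-Air
- Free models: GLM-4.7-Flash, GLM-4.6V-Flash
- International site: switching to the international site (z.ai) is supported via settings.
- Search: integrates the web search MCP and the Web Search API; use `#zhipuWebSearch` to search the web.
  - The web search MCP mode is enabled by default. Coding Plan quotas: Lite (100 searches/month), Pro (1,000 searches/month), Max (4,000 searches/month).
  - The web search MCP mode can be turned off in settings to use the Web Search API instead, billed per call.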

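MiniMax

- Coding Plan: MiniMax-M2.5 (high-speed edition), MiniMax-M2.1, MiniMax-M2
  - Search: integrates the Coding Plan web search tool; use `#minimaxWebSearch` to search the web.
  - Usage query: the status bar shows the remaining usage for the current cycle, so you can check Coding Plan usage.
  - International site: the Coding Plan on the international site is supported.
- Pay-as-you-go: MiniMax-M2.5 (high-speed edition), MiniMax-M2.1 (high-speed edition), MiniMax-M2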

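MoonshotAI - Kimi K2 series

- Membership benefit: Kimi For Coding, bundled with the Kimi membership plan; it currently uses Roo Code to send Anthropic requests.
  - Usage query: the status bar shows the remaining usage for the current cycle, including the plan's remaining quota and the rate-limit reset time.
- Preset models: Kimi-K2.5 (Thinking)
- Balance query: the status bar shows the current account credit, so you can check the account balance.

DeepSeek

- Preset models: DeepSeek-V3.2 (Reasoner)
- Balance query: the status bar shows the current account credit, so you can check the balance details.

Alibaba Cloud Bailian - Tongyi (Qwen) models

- Coding Plan: Qwen3-Max (Thinking), Qwen3-Coder-Plus
- Qwen series: Qwen3-Max (Thinking), Qwen3-VL-Plus, Qwen3-VL-Flash, Qwen-Plus, Qwen-Flash

Kuaishou Wanqing - StreamLake

- KwaiKAT Coding Plan: KAT-Coder-Pro-V1
- KAT-Coder series: KAT-Coder-Pro-V1 (pay-as-you-go), KAT-Coder-Air-V1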

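Volcano Ark - Doubao models

- Coding Plan:
  - Supported models: Doubao-Seed-2.0-Code, Doubao-Seed-Code, Kimi-K2.5, GLM-4.7, DeepSeek-V3.2, Kimi-K2-Thinking
- Doubao series: Doubao-Seed-2.0 (lite/mini/pro/Code), Doubao-Seed-1.8, Doubao-Seed-1.6, Doubao-Seed-1.6-Lite
- Collaboration reward program: GLM-4.7, DeepSeek-V3.2 (Thinking), DeepSeek-V3.1-terminus, Kimi-K2-250905, Kimi-K2-Thinking-251104
- Experimental context caching support: Doubao-Seed-1.8 (Caching), GLM-4.7 (Caching)
  - This mode requires manually enabling the model's context caching feature in the provider console.
  - A 1h context cache window is created by default on the first chat request. For local cache hits, an extra 5m time-difference margin is applied (that is, the 1h cache is rebuilt 55m after the first request).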
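The 55m rebuild rule above can be pictured as a small time check. This is only a minimal sketch, assuming the creation time of the cache is tracked locally; `CacheEntry`, `shouldRebuildCache`, and both constants are hypothetical names for illustration, not GCMP's actual implementation.

```typescript
// Hypothetical sketch of a 1h cache window with a 5m safety margin.
const CACHE_TTL_MS = 60 * 60 * 1000; // 1h context cache window
const SAFETY_MARGIN_MS = 5 * 60 * 1000; // 5m margin applied to local hits

interface CacheEntry {
  createdAtMs: number; // when the 1h cache was created (first request)
}

/** Returns true when the cache should be (re)created instead of reused. */
function shouldRebuildCache(entry: CacheEntry | undefined, nowMs: number): boolean {
  if (entry === undefined) return true; // first request: create the cache
  const ageMs = nowMs - entry.createdAtMs;
  // Treat the cache as expired 5m early, i.e. 55m after creation.
  return ageMs >= CACHE_TTL_MS - SAFETY_MARGIN_MS;
}
```

Expiring the local record slightly before the server-side window closes avoids sending a request that references a cache the server has just dropped.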

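Providers supporting CLI authentication

iFlow AI - iFlow CLI

An AI platform under Alibaba. Authentication through the iFlow CLI using the `使用 iFlow 登录` (log in with iFlow) option is supported; the iFlow CLI must be installed locally.

Because the iFlow API now enforces request-signature verification for CLI-exclusive models, those models can no longer be called externally (reverse engineering is possible, but compliance comes first), so models available only in the CLI are no longer built in.

```shell
npm install -g @iflow-ai/iflow-cli@latest
```

- Supported models: GLM-4.6, iFlow-ROME, Qwen3-Coder-Plus, Kimi-K2-0905

Qwen Code - Qwen Code CLI

The official coding assistant for Alibaba Cloud's Tongyi Qianwen (Qwen). Authentication through the Qwen Code CLI using `Qwen Auth` is supported; the Qwen Code CLI must be installed locally.

```shell
npm install -g @qwen-code/qwen-code@latest
```

- Supported models: Qwen3-Coder-Plus, Qwen3-VL-Plus

Gemini - Gemini CLI

Google's official command-line tool for the Gemini API. Authentication through the Gemini CLI using `Login with Google` is supported; the Gemini CLI must be installed locally.

```shell
npm install -g @google/gemini-cli@latest
```

- Supported models: Gemini 2.5 Pro, Gemini 2.5 Flash
- Preview models: Gemini 3 Pro Preview, Gemini 3 Flash Preview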

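GCMP lets you customize the behavior parameters of AI models through VS Code settings, for a more personalized AI assistant experience.

📝 Tip: all parameter changes in `settings.json` take effect immediately.

Advanced configuration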

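```json
{
  "gcmp.maxTokens": 16000, // 32-256000
  "gcmp.editToolMode": "claude", // claude/gpt-5/none
  "gcmp.zhipu.search.enableMCP": true // Enable the web search MCP mode (Coding Plan only)
}
```

GCMP lets you override a provider's default settings through the `gcmp.providerOverrides` setting, including `baseUrl`, `customHeader`, and model configuration.

Configuration example: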

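```json
{
  "gcmp.providerOverrides": {
    "dashscope": {
      "models": [
        {
          "id": "deepseek-v3.2", // Extra model: not among the suggested options, but custom additions are allowed
          "name": "Deepseek-V3.2 (Alibaba Cloud Bailian)",
          "tooltip": "DeepSeek-V3.2 is the official release that introduces DeepSeek Sparse Attention (a sparse attention mechanism). It is also DeepSeek's first model to integrate thinking into tool use, supporting tool calls in both thinking and non-thinking modes.",
          // "sdkMode": "openai", // Alibaba Cloud Bailian models inherit the provider setting by default; set as needed for other providers' models
          // "baseUrl": "https://dashscope.aliyuncs.com/compatible-mode/v1",
          "maxInputTokens": 128000,
          "maxOutputTokens": 16000,
          "capabilities": {
            "toolCalling": true,
            "imageInput": false
          }
        }
      ]
    }
  }
}
```

GCMP provides an OpenAI / Anthropic Compatible Provider to support any OpenAI- or Anthropic-compatible API. With the `gcmp.compatibleModels` setting you can fully customize model parameters, including extra request parameters.

- Start the configuration wizard with the `GCMP: Compatible Provider 设置` (Compatible Provider settings) command.
- Edit the `gcmp.compatibleModels` setting in `settings.json`.

Custom model configuration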

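Aggregator and relay-type providers can receive built-in special adaptation, but they are not offered as standalone providers.

If you need a provider built in or specially adapted, please provide the relevant information via an Issue.

| Provider ID | Provider name | Description | Balance query |
|---|---|---|---|
| aiping | AI Ping | | User account balance |
| aihubmix | AIHubMix | 10% discount available | ApiKey balance |
| openrouter | OpenRouter | | User account balance |
| siliconflow | SiliconFlow | | User account balance |

Configuration example: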

```json
{
  "gcmp.compatibleModels": [
    {
      "id": "glm-4.6",
      "name": "GLM-4.6",
      "provider": "zhipu",
      "model": "glm-4.6",
      "sdkMode": "openai",
      "baseUrl": "https://open.bigmodel.cn/api/coding/paas/v4",
      // "sdkMode": "anthropic",
      // "baseUrl": "https://open.bigmodel.cn/api/anthropic",
      "maxInputTokens": 128000,
      "maxOutputTokens": 4096,
      "capabilities": {
        "toolCalling": true, // The model must support tool calling in Agent mode
        "imageInput": false
      },
      // customHeader and extraBody can be set as needed
      "customHeader": {
        "X-Model-Specific": "value",
        "X-Custom-Key": "${APIKEY}"
      },
      "extraBody": {
        "temperature": 0.1,
        "top_p": 0.9,
        // "top_p": null, // Some providers do not support setting temperature and top_p together
        "thinking": { "type": "disabled" }
      }
    }
  ]
}
```

`gcmp.compatibleModels[*].sdkMode` specifies how the compatibility layer issues requests and parses streams. Besides the standard `openai` / `anthropic` modes, the following two are experimental:

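- `openai-responses`: OpenAI Responses API mode (experimental)
  - Uses the OpenAI SDK's Responses API (`/responses`) for requests and streaming.
  - Parameters: `max_output_tokens` is not sent by default; set it separately through `extraBody` if needed.
  - Codex: by default `conversation_id` and `session_id` are passed in request headers and `prompt_cache_key` in the request body (except for Volcano Ark, which passes `previous_response_id`).
  - Note: not every OpenAI-compatible service implements `/responses`. If you get a 404 or an incompatibility error, switch back to `openai` or `openai-sse`.
  - `useInstructions` (only effective for `openai-responses`): whether to pass system instructions through the Responses API `instructions` parameter.
    - `false`: carry the system instructions in a "user message" (default, better compatibility)
    - `true`: pass the system instructions via `instructions` (some gateways may not support it)
- `gemini-sse`: Gemini HTTP SSE mode (experimental)
  - Uses plain HTTP + SSE (`data:`) / JSON-lines stream parsing without depending on the Google SDK; mainly intended for compatibility with third-party Gemini gateways.
  - Applicable when your gateway exposes a Gemini `:streamGenerateContent`-style endpoint (usually requiring `alt=sse`).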
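To make the `data:` line parsing mentioned for `gemini-sse` more concrete, here is a rough sketch of extracting JSON payloads from an SSE text chunk. It is an assumption-laden illustration, not GCMP's parser: `parseSseData` is a hypothetical helper, and it only handles complete lines within a single chunk.

```typescript
/** Extracts the JSON payloads carried by `data:` lines in an SSE text chunk. */
function parseSseData(chunk: string): unknown[] {
  const payloads: unknown[] = [];
  for (const rawLine of chunk.split(/\r?\n/)) {
    const line = rawLine.trim();
    // Skip blank separators, comment lines (": ..."), and other SSE fields.
    if (!line.startsWith("data:")) continue;
    const body = line.slice("data:".length).trim();
    if (body === "") continue;
    try {
      payloads.push(JSON.parse(body));
    } catch {
      // A production parser would buffer partial lines across chunks;
      // this sketch simply ignores payloads that are not valid JSON.
    }
  }
  return payloads;
}
```

A real streaming client would also need to carry over an incomplete trailing line to the next network chunk before parsing it.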

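- FIM (Fill In the Middle) is a code-completion technique in which the model predicts the missing code in the middle from the surrounding context. It suits quick completion of a single line or a short snippet.
- NES (Next Edit Suggestions) is an intelligent code-suggestion feature that provides more precise completions based on the current editing context and supports multi-line generation.
- Before using FIM/NES completion, you must set the ApiKey for the corresponding provider in the chat model configuration and verify that it works. Completion reuses the chat model's ApiKey configuration.
- Select the `GitHub Copilot Inline Completion via GCMP` output channel in the Output panel to view completion runs and debugging information.
- The models that can currently be connected are all general-purpose large language models without dedicated completion training or tuning, so results may be worse than Copilot's built-in Tab completion.

Detailed configuration

FIM and NES completion each use a separate model configuration, set through `gcmp.fimCompletion.modelConfig` and `gcmp.nesCompletion.modelConfig` respectively.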

- Enable FIM completion mode (models that support FIM, such as DeepSeek and Qwen, are recommended):
  - Tested with DeepSeek and SiliconFlow; Alibaba Cloud Bailian has special support.

```json
{
  "gcmp.fimCompletion.enabled": true, // Enable FIM completion
  "gcmp.fimCompletion.debounceMs": 500, // Debounce delay for automatically triggered completion
  "gcmp.fimCompletion.timeoutMs": 5000, // Request timeout for FIM completion
  "gcmp.fimCompletion.modelConfig": {
    "provider": "deepseek", // Provider ID; for others, first add an OpenAI Compatible custom model provider and set its ApiKey
    "baseUrl": "https://api.deepseek.com/beta", // ⚠️ DeepSeek FIM is only supported on the beta endpoint
    // "baseUrl": "https://api.siliconflow.cn/v1", // SiliconFlow (provider: `siliconflow`)
    // "baseUrl": "https://dashscope.aliyuncs.com/compatible-mode/v1", // Alibaba Cloud Bailian (provider: `dashscope`)
    "model": "deepseek-chat",
    "maxTokens": 100
    // "extraBody": { "top_p": 0.9 }
  }
}
```

- Enable NES manual completion mode:

```json
{
  "gcmp.nesCompletion.enabled": true, // Enable NES completion
  "gcmp.nesCompletion.debounceMs": 500, // Debounce delay for automatically triggered completion
  "gcmp.nesCompletion.timeoutMs": 10000, // Request timeout for NES completion
  "gcmp.nesCompletion.manualOnly": true, // Trigger completion suggestions manually with the `Alt+/` shortcut
  "gcmp.nesCompletion.modelConfig": {
    "provider": "zhipu", // Provider ID; for others, first add an OpenAI Compatible custom model provider and set its ApiKey
    "baseUrl": "https://open.bigmodel.cn/api/coding/paas/v4", // BaseUrl of the OpenAI Chat Completion endpoint
    "model": "glm-4.6", // A well-performing model is recommended; check whether the log output contains ``` markdown code fences
    "maxTokens": 200,
    "extraBody": {
      // GLM-4.6 enables thinking by default; disabling it is recommended for completion to speed up responses
      "thinking": { "type": "disabled" }
    }
  }
}
```

- Use FIM + NES completion together:

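  - Automatic trigger + `manualOnly: false`: the provider is chosen according to the cursor position
    - Cursor at the end of the line → use FIM (suited to completing the current line)
    - Cursor not at the end of the line → use NES (suited to editing the middle of the code)
    - If NES returns no result or a meaningless completion, fall back to FIM automatically
  - Automatic trigger + `manualOnly: true`: only FIM requests are issued (NES must be triggered manually)
  - Manual trigger (press `Alt+/`): calls NES directly without issuing a FIM request
  - Mode switch (press `Shift+Alt+/`): toggles between automatic and manual (affects NES only)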
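The routing rules above can be summarized as a small decision function. The following is a sketch under stated assumptions: `CompletionContext` and `pickProviders` are hypothetical names, and the returned array simply lists which provider to try first and which to fall back to; it is not taken from GCMP's source.

```typescript
type Trigger = "auto" | "manual";
type Provider = "FIM" | "NES";

interface CompletionContext {
  trigger: Trigger; // automatic trigger while typing, or manual Alt+/
  manualOnly: boolean; // value of gcmp.nesCompletion.manualOnly
  cursorAtLineEnd: boolean; // whether the cursor sits at the end of the line
}

/** Returns the providers to try, in order, for one completion request. */
function pickProviders(ctx: CompletionContext): Provider[] {
  if (ctx.trigger === "manual") return ["NES"]; // Alt+/ calls NES directly
  if (ctx.manualOnly) return ["FIM"]; // NES waits for a manual trigger
  if (ctx.cursorAtLineEnd) return ["FIM"]; // complete the current line
  return ["NES", "FIM"]; // mid-line edit: NES first, FIM as fallback
}
```

Returning an ordered list keeps the fallback case (NES yields nothing useful, so FIM is tried next) explicit without a second code path.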

MistralAI Coding example configuration

```json
{
  "gcmp.compatibleModels": [
    {
      "id": "codestral-latest",
      "name": "codestral-latest",
      "provider": "mistral",
      "baseUrl": "https://codestral.mistral.ai/v1",
      "sdkMode": "openai",
      "maxInputTokens": 32000,
      "maxOutputTokens": 4096,
      "capabilities": {
        "toolCalling": true,
        "imageInput": false
      }
    }
  ],
  "gcmp.fimCompletion.enabled": true,
  "gcmp.fimCompletion.debounceMs": 500,
  "gcmp.fimCompletion.timeoutMs": 5000,
  "gcmp.fimCompletion.modelConfig": {
    "provider": "mistral",
    "baseUrl": "https://codestral.mistral.ai/v1/fim",
    "model": "codestral-latest",
    "extraBody": { "code_annotations": null },
    "maxTokens": 100
  },
  "gcmp.nesCompletion.enabled": false,
  "gcmp.nesCompletion.debounceMs": 500,
  "gcmp.nesCompletion.timeoutMs": 10000,
  "gcmp.nesCompletion.manualOnly": false,
  "gcmp.nesCompletion.modelConfig": {
    "provider": "mistral",
    "baseUrl": "https://codestral.mistral.ai/v1",
    "model": "codestral-latest",
    "maxTokens": 200
  }
}
```

| Shortcut | Action |
|---|---|
| `Alt+/` | Manually trigger a completion suggestion (NES mode) |
| `Shift+Alt+/` | Toggle NES manual trigger mode |

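GCMP shows the context window occupancy ratio in the status bar, helping you monitor the context window usage of the current session in real time.

Key features

- Real-time monitoring: the status bar shows the context window occupancy ratio of the current session in real time
- Detailed statistics: hover over the status bar item to see a detailed breakdown of context usage, including:
  - System prompt: tokens used by the system prompt
  - Available tools: tokens used by tool and MCP definitions
  - Environment info: tokens used by editor environment information
  - Compressed messages: tokens used by compressed history messages
  - History messages: tokens used by previous conversation messages
  - Thinking content: tokens used by the session's thinking process
  - Images this turn: tokens used by image attachments in the current session
  - Messages this turn: tokens used by messages in the current session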
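As a rough illustration of how such a ratio could be derived from the categories listed above, the sketch below sums the per-category token counts and divides by the model's input limit. The field names, the `occupancyPercent` helper, and the choice of `maxInputTokens` as the denominator are all assumptions made for this example; the extension's actual formula is not documented here.

```typescript
// Hypothetical per-category token counts, mirroring the hover breakdown.
interface ContextUsage {
  systemPrompt: number;
  tools: number;
  environment: number;
  compressedMessages: number;
  historyMessages: number;
  thinking: number;
  currentImages: number;
  currentMessages: number;
}

/** Returns the occupancy as a percentage with one decimal place, capped at 100. */
function occupancyPercent(usage: ContextUsage, maxInputTokens: number): number {
  if (maxInputTokens <= 0) return 0; // avoid division by zero
  const used =
    usage.systemPrompt + usage.tools + usage.environment +
    usage.compressedMessages + usage.historyMessages +
    usage.thinking + usage.currentImages + usage.currentMessages;
  const percent = Math.round((used / maxInputTokens) * 1000) / 10;
  return Math.min(100, percent);
}
```

For instance, 32,000 tokens spread across the categories against a 128,000-token limit yields 25.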

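GCMP has built-in, comprehensive token consumption statistics that help you track and manage AI model usage.

Detailed features

- Persistent records: file-system-based logging with no storage limit, supporting long-term data retention
- Usage statistics: records the model and usage information for every API request, including:
  - Model information (provider, model ID, model name)
  - Token usage (estimated input, actual input, output, cache, reasoning, and so on)
  - Request status (estimated / completed / failed)
- Multi-dimensional statistics: view statistics by date, provider, model, hour, and other dimensions
- Hourly details: displayed in three nested levels of hour, provider, and model
  - ⏰ Hour level: totals for that hour
  - 📦 Provider level: the provider's aggregate for that hour
  - ├─ Model level: the model's detailed data for that hour
  - Providers and models are sorted by request count in descending order; those with no valid requests are not shown
- Real-time status bar: the status bar shows today's token usage and refreshes automatically every 30 seconds
- Visual view: a detailed WebView view for browsing history, with paginated request records
- View statistics: click the token usage item in the status bar, or run the `GCMP: 查看今日 Token 消耗统计详情` (view today's token consumption details) command from the Command Palette
- History: the detailed view shows the statistics for any date
- Data management: the log storage directory can be opened for manual management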
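The three-level hourly breakdown described above (hour, then provider, then model, sorted by request count) could be computed along these lines. This is a minimal sketch assuming a flat list of request records; `RequestRecord`, the stats interfaces, and `aggregateByHour` are hypothetical names and do not reflect GCMP's log format.

```typescript
interface RequestRecord {
  hour: number; // 0-23
  provider: string;
  model: string;
  totalTokens: number;
}

interface ModelStats { model: string; requests: number; totalTokens: number; }
interface ProviderStats { provider: string; requests: number; totalTokens: number; models: ModelStats[]; }
interface HourStats { hour: number; requests: number; totalTokens: number; providers: ProviderStats[]; }

/** Groups records into hour -> provider -> model, sorted by request count (descending). */
function aggregateByHour(records: RequestRecord[]): HourStats[] {
  const hours = new Map<number, HourStats>();
  for (const r of records) {
    let h = hours.get(r.hour);
    if (!h) {
      h = { hour: r.hour, requests: 0, totalTokens: 0, providers: [] };
      hours.set(r.hour, h);
    }
    let p = h.providers.find((x) => x.provider === r.provider);
    if (!p) {
      p = { provider: r.provider, requests: 0, totalTokens: 0, models: [] };
      h.providers.push(p);
    }
    let m = p.models.find((x) => x.model === r.model);
    if (!m) {
      m = { model: r.model, requests: 0, totalTokens: 0 };
      p.models.push(m);
    }
    // Every record counts once at each of the three levels.
    for (const level of [h, p, m]) {
      level.requests += 1;
      level.totalTokens += r.totalTokens;
    }
  }
  const result = Array.from(hours.values()).sort((a, b) => a.hour - b.hour);
  for (const h of result) {
    h.providers.sort((a, b) => b.requests - a.requests);
    for (const p of h.providers) p.models.sort((a, b) => b.requests - a.requests);
  }
  return result;
}
```

Because entries are created only when a record exists, providers and models without requests never appear in the output.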

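```json
{
  "gcmp.usages.retentionDays": 100 // Days to retain historical data (0 means keep forever)
}
```

Before you commit, GCMP can automatically read the current repository's changes (staged / unstaged / new files), extract the key diff fragments, and combine them with related past commits and the repository's overall commit style (in `auto` mode) to generate a commit message that better matches your project's conventions.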

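Detailed usage

- `vscode.git` extension: this feature relies on VS Code's built-in `vscode.git` extension to access Git repository information
  - The extension detects Git availability automatically; when Git is unavailable, the related buttons are hidden
  - If the `vscode.git` extension is disabled in your environment, commit message generation is unavailable

- Repository title bar button: `生成提交消息` (Generate commit message)
- Change group bar buttons:
  - On "Staged Changes": `生成提交消息 - 暂存的更改` (Generate commit message - staged changes)
  - On "Changes": `生成提交消息 - 未暂存的更改` (Generate commit message - unstaged changes)

- `生成提交消息`: the default behavior; analyzes staged + working tree (tracked + untracked) together.
- `生成提交消息 - 暂存的更改`: analyzes staged changes only; suited to step-by-step or split commits.
- `生成提交消息 - 未暂存的更改`: analyzes the working tree only (tracked + untracked), excluding staged changes.

Multi-repository workspaces: if the current workspace contains several Git repositories, GCMP tries to infer the repository from the SCM area you clicked; when it cannot, a repository picker is shown.

This feature calls models through the VS Code Language Model API.

- On first use, or when no model is configured, you are guided to select a model automatically (you can also run `GCMP: 选择 Commit 消息生成模型` (select the commit message generation model) manually).
- Related settings:

```json
{
  "gcmp.commit.language": "chinese", // Output language: chinese / english (fallback when the language is unclear in auto mode)
  "gcmp.commit.format": "auto", // Commit message format: auto (default) / see the format descriptions below
  "gcmp.commit.customInstructions": "", // Custom instructions (only effective when format=custom)
  "gcmp.commit.model": {
    "provider": "zhipu", // Provider of the generation model (providerKey, e.g. zhipu / minimax / compatible)
    "model": "glm-4.6" // ID of the generation model (matches model.id of the VS Code Language Model)
  }
}
```

Note: the examples below only illustrate the shape of each format; the actual content is generated from your diff.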

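- `auto`: inferred automatically (consults the language and style of the repository history; falls back to `plain` + `gcmp.commit.language` when unclear). This is the recommended default.
- `plain`: one concise sentence without type/scope/emoji (suited to quick commits).
- `custom`: fully controlled by your custom instructions (`gcmp.commit.customInstructions`).
- `conventional`: Conventional Commits (scope optional; commonly written as "title + optional body bullet points").

  ```text
  feat(commit): add commit message generation

  - Support generating separately for staged / unstaged changes
  - Automatically include related past commits as reference
  ```

- `angular`: Angular style (`type(scope): summary`, semantically close to conventional).

  ```text
  feat(commit): add SCM entry points

  - Add entry points to the repository title bar and the change group bar
  ```

- `karma`: Karma style (leans toward a single line; keep it short).

  ```text
  fix(commit): fix multi-repository selection
  ```

- `semantic`: semantic `type: message` (no scope; body bullet points are allowed).

  ```text
  feat: add commit message generation

  - Automatically identify the key diff of this change
  ```

- `emoji`: emoji prefix (no type).

  ```text
  ✨ add commit message generation
  ```

- `emojiKarma`: emoji + Karma (emoji + `type(scope): msg`).

  ```text
  ✨ feat(commit): add commit message generation

  - Better match the repository's existing commit habits
  ```

- `google`: Google style (`Type: Description`).

  ```text
  Feat: add commit message generation

  - Support choosing language and format automatically based on repository style
  ```

- `atom`: Atom style (`:emoji: message`).

  ```text
  :sparkles: add commit message generation
  ```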
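The header shapes listed above differ only in how the type, scope, and emoji are arranged, which a small formatter can make explicit. This is purely illustrative: GCMP produces messages through a language model, and `CommitParts` and `formatHeader` are hypothetical names used only to compare the formats side by side.

```typescript
type HeaderFormat = "conventional" | "semantic" | "google" | "emoji" | "emojiKarma" | "atom";

interface CommitParts {
  type: string; // e.g. "feat"
  scope?: string; // e.g. "commit"
  summary: string;
  emoji: string; // e.g. "✨"
  emojiCode: string; // e.g. ":sparkles:"
}

/** Builds the first line of a commit message in the given format. */
function formatHeader(format: HeaderFormat, c: CommitParts): string {
  const typed = c.scope
    ? `${c.type}(${c.scope}): ${c.summary}`
    : `${c.type}: ${c.summary}`;
  switch (format) {
    case "conventional":
      return typed; // type(scope): summary
    case "semantic":
      return `${c.type}: ${c.summary}`; // no scope
    case "google":
      return `${c.type.charAt(0).toUpperCase()}${c.type.slice(1)}: ${c.summary}`; // Type: Description
    case "emoji":
      return `${c.emoji} ${c.summary}`; // emoji prefix, no type
    case "emojiKarma":
      return `${c.emoji} ${typed}`; // emoji + type(scope): msg
    case "atom":
      return `${c.emojiCode} ${c.summary}`; // :emoji: message
  }
}
```

The `angular` and `karma` headers follow the same `type(scope): summary` shape as `conventional`, so they are omitted from the sketch.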

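Community contributions are welcome! Whether you report a bug, suggest a feature, or submit code, it all helps make this project better.

```shell
# Clone the project
git clone https://github.com/VicBilibily/GCMP.git
cd GCMP
# Install dependencies
npm install
# Open the folder in VS Code, then press F5 to start debugging the extension
```

If you find this project helpful, you are welcome to support its continued development via the sponsorship QR code.

This project is licensed under the MIT License; see the LICENSE file for details.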

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for GCMP

Similar Open Source Tools

GCMP

GCMP is an extension that integrates multiple native large model providers in China to provide developers with a richer and more suitable AI programming assistant selection for local needs. It currently supports native large model providers such as ZhipuAI, Volcano Ark, MiniMax, MoonshotAI, DeepSeek, Kuaishou Wanqing, and Alibaba Cloud Bailian. In addition, the extension plugins are compatible with OpenAI and Anthropic API interfaces, supporting custom integration of any third-party cloud service models compatible with the interface.

Streamer-Sales

Streamer-Sales is a large model for live streamers that can explain products based on their characteristics and inspire users to make purchases. It is designed to enhance sales efficiency and user experience, whether for online live sales or offline store promotions. The model can deeply understand product features and create tailored explanations in vivid and precise language, sparking user's desire to purchase. It aims to revolutionize the shopping experience by providing detailed and unique product descriptions to engage users effectively.

illufly

illufly is an Agent framework with self-evolution capabilities, aiming to quickly create value based on self-evolution. It is designed to have self-evolution capabilities in various scenarios such as intent guessing, Q&A experience, data recall rate, and tool planning ability. The framework supports continuous dialogue, built-in RAG support, and self-evolution during conversations. It also provides tools for managing experience data and supports multiple agents collaboration.

GalTransl

GalTransl is an automated translation tool for Galgames that combines minor innovations in several basic functions with deep utilization of GPT prompt engineering. It is used to create embedded translation patches. The core of GalTransl is a set of automated translation scripts that solve most known issues when using ChatGPT for Galgame translation and improve overall translation quality. It also integrates with other projects to streamline the patch creation process, reducing the learning curve to some extent. Interested users can more easily build machine-translated patches of a certain quality through this project and may try to efficiently build higher-quality localization patches based on this framework.

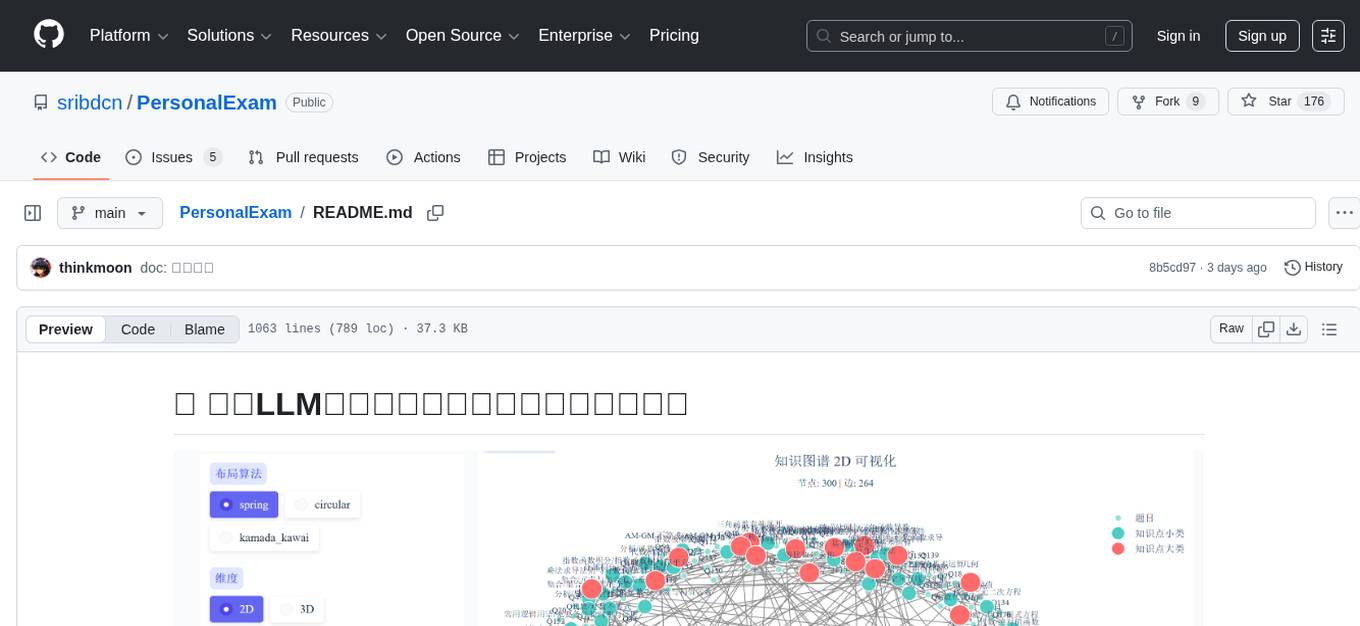

PersonalExam

PersonalExam is a personalized question generation system based on LLM and knowledge graph collaboration. It utilizes the BKT algorithm, RAG engine, and OpenPangu model to achieve personalized intelligent question generation and recommendation. The system features adaptive question recommendation, fine-grained knowledge tracking, AI answer evaluation, student profiling, visual reports, interactive knowledge graph, user management, and system monitoring.

huge-ai-search

Huge AI Search MCP Server integrates Google AI Mode search into clients like Cursor, Claude Code, and Codex, supporting continuous follow-up questions and source links. It allows AI clients to directly call 'huge-ai-search' for online searches, providing AI summary results and source links. The tool supports text and image searches, with the ability to ask follow-up questions in the same session. It requires Microsoft Edge for installation and supports various IDEs like VS Code. The tool can be used for tasks such as searching for specific information, asking detailed questions, and avoiding common pitfalls in development tasks.

ailab

The 'ailab' project is an experimental ground for code generation combining AI (especially coding agents) and Deno. It aims to manage configuration files defining coding rules and modes in Deno projects, enhancing the quality and efficiency of code generation by AI. The project focuses on defining clear rules and modes for AI coding agents, establishing best practices in Deno projects, providing mechanisms for type-safe code generation and validation, applying test-driven development (TDD) workflow to AI coding, and offering implementation examples utilizing design patterns like adapter pattern.

GitHubSentinel

GitHub Sentinel is an intelligent information retrieval and high-value content mining AI Agent designed for the era of large models (LLMs). It is aimed at users who need frequent and large-scale information retrieval, especially open source enthusiasts, individual developers, and investors. The main features include subscription management, update retrieval, notification system, report generation, multi-model support, scheduled tasks, graphical interface, containerization, continuous integration, and the ability to track and analyze the latest dynamics of GitHub open source projects and expand to other information channels like Hacker News for comprehensive information mining and analysis capabilities.

AivisSpeech-Engine

AivisSpeech-Engine is a powerful open-source tool for speech recognition and synthesis. It provides state-of-the-art algorithms for converting speech to text and text to speech. The tool is designed to be user-friendly and customizable, allowing developers to easily integrate speech capabilities into their applications. With AivisSpeech-Engine, users can transcribe audio recordings, create voice-controlled interfaces, and generate natural-sounding speech output. Whether you are building a virtual assistant, developing a speech-to-text application, or experimenting with voice technology, AivisSpeech-Engine offers a comprehensive solution for all your speech processing needs.

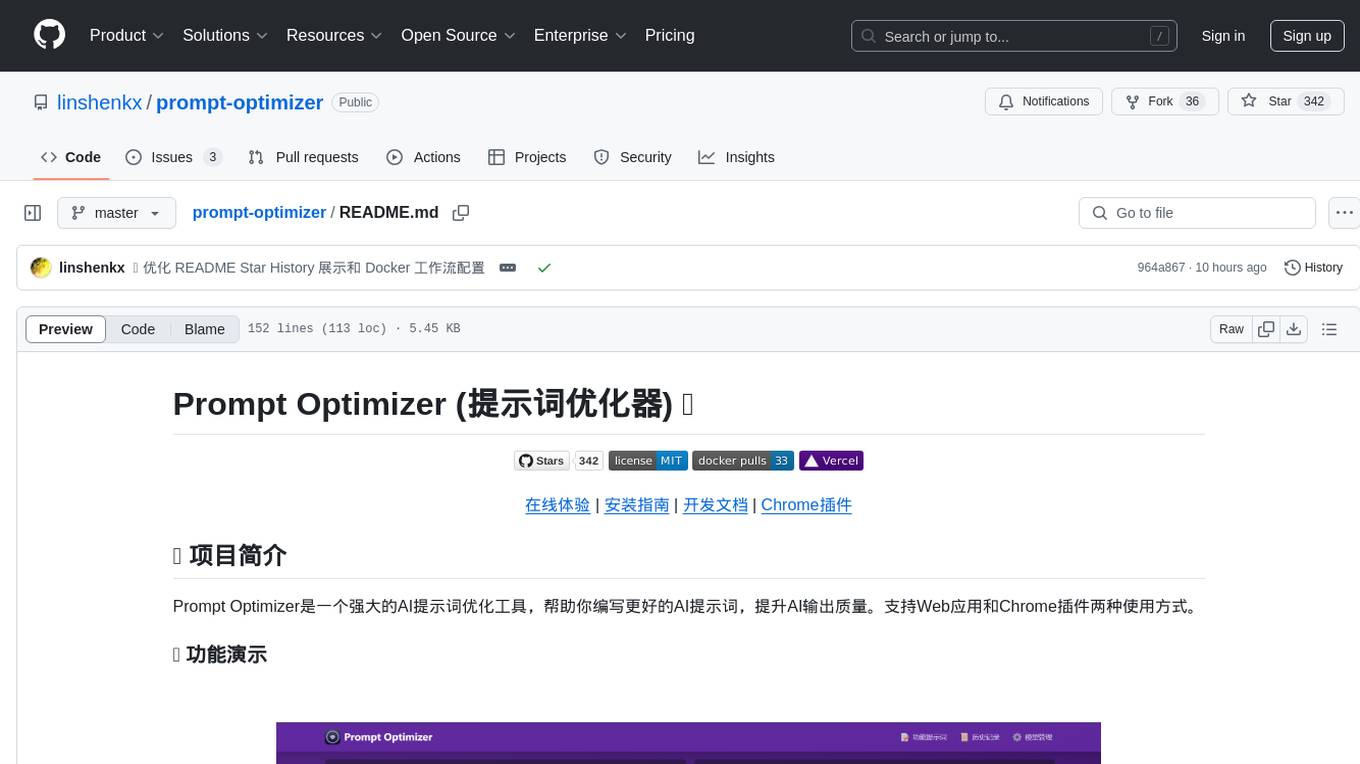

prompt-optimizer

Prompt Optimizer is a powerful AI prompt optimization tool that helps you write better AI prompts, improving AI output quality. It supports both web application and Chrome extension usage. The tool features intelligent optimization for prompt words, real-time testing to compare before and after optimization, integration with multiple mainstream AI models, client-side processing for security, encrypted local storage for data privacy, responsive design for user experience, and more.

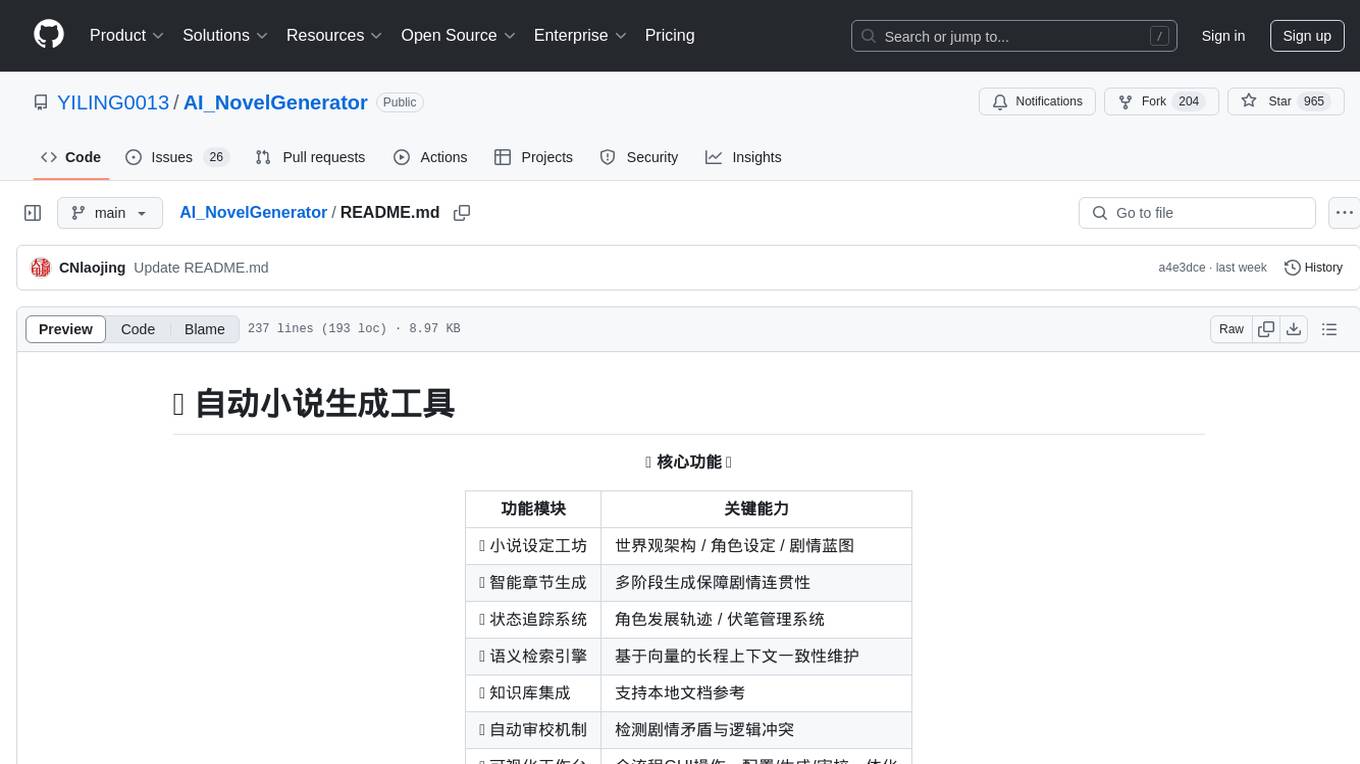

AI_NovelGenerator

AI_NovelGenerator is a versatile novel generation tool based on large language models. It features a novel setting workshop for world-building, character development, and plot blueprinting, intelligent chapter generation for coherent storytelling, a status tracking system for character arcs and foreshadowing management, a semantic retrieval engine for maintaining long-range context consistency, integration with knowledge bases for local document references, an automatic proofreading mechanism for detecting plot contradictions and logic conflicts, and a visual workspace for GUI operations encompassing configuration, generation, and proofreading. The tool aims to assist users in efficiently creating logically rigorous and thematically consistent long-form stories.

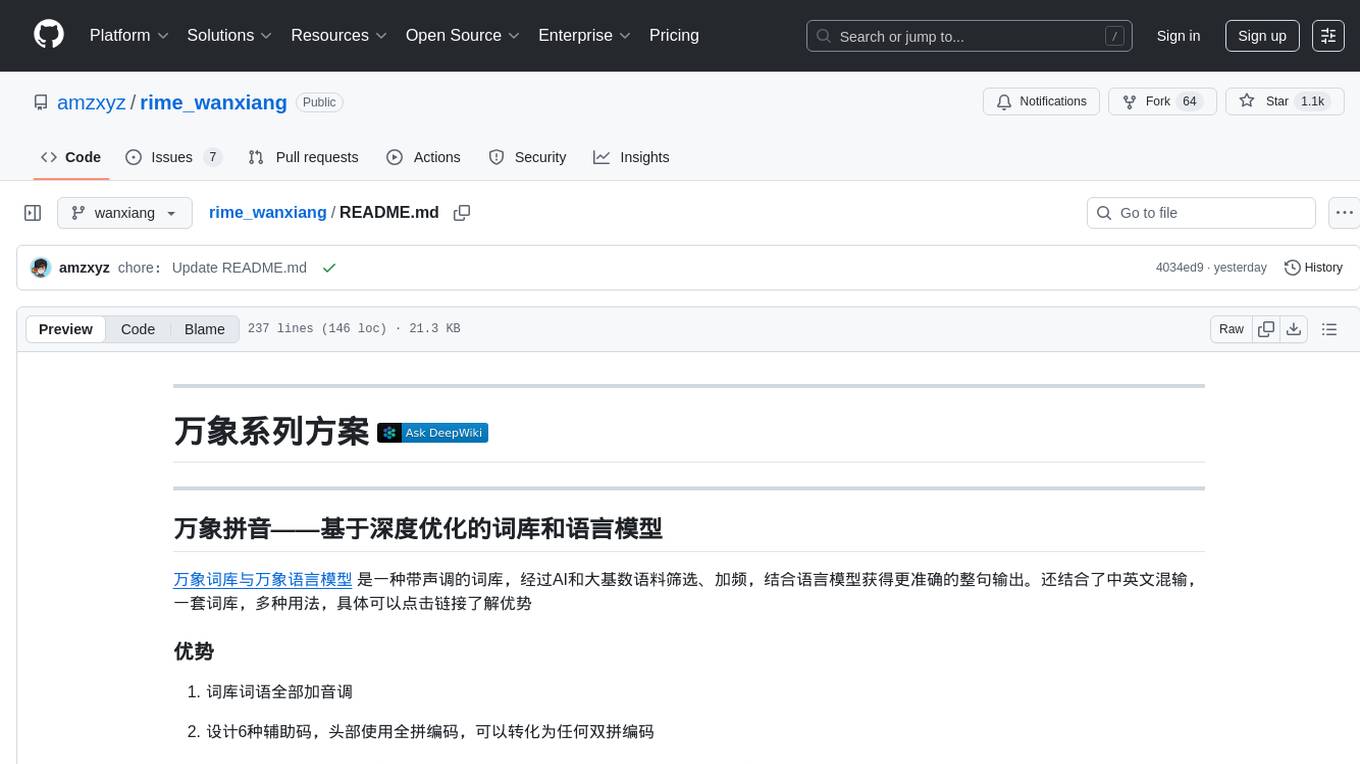

rime_wanxiang

Rime Wanxiang is a pinyin input method based on deep optimized lexicon and language model. It features a lexicon with tones, AI and large corpus filtering, and frequency addition to provide more accurate sentence output. The tool supports various input methods and customization options, aiming to enhance user experience through lexicon and transcription. Users can also refresh the lexicon with different types of auxiliary codes using the LMDG toolkit package. Wanxiang offers core features like tone-marked pinyin annotations, phrase composition, and word frequency, with customizable functionalities. The tool is designed to provide a seamless input experience based on lexicon and transcription.

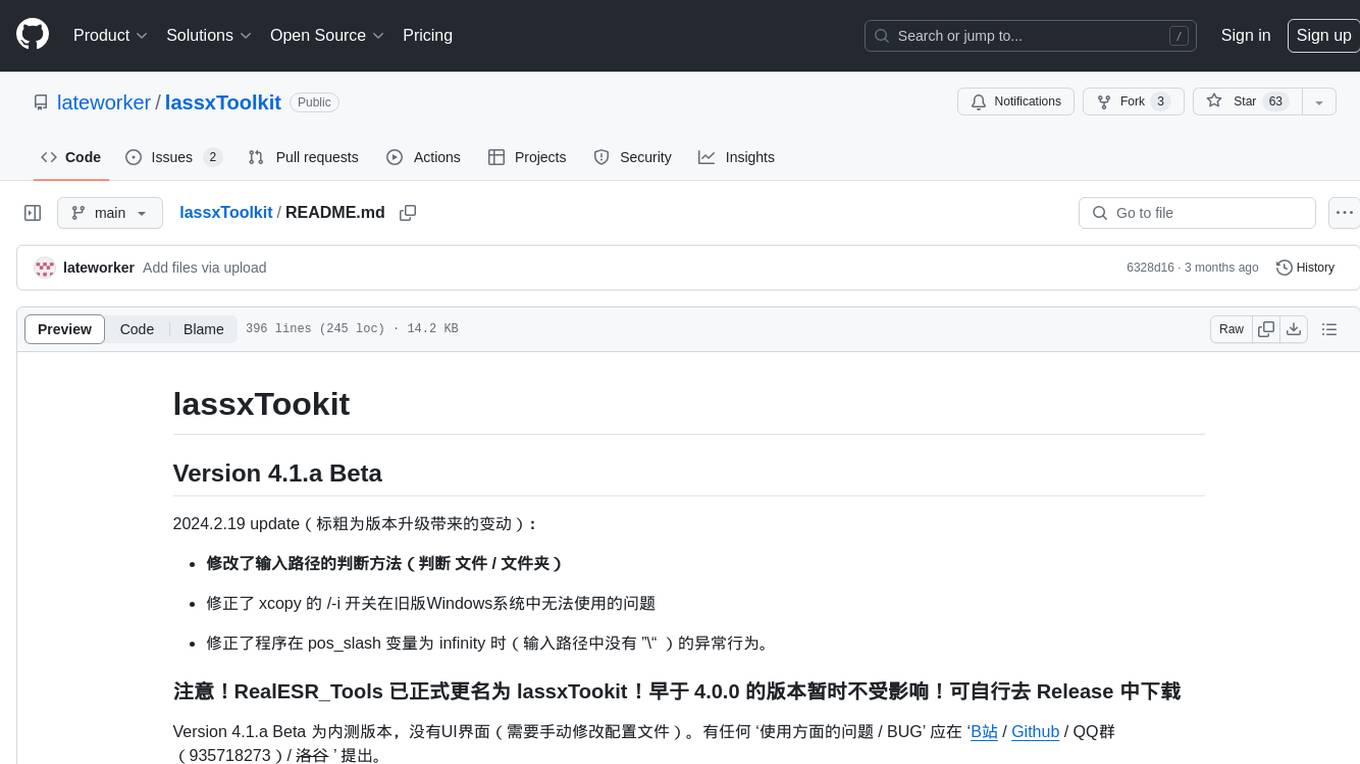

lassxToolkit

lassxToolkit is a versatile tool designed for file processing tasks. It allows users to manipulate files and folders based on specified configurations in a strict .json format. The tool supports various AI models for tasks such as image upscaling and denoising. Users can customize settings like input/output paths, error handling, file selection, and plugin integration. lassxToolkit provides detailed instructions on configuration options, default values, and model selection. It also offers features like tree restoration, recursive processing, and regex-based file filtering. The tool is suitable for users looking to automate file processing tasks with AI capabilities.

chatgpt-web-sea

ChatGPT Web Sea is an open-source project based on ChatGPT-web for secondary development. It supports all models that comply with the OpenAI interface standard, allows for model selection, configuration, and extension, and is compatible with OneAPI. The tool includes a Chinese ChatGPT tuning guide, supports file uploads, and provides model configuration options. Users can interact with the tool through a web interface, configure models, and perform tasks such as model selection, API key management, and chat interface setup. The project also offers Docker deployment options and instructions for manual packaging.

kimi-free-api

KIMI AI Free 服务 支持高速流式输出、支持多轮对话、支持联网搜索、支持长文档解读、支持图像解析,零配置部署,多路token支持,自动清理会话痕迹。 与ChatGPT接口完全兼容。 还有以下五个free-api欢迎关注: 阶跃星辰 (跃问StepChat) 接口转API step-free-api 阿里通义 (Qwen) 接口转API qwen-free-api ZhipuAI (智谱清言) 接口转API glm-free-api 秘塔AI (metaso) 接口转API metaso-free-api 聆心智能 (Emohaa) 接口转API emohaa-free-api

EasyNovelAssistant

EasyNovelAssistant is a simple novel generation assistant powered by a lightweight and uncensored Japanese local LLM 'LightChatAssistant-TypeB'. It allows for perpetual generation with 'Generate forever' feature, stacking up lucky gacha draws. It also supports text-to-speech. Users can directly utilize KoboldCpp and Style-Bert-VITS2 internally or use EasySdxlWebUi to generate images while using the tool. The tool is designed for local novel generation with a focus on ease of use and flexibility.

For similar tasks

GCMP

GCMP is an extension that integrates multiple native large model providers in China to provide developers with a richer and more suitable AI programming assistant selection for local needs. It currently supports native large model providers such as ZhipuAI, Volcano Ark, MiniMax, MoonshotAI, DeepSeek, Kuaishou Wanqing, and Alibaba Cloud Bailian. In addition, the extension plugins are compatible with OpenAI and Anthropic API interfaces, supporting custom integration of any third-party cloud service models compatible with the interface.

For similar jobs

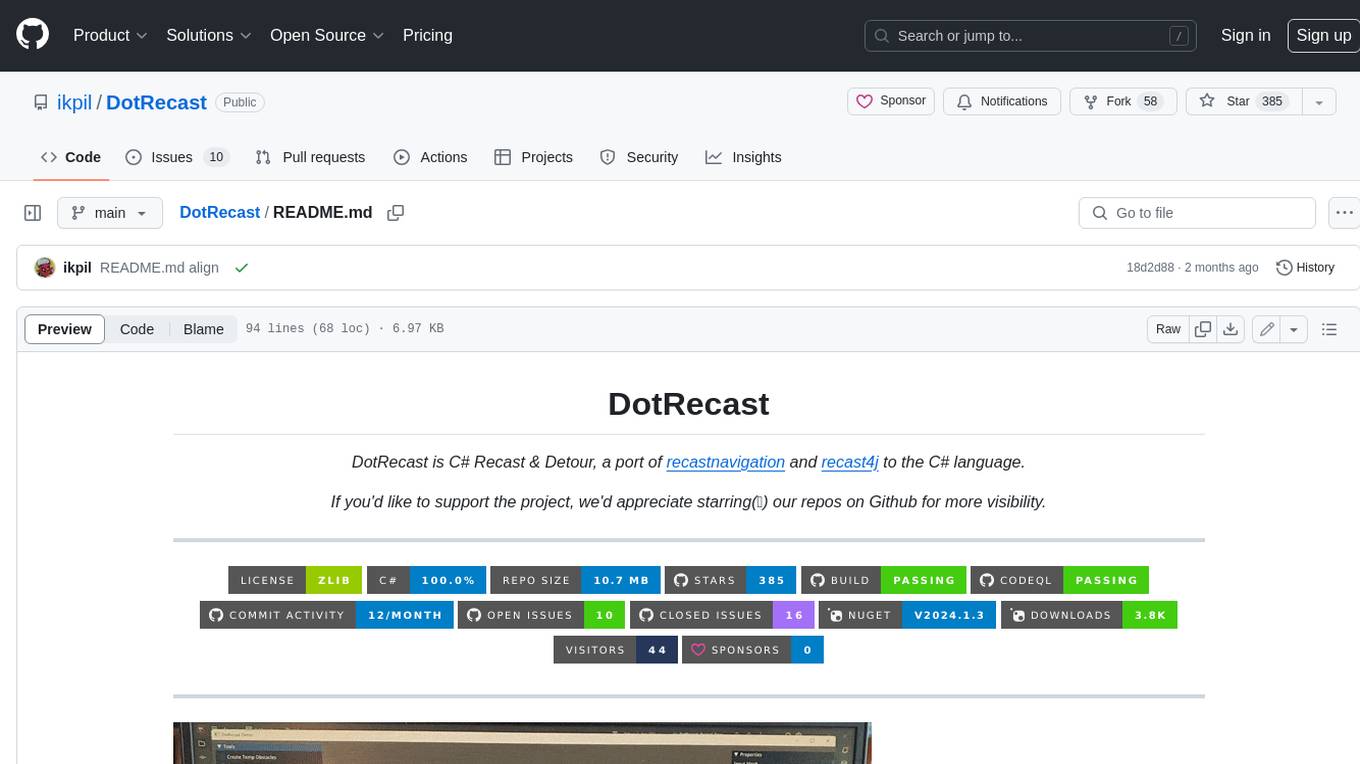

DotRecast

DotRecast is a C# port of Recast & Detour, a navigation library used in many AAA and indie games and engines. It provides automatic navmesh generation, fast turnaround times, detailed customization options, and is dependency-free. Recast Navigation is divided into multiple modules, each contained in its own folder: - DotRecast.Core: Core utils - DotRecast.Recast: Navmesh generation - DotRecast.Detour: Runtime loading of navmesh data, pathfinding, navmesh queries - DotRecast.Detour.TileCache: Navmesh streaming. Useful for large levels and open-world games - DotRecast.Detour.Crowd: Agent movement, collision avoidance, and crowd simulation - DotRecast.Detour.Dynamic: Robust support for dynamic nav meshes combining pre-built voxels with dynamic objects which can be freely added and removed - DotRecast.Detour.Extras: Simple tool to import navmeshes created with A* Pathfinding Project - DotRecast.Recast.Toolset: All modules - DotRecast.Recast.Demo: Standalone, comprehensive demo app showcasing all aspects of Recast & Detour's functionality - Tests: Unit tests Recast constructs a navmesh through a multi-step mesh rasterization process: 1. First Recast rasterizes the input triangle meshes into voxels. 2. Voxels in areas where agents would not be able to move are filtered and removed. 3. The walkable areas described by the voxel grid are then divided into sets of polygonal regions. 4. The navigation polygons are generated by re-triangulating the generated polygonal regions into a navmesh. You can use Recast to build a single navmesh, or a tiled navmesh. Single meshes are suitable for many simple, static cases and are easy to work with. Tiled navmeshes are more complex to work with but better support larger, more dynamic environments. Tiled meshes enable advanced Detour features like re-baking, hierarchical path-planning, and navmesh data-streaming.

bots

The 'bots' repository is a collection of guides, tools, and example bots for programming bots to play video games. It provides resources on running bots live, installing the BotLab client, debugging bots, testing bots in simulated environments, and more. The repository also includes example bots for games like EVE Online, Tribal Wars 2, and Elvenar. Users can learn about developing bots for specific games, syntax of the Elm programming language, and tools for memory reading development. Additionally, there are guides on bot programming, contributing to BotLab, and exploring Elm syntax and core library.

Half-Life-Resurgence

Half-Life-Resurgence is a recreation and expansion project that brings NPCs, entities, and weapons from the Half-Life series into Garry's Mod. The goal is to faithfully recreate original content while also introducing new features and custom content envisioned by the community. Users can expect a wide range of NPCs with new abilities, AI behaviors, and weapons, as well as support for playing as any character and replacing NPCs in Half-Life 1 & 2 campaigns.

SwordCoastStratagems

Sword Coast Stratagems (SCS) is a mod that enhances Baldur's Gate games by adding over 130 optional components focused on improving monster AI, encounter difficulties, cosmetic enhancements, and ease-of-use tweaks. This repository serves as an archive for the project, with updates pushed only when new releases are made. It is not a collaborative project, and bug reports or suggestions should be made at the Gibberlings 3 forums. The mod is designed for offline workflow and should be downloaded from official releases.

LambsDanger

LAMBS Danger FSM is an open-source mod developed for Arma3, aimed at enhancing the AI behavior by integrating buildings into the tactical landscape, creating distinct AI states, and ensuring seamless compatibility with vanilla, ACE3, and modded assets. Originally created for the Norwegian gaming community, it is now available on Steam Workshop and GitHub for wider use. Users are allowed to customize and redistribute the mod according to their requirements. The project is licensed under the GNU General Public License (GPLv2) with additional amendments.

beehave

Beehave is a powerful addon for Godot Engine that enables users to create robust AI systems using behavior trees. It simplifies the design of complex NPC behaviors, challenging boss battles, and other advanced setups. Beehave allows for the creation of highly adaptive AI that responds to changes in the game world and overcomes unexpected obstacles, catering to both beginners and experienced developers. The tool is currently in development for version 3.0.

thinker

Thinker is an AI improvement mod for Alpha Centauri: Alien Crossfire that enhances single player challenge and gameplay with features like improved production/movement AI, visual changes on map rendering, more config options, resolution settings, and automation features. It includes Scient's patches and requires the GOG version of Alpha Centauri with the official Alien Crossfire patch version 2.0 installed. The mod provides additional DLL features developed in C++ for a richer gaming experience.

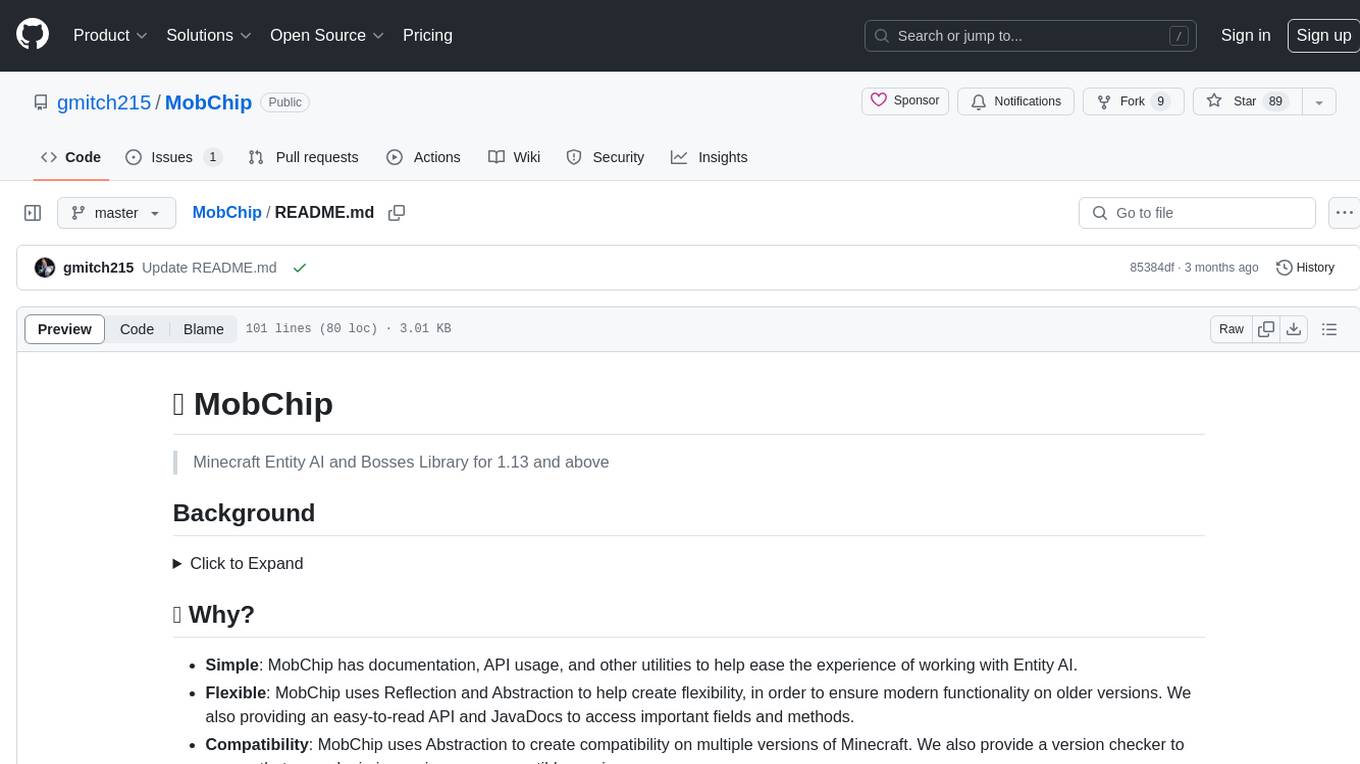

MobChip

MobChip is an all-in-one Entity AI and Bosses Library for Minecraft 1.13 and above. It simplifies the implementation of Minecraft's native entity AI into plugins, offering documentation, API usage, and utilities for ease of use. The library is flexible, using Reflection and Abstraction for modern functionality on older versions, and ensuring compatibility across multiple Minecraft versions. MobChip is open source, providing features like Bosses Library, Pathfinder Goals, Behaviors, Villager Gossip, Ender Dragon Phases, and more.