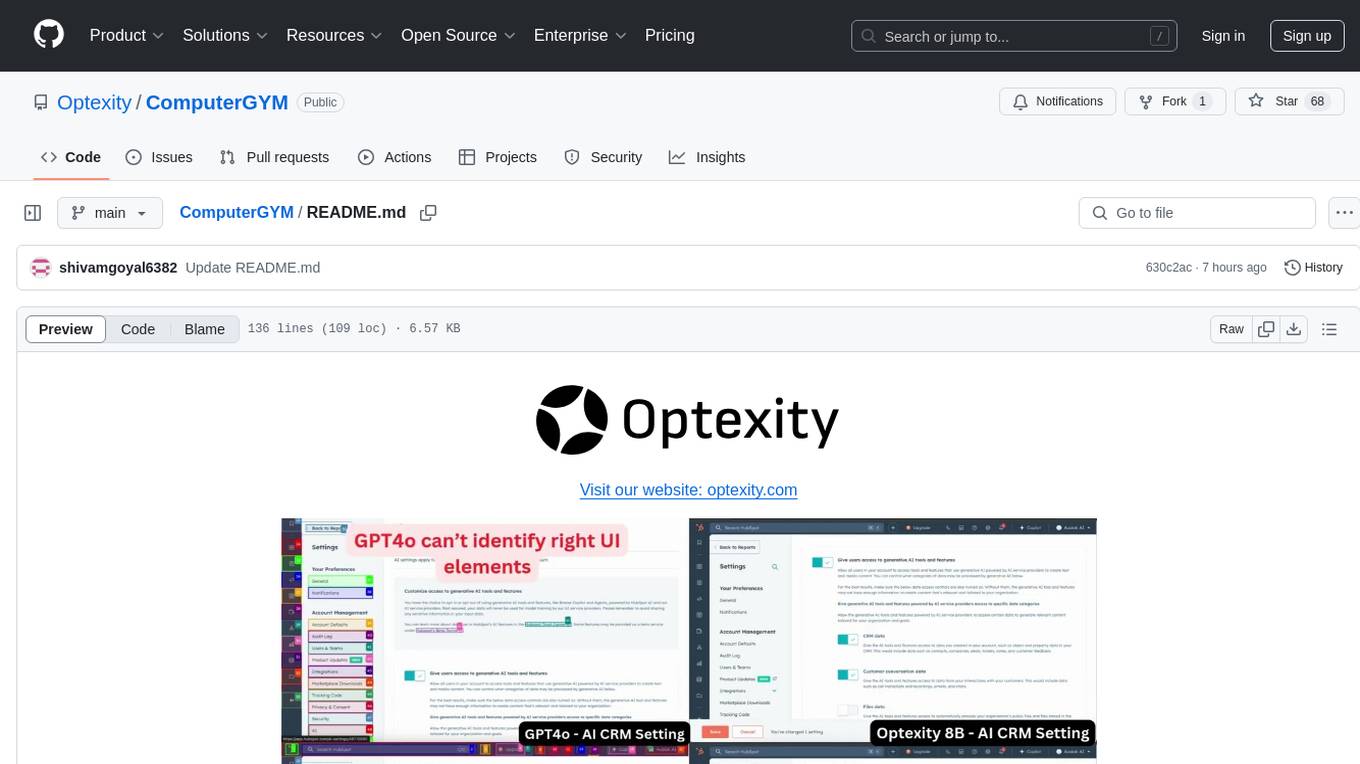

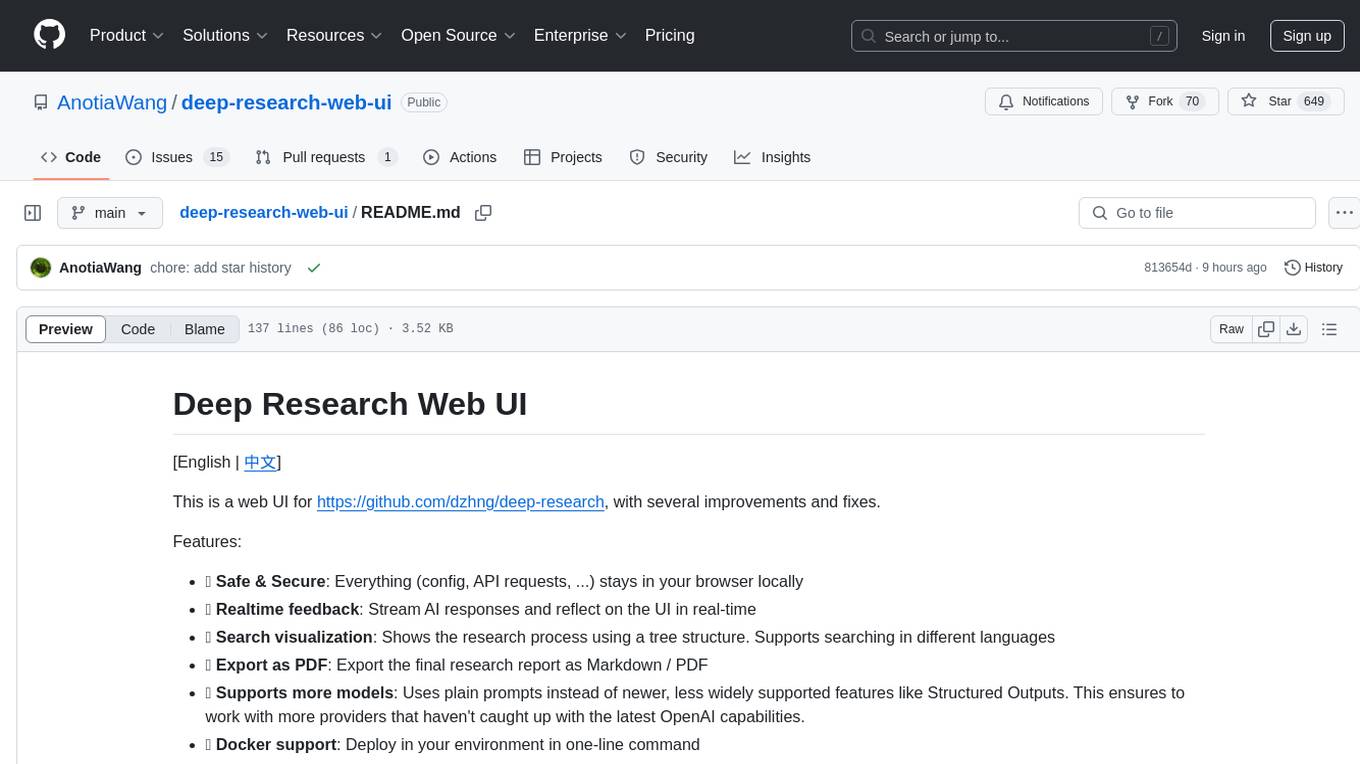

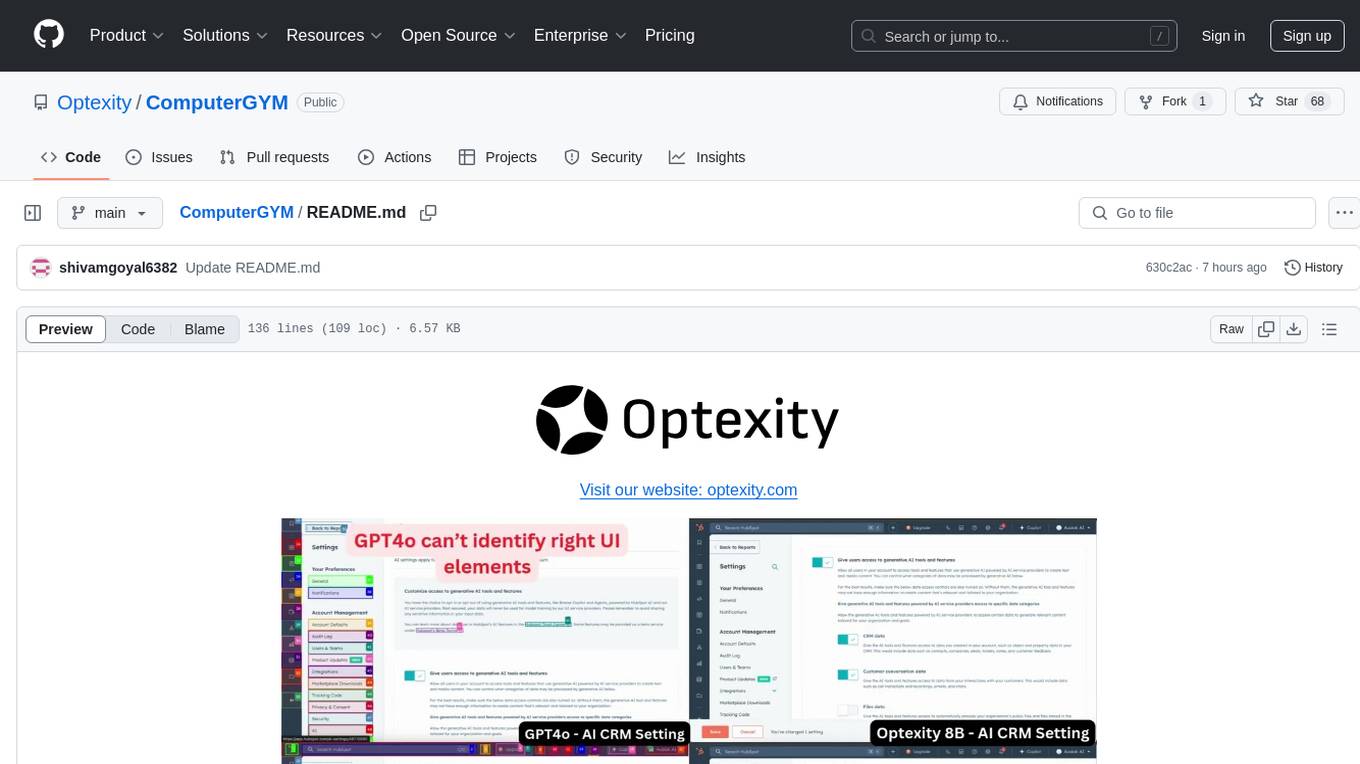

ComputerGYM

Foundation Model Training Using Human Demonstrations

Stars: 68

Optexity is a framework for training foundation models using human demonstrations of computer tasks. It enables recording, processing, and utilizing demonstrations to train AI agents for web-based tasks. The tool also plans to incorporate training through self-exploration, software documentations, and YouTube videos in the future.

README:

Visit our website: optexity.com

Optexity enables training foundation models using human demonstrations of computer tasks. This framework allows for recording, processing, and using demonstrations to train AI agents to complete web-based tasks. We will be adding training using self exploration using reinforement learning, training from software documentations and training using youtube videos in future.

Explore our step-by-step video guides to get started with Optexity:

- Optexity Tutorial Part 1 | Introduction and State of Browser Agents for Software Use

- Optexity Tutorial Part 2 | Training AI with Human Demonstrations

- Optexity Tutorial Part 3 | AI Agent in Action!

-

Repository Setup Clone the necessary repositories:

mkdir optexity cd optexity git clone https://github.com/Optexity/ComputerGYM.git git clone https://github.com/Optexity/AgentAI.git git clone https://github.com/Optexity/playwright.git -

Environment Setup Create and activate a Conda environment with the required Python and Node.js versions:

conda create -n optexity python=3.10 nodejs conda activate optexity

-

Installing Dependencies Install the required packages and build the Playwright framework:

pip install -e ComputerGym pip install -e AgentAI cd playwright git checkout playwright_optexity npm install npm run build playwright install cd ..

To evaluate vanilla gemini 2.0 flash for a specific web task, execute:

EXPORT GEMINI_API_KEY=<YOUR_GEMINI_API_KEY>

python AgentAI/agentai/main.py --url "https://app.hubspot.com" --port 8000 --log_to_console --goal "change currency to SGD" --storage_state cache_dir/auth.json --model geminiNext section shows you how to improve the performance of these agents on specific tasks.

Pro Tip: You can visit https://aistudio.google.com/apikey to create a free gemini api key to test out any task on any website.

-

Recording Demonstrations Record human demonstrations by creating a configuration file and running the demonstration script:

./ComputerGYM/computergym/demonstrations/demonstrate.sh ComputerGYM/computergym/demonstrations/demonstration_config.yaml

Note: Create your own

demonstration_config.yamlconfiguration file before running this script. -

Processing Demonstrations Process the recorded demonstrations to prepare them for training:

python ComputerGYM/computergym/demonstrations/process_demonstration.py --yaml ComputerGYM/computergym/demonstrations/demonstration_config.yaml --seed 5

-

Generating Training Data Convert processed demonstrations into a format suitable for model training:

python AgentAI/agentai/sft/prepare_training_data.py --agent_config AgentAI/agentai/train_configs/hubspot_agent.yaml

-

Training the Model Our data preparation scripts generate JSON data in a format compatible with LLaMA-Factory. The generated training and inference configurations are stored in the

train_datadirectory. Please refer to the LLaMA-Factory documentation for detailed instructions on model training. -

Evaluating the Trained Agent After training your model, deploy it as an inference service on

http://localhost:8000. By default, our framework is configured to work with the vLLM serving capability provided by LLaMA-Factory. If you're using an alternative serving method, you'll need to modify the appropriate scripts.To evaluate your trained agent on a specific web task, execute:

python AgentAI/agentai/main.py --url "https://app.hubspot.com" --port 8000 --log_to_console --goal "change currency to SGD" --storage_state cache_dir/auth.json --model vllm

For comprehensive information on configuration options and advanced usage patterns, please refer to the detailed documentation available in each repository:

- ComputerGYM: Environment setup, demonstration recording, and processing

- AgentAI: Model training configurations, inference settings, and evaluation metrics

- Playwright Integration: Custom extensions and modifications for web automation

- Demonstration configuration: See

ComputerGYM/computergym/demonstrations/demonstration_config_example.yaml - Training parameters: See

AgentAI/agentai/train_configs/README.md

This project builds upon and extends the work of:

- BrowserGym - For the browser automation environment foundation

- Playwright - For reliable web testing and automation capabilities

- LLaMA-Factory - For efficient foundation model fine-tuning

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for ComputerGYM

Similar Open Source Tools

ComputerGYM

Optexity is a framework for training foundation models using human demonstrations of computer tasks. It enables recording, processing, and utilizing demonstrations to train AI agents for web-based tasks. The tool also plans to incorporate training through self-exploration, software documentations, and YouTube videos in the future.

UltraRAG

The UltraRAG framework is a researcher and developer-friendly RAG system solution that simplifies the process from data construction to model fine-tuning in domain adaptation. It introduces an automated knowledge adaptation technology system, supporting no-code programming, one-click synthesis and fine-tuning, multidimensional evaluation, and research-friendly exploration work integration. The architecture consists of Frontend, Service, and Backend components, offering flexibility in customization and optimization. Performance evaluation in the legal field shows improved results compared to VanillaRAG, with specific metrics provided. The repository is licensed under Apache-2.0 and encourages citation for support.

LLMstudio

LLMstudio by TensorOps is a platform that offers prompt engineering tools for accessing models from providers like OpenAI, VertexAI, and Bedrock. It provides features such as Python Client Gateway, Prompt Editing UI, History Management, and Context Limit Adaptability. Users can track past runs, log costs and latency, and export history to CSV. The tool also supports automatic switching to larger-context models when needed. Coming soon features include side-by-side comparison of LLMs, automated testing, API key administration, project organization, and resilience against rate limits. LLMstudio aims to streamline prompt engineering, provide execution history tracking, and enable effortless data export, offering an evolving environment for teams to experiment with advanced language models.

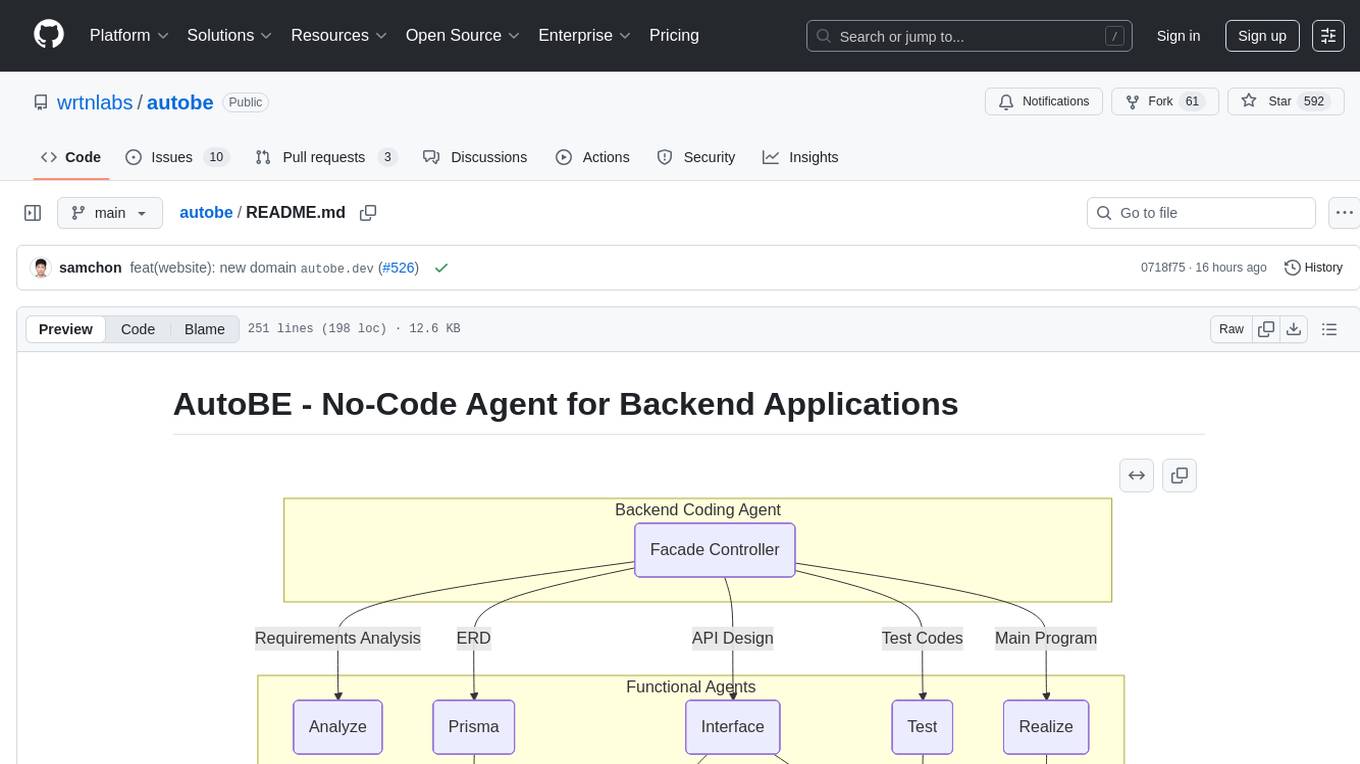

autobe

AutoBE is an AI-powered no-code agent that builds backend applications, enhanced by compiler feedback. It automatically generates backend applications using TypeScript, NestJS, and Prisma following a waterfall development model. The generated code is validated by review agents and OpenAPI/TypeScript/Prisma compilers, ensuring 100% working code. The tool aims to enable anyone to build backend servers, AI chatbots, and frontend applications without coding knowledge by conversing with AI.

Upsonic

Upsonic offers a cutting-edge enterprise-ready framework for orchestrating LLM calls, agents, and computer use to complete tasks cost-effectively. It provides reliable systems, scalability, and a task-oriented structure for real-world cases. Key features include production-ready scalability, task-centric design, MCP server support, tool-calling server, computer use integration, and easy addition of custom tools. The framework supports client-server architecture and allows seamless deployment on AWS, GCP, or locally using Docker.

crewAI

CrewAI is a cutting-edge framework designed to orchestrate role-playing autonomous AI agents. By fostering collaborative intelligence, CrewAI empowers agents to work together seamlessly, tackling complex tasks. It enables AI agents to assume roles, share goals, and operate in a cohesive unit, much like a well-oiled crew. Whether you're building a smart assistant platform, an automated customer service ensemble, or a multi-agent research team, CrewAI provides the backbone for sophisticated multi-agent interactions. With features like role-based agent design, autonomous inter-agent delegation, flexible task management, and support for various LLMs, CrewAI offers a dynamic and adaptable solution for both development and production workflows.

llm-on-ray

LLM-on-Ray is a comprehensive solution for building, customizing, and deploying Large Language Models (LLMs). It simplifies complex processes into manageable steps by leveraging the power of Ray for distributed computing. The tool supports pretraining, finetuning, and serving LLMs across various hardware setups, incorporating industry and Intel optimizations for performance. It offers modular workflows with intuitive configurations, robust fault tolerance, and scalability. Additionally, it provides an Interactive Web UI for enhanced usability, including a chatbot application for testing and refining models.

katib

Katib is a Kubernetes-native project for automated machine learning (AutoML). Katib supports Hyperparameter Tuning, Early Stopping and Neural Architecture Search. Katib is the project which is agnostic to machine learning (ML) frameworks. It can tune hyperparameters of applications written in any language of the users’ choice and natively supports many ML frameworks, such as TensorFlow, Apache MXNet, PyTorch, XGBoost, and others. Katib can perform training jobs using any Kubernetes Custom Resources with out of the box support for Kubeflow Training Operator, Argo Workflows, Tekton Pipelines and many more.

neuro-san-studio

Neuro SAN Studio is an open-source library for building agent networks across various industries. It simplifies the development of collaborative AI systems by enabling users to create sophisticated multi-agent applications using declarative configuration files. The tool offers features like data-driven configuration, adaptive communication protocols, safe data handling, dynamic agent network designer, flexible tool integration, robust traceability, and cloud-agnostic deployment. It has been used in various use-cases such as automated generation of multi-agent configurations, airline policy assistance, banking operations, market analysis in consumer packaged goods, insurance claims processing, intranet knowledge management, retail operations, telco network support, therapy vignette supervision, and more.

taipy

Taipy is an open-source Python library for easy, end-to-end application development, featuring what-if analyses, smart pipeline execution, built-in scheduling, and deployment tools.

radicalbit-ai-monitoring

The Radicalbit AI Monitoring Platform provides a comprehensive solution for monitoring Machine Learning and Large Language models in production. It helps proactively identify and address potential performance issues by analyzing data quality, model quality, and model drift. The repository contains files and projects for running the platform, including UI, API, SDK, and Spark components. Installation using Docker compose is provided, allowing deployment with a K3s cluster and interaction with a k9s container. The platform documentation includes a step-by-step guide for installation and creating dashboards. Community engagement is encouraged through a Discord server. The roadmap includes adding functionalities for batch and real-time workloads, covering various model types and tasks.

amazon-bedrock-agentcore-samples

Amazon Bedrock AgentCore Samples repository provides examples and tutorials to deploy and operate AI agents securely at scale using any framework and model. It is framework-agnostic and model-agnostic, allowing flexibility in deployment. The repository includes tutorials, end-to-end applications, integration guides, deployment automation, and full-stack reference applications for developers to understand and implement Amazon Bedrock AgentCore capabilities into their applications.

PrivateDocBot

PrivateDocBot is a local LLM-powered chatbot designed for secure document interactions. It seamlessly merges Chainlit user-friendly interface with localized language models, tailored for sensitive data. The project streamlines data access by deciphering intricate user guides and extracting vital insights from complex PDF reports. Equipped with advanced technology, it offers an engaging conversational experience, redefining data interaction and empowering users with control.

deep-research-web-ui

This web UI tool is designed to enhance the user experience of the deep-research repository by providing a safe and secure environment for conducting AI research. It offers features such as real-time feedback, search visualization, export as PDF, support for various AI models, and Docker deployment. Users can interact with multiple AI providers and web search services, making research processes more efficient and accessible. The tool also includes recent updates that improve functionality and fix bugs, ensuring a seamless experience for users.

openvino

OpenVINO™ is an open-source toolkit for optimizing and deploying AI inference. It provides a common API to deliver inference solutions on various platforms, including CPU, GPU, NPU, and heterogeneous devices. OpenVINO™ supports pre-trained models from Open Model Zoo and popular frameworks like TensorFlow, PyTorch, and ONNX. Key components of OpenVINO™ include the OpenVINO™ Runtime, plugins for different hardware devices, frontends for reading models from native framework formats, and the OpenVINO Model Converter (OVC) for adjusting models for optimal execution on target devices.

ai-platform-engineering

The AI Platform Engineering repository provides a collection of tools and resources for building and deploying AI models. It includes libraries for data preprocessing, model training, and model serving. The repository also contains example code and tutorials to help users get started with AI development. Whether you are a beginner or an experienced AI engineer, this repository offers valuable insights and best practices to streamline your AI projects.

For similar tasks

ComputerGYM

Optexity is a framework for training foundation models using human demonstrations of computer tasks. It enables recording, processing, and utilizing demonstrations to train AI agents for web-based tasks. The tool also plans to incorporate training through self-exploration, software documentations, and YouTube videos in the future.

Minic

Minic is a chess engine developed for learning about chess programming and modern C++. It is compatible with CECP and UCI protocols, making it usable in various software. Minic has evolved from a one-file code to a more classic C++ style, incorporating features like evaluation tuning, perft, tests, and more. It has integrated NNUE frameworks from Stockfish and Seer implementations to enhance its strength. Minic is currently ranked among the top engines with an Elo rating around 3400 at CCRL scale.

Kolo

Kolo is a lightweight tool for fast and efficient data generation, fine-tuning, and testing of Large Language Models (LLMs) on your local machine. It simplifies the fine-tuning and data generation process, runs locally without the need for cloud-based services, and supports popular LLM toolkits. Kolo is built using tools like Unsloth, Torchtune, Llama.cpp, Ollama, Docker, and Open WebUI. It requires Windows 10 OS or higher, Nvidia GPU with CUDA 12.1 capability, and 8GB+ VRAM, and 16GB+ system RAM. Users can join the Discord group for issues or feedback. The tool provides easy setup, training data generation, and integration with major LLM frameworks.

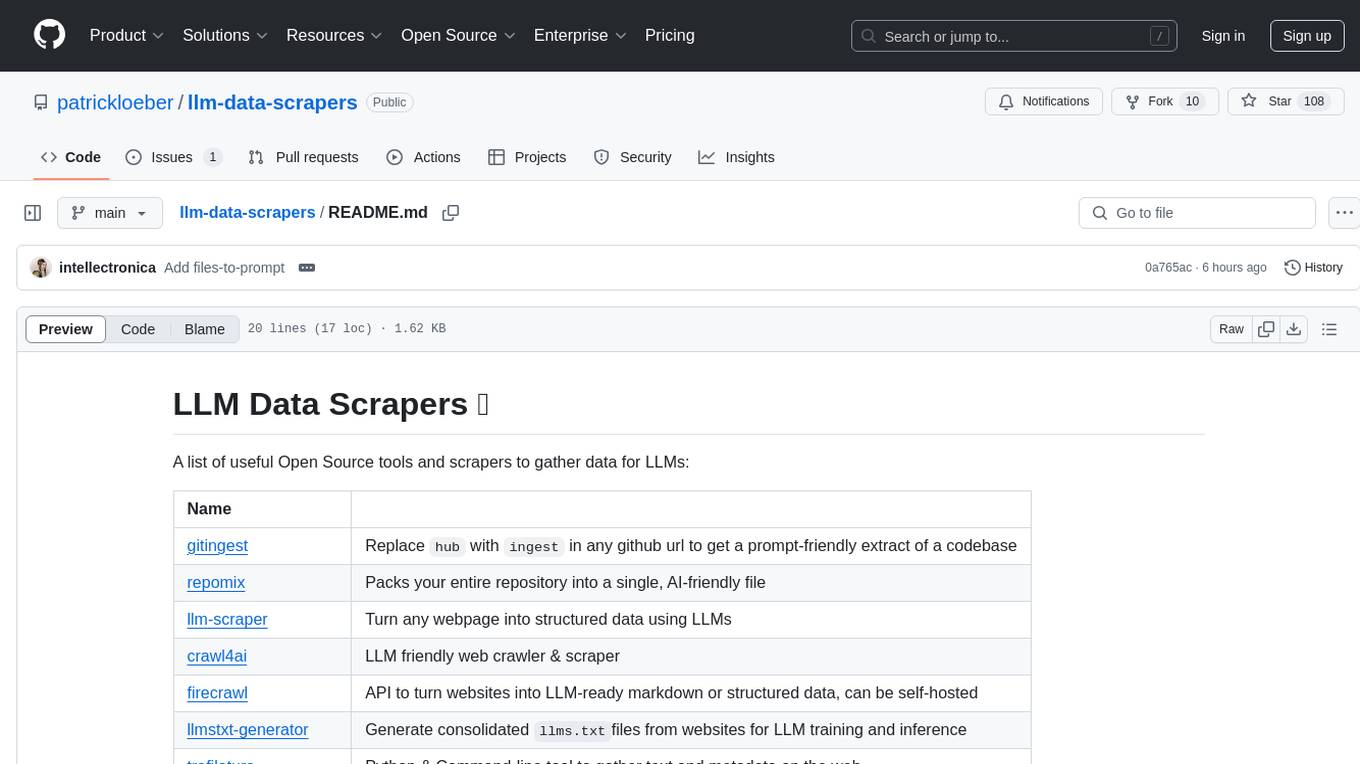

llm-data-scrapers

LLM Data Scrapers is a collection of open source tools and scrapers designed to gather data for Large Language Models (LLMs). The repository includes various tools such as gitingest for extracting codebases, repomix for packing repositories into AI-friendly files, llm-scraper for converting webpages into structured data, crawl4ai for web crawling, and firecrawl for turning websites into LLM-ready markdown or structured data. Additionally, the repository offers tools like llmstxt-generator for generating training data, trafilatura for gathering web text and metadata, RepoToTextForLLMs for fetching repo content, marker for converting PDFs, reader for converting URLs to LLM-friendly inputs, and files-to-prompt for concatenating files into prompts for LLMs.

1.5-Pints

1.5-Pints is a repository that provides a recipe to pre-train models in 9 days, aiming to create AI assistants comparable to Apple OpenELM and Microsoft Phi. It includes model architecture, training scripts, and utilities for 1.5-Pints and 0.12-Pint developed by Pints.AI. The initiative encourages replication, experimentation, and open-source development of Pint by sharing the model's codebase and architecture. The repository offers installation instructions, dataset preparation scripts, model training guidelines, and tools for model evaluation and usage. Users can also find information on finetuning models, converting lit models to HuggingFace models, and running Direct Preference Optimization (DPO) post-finetuning. Additionally, the repository includes tests to ensure code modifications do not disrupt the existing functionality.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM: * Set LLM usage limits for users on different pricing tiers * Track LLM usage on a per user and per organization basis * Block or redact requests containing PIIs * Improve LLM reliability with failovers, retries and caching * Distribute API keys with rate limits and cost limits for internal development/production use cases * Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.