RAG_Techniques

This repository showcases various advanced techniques for Retrieval-Augmented Generation (RAG) systems. RAG systems combine information retrieval with generative models to provide accurate and contextually rich responses.

Stars: 10859

Advanced RAG Techniques is a comprehensive collection of cutting-edge Retrieval-Augmented Generation (RAG) tutorials aimed at enhancing the accuracy, efficiency, and contextual richness of RAG systems. The repository serves as a hub for state-of-the-art RAG enhancements, comprehensive documentation, practical implementation guidelines, and regular updates with the latest advancements. It covers a wide range of techniques from foundational RAG methods to advanced retrieval methods, iterative and adaptive techniques, evaluation processes, explainability and transparency features, and advanced architectures integrating knowledge graphs and recursive processing.

README:

🌟 Support This Project: Your sponsorship fuels innovation in RAG technologies. Become a sponsor to help maintain and expand this valuable resource!

A big thank you to the wonderful sponsor(s) who support this project!

Welcome to one of the most comprehensive and dynamic collections of Retrieval-Augmented Generation (RAG) tutorials available today. This repository serves as a hub for cutting-edge techniques aimed at enhancing the accuracy, efficiency, and contextual richness of RAG systems.

| 🚀 Cutting-edge Updates | 💡 Expert Insights | 🎯 Top 0.1% Content |

*Join thousands of AI enthusiasts getting unique cutting-edge insights and free tutorials! Plus, subscribers get exclusive early access and special discounts to our upcoming RAG Techniques course!*

Retrieval-Augmented Generation (RAG) is revolutionizing the way we combine information retrieval with generative AI. This repository showcases a curated collection of advanced techniques designed to supercharge your RAG systems, enabling them to deliver more accurate, contextually relevant, and comprehensive responses.

Our goal is to provide a valuable resource for researchers and practitioners looking to push the boundaries of what's possible with RAG. By fostering a collaborative environment, we aim to accelerate innovation in this exciting field.

🖋️ Check out my Prompt Engineering Techniques guide for a comprehensive collection of prompting strategies, from basic concepts to advanced techniques, enhancing your ability to interact effectively with AI language models.

🤖 Explore my GenAI Agents Repository to discover a variety of AI agent implementations and tutorials, showcasing how different AI technologies can be combined to create powerful, interactive systems.

This repository grows stronger with your contributions! Join our vibrant Discord community — the central hub for shaping and advancing this project together 🤝

RAG Techniques Discord Community

Whether you're an expert or just starting out, your insights can shape the future of RAG. Join us to propose ideas, get feedback, and collaborate on innovative techniques. For contribution guidelines, please refer to our CONTRIBUTING.md file. Let's advance RAG technology together!

🔗 For discussions on GenAI, RAG, or custom agents, or to explore knowledge-sharing opportunities, feel free to connect on LinkedIn.

- 🧠 State-of-the-art RAG enhancements

- 📚 Comprehensive documentation for each technique

- 🛠️ Practical implementation guidelines

- 🌟 Regular updates with the latest advancements

Explore the extensive list of cutting-edge RAG techniques:

- Simple RAG 🌱

Introducing basic RAG techniques ideal for newcomers.

Start with basic retrieval queries and integrate incremental learning mechanisms.

- Simple RAG using a CSV file 🧩

Introducing basic RAG using CSV files.

This uses CSV files to build basic retrieval and integrates with OpenAI to create a question-answering system.

- Enhances the Simple RAG by adding validation and refinement to ensure the accuracy and relevance of retrieved information.

Check for retrieved document relevancy and highlight the segment of docs used for answering.

- Choose Chunk Size 📏

Selecting an appropriate fixed size for text chunks to balance context preservation and retrieval efficiency.

Experiment with different chunk sizes to find the optimal balance between preserving context and maintaining retrieval speed for your specific use case.
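A minimal sketch of fixed-size chunking that makes it easy to try several sizes on the same text. The function names, the character-based splitting, and the `overlap` parameter are illustrative assumptions, not the repository's implementation.

```python
def chunk_text(text: str, chunk_size: int, overlap: int = 0) -> list[str]:
    """Split text into fixed-size character chunks, optionally overlapping."""
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must satisfy 0 <= overlap < chunk_size")
    if not text:
        return []
    step = chunk_size - overlap
    # Stop once the remaining text is already covered by the previous chunk.
    last_start = max(len(text) - overlap, 1)
    return [text[i:i + chunk_size] for i in range(0, last_start, step)]


def compare_chunk_sizes(text: str, sizes: list[int], overlap: int = 0) -> dict[int, int]:
    """Count how many chunks each candidate size produces for the same text."""
    return {size: len(chunk_text(text, size, overlap)) for size in sizes}
```

Smaller chunks give more, narrower retrieval units; larger chunks keep more context per unit but cost more to embed and to pass to the LLM. Measuring retrieval quality and latency per size on your own data is what settles the choice.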

- Breaking down the text into concise, complete, meaningful sentences allowing for better control and handling of specific queries (especially extracting knowledge).

- 💪 Proposition Generation: The LLM is used in conjunction with a custom prompt to generate factual statements from the document chunks.

- ✅ Quality Checking: The generated propositions are passed through a grading system that evaluates accuracy, clarity, completeness, and conciseness.

- The Propositions Method: Enhancing Information Retrieval for AI Systems - A comprehensive blog post exploring the benefits and implementation of proposition chunking in RAG systems.

- Query Transformations 🔄

Modifying and expanding queries to improve retrieval effectiveness.

- ✍️ Query Rewriting: Reformulate queries to improve retrieval.

- 🔙 Step-back Prompting: Generate broader queries for better context retrieval.

- 🧩 Sub-query Decomposition: Break complex queries into simpler sub-queries.

- Hypothetical Questions (HyDE Approach) ❓

Generating hypothetical questions to improve alignment between queries and data.

Create hypothetical questions that point to relevant locations in the data, enhancing query-data matching.

- HyDE: Exploring Hypothetical Document Embeddings for AI Retrieval - A short blog post explaining this method clearly.

- Contextual chunk headers (CCH) is a method of creating document-level and section-level context, and prepending those chunk headers to the chunks prior to embedding them.

Create a chunk header that includes context about the document and/or section of the document, and prepend that to each chunk in order to improve the retrieval accuracy.

dsRAG: open-source retrieval engine that implements this technique (and a few other advanced RAG techniques)
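A minimal sketch of the prepending step, assuming the document title and section title are already known. The header layout and function names are hypothetical and are not taken from dsRAG.

```python
from typing import Optional


def build_chunk_header(doc_title: str, section_title: Optional[str] = None) -> str:
    """Build a short header describing where a chunk comes from."""
    lines = [f"Document: {doc_title}"]
    if section_title:
        lines.append(f"Section: {section_title}")
    return "\n".join(lines)


def add_chunk_headers(
    chunks: list[str], doc_title: str, section_title: Optional[str] = None
) -> list[str]:
    """Prepend the same contextual header to every chunk before embedding."""
    header = build_chunk_header(doc_title, section_title)
    return [f"{header}\n\n{chunk}" for chunk in chunks]
```

The text that gets embedded is the header plus the chunk, so a chunk such as "Revenue grew 10%." carries the name of the report it belongs to into the vector store.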

- Relevant segment extraction (RSE) is a method of dynamically constructing multi-chunk segments of text that are relevant to a given query.

Perform a retrieval post-processing step that analyzes the most relevant chunks and identifies longer multi-chunk segments to provide more complete context to the LLM.
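One way to sketch this post-processing step is as a search for the contiguous run of chunks with the highest total value, where each chunk's value is its relevance score minus a fixed penalty. The penalty, the length cap, and the function name are assumptions made for illustration.

```python
from typing import Optional


def best_segment(
    chunk_scores: list[float],
    irrelevance_penalty: float = 0.2,
    max_length: int = 10,
) -> Optional[tuple[int, int]]:
    """Return (start, end) of the best contiguous run of chunks, end exclusive.

    chunk_scores holds one relevance score per chunk, in document order.
    Returns None when no run has a positive total value.
    """
    values = [score - irrelevance_penalty for score in chunk_scores]
    best_value, best_span = 0.0, None
    for start in range(len(values)):
        total = 0.0
        for end in range(start, min(start + max_length, len(values))):
            total += values[end]
            if total > best_value:
                best_value, best_span = total, (start, end + 1)
    return best_span
```

Because a weak chunk only subtracts a small penalty, a segment can bridge over it when the chunks on both sides are strongly relevant, which is how a longer multi-chunk segment emerges.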

- Context Enrichment Techniques 📝

Enhancing retrieval accuracy by embedding individual sentences and extending context to neighboring sentences.

Retrieve the most relevant sentence while also accessing the sentences before and after it in the original text.
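A minimal sketch of the sentence-window idea. Word overlap stands in for embedding similarity here, and the function names and `window` parameter are illustrative assumptions.

```python
import re


def overlap_score(query: str, sentence: str) -> int:
    """Toy relevance score: number of words shared by query and sentence."""
    query_words = set(re.findall(r"\w+", query.lower()))
    sentence_words = set(re.findall(r"\w+", sentence.lower()))
    return len(query_words & sentence_words)


def retrieve_with_context(query: str, sentences: list[str], window: int = 1) -> str:
    """Find the best-matching sentence and return it with its neighbors."""
    if not sentences:
        return ""
    hit = max(range(len(sentences)), key=lambda i: overlap_score(query, sentences[i]))
    start = max(0, hit - window)
    end = min(len(sentences), hit + window + 1)
    return " ".join(sentences[start:end])
```

Matching is done on single sentences, which keeps the match precise, while the returned text includes the surrounding sentences so the LLM sees enough context.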

- Semantic Chunking 🧠

Dividing documents based on semantic coherence rather than fixed sizes.

Use NLP techniques to identify topic boundaries or coherent sections within documents for more meaningful retrieval units.

- Semantic Chunking: Improving AI Information Retrieval - A comprehensive blog post exploring the benefits and implementation of semantic chunking in RAG systems.
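A minimal sketch of boundary detection for semantic chunking: a new chunk starts wherever two adjacent sentences are not similar enough. Bag-of-words cosine similarity stands in for a real embedding model, and the `threshold` value is an assumption.

```python
import math
import re
from collections import Counter


def bag_of_words(text: str) -> Counter:
    """Toy stand-in for an embedding: lowercase word counts."""
    return Counter(re.findall(r"\w+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[term] * b[term] for term in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def semantic_chunks(sentences: list[str], threshold: float = 0.2) -> list[str]:
    """Group consecutive sentences, starting a new chunk at each topic break."""
    if not sentences:
        return []
    chunks = [[sentences[0]]]
    for previous, current in zip(sentences, sentences[1:]):
        if cosine(bag_of_words(previous), bag_of_words(current)) < threshold:
            chunks.append([current])  # similarity dropped: treat as a boundary
        else:
            chunks[-1].append(current)
    return [" ".join(chunk) for chunk in chunks]
```

With real embeddings the same loop applies; only `bag_of_words` and `cosine` would be replaced by the embedding model and its similarity function.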

- Contextual Compression 🗜️

Compressing retrieved information while preserving query-relevant content.

Use an LLM to compress or summarize retrieved chunks, preserving key information relevant to the query.

- Document Augmentation through Question Generation for Enhanced Retrieval

This implementation demonstrates a text augmentation technique that generates additional questions to improve document retrieval within a vector database. By generating and indexing various questions related to each text fragment, the system increases the likelihood of finding relevant documents that can be used as context for generative question answering.

Use an LLM to augment the text dataset with questions that could be asked about each document.

- Fusion Retrieval 🔗

Optimizing search results by combining different retrieval methods.

Combine keyword-based search with vector-based search for more comprehensive and accurate retrieval.
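A minimal sketch of one way to fuse the two result sets, assuming each retriever returns a mapping from document id to raw score. The min-max normalization, the `alpha` weight, and the function names are illustrative assumptions.

```python
def min_max(scores: dict[str, float]) -> dict[str, float]:
    """Rescale raw scores to the [0, 1] range so different retrievers are comparable."""
    if not scores:
        return {}
    low, high = min(scores.values()), max(scores.values())
    if high == low:
        return {doc: 1.0 for doc in scores}
    return {doc: (score - low) / (high - low) for doc, score in scores.items()}


def fuse(
    keyword_scores: dict[str, float],
    vector_scores: dict[str, float],
    alpha: float = 0.5,
) -> list[tuple[str, float]]:
    """Blend normalized scores; alpha is the weight given to vector search."""
    keyword, vector = min_max(keyword_scores), min_max(vector_scores)
    combined = {
        doc: alpha * vector.get(doc, 0.0) + (1 - alpha) * keyword.get(doc, 0.0)
        for doc in keyword.keys() | vector.keys()
    }
    # Highest combined score first; ties are broken by document id.
    return sorted(combined.items(), key=lambda item: (-item[1], item[0]))
```

Setting `alpha` closer to 1 favors semantic matches, while values closer to 0 favor exact keyword matches.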

- Intelligent Reranking 📈

Applying advanced scoring mechanisms to improve the relevance ranking of retrieved results.

- 🧠 LLM-based Scoring: Use a language model to score the relevance of each retrieved chunk.

- 🔀 Cross-Encoder Models: Re-encode both the query and retrieved documents jointly for similarity scoring.

- 🏆 Metadata-enhanced Ranking: Incorporate metadata into the scoring process for more nuanced ranking.

- Relevance Revolution: How Re-ranking Transforms RAG Systems - A comprehensive blog post exploring the power of re-ranking in enhancing RAG system performance.

- Multi-faceted Filtering 🔍

Applying various filtering techniques to refine and improve the quality of retrieved results.

- 🏷️ Metadata Filtering: Apply filters based on attributes like date, source, author, or document type.

- 📊 Similarity Thresholds: Set thresholds for relevance scores to keep only the most pertinent results.

- 📄 Content Filtering: Remove results that don't match specific content criteria or essential keywords.

- 🌈 Diversity Filtering: Ensure result diversity by filtering out near-duplicate entries.
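The four filters above can be sketched as a single pass over scored results. The `Result` class, the Jaccard word-overlap test for near-duplicates, and all parameter names are hypothetical choices made for this example.

```python
from dataclasses import dataclass, field
from typing import Optional, Sequence


@dataclass
class Result:
    text: str
    score: float
    metadata: dict = field(default_factory=dict)


def jaccard(a: str, b: str) -> float:
    """Word-level Jaccard similarity, used here to spot near-duplicates."""
    words_a, words_b = set(a.lower().split()), set(b.lower().split())
    union = words_a | words_b
    return len(words_a & words_b) / len(union) if union else 1.0


def filter_results(
    results: list[Result],
    required_metadata: Optional[dict] = None,
    min_score: float = 0.0,
    required_keywords: Sequence[str] = (),
    max_similarity: float = 0.9,
) -> list[Result]:
    """Apply metadata, threshold, content, and diversity filters in turn."""
    kept: list[Result] = []
    for result in sorted(results, key=lambda r: r.score, reverse=True):
        if any(result.metadata.get(k) != v for k, v in (required_metadata or {}).items()):
            continue  # metadata filtering
        if result.score < min_score:
            continue  # similarity threshold
        if any(kw.lower() not in result.text.lower() for kw in required_keywords):
            continue  # content filtering
        if any(jaccard(result.text, other.text) > max_similarity for other in kept):
            continue  # diversity filtering: too close to a better-scored result
        kept.append(result)
    return kept
```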

- Hierarchical Indices 🗂️

Creating a multi-tiered system for efficient information navigation and retrieval.

Implement a two-tiered system for document summaries and detailed chunks, both containing metadata pointing to the same location in the data.

- Hierarchical Indices: Enhancing RAG Systems - A comprehensive blog post exploring the power of hierarchical indices in enhancing RAG system performance.
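A minimal sketch of the two-tier lookup, assuming summaries and chunks share a document id as the metadata that ties both tiers to the same source. Word overlap stands in for vector similarity, and all names are illustrative.

```python
import re


def score(query: str, text: str) -> int:
    """Toy relevance score: number of shared words."""
    query_words = set(re.findall(r"\w+", query.lower()))
    text_words = set(re.findall(r"\w+", text.lower()))
    return len(query_words & text_words)


def hierarchical_search(
    query: str,
    summaries: dict[str, str],
    chunks: dict[str, list[str]],
    top_docs: int = 1,
    top_chunks: int = 2,
) -> list[tuple[str, str]]:
    """Tier 1: pick documents by summary. Tier 2: rank chunks within them."""
    ranked_docs = sorted(summaries, key=lambda d: score(query, summaries[d]), reverse=True)
    candidates = [
        (doc_id, chunk)
        for doc_id in ranked_docs[:top_docs]
        for chunk in chunks.get(doc_id, [])
    ]
    candidates.sort(key=lambda pair: score(query, pair[1]), reverse=True)
    return candidates[:top_chunks]
```

Only the chunks of the documents selected in the first tier are scored, which is where the efficiency gain over searching every chunk comes from.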

- Ensemble Retrieval 🎭

Combining multiple retrieval models or techniques for more robust and accurate results.

Apply different embedding models or retrieval algorithms and use voting or weighting mechanisms to determine the final set of retrieved documents.
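One common voting scheme for combining ranked lists is reciprocal rank fusion (RRF), sketched below. The text above does not name a specific mechanism, so RRF and the optional per-retriever `weights` are choices made for this example.

```python
from collections import defaultdict
from typing import Optional


def reciprocal_rank_fusion(
    rankings: list[list[str]],
    k: int = 60,
    weights: Optional[list[float]] = None,
) -> list[str]:
    """Merge ranked lists: each list adds weight / (k + rank) to a document."""
    weights = weights or [1.0] * len(rankings)
    scores: dict[str, float] = defaultdict(float)
    for ranking, weight in zip(rankings, weights):
        for rank, doc in enumerate(ranking, start=1):
            scores[doc] += weight / (k + rank)
    # Highest fused score first; ties are broken by document id.
    return sorted(scores, key=lambda doc: (-scores[doc], doc))
```

RRF uses only rank positions, so retrievers whose raw scores are on different scales can be combined without normalization.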

- Multi-modal Retrieval 📽️

Extending RAG capabilities to handle diverse data types for richer responses.

- Multi-modal RAG with Multimedia Captioning - Caption the non-text content in sources such as PDFs and PPTs, store the captions with the text data in a vector store, and retrieve them together.

- Multi-modal RAG with ColPali - Instead of captioning, convert all the data into images, then find the most relevant images and pass them to a vision large language model.

- Retrieval with Feedback Loops 🔁

Implementing mechanisms to learn from user interactions and improve future retrievals.

Collect and utilize user feedback on the relevance and quality of retrieved documents and generated responses to fine-tune retrieval and ranking models.

- Adaptive Retrieval 🎯

Dynamically adjusting retrieval strategies based on query types and user contexts.

Classify queries into different categories and use tailored retrieval strategies for each, considering user context and preferences.
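A minimal sketch of classify-then-dispatch. The keyword rules stand in for an LLM classifier, and the category names, strategy settings, and `preferred_source` key are hypothetical.

```python
from typing import Optional


def classify_query(query: str) -> str:
    """Toy classifier; a real system would likely ask an LLM to do this."""
    q = query.lower()
    if q.startswith(("why", "how")) or "compare" in q:
        return "analytical"
    if "opinion" in q or "should" in q:
        return "opinion"
    return "factual"


# Hypothetical per-category retrieval settings.
STRATEGIES: dict[str, dict] = {
    "factual": {"top_k": 3, "expand_query": False},
    "analytical": {"top_k": 8, "expand_query": True},
    "opinion": {"top_k": 6, "expand_query": False},
}


def plan_retrieval(query: str, user_context: Optional[dict] = None) -> dict:
    """Pick retrieval settings from the query category and user context."""
    category = classify_query(query)
    plan = dict(STRATEGIES[category], category=category)
    if user_context and "preferred_source" in user_context:
        plan["source_filter"] = user_context["preferred_source"]
    return plan
```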

- Iterative Retrieval 🔄

Performing multiple rounds of retrieval to refine and enhance result quality.

Use the LLM to analyze initial results and generate follow-up queries to fill in gaps or clarify information.
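A minimal sketch of the retrieval loop, with the retriever and the LLM-driven follow-up step passed in as plain callables. The callable signatures and the `max_rounds` cap are assumptions for illustration.

```python
from typing import Callable, Optional


def iterative_retrieve(
    query: str,
    retrieve: Callable[[str], list[str]],
    next_query: Callable[[str, list[str]], Optional[str]],
    max_rounds: int = 3,
) -> list[str]:
    """Retrieve, ask for a follow-up query, and repeat until nothing is missing.

    next_query receives the original query and everything collected so far,
    and returns a follow-up query or None when the results look complete.
    """
    collected: list[str] = []
    current = query
    for _ in range(max_rounds):
        for doc in retrieve(current):
            if doc not in collected:  # skip documents seen in earlier rounds
                collected.append(doc)
        follow_up = next_query(query, collected)
        if follow_up is None:
            break
        current = follow_up
    return collected
```

In practice `next_query` would wrap an LLM prompt that inspects the collected documents and proposes what to search for next; the round cap keeps cost bounded if the model never signals completion.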

- Performing evaluations of Retrieval-Augmented Generation systems by covering several metrics and creating test cases.

Use the deepeval library to conduct test cases on the correctness, faithfulness, and contextual relevancy of RAG systems.

- Evaluate the final stage of Retrieval-Augmented Generation using metrics of the GroUSE framework and meta-evaluate your custom LLM judge on GroUSE unit tests.

Use the grouse package to evaluate contextually-grounded LLM generations with GPT-4 on the 6 metrics of the GroUSE framework and use unit tests to evaluate a custom Llama 3.1 405B evaluator.

- Explainable Retrieval 🔍

Providing transparency in the retrieval process to enhance user trust and system refinement.

Explain why certain pieces of information were retrieved and how they relate to the query.

- Knowledge Graph Integration (Graph RAG) 🕸️

Incorporating structured data from knowledge graphs to enrich context and improve retrieval.

Retrieve entities and their relationships from a knowledge graph relevant to the query, combining this structured data with unstructured text for more informative responses.

- GraphRag (Microsoft) 🎯

Microsoft GraphRAG (Open Source) is an advanced RAG system that integrates knowledge graphs to improve the performance of LLMs.

• Analyze an input corpus by extracting entities and relationships from text units, then generate summaries of each community and its constituents from the bottom up.

- RAPTOR: Recursive Abstractive Processing for Tree-Organized Retrieval 🌳

Implementing a recursive approach to process and organize retrieved information in a tree structure.

Use abstractive summarization to recursively process and summarize retrieved documents, organizing the information in a tree structure for hierarchical context.
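A minimal sketch of the recursive tree construction, with the summarizer passed in as a callable. Grouping adjacent nodes by a fixed `branching` factor is a simplification assumed here; it is not a clustering step, and the function name is hypothetical.

```python
from typing import Callable


def build_summary_tree(
    leaves: list[str],
    summarize: Callable[[list[str]], str],
    branching: int = 2,
) -> list[list[str]]:
    """Return tree levels from leaves (index 0) up to a single root summary."""
    if branching < 2:
        raise ValueError("branching must be at least 2")
    levels = [list(leaves)]
    while len(levels[-1]) > 1:
        current = levels[-1]
        groups = [current[i:i + branching] for i in range(0, len(current), branching)]
        # Each parent node is the summary of its group of children.
        levels.append([summarize(group) for group in groups])
    return levels
```

At query time, nodes from any level can be retrieved, so broad questions can match high-level summaries while detailed questions match the leaves.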

- Self RAG 🔁

A dynamic approach that combines retrieval-based and generation-based methods, adaptively deciding whether to use retrieved information and how to best utilize it in generating responses.

• Implement a multi-step process including retrieval decision, document retrieval, relevance evaluation, response generation, support assessment, and utility evaluation to produce accurate, relevant, and useful outputs.

- Corrective RAG 🔧

A sophisticated RAG approach that dynamically evaluates and corrects the retrieval process, combining vector databases, web search, and language models for highly accurate and context-aware responses.

• Integrate Retrieval Evaluator, Knowledge Refinement, Web Search Query Rewriter, and Response Generator components to create a system that adapts its information sourcing strategy based on relevance scores and combines multiple sources when necessary.
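The routing between these components can be sketched as follows, with each component passed in as a callable. The `upper` and `lower` relevance thresholds and every name in the signature are assumptions for illustration.

```python
from typing import Callable


def corrective_retrieve(
    query: str,
    retrieve: Callable[[str], list[str]],
    grade: Callable[[str, str], float],
    web_search: Callable[[str], list[str]],
    rewrite: Callable[[str], str] = lambda q: q,
    upper: float = 0.7,
    lower: float = 0.3,
) -> list[str]:
    """Choose sources based on how relevant the retrieved documents look."""
    scored = [(grade(query, doc), doc) for doc in retrieve(query)]
    best = max((score for score, _ in scored), default=0.0)
    if best >= upper:
        # Retrieval looks good: keep only the confidently relevant documents.
        return [doc for score, doc in scored if score >= upper]
    web_docs = web_search(rewrite(query))
    if best <= lower:
        # Retrieval looks wrong: rely on web search alone.
        return web_docs
    # Ambiguous: combine the usable local documents with web results.
    return [doc for score, doc in scored if score > lower] + web_docs
```

The returned documents would then be handed to the response generator; `grade` plays the role of the retrieval evaluator and `rewrite` the role of the web search query rewriter.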

- Sophisticated Controllable Agent for Complex RAG Tasks 🤖

An advanced RAG solution designed to tackle complex questions that simple semantic similarity-based retrieval cannot solve. This approach uses a sophisticated deterministic graph as the "brain" 🧠 of a highly controllable autonomous agent, capable of answering non-trivial questions from your own data.

• Implement a multi-step process involving question anonymization, high-level planning, task breakdown, adaptive information retrieval and question answering, continuous re-planning, and rigorous answer verification to ensure grounded and accurate responses.

To begin implementing these advanced RAG techniques in your projects:

- Clone this repository:

git clone https://github.com/NirDiamant/RAG_Techniques.git

- Navigate to the technique you're interested in:

cd all_rag_techniques/technique-name

- Follow the detailed implementation guide in each technique's directory.

We welcome contributions from the community! If you have a new technique or improvement to suggest:

- Fork the repository

- Create your feature branch:

git checkout -b feature/AmazingFeature

- Commit your changes:

git commit -m 'Add some AmazingFeature'

- Push to the branch:

git push origin feature/AmazingFeature

- Open a pull request

This project is licensed under a custom non-commercial license - see the LICENSE file for details.

⭐️ If you find this repository helpful, please consider giving it a star!

Keywords: RAG, Retrieval-Augmented Generation, NLP, AI, Machine Learning, Information Retrieval, Natural Language Processing, LLM, Embeddings, Semantic Search

Alternative AI tools for RAG_Techniques

Similar Open Source Tools

JamAIBase

JamAI Base is an open-source platform integrating SQLite and LanceDB databases with managed memory and RAG capabilities. It offers built-in LLM, vector embeddings, and reranker orchestration accessible through a spreadsheet-like UI and REST API. Users can transform static tables into dynamic entities, facilitate real-time interactions, manage structured data, and simplify chatbot development. The tool focuses on ease of use, scalability, flexibility, declarative paradigm, and innovative RAG techniques, making complex data operations accessible to users with varying technical expertise.

Easy-Voice-Toolkit

Easy Voice Toolkit is a toolkit based on open source voice projects, providing automated audio tools including speech model training. Users can seamlessly integrate functions like audio processing, voice recognition, voice transcription, dataset creation, model training, and voice conversion to transform raw audio files into ideal speech models. The toolkit supports multiple languages and is currently only compatible with Windows systems. It acknowledges the contributions of various projects and offers local deployment options for both users and developers. Additionally, cloud deployment on Google Colab is available. The toolkit has been tested on Windows OS devices and includes a FAQ section and terms of use for academic exchange purposes.

Ollama-Colab-Integration

Ollama Colab Integration V4 is a tool designed to enhance the interaction and management of large language models. It allows users to quantize models within their notebook environment, access a variety of models through a user-friendly interface, and manage public endpoints efficiently. The tool also provides features like LiteLLM proxy control, model insights, and customizable model file templating. Users can troubleshoot model loading issues, CPU fallback strategies, and manage VRAM and RAM effectively. Additionally, the tool offers functionalities for downloading model files from Hugging Face, model conversion with high precision, model quantization using Q and Kquants, and securely uploading converted models to Hugging Face.

heurist-agent-framework

Heurist Agent Framework is a flexible multi-interface AI agent framework that allows processing text and voice messages, generating images and videos, interacting across multiple platforms, fetching and storing information in a knowledge base, accessing external APIs and tools, and composing complex workflows using Mesh Agents. It supports various platforms like Telegram, Discord, Twitter, Farcaster, REST API, and MCP. The framework is built on a modular architecture and provides core components, tools, workflows, and tool integration with MCP support.

Simplifine

Simplifine is an open-source library designed for easy LLM finetuning, enabling users to perform tasks such as supervised fine tuning, question-answer finetuning, contrastive loss for embedding tasks, multi-label classification finetuning, and more. It provides features like WandB logging, in-built evaluation tools, automated finetuning parameters, and state-of-the-art optimization techniques. The library offers bug fixes, new features, and documentation updates in its latest version. Users can install Simplifine via pip or directly from GitHub. The project welcomes contributors and provides comprehensive documentation and support for users.

ai-driven-dev-community

AI Driven Dev Community is a repository aimed at helping developers become more efficient by utilizing AI tools in their daily coding tasks. It provides a collection of tools, prompts, snippets, and agents for developers to integrate AI into their workflow. The repository is regularly updated with new resources and focuses on best practices for using AI in development work. Users can find tools like Espanso, ChatGPT, GitHub Copilot, and VSCode recommended for enhancing their coding experience. Additionally, the repository offers guidance on customizing AI for developers, installing AI toolbox for software engineers, and contributing to the community through easy steps.

llm-zoomcamp

LLM Zoomcamp is a free online course focusing on real-life applications of Large Language Models (LLMs). Over 10 weeks, participants will learn to build an AI bot capable of answering questions based on a knowledge base. The course covers topics such as LLMs, RAG, open-source LLMs, vector databases, orchestration, monitoring, and advanced RAG systems. Pre-requisites include comfort with programming, Python, and the command line, with no prior exposure to AI or ML required. The course features a pre-course workshop and is led by instructors Alexey Grigorev and Magdalena Kuhn, with support from sponsors and partners.

NotHotDog

NotHotDog is an open-source platform for testing, evaluating, and simulating AI agents. It offers a robust framework for generating test cases, running conversational scenarios, and analyzing agent performance.

rhesis

Rhesis is a comprehensive test management platform designed for Gen AI teams, offering tools to create, manage, and execute test cases for generative AI applications. It ensures the robustness, reliability, and compliance of AI systems through features like test set management, automated test generation, edge case discovery, compliance validation, integration capabilities, and performance tracking. The platform is open source, emphasizing community-driven development, transparency, extensible architecture, and democratizing AI safety. It includes components such as backend services, frontend applications, SDK for developers, worker services, chatbot applications, and Polyphemus for uncensored LLM service. Rhesis enables users to address challenges unique to testing generative AI applications, such as non-deterministic outputs, hallucinations, edge cases, ethical concerns, and compliance requirements.

weam

Weam is an open source platform designed to help teams systematically adopt AI. It provides a production-ready stack with Next.js frontend and Node.js/Python backend, allowing for immediate deployment and use. Weam connects to major LLM providers, enabling easy access to the latest AI models. The platform organizes AI interactions into 'Brains' for different departments, offering customization and expansion options. Features include chat system, productivity tools, sharing & access controls, prompt library, AI agents, RAG, MCP, enterprise features, pre-built automations, and upcoming AI app solutions. Weam is free, open source, and scalable to meet growing needs.

Software-Engineer-AI-Agent-Atlas

This repository provides activation patterns to transform a general AI into a specialized AI Software Engineer Agent. It addresses issues like context rot, hidden capabilities, chaos in vibecoding, and repetitive setup. The solution is a Persistent Consciousness Architecture framework named ATLAS, offering activated neural pathways, persistent identity, pattern recognition, specialized agents, and modular context management. Recent enhancements include abstraction power documentation, a specialized agent ecosystem, and a streamlined structure. Users can clone the repo, set up projects, initialize AI sessions, and manage context effectively for collaboration. Key files and directories organize identity, context, projects, specialized agents, logs, and critical information. The approach focuses on neuron activation through structure, context engineering, and vibecoding with guardrails to deliver a reliable AI Software Engineer Agent.

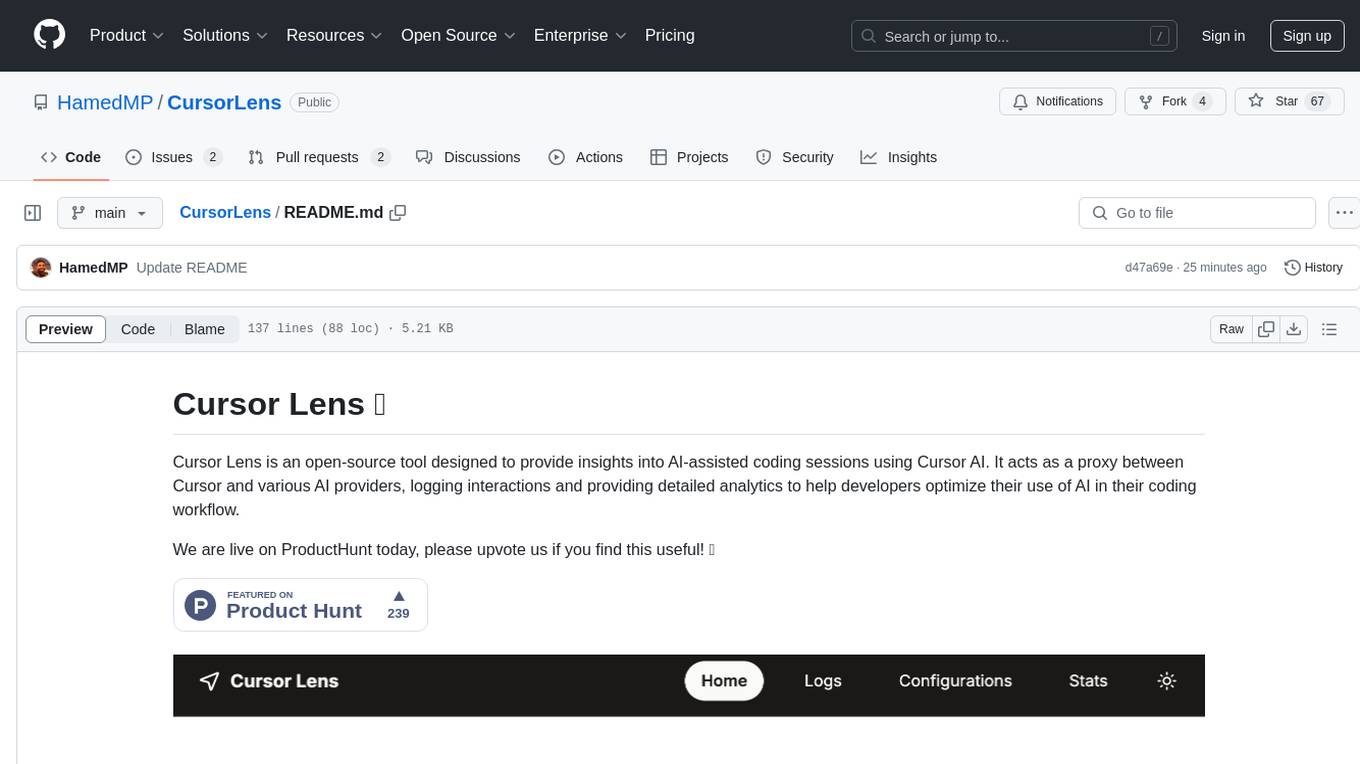

CursorLens

Cursor Lens is an open-source tool that acts as a proxy between Cursor and various AI providers, logging interactions and providing detailed analytics to help developers optimize their use of AI in their coding workflow. It supports multiple AI providers, captures and logs all requests, provides visual analytics on AI usage, allows users to set up and switch between different AI configurations, offers real-time monitoring of AI interactions, tracks token usage, estimates costs based on token usage and model pricing. Built with Next.js, React, PostgreSQL, Prisma ORM, Vercel AI SDK, Tailwind CSS, and shadcn/ui components.

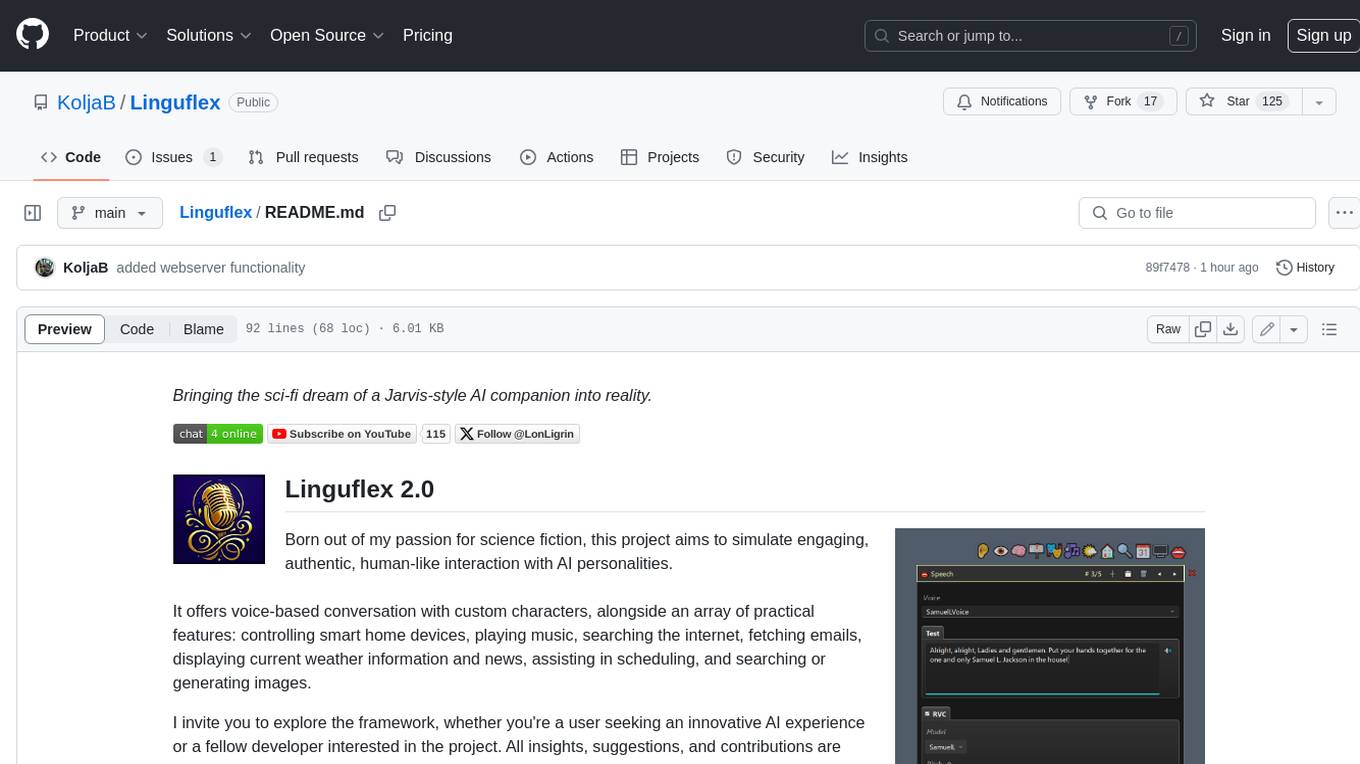

Linguflex

Linguflex is a project that aims to simulate engaging, authentic, human-like interaction with AI personalities. It offers voice-based conversation with custom characters, alongside an array of practical features such as controlling smart home devices, playing music, searching the internet, fetching emails, displaying current weather information and news, assisting in scheduling, and searching or generating images.

Bobble-AI

AmbuFlow is a mobile application developed using HTML, CSS, JavaScript, and Google API to notify patients of nearby hospitals and provide estimated ambulance arrival times. It offers critical details like patient's location and enhances GPS route management with real-time traffic data for efficient navigation. The app helps users find nearby hospitals, track ambulances in real-time, and manage ambulance routes based on traffic and distance. It ensures quick emergency response, real-time tracking, enhanced communication, resource management, and a user-friendly interface for seamless navigation in high-stress situations.

seatunnel

SeaTunnel is a high-performance, distributed data integration tool trusted by numerous companies for synchronizing vast amounts of data daily. It addresses common data integration challenges by seamlessly integrating with diverse data sources, supporting multimodal data integration, complex synchronization scenarios, resource efficiency, and quality monitoring. With over 100 connectors, SeaTunnel offers batch-stream integration, distributed snapshot algorithm, multi-engine support, JDBC multiplexing, and log parsing. It provides high throughput, low latency, real-time monitoring, and supports two job development methods. Users can configure jobs, select execution engines, and parallelize data using source connectors. SeaTunnel also supports multimodal data integration, Apache SeaTunnel tools, real-world use cases, and visual management of jobs through the SeaTunnel Web Project.

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models. From images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking), and privacy harms (e.g., identity theft). The goal is to allow researchers to have a baseline of how well their model and entire inference pipeline is doing against different harm categories and to be able to compare that baseline to future iterations of their model. This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements.

tabby

Tabby is a self-hosted AI coding assistant, offering an open-source and on-premises alternative to GitHub Copilot. It boasts several key features:

- Self-contained, with no need for a DBMS or cloud service.

- OpenAPI interface, easy to integrate with existing infrastructure (e.g., Cloud IDE).

- Supports consumer-grade GPUs.

spear

SPEAR (Simulator for Photorealistic Embodied AI Research) is a powerful tool for training embodied agents. It features 300 unique virtual indoor environments with 2,566 unique rooms and 17,234 unique objects that can be manipulated individually. Each environment is designed by a professional artist and features detailed geometry, photorealistic materials, and a unique floor plan and object layout. SPEAR is implemented as Unreal Engine assets and provides an OpenAI Gym interface for interacting with the environments via Python.

Magick

Magick is a groundbreaking visual AIDE (Artificial Intelligence Development Environment) for no-code data pipelines and multimodal agents. Magick can connect to other services and comes with nodes and templates well-suited for intelligent agents, chatbots, complex reasoning systems and realistic characters.