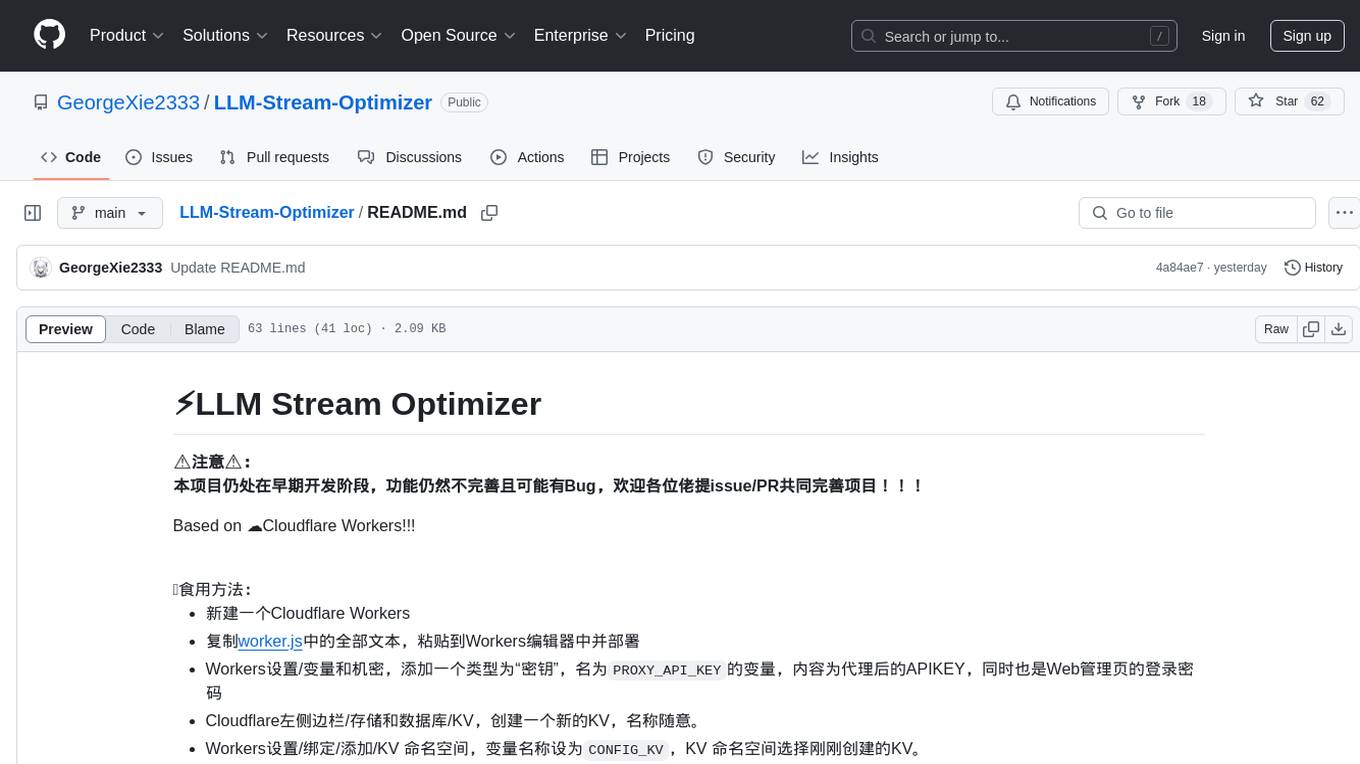

LLM-Stream-Optimizer

⚡基于 Cloudflare Workers 优化LLM流式输出,支持多种格式API,转换大型响应块,带Web管理页,原生Fetch请求(ShadowFetch),支持多KEY负载均衡

Stars: 112

LLM Stream Optimizer is a tool developed on Cloudflare Workers for optimizing streaming responses and managing multiple APIs. It features intelligent stream output optimization, adaptive delay algorithm, web API management page, and removal of unnecessary Cloudflare fetch headers. The tool aims to enhance API performance and provide a smooth user experience.

README:

本项目仍处在早期开发阶段,功能仍然不完善且可能有Bug,欢迎各位佬提issue/PR共同完善项目!!!

Based on ☁️Cloudflare Workers!!!

🍗食用方法:

- 新建一个Cloudflare Workers

- 复制worker.js中的全部文本,粘贴到Workers编辑器中并部署

- Workers设置/变量和机密,添加一个类型为“密钥”,名为

PROXY_API_KEY的变量,内容为代理后的APIKEY,同时也是Web管理页的登录密码 - Cloudflare左侧边栏/存储和数据库/KV,创建一个新的KV,名称随意。

- Workers设置/绑定/添加/KV 命名空间,变量名称设为

CONFIG_KV,KV 命名空间选择刚刚创建的KV。 - 部署完成,打开你的Workers域名即可访问管理面板!

变量:

PROXY_API_KEY=代理APIKEY,同时也是Web管理页的登录密码

CONFIG_KV=KV数据库,用于存储API数据及流式优化配置

功能:

API多合一

- 支持添加OpenAI、Anthropic、Google Gemini格式的API

- 支持添加多个OpenAI API

- 统一转为OpenAI格式API

智能流式输出优化

- 将大型响应块分解为逐字符输出

- 基于响应块大小和时间间隔智能调整字符间延迟

自适应延迟算法

- 检测响应数据块大小:块越大,字符延迟越小

- 监控响应时间间隔:间隔越长,字符延迟越大

- 确保输出平滑自然,没有明显停顿

Web API管理页面

- 支持通过Web管理页面调整API设置

- 访问workers域名根目录即为Web管理页面

- Web管理页面登录密码为变量

PROXY_API_KEY

剔除 Cloudflare 自带 fetch 的多余请求头

- 使用ShadowFetch替代Cloudflare Fetch

- 确保请求上游API时不会带有Cloudflare添加的其他请求头

- 支持对单个API设置启用或关闭原生Fetch以适配更多使用情景

支持/v1/models路径获取所有API的模型列表

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for LLM-Stream-Optimizer

Similar Open Source Tools

LLM-Stream-Optimizer

LLM Stream Optimizer is a tool developed on Cloudflare Workers for optimizing streaming responses and managing multiple APIs. It features intelligent stream output optimization, adaptive delay algorithm, web API management page, and removal of unnecessary Cloudflare fetch headers. The tool aims to enhance API performance and provide a smooth user experience.

AGiXT

AGiXT is a dynamic Artificial Intelligence Automation Platform engineered to orchestrate efficient AI instruction management and task execution across a multitude of providers. Our solution infuses adaptive memory handling with a broad spectrum of commands to enhance AI's understanding and responsiveness, leading to improved task completion. The platform's smart features, like Smart Instruct and Smart Chat, seamlessly integrate web search, planning strategies, and conversation continuity, transforming the interaction between users and AI. By leveraging a powerful plugin system that includes web browsing and command execution, AGiXT stands as a versatile bridge between AI models and users. With an expanding roster of AI providers, code evaluation capabilities, comprehensive chain management, and platform interoperability, AGiXT is consistently evolving to drive a multitude of applications, affirming its place at the forefront of AI technology.

bifrost

Bifrost is a high-performance AI gateway that unifies access to multiple providers through a single OpenAI-compatible API. It offers features like automatic failover, load balancing, semantic caching, and enterprise-grade functionalities. Users can deploy Bifrost in seconds with zero configuration, benefiting from its core infrastructure, advanced features, enterprise and security capabilities, and developer experience. The repository structure is modular, allowing for maximum flexibility. Bifrost is designed for quick setup, easy configuration, and seamless integration with various AI models and tools.

llxprt-code

LLxprt Code is an AI-powered coding assistant that works with any LLM provider, offering a command-line interface for querying and editing codebases, generating applications, and automating development workflows. It supports various subscriptions, provider flexibility, top open models, local model support, and a privacy-first approach. Users can interact with LLxprt Code in both interactive and non-interactive modes, leveraging features like subscription OAuth, multi-account failover, load balancer profiles, and extensive provider support. The tool also allows for the creation of advanced subagents for specialized tasks and integrates with the Zed editor for in-editor chat and code selection.

sparka

Sparka AI is a multi-provider AI chat tool that allows users to access various AI models like Claude, GPT-5, Gemini, and Grok through a single interface. It offers features such as document analysis, image generation, code execution, and research tools without the need for multiple subscriptions. The tool is open-source, production-ready, and provides capabilities for collaboration, secure authentication, attachment support, AI-powered image generation, syntax highlighting, resumable streams, chat branching, chat sharing, deep research, code execution, document creation, and web analytics. Built with modern technologies for scalability and performance, Sparka AI integrates with Vercel AI SDK, tRPC, Drizzle ORM, PostgreSQL, Redis, and AI SDK Gateway.

ToolNeuron

ToolNeuron is a secure, offline AI ecosystem for Android devices that allows users to run private AI models and dynamic plugins fully offline, with hardware-grade encryption ensuring maximum privacy. It enables users to have an offline-first experience, add capabilities without app updates through pluggable tools, and ensures security by design with strict plugin validation and sandboxing.

structured-prompt-builder

A lightweight, browser-first tool for designing well-structured AI prompts with a clean UI, live previews, a local Prompt Library, and optional Gemini-powered prompt optimization. It supports structured fields like Role, Task, Audience, Style, Tone, Constraints, Steps, Inputs, and Few-shot examples. Users can copy/download prompts in Markdown, JSON, and YAML formats, and utilize model parameters like Temperature, Top-p, Max tokens, Presence & Frequency penalties. The tool also features a Local Prompt Library for saving, loading, duplicating, and deleting prompts, as well as a Gemini Optimizer for cleaning grammar/clarity without altering the schema. It offers dark/light friendly styles and a focused reading mode for long prompts.

basilic

Basilic is a full-stack monorepo starter designed for teams developing Web3 and AI applications. It provides SDKs, public APIs, and multichain support to accelerate feature shipping. The starter includes AI-first development workflows, REST API with JWT authentication, SDK generation, Web3 and AI templates, design system, preconfigured development tools, security measures, multichain support, and TypeScript-first approach. It offers a technology stack covering AI, frontend, backend, Web3, and DevOps, along with various apps, packages, and scripts for setup, formatting, linting, testing, security, hooks, and miscellaneous tasks.

AgriTech

AgriTech is an AI-powered smart agriculture platform designed to assist farmers with crop recommendations, yield prediction, plant disease detection, and community-driven collaboration—enabling sustainable and data-driven farming practices. It offers AI-driven decision support for modern agriculture, early-stage plant disease detection, crop yield forecasting using machine learning models, and a collaborative ecosystem for farmers and stakeholders. The platform includes features like crop recommendation, yield prediction, disease detection, an AI chatbot for platform guidance and agriculture support, a farmer community, and shopkeeper listings. AgriTech's AI chatbot provides comprehensive support for farmers with features like platform guidance, agriculture support, decision making, image analysis, and 24/7 support. The tech stack includes frontend technologies like HTML5, CSS3, JavaScript, backend technologies like Python (Flask) and optional Node.js, machine learning libraries like TensorFlow, Scikit-learn, OpenCV, and database & DevOps tools like MySQL, MongoDB, Firebase, Docker, and GitHub Actions.

dataset-viewer

Dataset Viewer is a modern, high-performance tool built with Tauri, React, and TypeScript, designed to handle massive datasets from multiple sources with efficient streaming for large files (100GB+) and lightning-fast search capabilities. It supports instant large file opening, real-time search, direct archive preview, multi-protocol and multi-format support, and features a modern interface with dark/light themes and responsive design. The tool is perfect for data scientists, log analysis, archive management, remote access, and performance-critical tasks.

Zettelgarden

Zettelgarden is a human-centric, open-source personal knowledge management system that helps users develop and maintain their understanding of the world. It focuses on creating and connecting atomic notes, thoughtful AI integration, and scalability from personal notes to company knowledge bases. The project is actively evolving, with features subject to change based on community feedback and development priorities.

scrapegraph-sdk

Official SDKs for the ScrapeGraph AI API - Intelligent web scraping and search powered by AI. Extract structured data from any webpage or perform AI-powered web searches with natural language prompts. The SDK offers features such as SmartScraper for data extraction, SearchScraper for AI-powered web search, Markdownify for converting webpages to markdown, SmartCrawler for intelligent crawling, AgenticScraper for automated browser actions, and more. It provides seamless integration with popular frameworks and tools, supports Python and JavaScript SDKs, LLM frameworks, low-code platforms, and offers core features like AI-powered extraction, structured output, multiple data formats, high performance, and enterprise-grade security.

agent-hub

Agent Hub is a platform for AI Agent solutions, containing three different projects aimed at transforming enterprise workflows, enhancing personalized language learning experiences, and enriching multimodal interactions. The projects include GitHub Sentinel for project management and automatic updates, LanguageMentor for personalized language learning support, and ChatPPT for multimodal AI-driven insights and PowerPoint automation in enterprise settings. The future vision of agent-hub is to serve as a launchpad for more AI Agents catering to different industries and pushing the boundaries of AI technology. Users are encouraged to explore, clone the repository, and contribute to the development of transformative AI agents.

aigne-hub

AIGNE Hub is a unified AI gateway that manages connections to multiple LLM and AIGC providers, eliminating the complexity of handling API keys, usage tracking, and billing across different AI services. It provides self-hosting capabilities, multi-provider management, unified security, usage analytics, flexible billing, and seamless integration with the AIGNE framework. The tool supports various AI providers and deployment scenarios, catering to both enterprise self-hosting and service provider modes. Users can easily deploy and configure AI providers, enable billing, and utilize core capabilities such as chat completions, image generation, embeddings, and RESTful APIs. AIGNE Hub ensures secure access, encrypted API key management, user permissions, and audit logging. Built with modern technologies like AIGNE Framework, Node.js, TypeScript, React, SQLite, and Blocklet for cloud-native deployment.

OpenChat

OS Chat is a free, open-source AI personal assistant that combines 40+ language models with powerful automation capabilities. It allows users to deploy background agents, connect services like Gmail, Calendar, Notion, GitHub, and Slack, and get things done through natural conversation. With features like smart automation, service connectors, AI models, chat management, interface customization, and premium features, OS Chat offers a comprehensive solution for managing digital life and workflows. It prioritizes privacy by being open source and self-hostable, with encrypted API key storage.

ito

Ito is an intelligent voice assistant that provides seamless voice dictation to any application on your computer. It works in any app, offers global keyboard shortcuts, real-time transcription, and instant text insertion. It is smart and adaptive with features like custom dictionary, context awareness, multi-language support, and intelligent punctuation. Users can customize trigger keys, audio preferences, and privacy controls. It also offers data management features like a notes system, interaction history, cloud sync, and export capabilities. Ito is built as a modern Electron application with a multi-process architecture and utilizes technologies like React, TypeScript, Rust, gRPC, and AWS CDK.

For similar tasks

LLM-Stream-Optimizer

LLM Stream Optimizer is a tool developed on Cloudflare Workers for optimizing streaming responses and managing multiple APIs. It features intelligent stream output optimization, adaptive delay algorithm, web API management page, and removal of unnecessary Cloudflare fetch headers. The tool aims to enhance API performance and provide a smooth user experience.

kong

Kong, or Kong API Gateway, is a cloud-native, platform-agnostic, scalable API Gateway distinguished for its high performance and extensibility via plugins. It also provides advanced AI capabilities with multi-LLM support. By providing functionality for proxying, routing, load balancing, health checking, authentication (and more), Kong serves as the central layer for orchestrating microservices or conventional API traffic with ease. Kong runs natively on Kubernetes thanks to its official Kubernetes Ingress Controller.

speakeasy

Speakeasy is a tool that helps developers create production-quality SDKs, Terraform providers, documentation, and more from OpenAPI specifications. It supports a wide range of languages, including Go, Python, TypeScript, Java, and C#, and provides features such as automatic maintenance, type safety, and fault tolerance. Speakeasy also integrates with popular package managers like npm, PyPI, Maven, and Terraform Registry for easy distribution.

fastapi

智元 Fast API is a one-stop API management system that unifies various LLM APIs in terms of format, standards, and management, achieving the ultimate in functionality, performance, and user experience. It supports various models from companies like OpenAI, Azure, Baidu, Keda Xunfei, Alibaba Cloud, Zhifu AI, Google, DeepSeek, 360 Brain, and Midjourney. The project provides user and admin portals for preview, supports cluster deployment, multi-site deployment, and cross-zone deployment. It also offers Docker deployment, a public API site for registration, and screenshots of the admin and user portals. The API interface is similar to OpenAI's interface, and the project is open source with repositories for API, web, admin, and SDK on GitHub and Gitee.

uni-api

uni-api is a project that unifies the management of large language model APIs, allowing you to call multiple backend services through a single unified API interface, converting them all to OpenAI format, and supporting load balancing. It supports various backend services such as OpenAI, Anthropic, Gemini, Vertex, Azure, xai, Cohere, Groq, Cloudflare, OpenRouter, and more. The project offers features like no front-end, pure configuration file setup, unified management of multiple backend services, support for multiple standard OpenAI format interfaces, rate limiting, automatic retry, channel cooling, fine-grained model timeout settings, and fine-grained permission control.

supavec

Supavec is an open-source tool that serves as an alternative to Carbon.ai. It allows users to build powerful RAG applications using any data source and at any scale. The tool is designed to provide a simple API endpoint for easy integration and usage. Supavec is built with Next.js, Supabase, Tailwind CSS, Bun, and Upstash, offering a robust and flexible solution for application development. Users can refer to the API documentation for detailed information on how to utilize the tool effectively.

For similar jobs

RirikoBot

RirikoBot is a powerful AI-powered Discord bot with features like Twitch Live Notifier, Giveaways, OpenAI, Stable Diffusion, Moderations, Anime / Manga Finder, and more. It is based on Discord.js v14 and can be hosted on a PC or a Server. Users can interact with the bot through various commands to access different functionalities.

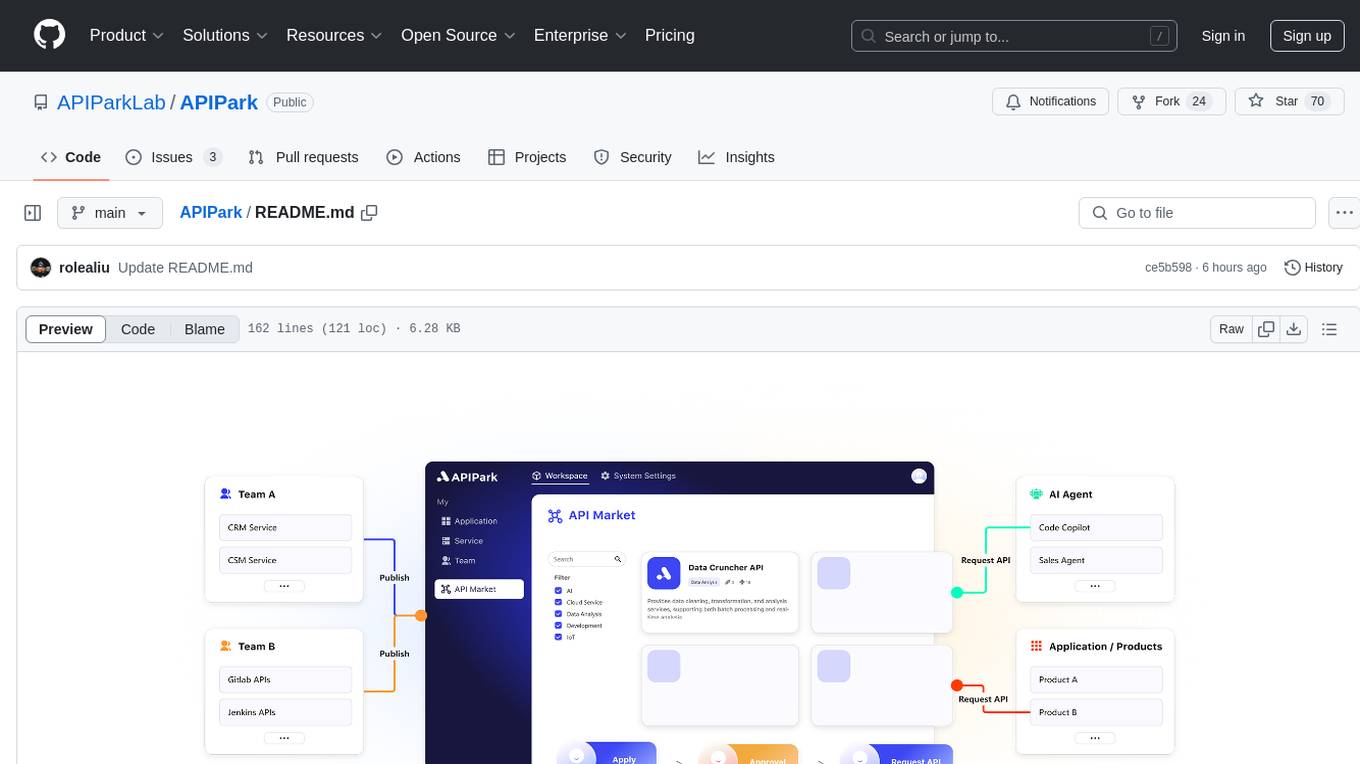

APIPark

APIPark is an open-source AI Gateway and Developer Portal that enables users to easily manage, integrate, and deploy AI and API services. It provides robust API management features, including creation, monitoring, and access control, to help developers efficiently and securely develop and manage their APIs. The platform aims to solve challenges such as connecting to powerful AI models, managing complex AI & API call relationships, overseeing API creation and security, simplifying fault detection and troubleshooting, and enhancing the visibility and valuation of data assets.

airtable

A simple Golang package to access the Airtable API. It provides functionalities to interact with Airtable such as initializing client, getting tables, listing records, adding records, updating records, deleting records, and bulk deleting records. The package is compatible with Go 1.13 and above.

openapi

The `@samchon/openapi` repository is a collection of OpenAPI types and converters for various versions of OpenAPI specifications. It includes an 'emended' OpenAPI v3.1 specification that enhances clarity by removing ambiguous and duplicated expressions. The repository also provides an application composer for LLM (Large Language Model) function calling from OpenAPI documents, allowing users to easily perform LLM function calls based on the Swagger document. Conversions to different versions of OpenAPI documents are also supported, all based on the emended OpenAPI v3.1 specification. Users can validate their OpenAPI documents using the `typia` library with `@samchon/openapi` types, ensuring compliance with standard specifications.

LLM-Stream-Optimizer

LLM Stream Optimizer is a tool developed on Cloudflare Workers for optimizing streaming responses and managing multiple APIs. It features intelligent stream output optimization, adaptive delay algorithm, web API management page, and removal of unnecessary Cloudflare fetch headers. The tool aims to enhance API performance and provide a smooth user experience.

jentic-public-apis

The Jentic Public APIs repository aims to collate all knowledge about the world's APIs into a detailed, comprehensive, structured documentation catalog designed for use by AI. It focuses on standardized OpenAPI specifications, Arazzo workflows, associated tooling, evaluations, and RFCs for extensions to open formats. The project is in ALPHA stage and welcomes contributions to accelerate the effort of building an open knowledge foundation for AI agents.

routilux

Routilux is a powerful event-driven workflow orchestration framework designed for building complex data pipelines and workflows effortlessly. It offers features like event queue architecture, flexible connections, built-in state management, robust error handling, concurrent execution, persistence & recovery, and simplified API. Perfect for tasks such as data pipelines, API orchestration, event processing, workflow automation, microservices coordination, and LLM agent workflows.

resonance

Resonance is a framework designed to facilitate interoperability and messaging between services in your infrastructure and beyond. It provides AI capabilities and takes full advantage of asynchronous PHP, built on top of Swoole. With Resonance, you can: * Chat with Open-Source LLMs: Create prompt controllers to directly answer user's prompts. LLM takes care of determining user's intention, so you can focus on taking appropriate action. * Asynchronous Where it Matters: Respond asynchronously to incoming RPC or WebSocket messages (or both combined) with little overhead. You can set up all the asynchronous features using attributes. No elaborate configuration is needed. * Simple Things Remain Simple: Writing HTTP controllers is similar to how it's done in the synchronous code. Controllers have new exciting features that take advantage of the asynchronous environment. * Consistency is Key: You can keep the same approach to writing software no matter the size of your project. There are no growing central configuration files or service dependencies registries. Every relation between code modules is local to those modules. * Promises in PHP: Resonance provides a partial implementation of Promise/A+ spec to handle various asynchronous tasks. * GraphQL Out of the Box: You can build elaborate GraphQL schemas by using just the PHP attributes. Resonance takes care of reusing SQL queries and optimizing the resources' usage. All fields can be resolved asynchronously.