easy-learn-ai

Easy-to-understand AI learning resources for beginners.

Stars: 443

Easy AI is a modern web application platform focused on AI education, aiming to help users understand complex artificial intelligence concepts through a concise and intuitive approach. The platform integrates multiple learning modules, providing a comprehensive AI knowledge system from basic concepts to practical applications.

README:

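Easy AI is a modern web application platform dedicated to AI education. It aims to help users understand complex artificial intelligence concepts in a concise, intuitive way. The platform integrates multiple learning modules and offers a comprehensive AI knowledge system, from basic concepts to practical applications.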

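| Category | Topic | Status |
|---|---|---|
| Model Basics | Easily Understand NLP - the branch of AI that deals with natural language | ✅ |
| Model Basics | Easily Understand Transformer - a self-attention architecture that handles long text efficiently | ✅ |
| Model Basics | Easily Understand LLM - a revolutionary AI technology that redefines machine understanding | ✅ |
| Model Basics | Easily Understand Model Distillation - compressing the knowledge of a complex large model into a lightweight small model | ✅ |
| Model Basics | Easily Understand Model Quantization - converting model weights to a lower-precision representation | ✅ |
| Model Basics | Easily Understand Model Hallucination - untrue or implausible content that a model produces when generating text | ✅ |
| Model Basics | Easily Understand Token - the smallest unit a model works with when generating text; each token represents a word or part of a word | ✅ |
| Model Basics | Easily Understand BERT - a pretrained language model based on the Encoder-Only architecture | ✅ |
| Model Basics | Easily Understand Multimodality - enabling AI to understand and generate images, video, audio, and other modalities | ✅ |
| Model Basics | Easily Understand T5 - a pretrained language model based on the Encoder-Decoder architecture | ✅ |
| Model Basics | Easily Understand GPT - a pretrained language model (PLM) based on the Decoder-Only architecture | ✅ |
| Model Basics | Easily Understand LLaMA - a pretrained language model based on the Decoder-Only architecture | ✅ |
| Model Basics | Easily Understand DeepSeek R1 - giving large language models strong reasoning ability through innovative algorithms | ✅ |
| Model Basics | Easily Understand GGUF - a format for more efficient model storage, loading, and deployment | ✅ |
| Model Basics | Easily Understand MoE - a model architecture based on expert routing that can process different tasks in parallel | 👷 |
| Model Deployment | Easily Understand Model Deployment - comparing Ollama and vLLM, two mainstream local deployment options | ✅ |
| Model Training | Easily Understand Pretraining - the first stage of large language model training | ✅ |
| Fine-Tuning | Easily Understand Why to Fine-Tune - a comparison of long context, knowledge bases, and fine-tuning | ✅ |
| Fine-Tuning | Easily Understand Fine-Tuning Methods - comparing full-parameter, LoRA, and freeze fine-tuning | ✅ |
| Fine-Tuning | Easily Understand SFT - the key step in turning a pretrained model into a practical AI assistant | ✅ |
| Fine-Tuning | Easily Understand LoRA - one of the most popular efficient fine-tuning methods for large models today | ✅ |
| Fine-Tuning | Easily Understand RLHF - using reinforcement learning to turn subjective human preferences into an objective optimization target for the model | ✅ |
| Fine-Tuning | Easily Understand Fine-Tuning Parameters: Learning Rate - the key parameter that determines how far model parameters are adjusted | ✅ |
| Fine-Tuning | Easily Understand Fine-Tuning Parameters: Epochs - the number of complete passes the model makes over the training dataset | ✅ |
| Fine-Tuning | Easily Understand Fine-Tuning Parameters: Batch Size - the number of samples used for each parameter update | ✅ |
| Fine-Tuning | Easily Understand Fine-Tuning Parameters: LoRA Rank - the key parameter that determines the model's expressive capacity during fine-tuning | ✅ |
| Fine-Tuning | Easily Understand DeepSpeed - a deep learning optimization library that simplifies distributed training and inference | ✅ |
| Fine-Tuning | Easily Understand Loss - the metric that measures the gap between predicted and actual values during training | ✅ |
| Model Evaluation | Easily Understand Model Evaluation - metrics and methods for evaluating model performance | ✅ |
| Data Augmentation | Easily Understand MGA - systematically reformulating existing corpora into diverse variants with a lightweight framework | ✅ |
| Prompts | Easily Understand Prompt Engineering - coming soon | 👷 |
| Agent | Easily Understand Agent - making AI not just a question-answering machine but an agent that gets things done | ✅ |
| Agent | Easily Understand Function Calling - an important way for large language models to interact with external data sources and tools | ✅ |
| Agent | Easily Understand MCP - an open standard protocol that addresses how AI models interact with external data sources | ✅ |
| RAG | Easily Understand RAG - retrieval-augmented generation, which addresses the factuality problems of large language models | ✅ |
| RAG | Easily Understand Vector Embeddings - coming soon | 👷 |
| RAG | Easily Understand Knowledge Graphs - coming soon | 👷 |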

💡 Continuously updated ...

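| Category | Topic | Status |
|---|---|---|
| AI Basics | Building an overall picture of AI - how has AI technology evolved? | ✅ |
| Model Deployment | How to set up a fully local, web-connected private DeepSeek with a local knowledge base | ✅ |
| Fine-Tuning | How to fine-tune your DeepSeek-R1 into a domain expert (Theory) | ✅ |
| Fine-Tuning | How to fine-tune your DeepSeek-R1 into a domain expert (Practice) | ✅ |
| Fine-Tuning | LLaMA Factory Fine-Tuning Tutorial (Part 1): Getting started and installation | ✅ |
| Fine-Tuning | LLaMA Factory Fine-Tuning Tutorial (Part 2): How to build a high-quality dataset | ✅ |
| Fine-Tuning | LLaMA Factory Fine-Tuning Tutorial (Part 3): How to tune fine-tuning parameters and VRAM usage | ✅ |
| Fine-Tuning | LLaMA Factory Fine-Tuning Tutorial (Part 4): How to monitor the fine-tuning process and export the model | ✅ |
| Fine-Tuning | LLaMA Factory Fine-Tuning Tutorial (Complete Edition): Fine-tuning a domain-specific large model from scratch | ✅ |
| Model Evaluation | An introduction to large model evaluation and mainstream industry benchmarks | ✅ |
| Model Evaluation | Vertical-domain model evaluation: one-click test set generation plus a practical guide to automated evaluation | ✅ |
| Datasets | Want to fine-tune a domain-specific model? How exactly do you prepare the dataset? | ✅ |
| Datasets | How to batch-convert domain literature into datasets for model fine-tuning | ✅ |
| Datasets | A hands-on tutorial on building datasets with Easy Dataset | ✅ |
| Datasets | Five hands-on dataset construction case studies | ✅ |
| Agent | MCP + databases: a new way to improve structured data retrieval accuracy | ✅ |
| Agent | The most detailed walkthrough on the web: read it and you will understand the core principles of MCP! | ✅ |
| Agent | MCP faces greater security threats than traditional applications! | ✅ |
| Agent | One episode to fully understand Agent Skills, from principles to practice! | ✅ |
| Agent | Does knowledge base retrieval with Agent Skills work better than traditional RAG? | ✅ |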

💡 Continuously updated ...

A curated selection of high-quality prompts from major AI platforms, to help you grasp the essentials of AI prompting.

| Manus | Cluely | Cursor | Lovable | Devin |
|---|---|---|---|---|
| dia | Junie | Bolt | Cline | Codex CLI |
| Replit | RooCode | Same.dev | Spawn | Trae |
| v0 | VSCode | Warp.dev | Xcode | Windsurf |

A collection of quality AI tool resources with precise category-based navigation, to boost efficiency in work and creative tasks.

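| Category | Tools | Category | Tools |
|---|---|---|---|
| All tools | 878+ | AI writing tools | 100+ |
| AI video tools | 100+ | AI image tools | 69+ |
| AI design tools | 78+ | AI audio tools | 75+ |
| AI chat | 72+ | AI coding tools | 65+ |
| AI model training | 49+ | AI development platforms | 43+ |
| AI search engines | 40+ | AI slides | 36+ |
| AI office tools | 30+ | AI agents | 19+ |
| AI translation | 19+ | AI content detection | 16+ |
| AI legal assistants | 8+ | | |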

💡 Tip: Click a category name to jump directly to the corresponding tools page. Specific categories can be shared and bookmarked by URL.

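A collection of information on mainstream large AI models, with key data such as model parameters, capabilities, and release dates, to help you quickly understand and compare AI models.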

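| Feature | Description |
|---|---|
| Model search | Search and filter by name, company, tags, and other dimensions |
| Model comparison | Shows core parameters such as context window, maximum output, and open-source status |
| Category browsing | Browse grouped by company or sorted by release date |
| Real-time updates | Continuously adds newly released AI models |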

💡 Available at: AI Model Library

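A collection of mainstream AI evaluation benchmarks covering language understanding, reasoning, multimodality, code generation, and more, to help you understand how AI model capabilities are evaluated.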

| Benchmark type | Example benchmarks |
|---|---|
| Language understanding | MMLU, GLUE, SuperGLUE, HellaSwag |
| Reasoning | ARC-AGI-1, ARC-AGI-2, GSM8K, MATH |
| Mathematical reasoning | FrontierMath, AIME, Olympiad Bench |
| Multimodal | MMMU, Video-MMMU, VQA |
| Code generation | HumanEval, MBPP, SWE-bench, SciCode |
| Agents | MCP-Atlas, GAIA, MLE-bench |

💡 Available at: AI Benchmark Library

Curated first-hand AI news from a range of channels, summarized in a daily report.

A curated selection of major events in AI, to help you trace how the field has developed.

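We welcome all forms of contribution, including but not limited to:

- 🐛 Reporting bugs

- 💡 Suggesting new features

- 📖 Improving the documentation

- 🔧 Submitting code fixes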

If you have any questions or suggestions, feel free to get in touch through the following channels:

🎯 Making AI learning simple | 🚀 Making knowledge sharing more efficient

Made with ❤️ for the AI learning community

Alternative AI tools for easy-learn-ai

Similar Open Source Tools

DeepLearing-Interview-Awesome-2024

DeepLearning-Interview-Awesome-2024 is a repository that covers various topics related to deep learning, computer vision, big models (LLMs), autonomous driving, smart healthcare, and more. It provides a collection of interview questions with detailed explanations sourced from recent academic papers and industry developments. The repository is aimed at assisting individuals in academic research, work innovation, and job interviews. It includes six major modules covering topics such as large language models (LLMs), computer vision models, common problems in computer vision and perception algorithms, deep learning basics and frameworks, as well as specific tasks like 3D object detection, medical image segmentation, and more.

Tiktoken

Tiktoken is a high-performance implementation focused on token count operations. It provides various encodings like o200k_base, cl100k_base, r50k_base, p50k_base, and p50k_edit. Users can easily encode and decode text using the provided API. The repository also includes a benchmark console app for performance tracking. Contributions in the form of PRs are welcome.

ai-app

The 'ai-app' repository is a comprehensive collection of tools and resources related to artificial intelligence, focusing on topics such as server environment setup, PyCharm and Anaconda installation, large model deployment and training, Transformer principles, RAG technology, vector databases, AI image, voice, and music generation, and AI Agent frameworks. It also includes practical guides and tutorials on implementing various AI applications. The repository serves as a valuable resource for individuals interested in exploring different aspects of AI technology.

lingti-bot

lingti-bot is an AI Bot platform that integrates MCP Server, multi-platform message gateway, rich toolset, intelligent conversation, and voice interaction. It offers core advantages like zero-dependency deployment with a single 30MB binary file, cloud relay support for quick integration with enterprise WeChat/WeChat Official Account, built-in browser automation with CDP protocol control, 75+ MCP tools covering various scenarios, native support for Chinese platforms like DingTalk, Feishu, enterprise WeChat, WeChat Official Account, and more. It is embeddable, supports multiple AI backends like Claude, DeepSeek, Kimi, MiniMax, and Gemini, and allows access from platforms like DingTalk, Feishu, enterprise WeChat, WeChat Official Account, Slack, Telegram, and Discord. The bot is designed with simplicity as the highest design principle, focusing on zero-dependency deployment, embeddability, plain text output, code restraint, and cloud relay support.

Chinese-LLaMA-Alpaca

This project open sources the **Chinese LLaMA model and the Alpaca large model fine-tuned with instructions**, to further promote the open research of large models in the Chinese NLP community. These models **extend the Chinese vocabulary based on the original LLaMA** and use Chinese data for secondary pre-training, further enhancing the basic Chinese semantic understanding ability. At the same time, the Chinese Alpaca model further uses Chinese instruction data for fine-tuning, significantly improving the model's understanding and execution of instructions.

hello-agents

Hello-Agents is a comprehensive tutorial on building intelligent agent systems, covering both theoretical foundations and practical applications. The tutorial aims to guide users in understanding and building AI-native agents, diving deep into core principles, architectures, and paradigms of intelligent agents. Users will learn to develop their own multi-agent applications from scratch, gaining hands-on experience with popular low-code platforms and agent frameworks. The tutorial also covers advanced topics such as memory systems, context engineering, communication protocols, and model training. By the end of the tutorial, users will have the skills to develop real-world projects like intelligent travel assistants and cyber towns.

gpt_server

The GPT Server project builds on the basic capabilities of FastChat to provide an OpenAI-compatible server. It adapts more models, improves support for models with poor compatibility in FastChat, and supports loading models through vllm, LMDeploy, and hf. It also supports all sentence_transformers-compatible semantic vector models, chat templates with function roles, Function Calling (Tools), and multi-modal large models. The project aims to reduce the difficulty of model adaptation and project usage, making it easier to deploy the latest models with minimal code changes.

XiaoFeiShu

XiaoFeiShu is a specialized automation software developed closely following the quality user rules of Xiaohongshu. It provides a set of automation workflows for Xiaohongshu operations, avoiding the issues of traditional RPA being mechanical, rule-based, and easily detected. The software is easy to use, with simple operation and powerful functionality.

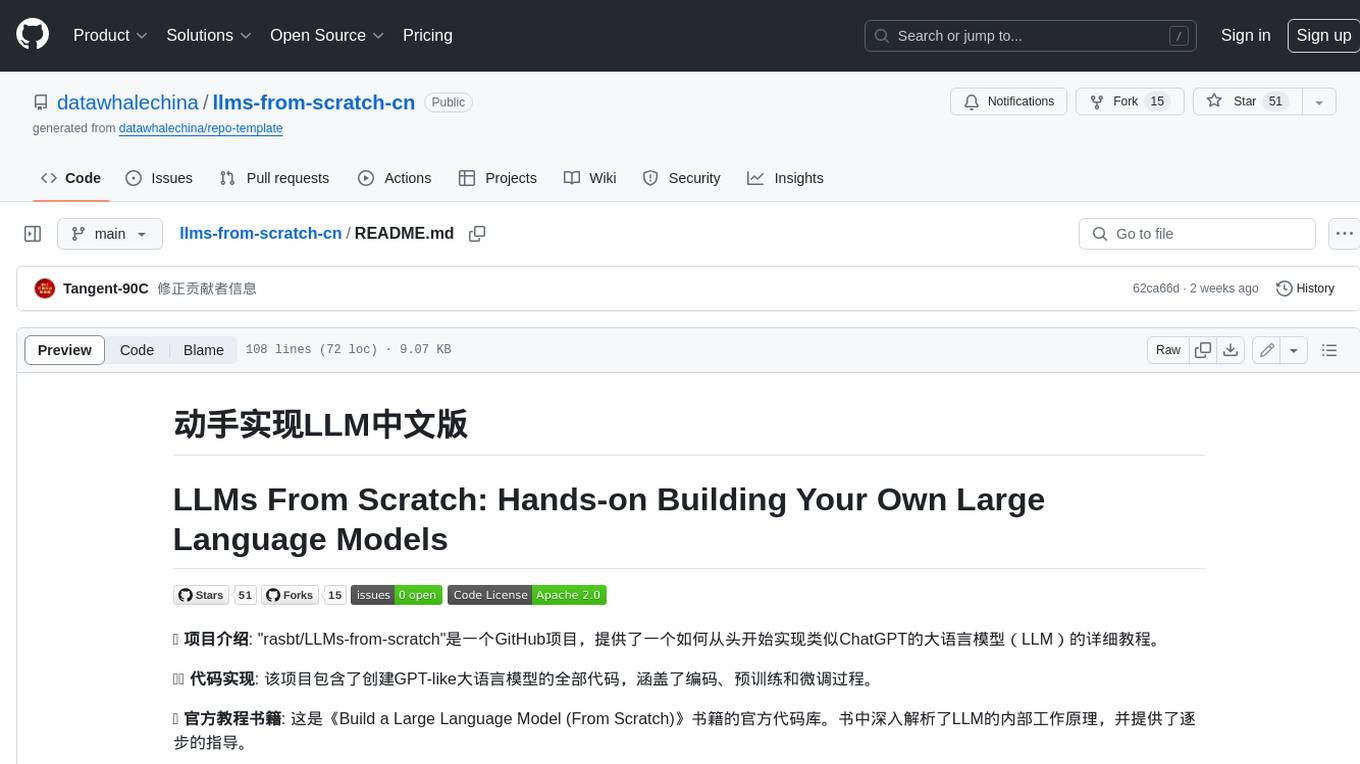

llms-from-scratch-cn

This repository provides a detailed tutorial on how to build your own large language model (LLM) from scratch. It includes all the code necessary to create a GPT-like LLM, covering the encoding, pre-training, and fine-tuning processes. The tutorial is written in a clear and concise style, with plenty of examples and illustrations to help you understand the concepts involved. It is suitable for developers and researchers with some programming experience who are interested in learning more about LLMs and how to build them.

prisma-ai

Prisma-AI is an open-source tool designed to assist users in their job search process by addressing common challenges such as lack of project highlights, mismatched resumes, difficulty in learning, and lack of answers in interview experiences. The tool utilizes AI to analyze user experiences, generate actionable project highlights, customize resumes for specific job positions, provide study materials for efficient learning, and offer structured interview answers. It also features a user-friendly interface for easy deployment and supports continuous improvement through user feedback and collaboration.

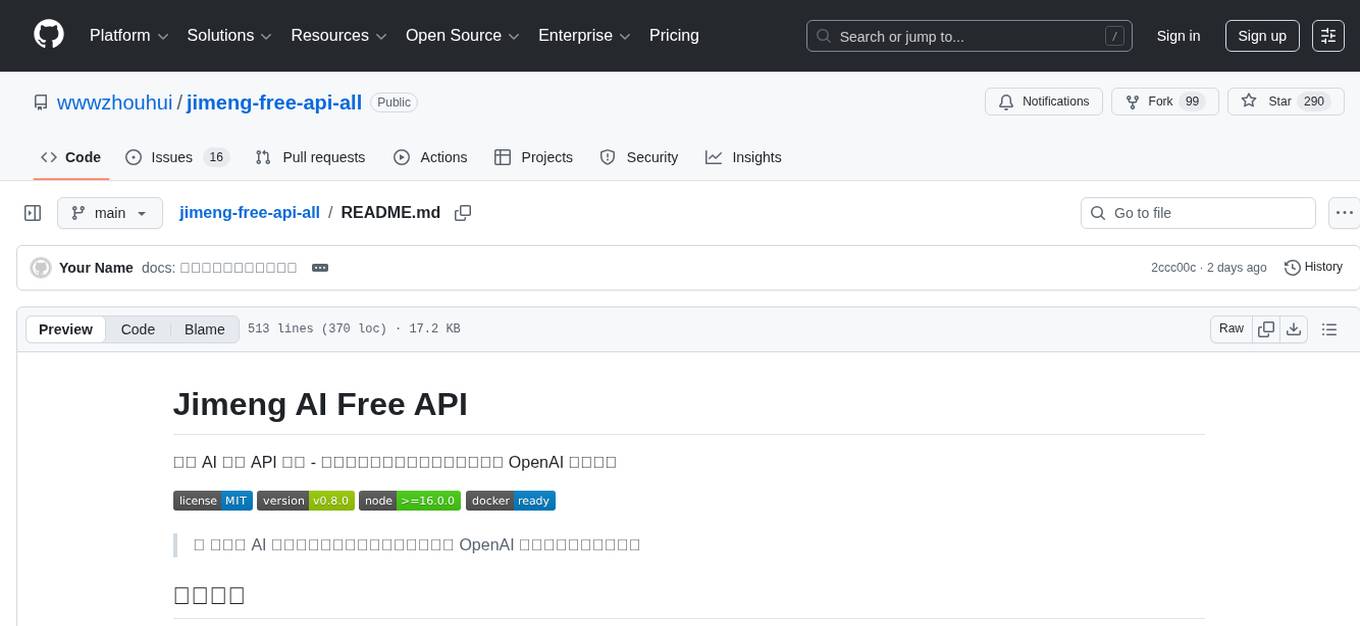

jimeng-free-api-all

Jimeng AI Free API is a reverse-engineered API server that encapsulates Jimeng AI's image and video generation capabilities into OpenAI-compatible API interfaces. It supports the latest jimeng-5.0-preview, jimeng-4.6 text-to-image models, Seedance 2.0 multi-image intelligent video generation, zero-configuration deployment, and multi-token support. The API is fully compatible with OpenAI API format, seamlessly integrating with existing clients and supporting multiple session IDs for polling usage.

Chinese-LLaMA-Alpaca-2

Chinese-LLaMA-Alpaca-2 is a family of Chinese large language models built on Meta's Llama-2 and further trained on a large corpus of Chinese text. The models can be used for a variety of natural language processing tasks, including text generation, question answering, and machine translation. Key features:

- Models are available in sizes up to 13 billion parameters.
- They are trained on a large corpus of Chinese text.
- They are open source and available for anyone to use.

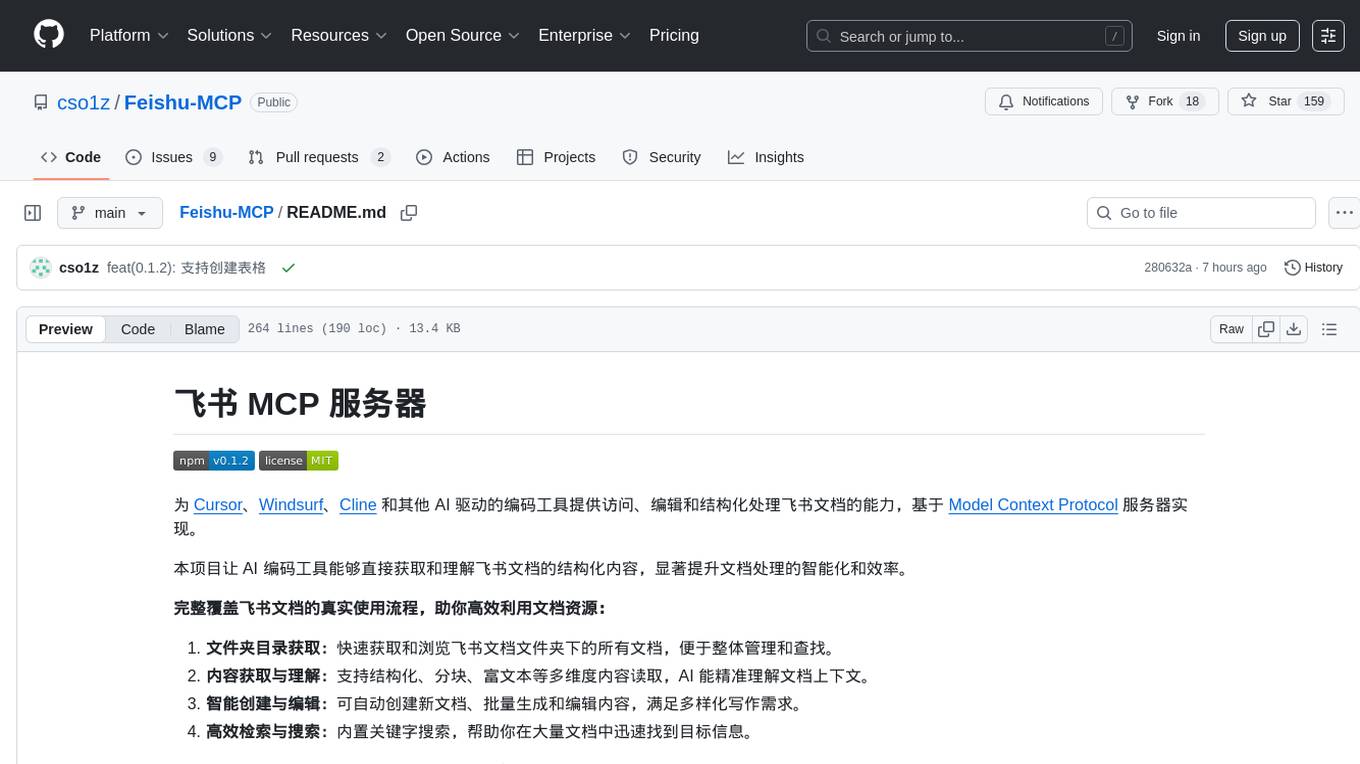

Feishu-MCP

Feishu-MCP is a server that provides access, editing, and structured processing capabilities for Feishu documents for Cursor, Windsurf, Cline, and other AI-driven coding tools, based on the Model Context Protocol server. This project enables AI coding tools to directly access and understand the structured content of Feishu documents, significantly improving the intelligence and efficiency of document processing. It covers the real usage process of Feishu documents, allowing efficient utilization of document resources, including folder directory retrieval, content retrieval and understanding, smart creation and editing, efficient search and retrieval, and more. It enhances the intelligent access, editing, and searching of Feishu documents in daily usage, improving content processing efficiency and experience.

sanic-web

Sanic-Web is a lightweight, end-to-end, and easily customizable large model application project built on technologies such as Dify, Ollama & Vllm, Sanic, and Text2SQL. It provides a one-stop solution for developing large model applications, supporting graphical data-driven Q&A using ECharts, handling table-based Q&A with CSV files, and integrating with third-party RAG systems for general knowledge Q&A. As a lightweight framework, Sanic-Web enables rapid iteration and extension to facilitate the quick implementation of large model projects.

For similar tasks

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.

zep-python

Zep is an open-source platform for building and deploying large language model (LLM) applications. It provides a suite of tools and services that make it easy to integrate LLMs into your applications, including chat history memory, embedding, vector search, and data enrichment. Zep is designed to be scalable, reliable, and easy to use, making it a great choice for developers who want to build LLM-powered applications quickly and easily.

AI-in-a-Box

AI-in-a-Box is a curated collection of solution accelerators that can help engineers establish their AI/ML environments and solutions rapidly and with minimal friction, while maintaining the highest standards of quality and efficiency. It provides essential guidance on the responsible use of AI and LLM technologies, specific security guidance for Generative AI (GenAI) applications, and best practices for scaling OpenAI applications within Azure. The available accelerators include: Azure ML Operationalization in-a-box, Edge AI in-a-box, Doc Intelligence in-a-box, Image and Video Analysis in-a-box, Cognitive Services Landing Zone in-a-box, Semantic Kernel Bot in-a-box, NLP to SQL in-a-box, Assistants API in-a-box, and Assistants API Bot in-a-box.

NeMo

NeMo Framework is a generative AI framework built for researchers and pytorch developers working on large language models (LLMs), multimodal models (MM), automatic speech recognition (ASR), and text-to-speech synthesis (TTS). The primary objective of NeMo is to provide a scalable framework for researchers and developers from industry and academia to more easily implement and design new generative AI models by being able to leverage existing code and pretrained models.

E2B

E2B Sandbox is a secure, sandboxed cloud environment made for AI agents and AI apps. Sandboxes give AI agents and apps long-running, secure cloud environments in which large language models can use the same tools humans do. For example:

- Cloud browsers
- GitHub repositories and CLIs
- Coding tools like linters, autocomplete, and "go-to definition"
- Running LLM-generated code
- Audio and video editing

The E2B sandbox can be connected to any LLM and any AI agent or app.

floneum

Floneum is a graph editor that makes it easy to develop your own AI workflows. It uses large language models (LLMs) to run AI models locally, without any external dependencies or even a GPU. This makes it easy to use LLMs with your own data, without worrying about privacy. Floneum also has a plugin system that allows you to improve the performance of LLMs and make them work better for your specific use case. Plugins can be used in any language that supports web assembly, and they can control the output of LLMs with a process similar to JSONformer or guidance.

For similar jobs

promptflow

**Prompt flow** is a suite of development tools designed to streamline the end-to-end development cycle of LLM-based AI applications, from ideation, prototyping, testing, evaluation to production deployment and monitoring. It makes prompt engineering much easier and enables you to build LLM apps with production quality.

deepeval

DeepEval is a simple-to-use, open-source LLM evaluation framework specialized for unit testing LLM outputs. It incorporates various metrics such as G-Eval, hallucination, answer relevancy, RAGAS, etc., and runs locally on your machine for evaluation. It provides a wide range of ready-to-use evaluation metrics, allows for creating custom metrics, integrates with any CI/CD environment, and enables benchmarking LLMs on popular benchmarks. DeepEval is designed for evaluating RAG and fine-tuning applications, helping users optimize hyperparameters, prevent prompt drifting, and transition from OpenAI to hosting their own Llama2 with confidence.

MegaDetector

MegaDetector is an AI model that identifies animals, people, and vehicles in camera trap images (which also makes it useful for eliminating blank images). The model is trained on several million images from a variety of ecosystems. MegaDetector is just one of many tools that aim to make conservation biologists more efficient with AI. If you want to learn about other ways to use AI to accelerate camera trap workflows, check out our survey of the field, affectionately titled "Everything I know about machine learning and camera traps".

leapfrogai

LeapfrogAI is a self-hosted AI platform designed to be deployed in air-gapped resource-constrained environments. It brings sophisticated AI solutions to these environments by hosting all the necessary components of an AI stack, including vector databases, model backends, API, and UI. LeapfrogAI's API closely matches that of OpenAI, allowing tools built for OpenAI/ChatGPT to function seamlessly with a LeapfrogAI backend. It provides several backends for various use cases, including llama-cpp-python, whisper, text-embeddings, and vllm. LeapfrogAI leverages Chainguard's apko to harden base python images, ensuring the latest supported Python versions are used by the other components of the stack. The LeapfrogAI SDK provides a standard set of protobuffs and python utilities for implementing backends and gRPC. LeapfrogAI offers UI options for common use-cases like chat, summarization, and transcription. It can be deployed and run locally via UDS and Kubernetes, built out using Zarf packages. LeapfrogAI is supported by a community of users and contributors, including Defense Unicorns, Beast Code, Chainguard, Exovera, Hypergiant, Pulze, SOSi, United States Navy, United States Air Force, and United States Space Force.

llava-docker

This Docker image for LLaVA (Large Language and Vision Assistant) provides a convenient way to run LLaVA locally or on RunPod. LLaVA is a powerful AI tool that combines natural language processing and computer vision capabilities. With this Docker image, you can easily access LLaVA's functionalities for various tasks, including image captioning, visual question answering, text summarization, and more. The image comes pre-installed with LLaVA v1.2.0, Torch 2.1.2, xformers 0.0.23.post1, and other necessary dependencies. You can customize the model used by setting the MODEL environment variable. The image also includes a Jupyter Lab environment for interactive development and exploration. Overall, this Docker image offers a comprehensive and user-friendly platform for leveraging LLaVA's capabilities.

carrot

The 'carrot' repository on GitHub provides a list of free and user-friendly ChatGPT mirror sites for easy access. The repository includes sponsored sites offering various GPT models and services. Users can find and share sites, report errors, and access stable and recommended sites for ChatGPT usage. The repository also includes a detailed list of ChatGPT sites, their features, and accessibility options, making it a valuable resource for ChatGPT users seeking free and unlimited GPT services.

TrustLLM

TrustLLM is a comprehensive study of trustworthiness in LLMs, including principles for different dimensions of trustworthiness, an established benchmark, evaluation and analysis of trustworthiness for mainstream LLMs, and discussion of open challenges and future directions. Specifically, we first propose a set of principles for trustworthy LLMs that span eight different dimensions. Based on these principles, we further establish a benchmark across six dimensions: truthfulness, safety, fairness, robustness, privacy, and machine ethics. We then present a study evaluating 16 mainstream LLMs in TrustLLM, covering over 30 datasets. The document explains how to use the trustllm Python package to assess the trustworthiness of your LLM more quickly. For more details about TrustLLM, please refer to the project website.

AI-YinMei

AI-YinMei is a development tool for AI virtual streamers (VTubers), in a version for NVIDIA GPUs. It supports knowledge-base chat through fastgpt as part of a complete LLM stack ([fastgpt] + [one-api] + [Xinference]); replying to bilibili live-stream bullet comments and greeting viewers who enter the stream; speech synthesis with Microsoft edge-tts, Bert-VITS2, and GPT-SoVITS; expression control through Vtuber Studio; and image generation with stable-diffusion-webui output to an OBS live room, with NSFW image detection (public-NSFW-y-distinguish). It also supports web and image search through duckduckgo (requires a proxy) and image search through Baidu (no proxy required); HTML plug-ins for the AI reply chat box and the playlist; AI singing with Auto-Convert-Music; and dancing, expression video playback, head-patting and gift-throwing actions, automatic dancing when singing starts, looping sway animations during chat and singing, multi-scene switching, background music switching, and automatic day/night scene switching. Singing and drawing can be left open so that the AI decides automatically based on the content.