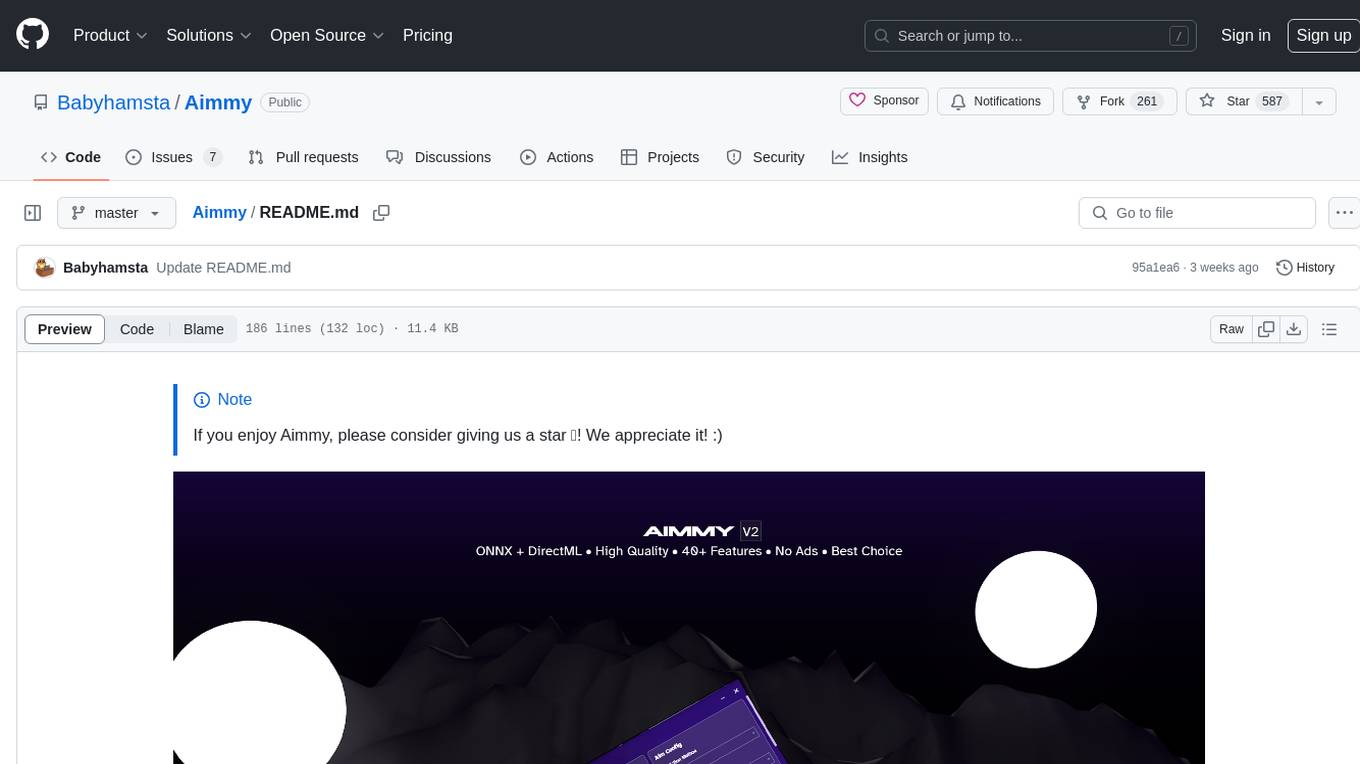

Aimmy

Universal Second Eye for Gamers with Impairments (Universal AI Aim Aligner - ONNX/YOLOv8 - C#)

Stars: 839

Aimmy is a universal AI-Based Aim Alignment Mechanism developed by BabyHamsta, MarsQQ & Taylor to make gaming more accessible for users who have difficulty aiming. It utilizes DirectML, ONNX, and YOLOV8 for player detection, offering high accuracy and fast performance. Aimmy features an easy-to-use UI, extensive customizability, and is free of ads and paywalls. It is designed for gamers facing challenges like physical or mental disabilities, poor hand-eye coordination, or aiming difficulties due to environmental factors. Aimmy provides various features like AI detection, customizability, anti-recoil system, mouse movement methods, hotswappability, and a model/configuration store with repository support.

README:

[!NOTE] If you enjoy Aimmy, please consider giving us a star ⭐! We appreciate it! :)

Aimmy is a universal AI-Based Aim Alignment Mechanism developed by BabyHamsta, MarsInsanity & Taylor to make gaming more accessible for users who have difficulty aiming.

Unlike most AI-Based Aim Alignment Mechanisms, Aimmy utilizes DirectML, ONNX, and YOLOv8 to detect players, offering both higher accuracy and faster performance than other Aim Aligners. This is especially true on AMD GPUs, which do not perform well with Aim Alignment Mechanisms that utilize TensorRT.

Aimmy also provides an easy-to-use user interface and a wide set of features and customizability options, which make it a great option for anyone who wants to use and tailor an Aim Alignment Mechanism for a specific game without having to code.

Aimmy is 100% free to use. This means no ads, no key system, and no paywalled features. Aimmy is not, and never will be, for sale to the end user. It is a source-available product, not an open-source one, as we actively discourage other developers from making commercial forks of Aimmy.

Please do not mistake Aimmy for an open-source project; it is not one.

Want to connect with us? Join our Discord Server: https://discord.gg/aimmy

If you want to share Aimmy with your friends, our website is: https://aimmy.dev/

- What is the purpose of Aimmy?

- How does Aimmy Work?

- Features

- Setup

- How is Aimmy better than similar AI-Based tools?

- How the hell is Aimmy free?

- What is the Web Model?

- How do I train my own model?

- How do I upload my model to the "Downloadable Models" menu

What is the purpose of Aimmy?

- Gamers who are physically challenged

- Gamers who are mentally challenged

- Gamers who suffer from untreated/untreatable visual impairments

- Gamers who do not have access to a separate Human-Interface Device (HID) for controlling the pointer

- Gamers trying to improve their reaction time

- Gamers with poor Hand/Eye coordination

- Gamers who perform poorly in FPS games

- Gamers who play for long periods in hot environments, causing greasy hands that make aiming difficult

How does Aimmy Work?

```mermaid
flowchart LR
    A["Playing Game System"]
    C["Screen Grabbing Functionality"]
    B["YOLOv8 (DirectML + ONNX) Recognition"]
    D{Making Decision}
    DA["X+Y Adjustment"]
    DB["FOV"]
    E["Triggering Functionality"]
    F["Mouse Cursor"]
    A --> E --> C --> B --> D --> F
    DA --> D
    DB --> D
```

When you press the trigger binding, Aimmy captures the screen and runs the image through AI recognition on your computer's hardware. The detection result is combined with any X and Y adjustments you have made and your current FOV setting to determine the change in your mouse cursor position.

Features

- Full Fledged UI

  - Aimmy provides a well-designed, full-fledged UI for easy usage and game adjustment.

- DirectML + ONNX + YOLOv8 AI Detection Algorithm

  - The use of these technologies allows Aimmy to be one of the most accurate and fastest Aim Alignment Mechanisms available.

- Dynamic Customizability System

  - Aimmy provides an interactive customizability system with various features that automatically updates how Aimmy aims as you customize. From AI Confidence to FOV to Anti-Recoil Adjustment, Aimmy makes it easy for anyone to tune their aim.

- Dynamic Visual System

  - Aimmy contains a universal ESP system that highlights the player detected by the AI. This is helpful for visually impaired users who have a hard time differentiating enemies, and for configuration creators attempting to debug their configurations.

- Adjustable Anti-Recoil

  - Aimmy offers an incredibly customizable Anti-Recoil system that's designed to be easy to use, with features like recording your Fire Rate, setting your X and Y adjustment, and Configuration Switch Keybindings.

- Mouse Movement Method

  - Aimmy lets you switch between 5 Mouse Movement Methods, depending on your Mouse Type and Game, for better Aim Alignment.

- Hotswappability

  - Aimmy lets you hotswap models and configurations on the go. There is no need to restart Aimmy to apply your changes.

- Model and Configuration Store with Repository Support

  - Aimmy makes it easy to get any models and configurations you may ever need, and with repository support, you can stay up to date with the latest models and configurations from your favorite creators.

Setup

- Download and Install the x64 version of .NET Runtime 8.0.X.X

- Download and Install the x64 version of Visual C++ Redistributable

- Download Aimmy from Releases (Make sure it's the Aimmy zip and not Source zip)

- Extract the Aimmy.zip file

- Run Aimmy.exe

- Choose your Model and Enjoy :)

How is Aimmy better than similar AI-Based tools?

Aimmy is written in C# using .NET 8 and WPF, utilizing pre-existing libraries like DirectML and ONNX. This has allowed us to make a very fast Aim Aligner with high compatibility on both AMD and NVIDIA GPUs without sacrificing the end-user experience.

Beyond the core functionality, Aimmy also adds some amazing additional features like Detection ESP and Anti-Recoil to help you tune your gaming experience however you like it.

Aimmy comes pre-bundled with 2 well-trained AI models, each trained on thousands of images.

- Phantom Forces

- Universal Model

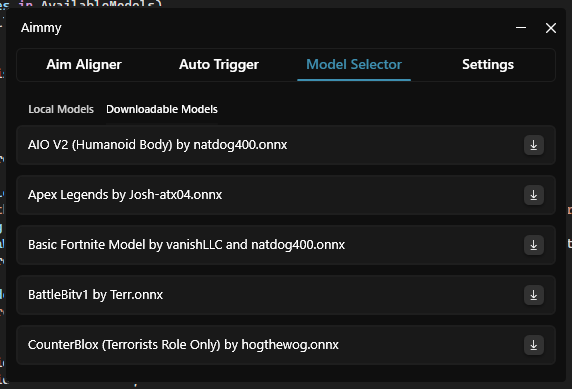

Besides those 2 models, Aimmy provides dozens of other community-made models through the store, with more models being developed every day by other Aimmy users. These models vary in target game and training image count, making Aimmy incredibly versatile and universal for thousands of games on the market right now.

How the hell is Aimmy free?

As an AI-based Aim Aligner, Aimmy does not require any sort of upkeep because it does not read any game-specific data to perform its actions. If the Aimmy team stops maintaining Aimmy, it would still work, even if no one pitches in to fork and maintain the project.

This means that while we currently pay out of pocket to run Aimmy, those expenses have been low enough that Aimmy has not needed even an ad-supported model.

We do not seek to make money from Aimmy. We seek only your kind words <3 and a chance to help people aim better, whether by assisting their aim or by helping them train how they aim (yes, you can use Aimmy in that way too).

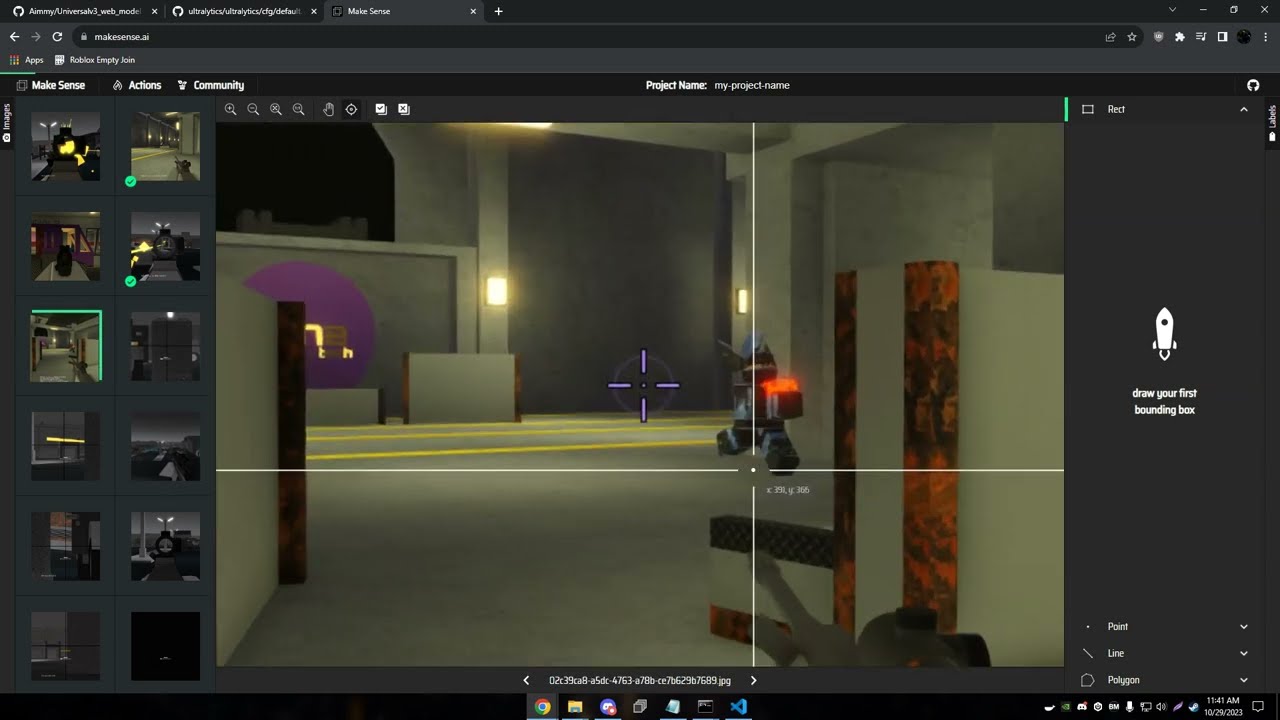

What is the Web Model?

The web model is a TFJS (TensorFlow.js) export of the model. This allows you to use the model for image labeling. The labeled images can then be sent to us to help further train the PF/Universal model, or you can use them to train your own YOLOv8 model. You may wonder, "Why is it in YOLOv5 and not YOLOv8?" This is because we use a tool called MakeSense, which to me is one of the easiest tools and is entirely web-based. I am sure there are other tools that accept a YOLOv8 web model.

You can visit MakeSense here: https://www.makesense.ai. You can then simply load all of your images in and select Object Detection.

Then run the AI locally, select YOLOv5, and upload all the web model files.

You can now go through your images and click and drag to highlight any Enemies on screen and approve the auto detected enemies from the web model:

Once you are finished labeling you'll want to export the labels for AI training:
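As a rough illustration of what exported labels look like, the sketch below assumes you chose a YOLO-format text export, where each image gets a `.txt` file with one line per box: a class index followed by the box center, width, and height, all normalized to the image size. The helper names `parse_yolo_line` and `to_pixel_box` are hypothetical and are not part of Aimmy or MakeSense; they only show how one label line maps back to pixel coordinates so you can sanity-check an export.

```python
from typing import NamedTuple, Tuple


class YoloLabel(NamedTuple):
    """One line of a YOLO-format label file (all box values normalized to 0..1)."""
    class_id: int
    x_center: float
    y_center: float
    width: float
    height: float


def parse_yolo_line(line: str) -> YoloLabel:
    """Parse 'class x_center y_center width height' into a YoloLabel (hypothetical helper)."""
    parts = line.split()
    if len(parts) != 5:
        raise ValueError(f"expected 5 fields, got {len(parts)}: {line!r}")
    class_id = int(parts[0])
    x, y, w, h = (float(p) for p in parts[1:])
    for value in (x, y, w, h):
        if not 0.0 <= value <= 1.0:
            raise ValueError(f"box values must be normalized to 0..1: {line!r}")
    return YoloLabel(class_id, x, y, w, h)


def to_pixel_box(label: YoloLabel, image_width: int, image_height: int) -> Tuple[int, int, int, int]:
    """Convert a normalized label to (left, top, right, bottom) in pixels."""
    left = (label.x_center - label.width / 2) * image_width
    top = (label.y_center - label.height / 2) * image_height
    right = (label.x_center + label.width / 2) * image_width
    bottom = (label.y_center + label.height / 2) * image_height
    return round(left), round(top), round(right), round(bottom)


# Example: a box centered in a 640x640 image, a quarter as wide and half as tall.
label = parse_yolo_line("0 0.5 0.5 0.25 0.5")
print(to_pixel_box(label, 640, 640))  # (240, 160, 400, 480)
```

Checking a few lines this way before training can catch labels that were exported in a different format or against the wrong image size.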

How do I train my own model?

Please see the video tutorial below on how to label images and train your own model. (Redirects to YouTube)

How do I upload my model to the "Downloadable Models" menu?

If you are not aware already, Aimmy contains a "Downloadable Models" tab that allows you to download models developed and shared by the Aimmy Community.

Aimmy pulls these models from the Aimmy repository. This means anyone can upload models to the "Downloadable Models" tab by making a pull request.

To start, please note that if you would like to be credited for your work, name your model as: [Game Name/Model Name] by [The Creator]

If you would like to stay anonymous, however, you may list only the Game Name/Model Name.
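The naming rule above can be written as a small helper. This is only a sketch: the function `model_display_name` and the creator name `ExampleCreator` are made up for illustration, and the README does not specify anything beyond the "[Game Name/Model Name] by [The Creator]" pattern.

```python
from typing import Optional


def model_display_name(model_name: str, creator: Optional[str] = None) -> str:
    """Build a listing name following the '[Model Name] by [Creator]' convention."""
    name = model_name.strip()
    if not name:
        raise ValueError("model_name must not be empty")
    # Credit is optional: anonymous uploads list only the game/model name.
    if creator and creator.strip():
        return f"{name} by {creator.strip()}"
    return name


print(model_display_name("Phantom Forces", "ExampleCreator"))  # Phantom Forces by ExampleCreator
print(model_display_name("Universal Model"))                   # Universal Model
```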

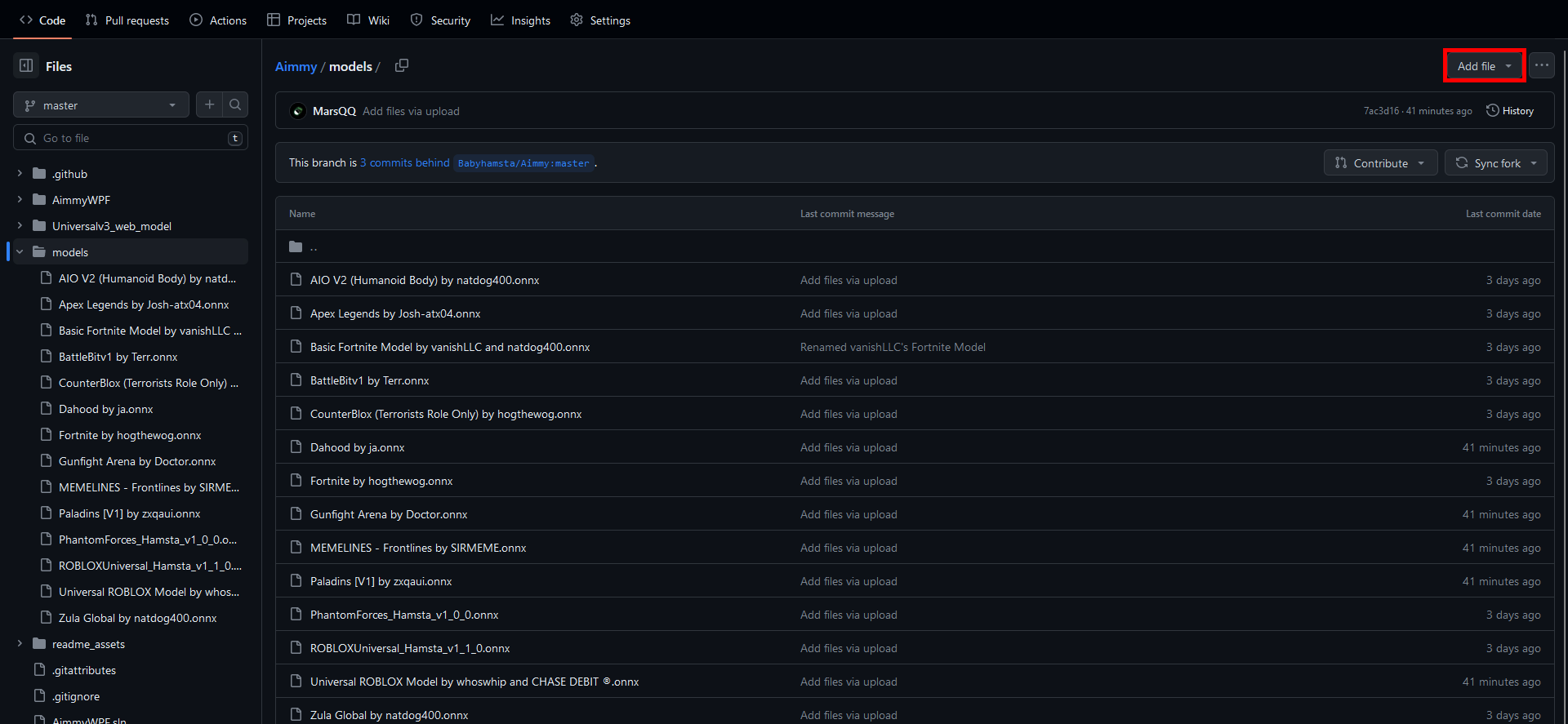

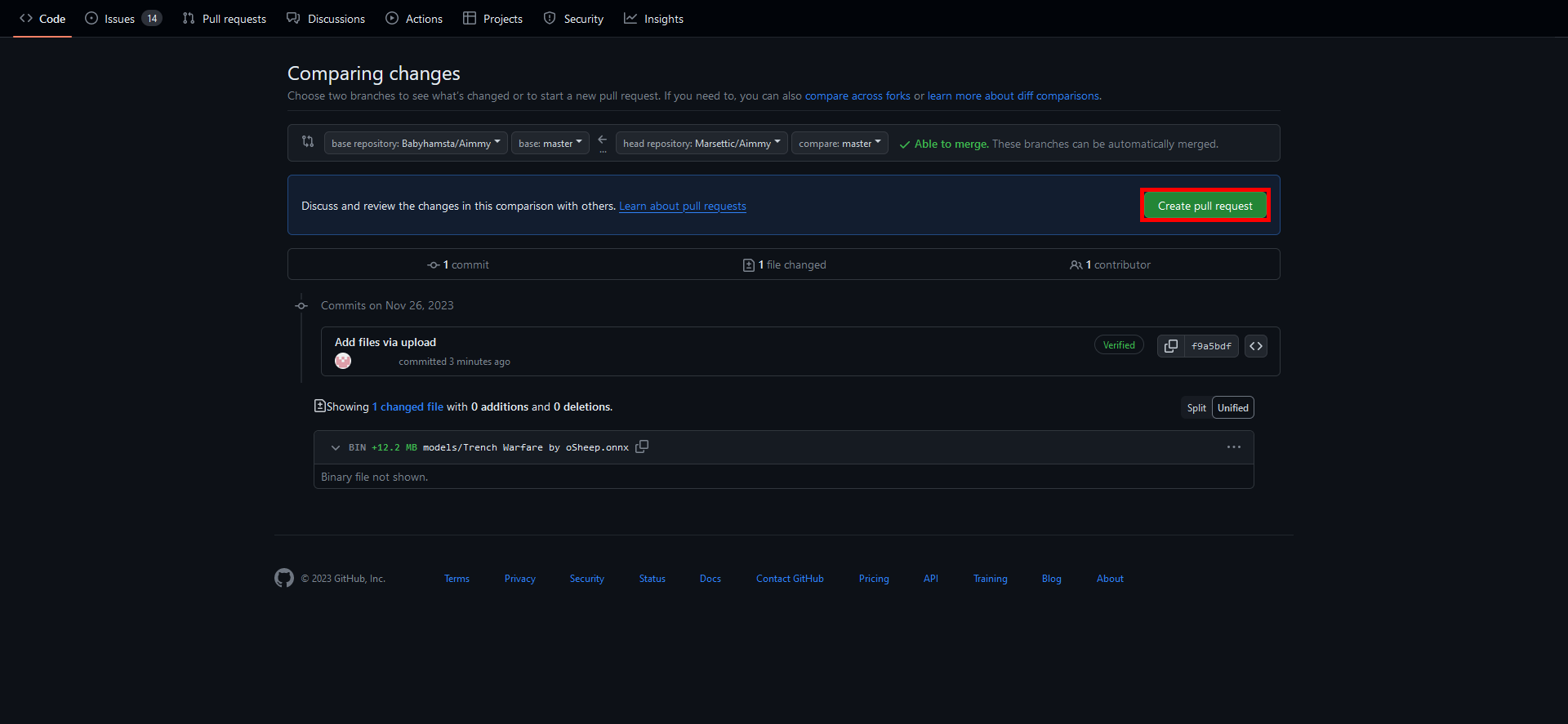

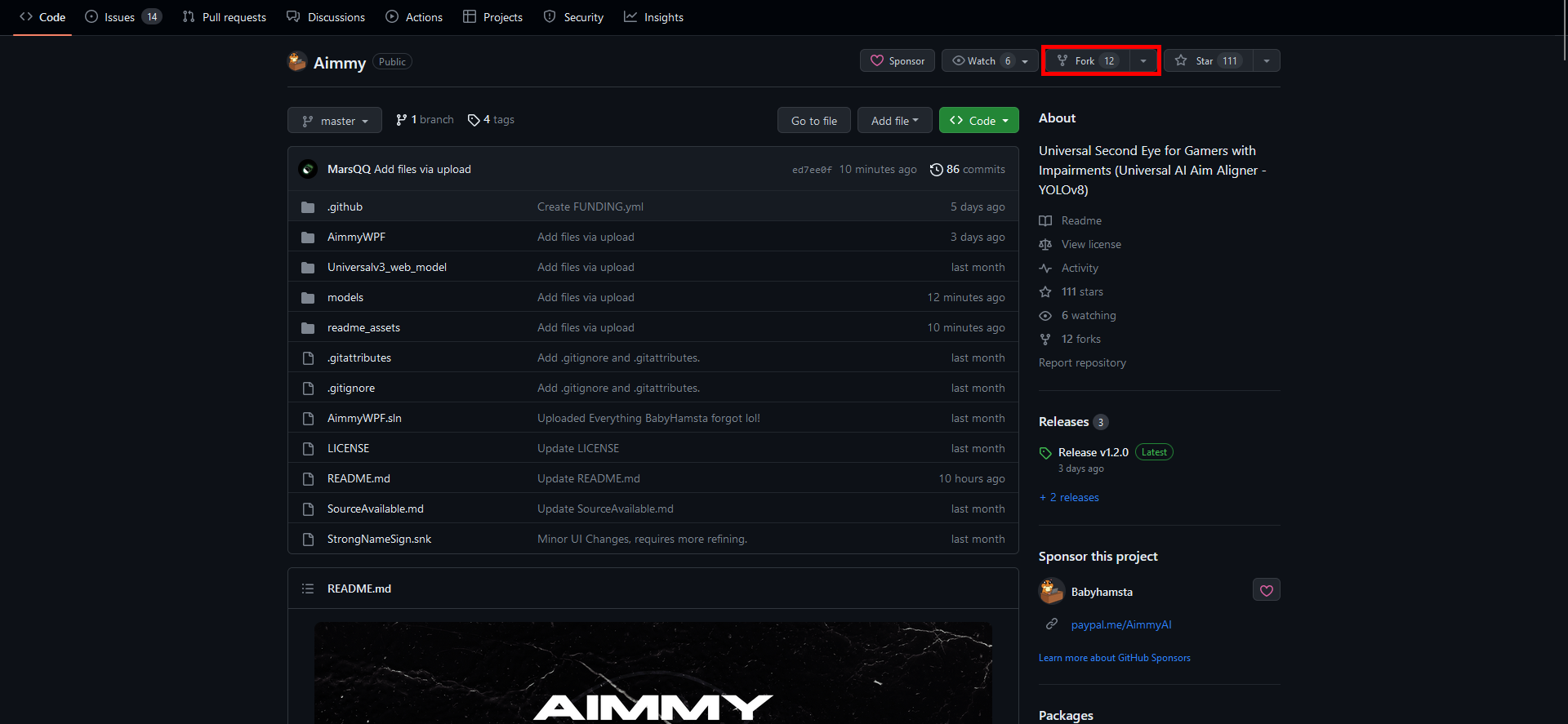

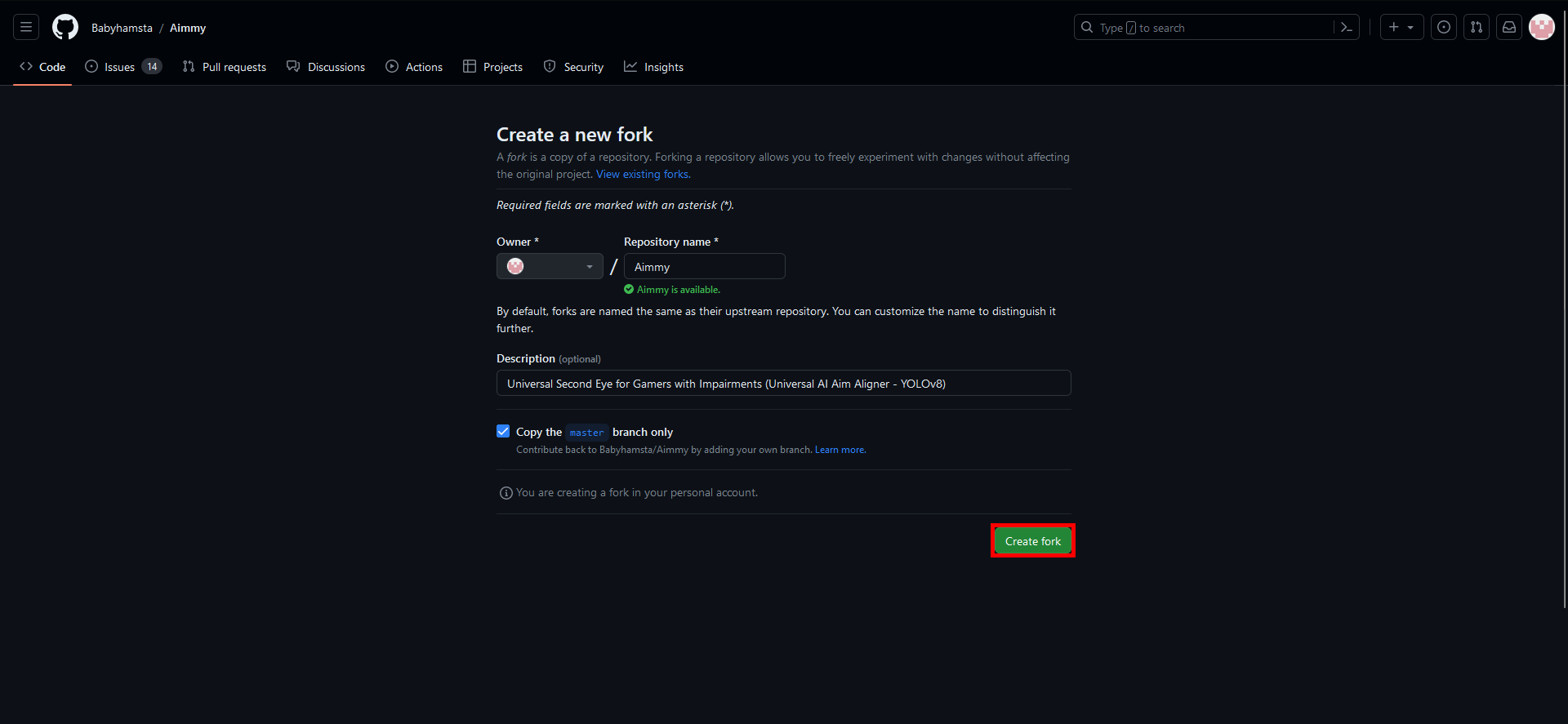

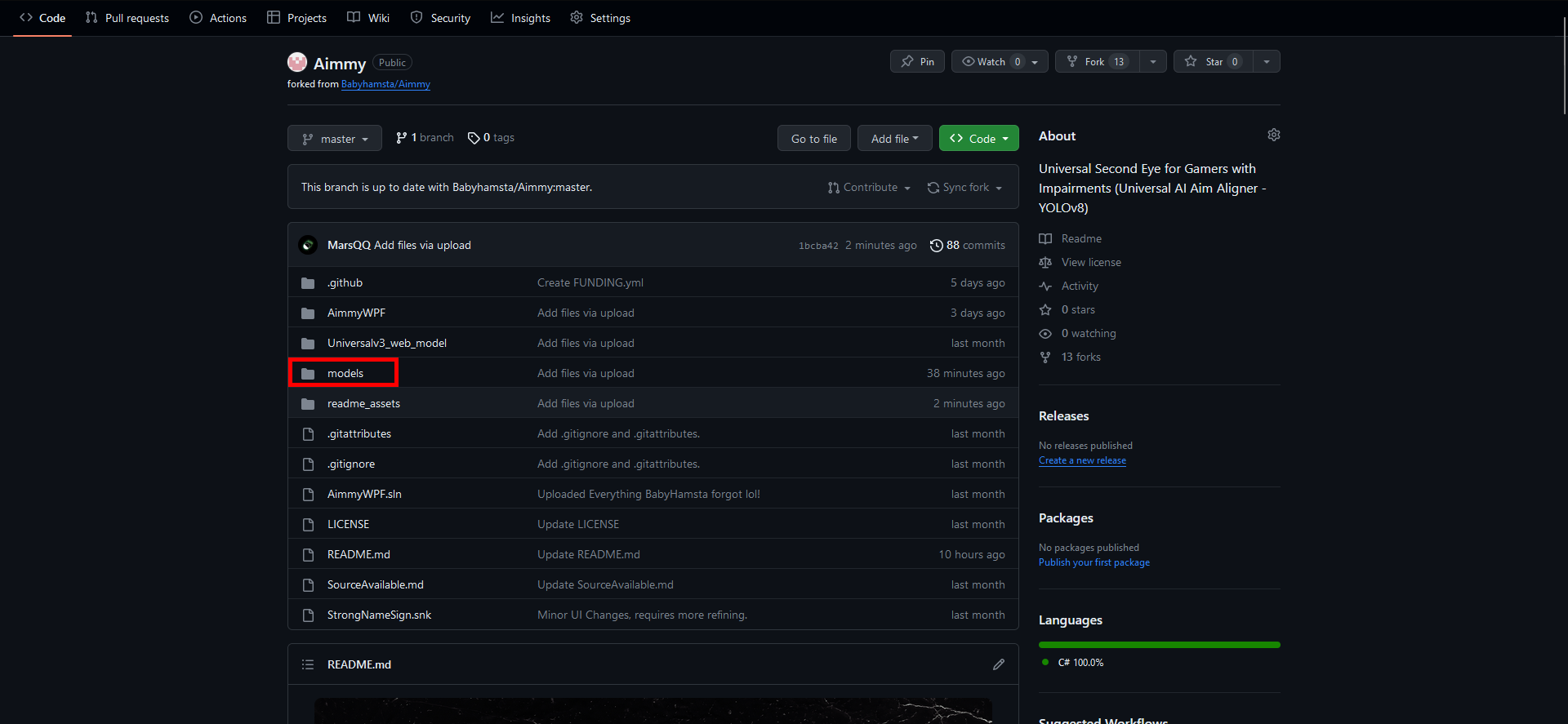

Now, fork the Aimmy Repository

After that, go to your fork's model folder

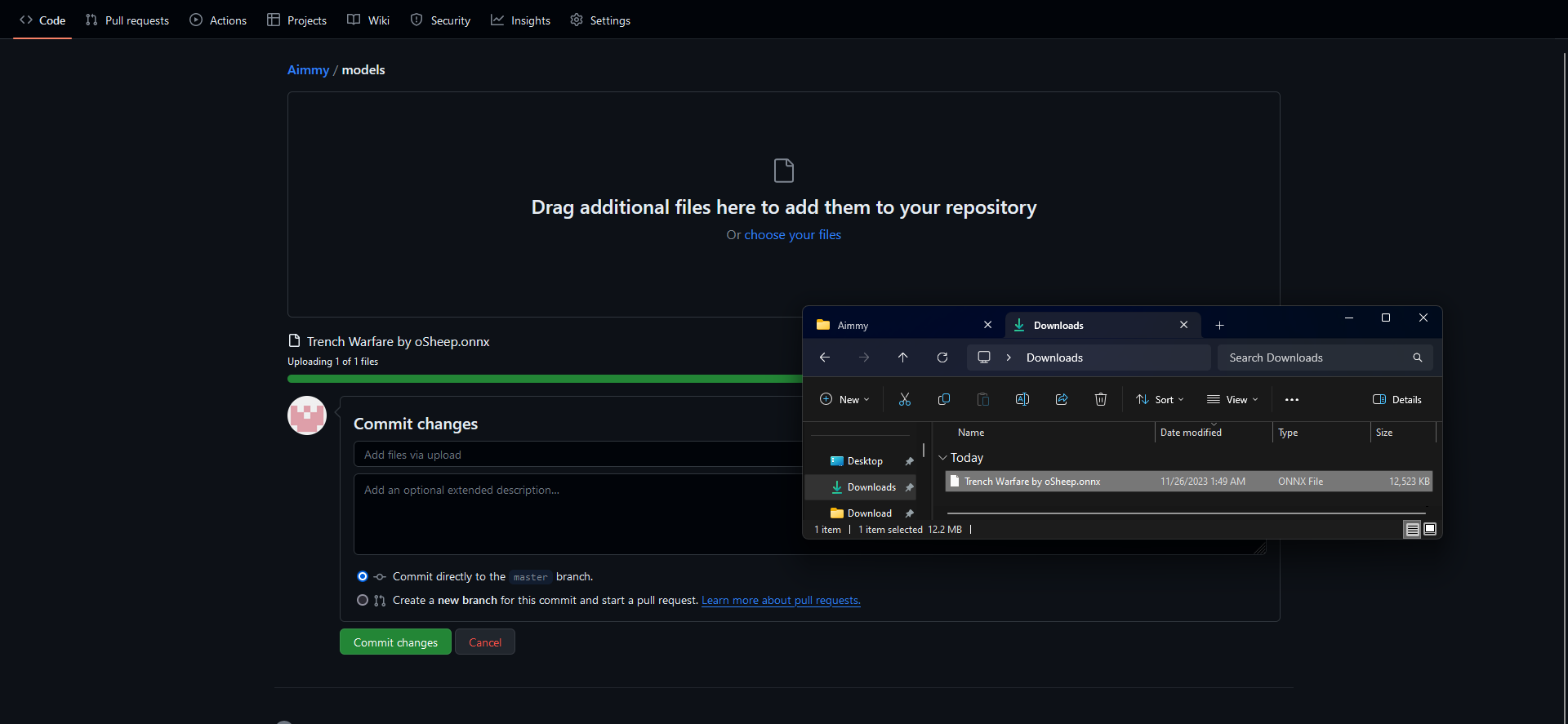

Drag your model onto the area that contains the text "Drag additional files here to add them to your repository"

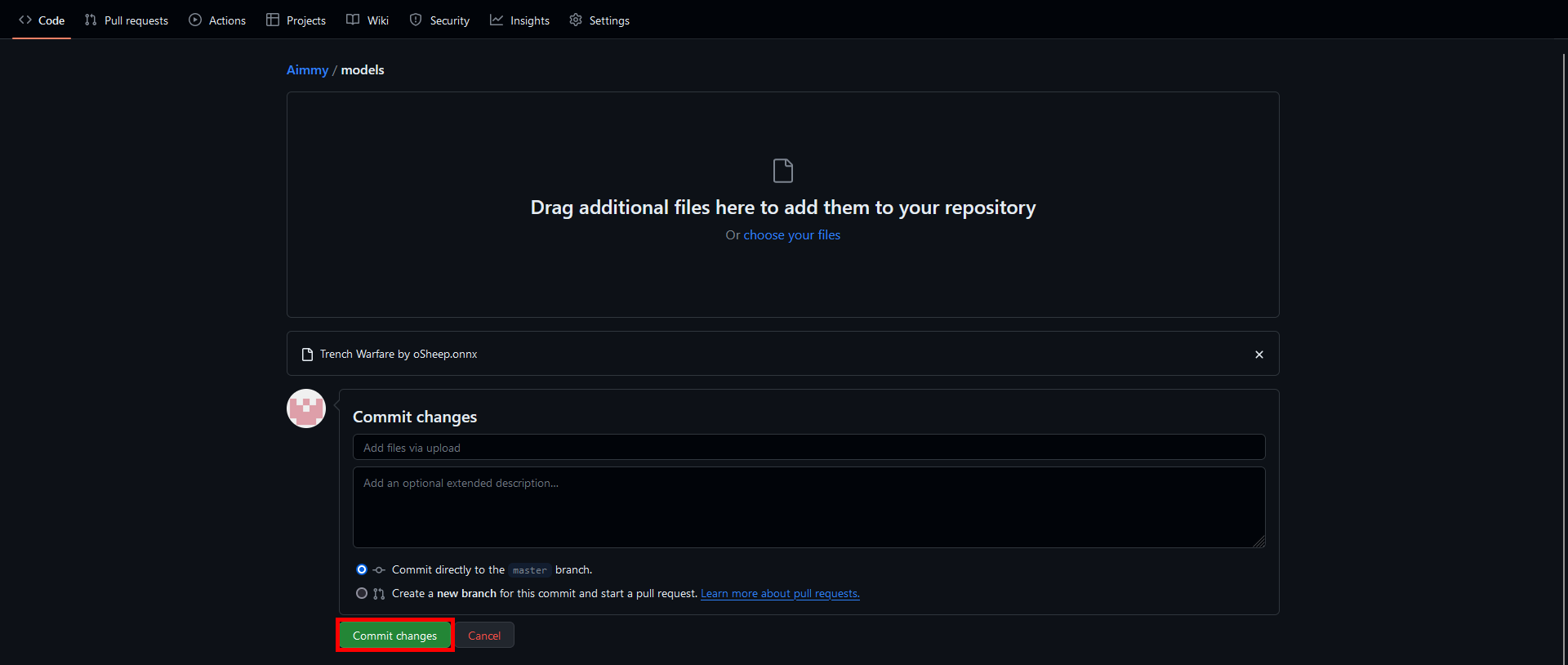

and press "Commit Changes" when the green progress bar disappears

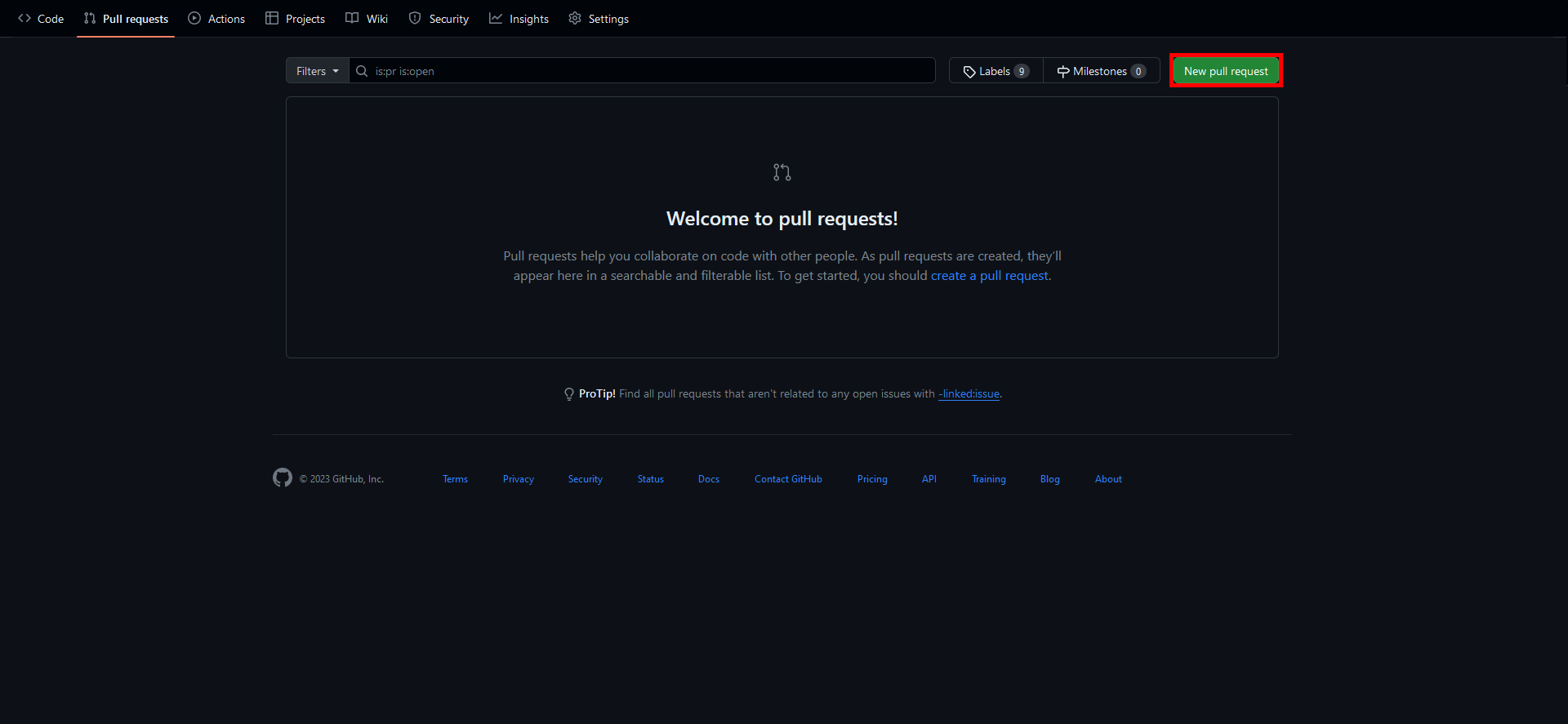

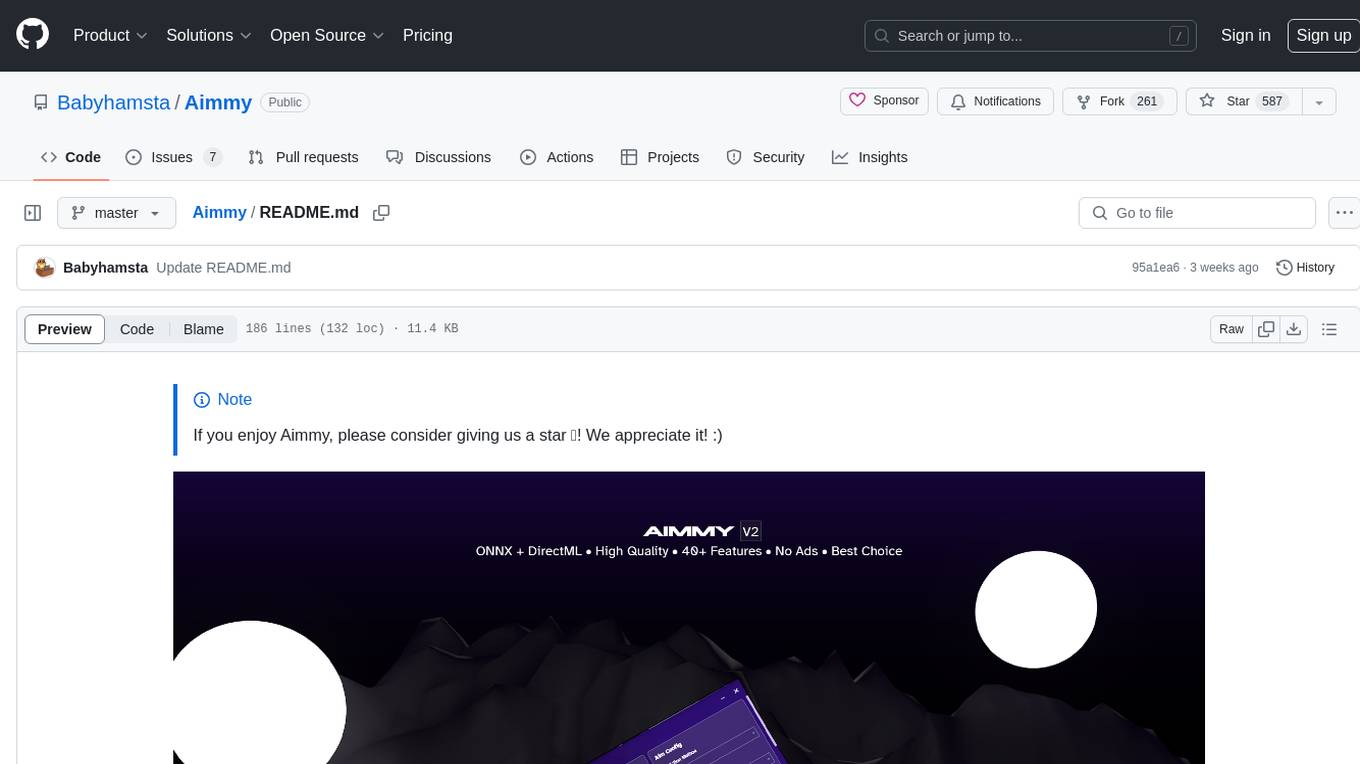

Now go to the "Pull requests" tab

Create the pull request (again)

You are done! We will review your pull request and your model will be added in 24-48 hours. If you would like to remove your model from the "Downloadable Models" tab, you may make another pull request or contact us on the Issues tab.

For anyone who does this, thank you so much =D, Aimmy genuinely thrives with community contributions and support, and making and sharing your Aimmy models genuinely means a lot to us! Thank you!


Alternative AI tools for Aimmy

Similar Open Source Tools


FreeChat

FreeChat is a native LLM appliance for macOS that runs completely locally. Download it and ask your LLM a question without doing any configuration. A local/llama version of OpenAI's chat without login or tracking. You should be able to install from the Mac App Store and use it immediately.

chaiNNer

ChaiNNer is a node-based image processing GUI aimed at making chaining image processing tasks easy and customizable. It gives users a high level of control over their processing pipeline and allows them to perform complex tasks by connecting nodes together. ChaiNNer is cross-platform, supporting Windows, MacOS, and Linux. It features an intuitive drag-and-drop interface, making it easy to create and modify processing chains. Additionally, ChaiNNer offers a wide range of nodes for various image processing tasks, including upscaling, denoising, sharpening, and color correction. It also supports batch processing, allowing users to process multiple images or videos at once.

rakis

Rakis is a decentralized verifiable AI network in the browser where nodes can accept AI inference requests, run local models, verify results, and arrive at consensus without servers. It is open-source, functional, multi-model, multi-chain, and browser-first, allowing anyone to participate in the network. The project implements an embedding-based consensus mechanism for verifiable inference. Users can run their own node on rakis.ai or use the compiled version hosted on Huggingface. The project is meant for educational purposes and is a work in progress.

wingman-ai

Wingman AI allows you to use your voice to talk to various AI providers and LLMs, process your conversations, and ultimately trigger actions such as pressing buttons or reading answers. Our _Wingmen_ are like characters and your interface to this world, and you can easily control their behavior and characteristics, even if you're not a developer. AI is complex and it scares people. It's also **not just ChatGPT**. We want to make it as easy as possible for you to get started. That's what _Wingman AI_ is all about. It's a **framework** that allows you to build your own Wingmen and use them in your games and programs. The idea is simple, but the possibilities are endless. For example, you could:

- **Role play** with an AI while playing for more immersion. Have air traffic control (ATC) in _Star Citizen_ or _Flight Simulator_. Talk to Shadowheart in Baldur's Gate 3 and have her respond in her own (cloned) voice.

- Get live data such as trade information, build guides, or wiki content and have it read to you in-game by a _character_ and voice you control.

- Execute keystrokes in games/applications and create complex macros. Trigger them in natural conversations with **no need for exact phrases.** The AI understands the context of your dialog and is quite _smart_ in recognizing your intent. Say _"It's raining! I can't see a thing!"_ and have it trigger a command you simply named _WipeVisors_.

- Automate tasks on your computer

- Improve accessibility

- ... and much more

autoMate

autoMate is an AI-powered local automation tool designed to help users automate repetitive tasks and reclaim their time. It leverages AI and RPA technology to operate computer interfaces, understand screen content, make autonomous decisions, and support local deployment for data security. With natural language task descriptions, users can easily automate complex workflows without the need for programming knowledge. The tool aims to transform work by freeing users from mundane activities and allowing them to focus on tasks that truly create value, enhancing efficiency and liberating creativity.

AI-Studio

MindWork AI Studio is a desktop application that provides a unified chat interface for Large Language Models (LLMs). It is free to use for personal and commercial purposes, offers independence in choosing LLM providers, provides unrestricted usage through the providers API, and is cost-effective with pay-as-you-go pricing. The app prioritizes privacy, flexibility, minimal storage and memory usage, and low impact on system resources. Users can support the project through monthly contributions or one-time donations, with opportunities for companies to sponsor the project for public relations and marketing benefits. Planned features include support for more LLM providers, system prompts integration, text replacement for privacy, and advanced interactions tailored for various use cases.

AppAgent

AppAgent is a novel LLM-based multimodal agent framework designed to operate smartphone applications. Our framework enables the agent to operate smartphone applications through a simplified action space, mimicking human-like interactions such as tapping and swiping. This novel approach bypasses the need for system back-end access, thereby broadening its applicability across diverse apps. Central to our agent's functionality is its innovative learning method. The agent learns to navigate and use new apps either through autonomous exploration or by observing human demonstrations. This process generates a knowledge base that the agent refers to for executing complex tasks across different applications.

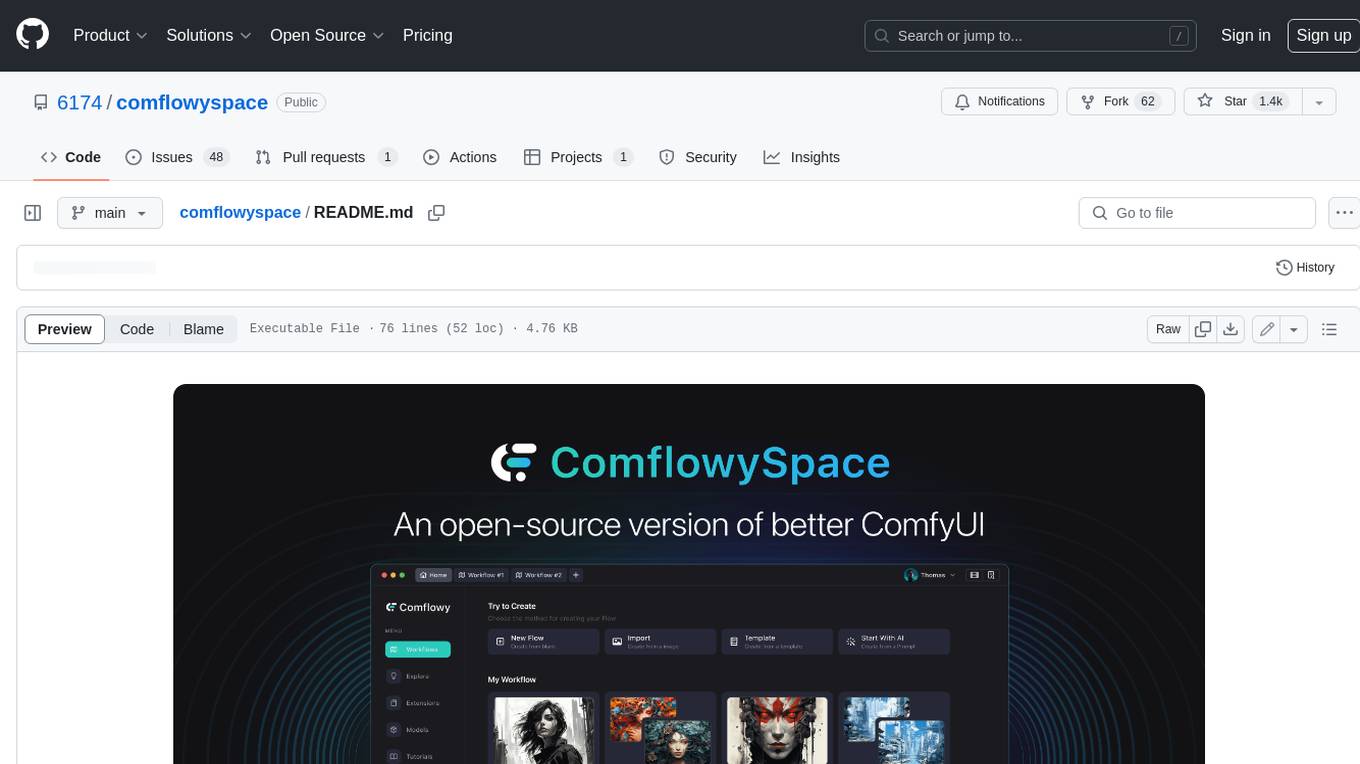

comflowyspace

Comflowyspace is an open-source AI image and video generation tool that aims to provide a more user-friendly and accessible experience than existing tools like SDWebUI and ComfyUI. It simplifies the installation, usage, and workflow management of AI image and video generation, making it easier for users to create and explore AI-generated content. Comflowyspace offers features such as one-click installation, workflow management, multi-tab functionality, workflow templates, and an improved user interface. It also provides tutorials and documentation to lower the learning curve for users. The tool is designed to make AI image and video generation more accessible and enjoyable for a wider range of users.

psychic

Psychic is a tool that provides a platform for users to access psychic readings and services. It offers a range of features such as tarot card readings, astrology consultations, and spiritual guidance. Users can connect with experienced psychics and receive personalized insights and advice on various aspects of their lives. The platform is designed to be user-friendly and intuitive, making it easy for users to navigate and explore the different services available. Whether you're looking for guidance on love, career, or personal growth, Psychic has you covered.

Powerpointer-For-Local-LLMs

PowerPointer For Local LLMs is a PowerPoint generator that uses python-pptx and local llm's via the Oobabooga Text Generation WebUI api to create beautiful and informative presentations. It runs locally on your computer, eliminating privacy concerns. The tool allows users to select from 7 designs, make placeholders for images, and easily customize presentations within PowerPoint. Users provide information for the PowerPoint, which is then used to generate text using optimized prompts and the text generation webui api. The generated text is converted into a PowerPoint presentation using the python-pptx library.

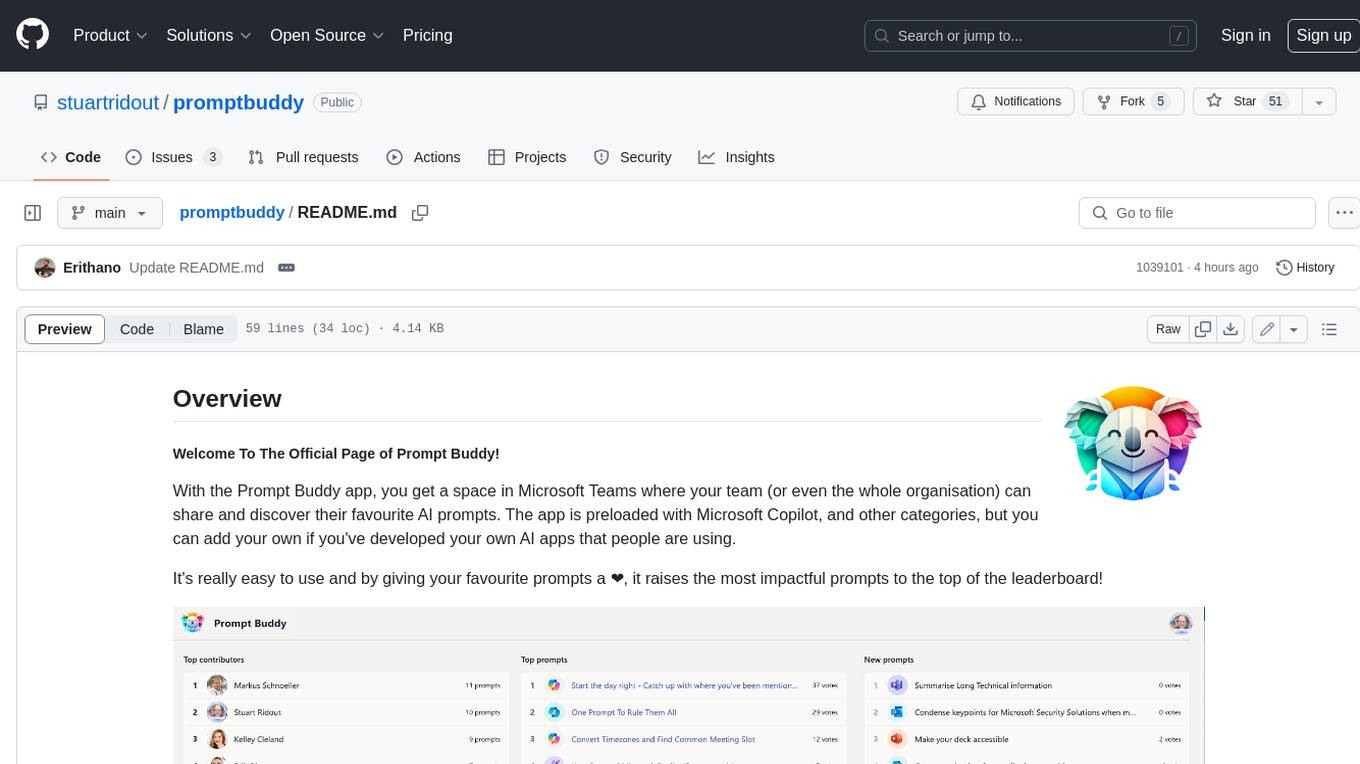

promptbuddy

Prompt Buddy is a Microsoft Teams app that provides a central location for teams to share and discover their favorite AI prompts. It comes preloaded with Microsoft Copilot and other categories, but users can also add their own custom prompts. The app is easy to use and allows users to upvote their favorite prompts, which raises them to the top of the leaderboard. Prompt Buddy also supports dark mode and offers a mobile layout for use on phones. It is built on the Power Platform and can be customized and extended by the installer.

Dot

Dot is a standalone, open-source application designed for seamless interaction with documents and files using local LLMs and Retrieval Augmented Generation (RAG). It is inspired by solutions like Nvidia's Chat with RTX, providing a user-friendly interface for those without a programming background. Pre-packaged with Mistral 7B, Dot ensures accessibility and simplicity right out of the box. Dot allows you to load multiple documents into an LLM and interact with them in a fully local environment. Supported document types include PDF, DOCX, PPTX, XLSX, and Markdown. Users can also engage with Big Dot for inquiries not directly related to their documents, similar to interacting with ChatGPT. Built with Electron JS, Dot encapsulates a comprehensive Python environment that includes all necessary libraries. The application leverages libraries such as FAISS for creating local vector stores, Langchain, llama.cpp & Huggingface for setting up conversation chains, and additional tools for document management and interaction.

EdgeChains

EdgeChains is an open-source chain-of-thought engineering framework tailored for Large Language Models (LLMs) such as OpenAI GPT, Llama 2, and Falcon, with a focus on enterprise-grade deployability and scalability. EdgeChains is specifically designed to **orchestrate** such applications. At EdgeChains, we take a unique approach to Generative AI: we think Generative AI is a deployment and configuration management challenge rather than a UI and library design pattern challenge. We build on top of a technology that has solved this problem in a different domain, Kubernetes Config Management, and bring that to Generative AI. EdgeChains is built on top of jsonnet, originally built by Google based on their experience managing a vast amount of configuration code in the Borg infrastructure.

cannoli

Cannoli allows you to build and run no-code LLM scripts using the Obsidian Canvas editor. Cannolis are scripts that leverage the OpenAI API to read/write to your vault, and take actions using HTTP requests. They can be used to automate tasks, create custom llm-chatbots, and more.

graphrag

The GraphRAG project is a data pipeline and transformation suite designed to extract meaningful, structured data from unstructured text using LLMs. It enhances LLMs' ability to reason about private data. The repository provides guidance on using knowledge graph memory structures to enhance LLM outputs, with a warning about the potential costs of GraphRAG indexing. It offers contribution guidelines, development resources, and encourages prompt tuning for optimal results. The Responsible AI FAQ addresses GraphRAG's capabilities, intended uses, evaluation metrics, limitations, and operational factors for effective and responsible use.

For similar tasks


Top-Osu-Hacks-2024-Aim-Assist-Bots-and-Mor

The Top-Osu!-Hacks-2024 repository offers a comprehensive suite of powerful cheats and mods designed to enhance gameplay experience in Osu!. It provides aim assist hacks, bots, and various cheats to improve gameplay. Users can easily download and install the cheats to automate gameplay, improve aim accuracy, and play with relaxed settings. The repository is optimized for search engines using targeted keywords and meta descriptions to help players find Osu! hacks easily.

LibreAim

Libre Aim is a free and open source first-person shooter (FPS) aim trainer developed using Godot. It is highly customizable, allowing users to modify all aspects of the tool easily. The focus is on providing a lightweight training tool that runs smoothly on low-end machines, offering high FPS and minimal input lag. Although still in early development stages, Libre Aim aims to provide a platform for users to enhance their aiming skills in FPS games through customizable features and simplicity.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM:

- Set LLM usage limits for users on different pricing tiers

- Track LLM usage on a per user and per organization basis

- Block or redact requests containing PIIs

- Improve LLM reliability with failovers, retries and caching

- Distribute API keys with rate limits and cost limits for internal development/production use cases

- Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.