Best AI tools for Deduplicate Documents

9 - AI tool Sites

Quetext

Quetext is a plagiarism checker and AI content detector that helps students, teachers, and professionals identify potential plagiarism and AI-generated text in their work. It combines its Deep Search technology with contextual analysis and smart algorithms to make checking writing easier and more accurate. Other features include bulk uploads, source exclusion, an enhanced citation generator, and grammar and spell check. Its rich, intuitive feedback helps users find plagiarism and AI-generated text with less stress.

Trust Stamp

Trust Stamp is a global provider of AI-powered identity services offering a full suite of identity tools, including biometric multi-factor authentication, document validation, identity validation, duplicate detection, and geolocation services. The application is designed to empower organizations across various sectors with advanced biometric identity solutions to reduce fraud, protect personal data privacy, increase operational efficiency, and reach a broader user base worldwide through unique data transformation and comparison capabilities. Founded in 2016, Trust Stamp has achieved significant milestones in net sales, gross profit, and strategic partnerships, positioning itself as a leader in the identity verification industry.

Nero Platinum Suite

Nero Platinum Suite is a comprehensive software collection for Windows PCs that provides a wide range of multimedia capabilities, including burning, managing, optimizing, and editing photos, videos, and music files. It includes various AI-powered features such as the Nero AI Image Upscaler, Nero AI Video Upscaler, and Nero AI Photo Tagger, which enhance and simplify multimedia tasks.

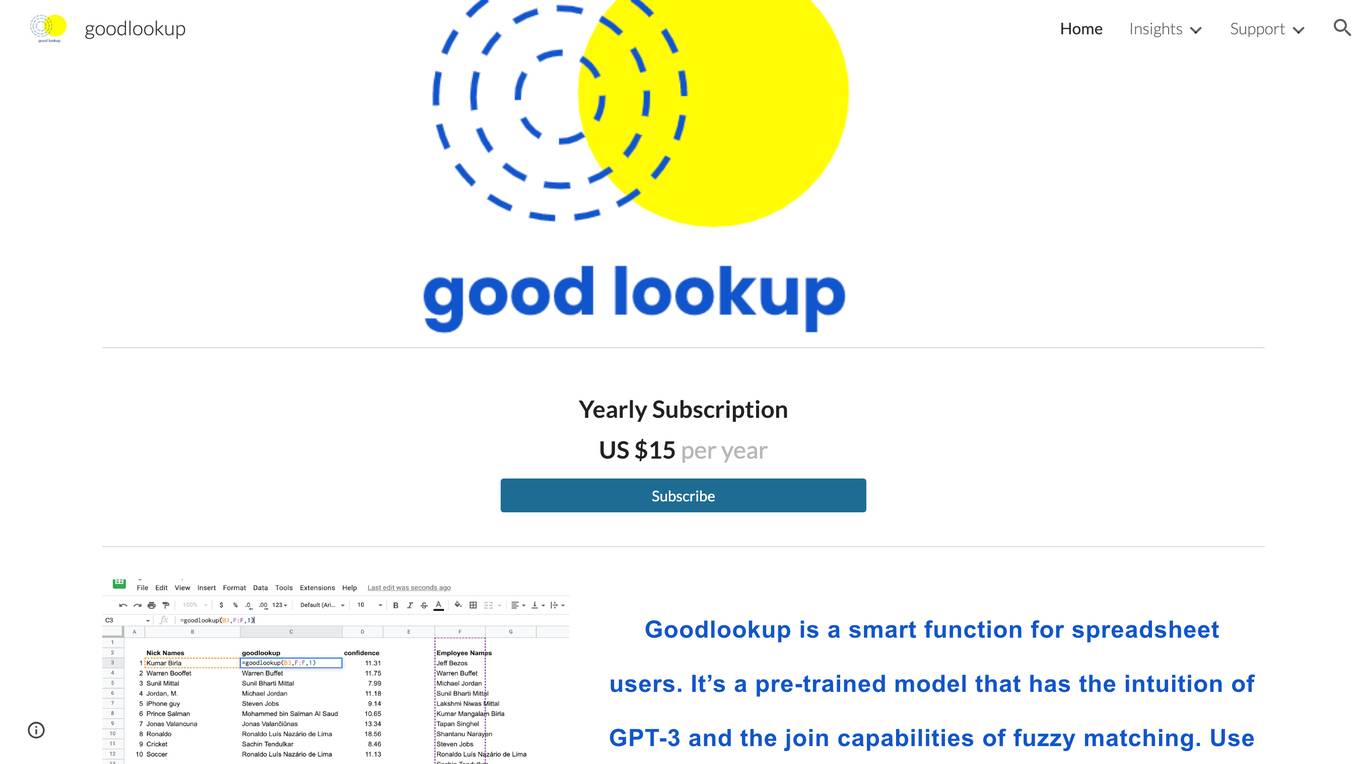

Goodlookup

Goodlookup is a smart function for spreadsheet users that gets very close to semantic understanding. It's a pre-trained model that combines the intuition of GPT-3 with the join capabilities of fuzzy matching. Use it like VLOOKUP or INDEX MATCH to speed up your topic clustering work in Google Sheets.
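To make the "fuzzy VLOOKUP" idea concrete, here is a minimal sketch using Python's standard `difflib` module. It is not Goodlookup's implementation or API: `fuzzy_lookup` and the `topics` table are hypothetical names, and `difflib` matches on character similarity only, whereas Goodlookup is described as matching on meaning.

```python
import difflib
from typing import Optional


def fuzzy_lookup(key: str, table: dict[str, str], cutoff: float = 0.6) -> Optional[str]:
    """Return the value whose key is most similar to `key`, or None if nothing is close enough."""
    # get_close_matches ranks candidates by a character-level similarity ratio.
    matches = difflib.get_close_matches(key, list(table), n=1, cutoff=cutoff)
    return table[matches[0]] if matches else None


# Hypothetical lookup table mapping phrases to topic clusters.
topics = {"machine learning": "AI", "tax accounting": "Finance"}

typo_result = fuzzy_lookup("machine lerning", topics)  # misspelled key still finds a match
miss_result = fuzzy_lookup("zzzz", topics)             # nothing similar, so no match
```

A plain exact lookup would fail on the misspelled key; the similarity cutoff controls how tolerant the match is.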

Duplikate

Duplikate is a next-generation AI-powered Community Management tool designed to assist users in managing their social media accounts more efficiently. It helps users save time by retrieving relevant social media posts, categorizing them, and duplicating them with modifications to better suit their audience. The tool is powered by OpenAI and offers features such as post scraping, filtering, and copying, with upcoming features including image generation. Users have praised Duplikate for its ability to streamline content creation, improve engagement, and save time in managing social media accounts.

AppZen

AppZen is an AI-powered application designed for modern finance teams to streamline accounts payable processes, automate invoice and expense auditing, and improve compliance. It offers features such as Autonomous AP for invoice automation, Expense Audit for T&E spend management, and Card Audit for analyzing card spend. AppZen's AI learns and understands business practices, ensures compliance, and integrates with existing systems easily. The application helps prevent duplicate spend, fraud, and FCPA violations, making it a valuable tool for finance professionals.

Dart

Dart is an AI project management software that empowers teams to work smarter, streamline tasks, and achieve more with less effort. It incorporates AI from the ground up, offering features like AI agents, chat, property filling, planning, subtask generation, and duplicate detection. Dart provides integrations with various tools, templates for different project types, and solutions tailored for teams in sales, IT, marketing, startups, agencies, and universities. The application is designed to drive focus, innovation, and impact across different roles in an organization.

Snapy

Snapy is an AI-powered video editing and generation tool that helps content creators create short videos, edit podcasts, and remove silent parts from videos. Its features include turning text prompts into short videos, condensing long videos into engaging short clips, automatically removing silent parts from audio files, auto-trimming, removing duplicate sentences and filler words, and adding subtitles to short videos. Snapy is designed to save creators time and effort so they can publish more content, create more engaging videos, and improve the quality of their audio and video.

ONERECOVERY

ONERECOVERY is a professional data recovery solution for Windows that recovers lost data from various storage devices. The software is designed to handle data loss in over 1,000 scenarios, including accidental deletion, formatting errors, and virus attacks. Its features include file recovery for Windows and Mac, a duplicate file finder, photo and video recovery, hard drive recovery, and SD card data recovery. With a user-friendly interface, quick and efficient scanning, and compatibility with diverse operating systems and storage devices, ONERECOVERY is a reliable and secure data recovery tool trusted by millions of users worldwide.

2 - Open Source AI Tools

dolma

Dolma is a dataset and toolkit for curating large datasets for (pre)-training ML models. The dataset consists of 3 trillion tokens from a diverse mix of web content, academic publications, code, books, and encyclopedic materials. The toolkit provides high-performance, portable, and extensible tools for processing, tagging, and deduplicating documents. Key features of the toolkit include built-in taggers, fast deduplication, and cloud support.
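As an illustration of what document deduplication involves at its simplest, the sketch below drops exact duplicates by hashing normalized text. It is a generic example, not Dolma's toolkit or API; the function names and the normalization rule (lowercasing and collapsing whitespace) are assumptions made for this sketch.

```python
import hashlib
import re


def normalize(text: str) -> str:
    """Lowercase and collapse whitespace so trivial differences do not matter."""
    return re.sub(r"\s+", " ", text).strip().lower()


def deduplicate(documents: list[str]) -> list[str]:
    """Keep the first occurrence of each document, comparing by content hash."""
    seen: set[str] = set()
    unique: list[str] = []
    for doc in documents:
        digest = hashlib.sha256(normalize(doc).encode("utf-8")).hexdigest()
        if digest not in seen:
            seen.add(digest)
            unique.append(doc)
    return unique


docs = ["Hello  world", "hello world", "Something else"]
unique_docs = deduplicate(docs)  # the second entry is dropped as a duplicate of the first
```

Storing only digests keeps memory use proportional to the number of distinct documents rather than their total size, which matters when the corpus is large.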

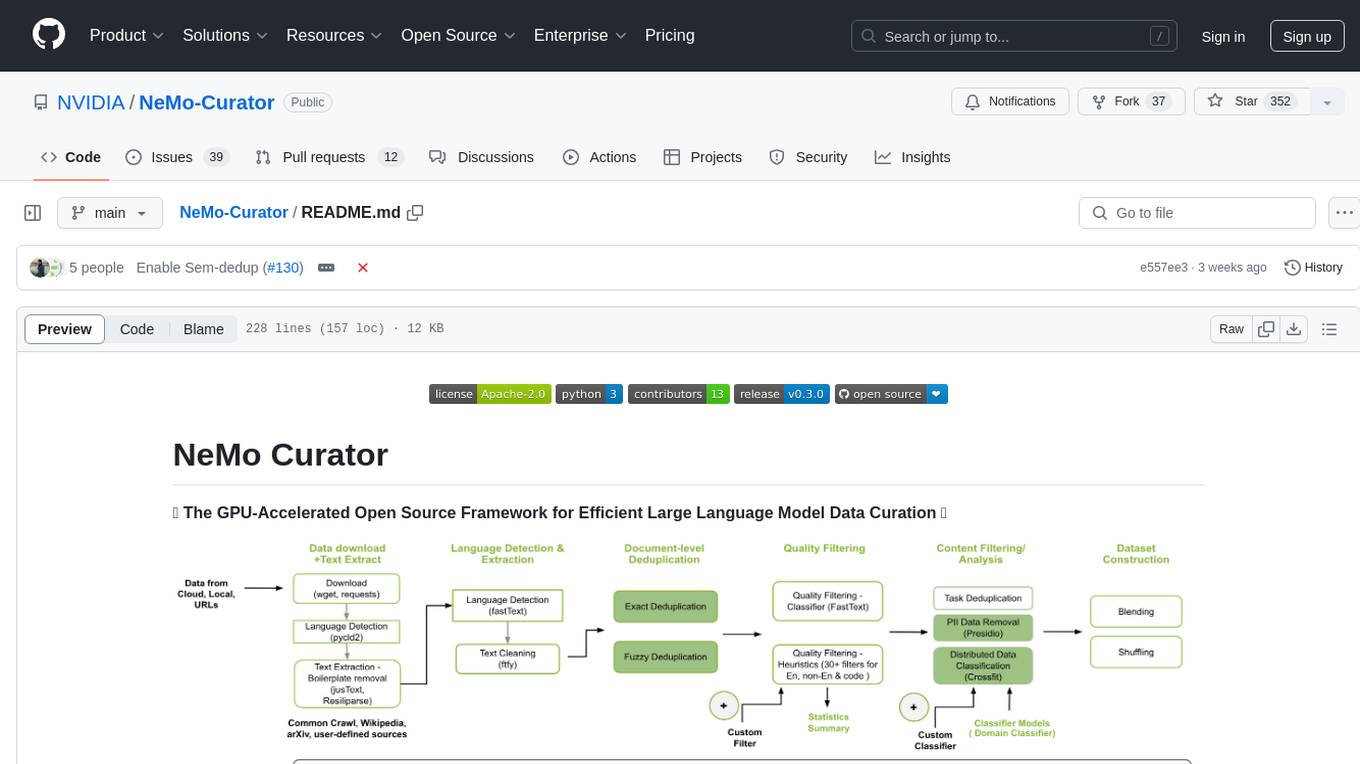

NeMo-Curator

NeMo Curator is a GPU-accelerated open-source framework designed for efficient large language model data curation. It provides scalable dataset preparation for tasks like foundation model pretraining, domain-adaptive pretraining, supervised fine-tuning, and parameter-efficient fine-tuning. The library leverages GPUs with Dask and RAPIDS to accelerate data curation, offering customizable and modular interfaces for pipeline expansion and model convergence. Key features include data download, text extraction, quality filtering, deduplication, downstream-task decontamination, distributed data classification, and PII redaction. NeMo Curator is suitable for curating high-quality datasets for large language model training.
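Exact hashing misses documents that differ by a few words, which is why curation pipelines usually add near-duplicate detection. The sketch below shows one common technique, MinHash over word shingles, in plain Python. It does not use NeMo Curator's API; the function names, the shingle size, and the number of hash functions are illustrative assumptions.

```python
import hashlib


def shingles(text: str, k: int = 3) -> set[str]:
    """Split text into overlapping k-word shingles."""
    words = text.lower().split()
    if len(words) < k:
        return {" ".join(words)}
    return {" ".join(words[i:i + k]) for i in range(len(words) - k + 1)}


def minhash_signature(items: set[str], num_hashes: int = 64) -> list[int]:
    """For each salted hash function, record the minimum hash value over all items."""
    signature = []
    for seed in range(num_hashes):
        salt = seed.to_bytes(8, "little")  # a different salt gives a different hash function
        signature.append(min(
            int.from_bytes(
                hashlib.blake2b(s.encode("utf-8"), digest_size=8, salt=salt).digest(), "big"
            )
            for s in items
        ))
    return signature


def estimated_jaccard(sig_a: list[int], sig_b: list[int]) -> float:
    """The fraction of matching positions estimates the Jaccard similarity of the shingle sets."""
    return sum(x == y for x, y in zip(sig_a, sig_b)) / len(sig_a)


base = "the quick brown fox jumps over the lazy dog near the river bank"
near = "the quick brown fox jumps over the lazy dog near the river shore"
other = "completely unrelated sentence about quarterly financial reporting and audits"

sig_base = minhash_signature(shingles(base))
near_score = estimated_jaccard(sig_base, minhash_signature(shingles(near)))
other_score = estimated_jaccard(sig_base, minhash_signature(shingles(other)))
```

In practice, document pairs whose estimated similarity exceeds a chosen threshold are treated as near-duplicates, and the signatures are typically bucketed with locality-sensitive hashing so that not every pair has to be compared.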

8 - OpenAI GPTs

Data-Driven Messaging Campaign Generator

Create, analyze, and duplicate customized automated message campaigns to boost retention and drive revenue for your website or app.

Plagiarism Checker

Maintain the originality of your work with our Plagiarism Checker. This plagiarism checker identifies duplicate content, ensuring your work's uniqueness and integrity.

Image Theme Clone

Type “Start” and get exact details on image generation and/or duplication.