Best AI tools for Privacy Consultant

Infographic

20 - AI tool Sites

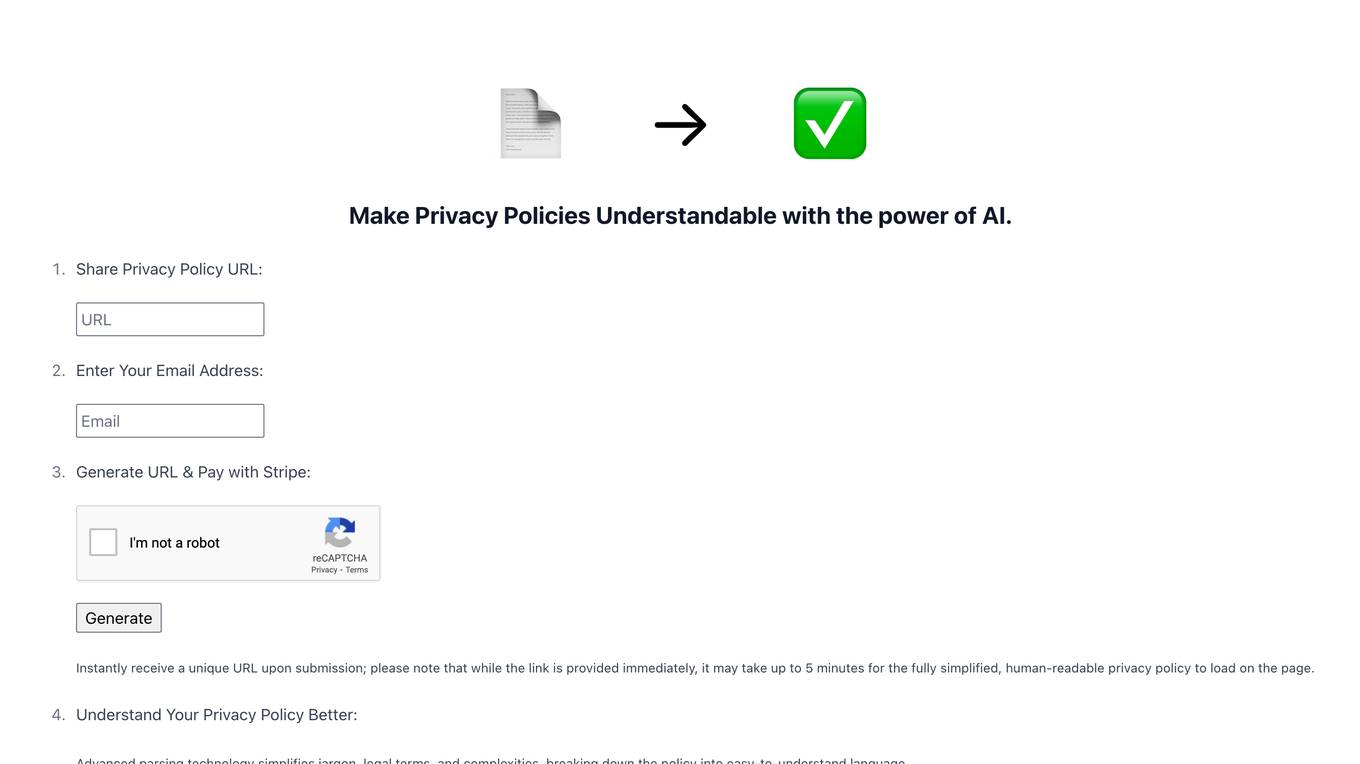

Parsepolicy

Parsepolicy is an AI-powered tool that aims to make privacy policies more understandable for users. By utilizing advanced parsing technology, the tool simplifies legal terms, jargon, and complexities in privacy policies, breaking them down into easy-to-understand language. Users can generate a unique URL by entering their email address and paying with Stripe, receiving a simplified, human-readable privacy policy within minutes. The tool helps users gain insights into how their data is handled, understand their rights, and make informed decisions to protect their privacy online. Privacy and data security are top priorities, with cutting-edge encryption and secure protocols in place to ensure the confidentiality of personal information. Currently, the website is at the MVP stage.

Cookiebot

The website is a platform that provides information about the use of cookies on the site. It explains the different types of cookies used, their purposes, and the providers of these cookies. Users can learn about the necessity of cookies for the website's operation, how to manage cookie preferences, and the importance of user consent for storing cookies. The site aims to enhance user understanding of online privacy and data protection regulations.

BlurOn

BlurOn is an AI tool designed for automatic mosaic insertion in image editing. It offers a seamless and efficient way to blur out specific areas in images, ensuring privacy and anonymity. With advanced algorithms, BlurOn simplifies the process of adding mosaic effects, making it ideal for various applications such as censoring sensitive content or protecting identities in photos.

Compliance.sh

Compliance.sh is a website that provides services related to compliance and privacy. It offers tools and resources to help individuals and businesses ensure they are following regulations and protecting sensitive information. The platform covers a wide range of compliance topics and provides guidance on best practices to maintain trust and security. Users can access information in multiple languages and receive technical support for any inquiries.

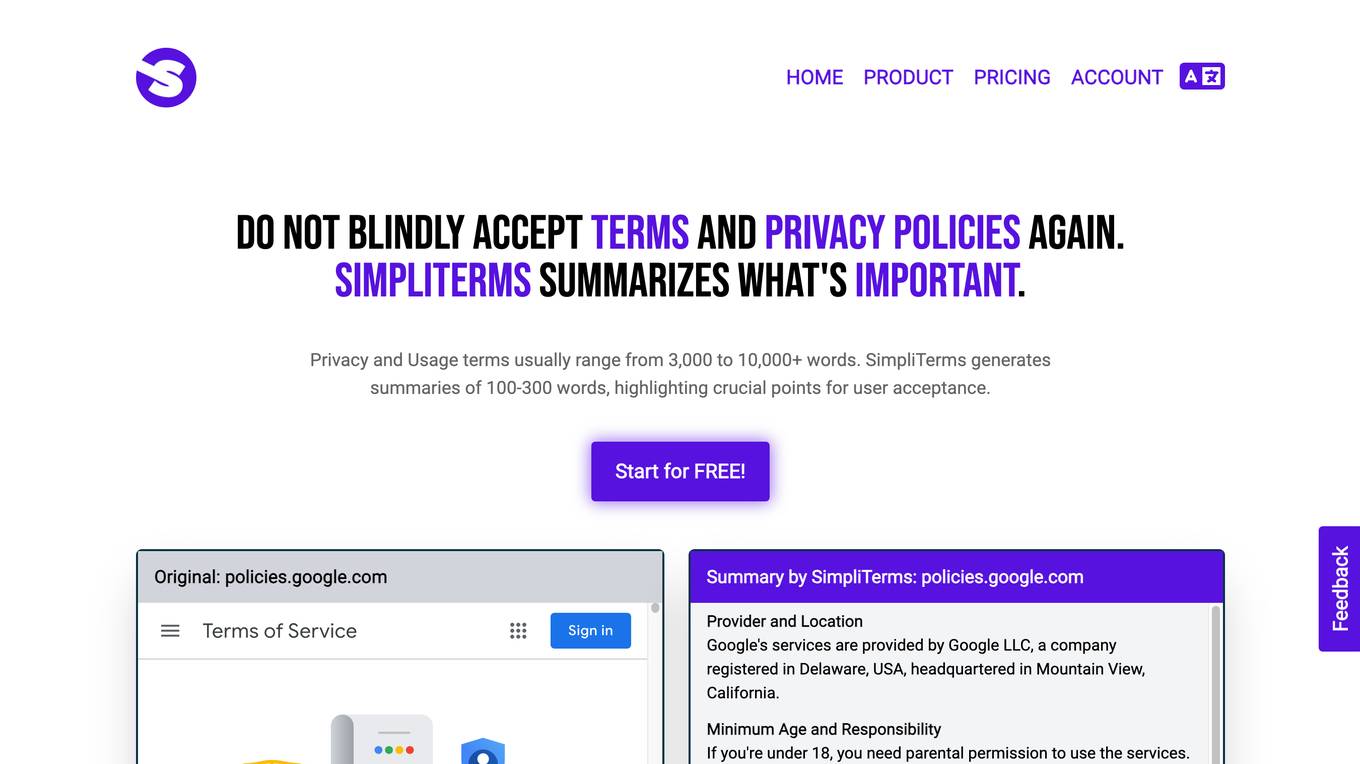

SimpliTerms

SimpliTerms is an AI-powered browser extension that simplifies and summarizes the complex and lengthy terms of use and privacy policies found on websites. By clicking on its icon, users can quickly understand and make informed decisions about the content they are accepting. The extension offers real-time summaries, saves time, helps avoid legal issues, and protects user privacy. It features an intuitive interface, supports multiple languages, and provides improved AI-generated responses.

Seventh Sense

Seventh Sense is an AI company focused on providing cutting-edge AI solutions for secure and private identity verification. Their innovative technologies, such as SenseCrypt, OpenCV FR, and SenseVantage, offer advanced biometric verification, face recognition, and AI video analysis. With a mission to make self-sovereign identity accessible to all, Seventh Sense ensures privacy, security, and compliance through their AI algorithms and cryptographic solutions.

SecureLabs

SecureLabs is an AI-powered platform that offers comprehensive security, privacy, and compliance management solutions for businesses. The platform integrates cutting-edge AI technology to provide continuous monitoring, incident response, risk mitigation, and compliance services. SecureLabs helps organizations stay current and compliant with major regulations such as HIPAA, GDPR, CCPA, and more. By leveraging AI agents, SecureLabs offers autonomous aids that tirelessly safeguard accounts, data, and compliance down to the account level. The platform aims to help businesses combat threats in an era of talent shortages while keeping costs down.

hCaptcha Enterprise

hCaptcha Enterprise is an AI-powered security platform designed to detect and deter human and automated threats through bot detection, fraud protection, and account defense. It offers highly accurate bot detection, fraud protection without false positives, and account takeover detection. The platform also provides privacy-preserving abuse detection with zero personally identifiable information (PII) required. hCaptcha Enterprise is trusted by category leaders in various industries worldwide, offering universal support, comprehensive security, and compliance with global privacy standards like GDPR, CCPA, and HIPAA.

Trust Stamp

Trust Stamp is an AI-powered digital identity solution that focuses on mitigating fraud through biometrics, privacy, and cybersecurity. The platform offers secure authentication and multi-factor authentication using biometric data, along with features like KYC/AML compliance, tokenization, and age estimation. Trust Stamp helps financial institutions, healthcare providers, dating platforms, and other industries prevent identity theft and fraud by providing innovative solutions for account recovery and user security.

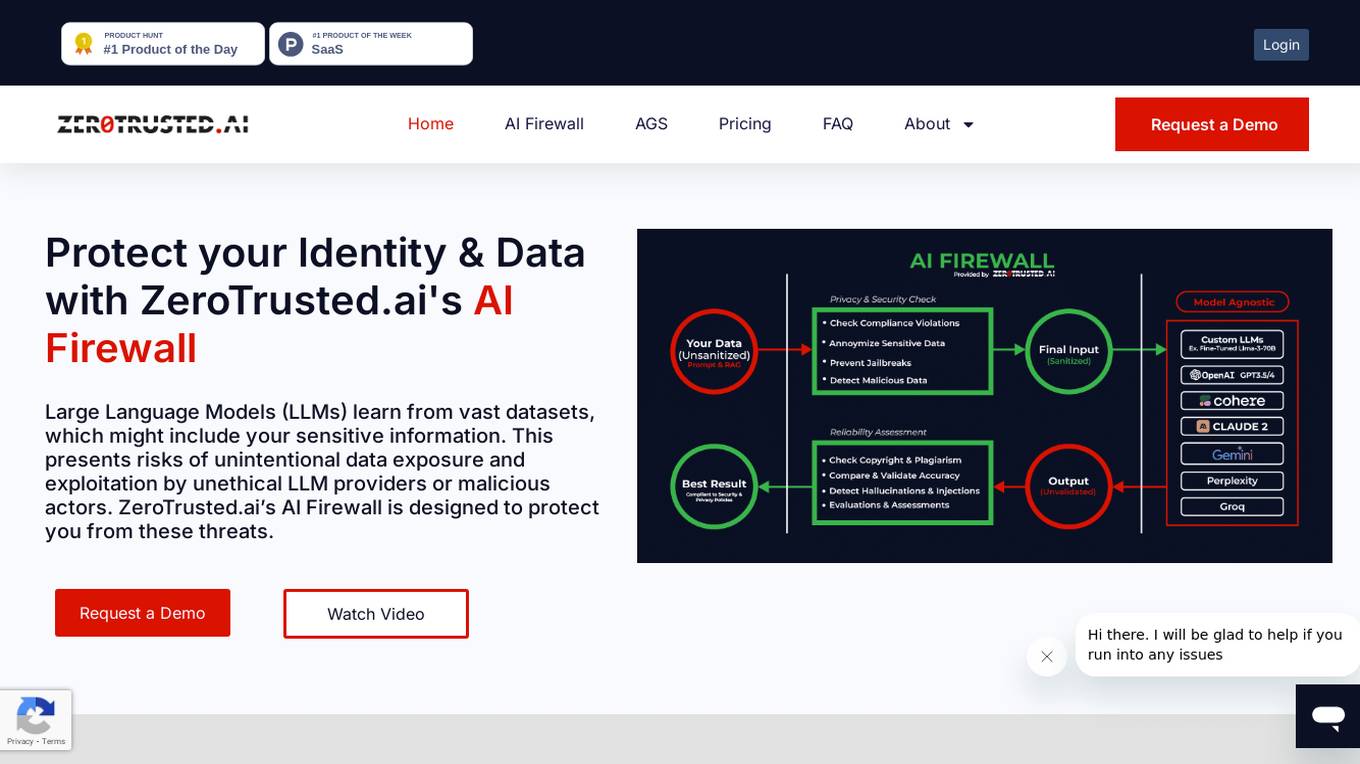

ZeroTrusted.ai

ZeroTrusted.ai is a cybersecurity platform that offers an AI Firewall to protect users from data exposure and exploitation by unethical providers or malicious actors. The platform provides features such as anonymity, security, reliability, integrations, and privacy to safeguard sensitive information. ZeroTrusted.ai empowers organizations with cutting-edge encryption techniques, AI & ML technologies, and decentralized storage capabilities for maximum security and compliance with regulations like PCI, GDPR, and NIST.

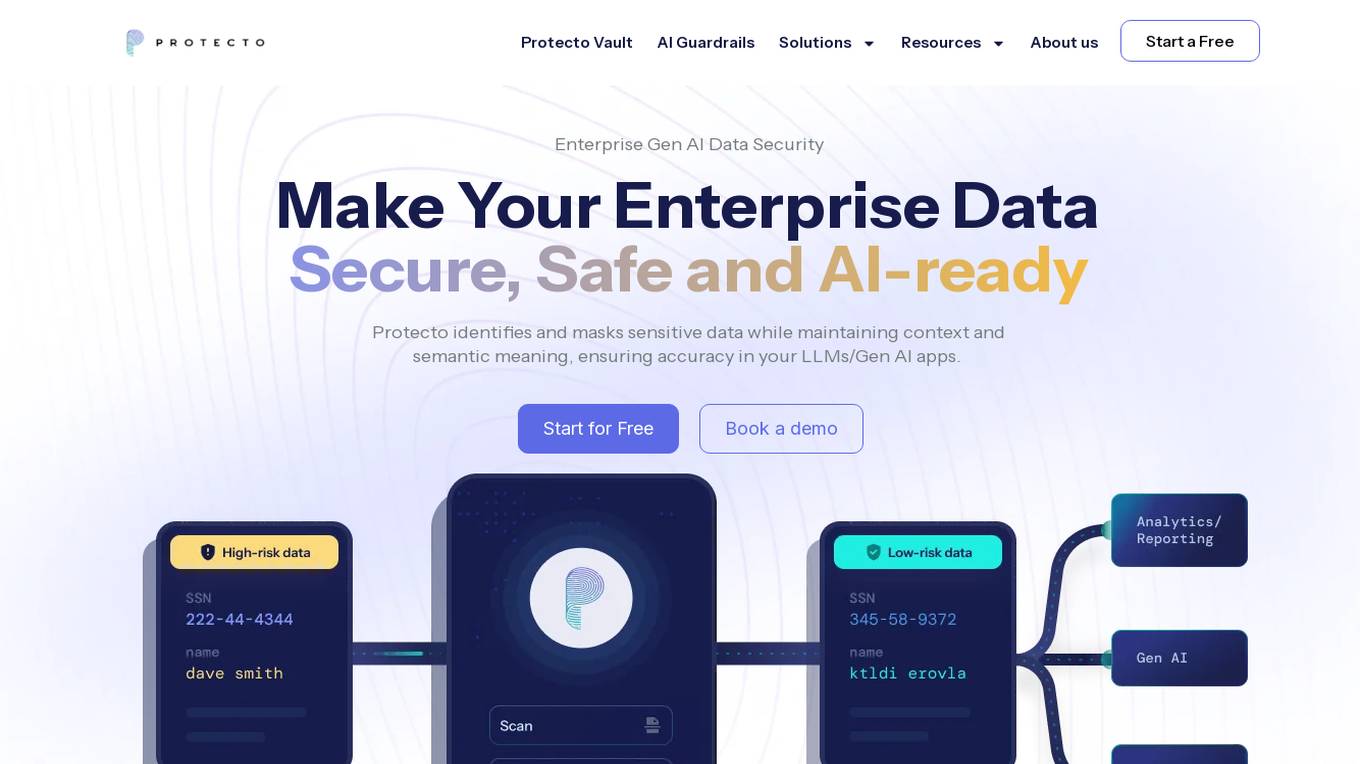

Protecto

Protecto is an Enterprise AI Data Security & Privacy Guardrails application that offers solutions for protecting sensitive data in AI applications. It helps organizations maintain data security and compliance with regulations like HIPAA, GDPR, and PCI. Protecto identifies and masks sensitive data while retaining context and semantic meaning, ensuring accuracy in AI applications. The application provides custom scans, unmasking controls, and versatile data protection across structured, semi-structured, and unstructured text. It is preferred by leading Gen AI companies for its robust and cost-effective data security solutions.

Research Center Trustworthy Data Science and Security

The Research Center Trustworthy Data Science and Security is a hub for interdisciplinary research focusing on building trust in artificial intelligence, machine learning, and cyber security. The center aims to develop trustworthy intelligent systems through research in trustworthy data analytics, explainable machine learning, and privacy-aware algorithms. By addressing the intersection of technological progress and social acceptance, the center seeks to enable private citizens to understand and trust technology in safety-critical applications.

Admorph AI

Admorph AI is a website that appears to be experiencing a privacy error related to its security certificate. The error message suggests that the connection is not private and warns of potential attackers trying to steal sensitive information such as passwords, messages, or credit cards. The site seems to be facing a security certificate issue with the domain *.up.railway.app, which may indicate a misconfiguration or a potential security threat. Users are advised to proceed with caution when accessing admorphai.com.

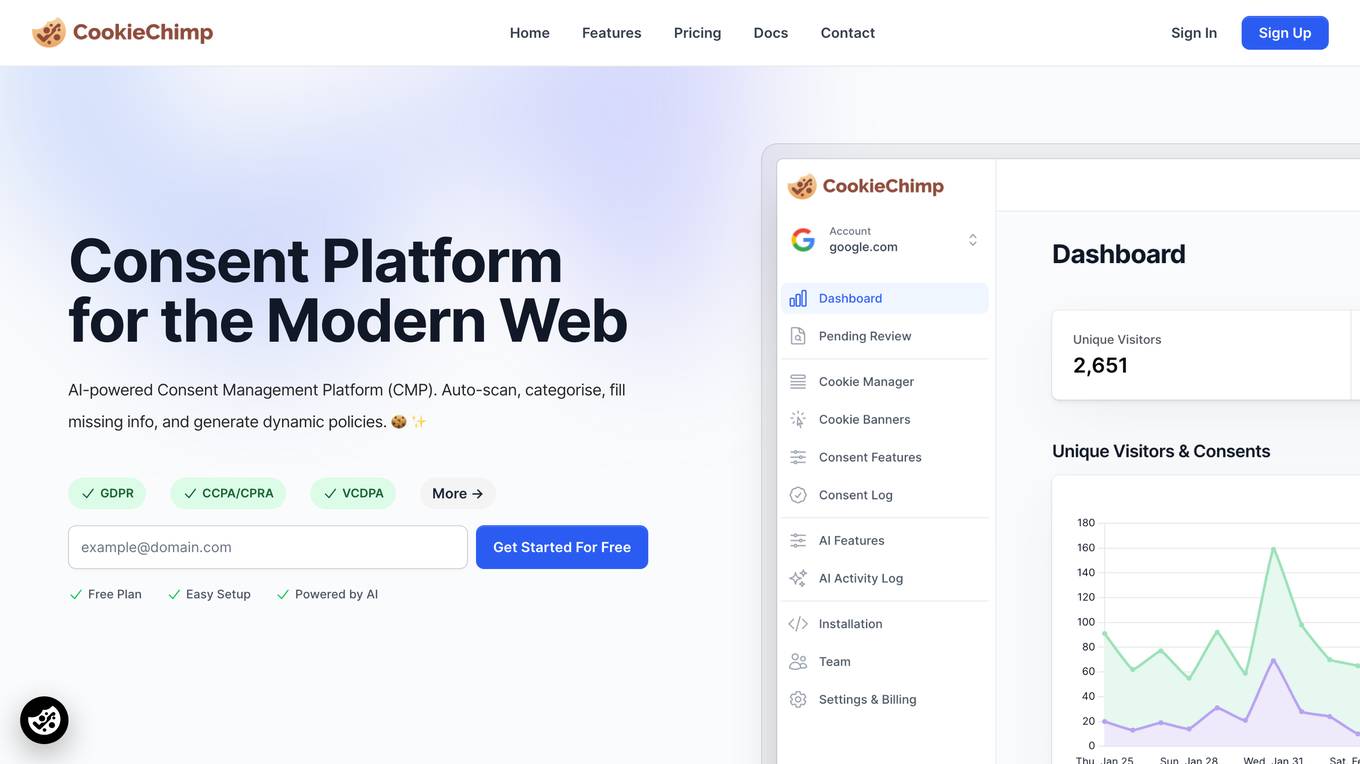

CookieChimp

CookieChimp is a modern consent management platform designed to help websites effortlessly collect user consent for 3rd party services while ensuring compliance with various privacy standards such as GDPR, CCPA, TCF 2.2, and Google Consent Mode. The platform offers features like dashboard monitoring, automated cookie scanning, customizable consent banners, integrations with CRM and marketing tools, global compliance solutions, robust consent logging, and AI-powered efficiency. CookieChimp is trusted by modern companies for its user-friendly interface, valuable insights, and quick setup process.

OpenBuckets

OpenBuckets is a web application designed to help users find and secure open buckets in cloud storage systems. It provides a user-friendly interface for scanning and identifying unprotected buckets, allowing users to take necessary actions to secure their data. With OpenBuckets, users can easily detect potential security risks and prevent unauthorized access to their sensitive information stored in the cloud.

ScamMinder

ScamMinder is an AI-powered tool designed to enhance online safety by analyzing and evaluating websites in real-time. It harnesses cutting-edge AI technology to provide users with a safety score and detailed insights, helping them detect potential risks and red flags. By utilizing advanced machine learning algorithms, ScamMinder assists users in making informed decisions about engaging with websites, businesses, and online entities. With a focus on trustworthiness assessment, the tool aims to protect users from deceptive traps and safeguard their digital presence.

MLSecOps

MLSecOps is an AI tool designed to drive the field of MLSecOps forward through high-quality educational resources and tools. It focuses on traditional cybersecurity principles, emphasizing people, processes, and technology. The MLSecOps Community educates and promotes the integration of security practices throughout the AI & machine learning lifecycle, empowering members to identify, understand, and manage risks associated with their AI systems.

Protect AI

Protect AI is a comprehensive platform designed to secure AI systems by providing visibility and manageability to detect and mitigate unique AI security threats. The platform empowers organizations to embrace a security-first approach to AI, offering solutions for AI Security Posture Management, ML model security enforcement, AI/ML supply chain vulnerability database, LLM security monitoring, and observability. Protect AI aims to safeguard AI applications and ML systems from potential vulnerabilities, enabling users to build, adopt, and deploy AI models confidently and at scale.

AImodelagency

Aimodelagency.com is an AI tool designed to provide robot challenge screen services. The website focuses on checking site connection security and requires cookies to be enabled in the browser settings. Users can ensure a secure connection by enabling cookies as per the site's instructions.

SurePath AI

SurePath AI is an AI platform company that governs workforce use of GenAI. It provides solutions for detecting usage, mitigating risks, and controlling enterprise data access. SurePath AI offers a secure path for GenAI adoption by spotting, securing, and streamlining GenAI use. The platform helps prevent data leaks and manage access to public models, private models, and enterprise data. It also provides insights and analytics into user activity, policy enforcement, and potential risks.

3 - Open Source Tools

deid-examples

This repository contains examples demonstrating how to use the Private AI REST API for identifying and replacing Personally Identifiable Information (PII) in text. The API supports over 50 entity types, such as Credit Card information and Social Security numbers, across 50 languages. Users can access documentation and the API reference on Private AI's website. The examples include common API call scenarios and use cases in both Python and JavaScript, with additional content related to PrivateGPT for secure work with Language Models (LLMs).

Equivariant-Encryption-for-AI

At Nesa, privacy is a critical objective. Equivariant Encryption (EE) is a solution developed to perform inference on neural networks without exposing input and output data. EE integrates specialized transformations for neural networks, maintaining data privacy while ensuring inference operates correctly on encrypted inputs. It provides the same latency as plaintext inference with no slowdowns and offers strong security guarantees. EE avoids the computational costs of traditional Homomorphic Encryption (HE) by preserving non-linear neural functions. The tool is designed for modern neural architectures, ensuring accuracy, scalability, and compatibility with existing pipelines.

top_secret

Top Secret is a Ruby gem designed to filter sensitive information from free text before sending it to external services or APIs, such as chatbots and LLMs. It provides default filters for credit cards, emails, phone numbers, social security numbers, people's names, and locations, with the ability to add custom filters. Users can configure the tool to handle sensitive information redaction, scan for sensitive data, batch process messages, and restore filtered text from external services. Top Secret uses Regex and NER filters to detect and redact sensitive information, allowing users to override default filters, disable specific filters, and add custom filters globally. The tool is suitable for applications requiring data privacy and security measures.
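The redact-then-restore flow described above can be sketched in a few lines. This is not Top Secret's API (the gem is Ruby and its interface is not shown here); it is a minimal Python illustration of the general technique, and the pattern set, the `redact` and `restore` function names, and the `[LABEL_N]` placeholder format are all assumptions made for the example. It covers regex filters only, not NER.

```python
import re

# Hypothetical, deliberately simple patterns for illustration only.
# Production-grade detection would need stricter rules and NER for names.
PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"),
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "CREDIT_CARD": re.compile(r"\b(?:\d{4}[ -]?){3}\d{4}\b"),
}


def redact(text):
    """Replace each match with a numbered placeholder.

    Returns the redacted text and a placeholder -> original value mapping.
    """
    mapping = {}
    counters = {}

    for label, pattern in PATTERNS.items():
        def substitute(match, label=label):
            value = match.group(0)
            # Reuse the same placeholder when a value appears more than once.
            for placeholder, original in mapping.items():
                if original == value:
                    return placeholder
            counters[label] = counters.get(label, 0) + 1
            placeholder = f"[{label}_{counters[label]}]"
            mapping[placeholder] = value
            return placeholder

        text = pattern.sub(substitute, text)

    return text, mapping


def restore(text, mapping):
    """Put the original values back into text returned by an external service."""
    for placeholder, original in mapping.items():
        text = text.replace(placeholder, original)
    return text


message = "Mail jane@example.com, SSN 123-45-6789."
redacted, mapping = redact(message)
print(redacted)
print(restore(redacted, mapping))
```

Only the redacted string would be sent to the external service; the mapping stays local so that placeholders in the response can be swapped back afterwards.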

11 - OpenAI Gpts

PrivacyGPT

Guides And Advise On Digital Privacy Ranging From The Well Known To The Underground....

👑 Data Privacy for Spa & Beauty Salons 👑

Spa and beauty salons collect customer information, including personal details and treatment records, necessitating a high level of confidentiality and data protection.

👑 Data Privacy for Public Transportation 👑

Public transport authorities collect data on travel patterns, fares, and sometimes personal details of passengers, necessitating strong privacy measures.

Privacy Policy Generator

Privacy expert generating tailored privacy policies. Note: this is not legal advice!