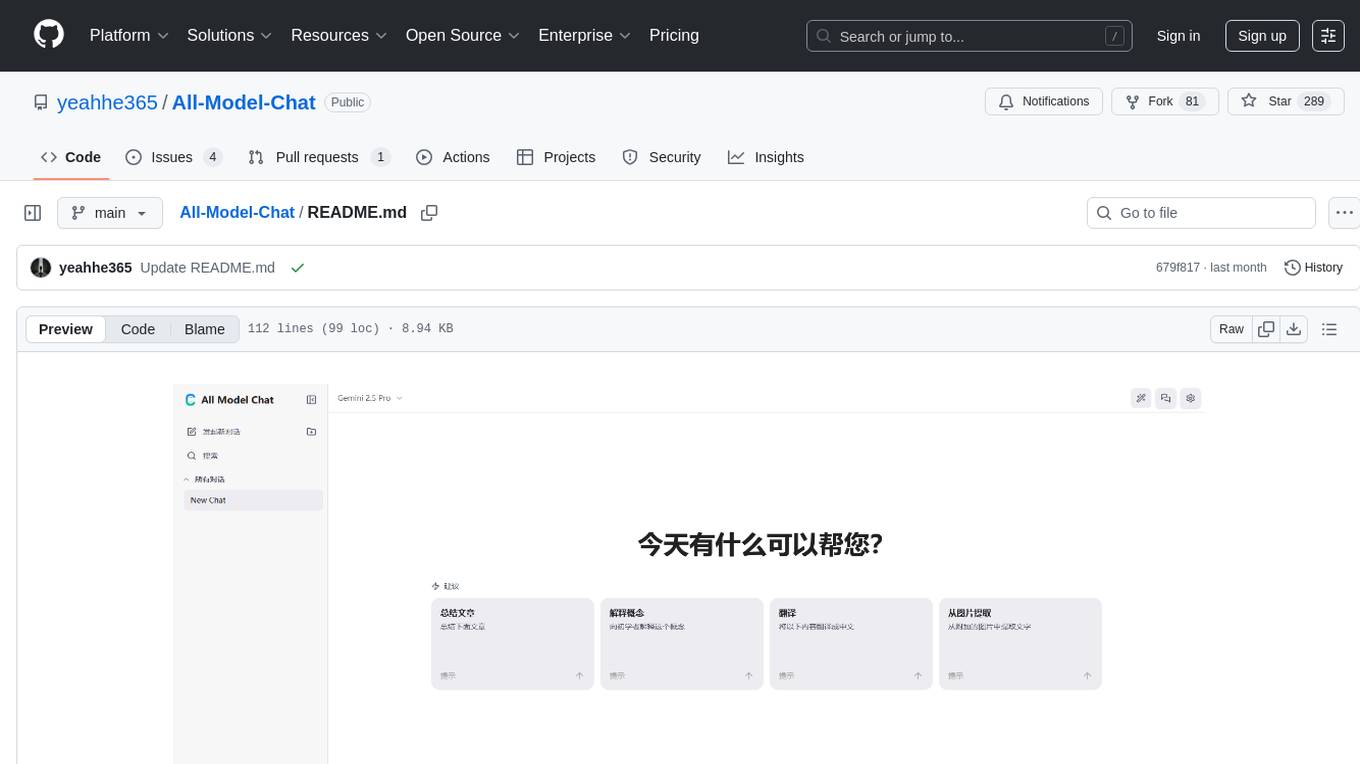

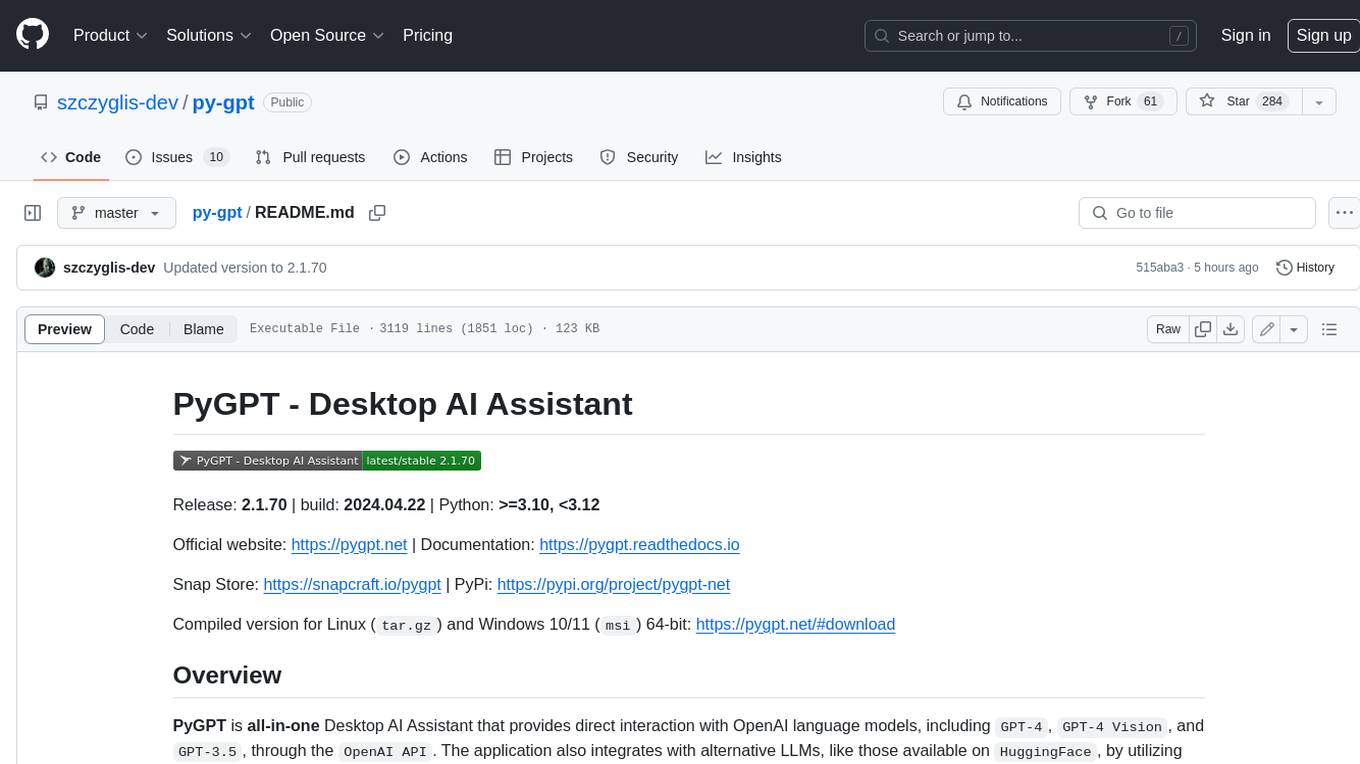

All-Model-Chat

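All Model Chat is a powerful chatbot interface with multimodal input, built for seamless interaction with the Google Gemini API family. It combines dynamic model selection, multimodal file input, streaming responses, comprehensive chat history management, and extensive customization options.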

Stars: 297

All Model Chat is a feature-rich, highly customizable web chat application designed specifically for the Google Gemini API family. It integrates dynamic model selection, multimodal file input, streaming responses, comprehensive chat history management, and extensive customization options to provide an unparalleled AI interactive experience.

README:

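- 🤖 Broad model support: native support for the Gemini family (`2.5 Pro`, `Flash`, `Flash Lite`), the Imagen image-generation models (`3.0`, `4.0`), and text-to-speech (TTS) models, making it a genuinely multimodal AI application platform.

- 🛠️ Powerful toolset: integrates Google's tools to extend what the model can do:

  - 🌐 Web search: lets the model access real-time information to answer questions about current events, with cited sources.

  - 💻 Code execution: lets the model run code to solve computational problems and analyze data.

  - 🔗 URL context: lets the model read and understand the content of URLs you provide.

- ⚙️ Advanced AI parameter control: fine-tune `Temperature` and `Top-P` to balance creativity against determinism in replies. You can also set a custom system instruction (system prompt) for any conversation to shape the AI's personality and behavior.

- 🤔 Visible "thinking process": inspect the intermediate reasoning steps that models such as Gemini 2.5 Flash/Pro take before producing an answer. This is useful for debugging and for understanding the AI's reasoning, and you can configure a "thinking budget" to trade quality against speed.

- 🎙️ Speech-to-text (STT): uses Gemini models to transcribe your speech into text input in real time, with far better accuracy than the browser's standard API. You can choose which Gemini model handles transcription in the settings.

- 🔊 Text-to-speech (TTS): converts the model's text replies into fluent speech with one click, with several high-quality voices to choose from, so you can listen to the AI.

- 🎨 Canvas Assistant: a purpose-built system instruction that turns the AI into a front-end development assistant that generates rich, interactive HTML/SVG content, such as charts built with ECharts or flowcharts generated with Graphviz.

- 📎 Rich file support: upload and process many file types, including images, video, audio, PDF documents, and a range of code and text files.

- 🖐️ Multiple upload methods: drag and drop, paste from the clipboard, use the file picker, or capture directly with the camera or microphone.

- ✍️ Create text files on the fly: create and edit text files inside the app, without leaving it, and submit them to the model as context.

- 🆔 Reference by file ID: advanced users can reference files already uploaded to the Gemini API directly (using their `files/...` ID), which avoids re-uploading and saves time and bandwidth.

- 🖼️ Interactive preview: zoom and pan uploaded images inside the app, or preview AI-generated HTML in an interactive modal, including a true full-screen mode.

- 📊 Smart file management: real-time upload progress bars, cancellable in-progress uploads, and clear error messages keep file handling under your control.

- 📚 Persistent chat history: all conversations are saved automatically to your browser's local storage (`localStorage`), which keeps the data private and lets you revisit past exchanges at any time.

- 📂 Conversation groups: organize chat sessions into collapsible groups so they are easier to manage and find.

- 🎭 Scenario management: create, save, import, and export "chat templates". These let you quickly set up a complex conversation context (for example, a programming problem or a role-play).

- ✏️ Full message control: edit, delete, or retry any message. Smart editing (editing a user prompt) automatically truncates the conversation at that point and resubmits it, so the context stays correct.

- 📥 Export conversations and messages: export a whole conversation as a PNG image, an HTML file, or a TXT file. A single model reply can also be exported on its own as PNG or HTML.

- ⌨️ Keyboard shortcuts: shortcuts for creating a new chat, switching models, opening the log viewer, and more.

- 🛠️ Log viewer and debugging tools: the built-in log viewer gives advanced users insight into the app's internal behavior, API call details, and API key usage (when multiple keys are provided).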
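The smart-editing behavior in the list above (editing a user prompt truncates the conversation at that point and resubmits it) can be illustrated with a small sketch. This is not the app's actual code: the `ChatMessage` shape and the `truncateAtEdit` helper are hypothetical names chosen for illustration, and the real types in `src/types.ts` may look different.

```typescript
// Hypothetical message shape, for illustration only.
interface ChatMessage {
  id: string;
  role: "user" | "model";
  text: string;
}

/**
 * Returns the messages to resubmit after a user prompt is edited:
 * everything before the edited prompt, plus the edited prompt itself.
 * Later messages are dropped because they responded to the old wording.
 * The input array is not mutated.
 */
function truncateAtEdit(
  messages: ChatMessage[],
  messageId: string,
  newText: string,
): ChatMessage[] {
  const index = messages.findIndex((m) => m.id === messageId);
  const target = messages[index];
  if (index === -1 || target === undefined) {
    throw new Error(`No message with id ${messageId}`);
  }
  if (target.role !== "user") {
    throw new Error("Only user prompts are truncated and resubmitted");
  }
  return [...messages.slice(0, index), { ...target, text: newText }];
}

const conversation: ChatMessage[] = [
  { id: "u1", role: "user", text: "Explain closures in JavaScript." },
  { id: "m1", role: "model", text: "A closure is ..." },
  { id: "u2", role: "user", text: "Show an example in Python." },
  { id: "m2", role: "model", text: "def outer(): ..." },
];

const resubmitted = truncateAtEdit(conversation, "u2", "Show an example in Rust.");
console.log(resubmitted.map((m) => m.id)); // [ 'u1', 'm1', 'u2' ]
```

Dropping everything after the edited prompt means the model never sees a reply that answered the old wording, which is one way to read "maintains context correctly".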

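The app is designed to run directly in the browser; no backend or installation is required.

- Open the app: visit all-model-chat.pages.dev.

- Open the settings: click the gear icon (⚙️) in the top-right corner of the page.

- Enable custom configuration: in the "API Configuration" section, turn on the "Use custom API configuration" toggle.

- Enter your API key: paste your Google Gemini API key into the text box. You can get a key from Google AI Studio. To rotate between multiple keys, enter one key per line.

- Save and start chatting: click "Save". Your key is stored in your browser's `localStorage` and is never sent anywhere else.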
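The "one key per line" input from the steps above could be handled roughly as follows. The README does not say how the app rotates keys, so the round-robin strategy here is an assumption, and `parseApiKeys` and `createKeyRotator` are hypothetical helper names rather than functions from the project.

```typescript
/** Splits the text-box content into keys: one per line, trimmed, blank lines dropped. */
function parseApiKeys(raw: string): string[] {
  return raw
    .split(/\r?\n/)
    .map((line) => line.trim())
    .filter((line) => line.length > 0);
}

/**
 * Returns a function that hands out keys in round-robin order.
 * Round-robin is an assumed strategy; the app may choose keys differently.
 */
function createKeyRotator(keys: string[]): () => string {
  if (keys.length === 0) {
    throw new Error("At least one API key is required");
  }
  let cursor = 0;
  return () => {
    const key = keys[cursor] as string;
    cursor = (cursor + 1) % keys.length;
    return key;
  };
}

// Placeholder strings stand in for real keys.
const nextKey = createKeyRotator(parseApiKeys("key-a\n\n  key-b  \n"));
console.log(nextKey(), nextKey(), nextKey()); // key-a key-b key-a
```

In a browser, the raw text could be kept with `localStorage.setItem` and parsed again on load; the storage key name would be the app's own choice.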

- Framework: React 19 & TypeScript

- AI SDK: `@google/genai`

- Styling: Tailwind CSS (via CDN) & CSS variables (for theming)

- Markdown & rendering: `react-markdown`, `remark-gfm`, `remark-math`, `rehype-highlight`, `rehype-katex`, `highlight.js`, `DOMPurify`, `mermaid`, `viz.js`

- Image export: `html2canvas`

- Module loading: modern ES modules & Import Maps (via `esm.sh`)

- Icons: Lucide React

- Offline support: Service Worker (`sw.js`) that caches the app shell

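    All-Model-Chat/
    ├── public/ # Static assets (manifest.json, sw.js)
    ├── src/
    │ ├── components/ # React UI components (header, chat input, modals, etc.)
    │ │ ├── chat/ # Chat input subcomponents
    │ │ ├── layout/ # Layout components
    │ │ ├── message/ # Message rendering subcomponents (code blocks, charts)
    │ │ ├── modals/ # App-level modals
    │ │ ├── shared/ # Reusable shared components
    │ │ └── settings/ # Settings panel modules
    │ ├── constants/ # App-wide constants (app, themes, files, models)
    │ ├── hooks/ # ✨ Where the core application logic lives
    │ │ ├── useChat.ts # Main hook that orchestrates all features
    │ │ ├── useAppSettings.ts # Manages global settings, theme, and language
    │ │ └── ... (other custom hooks)
    │ ├── services/ # Wrappers around external services
    │ │ ├── api/ # Modular API call functions
    │ │ ├── geminiService.ts # Wraps all calls to the Google GenAI API
    │ │ └── logService.ts # In-app logging service for the log viewer
    │ ├── utils/ # Utility functions
    │ │ ├── translations/ # Language translation files
    │ │ └── ... (API, domain, and UI utility functions)
    │ ├── App.tsx # Application root component
    │ ├── index.tsx # React application entry point
    │ └── types.ts # Core TypeScript type definitions
    │
    ├── index.html # Main HTML file, including import maps and core styles
    └── README.md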

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for All-Model-Chat

Similar Open Source Tools

All-Model-Chat

All Model Chat is a feature-rich, highly customizable web chat application designed specifically for the Google Gemini API family. It integrates dynamic model selection, multimodal file input, streaming responses, comprehensive chat history management, and extensive customization options to provide an unparalleled AI interactive experience.

Rodel.Agent

Rodel Agent is a Windows desktop application that integrates chat, text-to-image, text-to-speech, and machine translation services, providing users with a comprehensive desktop AI experience. The application supports mainstream AI services and aims to enhance user interaction through various AI functionalities.

nekro-agent

Nekro Agent is an AI chat plugin and proxy execution bot that is highly scalable, offers high freedom, and has minimal deployment requirements. It features context-aware chat for group/private chats, custom character settings, sandboxed execution environment, interactive image resource handling, customizable extension development interface, easy deployment with docker-compose, integration with Stable Diffusion for AI drawing capabilities, support for various file types interaction, hot configuration updates and command control, native multimodal understanding, visual application management control panel, CoT (Chain of Thought) support, self-triggered timers and holiday greetings, event notification understanding, and more. It allows for third-party extensions and AI-generated extensions, and includes features like automatic context trigger based on LLM, and a variety of basic commands for bot administrators.

llama.ui

llama.ui is an open-source desktop application that provides a beautiful, user-friendly interface for interacting with large language models powered by llama.cpp. It is designed for simplicity and privacy, allowing users to chat with powerful quantized models on their local machine without the need for cloud services. The project offers multi-provider support, conversation management with indexedDB storage, rich UI components including markdown rendering and file attachments, advanced features like PWA support and customizable generation parameters, and is privacy-focused with all data stored locally in the browser.

OllamaSharp

OllamaSharp is a .NET binding for the Ollama API, providing an intuitive API client to interact with Ollama. It offers support for all Ollama API endpoints, real-time streaming, progress reporting, and an API console for remote management. Users can easily set up the client, list models, pull models with progress feedback, stream completions, and build interactive chats. The project includes a demo console for exploring and managing the Ollama host.

AIaW

AIaW is a next-generation LLM client with full functionality, lightweight, and extensible. It supports various basic functions such as streaming transfer, image uploading, and latex formulas. The tool is cross-platform with a responsive interface design. It supports multiple service providers like OpenAI, Anthropic, and Google. Users can modify questions, regenerate in a forked manner, and visualize conversations in a tree structure. Additionally, it offers features like file parsing, video parsing, plugin system, assistant market, local storage with real-time cloud sync, and customizable interface themes. Users can create multiple workspaces, use dynamic prompt word variables, extend plugins, and benefit from detailed design elements like real-time content preview, optimized code pasting, and support for various file types.

chatluna

Chatluna is a machine learning model plugin that provides chat services with large language models. It is highly extensible, supports multiple output formats, and offers features like custom conversation presets, rate limiting, and context awareness. Users can deploy Chatluna under Koishi without additional configuration. The plugin supports various models/platforms like OpenAI, Azure OpenAI, Google Gemini, and more. It also provides preset customization using YAML files and allows for easy forking and development within Koishi projects. However, the project lacks web UI, HTTP server, and project documentation, inviting contributions from the community.

design-studio

Tiledesk Design Studio is an open-source, no-code development platform for creating chatbots and conversational apps. It offers a user-friendly, drag-and-drop interface with pre-ready actions and integrations. The platform combines the power of LLM/GPT AI with a flexible 'graph' approach for creating conversations and automations with ease. Users can automate customer conversations, prototype conversations, integrate ChatGPT, enhance user experience with multimedia, provide personalized product recommendations, set conditions, use random replies, connect to other tools like HubSpot CRM, integrate with WhatsApp, send emails, and seamlessly enhance existing setups.

google_workspace_mcp

The Google Workspace MCP Server is a production-ready server that integrates major Google Workspace services with AI assistants. It supports single-user and multi-user authentication via OAuth 2.1, making it a powerful backend for custom applications. Built with FastMCP for optimal performance, it features advanced authentication handling, service caching, and streamlined development patterns. The server provides full natural language control over Google Calendar, Drive, Gmail, Docs, Sheets, Slides, Forms, Tasks, and Chat through all MCP clients, AI assistants, and developer tools. It supports free Google accounts and Google Workspace plans with expanded app options like Chat & Spaces. The server also offers private cloud instance options.

odoo-llm

This repository provides a comprehensive framework for integrating Large Language Models (LLMs) into Odoo. It enables seamless interaction with AI providers like OpenAI, Anthropic, Ollama, and Replicate for chat completions, text embeddings, and more within the Odoo environment. The architecture includes external AI clients connecting via `llm_mcp_server` and Odoo AI Chat with built-in chat interface. The core module `llm` offers provider abstraction, model management, and security, along with tools for CRUD operations and domain-specific tool packs. Various AI providers, infrastructure components, and domain-specific tools are available for different tasks such as content generation, knowledge base management, and AI assistants creation.

opensearch-ai

OpenSearch GPT is a personalized AI search engine that adapts to user interests while browsing the web. It utilizes advanced technologies like Mem0 for automatic memory collection, Vercel AI ADK for AI applications, Next.js for React framework, Tailwind CSS for styling, Shadcn UI for UI components, Cobe for globe animation, GPT-4o-mini for AI capabilities, and Cloudflare Pages for web application deployment. Developed by Supermemory.ai team.

llms

llms.py is a lightweight CLI, API, and ChatGPT-like alternative to Open WebUI for accessing multiple LLMs. It operates entirely offline, ensuring all data is kept private in browser storage. The tool provides a convenient way to interact with various LLM models without the need for an internet connection, prioritizing user privacy and data security.

SolarLLMChatDemo

SolarLLM Chat Demo is a repository showcasing a chat demo using Streamlit and Gradio. It provides a visual demonstration of chat functionality using these tools. For more detailed usage examples, users can refer to the SolarLLM Cookbook available at the provided GitHub link.

memfree

MemFree is an open-source hybrid AI search engine that allows users to simultaneously search their personal knowledge base (bookmarks, notes, documents, etc.) and the Internet. It features a self-hosted super fast serverless vector database, local embedding and rerank service, one-click Chrome bookmarks index, and full code open source. Users can contribute by opening issues for bugs or making pull requests for new features or improvements.

tunacode

TunaCode CLI is an AI-powered coding assistant that provides a command-line interface for developers to enhance their coding experience. It offers features like model selection, parallel execution for faster file operations, and various commands for code management. The tool aims to improve coding efficiency and provide a seamless coding environment for developers.

py-gpt

Py-GPT is a Python library that provides an easy-to-use interface for OpenAI's GPT-3 API. It allows users to interact with the powerful GPT-3 model for various natural language processing tasks. With Py-GPT, developers can quickly integrate GPT-3 capabilities into their applications, enabling them to generate text, answer questions, and more with just a few lines of code.

For similar tasks

All-Model-Chat

All Model Chat is a feature-rich, highly customizable web chat application designed specifically for the Google Gemini API family. It integrates dynamic model selection, multimodal file input, streaming responses, comprehensive chat history management, and extensive customization options to provide an unparalleled AI interactive experience.

ai-chatbot

Next.js AI Chatbot is an open-source app template for building AI chatbots using Next.js, Vercel AI SDK, OpenAI, and Vercel KV. It includes features like Next.js App Router, React Server Components, Vercel AI SDK for streaming chat UI, support for various AI models, Tailwind CSS styling, Radix UI for headless components, chat history management, rate limiting, session storage with Vercel KV, and authentication with NextAuth.js. The template allows easy deployment to Vercel and customization of AI model providers.

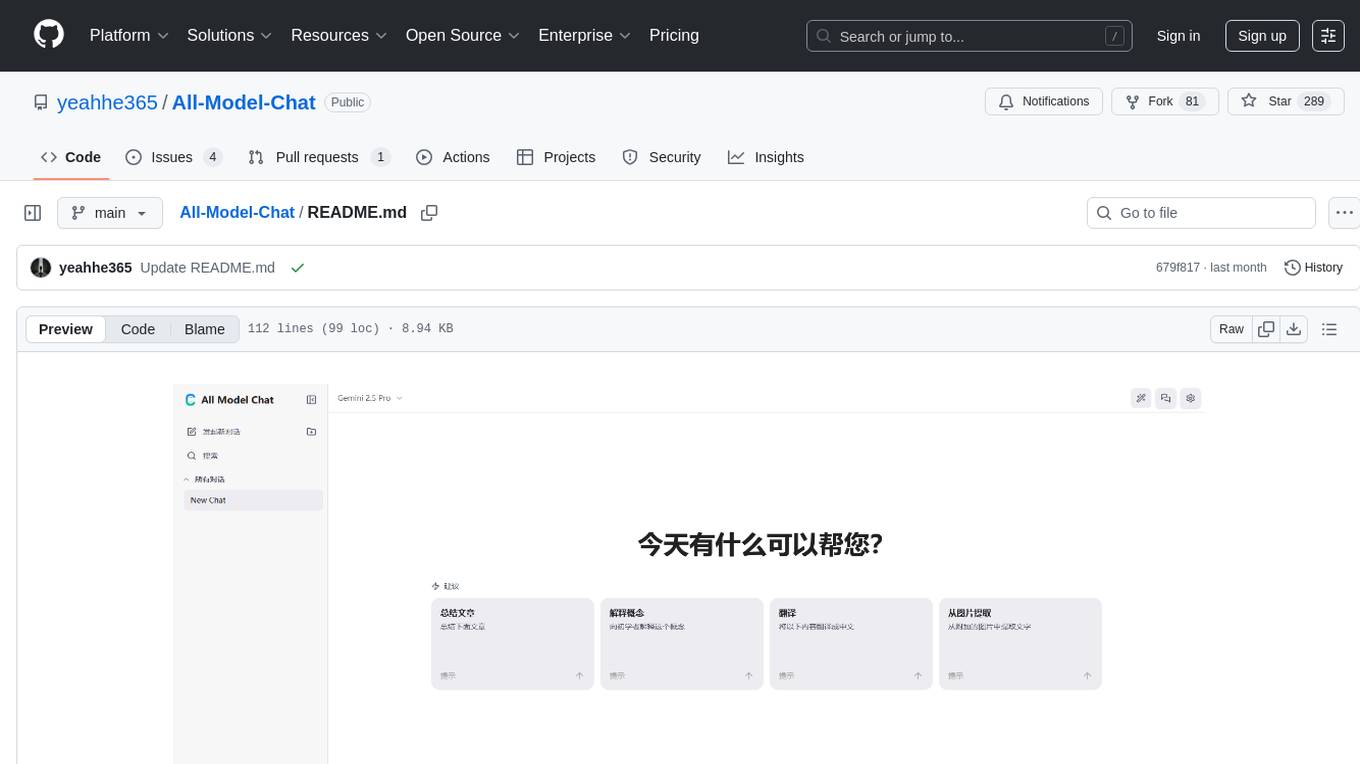

chatty

Chatty is a private AI tool that runs large language models natively and privately in the browser, ensuring in-browser privacy and offline usability. It supports chat history management, open-source models like Gemma and Llama2, responsive design, intuitive UI, markdown & code highlight, chat with files locally, custom memory support, export chat messages, voice input support, response regeneration, and light & dark mode. It aims to bring popular AI interfaces like ChatGPT and Gemini into an in-browser experience.

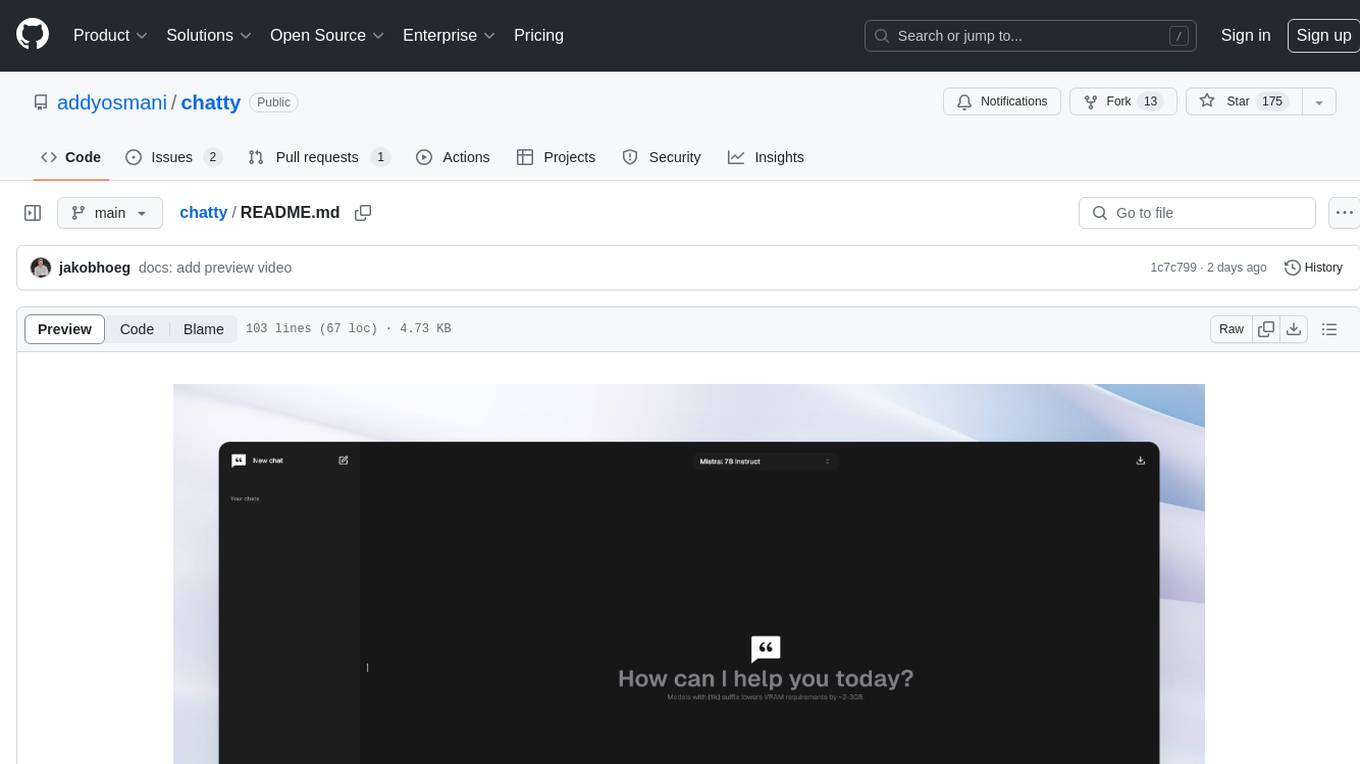

ollama-gui

Ollama GUI is a web interface for ollama.ai, a tool that enables running Large Language Models (LLMs) on your local machine. It provides a user-friendly platform for chatting with LLMs and accessing various models for text generation. Users can easily interact with different models, manage chat history, and explore available models through the web interface. The tool is built with Vue.js, Vite, and Tailwind CSS, offering a modern and responsive design for seamless user experience.

CodeFuse-muAgent

CodeFuse-muAgent is a Multi-Agent framework designed to streamline Standard Operating Procedure (SOP) orchestration for agents. It integrates toolkits, code libraries, knowledge bases, and sandbox environments for rapid construction of complex Multi-Agent interactive applications. The framework enables efficient execution and handling of multi-layered and multi-dimensional tasks.

pyqt-openai

VividNode is a cross-platform AI desktop chatbot application for LLM such as GPT, Claude, Gemini, Llama chatbot interaction and image generation. It offers customizable features, local chat history, and enhanced performance without requiring a browser. The application is powered by GPT4Free and allows users to interact with chatbots and generate images seamlessly. VividNode supports Windows, Mac, and Linux, securely stores chat history locally, and provides features like chat interface customization, image generation, focus and accessibility modes, and extensive customization options with keyboard shortcuts for efficient operations.

LLamaWorker

LLamaWorker is a HTTP API server developed to provide an OpenAI-compatible API for integrating Large Language Models (LLM) into applications. It supports multi-model configuration, streaming responses, text embedding, chat templates, automatic model release, function calls, API key authentication, and test UI. Users can switch models, complete chats and prompts, manage chat history, and generate tokens through the test UI. Additionally, LLamaWorker offers a Vulkan compiled version for download and provides function call templates for testing. The tool supports various backends and provides API endpoints for chat completion, prompt completion, embeddings, model information, model configuration, and model switching. A Gradio UI demo is also available for testing.

lmstudio-python

LM Studio Python SDK provides a convenient API for interacting with LM Studio instance, including text completion and chat response functionalities. The SDK allows users to manage websocket connections and chat history easily. It also offers tools for code consistency checks, automated testing, and expanding the API.

For similar jobs

promptflow

**Prompt flow** is a suite of development tools designed to streamline the end-to-end development cycle of LLM-based AI applications, from ideation, prototyping, testing, evaluation to production deployment and monitoring. It makes prompt engineering much easier and enables you to build LLM apps with production quality.

deepeval

DeepEval is a simple-to-use, open-source LLM evaluation framework specialized for unit testing LLM outputs. It incorporates various metrics such as G-Eval, hallucination, answer relevancy, RAGAS, etc., and runs locally on your machine for evaluation. It provides a wide range of ready-to-use evaluation metrics, allows for creating custom metrics, integrates with any CI/CD environment, and enables benchmarking LLMs on popular benchmarks. DeepEval is designed for evaluating RAG and fine-tuning applications, helping users optimize hyperparameters, prevent prompt drifting, and transition from OpenAI to hosting their own Llama2 with confidence.

MegaDetector

MegaDetector is an AI model that identifies animals, people, and vehicles in camera trap images (which also makes it useful for eliminating blank images). This model is trained on several million images from a variety of ecosystems. MegaDetector is just one of many tools that aims to make conservation biologists more efficient with AI. If you want to learn about other ways to use AI to accelerate camera trap workflows, check out our of the field, affectionately titled "Everything I know about machine learning and camera traps".

leapfrogai

LeapfrogAI is a self-hosted AI platform designed to be deployed in air-gapped resource-constrained environments. It brings sophisticated AI solutions to these environments by hosting all the necessary components of an AI stack, including vector databases, model backends, API, and UI. LeapfrogAI's API closely matches that of OpenAI, allowing tools built for OpenAI/ChatGPT to function seamlessly with a LeapfrogAI backend. It provides several backends for various use cases, including llama-cpp-python, whisper, text-embeddings, and vllm. LeapfrogAI leverages Chainguard's apko to harden base python images, ensuring the latest supported Python versions are used by the other components of the stack. The LeapfrogAI SDK provides a standard set of protobuffs and python utilities for implementing backends and gRPC. LeapfrogAI offers UI options for common use-cases like chat, summarization, and transcription. It can be deployed and run locally via UDS and Kubernetes, built out using Zarf packages. LeapfrogAI is supported by a community of users and contributors, including Defense Unicorns, Beast Code, Chainguard, Exovera, Hypergiant, Pulze, SOSi, United States Navy, United States Air Force, and United States Space Force.

llava-docker

This Docker image for LLaVA (Large Language and Vision Assistant) provides a convenient way to run LLaVA locally or on RunPod. LLaVA is a powerful AI tool that combines natural language processing and computer vision capabilities. With this Docker image, you can easily access LLaVA's functionalities for various tasks, including image captioning, visual question answering, text summarization, and more. The image comes pre-installed with LLaVA v1.2.0, Torch 2.1.2, xformers 0.0.23.post1, and other necessary dependencies. You can customize the model used by setting the MODEL environment variable. The image also includes a Jupyter Lab environment for interactive development and exploration. Overall, this Docker image offers a comprehensive and user-friendly platform for leveraging LLaVA's capabilities.

carrot

The 'carrot' repository on GitHub provides a list of free and user-friendly ChatGPT mirror sites for easy access. The repository includes sponsored sites offering various GPT models and services. Users can find and share sites, report errors, and access stable and recommended sites for ChatGPT usage. The repository also includes a detailed list of ChatGPT sites, their features, and accessibility options, making it a valuable resource for ChatGPT users seeking free and unlimited GPT services.

TrustLLM

TrustLLM is a comprehensive study of trustworthiness in LLMs, including principles for different dimensions of trustworthiness, established benchmark, evaluation, and analysis of trustworthiness for mainstream LLMs, and discussion of open challenges and future directions. Specifically, we first propose a set of principles for trustworthy LLMs that span eight different dimensions. Based on these principles, we further establish a benchmark across six dimensions including truthfulness, safety, fairness, robustness, privacy, and machine ethics. We then present a study evaluating 16 mainstream LLMs in TrustLLM, consisting of over 30 datasets. The document explains how to use the trustllm python package to help you assess the performance of your LLM in trustworthiness more quickly. For more details about TrustLLM, please refer to project website.

AI-YinMei

AI-YinMei is an AI virtual anchor Vtuber development tool (N card version). It supports fastgpt knowledge base chat dialogue, a complete set of solutions for LLM large language models: [fastgpt] + [one-api] + [Xinference], supports docking bilibili live broadcast barrage reply and entering live broadcast welcome speech, supports Microsoft edge-tts speech synthesis, supports Bert-VITS2 speech synthesis, supports GPT-SoVITS speech synthesis, supports expression control Vtuber Studio, supports painting stable-diffusion-webui output OBS live broadcast room, supports painting picture pornography public-NSFW-y-distinguish, supports search and image search service duckduckgo (requires magic Internet access), supports image search service Baidu image search (no magic Internet access), supports AI reply chat box [html plug-in], supports AI singing Auto-Convert-Music, supports playlist [html plug-in], supports dancing function, supports expression video playback, supports head touching action, supports gift smashing action, supports singing automatic start dancing function, chat and singing automatic cycle swing action, supports multi scene switching, background music switching, day and night automatic switching scene, supports open singing and painting, let AI automatically judge the content.