AI-windows-whl

Pre-compiled Python wheels for Flash Attention, SageAttention, NATTEN, xformers, etc.

Stars: 147

AI-windows-whl is a curated collection of pre-compiled Python wheels for difficult-to-install AI/ML libraries on Windows. It addresses the common pain point of building complex Python packages from source on Windows by providing direct links to pre-compiled `.whl` files for essential libraries like PyTorch, Flash Attention, xformers, SageAttention, NATTEN, Triton, bitsandbytes, and other packages. The goal is to save time for AI enthusiasts and developers on Windows, allowing them to focus on creating amazing things with AI.



This repository was created to address a common pain point for AI enthusiasts and developers on the Windows platform: building complex Python packages from source. Libraries like flash-attention and xformers are essential for high-performance AI tasks but often lack official pre-built wheels for Windows, forcing users into a complicated and error-prone compilation process.

The goal here is to provide a centralized, up-to-date collection of direct links to pre-compiled .whl files for these libraries, primarily for the ComfyUI community and other PyTorch users on Windows. This saves you time and lets you focus on what's important: creating amazing things with AI.

Follow these simple steps to use the wheels from this repository.

- Python for Windows: Ensure you have a compatible Python version installed (PyTorch currently supports Python 3.9 - 3.12 on Windows). You can get it from the official Python website.
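Before choosing a wheel, it helps to know which tags your interpreter and PyTorch build correspond to. The following is a minimal sketch, not part of this repository: the helper name `describe_environment` is hypothetical, the `cp` prefix assumes CPython, and the PyTorch fields stay empty unless `torch` is already installed.

```python
import sys


def describe_environment():
    """Collect the values needed to pick a matching wheel row."""
    info = {
        # CPython tag as it appears in wheel filenames, e.g. "cp312"
        "python_tag": f"cp{sys.version_info.major}{sys.version_info.minor}",
        "platform": sys.platform,  # "win32" on Windows, including 64-bit
        "torch": None,
        "cuda": None,
        "cxx11_abi": None,
    }
    try:
        import torch  # optional: only present after installing PyTorch
    except ImportError:
        return info
    info["torch"] = torch.__version__  # version string of the installed build
    info["cuda"] = torch.version.cuda  # None on CPU-only builds
    info["cxx11_abi"] = torch.compiled_with_cxx11_abi()
    return info


if __name__ == "__main__":
    for key, value in describe_environment().items():
        print(f"{key}: {value}")
```

Run it once before PyTorch is installed to confirm the Python tag, and again afterwards to read off the PyTorch, CUDA, and CXX11 ABI values used in the tables.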

To install a wheel, use pip with the direct URL to the .whl file. Make sure to enclose the URL in quotes.

    # Example of installing a specific flash-attention wheel
    pip install "https://huggingface.co/lldacing/flash-attention-windows-wheel/resolve/main/flash_attn-2.7.4.post1+cu128torch2.7.0cxx11abiFALSE-cp312-cp312-win_amd64.whl"

> [!TIP]
> Find the package you need in the Available Wheels section below, find the row that matches your environment (Python, PyTorch, CUDA version), and copy the link for the `pip install` command.
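A wheel's filename already states what it was built for, so it can be checked against your environment before installing. The sketch below splits a filename using the standard wheel layout (name, version, Python tag, ABI tag, platform tag) and then reads the build details from the version suffix. The suffix pattern (`cu…torch…cxx11abi…`) is an assumption taken from the flash-attention example above; other projects may encode these details differently, and the optional build-number field of the wheel format is not handled. The function name `parse_wheel_filename` is hypothetical.

```python
import re

# Standard wheel filename layout: name-version-python-abi-platform.whl
WHEEL_RE = re.compile(
    r"^(?P<name>[^-]+)-(?P<version>[^-]+)"
    r"-(?P<python>[^-]+)-(?P<abi>[^-]+)-(?P<platform>[^-]+)\.whl$"
)
# Local-version convention seen in the flash-attention example;
# this is an assumption, not a rule every wheel follows.
LOCAL_RE = re.compile(
    r"cu(?P<cuda>\d+)torch(?P<torch>[\d.]+)cxx11abi(?P<cxx11abi>TRUE|FALSE)"
)


def parse_wheel_filename(filename):
    """Split a wheel filename into its tags and any recognised build details."""
    match = WHEEL_RE.match(filename)
    if match is None:
        raise ValueError(f"not a recognised wheel filename: {filename}")
    tags = match.groupdict()
    # Everything after "+" is the local version segment.
    public, _, local = tags["version"].partition("+")
    tags["version"] = public
    build = LOCAL_RE.search(local)
    if build:
        tags.update(build.groupdict())
    return tags


if __name__ == "__main__":
    name = "flash_attn-2.7.4.post1+cu128torch2.7.0cxx11abiFALSE-cp312-cp312-win_amd64.whl"
    for key, value in parse_wheel_filename(name).items():
        print(f"{key}: {value}")
```

For the example filename, the `python` tag is `cp312` and the `platform` tag is `win_amd64`, so the wheel only fits CPython 3.12 on 64-bit Windows.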

Here is the list of tracked packages.

The foundation of everything. Install this first from the official source.

- Official Install Page: https://pytorch.org/get-started/locally/

For convenience, here are direct installation commands for specific versions on Linux/WSL with an NVIDIA GPU. For other configurations (CPU, macOS, ROCm), please use the official install page.

This is the recommended version for most users.

| CUDA Version | Pip Install Command |
|---|---|
| CUDA 12.9 | `pip install torch torchvision --index-url https://download.pytorch.org/whl/cu129` |
| CUDA 12.8 | `pip install torch torchvision --index-url https://download.pytorch.org/whl/cu128` |
| CUDA 12.6 | `pip install torch torchvision --index-url https://download.pytorch.org/whl/cu126` |
| CPU only | `pip install torch torchvision --index-url https://download.pytorch.org/whl/cpu` |

| CUDA Version | Pip Install Command |
|---|---|
| CUDA 12.8 | `pip install torch==2.7.1 torchvision==0.22.1 torchaudio==2.7.1 --index-url https://download.pytorch.org/whl/cu128` |
| CUDA 12.6 | `pip install torch==2.7.1 torchvision==0.22.1 torchaudio==2.7.1 --index-url https://download.pytorch.org/whl/cu126` |
| CUDA 11.8 | `pip install torch==2.7.1 torchvision==0.22.1 torchaudio==2.7.1 --index-url https://download.pytorch.org/whl/cu118` |
| CPU only | `pip install torch==2.7.1 torchvision==0.22.1 torchaudio==2.7.1 --index-url https://download.pytorch.org/whl/cpu` |

Use these for access to the latest features, but expect potential instability.

PyTorch 2.9 (Nightly)

| CUDA Version | Pip Install Command |
|---|---|
| CUDA 13.0 | `pip install --pre torch torchvision --index-url https://download.pytorch.org/whl/nightly/cu130` |
| CUDA 12.8 | `pip install --pre torch torchvision --index-url https://download.pytorch.org/whl/nightly/cu128` |
| CUDA 12.6 | `pip install --pre torch torchvision --index-url https://download.pytorch.org/whl/nightly/cu126` |
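The install commands in the tables above share one naming pattern: the index URL ends in `cu` followed by the CUDA version without the dot, or in `cpu`, with a `nightly/` segment for pre-release builds. As an illustration only, the sketch below rebuilds a command from that pattern. The helper names `pytorch_index_url` and `pip_command` are hypothetical, and the code does not check whether an index for a given CUDA version actually exists.

```python
BASE_URL = "https://download.pytorch.org/whl"


def pytorch_index_url(cuda=None, nightly=False):
    """Return the index URL for a CUDA version such as "12.8" (None means CPU)."""
    if cuda is None:
        suffix = "cpu"
    else:
        major, minor = cuda.split(".")
        suffix = f"cu{major}{minor}"
    channel = "nightly/" if nightly else ""
    return f"{BASE_URL}/{channel}{suffix}"


def pip_command(cuda=None, nightly=False, packages=("torch", "torchvision")):
    """Assemble a pip command that follows the pattern used in the tables."""
    parts = ["pip", "install"]
    if nightly:
        parts.append("--pre")  # nightly builds are pre-releases
    parts += list(packages)
    parts += ["--index-url", pytorch_index_url(cuda, nightly)]
    return " ".join(parts)


if __name__ == "__main__":
    print(pip_command("12.8"))
    print(pip_command("12.6", nightly=True))
    print(pip_command())  # CPU only
```

Treat the output as a starting point and confirm the exact command on the official install page.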

Torchaudio

| Package Version | PyTorch Ver | CUDA Ver | Download Link |
|---|---|---|---|
| 2.8.0 | 2.9.0 | 12.8 | Link |

▲▼▲▼▲▼▲▼▲▼▲▼▲▼▲▼▲▼▲▼▲▼▲▼▲▼▲▼▲

High-performance attention implementation.

- Official Repo: Dao-AILab/flash-attention

- Pre-built Sources: lldacing's HF, Wildminder's HF, mjun0812 GitHub

| Package Version | PyTorch Ver | Python Ver | CUDA Ver | CXX11 ABI | Download Link |
|---|---|---|---|---|---|
| 2.8.3 | 2.9.0 | 3.12 | 12.8 | ✓ | Link |
| 2.8.3 | 2.8.0 | 3.12 | 12.8 | ✓ | Link |
| 2.8.2 | 2.9.0 | 3.12 | 12.8 | ✓ | Link |
| 2.8.2 | 2.8.0 | 3.10 | 12.8 | ✓ | Link |
| 2.8.2 | 2.8.0 | 3.11 | 12.8 | ✓ | Link |
| 2.8.2 | 2.8.0 | 3.12 | 12.8 | ✓ | Link |
| 2.8.2 | 2.7.0 | 3.10 | 12.8 | ✗ | Link |
| 2.8.2 | 2.7.0 | 3.11 | 12.8 | ✗ | Link |
| 2.8.2 | 2.7.0 | 3.12 | 12.8 | ✗ | Link |
| 2.8.1 | 2.8.0 | 3.12 | 12.8 | ✓ | Link |
| 2.8.0.post2 | 2.8.0 | 3.12 | 12.8 | ✓ | Link |
| 2.7.4.post1 | 2.8.0 | 3.10 | 12.8 | ✓ | Link |
| 2.7.4.post1 | 2.8.0 | 3.12 | 12.8 | ✓ | Link |
| 2.7.4.post1 | 2.7.0 | 3.10 | 12.8 | ✗ | Link |
| 2.7.4.post1 | 2.7.0 | 3.11 | 12.8 | ✗ | Link |
| 2.7.4.post1 | 2.7.0 | 3.12 | 12.8 | ✗ | Link |
| 2.7.4 | 2.8.0 | 3.10 | 12.8 | ✓ | Link |
| 2.7.4 | 2.8.0 | 3.11 | 12.8 | ✓ | Link |
| 2.7.4 | 2.8.0 | 3.12 | 12.8 | ✓ | Link |
| 2.7.4 | 2.7.0 | 3.10 | 12.8 | ✗ | Link |
| 2.7.4 | 2.7.0 | 3.11 | 12.8 | ✗ | Link |
| 2.7.4 | 2.7.0 | 3.12 | 12.8 | ✗ | Link |
| 2.7.4 | 2.6.0 | 3.10 | 12.6 | ✗ | Link |
| 2.7.4 | 2.6.0 | 3.11 | 12.6 | ✗ | Link |
| 2.7.4 | 2.6.0 | 3.12 | 12.6 | ✗ | Link |
| 2.7.4 | 2.6.0 | 3.10 | 12.4 | ✗ | Link |
| 2.7.4 | 2.6.0 | 3.11 | 12.4 | ✗ | Link |
| 2.7.4 | 2.6.0 | 3.12 | 12.4 | ✗ | Link |

▲▼▲▼▲▼▲▼▲▼▲▼▲▼▲▼▲▼▲▼▲▼▲▼▲▼▲▼▲

Another library for memory-efficient attention and other optimizations.

- Official Repo: facebookresearch/xformers

- PyTorch Pre-built Index: https://download.pytorch.org/whl/xformers/

> [!NOTE]
> PyTorch provides official pre-built wheels for xformers. You can often install it with `pip install xformers` if you installed PyTorch correctly. If that fails, find your matching wheel at the index link above.

ABI3 version, any Python 3.9-3.12

| Package Version | PyTorch Ver | CUDA Ver | Download Link |
|---|---|---|---|
| 0.0.32.post2 | 2.8.0 | 12.8 | Link |
| 0.0.32.post2 | 2.8.0 | 12.9 | Link |

▲▼▲▼▲▼▲▼▲▼▲▼▲▼▲▼▲▼▲▼▲▼▲▼▲▼▲▼▲

- Official Repo: thu-ml/SageAttention

- Pre-built Sources: woct0rdho's Releases, Wildminder's HF

| Package Version | PyTorch Ver | Python Ver | CUDA Ver | Download Link |
|---|---|---|---|---|
| 2.1.1 | 2.5.1 | 3.9 | 12.4 | Link |
| 2.1.1 | 2.5.1 | 3.10 | 12.4 | Link |
| 2.1.1 | 2.5.1 | 3.11 | 12.4 | Link |
| 2.1.1 | 2.5.1 | 3.12 | 12.4 | Link |
| 2.1.1 | 2.6.0 | 3.9 | 12.6 | Link |
| 2.1.1 | 2.6.0 | 3.10 | 12.6 | Link |
| 2.1.1 | 2.6.0 | 3.11 | 12.6 | Link |
| 2.1.1 | 2.6.0 | 3.12 | 12.6 | Link |
| 2.1.1 | 2.6.0 | 3.12 | 12.6 | Link |
| 2.1.1 | 2.6.0 | 3.13 | 12.6 | Link |
| 2.1.1 | 2.7.0 | 3.10 | 12.8 | Link |
| 2.1.1 | 2.8.0 | 3.12 | 12.8 | Link |

◇ ◇ ◇ ◇ ◇ ◇ ◇ ◇ ◇ ◇ ◇ ◇ ◇ ◇ ◇ ◇ ◇

> [!NOTE]
> Only supports CUDA >= 12.8, therefore PyTorch >= 2.7.

| Package Version | PyTorch Ver | Python Ver | CUDA Ver | Download Link |
|---|---|---|---|---|
| 2.2.0.post2 | 2.5.1 | >3.9 | 12.4 | Link |
| 2.2.0.post2 | 2.6.0 | >3.9 | 12.6 | Link |
| 2.2.0.post2 | 2.7.1 | >3.9 | 12.8 | Link |
| 2.2.0.post2 | 2.8.0 | >3.9 | 12.8 | Link |
| 2.2.0.post2 | 2.9.0 | >3.9 | 12.8 | Link |
| 2.2.0 | 2.7.1 | 3.9 | 12.8 | Link |
| 2.2.0 | 2.7.1 | 3.10 | 12.8 | Link |
| 2.2.0 | 2.7.1 | 3.11 | 12.8 | Link |
| 2.2.0 | 2.7.1 | 3.12 | 12.8 | Link |
| 2.2.0 | 2.7.1 | 3.13 | 12.8 | Link |
| 2.2.0 | 2.8.0 | 3.9 | 12.8 | Link |
| 2.2.0 | 2.8.0 | 3.10 | 12.8 | Link |
| 2.2.0 | 2.8.0 | 3.11 | 12.8 | Link |
| 2.2.0 | 2.8.0 | 3.12 | 12.8 | Link |
| 2.2.0 | 2.8.0 | 3.13 | 12.8 | Link |

▲▼▲▼▲▼▲▼▲▼▲▼▲▼▲▼▲▼▲▼▲▼▲▼▲▼▲▼▲

- Official Repo: thu-ml/SpargeAttn

- Pre-built Sources: woct0rdho's Releases

| Package Version | PyTorch Ver | CUDA Ver | Download Link |
|---|---|---|---|
| 0.1.0.post1 | 2.7.1 | 12.8 | Link |
| 0.1.0.post1 | 2.8.0 | 12.8 | Link |

▲▼▲▼▲▼▲▼▲▼▲▼▲▼▲▼▲▼▲▼▲▼▲▼▲▼▲▼▲

- Official Repo: mit-han-lab/nunchaku

| Package Version | PyTorch Ver | Python Ver | Download Link |
|---|---|---|---|
| 1.0.0 | 2.5 | 3.10 | Link |
| 1.0.0 | 2.5 | 3.11 | Link |
| 1.0.0 | 2.5 | 3.12 | Link |
| 1.0.0 | 2.6 | 3.10 | Link |
| 1.0.0 | 2.6 | 3.11 | Link |
| 1.0.0 | 2.6 | 3.12 | Link |
| 1.0.0 | 2.6 | 3.13 | Link |
| 1.0.0 | 2.7 | 3.10 | Link |
| 1.0.0 | 2.7 | 3.11 | Link |
| 1.0.0 | 2.7 | 3.12 | Link |
| 1.0.0 | 2.7 | 3.13 | Link |
| 1.0.0 | 2.8 | 3.10 | Link |
| 1.0.0 | 2.8 | 3.11 | Link |
| 1.0.0 | 2.8 | 3.12 | Link |
| 1.0.0 | 2.8 | 3.13 | Link |
| 1.0.0 | 2.9 | 3.10 | Link |
| 1.0.0 | 2.9 | 3.11 | Link |
| 1.0.0 | 2.9 | 3.12 | Link |
| 1.0.0 | 2.9 | 3.13 | Link |
| 0.3.2 | 2.5 | 3.10 | Link |
| 0.3.2 | 2.5 | 3.11 | Link |
| 0.3.2 | 2.5 | 3.12 | Link |
| 0.3.2 | 2.6 | 3.10 | Link |
| 0.3.2 | 2.6 | 3.11 | Link |
| 0.3.2 | 2.6 | 3.12 | Link |
| 0.3.2 | 2.7 | 3.10 | Link |
| 0.3.2 | 2.7 | 3.11 | Link |
| 0.3.2 | 2.7 | 3.12 | Link |
| 0.3.2 | 2.8 | 3.10 | Link |
| 0.3.2 | 2.8 | 3.11 | Link |
| 0.3.2 | 2.8 | 3.12 | Link |
| 0.3.2 | 2.9 | 3.12 | Link |

▲▼▲▼▲▼▲▼▲▼▲▼▲▼▲▼▲▼▲▼▲▼▲▼▲▼▲▼▲

Neighborhood Attention Transformer.

- Official Repo: SHI-Labs/NATTEN

- Pre-built Source: lldacing's HF

| Package Version | PyTorch Ver | Python Ver | CUDA Ver | Download Link |
|---|---|---|---|---|
| 0.17.5 | 2.6.0 | 3.10 | 12.6 | Link |
| 0.17.5 | 2.6.0 | 3.11 | 12.6 | Link |
| 0.17.5 | 2.6.0 | 3.12 | 12.6 | Link |
| 0.17.5 | 2.7.0 | 3.10 | 12.8 | Link |
| 0.17.5 | 2.7.0 | 3.11 | 12.8 | Link |
| 0.17.5 | 2.7.0 | 3.12 | 12.8 | Link |
| 0.17.3 | 2.4.0 | 3.10 | 12.4 | Link |
| 0.17.3 | 2.4.0 | 3.11 | 12.4 | Link |
| 0.17.3 | 2.4.0 | 3.12 | 12.4 | Link |
| 0.17.3 | 2.4.1 | 3.10 | 12.4 | Link |
| 0.17.3 | 2.4.1 | 3.11 | 12.4 | Link |
| 0.17.3 | 2.4.1 | 3.12 | 12.4 | Link |
| 0.17.3 | 2.5.0 | 3.10 | 12.4 | Link |
| 0.17.3 | 2.5.0 | 3.11 | 12.4 | Link |
| 0.17.3 | 2.5.0 | 3.12 | 12.4 | Link |
| 0.17.3 | 2.5.1 | 3.10 | 12.4 | Link |
| 0.17.3 | 2.5.1 | 3.11 | 12.4 | Link |
| 0.17.3 | 2.5.1 | 3.12 | 12.4 | Link |

▲▼▲▼▲▼▲▼▲▼▲▼▲▼▲▼▲▼▲▼▲▼▲▼▲▼▲▼▲

Triton is a language and compiler for writing highly efficient custom deep-learning primitives. It is not officially supported on Windows, but a fork provides pre-built wheels.

- Windows Fork: woct0rdho/triton-windows

- Installation: `pip install -U "triton-windows<3.5"`
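After installing, you may want to confirm which version pip actually resolved. A minimal sketch using only the standard library follows; the helper name `installed_version` is hypothetical, and `triton-windows` is the distribution name taken from the install command above.

```python
from importlib import metadata


def installed_version(distribution):
    """Return the installed version string, or None if the package is absent."""
    try:
        return metadata.version(distribution)
    except metadata.PackageNotFoundError:
        return None


if __name__ == "__main__":
    # Distribution names as used with pip, not import names.
    for name in ("torch", "triton-windows"):
        print(name, installed_version(name) or "not installed")
```

Because this reads package metadata rather than importing the module, it works even when the package itself would fail to load.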

▲▼▲▼▲▼▲▼▲▼▲▼▲▼▲▼▲▼▲▼▲▼▲▼▲▼▲▼▲

A lightweight wrapper around CUDA custom functions, particularly for 8-bit optimizers, matrix multiplication (LLM.int8()), and quantization functions.

- Official Repo: bitsandbytes-foundation/bitsandbytes

▲▼▲▼▲▼▲▼▲▼▲▼▲▼▲▼▲▼▲▼▲▼▲▼▲▼▲▼▲

- Nodes: ComfyUI-RadialAttn

▀▀▀▀▀▀▀▀▀▀▀▀▀▀▀▀▀▀▀▀▀▀▀▀▀▀▀▀▀▀▀▀▀▀

All wheel information in this repository is managed in the wheels.json file, which serves as the single source of truth. The tables in this README are automatically generated from this file.

This provides a stable, structured JSON endpoint for any external tool or application that needs to access this data without parsing Markdown.

You can access the raw JSON file directly via the following URL:

https://raw.githubusercontent.com/wildminder/AI-windows-whl/main/wheels.json

Example using curl:

    curl -L -o wheels.json https://raw.githubusercontent.com/wildminder/AI-windows-whl/main/wheels.json

The file contains a list of packages, each with its metadata and an array of wheels, where each wheel object contains version details and a direct download url.
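To show how an external tool might consume this data, here is a sketch that filters wheels by environment. The exact schema is not documented here, so the sample is hypothetical: the top-level `packages` key and the field names `package_version`, `torch_version`, `python_version`, and `cuda_version` are assumptions made for illustration. Only the per-package `wheels` array and the per-wheel `url` follow from the description above. Check the real file and adjust the keys before relying on this.

```python
import json

# Hypothetical sample shaped after the description above; field names other
# than "wheels" and "url" are assumptions, and the URLs are placeholders.
SAMPLE = json.loads("""
{
  "packages": [
    {
      "name": "Example Package",
      "wheels": [
        {"package_version": "1.0.0", "torch_version": "2.8.0",
         "python_version": "3.12", "cuda_version": "12.8",
         "url": "https://example.invalid/example-1.0.0-cp312-cp312-win_amd64.whl"},
        {"package_version": "1.0.0", "torch_version": "2.7.0",
         "python_version": "3.11", "cuda_version": "12.8",
         "url": "https://example.invalid/example-1.0.0-cp311-cp311-win_amd64.whl"}
      ]
    }
  ]
}
""")


def find_wheels(data, **criteria):
    """Return the URLs of wheels whose fields equal every given criterion."""
    # Accept either a bare list of packages or an object wrapping that list.
    packages = data["packages"] if isinstance(data, dict) else data
    matches = []
    for package in packages:
        for wheel in package.get("wheels", []):
            if all(wheel.get(key) == value for key, value in criteria.items()):
                matches.append(wheel["url"])
    return matches


if __name__ == "__main__":
    for url in find_wheels(SAMPLE, python_version="3.12", cuda_version="12.8"):
        print(url)
```

To use real data, load the downloaded file with `json.load` and pass the result in place of `SAMPLE`.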

▀▀▀▀▀▀▀▀▀▀▀▀▀▀▀▀▀▀▀▀▀▀▀▀▀▀▀▀▀▀▀▀▀▀

Contributions are what make the open source community such an amazing place to learn, inspire, and create. Any contributions you make are greatly appreciated.

If you have found a new pre-built wheel or a reliable source, please fork the repo and create a pull request, or simply open an issue with the link.

This repository is simply a collection of links. Huge thanks to the individuals and groups who do the hard work of building and hosting these wheels for the community:

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for AI-windows-whl

Similar Open Source Tools

AI-windows-whl

AI-windows-whl is a curated collection of pre-compiled Python wheels for difficult-to-install AI/ML libraries on Windows. It addresses the common pain point of building complex Python packages from source on Windows by providing direct links to pre-compiled `.whl` files for essential libraries like PyTorch, Flash Attention, xformers, SageAttention, NATTEN, Triton, bitsandbytes, and other packages. The goal is to save time for AI enthusiasts and developers on Windows, allowing them to focus on creating amazing things with AI.

we-mp-rss

We-MP-RSS is a tool for subscribing to and managing WeChat official account content, providing RSS subscription functionality. It allows users to fetch and parse WeChat official account content, generate RSS feeds, manage subscriptions via a user-friendly web interface, automatically update content on a schedule, support multiple databases (default SQLite, optional MySQL), various fetching methods, multiple RSS clients, and expiration reminders for authorizations.

search2ai

S2A allows your large model API to support networking, searching, news, and web page summarization. It currently supports OpenAI, Gemini, and Moonshot (non-streaming). The large model will determine whether to connect to the network based on your input, and it will not connect to the network for searching every time. You don't need to install any plugins or replace keys. You can directly replace the custom address in your commonly used third-party client. You can also deploy it yourself, which will not affect other functions you use, such as drawing and voice.

daily_stock_analysis

The daily_stock_analysis repository is an intelligent stock analysis system based on AI large models for A-share/Hong Kong stock/US stock selection. It automatically analyzes and pushes a 'decision dashboard' to WeChat Work/Feishu/Telegram/email daily. The system features multi-dimensional analysis, global market support, market review, AI backtesting validation, multi-channel notifications, and scheduled execution using GitHub Actions. It utilizes AI models like Gemini, OpenAI, DeepSeek, and data sources like AkShare, Tushare, Pytdx, Baostock, YFinance for analysis. The system includes built-in trading disciplines like risk warning, trend trading, precise entry/exit points, and checklist marking for conditions.

devops-gpt

DevOpsGPT is a revolutionary tool designed to streamline your workflow and empower you to build systems and automate tasks with ease. Tired of spending hours on repetitive DevOps tasks? DevOpsGPT is here to help! Whether you're setting up infrastructure, speeding up deployments, or tackling any other DevOps challenge, our app can make your life easier and more productive. With DevOpsGPT, you can expect faster task completion, simplified workflows, and increased efficiency. Ready to experience the DevOpsGPT difference? Visit our website, sign in or create an account, start exploring the features, and share your feedback to help us improve. DevOpsGPT will become an essential tool in your DevOps toolkit.

OneClickLLAMA

OneClickLLAMA is a tool designed to run local LLM models such as Qwen2.5 and SakuraLLM with ease. It can be used in conjunction with various OpenAI format translators and analyzers, including LinguaGacha and KeywordGacha. By following the setup guides provided on the page, users can optimize performance and achieve a 3-5 times speed improvement compared to default settings. The tool requires a minimum of 8GB dedicated graphics memory, preferably NVIDIA, and the latest version of graphics drivers installed. Users can download the tool from the release page, choose the appropriate model based on usage and memory size, and start the tool by selecting the corresponding launch script.

xiaogpt

xiaogpt is a tool that allows you to play ChatGPT and other LLMs with Xiaomi AI Speaker. It supports ChatGPT, New Bing, ChatGLM, Gemini, Doubao, and Tongyi Qianwen. You can use it to ask questions, get answers, and have conversations with AI assistants. xiaogpt is easy to use and can be set up in a few minutes. It is a great way to experience the power of AI and have fun with your Xiaomi AI Speaker.

apidash

API Dash is an open-source cross-platform API Client that allows users to easily create and customize API requests, visually inspect responses, and generate API integration code. It supports various HTTP methods, GraphQL requests, and multimedia API responses. Users can organize requests in collections, preview data in different formats, and generate code for multiple languages. The tool also offers dark mode support, data persistence, and various customization options.

react-native-nitro-mlx

The react-native-nitro-mlx repository allows users to run LLMs, Text-to-Speech, and Speech-to-Text on-device in React Native using MLX Swift. It provides functionalities for downloading models, loading and generating responses, streaming audio, text-to-speech, and speech-to-text capabilities. Users can interact with various MLX-compatible models from Hugging Face, with pre-defined models available for convenience. The repository supports iOS 26.0+ and offers detailed API documentation for each feature.

llmio

LLMIO is a Go-based LLM load balancing gateway that provides a unified REST API, weight scheduling, logging, and modern management interface for your LLM clients. It helps integrate different model capabilities from OpenAI, Anthropic, Gemini, and more in a single service. Features include unified API compatibility, weight scheduling with two strategies, visual management dashboard, rate and failure handling, and local persistence with SQLite. The tool supports multiple vendors' APIs and authentication methods, making it versatile for various AI model integrations.

XiaoXinAir14IML_2019_hackintosh

XiaoXinAir14IML_2019_hackintosh is a repository dedicated to enabling macOS installation on Lenovo XiaoXin Air-14 IML 2019 laptops. The repository provides detailed information on the hardware specifications, supported systems, BIOS versions, related models, installation methods, updates, patches, and recommended settings. It also includes tools and guides for BIOS modifications, enabling high-resolution display settings, Bluetooth synchronization between macOS and Windows 10, voltage adjustments for efficiency, and experimental support for YogaSMC. The repository offers solutions for various issues like sleep support, sound card emulation, and battery information. It acknowledges the contributions of developers and tools like OpenCore, itlwm, VoodooI2C, and ALCPlugFix.

petercat

Peter Cat is an intelligent Q&A chatbot solution designed for community maintainers and developers. It provides a conversational Q&A agent configuration system, self-hosting deployment solutions, and a convenient integrated application SDK. Users can easily create intelligent Q&A chatbots for their GitHub repositories and quickly integrate them into various official websites or projects to provide more efficient technical support for the community.

agentkit-samples

AgentKit Samples is a repository containing a series of examples and tutorials to help users understand, implement, and integrate various functionalities of AgentKit into their applications. The platform offers a complete solution for building, deploying, and maintaining AI agents, significantly reducing the complexity of developing intelligent applications. The repository provides different levels of examples and tutorials, including basic tutorials for understanding AgentKit's concepts and use cases, as well as more complex examples for experienced developers.

LightMem

LightMem is a lightweight and efficient memory management framework designed for Large Language Models and AI Agents. It provides a simple yet powerful memory storage, retrieval, and update mechanism to help you quickly build intelligent applications with long-term memory capabilities. The framework is minimalist in design, ensuring minimal resource consumption and fast response times. It offers a simple API for easy integration into applications with just a few lines of code. LightMem's modular architecture supports custom storage engines and retrieval strategies, making it flexible and extensible. It is compatible with various cloud APIs like OpenAI and DeepSeek, as well as local models such as Ollama and vLLM.

jimeng-free-api-all

Jimeng AI Free API is a reverse-engineered API server that encapsulates Jimeng AI's image and video generation capabilities into OpenAI-compatible API interfaces. It supports the latest jimeng-5.0-preview, jimeng-4.6 text-to-image models, Seedance 2.0 multi-image intelligent video generation, zero-configuration deployment, and multi-token support. The API is fully compatible with OpenAI API format, seamlessly integrating with existing clients and supporting multiple session IDs for polling usage.

lingti-bot

lingti-bot is an AI Bot platform that integrates MCP Server, multi-platform message gateway, rich toolset, intelligent conversation, and voice interaction. It offers core advantages like zero-dependency deployment with a single 30MB binary file, cloud relay support for quick integration with enterprise WeChat/WeChat Official Account, built-in browser automation with CDP protocol control, 75+ MCP tools covering various scenarios, native support for Chinese platforms like DingTalk, Feishu, enterprise WeChat, WeChat Official Account, and more. It is embeddable, supports multiple AI backends like Claude, DeepSeek, Kimi, MiniMax, and Gemini, and allows access from platforms like DingTalk, Feishu, enterprise WeChat, WeChat Official Account, Slack, Telegram, and Discord. The bot is designed with simplicity as the highest design principle, focusing on zero-dependency deployment, embeddability, plain text output, code restraint, and cloud relay support.

For similar tasks

AI-windows-whl

AI-windows-whl is a curated collection of pre-compiled Python wheels for difficult-to-install AI/ML libraries on Windows. It addresses the common pain point of building complex Python packages from source on Windows by providing direct links to pre-compiled `.whl` files for essential libraries like PyTorch, Flash Attention, xformers, SageAttention, NATTEN, Triton, bitsandbytes, and other packages. The goal is to save time for AI enthusiasts and developers on Windows, allowing them to focus on creating amazing things with AI.

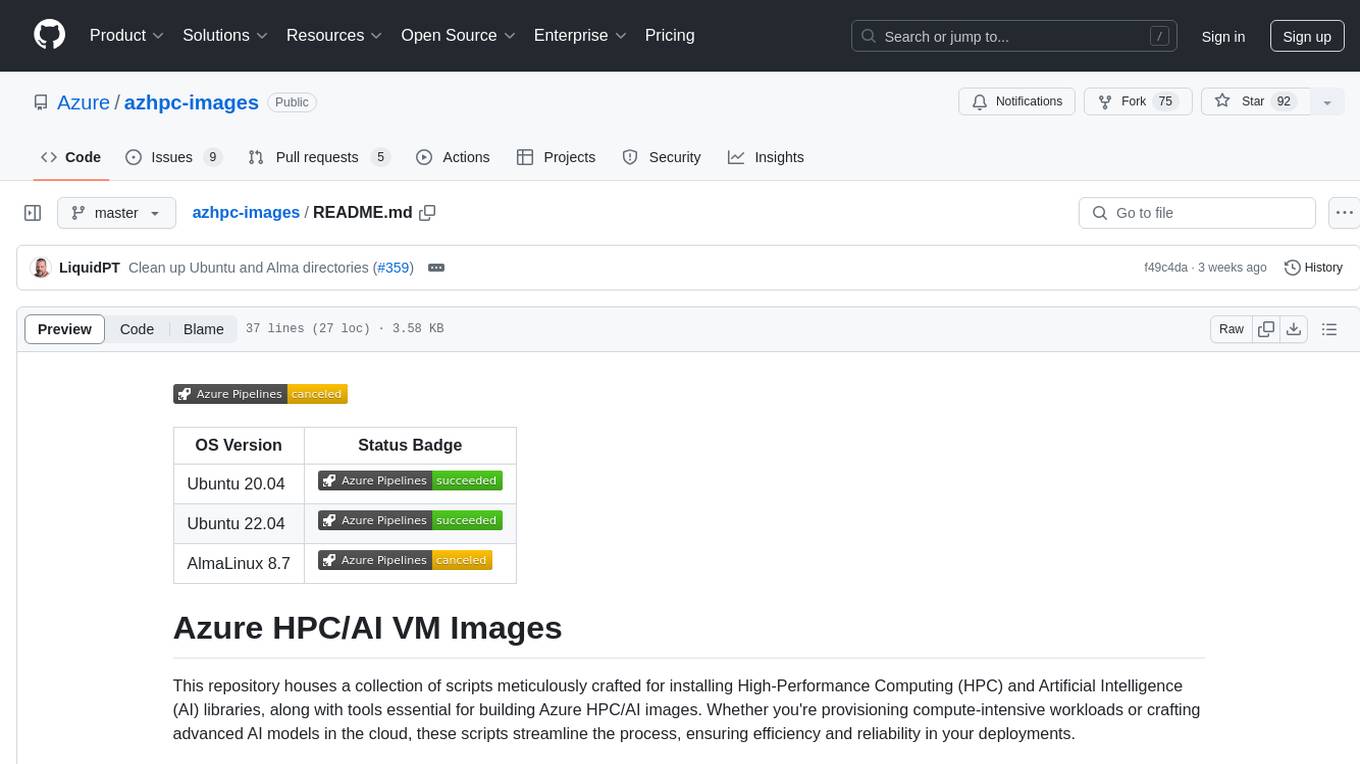

azhpc-images

This repository contains scripts for installing HPC and AI libraries and tools to build Azure HPC/AI images. It streamlines the process of provisioning compute-intensive workloads and crafting advanced AI models in the cloud, ensuring efficiency and reliability in deployments.

Aidan-Bench

Aidan Bench is a tool that rewards creativity, reliability, contextual attention, and instruction following. It is weakly correlated with Lmsys, has no score ceiling, and aligns with real-world open-ended use. The tool involves giving LLMs open-ended questions and evaluating their answers based on novelty scores. Users can set up the tool by installing required libraries and setting up API keys. The project allows users to run benchmarks for different models and provides flexibility in threading options.

llm-chatbot-python

This repository provides resources for building a chatbot backed by Neo4j using Python. It includes instructions on running the application, setting up tests, and installing necessary libraries. The chatbot is designed to interact with users and provide recommendations based on data stored in a Neo4j database. The repository is part of the Neo4j GraphAcademy course on building chatbots with Python.

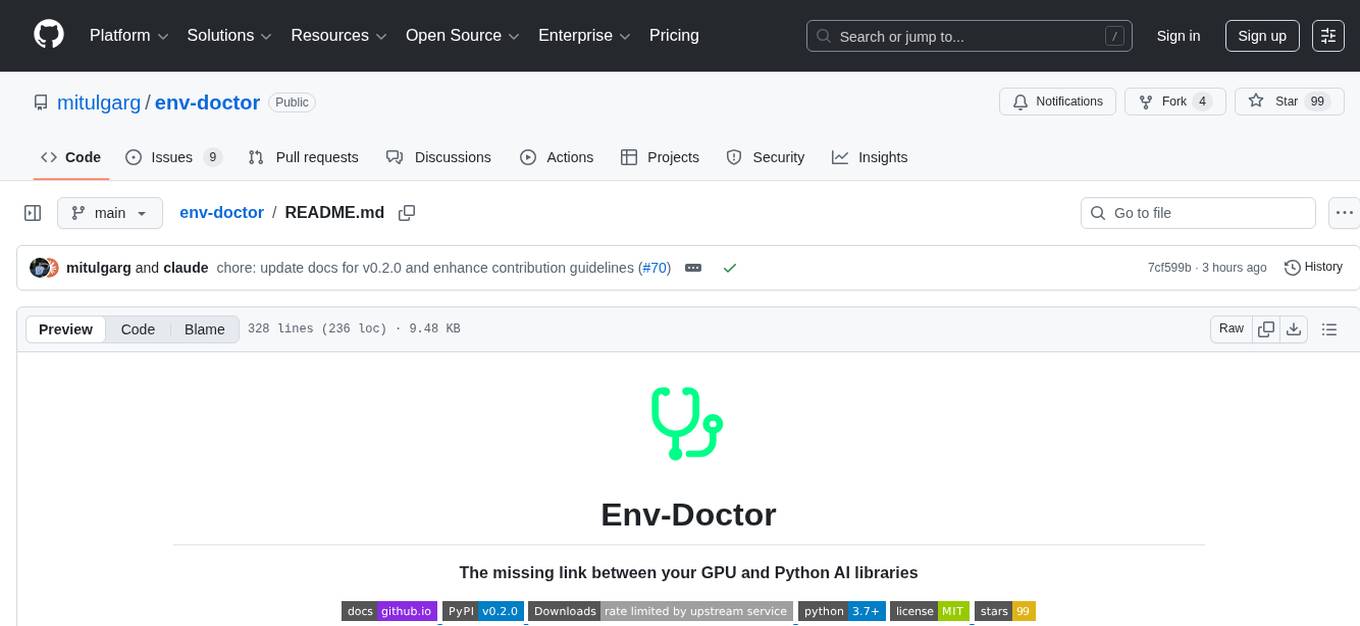

env-doctor

Env-Doctor is a tool designed to diagnose and fix mismatched CUDA versions between NVIDIA driver, system toolkit, cuDNN, and Python libraries, providing a quick solution to the common frustration in GPU computing. It offers one-command diagnosis, safe install commands, extension library support, AI model compatibility checks, WSL2 GPU support, deep CUDA analysis, container validation, MCP server integration, and CI/CD readiness. The tool helps users identify and resolve environment issues efficiently, ensuring smooth operation of AI libraries on their GPUs.

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models. From images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking), and privacy harms (e.g., identity theft). The goal is to allow researchers to have a baseline of how well their model and entire inference pipeline is doing against different harm categories and to be able to compare that baseline to future iterations of their model. This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements.

tabby

Tabby is a self-hosted AI coding assistant, offering an open-source and on-premises alternative to GitHub Copilot. It boasts several key features: * Self-contained, with no need for a DBMS or cloud service. * OpenAPI interface, easy to integrate with existing infrastructure (e.g Cloud IDE). * Supports consumer-grade GPUs.

spear

SPEAR (Simulator for Photorealistic Embodied AI Research) is a powerful tool for training embodied agents. It features 300 unique virtual indoor environments with 2,566 unique rooms and 17,234 unique objects that can be manipulated individually. Each environment is designed by a professional artist and features detailed geometry, photorealistic materials, and a unique floor plan and object layout. SPEAR is implemented as Unreal Engine assets and provides an OpenAI Gym interface for interacting with the environments via Python.

Magick

Magick is a groundbreaking visual AIDE (Artificial Intelligence Development Environment) for no-code data pipelines and multimodal agents. Magick can connect to other services and comes with nodes and templates well-suited for intelligent agents, chatbots, complex reasoning systems and realistic characters.