Deej-AI

Create automatic playlists by using Deep Learning to *listen* to the music.

Stars: 312

Deej-A.I. is an advanced machine learning project that aims to revolutionize music recommendation systems by using artificial intelligence to analyze and recommend songs based on their content and characteristics. The project involves scraping playlists from Spotify, creating embeddings of songs, training neural networks to analyze spectrograms, and generating recommendations based on similarities in music features. Deej-A.I. offers a unique approach to music curation, focusing on the 'what' rather than the 'how' of DJing, and providing users with personalized and creative music suggestions.

README:

Robert Dargavel Smith - Advanced Machine Learning end of Masters project (MBIT School, Madrid, Spain)

UPDATE: After nearly 5 years, I have finally got around to re-training the model deployed at https://deej-ai.online with a million playlists and a million tracks. The fact that people have been consistently continuing to use it is testament to how well it works, but it was about time I included tracks released since 2018! I have added a train directory to this repo where you can find a README with detailed instructions on how to obtain datasets and train your own model from scratch.

UPDATE: You can now use this model even more easily than before in the Hugging Face hub.

UPDATE: Check out the results after applying to 320,000 tracks on Spotify here. The code for the website is available here.

There are a number of automatic DJ tools around, which cleverly match the tempo of one song with another and mix the beats. To be honest, I have always found that kind of DJ rather boring: the better they are technically, the more it sounds just like one interminable song. In my book, it's not about how you play, but what you play. I have collected many rare records over the years and done a bit of deejaying on the radio and in clubs. I can almost instantly tell whether I am going to like a song or not just by listening to it for a few seconds. Or, if a song is playing, one that would go well with it usually comes to mind immediately. I thought that artificial intelligence could be applied to this "music intuition" as a music recommendation system based on simply listening to a song (and, of course, having an encyclopaedic knowledge of music).

Some years ago, the iPod had a very cool feature called Genius, which created a playlist on-the-fly based on a few example songs. Apple decided to remove this functionality (although it is still available in iTunes), presumably in a move to persuade people to subscribe to their music streaming service. Of course, Spotify now offers this functionality but, personally, I find the recommendations that it makes to be, at best, music I already know and, at worst, rather commercial and uncreative. I have a large library of music and I miss having a simple way to say "keep playing songs like this" (especially when I am driving) and something to help me discover new music, even within my own collection. I spent some time looking for an alternative solution but didn't find anything.

A common approach is to use music genres to classify music, but I find this to be too simplistic and constraining. Is Roxanne by The Police reggae, pop or rock? And what about all the constantly evolving subdivisions of electronic music? I felt it necessary to find a higher dimensional, more continuous description of music and one that did not require labelling each track (i.e., an unsupervised learning approach).

The first thing I did was to scrape as many playlists from Spotify as possible. (Unfortunately, I had the idea to work on this after a competition to do something similar had already been closed, in which access to a million songs was granted.) The idea was that grouping by playlists would give some context or meaning to the individual songs - for example, "80s disco music" or "My favourite songs for the beach". People tend to make playlists of songs by similar artists, with a similar mood, style, genre or for a particular purpose (e.g., for a workout in the gym). Unfortunately, the Spotify API doesn't make it particularly easy to download playlists, so the method was rather crude: I searched for all the playlists with the letter 'a' in the name, the letter 'b', and so on, up to 'Z'. In this way, I managed to grab 240,000 playlists comprising 4 million unique songs. I deliberately excluded all playlists curated by Spotify as these were particularly commercial (I believe that artists can pay to feature in them).

Then, I created an embedding ("Track2Vec") of these songs using the Word2Vec algorithm by considering each song as a "word" and each playlist as a "sentence". (If you can believe me, I had the same idea independently of these guys.) I found 100 dimensions to be a good size. Given a particular song, the model was able to convincingly suggest Spotify songs by the same artist or similar, or from the same period and genre. As the number of unique songs was huge, I limited the "vocabulary" to those which appeared in at least 10 playlists, leaving me with 450,000 tracks.
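The vocabulary filtering described above can be sketched as follows. This is a minimal illustration, not the author's code: the function name `build_corpus`, the toy track IDs and the choice to drop playlists left with fewer than two tracks are all assumptions.

```python
from collections import Counter


def build_corpus(playlists, min_playlists=10):
    """Keep only tracks that occur in at least `min_playlists` playlists."""
    # Count each track once per playlist, even if it is repeated within one.
    counts = Counter(track for playlist in playlists for track in set(playlist))
    vocabulary = {track for track, n in counts.items() if n >= min_playlists}
    # Each playlist becomes a "sentence" of the surviving track IDs ("words").
    sentences = [[t for t in playlist if t in vocabulary] for playlist in playlists]
    # A playlist with fewer than two tracks left carries no co-occurrence context.
    return [s for s in sentences if len(s) > 1], vocabulary


# Toy data: hypothetical track IDs, with a threshold of 2 instead of 10.
playlists = [["a", "b", "c"], ["a", "b"], ["a", "d"]]
sentences, vocabulary = build_corpus(playlists, min_playlists=2)
print(sentences)
```

The resulting `sentences` could then be passed to any Word2Vec implementation (for example, gensim's `Word2Vec` class) with an embedding size of 100.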

One nice thing about the Spotify API is that it provides a URL for most songs, which allows you to download a 30 second sample as an MP3. I downloaded all of these MP3s and converted them to a Mel Spectrogram - a compact representation of each song, which supposedly reflects how the human ear responds to sound. In the same way as a human being can think of related music just by listening to a few seconds of a song, I thought that a window of just 5 seconds would be enough to capture the gist of a song. Even with such a limited representation, the zipped size of all the spectrograms came to 4.5 gigabytes!
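To give a feel for the mel scale and for how a 30 second sample relates to 5 second windows, here is a small sketch. It assumes the widely used HTK-style mel formula and non-overlapping windows; the spectrogram settings actually used in this project are not stated here, and both function names are hypothetical.

```python
import math


def hz_to_mel(freq_hz):
    """Convert a frequency in Hz to mels (HTK-style formula, an assumption)."""
    return 2595.0 * math.log10(1.0 + freq_hz / 700.0)


def window_starts(clip_seconds, window_seconds):
    """Start times of the non-overlapping windows that fit in a clip."""
    count = int(clip_seconds // window_seconds)
    return [i * window_seconds for i in range(count)]


# The mel scale compresses high frequencies: the same 1000 Hz step
# covers fewer mels at 7 kHz than at 1 kHz.
low_step = hz_to_mel(2000) - hz_to_mel(1000)
high_step = hz_to_mel(8000) - hz_to_mel(7000)
starts = window_starts(30, 5)
print(low_step, high_step, starts)
```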

The next step was to try to use the information gleaned from Spotify to extract features from the spectrograms in order to meaningfully relate them to each other. I trained a convolutional neural network to reproduce as closely as possible (in cosine proximity) the Track2Vec vector (output y) corresponding to a given spectrogram (input x). I tried both one dimensional (in the time axis) and two dimensional convolutional networks and compared the results to a baseline model. The baseline model tried to come up with the closest Track2Vec vector without actually listening to the music. This led to a song that, in theory, everybody should either like (or hate) a little bit ;-) (SBTRKT - Sanctuary), with a cosine proximity of 0.52. The best score I was able to obtain with the validation data before overfitting set in was 0.70. With a 300-dimensional embedding, the validation score was better, but so was that of the baseline: I felt it was more important to have a lower baseline score and a bigger difference between the two, reflecting a latent representation with more diversity and capacity for discrimination. The score, of course, is still very low, but it is not really reasonable to expect that a spectrogram can capture the similarities between songs that human beings group together based on cultural and historical factors. Also, some songs were quite badly represented by the 5 second window (for example, in the case of "Don't stop me now" by Queen, this section corresponded to Brian May's guitar solo...). I played around with an Auto-Encoder and a Variational Auto-Encoder in the hope of forcing the internal latent representation of the spectrograms to be more continuous, disentangled and therefore meaningful. The initial results appeared to indicate that a two dimensional convolutional network is better at capturing the information contained in the spectrograms. I also considered training a Siamese network to directly compare two spectrograms. 
I've left these ideas for possible future research.
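The cosine proximity score and the "without listening" baseline can be illustrated with a short sketch. It assumes the baseline is a single constant vector; for that case, the sum of the unit-normalised targets maximises the mean cosine similarity. The function names and toy vectors are hypothetical.

```python
import math


def cosine(a, b):
    """Cosine similarity between two non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def normalize(v):
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def baseline_vector(targets):
    """Constant prediction with the highest mean cosine similarity.

    Assumes the unit vectors do not sum to zero.
    """
    units = [normalize(t) for t in targets]
    return [sum(column) for column in zip(*units)]


def mean_cosine(prediction, targets):
    return sum(cosine(prediction, t) for t in targets) / len(targets)


# Toy 2-dimensional "Track2Vec" targets.
targets = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
baseline = baseline_vector(targets)
score = mean_cosine(baseline, targets)
print(round(score, 3))
```

A trained network is only useful to the extent that its validation score exceeds this kind of baseline score.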

Finally, with a library of MP3 files, I mapped each MP3 to a series of Track2Vec vectors for each 5 second time slice. Most songs vary significantly from beginning to end and so the slice by slice recommendations are all over the place. In the same way as we can apply a Doc2Vec model to compare similar documents, I calculated an "MP3ToVec" vector for each MP3, including each constituent Track2Vec vector according to its TF-IDF (Term Frequency, Inverse Document Frequency) weight. This scheme gives more importance to recommendations which are frequent and specific to a particular song. As this is an O(n^2) algorithm, it was necessary to break the library of MP3s into batches of 100 (my 8,000 MP3s would have taken 10 days to process otherwise!). I checked that this had a negligible impact on the calculated vectors.
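One way such a TF-IDF weighting over slice vectors might look is sketched below. This is an assumption-laden illustration rather than the code in `MP3ToVec.py`: two slices count as "the same term" when their cosine similarity exceeds `1 - epsilon`, the smoothed weight `1 + log(n / df)` is an arbitrary choice, and the names `mp3tovec` and `epsilon` are hypothetical. Every slice is compared against every other slice, so the cost grows roughly quadratically with library size.

```python
import math


def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def mp3tovec(library, epsilon=0.1):
    """library maps a track name to its list of per-slice vectors."""
    n_tracks = len(library)
    result = {}
    for name, slices in library.items():
        total = [0.0] * len(slices[0])
        for v in slices:
            # Term frequency: similar slices within the same track.
            tf = sum(1 for w in slices if cosine(v, w) > 1 - epsilon)
            # Document frequency: tracks containing at least one similar slice.
            df = sum(
                1
                for other in library.values()
                if any(cosine(v, w) > 1 - epsilon for w in other)
            )
            weight = tf * (1.0 + math.log(n_tracks / df))
            total = [t + weight * x for t, x in zip(total, v)]
        result[name] = total
    return result


# Toy library: track "a" has two similar slices and one that it shares with "b".
library = {"a": [[1.0, 0.0], [1.0, 0.0], [0.0, 1.0]], "b": [[0.0, 1.0]]}
vectors = mp3tovec(library)
print(vectors)
```

In this toy example the slice that is frequent within "a" and absent from "b" dominates the vector for "a", which is the behaviour the paragraph above describes.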

You can see some of the results at the end of this workbook and judge them for yourself. It is particularly good at recognizing classical music, spoken word, hip-hop and electronic music. In fact, I was so surprised by how well it worked, that I started to wonder how much was due to the TF-IDF algorithm and how much was due to the neural network. So I created another baseline model using the neural network with randomly initialized weights to map the spectrograms to vectors. I found that this baseline model was good at spotting genres and structurally similar songs, but, when in doubt, would propose something totally inappropriate. In these cases, the trained neural net seemed to choose something that had a similar energy, mood or instrumentation. In many ways, this was exactly what I was looking for: a creative approach that transcended rigid genre boundaries. By playing around with the parameter which determines whether two vectors are the same or not for the purposes of the TF-IDF algorithm, it is possible to find a good trade-off between the genre (global) and the "feel" (local) characteristics. I also compared the results to playlists generated by Genius in iTunes and, although it is very subjective, I felt that Genius was sticking to genres even if the songs didn't quite go together, and came up with less "inspired" choices. Perhaps a crowd sourced "Coca Cola" test is called for to be the final judge.

Certainly, given the limitations of data, computing power and time, I think that the results serve as a proof of concept.

Apart from the original idea of an automatic (radio as opposed to club) DJ, there are several other interesting things you can do. For example, as the vector mapping is continuous, you can easily create a playlist which smoothly "joins the dots" between one song and another, passing through as many waypoints as you like. For example, you could travel from soul to techno via funk and drum 'n' bass. Or from rock to opera :-).
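The "join the dots" idea can be sketched by interpolating linearly between two track vectors and picking the nearest unused track at each waypoint. This is only an illustration under those assumptions; the function `join_the_dots`, the toy 2-dimensional vectors and the genre-named tracks are hypothetical and do not reflect how `Join_the_dots.py` is implemented.

```python
import math


def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def join_the_dots(library, start, end, n_between):
    """Return a path from `start` to `end` with up to `n_between` tracks in between."""
    path = [start]
    a, b = library[start], library[end]
    for step in range(1, n_between + 1):
        t = step / (n_between + 1)
        # Point on the straight line between the two track vectors.
        waypoint = [(1 - t) * x + t * y for x, y in zip(a, b)]
        candidates = [n for n in library if n not in path and n != end]
        if not candidates:
            break
        path.append(max(candidates, key=lambda n: cosine(library[n], waypoint)))
    path.append(end)
    return path


library = {
    "soul": [1.0, 0.0],
    "funk": [0.8, 0.5],
    "drum_n_bass": [0.4, 0.9],
    "techno": [0.0, 1.0],
}
route = join_the_dots(library, "soul", "techno", n_between=2)
print(route)
```

Several waypoints can be chained by calling the function on each consecutive pair of tracks.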

Another simple idea is to listen to music using a microphone and to propose a set of next songs to play on the fly. Rather than comparing with the overall MP3ToVec, it might be more appropriate to just take into account the beginning of each song, so that the music segues more naturally from one track to another.

Once you have installed the required python packages with

pip install -r requirements.txt

and downloaded the model weights to the directory where you have the python files, you can process your library of MP3s (and M4As). Simply run the following command and wait...

python MP3ToVec.py Pickles mp3tovec --scan c:/your_music_library

It will create a directory called "Pickles" and, within the subdirectory "mp3tovecs", a file called "mp3tovec.p". Once this has completed, you can try it out with

python Deej-A.I.py Pickles mp3tovec

Then go to http://localhost:8050 in your browser. If you add the parameter --demo 5, you don't have to wait until the end of each song. Simply load an MP3 or M4A on which you wish to base the playlist; it doesn't necessarily have to be one from your music library. Finally, there are a couple of controls you can fiddle with (as it is currently programmed, these only take effect after the next song if one is already playing). "Keep on" determines the number of previous tracks to take into account in the generation of the playlist and "Drunk" specifies how much randomness to throw into the mix. Alternatively, you can create an MP3 mix of a musical journey of your choosing with

python Join_the_dots.py Pickles\mp3tovecs\mp3tovec.p --input tracks.txt mix.mp3 9

where "tracks.txt" is a text file containing a list of MP3 or M4A files and, here, 9 is the number of additional tracks you want to generate between each of these.

If you are interested in the data I used to train the neural network, feel free to drop me an email.

Optionally, you can generate an .m3u file with relative paths to export the playlist and play it on another device.

The playlist file uses relative paths. So if you specify --playlist as /home/user/playlists/playlist_outfile.m3u and one of its tracks is located at /home/user/music/some_awesome_music.mp3, the resulting playlist will write that track out as ../music/some_awesome_music.mp3.
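The relative path in this example can be reproduced with the standard library. The sketch below assumes POSIX-style paths and a path computed relative to the playlist's directory; the helper name `playlist_entry` is hypothetical and is not taken from the project's code.

```python
import posixpath


def playlist_entry(track_path, playlist_path):
    """Path of a track relative to the directory containing the playlist file."""
    playlist_dir = posixpath.dirname(playlist_path)
    return posixpath.relpath(track_path, start=playlist_dir)


entry = playlist_entry(
    "/home/user/music/some_awesome_music.mp3",
    "/home/user/playlists/playlist_outfile.m3u",
)
print(entry)
```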

Run with:

python Deej-A.I.py Pickles mp3tovec --playlist playlist_outfile.m3u --inputsong startingsong.mp3

It additionally takes optional parameters:

--nsongs # Number of songs in the playlist

--noise # Amount of noise in the playlist (default 0)

--lookback # Amount of lookback in the playlist (default 3)
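How a lookback and a noise setting might interact when choosing the next track is sketched below. This is one plausible interpretation, not the logic in `Deej-A.I.py`: it assumes the query is the average of the last `lookback` track vectors, that noise is Gaussian, and that tracks are never repeated. The names `next_track` and `make_playlist` are hypothetical.

```python
import math
import random


def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def next_track(library, history, lookback=3, noise=0.0, rng=None):
    """Pick the unplayed track closest to the average of the recent tracks."""
    rng = rng or random.Random()
    recent = history[-max(1, lookback):]
    dim = len(library[recent[0]])
    query = [sum(library[n][i] for n in recent) / len(recent) for i in range(dim)]
    if noise > 0:
        # Perturb the query so that the choice is less predictable.
        query = [x + rng.gauss(0.0, noise) for x in query]
    candidates = [n for n in library if n not in history]
    if not candidates:
        return None
    return max(candidates, key=lambda n: cosine(library[n], query))


def make_playlist(library, first, nsongs, lookback=3, noise=0.0, seed=None):
    rng = random.Random(seed)
    playlist = [first]
    while len(playlist) < nsongs:
        track = next_track(library, playlist, lookback, noise, rng)
        if track is None:
            break
        playlist.append(track)
    return playlist


library = {
    "soul": [1.0, 0.0],
    "funk": [0.8, 0.5],
    "drum_n_bass": [0.4, 0.9],
    "techno": [0.0, 1.0],
}
playlist = make_playlist(library, "soul", nsongs=4)
print(playlist)
```

With no noise the result is deterministic; a larger noise value lets the playlist drift away from the strictly nearest neighbours.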


Alternative AI tools for Deej-AI

Similar Open Source Tools

bidirectional_streaming_ai_voice

This repository contains Python scripts that enable two-way voice conversations with Anthropic Claude, utilizing ElevenLabs for text-to-speech, Faster-Whisper for speech-to-text, and Pygame for audio playback. The tool operates by transcribing human audio using Faster-Whisper, sending the transcription to Anthropic Claude for response generation, and converting the LLM's response into audio using ElevenLabs. The audio is then played back through Pygame, allowing for a seamless and interactive conversation between the user and the AI. The repository includes variations of the main script to support different operating systems and configurations, such as using CPU transcription on Linux or employing the AssemblyAI API instead of Faster-Whisper.

AnnA_Anki_neuronal_Appendix

AnnA is a Python script designed to create filtered decks in optimal review order for Anki flashcards. It uses Machine Learning / AI to ensure semantically linked cards are reviewed far apart. The script helps users manage their daily reviews by creating special filtered decks that prioritize reviewing cards that are most different from the rest. It also allows users to reduce the number of daily reviews while increasing retention and automatically identifies semantic neighbors for each note.

WeeaBlind

Weeablind is a program that uses modern AI speech synthesis, diarization, language identification, and voice cloning to dub multi-lingual media and anime. It aims to create a pleasant alternative for folks facing accessibility hurdles such as blindness, dyslexia, learning disabilities, or simply those that don't enjoy reading subtitles. The program relies on state-of-the-art technologies such as ffmpeg, pydub, Coqui TTS, speechbrain, and pyannote.audio to analyze and synthesize speech that stays in-line with the source video file. Users have the option of dubbing every subtitle in the video, setting the start and end times, dubbing only foreign-language content, or full-blown multi-speaker dubbing with speaking rate and volume matching.

prompt-tuning-playbook

The LLM Prompt Tuning Playbook is a comprehensive guide for improving the performance of post-trained Language Models (LLMs) through effective prompting strategies. It covers topics such as pre-training vs. post-training, considerations for prompting, a rudimentary style guide for prompts, and a procedure for iterating on new system instructions. The playbook emphasizes the importance of clear, concise, and explicit instructions to guide LLMs in generating desired outputs. It also highlights the iterative nature of prompt development and the need for systematic evaluation of model responses.

commonplace-bot

Commonplace Bot is a modern representation of the commonplace book, leveraging modern technological advancements in computation, data storage, machine learning, and networking. It aims to capture, engage, and share knowledge by providing a platform for users to collect ideas, quotes, and information, organize them efficiently, engage with the data through various strategies and triggers, and transform the data into new mediums for sharing. The tool utilizes embeddings and cached transformations for efficient data storage and retrieval, flips traditional engagement rules by engaging with the user, and enables users to alchemize raw data into new forms like art prompts. Commonplace Bot offers a unique approach to knowledge management and creative expression.

Bagatur

Bagatur chess engine is a powerful Java chess engine that can run on Android devices and desktop computers. It supports the UCI protocol and can be easily integrated into chess programs with user interfaces. The engine is available for download on various platforms and has advanced features like SMP (multicore) support and NNUE evaluation function. Bagatur also includes syzygy endgame tablebases and offers various UCI options for customization. The project started as a personal challenge to create a chess program that could defeat a friend, leading to years of development and improvements.

dota2ai

The Dota2 AI Framework project aims to provide a framework for creating AI bots for Dota2, focusing on coordination and teamwork. It offers a LUA sandbox for scripting, allowing developers to code bots that can compete in standard matches. The project acts as a proxy between the game and a web service through JSON objects, enabling bots to perform actions like moving, attacking, casting spells, and buying items. It encourages contributions and aims to enhance the AI capabilities in Dota2 modding.

yet-another-applied-llm-benchmark

Yet Another Applied LLM Benchmark is a collection of diverse tests designed to evaluate the capabilities of language models in performing real-world tasks. The benchmark includes tests such as converting code, decompiling bytecode, explaining minified JavaScript, identifying encoding formats, writing parsers, and generating SQL queries. It features a dataflow domain-specific language for easily adding new tests and has nearly 100 tests based on actual scenarios encountered when working with language models. The benchmark aims to assess whether models can effectively handle tasks that users genuinely care about.

local-chat

LocalChat is a simple, easy-to-set-up, and open-source local AI chat tool that allows users to interact with generative language models on their own computers without transmitting data to a cloud server. It provides a chat-like interface for users to experience ChatGPT-like behavior locally, ensuring GDPR compliance and data privacy. Users can download LocalChat for macOS, Windows, or Linux to chat with open-weight generative language models.

femtoGPT

femtoGPT is a pure Rust implementation of a minimal Generative Pretrained Transformer. It can be used for both inference and training of GPT-style language models using CPUs and GPUs. The tool is implemented from scratch, including tensor processing logic and training/inference code of a minimal GPT architecture. It is a great start for those fascinated by LLMs and wanting to understand how these models work at deep levels. The tool uses random generation libraries, data-serialization libraries, and a parallel computing library. It is relatively fast on CPU and correctness of gradients is checked using the gradient-check method.

aiohomekit

aiohomekit is a Python library that implements the HomeKit protocol for controlling HomeKit accessories using asyncio. It is primarily used with Home Assistant, targeting the same versions of Python and following their code standards. The library is still under development and does not offer API guarantees yet. It aims to match the behavior of real HAP controllers, even when not strictly specified, and works around issues like JSON formatting, boolean encoding, header sensitivity, and TCP packet splitting. aiohomekit is primarily tested with Phillips Hue and Eve Extend bridges via Home Assistant, but is known to work with many more devices. It does not support BLE accessories and is intended for client-side use only.

discourse-chatbot

The discourse-chatbot is an original AI chatbot for Discourse forums that allows users to converse with the bot in posts or chat channels. Users can customize the character of the bot, enable RAG mode for expert answers, search Wikipedia, news, and Google, provide market data, perform accurate math calculations, and experiment with vision support. The bot uses cutting-edge Open AI API and supports Azure and proxy server connections. It includes a quota system for access management and can be used in RAG mode or basic bot mode. The setup involves creating embeddings to make the bot aware of forum content and setting up bot access permissions based on trust levels. Users must obtain an API token from Open AI and configure group quotas to interact with the bot. The plugin is extensible to support other cloud bots and content search beyond the provided set.

Winter

Winter is a UCI chess engine that has competed at top invite-only computer chess events. It is the top-rated chess engine from Switzerland and has a level of play that is super human but below the state of the art reached by large, distributed, and resource-intensive open-source projects like Stockfish and Leela Chess Zero. Winter has relied on many machine learning algorithms and techniques over the course of its development, including certain clustering methods not used in any other chess programs, such as Gaussian Mixture Models and Soft K-Means. As of Winter 0.6.2, the evaluation function relies on a small neural network for more precise evaluations.

iris-llm

iris-llm is a personal project aimed at creating an Intelligent Residential Integration System (IRIS) with a voice interface to local language models or GPT. It provides options for chat engines, text-to-speech engines, speech-to-text engines, feedback sounds, and push-to-talk or wake word features. The tool is still in early development and serves as a tutorial for Python coders interested in working with language models.

WritingAIPaper

WritingAIPaper is a comprehensive guide for beginners on crafting AI conference papers. It covers topics like paper structure, core ideas, framework construction, result analysis, and introduction writing. The guide aims to help novices navigate the complexities of academic writing and contribute to the field with clarity and confidence. It also provides tips on readability improvement, logical strength, defensibility, confusion time reduction, and information density increase. The appendix includes sections on AI paper production, a checklist for final hours, common negative review comments, and advice on dealing with paper rejection.

For similar tasks

nntrainer

NNtrainer is a software framework for training neural network models on devices with limited resources. It enables on-device fine-tuning of neural networks using user data for personalization. NNtrainer supports various machine learning algorithms and provides examples for tasks such as few-shot learning, ResNet, VGG, and product rating. It is optimized for embedded devices and utilizes CBLAS and CUBLAS for accelerated calculations. NNtrainer is open source and released under the Apache License version 2.0.

uvadlc_notebooks

The UvA Deep Learning Tutorials repository contains a series of Jupyter notebooks designed to help understand theoretical concepts from lectures by providing corresponding implementations. The notebooks cover topics such as optimization techniques, transformers, graph neural networks, and more. They aim to teach details of the PyTorch framework, including PyTorch Lightning, with alternative translations to JAX+Flax. The tutorials are integrated as official tutorials of PyTorch Lightning and are relevant for graded assignments and exams.

awesome-ai

Awesome AI is a curated list of artificial intelligence resources including courses, tools, apps, and open-source projects. It covers a wide range of topics such as machine learning, deep learning, natural language processing, robotics, conversational interfaces, data science, and more. The repository serves as a comprehensive guide for individuals interested in exploring the field of artificial intelligence and its applications across various domains.

netsaur

Netsaur is a powerful machine learning library for Deno, offering a lightweight and easy-to-use neural network solution. It is blazingly fast and efficient, providing a simple API for creating and training neural networks. Netsaur can run on both CPU and GPU, making it suitable for serverless environments. With Netsaur, users can quickly build and deploy machine learning models for various applications with minimal dependencies. This library is perfect for both beginners and experienced machine learning practitioners.

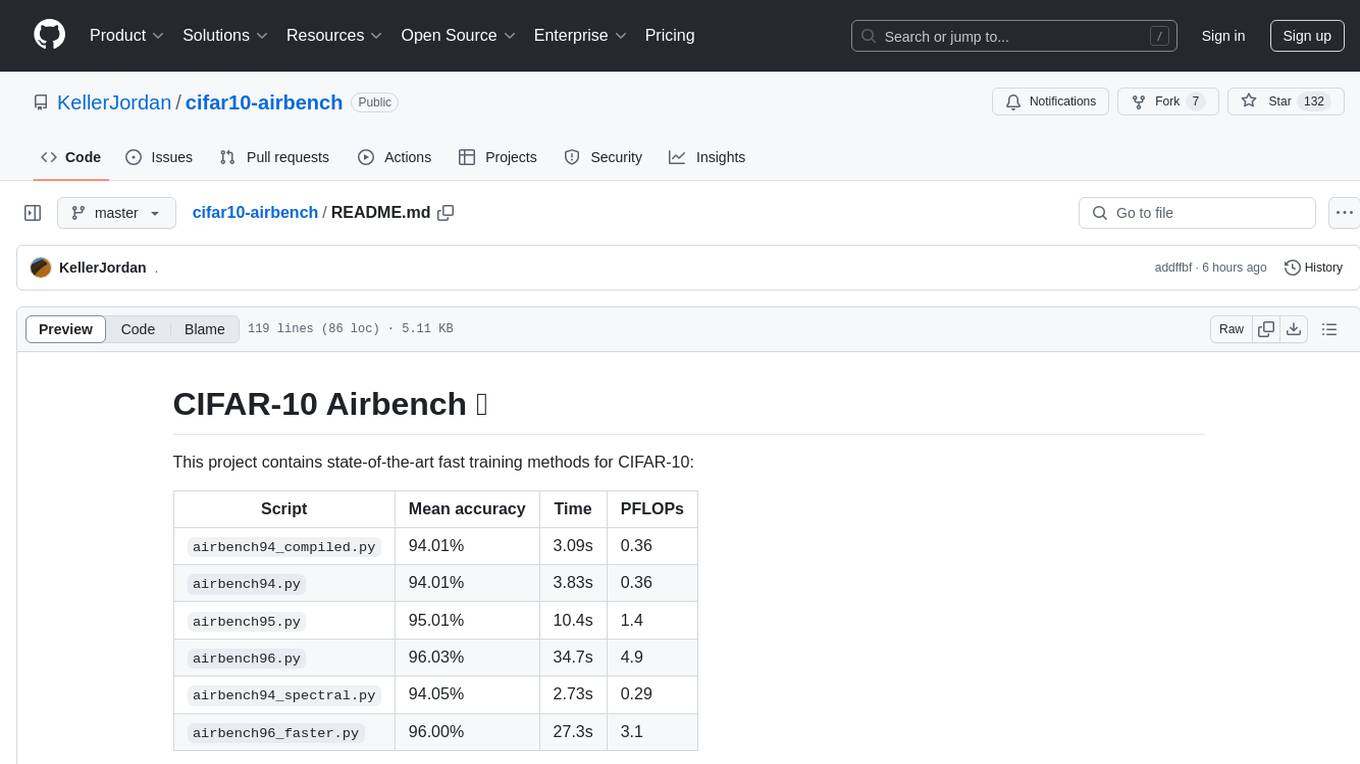

cifar10-airbench

CIFAR-10 Airbench is a project offering fast and stable training baselines for CIFAR-10 dataset, facilitating machine learning research. It provides easily runnable PyTorch scripts for training neural networks with high accuracy levels. The methods used in this project aim to accelerate research on fundamental properties of deep learning. The project includes GPU-accelerated dataloader for custom experiments and trainings, and can be used for data selection and active learning experiments. The training methods provided are faster than standard ResNet training, offering improved performance for research projects.

ai-algorithms

This repository is a work in progress that contains first-principle implementations of groundbreaking AI algorithms using various deep learning frameworks. Each implementation is accompanied by supporting research papers, aiming to provide comprehensive educational resources for understanding and implementing foundational AI algorithms from scratch.

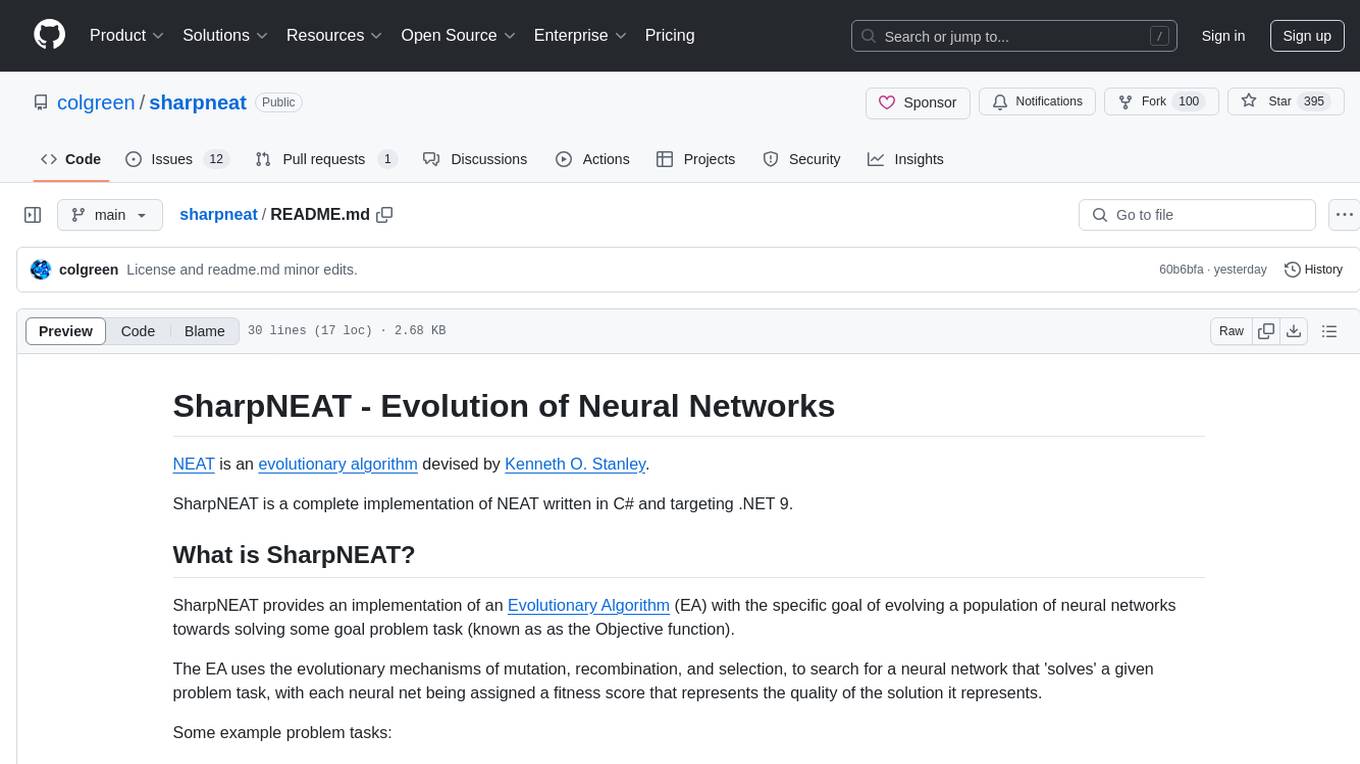

sharpneat

SharpNEAT is a complete implementation of NEAT written in C# and targeting .NET 9. It provides an implementation of an Evolutionary Algorithm (EA) with the specific goal of evolving a population of neural networks towards solving some goal problem task. The framework facilitates research into evolutionary computation and specifically evolution of neural networks, allowing for modular experimentation with genetic coding and evolutionary algorithms.

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models. From images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking), and privacy harms (e.g., identity theft). The goal is to allow researchers to have a baseline of how well their model and entire inference pipeline is doing against different harm categories and to be able to compare that baseline to future iterations of their model. This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements.

tabby

Tabby is a self-hosted AI coding assistant, offering an open-source and on-premises alternative to GitHub Copilot. It boasts several key features: * Self-contained, with no need for a DBMS or cloud service. * OpenAPI interface, easy to integrate with existing infrastructure (e.g Cloud IDE). * Supports consumer-grade GPUs.

spear

SPEAR (Simulator for Photorealistic Embodied AI Research) is a powerful tool for training embodied agents. It features 300 unique virtual indoor environments with 2,566 unique rooms and 17,234 unique objects that can be manipulated individually. Each environment is designed by a professional artist and features detailed geometry, photorealistic materials, and a unique floor plan and object layout. SPEAR is implemented as Unreal Engine assets and provides an OpenAI Gym interface for interacting with the environments via Python.

Magick

Magick is a groundbreaking visual AIDE (Artificial Intelligence Development Environment) for no-code data pipelines and multimodal agents. Magick can connect to other services and comes with nodes and templates well-suited for intelligent agents, chatbots, complex reasoning systems and realistic characters.