TFTMuZeroAgent

Teamfight Tactics AI

Stars: 117

TFTMuZeroAgent is an implementation of a purely artificial intelligence approach to playing Teamfight Tactics, an auto chess game made by Riot. It combines a simulation of TFT Set 4 with the MuZero reinforcement learning algorithm. The project provides a multi-agent PettingZoo environment whose player, pool, and game round classes were designed for this AI project. The simulation omits graphics and sounds but covers all other aspects of the Set 4 game. The codebase is open to contributions and improvements, and additional models can be added to the environment.

README:

Teamfight Tactics is an auto chess game made by Riot.

To my knowledge, this is the first attempt to play Teamfight Tactics with a purely artificial intelligence algorithm.

This implementation uses a simulation of TFT Set 4 based on Avadaa's project.

The player rounds, as well as the player, pool, and game round classes, were designed for this AI project. All aspects of the Set 4 game except graphics and sounds are implemented in our simulation. It is set up as a multi-agent PettingZoo environment.
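The multi-agent setup can be pictured with a toy game that follows the conventions of PettingZoo's parallel API in recent releases. Every live player submits an action in the same `step` call. Observations, rewards, terminations, truncations, and infos are returned as dicts keyed by player name. Everything below is hypothetical and is not taken from the project's Set 4 simulation, including the class name, the gold and strength rules, and the numbers. To run standalone, it also omits `pettingzoo` itself and the observation and action space definitions.

```python
from typing import Dict, List, Optional, Tuple

Obs = Tuple[int, int, int]  # (health, gold, board_strength) for one player


class ToyParallelEnv:
    """Hypothetical toy game with PettingZoo-style parallel, dict-keyed returns.

    Invented rules: each round every player earns 5 gold, action 1 spends all
    gold on board strength, action 0 saves it, and each player then loses
    health equal to the gap between its board and the strongest board.
    """

    def __init__(self, num_players: int = 8, max_rounds: int = 30) -> None:
        self.possible_agents = [f"player_{i}" for i in range(num_players)]
        self.max_rounds = max_rounds
        self.agents: List[str] = []

    def reset(self, seed: Optional[int] = None):
        # seed is accepted for API parity; this toy is fully deterministic.
        self.agents = list(self.possible_agents)
        self.round = 0
        self.health = {a: 100 for a in self.agents}
        self.gold = {a: 0 for a in self.agents}
        self.strength = {a: 0 for a in self.agents}
        return {a: self._observe(a) for a in self.agents}, {a: {} for a in self.agents}

    def _observe(self, agent: str) -> Obs:
        return (self.health[agent], self.gold[agent], self.strength[agent])

    def step(self, actions: Dict[str, int]):
        # All live players act in the same call, which is what makes batching possible.
        for agent, action in actions.items():
            self.gold[agent] += 5
            if action == 1:
                self.strength[agent] += self.gold[agent]
                self.gold[agent] = 0
        self.round += 1
        best = max(self.strength[a] for a in self.agents)
        rewards = {}
        for agent in self.agents:
            damage = best - self.strength[agent]
            self.health[agent] -= damage
            rewards[agent] = -damage
        terminations = {a: self.health[a] <= 0 for a in self.agents}
        truncations = {a: self.round >= self.max_rounds for a in self.agents}
        observations = {a: self._observe(a) for a in self.agents}
        infos = {a: {} for a in self.agents}
        # Finished players are dropped from the live agent list.
        self.agents = [a for a in self.agents if not (terminations[a] or truncations[a])]
        return observations, rewards, terminations, truncations, infos


def play_demo():
    env = ToyParallelEnv(num_players=2, max_rounds=3)
    observations, infos = env.reset(seed=0)
    rewards = {}
    while env.agents:
        # player_0 always spends its gold, player_1 always saves it
        actions = {a: 1 if a == "player_0" else 0 for a in env.agents}
        observations, rewards, terminations, truncations, infos = env.step(actions)
    return env, rewards
```

In the demo, `player_0` spends every round and `player_1` saves. After three rounds, `player_1` has taken 5 + 10 + 15 damage and sits at 70 health, and both players are truncated at the round limit.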

The reinforcement learning algorithm currently in use is MuZero, based on Google's implementation but adapted for this project. Many features of Google's implementation were removed, and I adjusted the search tree so that multiple players can take actions at the same time, which supports batching.
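One way to read "multiple players take actions at the same time to support batching" is that the search keeps one root per player and runs each selection step for all of them together. The sketch below shows that idea in plain Python. It is not the project's C++/Cython tree. The scoring is the pUCT rule from the MuZero paper and Google's published pseudocode (c1 = 1.25, c2 = 19652). MuZero's reward and discount terms and its min-max value normalization are left out, so child values are assumed to already lie in [0, 1]. `Node` and `select_batch` are hypothetical names.

```python
import math
from dataclasses import dataclass, field
from typing import List

# Exploration constants from the MuZero paper / Google's pseudocode.
PB_C_BASE = 19652.0
PB_C_INIT = 1.25


@dataclass
class Node:
    prior: float
    visit_count: int = 0
    value_sum: float = 0.0
    children: List["Node"] = field(default_factory=list)

    def value(self) -> float:
        return self.value_sum / self.visit_count if self.visit_count else 0.0


def ucb_score(parent: Node, child: Node) -> float:
    """pUCT score; assumes child values are already normalized to [0, 1]."""
    pb_c = math.log((parent.visit_count + PB_C_BASE + 1) / PB_C_BASE) + PB_C_INIT
    pb_c *= math.sqrt(parent.visit_count) / (child.visit_count + 1)
    return child.value() + pb_c * child.prior


def select_batch(roots: List[Node]) -> List[int]:
    """Choose one child per root, so every player's search advances in the same step."""
    return [
        max(range(len(root.children)), key=lambda i: ucb_score(root, root.children[i]))
        for root in roots
    ]


player_a = Node(prior=1.0, visit_count=10, children=[
    Node(prior=0.7, visit_count=4, value_sum=2.0),
    Node(prior=0.3, visit_count=6, value_sum=4.2),
])
player_b = Node(prior=1.0, visit_count=10, children=[
    Node(prior=0.1, visit_count=9, value_sum=8.1),
    Node(prior=0.9, visit_count=1, value_sum=0.1),
])
print(select_batch([player_a, player_b]))  # [0, 1]
```

Each root is scored independently. A batched implementation could therefore vectorize the per-player loop in `select_batch` or hand it to a single network call.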

Any and all questions related to this project are welcome at [email protected]

Any and all further improvements to this project will be reviewed, discussed, and very likely accepted.

The environment is separated from the model, so anyone who wants to add another model to this environment is welcome to do so by following the way the current model is set up.
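A minimal interface can illustrate the separation between environment and model. All names here are hypothetical choices for this sketch, not the project's actual classes: `Model`, `select_actions`, `drive_one_step`, and the two baselines. The point is only that the driver depends on the interface, so a new model plugs in without changes on the environment side.

```python
import random
from typing import Dict, List, Protocol, Sequence

Observation = Sequence[float]


class Model(Protocol):
    """Hypothetical interface a model would implement to plug into the environment."""

    def select_actions(
        self,
        observations: Dict[str, Observation],
        legal_actions: Dict[str, List[int]],
    ) -> Dict[str, int]:
        ...


class RandomModel:
    """Baseline that picks a uniformly random legal action for each player."""

    def __init__(self, seed: int = 0) -> None:
        self.rng = random.Random(seed)

    def select_actions(self, observations, legal_actions):
        return {p: self.rng.choice(legal_actions[p]) for p in observations}


class LowestActionModel:
    """Deterministic baseline: always takes the lowest-numbered legal action."""

    def select_actions(self, observations, legal_actions):
        return {p: min(legal_actions[p]) for p in observations}


def drive_one_step(model: Model, observations, legal_actions) -> Dict[str, int]:
    """Environment-side driver that depends only on the Model interface."""
    actions = model.select_actions(observations, legal_actions)
    for player, action in actions.items():
        if action not in legal_actions[player]:
            raise ValueError(f"{player} chose illegal action {action}")
    return actions


observations = {"player_0": [1.0, 0.0], "player_1": [0.0, 1.0]}
legal = {"player_0": [2, 5], "player_1": [3]}
print(drive_one_step(LowestActionModel(), observations, legal))  # {'player_0': 2, 'player_1': 3}
print(drive_one_step(RandomModel(seed=1), observations, legal))
```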

If anyone wants to participate in the project, all are welcome to join the discord at https://discord.gg/cPKwGU7dbU

Python version: ~ Python 3.8

- Create a virtual env to use with the project & activate virtual env

- Install all libraries required by the codebase

Before starting training, you need to build the C++/Cython external packages (GCC 7.5+ is required).

cd core/ctree

bash make.sh
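The build needs GCC 7.5 or newer, so a short pre-flight check can catch an old compiler before `make.sh` fails. This helper is not part of the project. It asks `gcc` for its version with `-dumpfullversion` (available since GCC 7), falls back to `-dumpversion`, and compares the result against 7.5. Some distributions configure `-dumpversion` to print only the major version, and this sketch treats that case conservatively.

```python
import re
import shutil
import subprocess
from typing import Optional, Tuple

MIN_GCC = (7, 5)  # the README's stated minimum


def parse_gcc_version(text: str) -> Optional[Tuple[int, ...]]:
    """Turn output like '7.5.0' or '9' into a tuple of ints."""
    match = re.search(r"(\d+)(?:\.(\d+))?(?:\.(\d+))?", text)
    if match is None:
        return None
    return tuple(int(g) for g in match.groups() if g is not None)


def gcc_ok(version: Tuple[int, ...], minimum: Tuple[int, ...] = MIN_GCC) -> bool:
    # Tuple comparison: (7, 5, 0) >= (7, 5) and (9,) >= (7, 5) are True,
    # while a major-only (7,) compares as older than (7, 5).
    return version >= minimum


def installed_gcc_version() -> Optional[Tuple[int, ...]]:
    gcc = shutil.which("gcc")
    if gcc is None:
        return None
    # -dumpfullversion exists from GCC 7 on; older compilers only know -dumpversion.
    for flag in ("-dumpfullversion", "-dumpversion"):
        result = subprocess.run([gcc, flag], capture_output=True, text=True)
        if result.returncode == 0 and result.stdout.strip():
            return parse_gcc_version(result.stdout)
    return None


def check_gcc() -> str:
    version = installed_gcc_version()
    if version is None or not gcc_ok(version):
        return f"GCC 7.5+ is required to build core/ctree; found {version}"
    return "GCC " + ".".join(map(str, version)) + " is new enough; run: cd core/ctree && bash make.sh"
```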


Alternative AI tools for TFTMuZeroAgent

Similar Open Source Tools


Bagatur

Bagatur chess engine is a powerful Java chess engine that can run on Android devices and desktop computers. It supports the UCI protocol and can be easily integrated into chess programs with user interfaces. The engine is available for download on various platforms and has advanced features like SMP (multicore) support and NNUE evaluation function. Bagatur also includes syzygy endgame tablebases and offers various UCI options for customization. The project started as a personal challenge to create a chess program that could defeat a friend, leading to years of development and improvements.

Winter

Winter is a UCI chess engine that has competed at top invite-only computer chess events. It is the top-rated chess engine from Switzerland and has a level of play that is superhuman but below the state of the art reached by large, distributed, and resource-intensive open-source projects like Stockfish and Leela Chess Zero. Winter has relied on many machine learning algorithms and techniques over the course of its development, including certain clustering methods not used in any other chess programs, such as Gaussian Mixture Models and Soft K-Means. As of Winter 0.6.2, the evaluation function relies on a small neural network for more precise evaluations.

gdx-ai

An artificial intelligence framework written entirely in Java for game development with libGDX. It is a high-performance framework providing common AI techniques used in the game industry, covering movement AI, pathfinding, decision making, and infrastructure. The framework is designed to be used with libGDX but can be used independently. Current features include steering behaviors, formation motion, A* pathfinding, hierarchical pathfinding, behavior trees, state machines, message handling, and scheduling.

skyeye

SkyEye is an AI-powered Ground Controlled Intercept (GCI) bot designed for the flight simulator Digital Combat Simulator (DCS). It serves as an advanced replacement for the in-game E-2, E-3, and A-50 AI aircraft, offering modern voice recognition, natural-sounding voices, real-world brevity and procedures, a wide range of commands, and intelligent battlespace monitoring. The tool uses Speech-To-Text and Text-To-Speech technology, can run locally or on a cloud server, and is production-ready software used by various DCS communities.

Simulator-Controller

Simulator Controller is a modular administration and controller application for Sim Racing, featuring a comprehensive plugin automation framework for external controller hardware. It includes voice chat capable Assistants like Virtual Race Engineer, Race Strategist, Race Spotter, and Driving Coach. The tool offers features for setup, strategy development, monitoring races, and more. Developed in AutoHotkey, it supports various simulation games and integrates with third-party applications for enhanced functionality.

AI4U

AI4U is a tool that provides a framework for modeling virtual reality and game environments. It offers an alternative approach to modeling Non-Player Characters (NPCs) in the Godot game engine. AI4U defines an agent living in an environment and interacting with it through sensors and actuators. Sensors provide data to the agent's brain, while actuators send actions from the agent to the environment. The brain processes the sensor data and makes decisions, selecting one action per time step. AI4U can also be used in other situations, such as modeling environments for artificial intelligence experiments.

linesight

Linesight is a reinforcement learning project focused on advancing AI capabilities in the racing game Trackmania. It aims to push the boundaries of AI performance by utilizing deep learning algorithms to achieve human-level driving and beat world records on official campaign tracks. The project provides an interface to interact with Trackmania Nations Forever programmatically, enabling tasks such as sending inputs, retrieving car states, and capturing screenshots. With a strong emphasis on equality of input devices, Linesight serves as a benchmark for testing various reinforcement learning algorithms in a challenging and dynamic gaming environment.

dota2ai

The Dota2 AI Framework project aims to provide a framework for creating AI bots for Dota2, focusing on coordination and teamwork. It offers a Lua sandbox for scripting, allowing developers to code bots that can compete in standard matches. The project acts as a proxy between the game and a web service through JSON objects, enabling bots to perform actions like moving, attacking, casting spells, and buying items. It encourages contributions and aims to enhance the AI capabilities in Dota2 modding.

trackmania_rl_public

This repository contains the reinforcement learning training code for Trackmania AI with Reinforcement Learning. It is a research work-in-progress project that aims to apply reinforcement learning principles to play Trackmania. The code is constantly evolving and may not be clean or easily usable. The training hyperparameters are intentionally changed in the public repository to encourage understanding of reinforcement learning principles. The project may not receive active support for setup or usage at the moment.

tank-royale

Robocode Tank Royale is a programming game where the goal is to code a bot in the form of a virtual tank to compete against other bots in a virtual battle arena. The player is the programmer of a bot, who will have no direct influence on the game him/herself. Instead, the player must write a program with the logic for the brain of the bot. The program contains instructions to the bot about how it should move, scan for opponent bots, fire its gun, and how it should react to various events occurring during a battle. The name **Robocode** is short for "Robot code," which originates from the original/first version of the game. **Robocode Tank Royale** is the next evolution/version of the game, where bots can participate via the Internet/network. All bots run over a web socket. The game aims to help you learn how to program and improve your programming skills, and have fun while doing it. Robocode is also useful when studying or improving machine learning in a fast-running real-time game. Robocode's battles take place on a "battlefield," where bots fight it out until only one is left, like a Battle Royale game. Hence the name **Tank Royale**. Note that Robocode contains no gore, blood, people, or politics. The battles are simply for the excitement of the competition we appreciate so much.

husky

Husky is a research-focused programming language designed for next-generation computing. It aims to provide a powerful and ergonomic development experience for various tasks, including system level programming, web/native frontend development, parser/compiler tasks, game development, formal verification, machine learning, and more. With a strong type system and support for human-in-the-loop programming, Husky enables users to tackle complex tasks such as explainable image classification, natural language processing, and reinforcement learning. The language prioritizes debugging, visualization, and human-computer interaction, offering agile compilation and evaluation, multiparadigm support, and a commitment to a good ecosystem.

god-level-ai

A drill of scientific methods, processes, algorithms, and systems to build stories & models. An in-depth learning resource for humans. This is a drill for people who aim to be in the top 1% of Data and AI experts. The repository provides a routine for deep and shallow work sessions, covering topics from Python to AI/ML System Design and Personal Branding & Portfolio. It emphasizes the importance of continuous effort and action in the tech field.

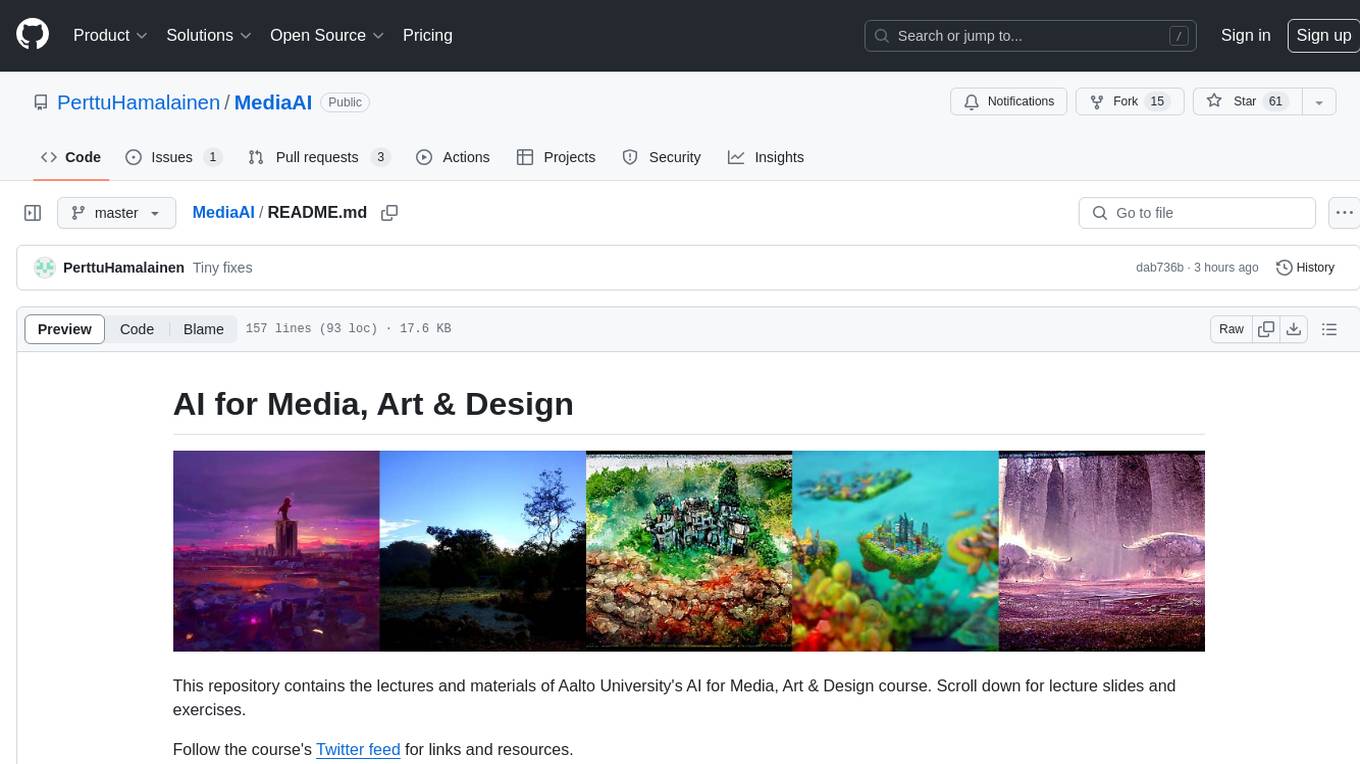

MediaAI

MediaAI is a repository containing lectures and materials for Aalto University's AI for Media, Art & Design course. The course is a hands-on, project-based crash course focusing on deep learning and AI techniques for artists and designers. It covers common AI algorithms & tools, their applications in art, media, and design, and provides hands-on practice in designing, implementing, and using these tools. The course includes lectures, exercises, and a final project based on students' interests. Students can complete the course without programming by creatively utilizing existing tools like ChatGPT and DALL-E. The course emphasizes collaboration, peer-to-peer tutoring, and project-based learning. It covers topics such as text generation, image generation, optimization, and game AI.

learn-generative-ai

Learn Cloud Applied Generative AI Engineering (GenEng) is a course focusing on the application of generative AI technologies in various industries. The course covers topics such as the economic impact of generative AI, the role of developers in adopting and integrating generative AI technologies, and the future trends in generative AI. Students will learn about tools like OpenAI API, LangChain, and Pinecone, and how to build and deploy Large Language Models (LLMs) for different applications. The course also explores the convergence of generative AI with Web 3.0 and its potential implications for decentralized intelligence.

Large-Language-Model-Notebooks-Course

This free, practical course focuses on Large Language Models and their applications, providing hands-on experience with models from OpenAI and the Hugging Face library. The course is divided into three major sections: Techniques and Libraries, Projects, and Enterprise Solutions. It covers topics such as Chatbots, Code Generation, Vector databases, LangChain, Fine Tuning, PEFT Fine Tuning, Soft Prompt tuning, LoRA, QLoRA, Evaluate Models, Knowledge Distillation, and more. Each section contains chapters with lessons supported by notebooks and articles. The course aims to help users build projects and explore enterprise solutions using Large Language Models.

For similar tasks


understand-r1-zero

The 'understand-r1-zero' repository focuses on understanding R1-Zero-like training from a critical perspective. It provides insights into base models and reinforcement learning components, highlighting findings and proposing solutions for biased optimization. The repository offers a minimalist recipe for R1-Zero training, detailing the RL-tuning process and achieving state-of-the-art performance with minimal compute resources. It includes codebase, models, and paper related to R1-Zero training implemented with the Oat framework, emphasizing research-friendly and efficient LLM RL techniques.

tetris-ai

A bot that plays Tetris using deep reinforcement learning. The agent learns to play by training itself with a neural network and Q Learning algorithm. It explores different 'paths' to achieve higher scores and makes decisions based on predicted scores for possible moves. The game state includes attributes like lines cleared, holes, bumpiness, and total height. The agent is implemented in Python using Keras framework with a deep neural network structure. Training involves a replay queue, random sampling, and optimization techniques. Results show the agent's progress in achieving higher scores over episodes.
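The summary lists the board features that make up the Tetris agent's game state. The sketch below computes them under common definitions:

- lines cleared after the move
- holes, meaning empty cells below a filled cell in the same column
- bumpiness, the sum of height differences between adjacent columns
- total height, the sum of all column heights

It is not taken from the tetris-ai repository, and all function names are hypothetical.

```python
from typing import Dict, List

Board = List[List[int]]  # rows from top to bottom; 1 = filled cell, 0 = empty


def clear_full_lines(board: Board):
    """Remove full rows, pad with empty rows at the top, and report how many were cleared."""
    width = len(board[0])
    remaining = [row for row in board if not all(row)]
    cleared = len(board) - len(remaining)
    return [[0] * width for _ in range(cleared)] + remaining, cleared


def column_heights(board: Board) -> List[int]:
    rows = len(board)
    heights = []
    for col in range(len(board[0])):
        # Height is measured from the floor up to the highest filled cell.
        top = next((r for r in range(rows) if board[r][col]), None)
        heights.append(0 if top is None else rows - top)
    return heights


def count_holes(board: Board) -> int:
    holes = 0
    for col in range(len(board[0])):
        seen_block = False
        for row in range(len(board)):
            if board[row][col]:
                seen_block = True
            elif seen_block:
                holes += 1  # empty cell with a filled cell somewhere above it
    return holes


def board_features(board: Board) -> Dict[str, int]:
    board, lines = clear_full_lines(board)
    heights = column_heights(board)
    return {
        "lines_cleared": lines,
        "holes": count_holes(board),
        "bumpiness": sum(abs(a - b) for a, b in zip(heights, heights[1:])),
        "total_height": sum(heights),
    }


example = [
    [0, 0, 0, 0],
    [0, 1, 0, 0],
    [1, 0, 0, 1],
    [1, 1, 1, 1],
]
print(board_features(example))
# {'lines_cleared': 1, 'holes': 1, 'bumpiness': 4, 'total_height': 4}
```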

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud-native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise-level infrastructure that can power any production LLM use case. Here are some use cases for BricksLLM:
- Set LLM usage limits for users on different pricing tiers
- Track LLM usage on a per-user and per-organization basis
- Block or redact requests containing PII
- Improve LLM reliability with failovers, retries and caching
- Distribute API keys with rate limits and cost limits for internal development/production use cases
- Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.