LLM-workshop-2024

A 4-hour coding workshop to understand how LLMs are implemented and used

Stars: 610

LLM-workshop-2024 is a tutorial designed for coders interested in understanding the building blocks of large language models (LLMs), how LLMs work, and how to code them from scratch in PyTorch. The tutorial covers topics such as introduction to LLMs, understanding LLM input data, coding LLM architecture, pretraining LLMs, loading pretrained weights, and finetuning LLMs using open-source libraries. Participants will learn to implement a small GPT-like LLM, including data input pipeline, core architecture components, and pretraining code.

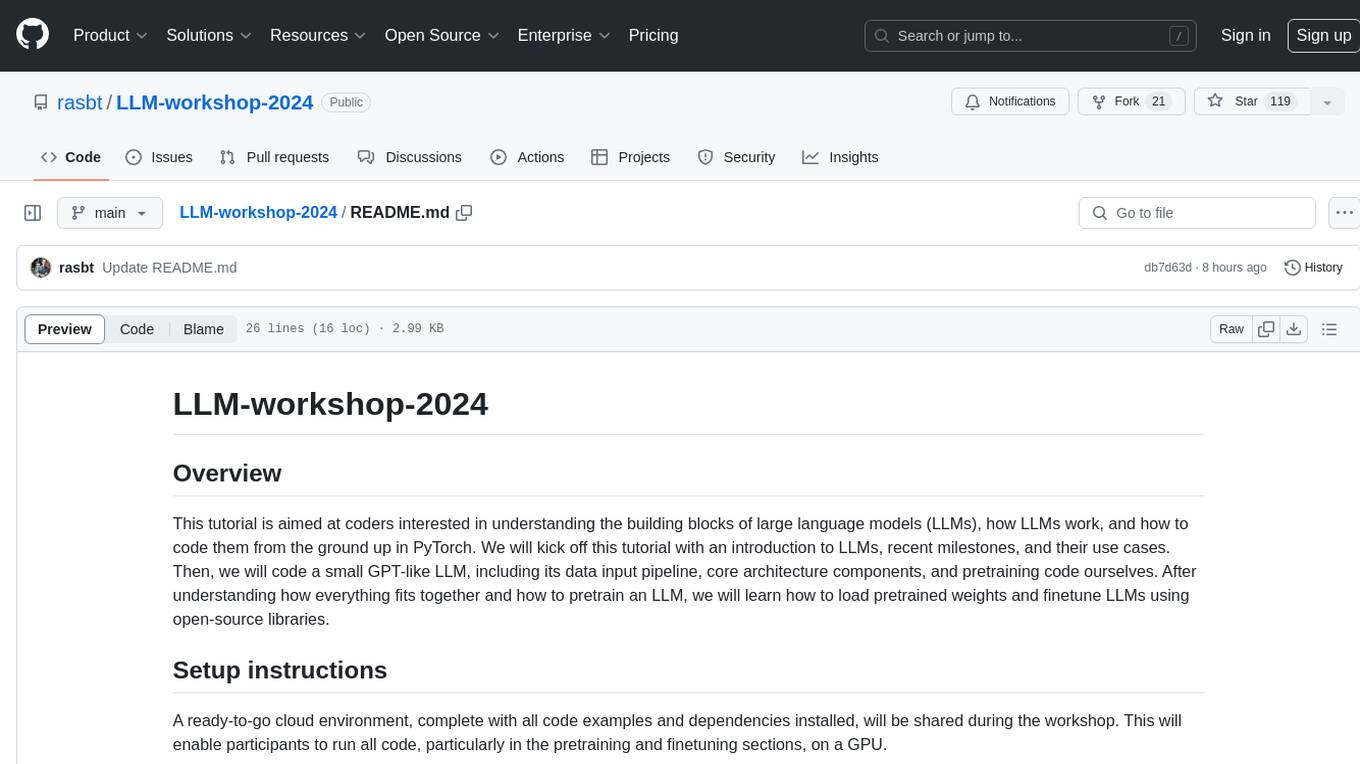

README:

This tutorial is aimed at coders interested in understanding the building blocks of large language models (LLMs), how LLMs work, and how to code them from the ground up in PyTorch. We will kick off this tutorial with an introduction to LLMs, recent milestones, and their use cases. Then, we will code a small GPT-like LLM, including its data input pipeline, core architecture components, and pretraining code ourselves. After understanding how everything fits together and how to pretrain an LLM, we will learn how to load pretrained weights and finetune LLMs using open-source libraries.

The code material is based on my Build a Large Language Model From Scratch book and also uses the LitGPT library.

A ready-to-go cloud environment, complete with all code examples and dependencies installed, is available here. This enables participants to run all code, particularly in the pretraining and finetuning sections, on a GPU.

In addition, see the instructions in the setup folder to set up your computer to run the code locally.

| Title | Description | Folder | |

|---|---|---|---|

| 1 | Introduction to LLMs | An introduction to the workshop introducing LLMs, the topics being covered in this workshop, and setup instructions. | 01_intro |

| 2 | Understanding LLM Input Data | In this section, we are coding the text input pipeline by implementing a text tokenizer and a custom PyTorch DataLoader for our LLM | 02_data |

| 3 | Coding an LLM architecture | In this section, we will go over the individual building blocks of LLMs and assemble them in code. We will not cover all modules in meticulous detail but will focus on the bigger picture and how to assemble them into a GPT-like model. | 03_architecture |

| 4 | Pretraining LLMs | In part 4, we will cover the pretraining process of LLMs and implement the code to pretrain the model architecture we implemented previously. Since pretraining is expensive, we will only pretrain it on a small text sample available in the public domain so that the LLM is capable of generating some basic sentences. | 04_pretraining |

| 5 | Loading pretrained weights | Since pretraining is a long and expensive process, we will now load pretrained weights into our self-implemented architecture. Then, we will introduce the LitGPT open-source library, which provides more sophisticated (but still readable) code for training and finetuning LLMs. We will learn how to load weights of pretrained LLMs (Llama, Phi, Gemma, Mistral) in LitGPT. | 05_weightloading |

| 6 | Finetuning LLMs | This section will introduce LLM finetuning techniques, and we will prepare a small dataset for instruction finetuning, which we will then use to finetune an LLM in LitGPT. | 06_finetuning |

(The code material is based on my Build a Large Language Model From Scratch book and also uses the LitGPT library.)

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for LLM-workshop-2024

Similar Open Source Tools

LLM-workshop-2024

LLM-workshop-2024 is a tutorial designed for coders interested in understanding the building blocks of large language models (LLMs), how LLMs work, and how to code them from scratch in PyTorch. The tutorial covers topics such as introduction to LLMs, understanding LLM input data, coding LLM architecture, pretraining LLMs, loading pretrained weights, and finetuning LLMs using open-source libraries. Participants will learn to implement a small GPT-like LLM, including data input pipeline, core architecture components, and pretraining code.

Large-Language-Model-Notebooks-Course

This practical free hands-on course focuses on Large Language models and their applications, providing a hands-on experience using models from OpenAI and the Hugging Face library. The course is divided into three major sections: Techniques and Libraries, Projects, and Enterprise Solutions. It covers topics such as Chatbots, Code Generation, Vector databases, LangChain, Fine Tuning, PEFT Fine Tuning, Soft Prompt tuning, LoRA, QLoRA, Evaluate Models, Knowledge Distillation, and more. Each section contains chapters with lessons supported by notebooks and articles. The course aims to help users build projects and explore enterprise solutions using Large Language Models.

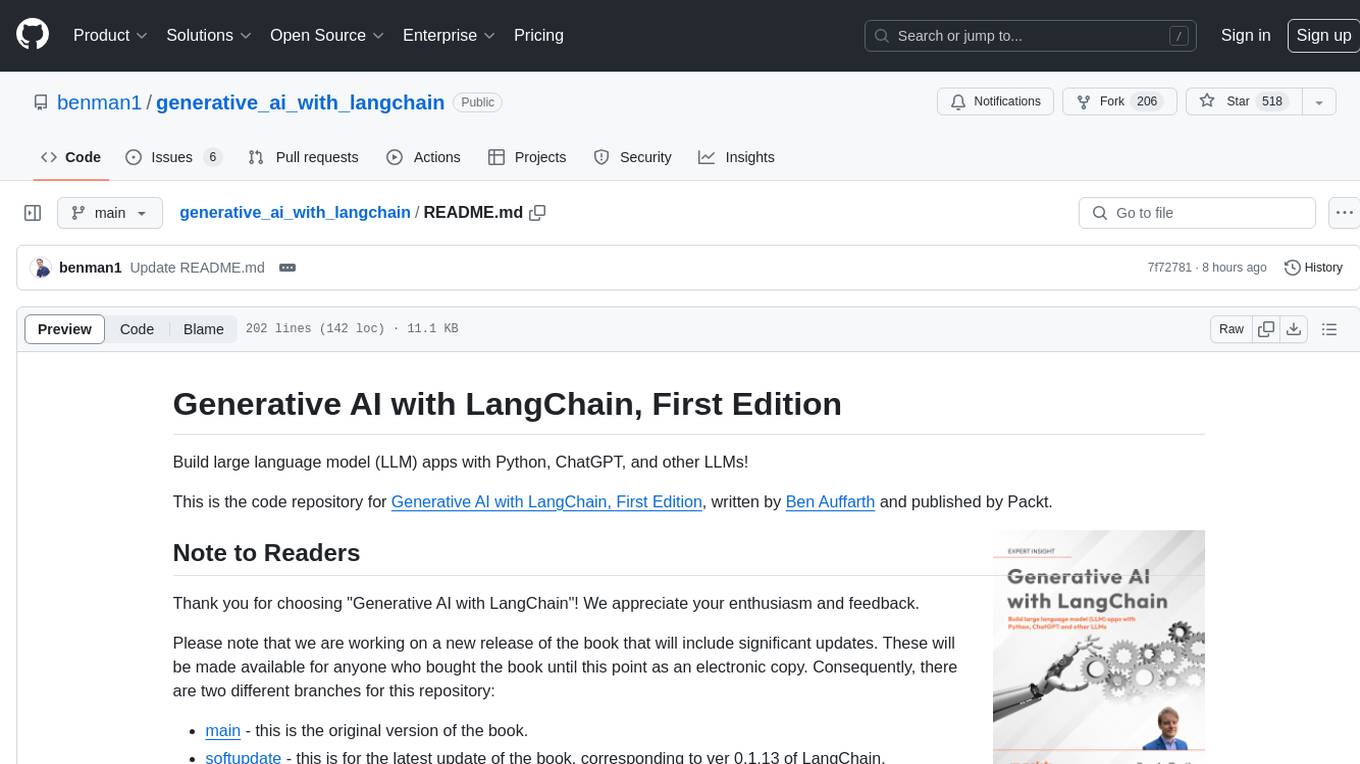

generative_ai_with_langchain

Generative AI with LangChain is a code repository for building large language model (LLM) apps with Python, ChatGPT, and other LLMs. The repository provides code examples, instructions, and configurations for creating generative AI applications using the LangChain framework. It covers topics such as setting up the development environment, installing dependencies with Conda or Pip, using Docker for environment setup, and setting API keys securely. The repository also emphasizes stability, code updates, and user engagement through issue reporting and feedback. It aims to empower users to leverage generative AI technologies for tasks like building chatbots, question-answering systems, software development aids, and data analysis applications.

aitour-interact-with-llms

This repository is for the AI Tour workshop: Interacting with Multimodal models in Azure AI Foundry. The workshop provides a hands-on introduction to core concepts and best practices for interacting with OpenAI models in Azure AI Foundry portal. Participants can innovate with Azure OpenAI's GPT-4o multimodal model to generate text, sound, and images using GPT-4o-mini, DALL-E, and GPT-4o-realtime. The workshop also covers creating AI Agents to enhance user experiences and drive innovation. It includes instructions, resources for continued learning, and information on responsible AI practices.

mlforpublicpolicylab

The Machine Learning for Public Policy Lab is a project-based course focused on solving real-world problems using machine learning in the context of public policy and social good. Students will gain hands-on experience building end-to-end machine learning systems, developing skills in problem formulation, working with messy data, communicating with non-technical stakeholders, model interpretability, and understanding algorithmic bias & disparities. The course covers topics such as project scoping, data acquisition, feature engineering, model evaluation, bias and fairness, and model interpretability. Students will work in small groups on policy projects, with graded components including project proposals, presentations, and final reports.

rag-cookbooks

Welcome to the comprehensive collection of advanced + agentic Retrieval-Augmented Generation (RAG) techniques. This repository covers the most effective advanced + agentic RAG techniques with clear implementations and explanations. It aims to provide a helpful resource for researchers and developers looking to use advanced RAG techniques in their projects, offering ready-to-use implementations and guidance on evaluation methods. The RAG framework addresses limitations of Large Language Models by using external documents for in-context learning, ensuring contextually relevant and accurate responses. The repository includes detailed descriptions of various RAG techniques, tools used, and implementation guidance for each technique.

MediaAI

MediaAI is a repository containing lectures and materials for Aalto University's AI for Media, Art & Design course. The course is a hands-on, project-based crash course focusing on deep learning and AI techniques for artists and designers. It covers common AI algorithms & tools, their applications in art, media, and design, and provides hands-on practice in designing, implementing, and using these tools. The course includes lectures, exercises, and a final project based on students' interests. Students can complete the course without programming by creatively utilizing existing tools like ChatGPT and DALL-E. The course emphasizes collaboration, peer-to-peer tutoring, and project-based learning. It covers topics such as text generation, image generation, optimization, and game AI.

god-level-ai

A drill of scientific methods, processes, algorithms, and systems to build stories & models. An in-depth learning resource for humans. This is a drill for people who aim to be in the top 1% of Data and AI experts. The repository provides a routine for deep and shallow work sessions, covering topics from Python to AI/ML System Design and Personal Branding & Portfolio. It emphasizes the importance of continuous effort and action in the tech field.

claudine

Claudine is an AI agent designed to reason and act autonomously, leveraging the Anthropic API, Unix command line tools, HTTP, local hard drive data, and internet data. It can administer computers, analyze files, implement features in source code, create new tools, and gather contextual information from the internet. Users can easily add specialized tools. Claudine serves as a blueprint for implementing complex autonomous systems, with potential for customization based on organization-specific needs. The tool is based on the anthropic-kotlin-sdk and aims to evolve into a versatile command line tool similar to 'git', enabling branching sessions for different tasks.

dialog

Dialog is an API-focused tool designed to simplify the deployment of Large Language Models (LLMs) for programmers interested in AI. It allows users to deploy any LLM based on the structure provided by dialog-lib, enabling them to spend less time coding and more time training their models. The tool aims to humanize Retrieval-Augmented Generative Models (RAGs) and offers features for better RAG deployment and maintenance. Dialog requires a knowledge base in CSV format and a prompt configuration in TOML format to function effectively. It provides functionalities for loading data into the database, processing conversations, and connecting to the LLM, with options to customize prompts and parameters. The tool also requires specific environment variables for setup and configuration.

uvadlc_notebooks

The UvA Deep Learning Tutorials repository contains a series of Jupyter notebooks designed to help understand theoretical concepts from lectures by providing corresponding implementations. The notebooks cover topics such as optimization techniques, transformers, graph neural networks, and more. They aim to teach details of the PyTorch framework, including PyTorch Lightning, with alternative translations to JAX+Flax. The tutorials are integrated as official tutorials of PyTorch Lightning and are relevant for graded assignments and exams.

ai-powered-search

AI-Powered Search provides code examples for the book 'AI-Powered Search' by Trey Grainger, Doug Turnbull, and Max Irwin. The book teaches modern machine learning techniques for building search engines that continuously learn from users and content to deliver more intelligent and domain-aware search experiences. It covers semantic search, retrieval augmented generation, question answering, summarization, fine-tuning transformer-based models, personalized search, machine-learned ranking, click models, and more. The code examples are in Python, leveraging PySpark for data processing and Apache Solr as the default search engine. The repository is open source under the Apache License, Version 2.0.

TagUI

TagUI is an open-source RPA tool that allows users to automate repetitive tasks on their computer, including tasks on websites, desktop apps, and the command line. It supports multiple languages and offers features like interacting with identifiers, automating data collection, moving data between TagUI and Excel, and sending Telegram notifications. Users can create RPA robots using MS Office Plug-ins or text editors, run TagUI on the cloud, and integrate with other RPA tools. TagUI prioritizes enterprise security by running on users' computers and not storing data. It offers detailed logs, enterprise installation guides, and support for centralised reporting.

AliceVision

AliceVision is a photogrammetric computer vision framework which provides a 3D reconstruction pipeline. It is designed to process images from different viewpoints and create detailed 3D models of objects or scenes. The framework includes various algorithms for feature detection, matching, and structure from motion. AliceVision is suitable for researchers, developers, and enthusiasts interested in computer vision, photogrammetry, and 3D modeling. It can be used for applications such as creating 3D models of buildings, archaeological sites, or objects for virtual reality and augmented reality experiences.

VectorHub

VectorHub is a free and open-sourced learning hub for people interested in adding vector retrieval to their ML stack. On VectorHub you will find practical resources to help you create MVPs with easy-to-follow learning materials, solve use case specific challenges in vector retrieval, get confident in taking their MVPs to production and making them actually useful, and learn about vendors in the space and select the ones that fit their use-case.

Large-Language-Models

Large Language Models (LLM) are used to browse the Wolfram directory and associated URLs to create the category structure and good word embeddings. The goal is to generate enriched prompts for GPT, Wikipedia, Arxiv, Google Scholar, Stack Exchange, or Google search. The focus is on one subdirectory: Probability & Statistics. Documentation is in the project textbook `Projects4.pdf`, which is available in the folder. It is recommended to download the document and browse your local copy with Chrome, Edge, or other viewers. Unlike on GitHub, you will be able to click on all the links and follow the internal navigation features. Look for projects related to NLP and LLM / xLLM. The best starting point is project 7.2.2, which is the core project on this topic, with references to all satellite projects. The project textbook (with solutions to all projects) is the core document needed to participate in the free course (deep tech dive) called **GenAI Fellowship**. For details about the fellowship, follow the link provided. An uncompressed version of `crawl_final_stats.txt.gz` is available on Google drive, which contains all the crawled data needed as input to the Python scripts in the XLLM5 and XLLM6 folders.

For similar tasks

LLM-workshop-2024

LLM-workshop-2024 is a tutorial designed for coders interested in understanding the building blocks of large language models (LLMs), how LLMs work, and how to code them from scratch in PyTorch. The tutorial covers topics such as introduction to LLMs, understanding LLM input data, coding LLM architecture, pretraining LLMs, loading pretrained weights, and finetuning LLMs using open-source libraries. Participants will learn to implement a small GPT-like LLM, including data input pipeline, core architecture components, and pretraining code.

litgpt

LitGPT is a command-line tool designed to easily finetune, pretrain, evaluate, and deploy 20+ LLMs **on your own data**. It features highly-optimized training recipes for the world's most powerful open-source large-language-models (LLMs).

femtoGPT

femtoGPT is a pure Rust implementation of a minimal Generative Pretrained Transformer. It can be used for both inference and training of GPT-style language models using CPUs and GPUs. The tool is implemented from scratch, including tensor processing logic and training/inference code of a minimal GPT architecture. It is a great start for those fascinated by LLMs and wanting to understand how these models work at deep levels. The tool uses random generation libraries, data-serialization libraries, and a parallel computing library. It is relatively fast on CPU and correctness of gradients is checked using the gradient-check method.

LLMForEverybody

LLMForEverybody is a comprehensive repository covering various aspects of large language models (LLMs) including pre-training, architecture, optimizers, activation functions, attention mechanisms, tokenization, parallel strategies, training frameworks, deployment, fine-tuning, quantization, GPU parallelism, prompt engineering, agent design, RAG architecture, enterprise deployment challenges, evaluation metrics, and current hot topics in the field. It provides detailed explanations, tutorials, and insights into the workings and applications of LLMs, making it a valuable resource for researchers, developers, and enthusiasts interested in understanding and working with large language models.

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models. From images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking), and privacy harms (e.g., identity theft). The goal is to allow researchers to have a baseline of how well their model and entire inference pipeline is doing against different harm categories and to be able to compare that baseline to future iterations of their model. This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements.

tabby

Tabby is a self-hosted AI coding assistant, offering an open-source and on-premises alternative to GitHub Copilot. It boasts several key features: * Self-contained, with no need for a DBMS or cloud service. * OpenAPI interface, easy to integrate with existing infrastructure (e.g Cloud IDE). * Supports consumer-grade GPUs.

spear

SPEAR (Simulator for Photorealistic Embodied AI Research) is a powerful tool for training embodied agents. It features 300 unique virtual indoor environments with 2,566 unique rooms and 17,234 unique objects that can be manipulated individually. Each environment is designed by a professional artist and features detailed geometry, photorealistic materials, and a unique floor plan and object layout. SPEAR is implemented as Unreal Engine assets and provides an OpenAI Gym interface for interacting with the environments via Python.

Magick

Magick is a groundbreaking visual AIDE (Artificial Intelligence Development Environment) for no-code data pipelines and multimodal agents. Magick can connect to other services and comes with nodes and templates well-suited for intelligent agents, chatbots, complex reasoning systems and realistic characters.