octelium

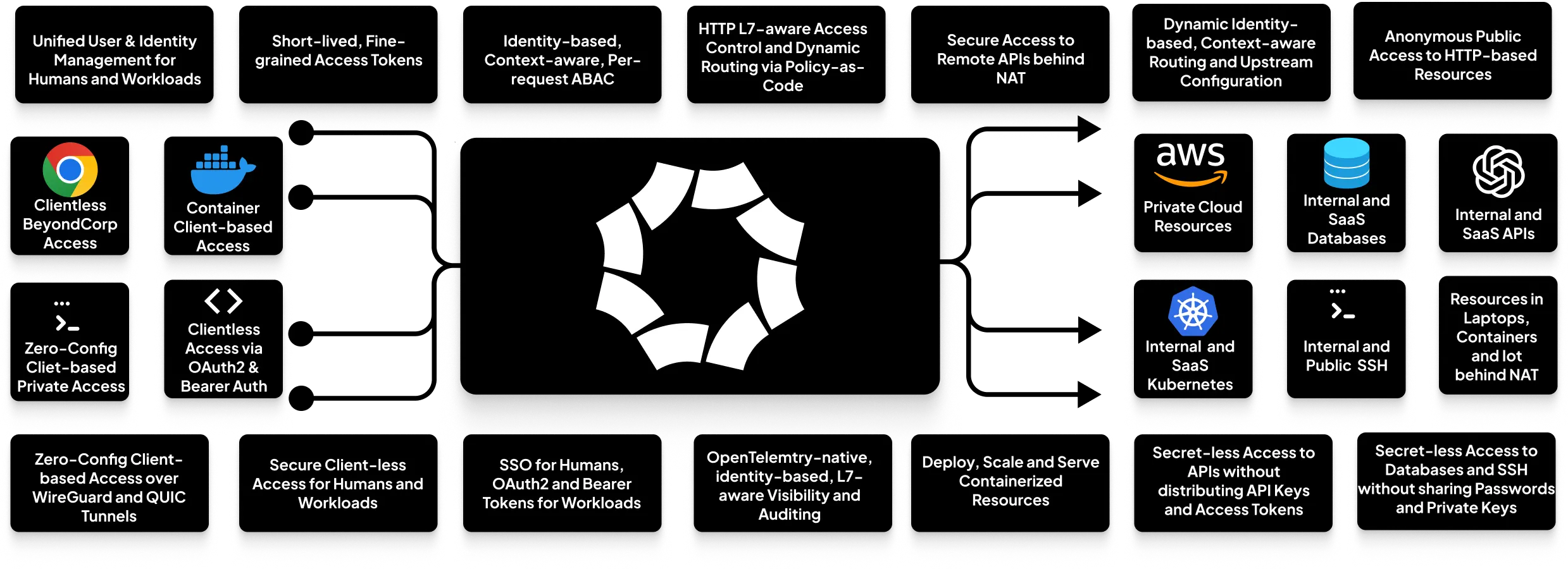

A next-gen FOSS self-hosted unified zero trust secure access platform that can operate as a remote access VPN, a ZTNA/BeyondCorp architecture, API/AI gateway, a PaaS, an infrastructure for MCP & A2A architectures or even as an ngrok-alternative and a homelab infrastructure.

Stars: 2313

Octelium is a free and open source, self-hosted, unified zero trust secure access platform that operates as a modern zero-config remote access VPN, a comprehensive Zero Trust Network Access (ZTNA)/BeyondCorp platform, an ngrok/Cloudflare Tunnel alternative, an API gateway, an AI/LLM gateway, a PaaS-like platform, a Kubernetes gateway/ingress, and a homelab infrastructure. It provides scalable zero trust architecture for identity-based, application-layer aware secure access via private client-based access over WireGuard/QUIC tunnels and public clientless access, with context-aware access control. Octelium offers dynamic secretless access, fine-grained access control, identity-based routing, continuous strong authentication, OpenTelemetry-native auditing, passwordless SSH, effortless deployment of containerized applications, centralized management, and more. It is open source, designed for self-hosting, and provides a commercial license option for businesses.

README:

- What is Octelium?

- Use Cases

- Main Features

- Try Octelium in a Codespace

- Install CLI Tools

- Install your First Cluster

- Useful Links

- License

- Support

- Frequently Asked Questions

- Legal

Octelium is a free and open source, self-hosted, unified zero trust secure access platform that is flexible enough to operate as a modern zero-config remote access VPN, a comprehensive Zero Trust Network Access (ZTNA)/BeyondCorp platform, an ngrok/Cloudflare Tunnel alternative, an API gateway, an AI/LLM gateway, a scalable infrastructure for access and deployment to build MCP gateways and A2A architectures/meshes, a PaaS-like platform, a Kubernetes gateway/ingress and even as a homelab infrastructure.

Octelium provides a scalable zero trust architecture (ZTA) for identity-based, application-layer (L7) aware secretless secure access via both private client-based access over WireGuard/QUIC tunnels as well as public clientless access, for both humans and workloads, to any private/internal resource behind NAT in any environment as well as to publicly protected resources such as SaaS APIs and databases, via context-aware access control on a per-request basis.

Octelium is a versatile platform that can serve as a complete or partial solution for many different needs. Here are some of the key use cases:

- Modern Remote Access VPN: A zero-trust, layer-7 aware alternative to commercial remote access/corporate VPNs like OpenVPN Access Server, Twingate, and Tailscale, providing both zero-config client access over WireGuard/QUIC and client-less access via dynamic, identity-based, context-aware Policies.

- Unified ZTNA/BeyondCorp Architecture: A comprehensive Zero Trust Network Access (ZTNA) platform, similar to Cloudflare Access, Google BeyondCorp, or Teleport.

- Self-Hosted Secure Tunnels: A programmable infrastructure for secure tunnels and reverse proxies for both secure identity-based as well as anonymous clientless access, offering a powerful, self-hosted alternative to ngrok or Cloudflare Tunnel. You can see an example here.

- Self-Hosted PaaS: A scalable platform to deploy, manage, and host your containerized applications, similar to Vercel or Netlify. See an example for Next.js/Vite apps.

- API Gateway: A self-hosted, scalable, and secure API gateway for microservices, providing a robust alternative to Kong Gateway or Apigee. You can see an example here.

- AI Gateway: A scalable AI gateway with identity-based access control, routing, and visibility for any AI LLM provider. See an example here.

- Unified Zero Trust Access to SaaS APIs: Provides secretless access to SaaS APIs for both teams and workloads, eliminating the need to manage and distribute long-lived and over-privileged API keys. See a generic example here, AWS Lambda here, and AWS S3 here.

- MCP Gateways and A2A-based Architectures: A secure infrastructure for Model Context Protocol (MCP) gateways and Agent2Agent Protocol (A2A)-based architectures that provides identity management, authentication over standard OAuth2 client credentials and bearer authentication, secure remote access and deployment, as well as identity-based, L7-aware access control via policy-as-code and visibility (see an example here).

- Homelab: A unified self-hosted Homelab infrastructure to connect and provide secure remote access to all your resources behind NAT from anywhere (e.g. all your devices including your laptop, IoT, cloud providers, Raspberry Pis, routers, etc...) as well as a secure deployment platform to deploy and privately as well as publicly host your websites, blogs, APIs or to remotely test heavy containers (e.g. LLM runtimes such as Ollama, databases such as ClickHouse and Elasticsearch, Pi-hole, etc...). See examples for remote VSCode, and Pi-hole.

- Kubernetes Ingress Alternative: A more advanced alternative to standard Kubernetes ingress controllers and load balancers, allowing you to route to any Kubernetes service via dynamic, L7-aware policy-as-code.

- A Modern, Unified Zero Trust Architecture: Built on a scalable architecture of identity-aware proxies to control access at the application layer (L7), Octelium unifies access for humans and workloads to both private and protected public resources. It supports both zero-config VPN-like client-based access over WireGuard/QUIC and client-less BeyondCorp access, all built on top of Kubernetes for automatic scalability (read in detail about how Octelium works here).

- Dynamic Secretless Access: Octelium's layer-7 awareness enables Users to seamlessly access resources protected by application-layer credentials without exposing, managing and distributing such secrets (read more here). This works for HTTP APIs without sharing API keys and access tokens, SSH servers without sharing passwords and private keys, Kubernetes clusters, PostgreSQL/MySQL databases as well as any L7 protocol protected by mTLS.

- Modern, Dynamic, Fine-grained Access Control: Octelium provides a modern, centralized, scalable, fine-grained, dynamic, context-aware, layer-7 aware, attribute-based access control (ABAC) system that operates on a per-request basis (read more here), with policy-as-code using CEL and OPA (Open Policy Agent). Octelium has no notion of an "admin" user, enforcing zero standing privileges by default.

- Context-aware, identity-based, L7-aware dynamic configuration and routing: Route to different upstreams and use different credentials representing different upstream contexts and accounts, using policy-as-code with CEL and OPA on a per-request basis. You can read in detail about dynamic configuration here.

- Continuous Strong Authentication: A unified authentication system for both human and workload Users, supporting any web identity provider (IdP) that uses OpenID Connect or SAML 2.0 as well as GitHub OAuth2 (read more here). It also allows for secretless authentication for workloads via OIDC-based assertions (read more here).

- OpenTelemetry-native Auditing and Visibility: Real-time, identity-based, L7-aware visibility and access logging. Every request is logged and exported to your OpenTelemetry OTLP receivers for seamless integration with your log management and SIEM tools.

- Effortless, Passwordless SSH: Octelium clients can serve SSH even without root access, enabling you to SSH into containers, IoT devices, or other hosts that can't run an SSH server (read more here).

- Effortlessly deploy, scale and secure access to your containerized applications as Services: Octelium provides out-of-the-box PaaS-like capabilities to deploy, manage and scale your containerized applications and serve them as Services, offering secure client-based private access, client-less public BeyondCorp access as well as public anonymous access. You can read in detail about managed containers here.

- Centralized and Declarative Management: Manage your Octelium Clusters like Kubernetes with declarative management using the `octeliumctl` CLI (read this quick management guide here). You can store your Cluster configurations in Git for easy reproduction and GitOps workflows.

- No change in your infrastructure is needed: Your upstream resources don't need to be aware of Octelium at all. They can be listening to any behind-NAT private network, even to localhost. No public gateways, no need to open ports behind firewalls to serve your resources wherever they are.

- Avoids Traditional VPN Networking Problems: Octelium’s client-based networking eliminates a whole class of networking and routing issues that traditional VPNs suffer from. It supports dual-stack private networking regardless of the support at the upstreams and without having to deal with the pain and inconsistency of NAT64/DNS64. It provides unified private DNS using your own domain. It simultaneously supports WireGuard tunnels (kernel, TUN as well as unprivileged implementations via gVisor) and, experimentally, QUIC tunnels (both TUN and unprivileged via gVisor) via a lightweight zero-config client that can run in any Linux, macOS or Windows environment as well as in container environments (e.g. Kubernetes sidecar containers for your workloads).

- Open source and designed for self-hosting: Octelium is fully open source and it is designed for single-tenant self-hosting. There is no proprietary cloud-based control plane, nor is this some crippled demo open source version of a separate fully functional SaaS paid service. You can host it on top of a single-node Kubernetes cluster running on a cheap cloud VM/VPS and you can also host it on scalable production cloud-based or on-prem multi-node Kubernetes installations with no vendor lock-in.
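As a rough illustration of the per-request, attribute-based access control and secretless access described in the features above, the following Python sketch models an identity-aware proxy that evaluates a policy for every request and, only when the request is allowed, injects the upstream credential itself. This is a conceptual sketch and not Octelium's implementation or API: the `Request` type, the `POLICY` rules, the `UPSTREAM_SECRETS` store, the group names and the `handle` function are all hypothetical, the deny-by-default behavior is an assumption, and real Octelium Policies are written as policy-as-code with CEL or OPA rather than Python lambdas.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional


@dataclass(frozen=True)
class Request:
    """Hypothetical per-request context seen by an identity-aware proxy."""
    user: str
    groups: frozenset
    service: str
    method: str
    path: str
    headers: dict = field(default_factory=dict)


# Hypothetical rules as (effect, predicate) pairs; the first match decides.
Rule = tuple[str, Callable[[Request], bool]]

POLICY: list[Rule] = [
    ("DENY", lambda r: r.method == "DELETE" and "ops" not in r.groups),
    ("ALLOW", lambda r: r.service == "billing-api" and "finance" in r.groups),
]

# Hypothetical secret store held by the proxy; clients never see these values.
UPSTREAM_SECRETS = {"billing-api": "upstream-api-key-placeholder"}


def is_allowed(req: Request) -> bool:
    """Evaluate the policy for this single request; deny if nothing matches."""
    for effect, predicate in POLICY:
        if predicate(req):
            return effect == "ALLOW"
    return False  # assumption: deny by default


def handle(req: Request) -> Optional[dict]:
    """Return the headers to forward upstream, or None if access is denied."""
    if not is_allowed(req):
        return None
    # Drop any client-supplied credential, then inject the upstream one.
    forwarded = {
        k: v for k, v in req.headers.items() if k.lower() != "authorization"
    }
    forwarded["Authorization"] = f"Bearer {UPSTREAM_SECRETS[req.service]}"
    return forwarded
```

The point of the design is that the decision is made again for each request from the caller's identity attributes, and the application-layer secret lives only on the proxy side, so it never has to be distributed to users or workloads.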

Read this quick guide here to install a single-node Octelium Cluster on top of any cheap cloud VM/VPS instance (e.g. DigitalOcean Droplet, Hetzner server, AWS EC2, Vultr, etc...) or a local Linux machine/Linux VM inside a MacOS/Windows machine with at least 2GB of RAM and 20GB of disk storage running a recent Linux distribution (Ubuntu 24.04 LTS or later, Debian 12+, etc...), which is good enough for most development, personal or undemanding production use cases that do not require highly available multi-node Clusters. Once you SSH into your VPS/VM as root, you can install the Cluster as follows:

```bash
curl -o install-demo-cluster.sh https://octelium.com/install-demo-cluster.sh
chmod +x install-demo-cluster.sh

# Replace <DOMAIN> with your actual domain
./install-demo-cluster.sh --domain <DOMAIN>
```

Once the Cluster is installed, you can start managing it as shown in the guide here.
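Before running the installer, it can help to confirm that the machine meets the minimums mentioned above (at least 2GB of RAM and 20GB of disk storage). The following Python sketch is one way to do that; it is not part of the Octelium tooling. The function names are hypothetical, and interpreting "GB" as 10^9 bytes and measuring total (not free) disk size are assumptions made for this example.

```python
import os
import shutil

# Minimums taken from the guide text: 2GB of RAM and 20GB of disk storage.
# Treating "GB" as 10**9 bytes is an assumption made for this sketch.
MIN_RAM_BYTES = 2 * 10**9
MIN_DISK_BYTES = 20 * 10**9


def check_minimums(ram_bytes: int, disk_bytes: int) -> list[str]:
    """Return human-readable problems; an empty list means both checks pass."""
    problems = []
    if ram_bytes < MIN_RAM_BYTES:
        problems.append(f"RAM: {ram_bytes / 10**9:.1f} GB < 2 GB")
    if disk_bytes < MIN_DISK_BYTES:
        problems.append(f"disk: {disk_bytes / 10**9:.1f} GB < 20 GB")
    return problems


def detect_resources(path: str = "/") -> tuple[int, int]:
    """Read total physical RAM and total disk size (Linux-oriented)."""
    ram = os.sysconf("SC_PAGE_SIZE") * os.sysconf("SC_PHYS_PAGES")
    disk = shutil.disk_usage(path).total
    return ram, disk
```

On a Linux host, `check_minimums(*detect_resources())` returns an empty list when both minimums are met. Note that a VM sold as "2GB" may report slightly less usable memory, so a marginal failure here is a hint to look closer rather than a hard verdict.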

You can install and manage a demo Octelium Cluster inside a GitHub Codespace without having to install it on a real VM/machine/Kubernetes cluster and simply use it as a playground to get familiar with how the Cluster is managed. Visit the playground GitHub repository here and run it in a Codespace then follow the README instructions there to install the Cluster and start interacting with it.

You can see all available options here. You can quickly install the CLIs of the pre-built binaries as follows:

For Linux and macOS:

```bash
curl -fsSL https://octelium.com/install.sh | bash
```

For Windows in PowerShell:

```powershell
iwr https://octelium.com/install.ps1 -useb | iex
```

- What is Octelium?

- What is Zero Trust?

- How Octelium works

- First Steps to Managing the Cluster

- Policies and Access Control

- Secretless Access

- Connecting to Clusters

Octelium is free and open source software:

- The Client-side components are licensed with the Apache 2.0 License. This includes:
  - The code of the `octelium`, `octeliumctl` and `octops` CLIs as seen in the `/client` directory.
  - The `octelium-go` Golang SDK and the Golang protobuf APIs in the `/apis` directory.
  - The `/pkg` directory.

- The Cluster-side components (all the components in the `/cluster` directory) are licensed with the GNU Affero General Public (AGPLv3) License. Octelium Labs also provides a commercial license as an alternative for businesses that do not want to comply with the AGPLv3 license (read more here).

- What is the current status of the project?

  It's now in public beta. It's basically v1.0 but with bugs. The architecture, main features and APIs had been stabilized before the project was open sourced and made publicly available.

- Why are there so few commits for such a big project?

  Octelium has been in active development since early 2020 with nearly 9000 manual commits but was only open sourced in May 2025 in a new repository when it became mature and stable enough.

- Who's behind this project?

  Octelium, so far, has been developed by George Badawi, the sole owner of Octelium Labs LLC. See how to contact me at https://octelium.com/contact. You can also email me directly at [email protected].

- Is Octelium a remote access VPN?

  Octelium can seamlessly operate as a zero-config remote WireGuard/QUIC-based access/corporate VPN from a layer-3 perspective. It is, however, a modern zero trust architecture that's based on identity-aware proxies (read about how Octelium works here) instead of operating at layer-3 to provide dynamic fine-grained application-layer (L7) aware access control, dynamic configuration and routing, secretless access and visibility. You can read more about the main features here.

- Why is Octelium FOSS? What's the catch?

  Octelium is totally free and open source software. It is designed to be fully self-hosted and it has no hidden "server-side" components, nor does it impose artificial limits (e.g. SSO tax). Octelium isn't released as yet another "fake" open source software project that only provides very limited functionality or makes your life hard trying to self-host it in order to force you to eventually give up and switch to a separate fully functional paid SaaS version. In other words, Octelium Labs LLC is not a SaaS company. It is not a VC-funded company either, and as of today it has no external funding whatsoever besides that from its sole owner. Therefore, you might ask: what's the catch? What's the business model? The answer is that the project is funded by a mix of dedicated support for businesses, alternative commercial licensing for the AGPLv3-licensed components, and additional enterprise-tier proprietary features and integrations (e.g. SIEM integrations for Splunk and similar vendors, SCIM 2.0/directory syncing from Microsoft Entra ID and Okta, managed Secret encryption at rest backed by HashiCorp Vault and similar vault providers, EDR integrations, etc...). You can read more here.

- Is this project open to external contributions?

  You are more than welcome to report bugs and request features. However, the project is not currently open to external contributions. In other words, pull requests will not be accepted. This, however, might change in the foreseeable future.

- How to report security-related bugs and vulnerabilities?

  Email us at [email protected].

Octelium and Octelium logo are trademarks of Octelium Labs, LLC.

WireGuard is a registered trademark of Jason A. Donenfeld.

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for octelium

Similar Open Source Tools

octelium

Octelium is a free and open source, self-hosted, unified zero trust secure access platform that operates as a modern zero-config remote access VPN, a comprehensive Zero Trust Network Access (ZTNA)/BeyondCorp platform, an ngrok/Cloudflare Tunnel alternative, an API gateway, an AI/LLM gateway, a PaaS-like platform, a Kubernetes gateway/ingress, and a homelab infrastructure. It provides scalable zero trust architecture for identity-based, application-layer aware secure access via private client-based access over WireGuard/QUIC tunnels and public clientless access, with context-aware access control. Octelium offers dynamic secretless access, fine-grained access control, identity-based routing, continuous strong authentication, OpenTelemetry-native auditing, passwordless SSH, effortless deployment of containerized applications, centralized management, and more. It is open source, designed for self-hosting, and provides a commercial license option for businesses.

k8sgateway

K8sGateway is a feature-rich, fast, and flexible Kubernetes-native API gateway built on Envoy proxy and Kubernetes Gateway API. It excels in function-level routing, supports legacy apps, microservices, and serverless. It offers robust discovery capabilities, seamless integration with open-source projects, and supports hybrid applications with various technologies, architectures, protocols, and clouds.

AgentUp

AgentUp is an active development tool that provides a developer-first agent framework for creating AI agents with enterprise-grade infrastructure. It allows developers to define agents with configuration, ensuring consistent behavior across environments. The tool offers secure design, configuration-driven architecture, extensible ecosystem for customizations, agent-to-agent discovery, asynchronous task architecture, deterministic routing, and MCP support. It supports multiple agent types like reactive agents and iterative agents, making it suitable for chatbots, interactive applications, research tasks, and more. AgentUp is built by experienced engineers from top tech companies and is designed to make AI agents production-ready, secure, and reliable.

Robyn

Robyn is an experimental, semi-automated and open-sourced Marketing Mix Modeling (MMM) package from Meta Marketing Science. It uses various machine learning techniques to define media channel efficiency and effectivity, explore adstock rates and saturation curves. Built for granular datasets with many independent variables, especially suitable for digital and direct response advertisers with rich data sources. Aiming to democratize MMM, make it accessible for advertisers of all sizes, and contribute to the measurement landscape.

reductstore

ReductStore is a high-performance time series database designed for storing and managing large amounts of unstructured blob data. It offers features such as real-time querying, batching data, and HTTP(S) API for edge computing, computer vision, and IoT applications. The database ensures data integrity, implements retention policies, and provides efficient data access, making it a cost-effective solution for applications requiring unstructured data storage and access at specific time intervals.

knavigator

Knavigator is a project designed to analyze, optimize, and compare scheduling systems, with a focus on AI/ML workloads. It addresses various needs, including testing, troubleshooting, benchmarking, chaos engineering, performance analysis, and optimization. Knavigator interfaces with Kubernetes clusters to manage tasks such as manipulating with Kubernetes objects, evaluating PromQL queries, as well as executing specific operations. It can operate both outside and inside a Kubernetes cluster, leveraging the Kubernetes API for task management. To facilitate large-scale experiments without the overhead of running actual user workloads, Knavigator utilizes KWOK for creating virtual nodes in extensive clusters.

PulsarRPA

PulsarRPA is a high-performance, distributed, open-source Robotic Process Automation (RPA) framework designed to handle large-scale RPA tasks with ease. It provides a comprehensive solution for browser automation, web content understanding, and data extraction. PulsarRPA addresses challenges of browser automation and accurate web data extraction from complex and evolving websites. It incorporates innovative technologies like browser rendering, RPA, intelligent scraping, advanced DOM parsing, and distributed architecture to ensure efficient, accurate, and scalable web data extraction. The tool is open-source, customizable, and supports cutting-edge information extraction technology, making it a preferred solution for large-scale web data extraction.

llm-app

Pathway's LLM (Large Language Model) Apps provide a platform to quickly deploy AI applications using the latest knowledge from data sources. The Python application examples in this repository are Docker-ready, exposing an HTTP API to the frontend. These apps utilize the Pathway framework for data synchronization, API serving, and low-latency data processing without the need for additional infrastructure dependencies. They connect to document data sources like S3, Google Drive, and Sharepoint, offering features like real-time data syncing, easy alert setup, scalability, monitoring, security, and unification of application logic.

chatgpt-universe

ChatGPT is a large language model that can generate human-like text, translate languages, write different kinds of creative content, and answer your questions in a conversational way. It is trained on a massive amount of text data, and it is able to understand and respond to a wide range of natural language prompts. Here are 5 jobs suitable for this tool, in lowercase letters: 1. content writer 2. chatbot assistant 3. language translator 4. creative writer 5. researcher

supersonic

SuperSonic is a next-generation BI platform that integrates Chat BI (powered by LLM) and Headless BI (powered by semantic layer) paradigms. This integration ensures that Chat BI has access to the same curated and governed semantic data models as traditional BI. Furthermore, the implementation of both paradigms benefits from the integration: * Chat BI's Text2SQL gets augmented with context-retrieval from semantic models. * Headless BI's query interface gets extended with natural language API. SuperSonic provides a Chat BI interface that empowers users to query data using natural language and visualize the results with suitable charts. To enable such experience, the only thing necessary is to build logical semantic models (definition of metric/dimension/tag, along with their meaning and relationships) through a Headless BI interface. Meanwhile, SuperSonic is designed to be extensible and composable, allowing custom implementations to be added and configured with Java SPI. The integration of Chat BI and Headless BI has the potential to enhance the Text2SQL generation in two dimensions: 1. Incorporate data semantics (such as business terms, column values, etc.) into the prompt, enabling LLM to better understand the semantics and reduce hallucination. 2. Offload the generation of advanced SQL syntax (such as join, formula, etc.) from LLM to the semantic layer to reduce complexity. With these ideas in mind, we develop SuperSonic as a practical reference implementation and use it to power our real-world products. Additionally, to facilitate further development we decide to open source SuperSonic as an extensible framework.

ais-k8s

AIStore on Kubernetes is a toolkit for deploying a lightweight, scalable object storage solution designed for AI applications in a Kubernetes environment. It includes documentation, Ansible playbooks, Kubernetes operator, Helm charts, and Terraform definitions for deployment on public cloud platforms. The system overview shows deployment across nodes with proxy and target pods utilizing Persistent Volumes. The AIStore Operator automates cluster management tasks. The repository focuses on production deployments but offers different deployment options. Thorough planning and configuration decisions are essential for successful multi-node deployment. The AIStore Operator simplifies tasks like starting, deploying, adjusting size, and updating AIStore resources within Kubernetes.

llmops-promptflow-template

LLMOps with Prompt flow is a template and guidance for building LLM-infused apps using Prompt flow. It provides centralized code hosting, lifecycle management, variant and hyperparameter experimentation, A/B deployment, many-to-many dataset/flow relationships, multiple deployment targets, comprehensive reporting, BYOF capabilities, configuration-based development, local prompt experimentation and evaluation, endpoint testing, and optional Human-in-loop validation. The tool is customizable to suit various application needs.

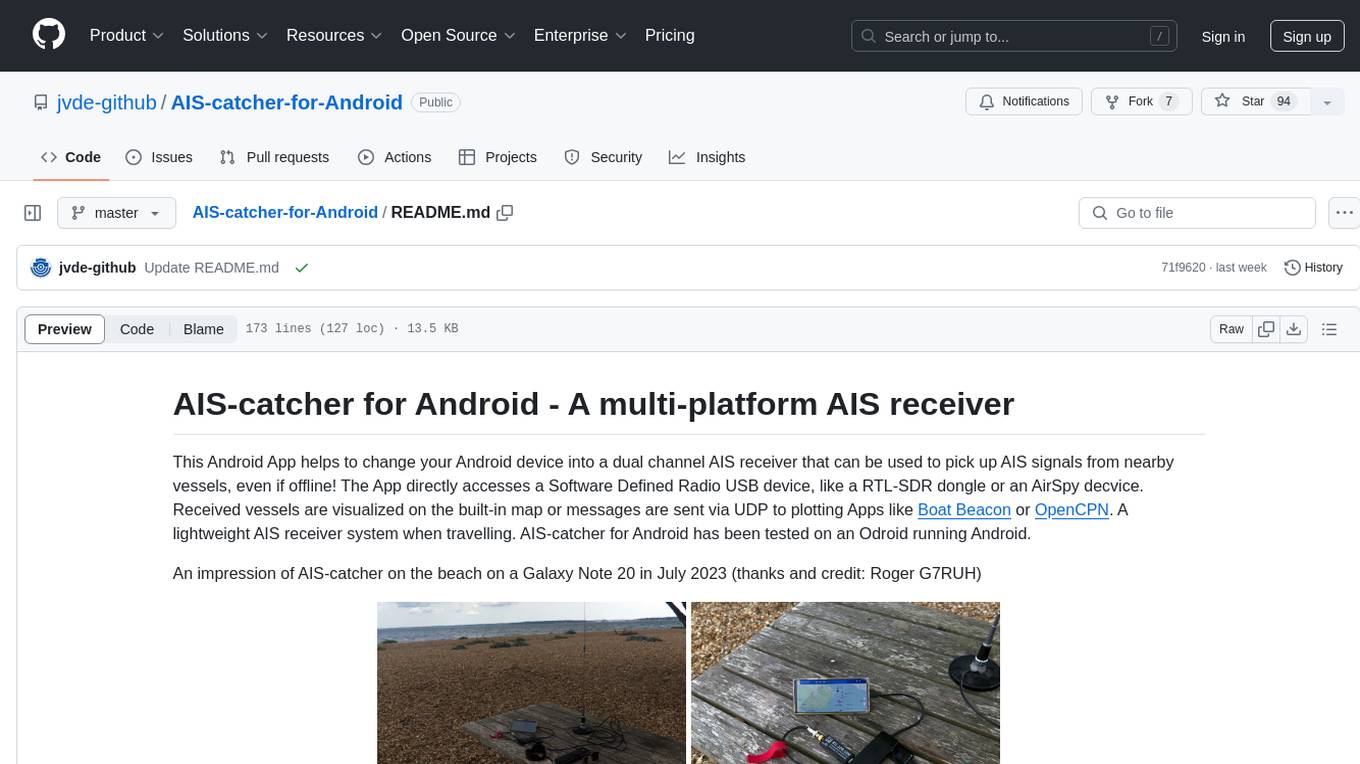

AIS-catcher-for-Android

AIS-catcher for Android is a multi-platform AIS receiver app that transforms your Android device into a dual channel AIS receiver. It directly accesses a Software Defined Radio USB device to pick up AIS signals from nearby vessels, visualizing them on a built-in map or sending messages via UDP to plotting apps. The app requires a RTL-SDR dongle or an AirSpy device, a simple antenna, an Android device with USB connector, and an OTG cable. It is designed for research and educational purposes under the GPL license, with no warranty. Users are responsible for prudent use and compliance with local regulations. The app is not intended for navigation or safety purposes.

goose

Codename Goose is an open-source, extensible AI agent designed to provide functionalities beyond code suggestions. Users can install, execute, edit, and test with any LLM. The tool aims to enhance the coding experience by offering advanced features and capabilities. Stay updated for the upcoming 1.0 release scheduled by the end of January 2025. Explore the v0.X documentation available on the project's GitHub pages.

floki

Floki is an open-source framework for researchers and developers to experiment with LLM-based autonomous agents. It provides tools to create, orchestrate, and manage agents while seamlessly connecting to LLM inference APIs. Built on Dapr, Floki leverages a unified programming model that simplifies microservices and supports both deterministic workflows and event-driven interactions. By bringing together these features, Floki provides a powerful way to explore agentic workflows and the components that enable multi-agent systems to collaborate and scale, all powered by Dapr.

csghub

CSGHub is an open source platform for managing large model assets, including datasets, model files, and codes. It offers functionalities similar to a privatized Huggingface, managing assets in a manner akin to how OpenStack Glance manages virtual machine images. Users can perform operations such as uploading, downloading, storing, verifying, and distributing assets through various interfaces. The platform provides microservice submodules and standardized OpenAPIs for easy integration with users' systems. CSGHub is designed for large models and can be deployed On-Premise for offline operation.

For similar tasks

octelium

Octelium is a free and open source, self-hosted, unified zero trust secure access platform that operates as a modern zero-config remote access VPN, a comprehensive Zero Trust Network Access (ZTNA)/BeyondCorp platform, an ngrok/Cloudflare Tunnel alternative, an API gateway, an AI/LLM gateway, a PaaS-like platform, a Kubernetes gateway/ingress, and a homelab infrastructure. It provides scalable zero trust architecture for identity-based, application-layer aware secure access via private client-based access over WireGuard/QUIC tunnels and public clientless access, with context-aware access control. Octelium offers dynamic secretless access, fine-grained access control, identity-based routing, continuous strong authentication, OpenTelemetry-native auditing, passwordless SSH, effortless deployment of containerized applications, centralized management, and more. It is open source, designed for self-hosting, and provides a commercial license option for businesses.

MCPJungle

MCPJungle is a self-hosted MCP Gateway for private AI agents, serving as a registry for Model Context Protocol Servers. Developers use it to manage servers and tools centrally, while clients discover and consume tools from a single 'Gateway' MCP Server. Suitable for developers using MCP Clients like Claude & Cursor, building production-grade AI Agents, and organizations managing client-server interactions. The tool allows quick start, installation, usage, server and client setup, connection to Claude and Cursor, enabling/disabling tools, managing tool groups, authentication, enterprise features like access control and OpenTelemetry metrics. Limitations include lack of long-running connections to servers and no support for OAuth flow. Contributions are welcome.

For similar jobs

minio

MinIO is a High Performance Object Storage released under GNU Affero General Public License v3.0. It is API compatible with Amazon S3 cloud storage service. Use MinIO to build high performance infrastructure for machine learning, analytics and application data workloads.

ai-on-gke

This repository contains assets related to AI/ML workloads on Google Kubernetes Engine (GKE). Run optimized AI/ML workloads with Google Kubernetes Engine (GKE) platform orchestration capabilities. A robust AI/ML platform considers the following layers: Infrastructure orchestration that support GPUs and TPUs for training and serving workloads at scale Flexible integration with distributed computing and data processing frameworks Support for multiple teams on the same infrastructure to maximize utilization of resources

kong

Kong, or Kong API Gateway, is a cloud-native, platform-agnostic, scalable API Gateway distinguished for its high performance and extensibility via plugins. It also provides advanced AI capabilities with multi-LLM support. By providing functionality for proxying, routing, load balancing, health checking, authentication (and more), Kong serves as the central layer for orchestrating microservices or conventional API traffic with ease. Kong runs natively on Kubernetes thanks to its official Kubernetes Ingress Controller.

AI-in-a-Box

AI-in-a-Box is a curated collection of solution accelerators that can help engineers establish their AI/ML environments and solutions rapidly and with minimal friction, while maintaining the highest standards of quality and efficiency. It provides essential guidance on the responsible use of AI and LLM technologies, specific security guidance for Generative AI (GenAI) applications, and best practices for scaling OpenAI applications within Azure. The available accelerators include: Azure ML Operationalization in-a-box, Edge AI in-a-box, Doc Intelligence in-a-box, Image and Video Analysis in-a-box, Cognitive Services Landing Zone in-a-box, Semantic Kernel Bot in-a-box, NLP to SQL in-a-box, Assistants API in-a-box, and Assistants API Bot in-a-box.

awsome-distributed-training

This repository contains reference architectures and test cases for distributed model training with Amazon SageMaker Hyperpod, AWS ParallelCluster, AWS Batch, and Amazon EKS. The test cases cover different types and sizes of models as well as different frameworks and parallel optimizations (Pytorch DDP/FSDP, MegatronLM, NemoMegatron...).

generative-ai-cdk-constructs

The AWS Generative AI Constructs Library is an open-source extension of the AWS Cloud Development Kit (AWS CDK) that provides multi-service, well-architected patterns for quickly defining solutions in code to create predictable and repeatable infrastructure, called constructs. The goal of AWS Generative AI CDK Constructs is to help developers build generative AI solutions using pattern-based definitions for their architecture. The patterns defined in AWS Generative AI CDK Constructs are high level, multi-service abstractions of AWS CDK constructs that have default configurations based on well-architected best practices. The library is organized into logical modules using object-oriented techniques to create each architectural pattern model.

model_server

OpenVINO™ Model Server (OVMS) is a high-performance system for serving models. Implemented in C++ for scalability and optimized for deployment on Intel architectures, the model server uses the same architecture and API as TensorFlow Serving and KServe while applying OpenVINO for inference execution. Inference service is provided via gRPC or REST API, making deploying new algorithms and AI experiments easy.

dify-helm

Deploy langgenius/dify, an LLM based chat bot app on kubernetes with helm chart.