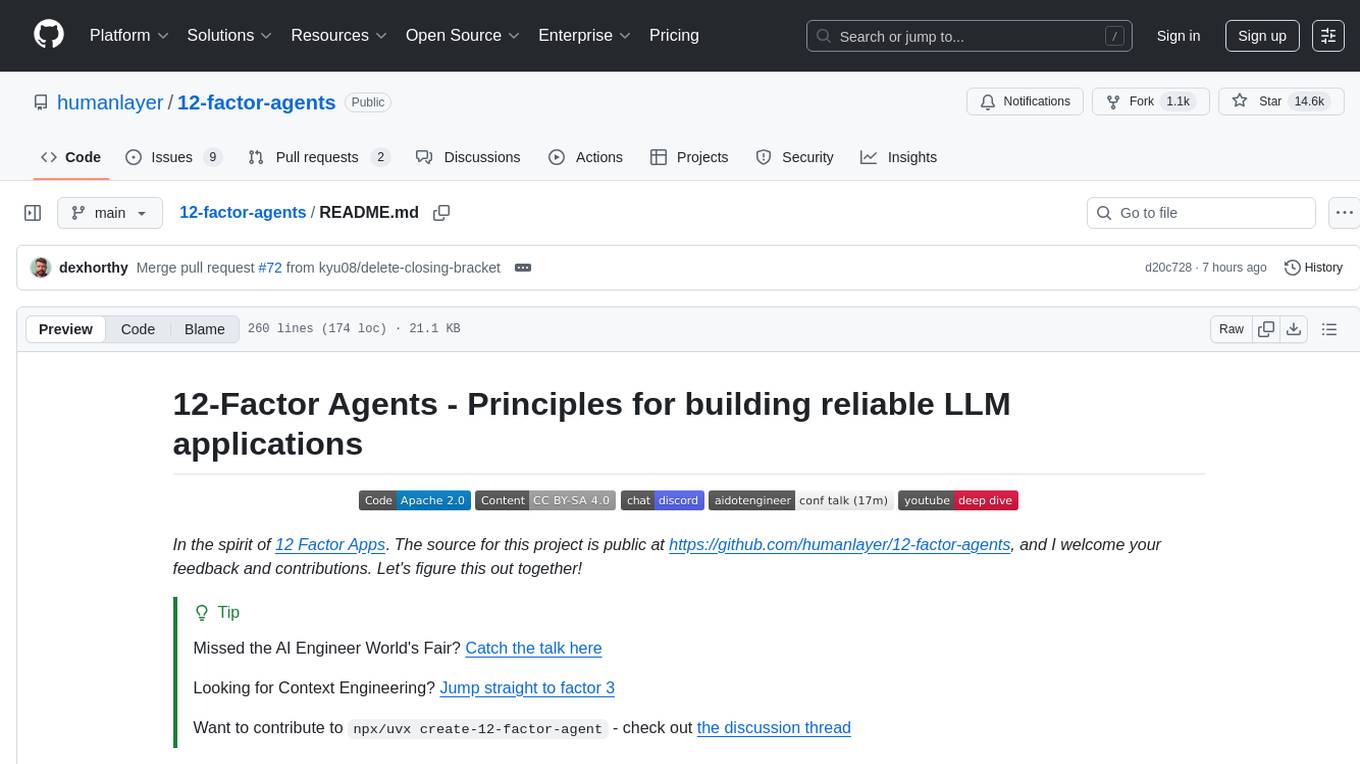

12-factor-agents

What are the principles we can use to build LLM-powered software that is actually good enough to put in the hands of production customers?

12-Factor Agents is a project focused on building reliable LLM-powered software by outlining 12 core engineering principles. The project aims to provide guidance on creating production-ready customer-facing agents that leverage AI technology effectively. It emphasizes the importance of software design, context management, tool integration, and control flow in developing high-quality AI agents. The project offers insights, design patterns, and practical advice for software engineers looking to enhance their AI applications with agent-based approaches.

README:

In the spirit of 12 Factor Apps. The source for this project is public at https://github.com/humanlayer/12-factor-agents, and I welcome your feedback and contributions. Let's figure this out together!

[!TIP] Missed the AI Engineer World's Fair? Catch the talk here

Looking for Context Engineering? Jump straight to factor 3

Want to contribute to `npx/uvx create-12-factor-agent`? Check out the discussion thread

Hi, I'm Dex. I've been hacking on AI agents for a while.

I've tried every agent framework out there, from the plug-and-play crew/langchains to the "minimalist" smolagents of the world to the "production grade" langgraph, griptape, etc.

I've talked to a lot of really strong founders, in and out of YC, who are all building really impressive things with AI. Most of them are rolling the stack themselves. I don't see a lot of frameworks in production customer-facing agents.

I've been surprised to find that most of the products out there billing themselves as "AI Agents" are not all that agentic. A lot of them are mostly deterministic code, with LLM steps sprinkled in at just the right points to make the experience truly magical.

Agents, at least the good ones, don't follow the "here's your prompt, here's a bag of tools, loop until you hit the goal" pattern. Rather, they are made up of mostly just software.

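So, I set out to answer: what are the principles we can use to build LLM-powered software that is actually good enough to put in the hands of production customers?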

Welcome to 12-factor agents. As every Chicago mayor since Daley has consistently plastered all over the city's major airports, we're glad you're here.

Special thanks to @iantbutler01, @tnm, @hellovai, @stantonk, @balanceiskey, @AdjectiveAllison, @pfbyjy, @a-churchill, and the SF MLOps community for early feedback on this guide.

Even if LLMs continue to get exponentially more powerful, there will be core engineering techniques that make LLM-powered software more reliable, more scalable, and easier to maintain.

- How We Got Here: A Brief History of Software

- Factor 1: Natural Language to Tool Calls

- Factor 2: Own your prompts

- Factor 3: Own your context window

- Factor 4: Tools are just structured outputs

- Factor 5: Unify execution state and business state

- Factor 6: Launch/Pause/Resume with simple APIs

- Factor 7: Contact humans with tool calls

- Factor 8: Own your control flow

- Factor 9: Compact Errors into Context Window

- Factor 10: Small, Focused Agents

- Factor 11: Trigger from anywhere, meet users where they are

- Factor 12: Make your agent a stateless reducer

For a deeper dive on my agent journey and what led us here, check out A Brief History of Software - a quick summary here:

We're gonna talk a lot about Directed Graphs (DGs) and their Acyclic friends, DAGs. I'll start by pointing out that...well...software is a directed graph. There's a reason we used to represent programs as flow charts.

Around 20 years ago, we started to see DAG orchestrators become popular. We're talking classics like Airflow, Prefect, some predecessors, and some newer ones like Dagster, Inngest, and Windmill. These followed the same graph pattern, with the added benefit of observability, modularity, retries, administration, etc.
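To make the "software is a directed graph" idea concrete, here's a minimal sketch of a hand-coded DAG in Python. It is not how Airflow, Prefect, or any other orchestrator defines pipelines; the task names and the `run_dag` helper are made up for illustration, and the only library used is the standard-library `graphlib` module (Python 3.9+).

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+


# Hypothetical tasks: each one reads earlier results and returns its own.
def extract(results):
    return [1, 2, 3]


def transform(results):
    return [x * 10 for x in results["extract"]]


def validate(results):
    return all(isinstance(x, int) for x in results["extract"])


def load(results):
    return sum(results["transform"]) if results["validate"] else None


tasks = {"extract": extract, "transform": transform, "validate": validate, "load": load}

# The edges of the graph: each task maps to the set of tasks it depends on.
dependencies = {
    "extract": set(),
    "transform": {"extract"},
    "validate": {"extract"},
    "load": {"transform", "validate"},
}


def run_dag(tasks, dependencies):
    """Run every task once, in an order that respects the dependencies."""
    results, order = {}, []
    for name in TopologicalSorter(dependencies).static_order():
        results[name] = tasks[name](results)
        order.append(name)
    return results, order


results, order = run_dag(tasks, dependencies)
```

Every node and every edge here is written by an engineer ahead of time. That is the part the agent pitch says you get to skip.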

I'm not the first person to say this, but my biggest takeaway when I started learning about agents was that you get to throw the DAG away. Instead of software engineers coding each step and edge case, you can give the agent a goal and a set of transitions:

And let the LLM make decisions in real time to figure out the path.

The promise here is that you write less software: you just give the LLM the "edges" of the graph and let it figure out the nodes. You can recover from errors, you can write less code, and you may find that LLMs find novel solutions to problems.

As we'll see later, it turns out this doesn't quite work.

Let's dive one step deeper - with agents you've got this loop consisting of 3 steps:

- LLM determines the next step in the workflow, outputting structured JSON ("tool calling")

- Deterministic code executes the tool call

- The result is appended to the context window

Repeat until the next step is determined to be "done".

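```python
async def run_agent(llm, execute_step):
    # `llm` and `execute_step` are placeholders for your model client and tool executor
    initial_event = {"message": "..."}
    context = [initial_event]

    while True:
        # the LLM picks the next step, returned as structured output (a "tool call")
        next_step = await llm.determine_next_step(context)
        context.append(next_step)

        if next_step.intent == "done":
            return next_step.final_answer

        # deterministic code runs the tool; its result goes back into the context window
        result = await execute_step(next_step)
        context.append(result)
```

Our initial context is just the starting event (maybe a user message, maybe a cron fired, maybe a webhook, etc.), and we ask the LLM to choose the next step (tool) or to determine that we're done.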

Here's a multi-step example:
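As a stand-in you can actually run, here's a minimal sketch of the loop going through three steps. Everything in it is an assumption made for illustration: `ScriptedLLM` replays a fixed plan instead of calling a real model, and the `Step` shape and the `add` / `multiply` tools are hypothetical.

```python
import asyncio
from dataclasses import dataclass
from typing import Optional


@dataclass
class Step:
    intent: str                        # name of the tool to call, or "done"
    args: Optional[dict] = None        # arguments for the tool call
    final_answer: Optional[str] = None


class ScriptedLLM:
    """Hypothetical stand-in for a model: replays a fixed plan, no API calls."""

    def __init__(self, plan):
        self._plan = iter(plan)

    async def determine_next_step(self, context: list) -> Step:
        return next(self._plan)


async def execute_step(step: Step) -> dict:
    # Deterministic code: look up the tool by intent and run it.
    tools = {
        "add": lambda a, b: a + b,
        "multiply": lambda a, b: a * b,
    }
    return {"tool": step.intent, "result": tools[step.intent](**step.args)}


async def run_agent(llm, initial_event: dict):
    context: list = [initial_event]
    while True:
        next_step = await llm.determine_next_step(context)
        context.append(next_step)
        if next_step.intent == "done":
            return next_step.final_answer, context
        result = await execute_step(next_step)
        context.append(result)


plan = [
    Step("add", {"a": 2, "b": 3}),
    Step("multiply", {"a": 5, "b": 4}),
    Step("done", final_answer="(2 + 3) * 4 = 20"),
]
answer, context = asyncio.run(
    run_agent(ScriptedLLM(plan), {"message": "compute (2 + 3) * 4"})
)
```

After the run, `context` holds six entries: the initial event, then each chosen step followed by its tool result, ending with the "done" step. With a real model in place of `ScriptedLLM`, that growing list is what gets sent back on every turn.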

At the end of the day, this approach just doesn't work as well as we want it to.

In building HumanLayer, I've talked to at least 100 SaaS builders (mostly technical founders) looking to make their existing product more agentic. The journey usually goes something like:

- Decide you want to build an agent

- Product design, UX mapping, what problems to solve

- Want to move fast, so grab $FRAMEWORK and get to building

- Get to 70-80% quality bar

- Realize that 80% isn't good enough for most customer-facing features

- Realize that getting past 80% requires reverse-engineering the framework, prompts, flow, etc.

- Start over from scratch

Random Disclaimers

DISCLAIMER: I'm not sure the exact right place to say this, but here seems as good as any: this is BY NO MEANS meant to be a dig on either the many frameworks out there, or the pretty dang smart people who work on them. They enable incredible things and have accelerated the AI ecosystem.

I hope that one outcome of this post is that agent framework builders can learn from the journeys of myself and others, and make frameworks even better.

Especially for builders who want to move fast but need deep control.

DISCLAIMER 2: I'm not going to talk about MCP. I'm sure you can see where it fits in.

DISCLAIMER 3: I'm using mostly TypeScript, for reasons, but all this stuff works in Python or any other language you prefer.

Anyways back to the thing...

After digging through hundreds of AI libraries and working with dozens of founders, my instinct is this:

- There are some core things that make agents great

- Going all in on a framework and building what is essentially a greenfield rewrite may be counter-productive

- There are some core principles that make agents great, and you will get most/all of them if you pull in a framework

- BUT, the fastest way I've seen for builders to get high-quality AI software in the hands of customers is to take small, modular concepts from agent building, and incorporate them into their existing product

- These modular concepts from agents can be defined and applied by most skilled software engineers, even if they don't have an AI background
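To give a feel for what "small and modular" can mean, here's a hedged sketch of a single LLM step dropped into otherwise ordinary code, in the spirit of the "natural language to tool calls" factor title. It is not taken from the guide: `fake_llm`, `CreateRefund`, and `handle_message` are hypothetical names, and the model call is stubbed out so the example runs on its own.

```python
import json
from dataclasses import dataclass


@dataclass
class CreateRefund:
    order_id: str
    amount_cents: int


def fake_llm(prompt: str) -> str:
    """Hypothetical stand-in for a model call; returns a JSON "tool call" as text."""
    return json.dumps(
        {"intent": "create_refund", "order_id": "A-1001", "amount_cents": 2500}
    )


def parse_tool_call(raw: str) -> CreateRefund:
    # Validate the model output before any business logic touches it.
    data = json.loads(raw)
    if data.get("intent") != "create_refund":
        raise ValueError(f"unsupported intent: {data.get('intent')!r}")
    return CreateRefund(
        order_id=str(data["order_id"]), amount_cents=int(data["amount_cents"])
    )


def handle_message(message: str, max_refund_cents: int = 5000) -> str:
    # The one LLM step: free text in, structured data out.
    call = parse_tool_call(fake_llm(f"Extract the refund request from: {message}"))
    # Everything after this point is ordinary, testable business logic.
    if call.amount_cents > max_refund_cents:
        return f"escalate:{call.order_id}"
    return f"refunded:{call.order_id}:{call.amount_cents}"


outcome = handle_message("Please refund $25 for order A-1001")
```

Nothing here needs a framework or an AI background: the model's job is limited to producing structured output, and the existing product code decides what happens next.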

- Contribute to this guide here

- I talked about a lot of this on an episode of the Tool Use podcast in March 2025

- I write about some of this stuff at The Outer Loop

- I do webinars about Maximizing LLM Performance with @hellovai

- We build OSS agents with this methodology under got-agents/agents

- We ignored all our own advice and built a framework for running distributed agents in kubernetes

- Other links from this guide:

- 12 Factor Apps

- Building Effective Agents (Anthropic)

- Prompts are Functions

- Library patterns: Why frameworks are evil

- The Wrong Abstraction

- Mailcrew Agent

- Mailcrew Demo Video

- Chainlit Demo

- TypeScript for LLMs

- Schema Aligned Parsing

- Function Calling vs Structured Outputs vs JSON Mode

- BAML on GitHub

- OpenAI JSON vs Function Calling

- Outer Loop Agents

- Airflow

- Prefect

- Dagster

- Inngest

- Windmill

- The AI Agent Index (MIT)

- NotebookLM on Finding Model Capability Boundaries

Thanks to everyone who has contributed to 12-factor agents!

All content and images are licensed under a CC BY-SA 4.0 License

Code is licensed under the Apache 2.0 License
