frost

Get your app online, for the AI coding era.

Stars: 300

Frost is an open-source, self-hosted tool designed to streamline the process of deploying AI-built applications online. It eliminates the complexities associated with deployment, such as lengthy guides, YAML configurations, IAM policies, and cryptic errors. With Frost, users can easily deploy their apps with a simple AI-generated config, clear feedback, and Docker-based infrastructure. The tool supports various features like automatic SSL, custom domains, GitHub webhooks, PR preview environments, instant rollbacks, health checks, resource limits, and a full REST API. Frost is Docker-native and can deploy a wide range of applications, databases, multi-service projects, private images, and long-running jobs. It is built on a stack that includes Next.js, SQLite, Tailwind, and Docker, making it ideal for developers looking to get their apps online quickly and efficiently.

README:

Open source · Self-hosted · No usage fees

AI lets you build apps in hours. Getting them online still takes forever.

- 47-step deployment guides

- YAML configs AI hallucinates

- IAM policies that take hours

- Cryptic errors, impossible to debug

Frost fixes that.

With AI agent — give your agent: https://frost.build/install.md

Manual — run on your server:

curl -fsSL https://frost.build/install.sh | sudo bash

Need a server? See INSTALL.md for AI-assisted VPS provisioning.

AI-native by design

- Simple config AI writes perfectly

- Clear errors, actionable feedback

- No K8s complexity to hallucinate

- Just Docker. Predictable.

Everything you need

- Git push → deployed

- Automatic SSL (Let's Encrypt)

- Custom domains

- GitHub webhooks

- PR preview environments

- Instant rollbacks

- Health checks

- Resource limits

- Full REST API

Docker-native. If it has a Dockerfile, Frost runs it.

- Web apps — Next.js, Rails, Django, Go, etc.

- Databases — Postgres, MySQL, Redis, MongoDB

- Multi-service projects — frontend, API, workers on shared Docker network

- Private images — pull from GHCR, Docker Hub, custom registries

- Long-running jobs — workers, queues, background processes

Tech stack

- Next.js + Bun

- SQLite + Kysely

- Tailwind + shadcn/ui

- Docker

Development

bun install

bun run dev

bun run e2e:local

bun run e2e:smoke

bun run e2e:changed

bun run e2e:changed:fast

bun run e2e:profile:week1

# Fast local loop (changed groups + retry + report)

bun run e2e:changed:fast

# Full profile artifacts in /tmp/frost-e2e-week1

bun run e2e:profile:week1

# Standardized knobs

E2E_GROUPS='01-basic,04-update,29-mcp' bun run e2e:local

E2E_GROUP_GLOB='group-2*.sh' bun run e2e:local

E2E_BATCH_SIZE=4 E2E_START_STAGGER_SEC=1 bun run e2e:local

- Runtime image pulls automatically retry with backoff (FROST_IMAGE_PULL_RETRIES, FROST_IMAGE_PULL_BACKOFF_MS, FROST_IMAGE_PULL_MAX_BACKOFF_MS).

- Timeout signatures such as context deadline exceeded, i/o timeout, and proxyconnect tcp are treated as transient infra failures in deployment logs.

- If a local run flakes on pull, rerun with the managed runner (bun run e2e:local) so pre-pull and retries are applied.
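The pull-retry behaviour noted above can be sketched in TypeScript. This is a minimal illustration, not Frost's actual code: the function names, the fallback defaults, and the doubling schedule are assumptions made for the example. Only the three environment variable names and the three timeout signatures are taken from the notes above.

```typescript
// Hypothetical sketch of retry-with-backoff for image pulls.
// All names and default values here are illustrative assumptions.

interface PullRetryConfig {
  retries: number;      // extra attempts allowed after the first failure
  backoffMs: number;    // delay before the first retry
  maxBackoffMs: number; // upper bound on any single delay
}

// Timeout signatures the notes above describe as transient infra failures.
const TRANSIENT_SIGNATURES: readonly string[] = [
  "context deadline exceeded",
  "i/o timeout",
  "proxyconnect tcp",
];

// Parse a non-negative integer, falling back when unset or invalid.
function parseNonNegativeInt(raw: string | undefined, fallback: number): number {
  if (raw === undefined) return fallback;
  const n = parseInt(raw, 10);
  return isNaN(n) || n < 0 ? fallback : n;
}

// Read the three knobs from an env-like record (defaults are placeholders).
function readPullRetryConfig(env: Record<string, string | undefined>): PullRetryConfig {
  return {
    retries: parseNonNegativeInt(env["FROST_IMAGE_PULL_RETRIES"], 3),
    backoffMs: parseNonNegativeInt(env["FROST_IMAGE_PULL_BACKOFF_MS"], 1000),
    maxBackoffMs: parseNonNegativeInt(env["FROST_IMAGE_PULL_MAX_BACKOFF_MS"], 10000),
  };
}

// True when the error message contains one of the transient signatures.
function isTransientPullError(message: string): boolean {
  const lower = message.toLowerCase();
  return TRANSIENT_SIGNATURES.some((sig) => lower.indexOf(sig) !== -1);
}

// Exponential backoff: backoffMs * 2^attempt, capped at maxBackoffMs.
// `attempt` is zero-based (0 = delay before the first retry).
function backoffDelayMs(attempt: number, cfg: PullRetryConfig): number {
  return Math.min(cfg.backoffMs * Math.pow(2, attempt), cfg.maxBackoffMs);
}

// Decide what to do after a failed pull: return the delay before the next
// retry, or null when the error is not transient or retries are exhausted.
function nextDelayMs(
  message: string,
  retriesUsed: number,
  cfg: PullRetryConfig,
): number | null {
  if (retriesUsed >= cfg.retries) return null;
  if (!isTransientPullError(message)) return null;
  return backoffDelayMs(retriesUsed, cfg);
}
```

A caller would attempt the pull and, on failure, pass the error message and the number of retries already used to nextDelayMs, then sleep for the returned delay or give up when it returns null. Keeping the decision logic free of timers makes the schedule easy to check without waiting on real delays.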

Requirements

- VPS or server with Docker

- Ubuntu 20.04+ recommended

License

MIT

Alternative AI tools for frost

Similar Open Source Tools

For similar tasks

open-saas

Open SaaS is a free and open-source React and Node.js template for building SaaS applications. It comes with a variety of features out of the box, including authentication, payments, analytics, and more. Open SaaS is built on top of the Wasp framework, which provides a number of features to make it easy to build SaaS applications, such as full-stack authentication, end-to-end type safety, jobs, and one-command deploy.

airbroke

Airbroke is an open-source error catcher tool designed for modern web applications. It provides a PostgreSQL-based backend with an Airbrake-compatible HTTP collector endpoint and a React-based frontend for error management. The tool focuses on simplicity, maintaining a small database footprint even under heavy data ingestion. Users can ask AI about issues, replay HTTP exceptions, and save/manage bookmarks for important occurrences. Airbroke supports multiple OAuth providers for secure user authentication and offers occurrence charts for better insights into error occurrences. The tool can be deployed in various ways, including building from source, using Docker images, deploying on Vercel, Render.com, Kubernetes with Helm, or Docker Compose. It requires Node.js, PostgreSQL, and specific system resources for deployment.

llmops-promptflow-template

LLMOps with Prompt flow is a template and guidance for building LLM-infused apps using Prompt flow. It provides centralized code hosting, lifecycle management, variant and hyperparameter experimentation, A/B deployment, many-to-many dataset/flow relationships, multiple deployment targets, comprehensive reporting, BYOF capabilities, configuration-based development, local prompt experimentation and evaluation, endpoint testing, and optional Human-in-loop validation. The tool is customizable to suit various application needs.

cheat-sheet-pdf

The Cheat-Sheet Collection for DevOps, Engineers, IT professionals, and more is a curated list of cheat sheets for various tools and technologies commonly used in the software development and IT industry. It includes cheat sheets for Nginx, Docker, Ansible, Python, Go (Golang), Git, Regular Expressions (Regex), PowerShell, VIM, Jenkins, CI/CD, Kubernetes, Linux, Redis, Slack, Puppet, Google Cloud Developer, AI, Neural Networks, Machine Learning, Deep Learning & Data Science, PostgreSQL, Ajax, AWS, Infrastructure as Code (IaC), System Design, and Cyber Security.

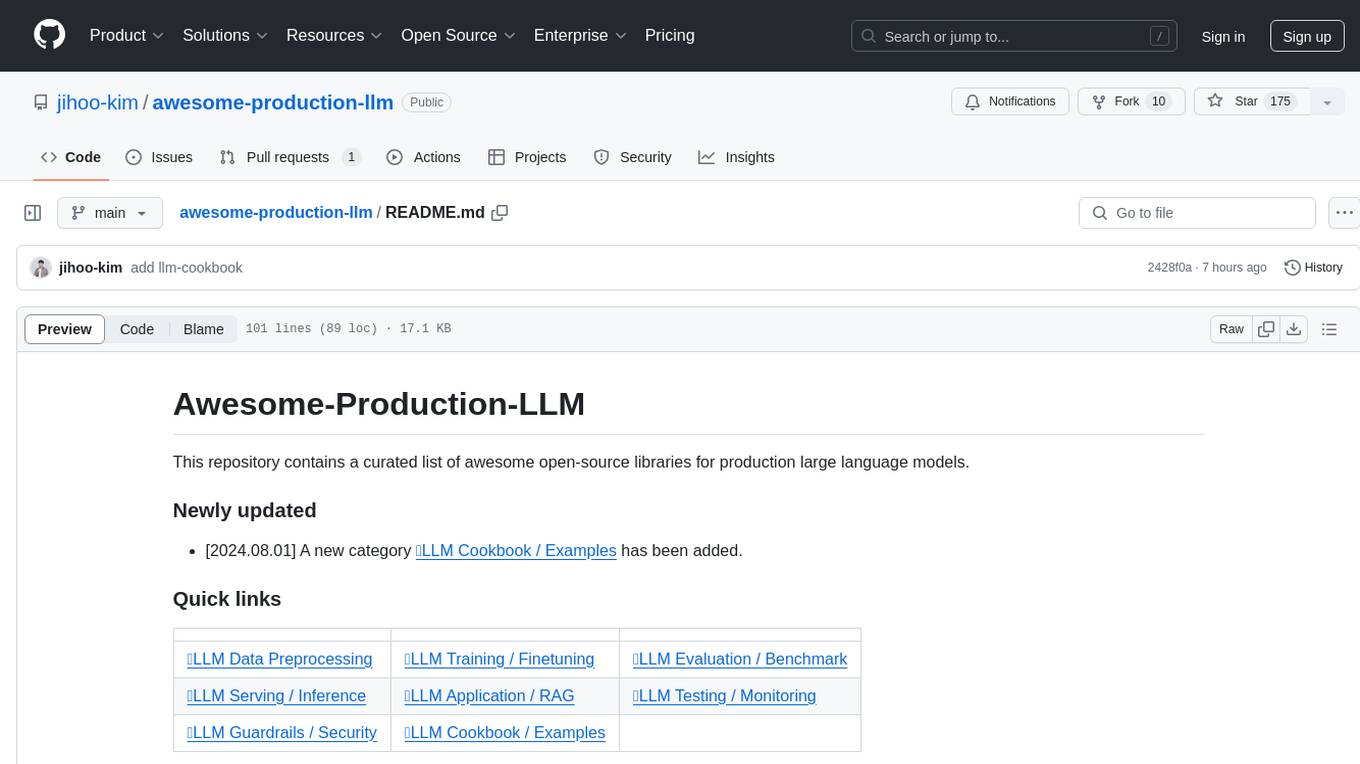

awesome-production-llm

This repository is a curated list of open-source libraries for production large language models. It includes tools for data preprocessing, training/finetuning, evaluation/benchmarking, serving/inference, application/RAG, testing/monitoring, and guardrails/security. The repository also provides a new category called LLM Cookbook/Examples for showcasing examples and guides on using various LLM APIs.

generative-ai-on-aws

Generative AI on AWS by O'Reilly Media provides a comprehensive guide on leveraging generative AI models on the AWS platform. The book covers various topics such as generative AI use cases, prompt engineering, large-language models, fine-tuning techniques, optimization, deployment, and more. Authors Chris Fregly, Antje Barth, and Shelbee Eigenbrode offer insights into cutting-edge AI technologies and practical applications in the field. The book is a valuable resource for data scientists, AI enthusiasts, and professionals looking to explore generative AI capabilities on AWS.

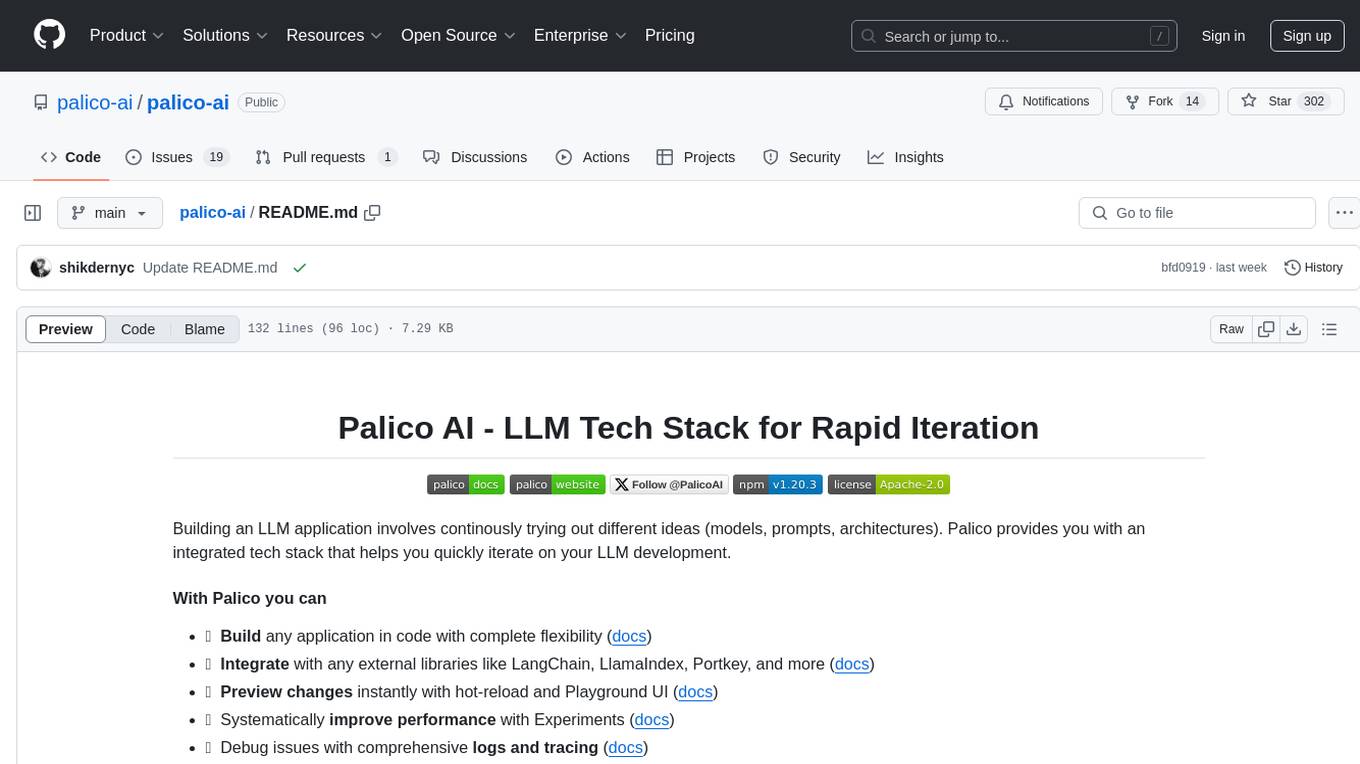

palico-ai

Palico AI is a tech stack designed for rapid iteration of LLM applications. It allows users to preview changes instantly, improve performance through experiments, debug issues with logs and tracing, deploy applications behind a REST API, and manage applications with a UI control panel. Users have complete flexibility in building their applications with Palico, integrating with various tools and libraries. The tool enables users to swap models, prompts, and logic easily using AppConfig. It also facilitates performance improvement through experiments and provides options for deploying applications to cloud providers or using managed hosting. Contributions to the project are welcomed, with easy ways to get involved by picking issues labeled as 'good first issue'.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise-level infrastructure that can power any LLM production use case. Here are some use cases for BricksLLM:

- Set LLM usage limits for users on different pricing tiers

- Track LLM usage on a per-user and per-organization basis

- Block or redact requests containing PII

- Improve LLM reliability with failovers, retries and caching

- Distribute API keys with rate limits and cost limits for internal development/production use cases

- Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.