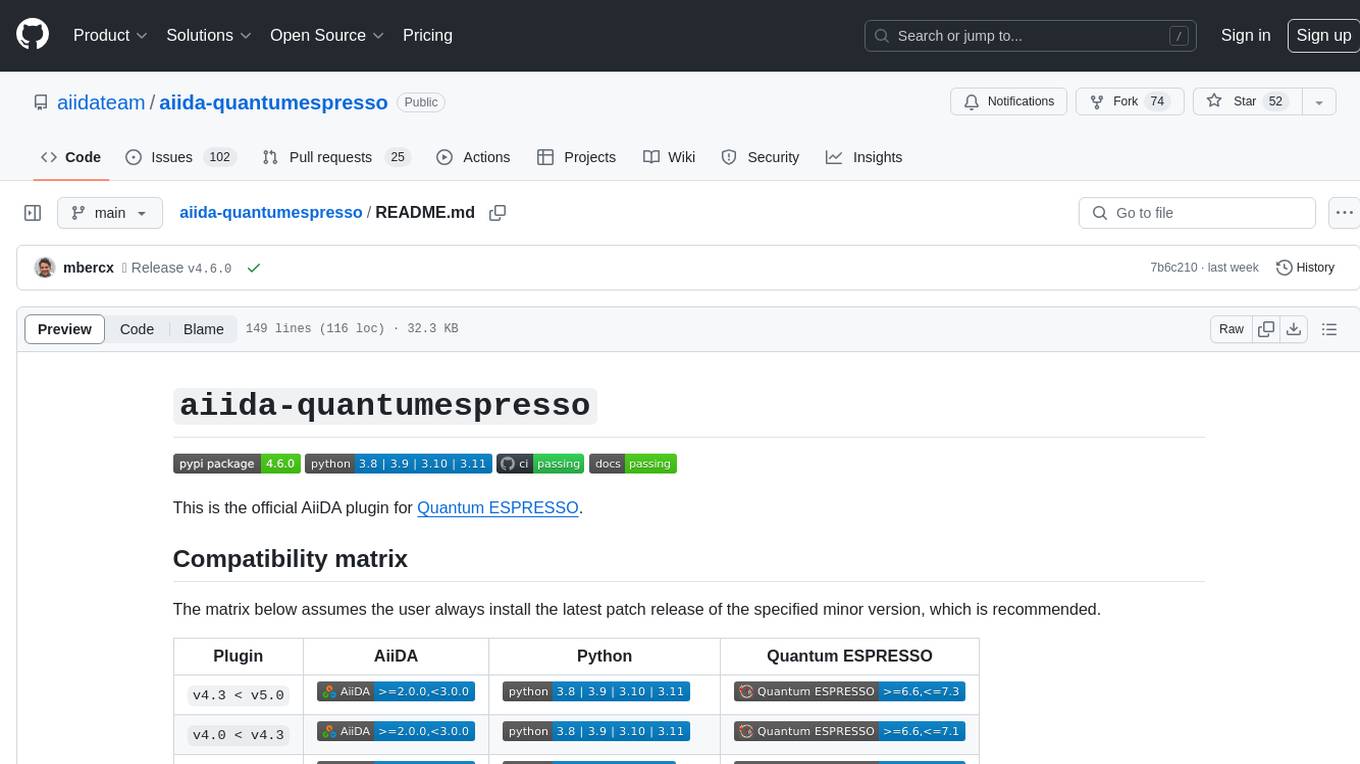

aiida-quantumespresso

The official AiiDA plugin for Quantum ESPRESSO

Stars: 52

README:

This is the official AiiDA plugin for Quantum ESPRESSO.

The matrix below assumes that the user always installs the latest patch release of the specified minor version, which is recommended.

| Plugin | AiiDA | Python | Quantum ESPRESSO |
|---|---|---|---|
| v4.3 < v5.0 | | | |
| v4.0 < v4.3 | | | |
| v3.5 < v4.0 | | | |
| v3.4 < v3.5 | | | |
| v3.3 < v3.4 | | | |
| v3.1 < v3.3 | | | |
| v3.0 < v3.1 | | | |
| v2.0 < v3.0 | | | |

Starting from aiida-quantumespresso==4.0, the last three minor versions of Quantum ESPRESSO are supported.

Older versions are supported up to a maximum of two years.
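The "last three minor versions" rule can be illustrated with a short Python sketch. The helper `latest_minor_versions` is hypothetical and not part of the plugin, and the release list is only an illustrative input; the compatibility matrix remains the authoritative source for what is actually supported.

```python
def latest_minor_versions(releases, count=3):
    """Return the `count` most recent distinct minor versions, newest first.

    Hypothetical helper for illustration; not part of aiida-quantumespresso.
    """
    # Reduce each release string such as "7.3.1" to its (major, minor) pair.
    minors = {tuple(int(part) for part in release.split(".")[:2]) for release in releases}
    # Sort numerically so that e.g. (6, 10) would rank above (6, 9).
    newest = sorted(minors, reverse=True)[:count]
    return [f"{major}.{minor}" for major, minor in newest]


# Illustrative input only; patch releases collapse into their minor version.
print(latest_minor_versions(["6.8", "7.0", "7.1", "7.2", "7.3", "7.3.1"]))
```

Comparing integer tuples rather than strings avoids ordering mistakes once a minor version reaches two digits.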

To install from PyPI, simply execute:

pip install aiida-quantumespresso

or when installing from source:

git clone https://github.com/aiidateam/aiida-quantumespresso

pip install ./aiida-quantumespresso

The plugin comes with a built-in CLI tool: aiida-quantumespresso.

This tool is built using the click library and supports tab-completion.

To enable it, add the following to your shell startup script, e.g. .bashrc or the activate script of your virtual environment:

eval "$(_AIIDA_QUANTUMESPRESSO_COMPLETE=source aiida-quantumespresso)"

The tool comes with various subcommands, for example to quickly launch calculations and workchains.

For example, to launch a test PwCalculation you can run the following command:

aiida-quantumespresso calculation launch pw -X pw-v6.1 -F SSSP/1.1/PBE/efficiency

Note that this requires the code pw-v6.1 and pseudopotential family SSSP/1.1/PBE/efficiency to be configured.
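If you want to script such a launch, for instance to loop over several codes or pseudopotential families, the command can be assembled in Python. The sketch below is only an illustration: `build_pw_launch_command` is a hypothetical helper, not part of the plugin, and it uses only the -X and -F flags shown in the command above.

```python
import shlex


def build_pw_launch_command(code_label, pseudo_family):
    """Assemble the argument list for the `calculation launch pw` command.

    Hypothetical helper; only the -X and -F flags from the example are used.
    """
    return [
        "aiida-quantumespresso", "calculation", "launch", "pw",
        "-X", code_label,
        "-F", pseudo_family,
    ]


command = build_pw_launch_command("pw-v6.1", "SSSP/1.1/PBE/efficiency")
# Print a shell-safe rendering of the command for inspection.
print(shlex.join(command))
# To actually run it (requires a configured AiiDA profile, code and family):
# import subprocess; subprocess.run(command, check=True)
```

Passing the arguments as a list avoids shell quoting problems if a label ever contains spaces.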

See the pseudopotentials section on how to install them easily.

Each command has a fully documented command line interface, which can be printed to screen with the help flag:

aiida-quantumespresso calculation launch ph --help

which should print something like the following:

Usage: aiida-quantumespresso calculation launch ph [OPTIONS]

Run a PhCalculation.

Options:

-X, --code CODE  A single code identified by its ID, UUID or label. [required]

-C, --calculation CALCULATION  A single calculation identified by its ID or UUID. [required]

-k, --kpoints-mesh INTEGER...  The number of points in the kpoint mesh along each basis vector. [default: 1, 1, 1]

-m, --max-num-machines INTEGER  The maximum number of machines (nodes) to use for the calculations. [default: 1]

-w, --max-wallclock-seconds INTEGER  the maximum wallclock time in seconds to set for the calculations. [default: 1800]

-i, --with-mpi  Run the calculations with MPI enabled. [default: False]

-d, --daemon  Submit the process to the daemon instead of running it locally. [default: False]

-h, --help  Show this message and exit.
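To see how the options and defaults listed in this help text combine, here is a minimal sketch that mirrors them with the standard-library argparse module. This is not how the plugin implements its CLI (it uses click); the program name, the code label `ph-v6.1` and the calculation ID `1234` are made up for illustration.

```python
import argparse


def build_parser():
    """Mirror the options of the help text with argparse (not click)."""
    parser = argparse.ArgumentParser(prog="ph-launch-sketch", description="Run a PhCalculation.")
    parser.add_argument("-X", "--code", required=True, help="Code ID, UUID or label.")
    parser.add_argument("-C", "--calculation", required=True, help="Calculation ID or UUID.")
    # Three integers, one per basis vector, defaulting to a 1x1x1 mesh.
    parser.add_argument("-k", "--kpoints-mesh", type=int, nargs=3, default=[1, 1, 1])
    parser.add_argument("-m", "--max-num-machines", type=int, default=1)
    parser.add_argument("-w", "--max-wallclock-seconds", type=int, default=1800)
    # Boolean flags default to False and become True when given.
    parser.add_argument("-i", "--with-mpi", action="store_true")
    parser.add_argument("-d", "--daemon", action="store_true")
    return parser


# Hypothetical invocation: only the required options and the k-point mesh are set.
args = build_parser().parse_args(["-X", "ph-v6.1", "-C", "1234", "-k", "2", "2", "2"])
print(args.kpoints_mesh, args.max_wallclock_seconds, args.daemon)
```

Options that are not passed keep the defaults from the help text, so this invocation would use one machine, a 1800 second wallclock limit, no MPI, and would run locally rather than through the daemon.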

Pseudopotentials are installed and managed through the aiida-pseudo plugin.

The easiest way to install pseudopotentials is to install a version of the SSSP through the CLI of aiida-pseudo.

Simply run

aiida-pseudo install sssp

to install the default SSSP version.

List the installed pseudopotential families with the command aiida-pseudo list.

You can then use the name of any family in the command line using the -F flag.

To run the tests, simply clone and install the package locally with the [tests] optional dependencies:

git clone https://github.com/aiidateam/aiida-quantumespresso

cd aiida-quantumespresso

pip install -e .[tests] # install extra dependencies for test

pytest # run tests

You can also use tox to run the test suite. Here you can use the -e option to specify the Python version for the test run:

pip install tox

tox -e py39 -- tests/calculations/test_pw.py

To contribute to this repository, please enable pre-commit so that the code in your commits conforms to the standards.

Simply install the repository with the pre-commit extra dependencies:

cd aiida-quantumespresso

pip install -e .[pre-commit]

pre-commit install

The aiida-quantumespresso plugin package is released under the MIT license.

See the LICENSE.txt file for more details.

We acknowledge support from:

- the NCCR MARVEL funded by the Swiss National Science Foundation;

- the EU Centre of Excellence "MaX – Materials Design at the Exascale" (Horizon 2020 EINFRA-5, Grant No. 676598; H2020-INFRAEDI-2018-1, Grant No. 824143; HORIZON-EUROHPC-JU-2021-COE-1, Grant No. 101093374);

- the European Union's Horizon 2020 research and innovation programme (Grant No. 957189, project BIG-MAP, also part of the BATTERY 2030+ initiative, Grant No. 957213);

- the swissuniversities P-5 project "Materials Cloud".
