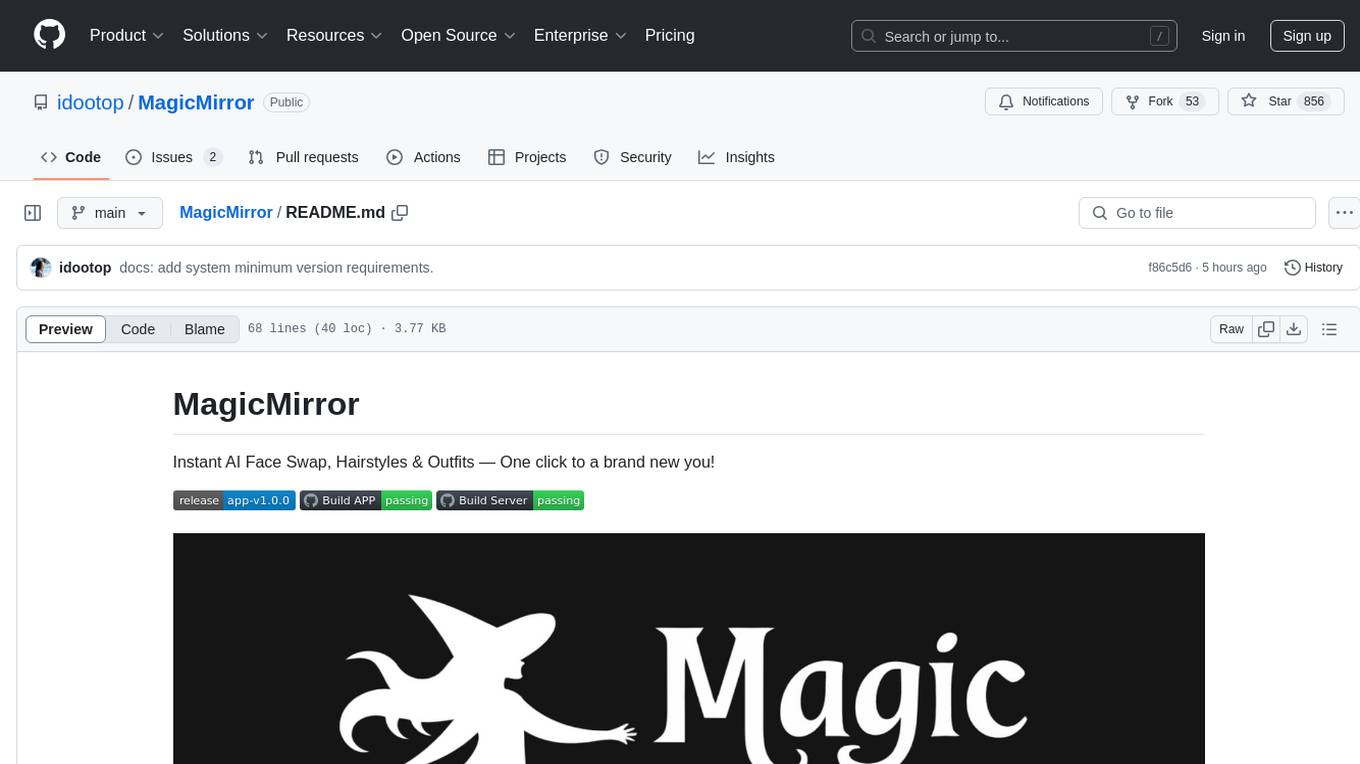

SystemAnimatorOnline

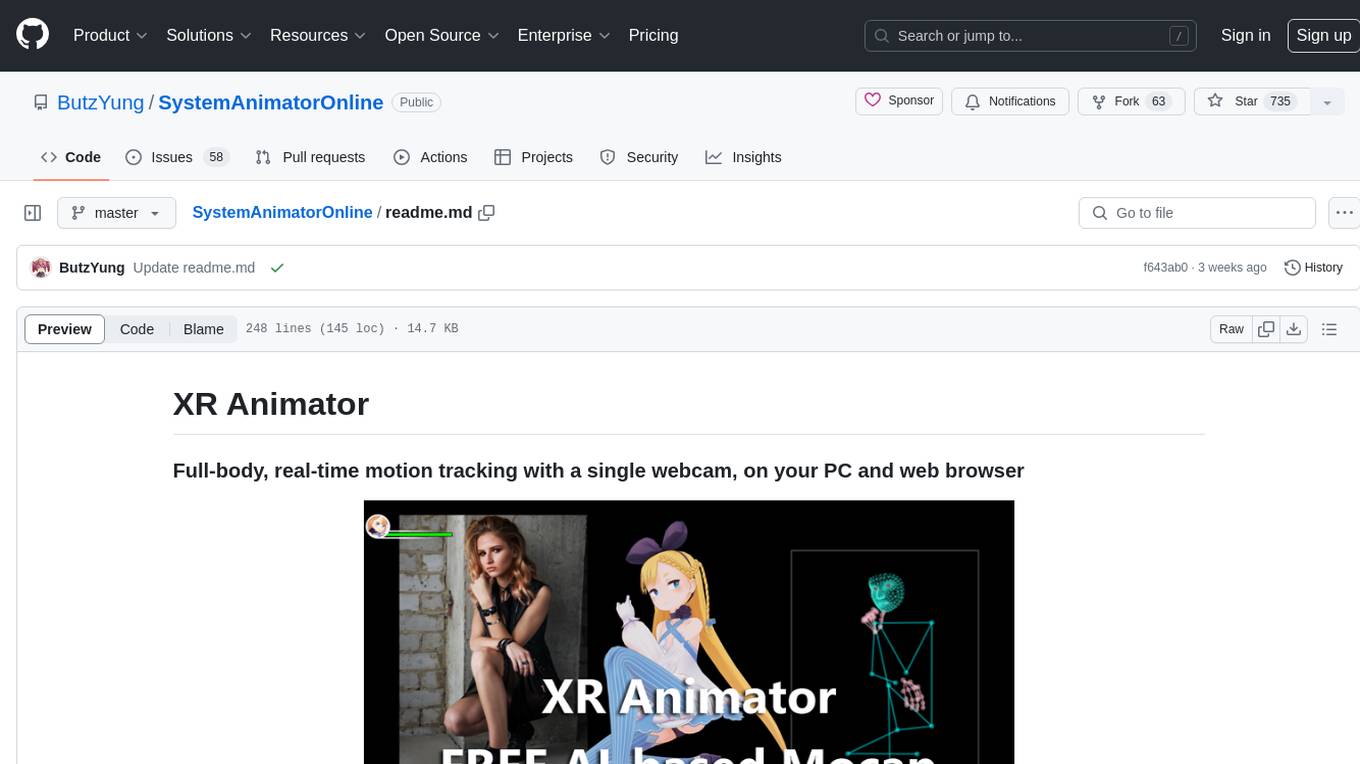

XR Animator, AI-based Full Body Motion Capture and Extended Reality (XR) solution, powered by System Animator Online

Stars: 1285

XR Animator is a video/webcam-based AI motion capture application designed for VTubing and the metaverse era. It uses machine learning solutions to detect 3D poses from a live webcam video, driving a 3D avatar as if controlled by the user's body. It supports full-body AI motion tracking, face tracking, and various XR/3D purposes. The tool can be used for VTubing, recording mocap motion, exporting motions to different formats, customizing backgrounds and scenes, and animating 3D models in other applications. It also supports AR on Android Chrome browser, AR selfie feature, and has relatively low system requirements for wide device compatibility.

README:

XR Animator, inherited from my previous desktop gadget project known as System Animator, is a video/webcam-based AI motion capture application designed for VTubing and the metaverse era. It uses the machine learning (ML) solution from MediaPipe and TensorFlow.js to detect the 3D poses from a live webcam video, which is then used to drive the 3D avatar (MMD/VRM model) as if you are controlling it with your body. It can be used for VTubing and various XR/3D purposes.

It has a variety of motion tracking options. You can choose to track the face, full body, or something in between (any combination of face/body/hands).

The web app version works on all major web browsers on both desktop and smartphone. On browsers supporting both web workers and OffscreenCanvas (e.g. Chrome), it can achieve 60fps visual rendering and 30fps body pose detection on a mediocre PC. On smartphones with limited processing power, you may want to limit it to face tracking.
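
As a rough illustration of the browser capability requirement described above, the sketch below tests whether a global scope exposes both `Worker` and `OffscreenCanvas`. The function name `detectRenderCapabilities` and the result shape are hypothetical and not taken from XR Animator's code; how the app itself detects these features is not stated in this README.

```typescript
// Hypothetical helper, not part of XR Animator: reports whether a global scope
// exposes the two APIs needed for rendering off the main thread.
interface RenderCapabilities {
  hasWorker: boolean;
  hasOffscreenCanvas: boolean;
  canRenderOffMainThread: boolean;
}

function detectRenderCapabilities(scope: Record<string, unknown>): RenderCapabilities {
  // Both APIs are constructors, so `typeof` yields "function" where they exist.
  const hasWorker = typeof scope["Worker"] === "function";
  const hasOffscreenCanvas = typeof scope["OffscreenCanvas"] === "function";
  return {
    hasWorker,
    hasOffscreenCanvas,
    canRenderOffMainThread: hasWorker && hasOffscreenCanvas,
  };
}

// In a browser, pass the real global scope.
const capabilities = detectRenderCapabilities(
  globalThis as unknown as Record<string, unknown>
);
console.log(capabilities);
```

A page could use a result like this to fall back to main-thread rendering, or to a face-only tracking mode, when `canRenderOffMainThread` is false.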

The Windows/Linux/macOS app version (powered by Electron) is also available for download, which provides a few extra features (e.g. VMC-protocol, transparent background) available only in a native-OS environment.

- Support full-body AI motion tracking using a single webcam or media file (image/video)

- Support "Perfect Sync"/ARKit-compatible 52 blendshapes for realistic face tracking

- Support using any MMD/VRM model as your 3D avatar

- Record mocap motion and export it to VMD/BVH/glTF motion format

- Support loading VMD/FBX/BVH format 3D motions

- Export FBX/BVH motions to VMD format

- Support VMC-protocol to animate a 3D model in other VMC-enabled applications such as VSeeFace, VNyan and Warudo (Electron mode only)

- Customize the background and 3D scene with 2D image/video, 3D panorama and 3D objects (.x/.glb format)

- Support using a 2D image as a 3D backdrop by assigning an auto AI-generated depth map (video demo). This can optionally run independently on the Windows background (Electron mode) as a 3D wallpaper gadget, or in web app mode as a "2D-to-3D image viewer"

- Support webcam object tracking, mapping tracked IRL objects to 3D props (video demo)

- Support frameless window with transparent background on video capture apps such as OBS (Electron mode only)

- Support AR (Augmented Reality) on Android Chrome browser
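
To give a feel for what the VMC-protocol feature in the list above sends to a receiving application, here is a minimal sketch of encoding one bone pose. VMC messages are OSC packets sent over UDP, and a bone pose uses the address `/VMC/Ext/Bone/Pos` with a bone name, a position (x, y, z) and a rotation quaternion (x, y, z, w). The helper names (`oscString`, `oscFloat`, `encodeBonePose`) are hypothetical, bone names are assumed to be plain ASCII, and this is not XR Animator's own implementation.

```typescript
// Encode an OSC string: ASCII bytes, NUL-terminated, zero-padded to a multiple of 4.
// Assumption: `text` contains only ASCII characters.
function oscString(text: string): Uint8Array {
  const paddedLength = (Math.floor(text.length / 4) + 1) * 4;
  const bytes = new Uint8Array(paddedLength); // zero-filled by default
  for (let i = 0; i < text.length; i++) {
    bytes[i] = text.charCodeAt(i);
  }
  return bytes;
}

// Encode an OSC float32 argument (big-endian).
function oscFloat(value: number): Uint8Array {
  const bytes = new Uint8Array(4);
  new DataView(bytes.buffer).setFloat32(0, value, false);
  return bytes;
}

// Join byte chunks into one packet.
function concatBytes(parts: Uint8Array[]): Uint8Array {
  const total = parts.reduce((sum, part) => sum + part.length, 0);
  const packet = new Uint8Array(total);
  let offset = 0;
  for (const part of parts) {
    packet.set(part, offset);
    offset += part.length;
  }
  return packet;
}

// Build a single /VMC/Ext/Bone/Pos message: one string plus seven floats.
function encodeBonePose(
  bone: string,
  position: [number, number, number],
  rotation: [number, number, number, number]
): Uint8Array {
  const floats = [...position, ...rotation];
  return concatBytes([
    oscString("/VMC/Ext/Bone/Pos"),
    oscString(",sfffffff"), // OSC type tags: s = string, f = float32
    oscString(bone),
    ...floats.map((value) => oscFloat(value)),
  ]);
}

// Example: hips one unit above the origin with an identity rotation.
const hipsPacket = encodeBonePose("Hips", [0, 1, 0], [0, 0, 0, 1]);
console.log(hipsPacket.length);
```

In an Electron or Node.js environment, a packet like this would typically be sent through a UDP socket to whichever port the receiving application listens on (39539 is a common default among VMC-compatible apps, but check the receiver's settings).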

Check out these video demos and tutorials and watch XR Animator in action!

XR Animator has relatively low system requirements, making it usable on a wide range of devices, including laptops and even smartphones. On an entry-level PC with a GTX1650-class GPU running XR Animator with full-body mocap, you can expect 20+ fps on pose/finger tracking, 40+ fps on face tracking (capped at 30), and 60fps on 3D rendering.

However, if you are using a laptop and experiencing a lower-than-expected frame rate, the app may be using the slower integrated GPU. This is a pretty common problem for laptop users. Configure your graphics card settings and make sure that the faster dedicated GPU is used. Check out the article below if you don't know how.

How to Force Windows to Use Dedicated Graphics

XR Animator and some other demos of System Animator Online support an "Augmented Reality" (AR) mode on mobile phones, which renders 3D models as if they exist in the real world. The AR mode requires a mobile phone that supports Google's ARCore technology, the Chrome browser and the new WebXR API. Follow the steps below.

- Check here for a list of ARCore-supported devices and see if your device is supported.

- Install Google Play Services for AR (ARCore) on Google Play.

- Install Chrome browser for Android.

Are you ready for the AR experience? Check out the online version of XR Animator on your Android Chrome browser!

After the page has been fully loaded, click on the little phone button on the top-left (or bottom-left) menu to activate the AR mode. Once the AR mode is enabled, you will see what your phone's camera is showing. Move your camera around the ground where you want to place the 3D model, and a white circle should appear. Double-tap on the screen, and the 3D model will be placed over the white circle. Double-tap again to re-summon the white circle if you want to place the model elsewhere.
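
For readers curious about the WebXR requirement mentioned earlier, the following sketch shows one way a page could check whether AR sessions are available before offering an AR button. It relies on the WebXR Device API's `isSessionSupported("immersive-ar")` call. The `XrSystemLike` type, the `ArSupport` result values and the `checkArSupport` function are hypothetical names introduced for this example, and this is not necessarily how XR Animator performs the check.

```typescript
// Minimal structural type for the one WebXR call used here.
// In a browser, `navigator.xr` satisfies it when WebXR is available.
interface XrSystemLike {
  isSessionSupported(mode: string): Promise<boolean>;
}

type ArSupport = "supported" | "no-webxr" | "no-immersive-ar";

async function checkArSupport(xr: XrSystemLike | undefined): Promise<ArSupport> {
  if (!xr) {
    // The browser does not expose WebXR at all.
    return "no-webxr";
  }
  try {
    const supported = await xr.isSessionSupported("immersive-ar");
    return supported ? "supported" : "no-immersive-ar";
  } catch {
    // The query itself can be rejected, e.g. by a permissions policy.
    return "no-immersive-ar";
  }
}

// Browser usage (left as a comment because `navigator` may not exist elsewhere):
// checkArSupport((navigator as { xr?: XrSystemLike }).xr).then(console.log);
```

On a phone without ARCore or without a WebXR-capable Chrome, a check like this would be expected to return one of the two unsupported values, which a page could use to hide or disable its AR button.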

Check out these YouTube videos for demonstration.

The future of XR Animator relies on your support🙇 Some IRL family issues have significantly increased my financial burden. While it was fun to develop the app, financial return was next to minimal. Reality forces me to evaluate the sustainability of this project, or soon I will have to give up...😢

If you like XR Animator, please consider making a donation🙇 Or even better, join my membership with perks such as EARLY ACCESS to the latest version of XR Animator (at least 5 months ahead of the public release on GitHub), insider stories/tips and other benefits🎁 Sponsor us, and help keep this project free and sustainable🙏

XR Animator is currently sponsored by the following people❤️

- NewruGuru, Kai, Nymph, KuraiNoOni, LouLi Lou, coffee-addict, skeh, Swoonifer, Nyaarium, The Infamous Moistener, Kyonko_VT, CoCoNo, Motoko Library

- Other supporters

System Animator was originally a desktop gadget project, born more than 10 years ago. The latest version, System Animator Online, is a major version advancement with focus on working as a web app instead of being just a desktop gadget. It fully supports MikuMikuDance (MMD) models and motions, as well as the latest VRM models and FBX/BVH motions, to create an immersive 3D environment.

It's hard to describe what System Animator Online can do in a few words. From a simple animated CPU meter to an interactive 3D music visualizer, from a simple AR gadget on your phone to a full-body motion tracking app on your PC, the possibilities are endless.

For more information about the desktop gadget version of System Animator, please visit the following page. https://www.animetheme.com/sidebar/

System Animator was born more than 10 years ago as a personal and tiny 100-line-ish JavaScript desktop gadget project for Windows Vista which shows an animated rocket Anime girl as a CPU meter (the animation is still in XR Animator).

As time went by, I decided to add more features: a multi-purpose system meter, music visualizer, 3D/MMD support, animated wallpaper engine, RPG engine and eventually what you see in XR Animator. The codebase has grown exponentially while the core is still an Internet-Explorer-based JavaScript gadget, and things became more and more clumsy, to the point where I had to decide whether to rewrite everything from scratch to match modern coding standards (open source, module based, etc). However, I gave up and decided to carry on with what I had written, as a total restart would require too much time and effort, probably not worth it for a personal project. Besides, as the rule of programming says, "If it works, don't touch it" LOL

Eventually, I decided to put the project on GitHub for my own convenience, but technically speaking you can consider it open source, though I have to admit that some of the code is outdated, clumsy and confusing. Everything is fine if you are just an end-user of XR Animator/System Animator as an app, but if you want to build your own things from my code, be warned that it can be pretty incomprehensible LOL

- 3D Miku The Dancer (drop any MP3 and she will dance for you)

- 3D Multiplayer RPG (up to 3 players)

All demos support the use of a custom MMD (MikuMikuDance) model. Drop a zip of your favorite MMD model at the beginning, press the START button, and the demo will proceed with your model instead of the default one.

- License (CC BY-NC-SA 4.0) - http://creativecommons.org/licenses/by-nc-sa/4.0/

- This license applies if you are adapting XR Animator's source code for your own purpose, such as building another software or service.

- This license does not cover any third-party assets which may have incompatible licenses of their own.

- This license does not apply to content generated from the functionality of XR Animator, such as video content generated from the motion capture feature of System Animator using your own assets. XR Animator claims no right or responsibility over such content.

- System Animator © Butz Yung/Anime Theme - http://www.animetheme.com/sidebar/

- jThree v2 (NOTE: jThree has been discontinued. Its successor is known as "Grimoire.js")

- ammo.js, a port of Bullet Physics to JavaScript, zlib licensed

- JSZip (used under MIT license)

- "Appearance Miku" MMD Model - Readme/License

- Some texture/image/icon sources https://3dtextures.me/ https://opengameart.org/content/rpg-inventory https://opengameart.org/content/fantasy-icon-pack-by-ravenmore-0 https://opengameart.org/content/potion-bottles https://www.flaticon.com/ https://www.iconfinder.com/ https://icon-icons.com/en/pack/Social-Distancing/2274 https://github.com/icons8/flat-color-icons https://www.behance.net/gallery/41818673/FREE-SPORT-ICONS

- Simple Explosion by Bleed https://remusprites.carbonmade.com/ https://opengameart.org/content/simple-explosion-bleeds-game-art

- Various 3D background effects ported and modified from codes found on Shadertoy

- Some icons and backgrounds from Freepik

- For some other third-party programming libraries/3D data/assets used in System Animator, please refer to the corresponding script/readme for license and terms (can be found on the downloadable/Github version of System Animator).

- もぐ式りょう/りく/りょく/りん by Mogg https://3d.nicovideo.jp/works/td55798 https://3d.nicovideo.jp/works/td55973 https://3d.nicovideo.jp/works/td56074 https://3d.nicovideo.jp/works/td56604

- "Stranger Things" - A Remix ft. Michael Jobity https://soundcloud.com/foreignmachine/stranger-remix

- Dragon Ball Super I Ultra Instinct OST I Clash of Gods Remix I Hip Hop Instrumental I @AndrezoWorks https://www.youtube.com/watch?v=KJ71dY4mkNo

- Credits are given to the authors of any other image/media files used in System Animator.

- Twitter: https://twitter.com/butz_yung

- Discord: https://discord.gg/Xs4YEMVtkx

- Ko-fi: https://ko-fi.com/butzyung

- FANBOX: https://xra.fanbox.cc/

- Homepage (System Animator): https://www.animetheme.com/sidebar/

- Email: [email protected]

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for SystemAnimatorOnline

Similar Open Source Tools

SystemAnimatorOnline

XR Animator is a video/webcam-based AI motion capture application designed for VTubing and the metaverse era. It uses machine learning solutions to detect 3D poses from a live webcam video, driving a 3D avatar as if controlled by the user's body. It supports full-body AI motion tracking, face tracking, and various XR/3D purposes. The tool can be used for VTubing, recording mocap motion, exporting motions to different formats, customizing backgrounds and scenes, and animating 3D models in other applications. It also supports AR on Android Chrome browser, AR selfie feature, and has relatively low system requirements for wide device compatibility.

aitools_client

Seth's AI Tools is a Unity-based front-end that interfaces with various AI APIs to perform tasks such as generating Twine games, quizzes, posters, and more. The tool is a native Windows application that supports features like live update integration with image editors, text-to-image conversion, image processing, mask painting, and more. It allows users to connect to multiple servers for fast generation using GPUs and offers a neat workflow for evolving images in real-time. The tool respects user privacy by operating locally and includes built-in games and apps to test AI/SD capabilities. Additionally, it features an AI Guide for creating motivational posters and illustrated stories, as well as an Adventure mode with presets for generating web quizzes and Twine game projects.

Generative-AI-Pharmacist

Generative AI Pharmacist is a project showcasing the use of generative AI tools to create an animated avatar named Macy, who delivers medication counseling in a realistic and professional manner. The project utilizes tools like Midjourney for image generation, ChatGPT for text generation, ElevenLabs for text-to-speech conversion, and D-ID for creating a photorealistic talking avatar video. The demo video featuring Macy discussing commonly-prescribed medications demonstrates the potential of generative AI in healthcare communication.

Aimmy

Aimmy is a universal AI-Based Aim Alignment Mechanism developed by BabyHamsta, MarsQQ & Taylor to make gaming more accessible for users who have difficulty aiming. It utilizes DirectML, ONNX, and YOLOV8 for player detection, offering high accuracy and fast performance. Aimmy features an easy-to-use UI, extensive customizability, and is free of ads and paywalls. It is designed for gamers facing challenges like physical or mental disabilities, poor hand-eye coordination, or aiming difficulties due to environmental factors. Aimmy provides various features like AI detection, customizability, anti-recoil system, mouse movement methods, hotswappability, and a model/configuration store with repository support.

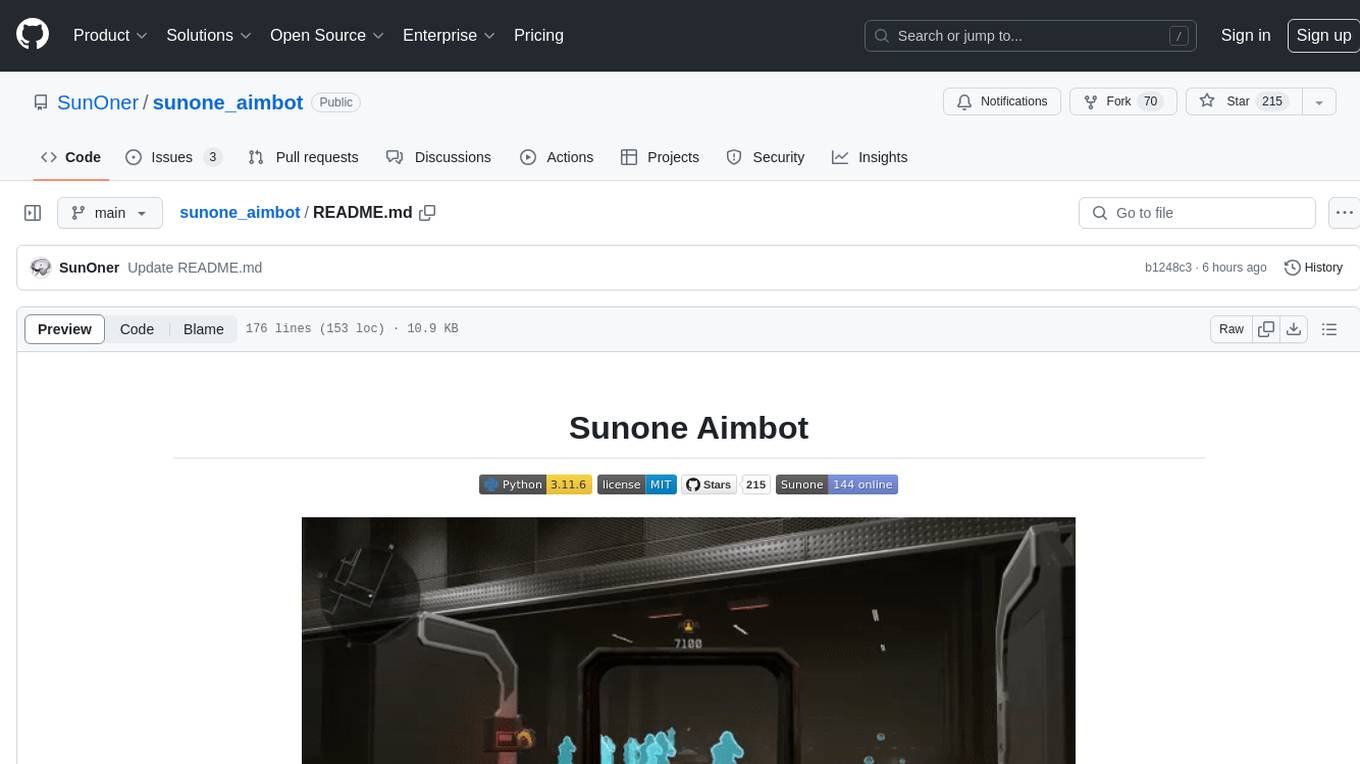

sunone_aimbot

Sunone Aimbot is an AI-powered aim bot for first-person shooter games. It leverages YOLOv8 and YOLOv10 models, PyTorch, and various tools to automatically target and aim at enemies within the game. The AI model has been trained on more than 30,000 images from popular first-person shooter games like Warface, Destiny 2, Battlefield 2042, CS:GO, Fortnite, The Finals, CS2, and more. The aimbot can be configured through the `config.ini` file to adjust various settings related to object search, capture methods, aiming behavior, hotkeys, mouse settings, shooting options, Arduino integration, AI model parameters, overlay display, debug window, and more. Users are advised to follow specific recommendations to optimize performance and avoid potential issues while using the aimbot.

FreeChat

FreeChat is a native LLM appliance for macOS that runs completely locally. Download it and ask your LLM a question without doing any configuration. A local/llama version of OpenAI's chat without login or tracking. You should be able to install from the Mac App Store and use it immediately.

CodeProject.AI-Server

CodeProject.AI Server is a standalone, self-hosted, fast, free, and open-source Artificial Intelligence microserver designed for any platform and language. It can be installed locally without the need for off-device or out-of-network data transfer, providing an easy-to-use solution for developers interested in AI programming. The server includes a HTTP REST API server, backend analysis services, and the source code, enabling users to perform various AI tasks locally without relying on external services or cloud computing. Current capabilities include object detection, face detection, scene recognition, sentiment analysis, and more, with ongoing feature expansions planned. The project aims to promote AI development, simplify AI implementation, focus on core use-cases, and leverage the expertise of the developer community.

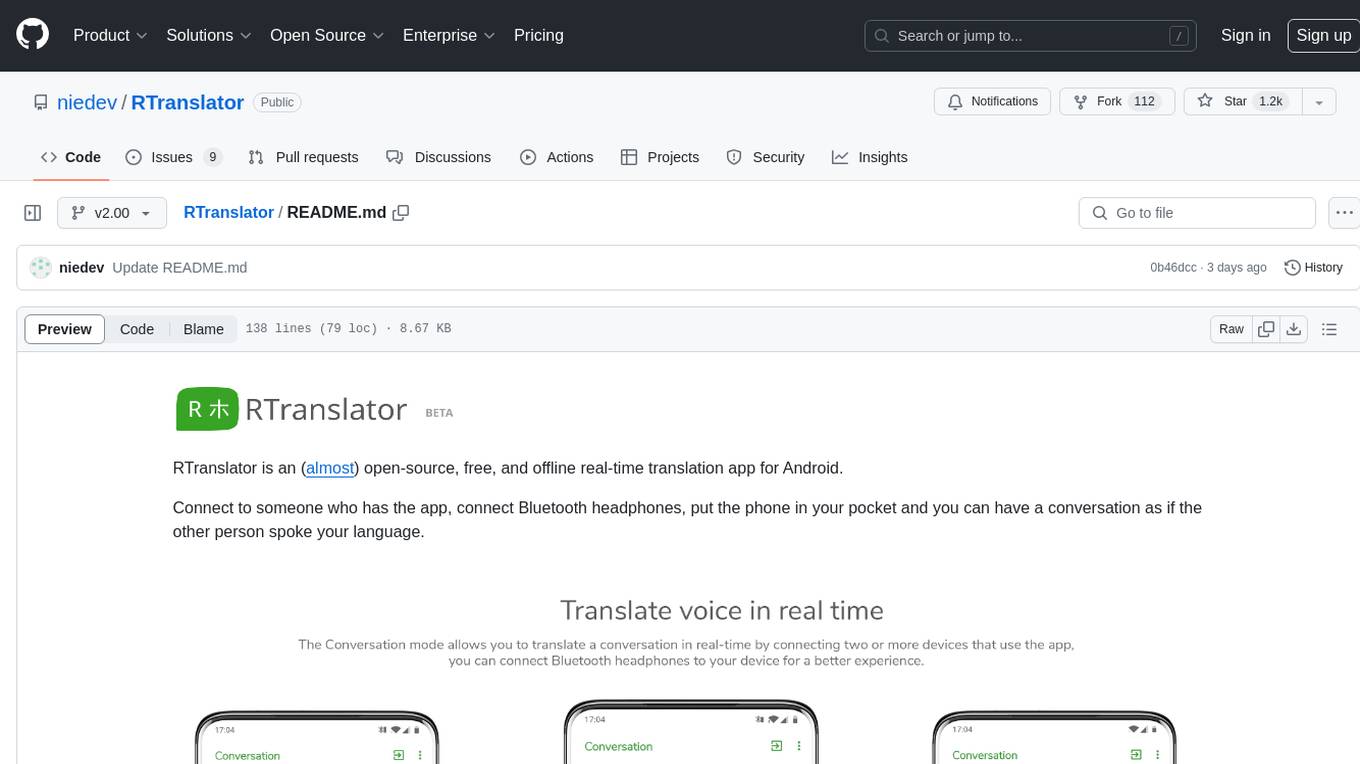

RTranslator

RTranslator is an almost open-source, free, and offline real-time translation app for Android. It offers Conversation mode for multi-user translations, WalkieTalkie mode for quick conversations, and Text translation mode. It uses Meta's NLLB for translation and OpenAi's Whisper for speech recognition, ensuring privacy. The app is optimized for performance and supports multiple languages. It is ad-free and donation-supported.

NeuroSync_Player

NeuroSync Player is a real-time AI endpoint server that combines text-to-speech and NeuroSync generations. It includes code for various AI endpoints such as speech-to-text, text-to-speech, embedding, and vision. The tool allows users to connect their llm to Twitch and YouTube, enabling the llm-powered metahuman to respond to viewers in real-time. Additionally, it offers features like push-to-talk, face animation integration, and support for blendshapes generated from audio inputs for Unreal Engine 5. Users can train and fine-tune their own models using NeuroSync Trainer Lite, with simplified loss functions and mixed precision for faster training. The tool also supports data augmentation to help with fine detail reproduction.

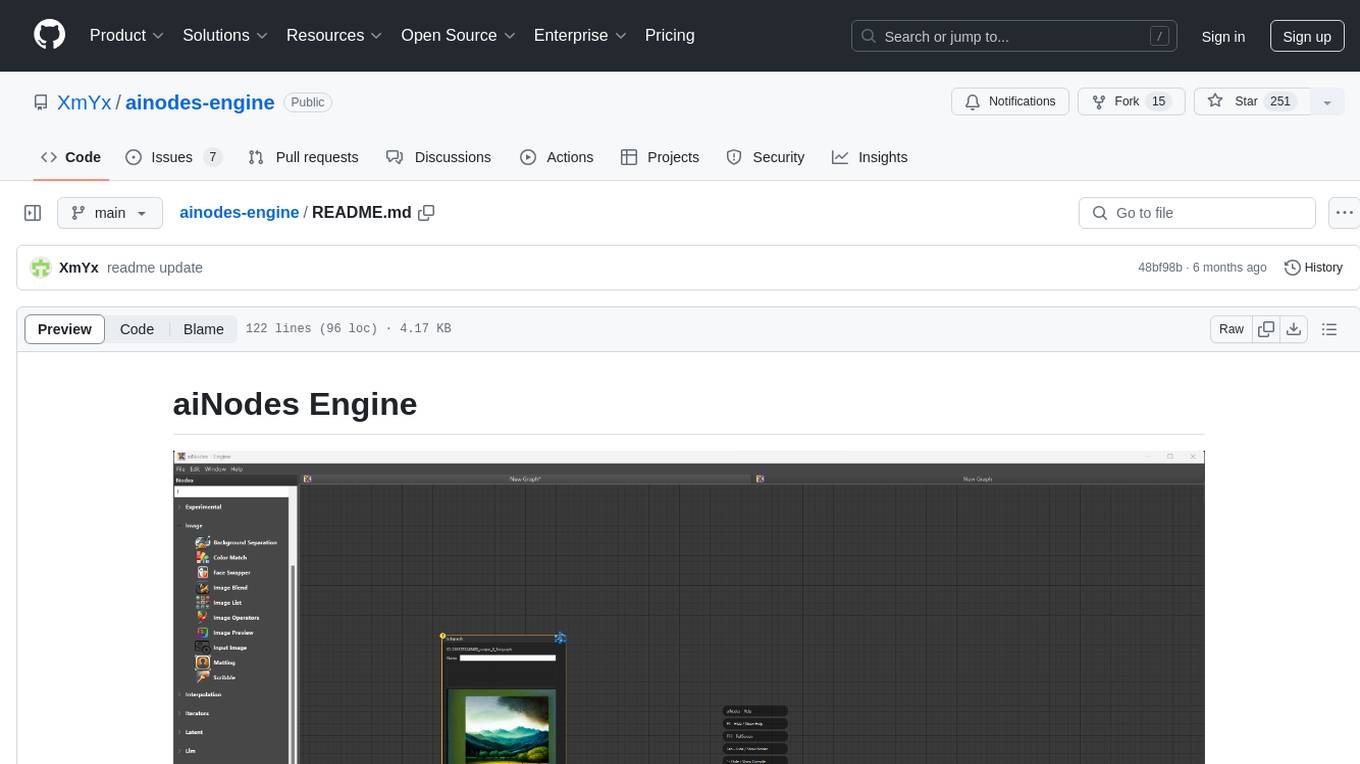

ainodes-engine

aiNodes Engine is a Python-based AI image/motion picture generator node engine with a live execution chain, python code editor node, and plug-in support. It offers full modularity, colored background drop, and easy node creation with IDE annotations. The project is officially supported by Deforum and incorporates various open-source projects like ComfyUI. It is designed to be flexible, with an Unreal-like execution chain, supporting features such as Deforum, Stable Diffusion, Upscalers, Kandinsky, ControlNet, and more. The engine allows for background separation, human matting/masking, compositing, drag and drop, subgraphs, and graph saving/loading from image metadata. It aims to provide a unique, controllable manner of working with a strict user-declared execution chain.

dream-textures

Dream Textures is a tool integrated into Blender that allows users to create textures, concept art, background assets, and more using simple text prompts. It offers features like seamless texture creation, texture projection for entire scenes, restyling animations, and running models on the user's machine for faster iteration. The tool supports CUDA and Apple Silicon GPUs, with over 4GB of VRAM recommended. Users can troubleshoot issues by checking Blender's system console or seeking help from the community on Discord.

pyOllaMx

PyOllaMx is a chatbot application that serves as a gateway to both Ollama and Apple MlX models. It allows users to chat with these models through a native MacOS interface, eliminating the need for external applications like Bing or Chat-GPT. The tool provides a versatile and user-friendly experience for interacting with different models, enabling tasks such as model discovery, model management, chatting, and web searching. PyOllaMx simplifies the workflow for users who want to leverage Ollama and MlX models on their Mac devices.

chatgpt-universe

ChatGPT is a large language model that can generate human-like text, translate languages, write different kinds of creative content, and answer your questions in a conversational way. It is trained on a massive amount of text data, and it is able to understand and respond to a wide range of natural language prompts. Here are 5 jobs suitable for this tool, in lowercase letters: 1. content writer 2. chatbot assistant 3. language translator 4. creative writer 5. researcher

Godot4ThirdPersonCombatPrototype

Godot4ThirdPersonCombatPrototype is a base project for third person combat, featuring player movement and camera controls with lock-on functionality. It includes setups for models, animations, AI behavior, state machines, audio, and custom resources. The project aims to provide a foundation for developers to create third-person combat mechanics in their games.

MagicMirror

MagicMirror is an AI-powered tool that allows users to instantly try on new faces, hairstyles, and outfits with a simple drag and drop interface. It runs smoothly on standard computers without the need for dedicated GPU hardware, ensuring privacy with completely offline processing. The tool is ultra-lightweight with a small installer size and model files, providing a fun and easy way to experiment with different looks.

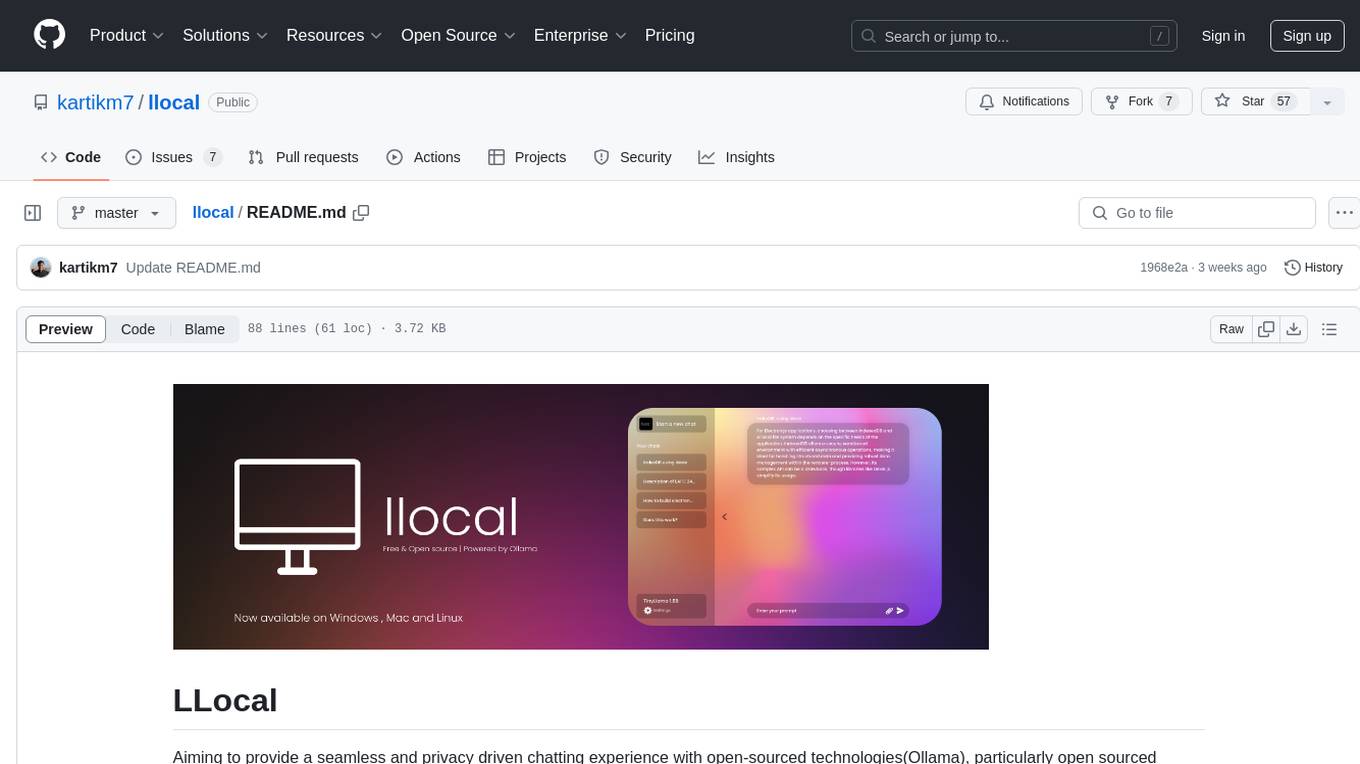

llocal

LLocal is an Electron application focused on providing a seamless and privacy-driven chatting experience using open-sourced technologies, particularly open-sourced LLM's. It allows users to store chats locally, switch between models, pull new models, upload images, perform web searches, and render responses as markdown. The tool also offers multiple themes, seamless integration with Ollama, and upcoming features like chat with images, web search improvements, retrieval augmented generation, multiple PDF chat, text to speech models, community wallpapers, lofi music, speech to text, and more. LLocal's builds are currently unsigned, requiring manual builds or using the universal build for stability.

For similar tasks

SystemAnimatorOnline

XR Animator is a video/webcam-based AI motion capture application designed for VTubing and the metaverse era. It uses machine learning solutions to detect 3D poses from a live webcam video, driving a 3D avatar as if controlled by the user's body. It supports full-body AI motion tracking, face tracking, and various XR/3D purposes. The tool can be used for VTubing, recording mocap motion, exporting motions to different formats, customizing backgrounds and scenes, and animating 3D models in other applications. It also supports AR on Android Chrome browser, AR selfie feature, and has relatively low system requirements for wide device compatibility.

Open-LLM-VTuber-Web

Open LLM Vtuber is an Electron application built using React and TypeScript. It allows users to create virtual avatars for live streaming or video content creation. The application provides a user-friendly interface for customizing avatars and integrating them into various streaming platforms. With recommended IDE setup including VSCode, ESLint, and Prettier, users can easily develop and customize their virtual avatars. The project setup involves installation, development, and building for different operating systems such as Windows, macOS, and Linux.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM: * Set LLM usage limits for users on different pricing tiers * Track LLM usage on a per user and per organization basis * Block or redact requests containing PIIs * Improve LLM reliability with failovers, retries and caching * Distribute API keys with rate limits and cost limits for internal development/production use cases * Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.