AI tools for flicker

Related Tools:

Kameleoon

Kameleoon is an AI-driven A/B testing platform and personalization tool designed to optimize web experiences through experimentation and feature management. It offers a single platform with AI-powered conversion capabilities, strong security features, and powerful integrations. Kameleoon caters to a wide range of industries, including E-commerce, Retail, Travel, Automotive, Financial Services, Media, Healthcare, and B2B SaaS. The platform enables users to run experiments, personalize content, manage features, and analyze real-time data to enhance user experiences and drive growth.

TensorPix

TensorPix is an online AI-powered video enhancer and upscaler that can improve and upscale videos in less than 3 minutes. It is a cloud-based service that can be used to enhance videos from any device, including smartphones and tablets. TensorPix uses AI to enhance video quality, including resolution, framerate, and color correction. It can also remove flickering, film dirt, and interlacing artifacts from old videos. TensorPix is used by thousands of users, including filmmakers, studios, and businesses. It is a powerful tool that can help you improve the quality of your videos and images.

neocodeium

NeoCodeium is a free AI completion plugin powered by Codeium, designed for Neovim users. It aims to provide a smoother experience by eliminating flickering suggestions and allowing for repeatable completions using the `.` key. The plugin offers performance improvements through cache techniques, displays suggestion count labels, and supports Lua scripting. Users can customize keymaps, manage suggestions, and interact with the AI chat feature. NeoCodeium enhances code completion in Neovim, making it a valuable tool for developers seeking efficient coding assistance.

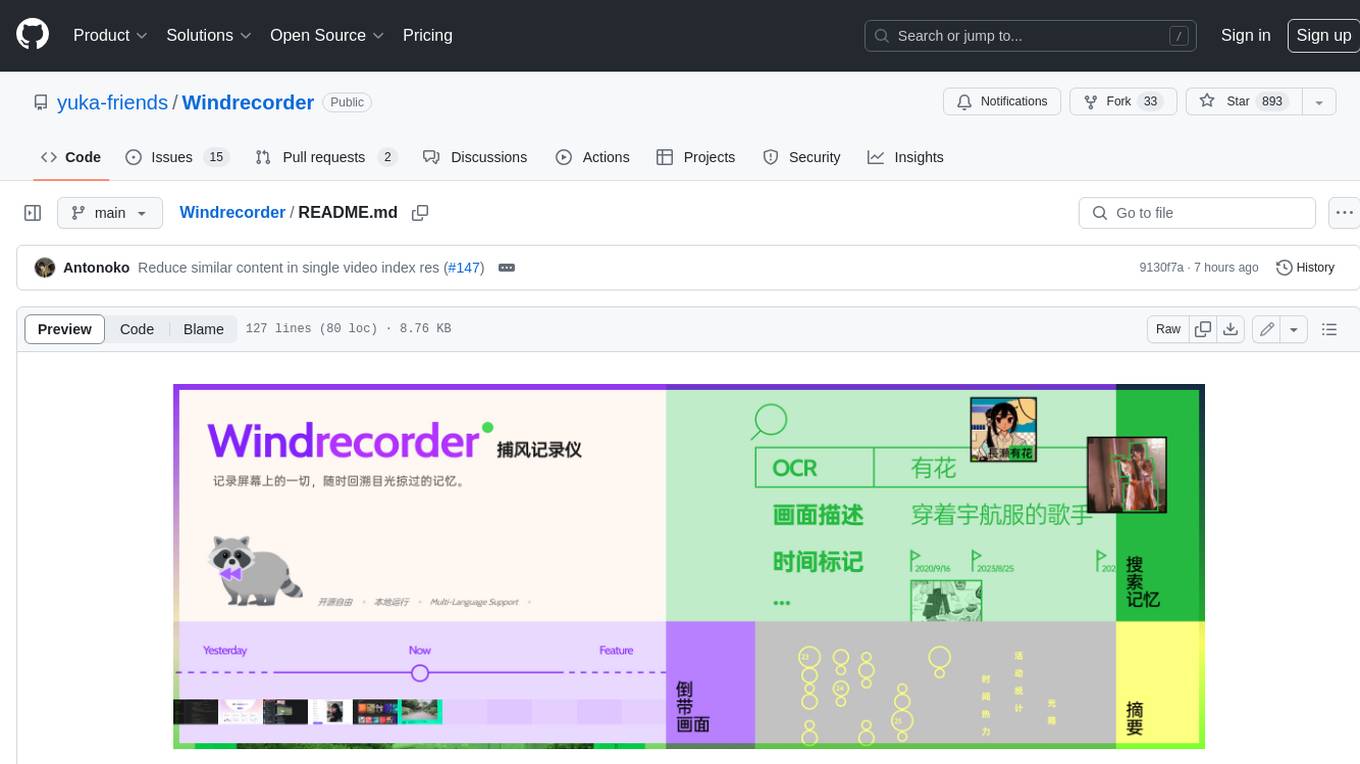

Windrecorder

Windrecorder is an open-source tool that helps you retrieve memory cues by recording everything on your screen. It can search based on OCR text or image descriptions and provides a summary of your activities. All of its capabilities run entirely locally, without the need for an internet connection or uploading any data, giving you complete ownership of your data.

fount

fount is a character card frontend page that decouples AI sources, AI characters, user personas, dialogue environments, and AI plugins, allowing them to be freely combined to spark infinite possibilities. It serves as a bridge connecting imagination and reality, a lighthouse guiding characters and stories, and a free garden for AI sources, characters, personas, dialogue environments, and plugins to grow and bloom. It integrates AI sources without the need for reverse proxy servers, improves web experience with features like multi-device synchronization and unfiltered HTML rendering, and extends companionship beyond the web by connecting characters to Discord groups and providing gentle reminders through fount-pwsh. For character creators, fount offers infinite possibilities with JavaScript or TypeScript code customization, execution of code without filtering, loading npm packages, and creating custom HTML pages. It encourages extension through modularization and community contributions.

CogAgent

CogAgent is an advanced intelligent agent model designed for automating operations on graphical interfaces across various computing devices. It supports platforms like Windows, macOS, and Android, enabling users to issue commands, capture device screenshots, and perform automated operations. The model requires a minimum of 29GB of GPU memory for inference at BF16 precision and offers capabilities for executing tasks like sending Christmas greetings and sending emails. Users can interact with the model by providing task descriptions, platform specifications, and desired output formats.

OpenAI-CLIP-Feature

This repository provides code for extracting image and text features using OpenAI CLIP models, supporting both global and local grid visual features. It aims to facilitate multi visual-and-language downstream tasks by allowing users to customize input and output grid resolution easily. The extracted features have shown comparable or superior results in image captioning tasks without hyperparameter tuning. The repo supports various CLIP models and provides detailed information on supported settings and results on MSCOCO image captioning. Users can get started by setting up experiments with the extracted features using X-modaler.

airport-codes

A website that tries to make sense of those three-letter airport codes. It provides detailed information about each airport, including its name, location, and a description. The site also includes a search function that allows users to find airports by name, city, or country. Airport content can be found in `/data` in individual files. Use the three-letter airport code as the filename (e.g. `phx.json`). Content in each `json` file: `id` = three-letter code (e.g. phx), `name` = airport name (Sky Harbor International Airport), `city` = primary city name (Phoenix), `state` = state name, if applicable (Arizona), `stateShort` = state abbreviation, if applicable (AZ), `country` = country name (USA), `description` = description, accepts markdown, use * for emphasis on letters, `imageCredit` = name of photographer, `imageCreditLink` = URL of photographer's Flickr page. You can also optionally add for aid in searching: `city2` = another city or country the airport may be known for. Adding a `json` file to `/data` will automatically render it. You do not need to manually add the path anywhere.

InternVL

InternVL scales up the ViT to _**6B parameters**_ and aligns it with LLM. It is a vision-language foundation model that can perform various tasks, including: **Visual Perception** - Linear-Probe Image Classification - Semantic Segmentation - Zero-Shot Image Classification - Multilingual Zero-Shot Image Classification - Zero-Shot Video Classification **Cross-Modal Retrieval** - English Zero-Shot Image-Text Retrieval - Chinese Zero-Shot Image-Text Retrieval - Multilingual Zero-Shot Image-Text Retrieval on XTD **Multimodal Dialogue** - Zero-Shot Image Captioning - Multimodal Benchmarks with Frozen LLM - Multimodal Benchmarks with Trainable LLM - Tiny LVLM InternVL has been shown to achieve state-of-the-art results on a variety of benchmarks. For example, on the MMMU image classification benchmark, InternVL achieves a top-1 accuracy of 51.6%, which is higher than GPT-4V and Gemini Pro. On the DocVQA question answering benchmark, InternVL achieves a score of 82.2%, which is also higher than GPT-4V and Gemini Pro. InternVL is open-sourced and available on Hugging Face. It can be used for a variety of applications, including image classification, object detection, semantic segmentation, image captioning, and question answering.

AGI-Papers

This repository contains a collection of papers and resources related to Large Language Models (LLMs), including their applications in various domains such as text generation, translation, question answering, and dialogue systems. The repository also includes discussions on the ethical and societal implications of LLMs. **Description** This repository is a collection of papers and resources related to Large Language Models (LLMs). LLMs are a type of artificial intelligence (AI) that can understand and generate human-like text. They have a wide range of applications, including text generation, translation, question answering, and dialogue systems. **For Jobs** - **Content Writer** - **Copywriter** - **Editor** - **Journalist** - **Marketer** **AI Keywords** - **Large Language Models** - **Natural Language Processing** - **Machine Learning** - **Artificial Intelligence** - **Deep Learning** **For Tasks** - **Generate text** - **Translate text** - **Answer questions** - **Engage in dialogue** - **Summarize text**

llm-course

The llm-course repository is a collection of resources and materials for a course on Legal and Legislative Drafting. It includes lecture notes, assignments, readings, and other educational materials to help students understand the principles and practices of drafting legal documents. The course covers topics such as statutory interpretation, legal drafting techniques, and the role of legislation in the legal system. Whether you are a law student, legal professional, or someone interested in understanding the intricacies of legal language, this repository provides valuable insights and resources to enhance your knowledge and skills in legal drafting.

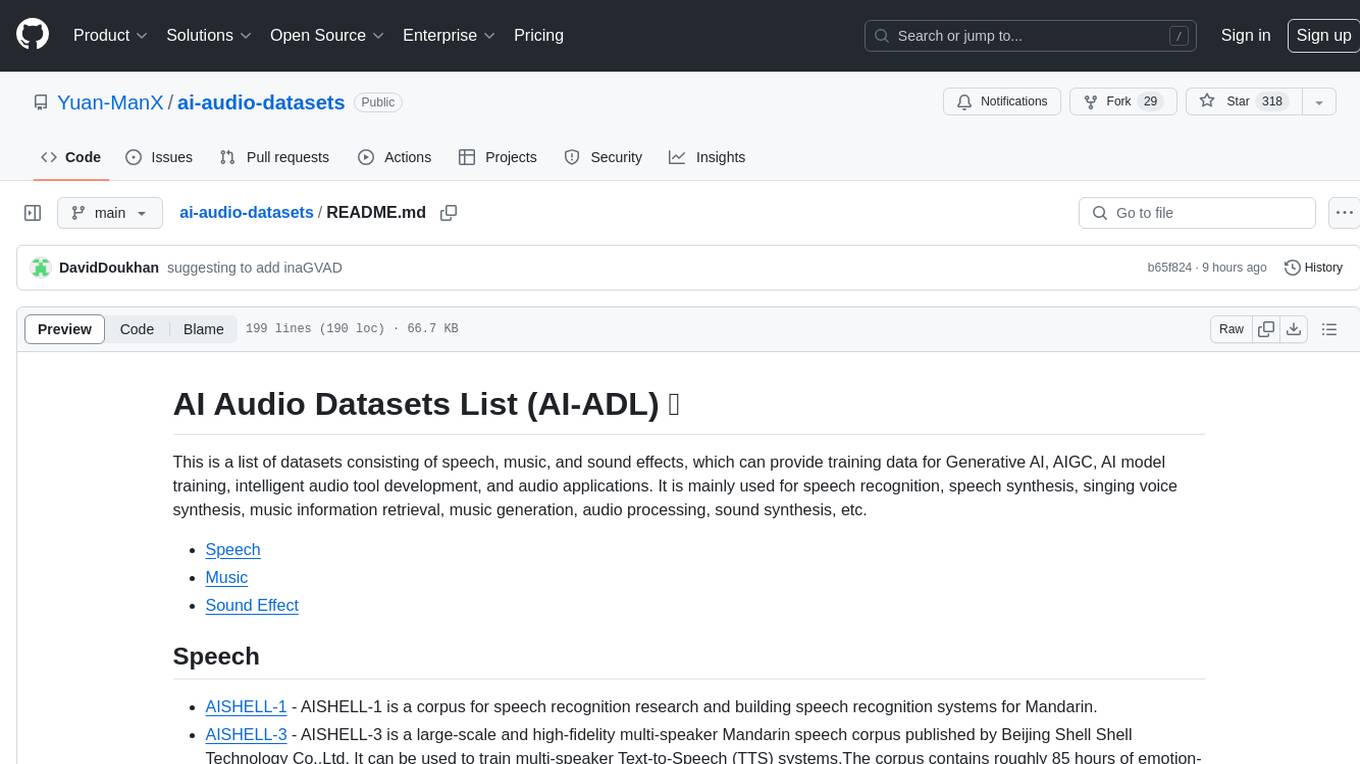

ai-audio-datasets

AI Audio Datasets List (AI-ADL) is a comprehensive collection of datasets consisting of speech, music, and sound effects, used for Generative AI, AIGC, AI model training, and audio applications. It includes datasets for speech recognition, speech synthesis, music information retrieval, music generation, audio processing, sound synthesis, and more. The repository provides a curated list of diverse datasets suitable for various AI audio tasks.

llamabot

LlamaBot is a Pythonic bot interface to Large Language Models (LLMs), providing an easy way to experiment with LLMs in Jupyter notebooks and build Python apps utilizing LLMs. It supports all models available in LiteLLM. Users can access LLMs either through local models with Ollama or by using API providers like OpenAI and Mistral. LlamaBot offers different bot interfaces like SimpleBot, ChatBot, QueryBot, and ImageBot for various tasks such as rephrasing text, maintaining chat history, querying documents, and generating images. The tool also includes CLI demos showcasing its capabilities and supports contributions for new features and bug reports from the community.