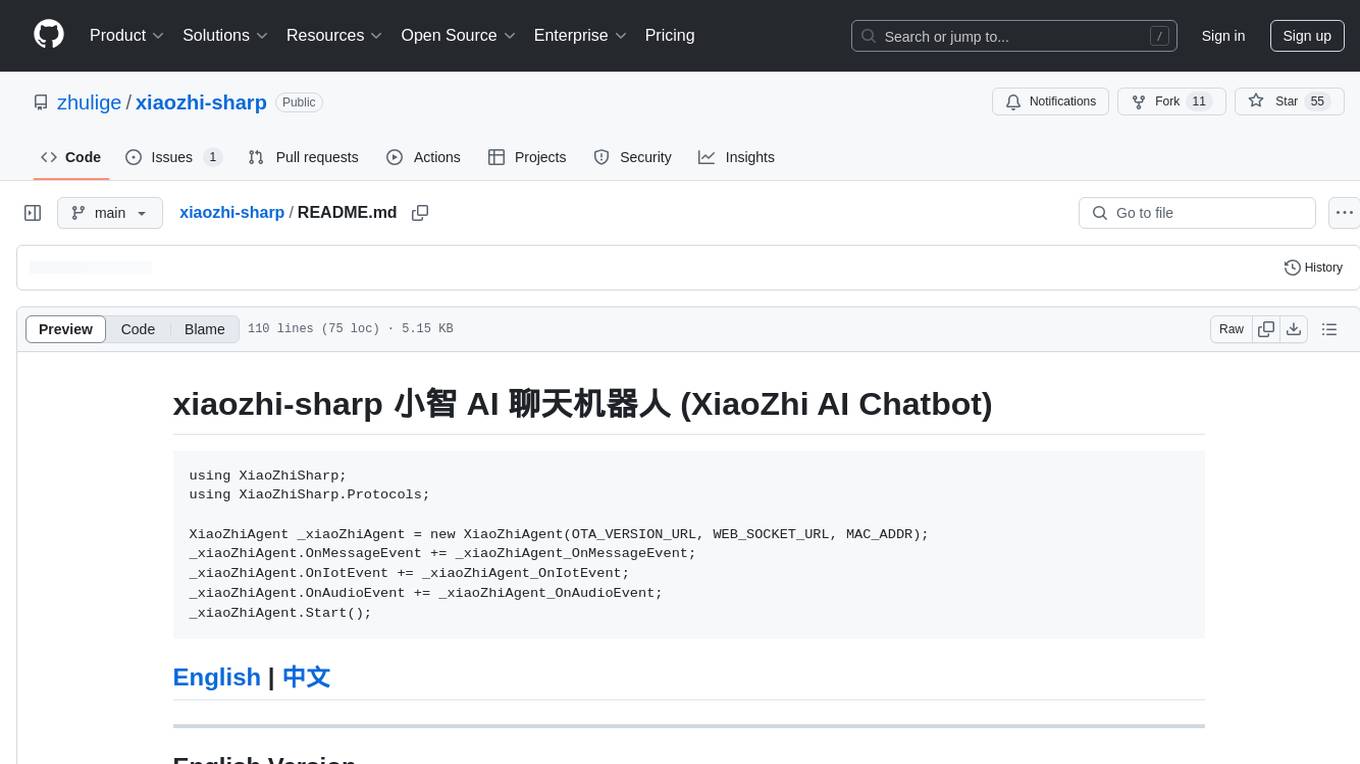

xiaozhi-sharp

C#实现的小智客户端,用于代码学习和在没有硬件条件下体验小智AI。

Stars: 55

xiaozhi-sharp is a meticulously crafted XiaoZhi client in C#, serving as a code learning example and enabling intelligent interaction with XiaoZhi AI without related hardware. It connects to xiaozhi.me server for stable services. The tool includes a debugging feature to understand XiaoZhi's commands and a console client for interaction. Users need .NET Core SDK to run the project smoothly, ensuring stable network connection for optimal usage. Contributions and feedback are welcome for project improvement and community engagement.

README:

using XiaoZhiSharp;

using XiaoZhiSharp.Protocols;

XiaoZhiAgent _xiaoZhiAgent = new XiaoZhiAgent(OTA_VERSION_URL, WEB_SOCKET_URL, MAC_ADDR);

_xiaoZhiAgent.OnMessageEvent += _xiaoZhiAgent_OnMessageEvent;

_xiaoZhiAgent.OnIotEvent += _xiaoZhiAgent_OnIotEvent;

_xiaoZhiAgent.OnAudioEvent += _xiaoZhiAgent_OnAudioEvent;

_xiaoZhiAgent.Start();

xiaozhi-sharp is a meticulously crafted XiaoZhi client in C#, which not only serves as an excellent code learning example but also allows you to easily experience the intelligent interaction brought by XiaoZhi AI without the need for related hardware.

This client defaults to connecting to the xiaozhi.me official server, providing you with stable and reliable services.

Outputs all commands and lets you understand how XiaoZhi works. Why wait? Just use it!

To run this project, follow the steps below:

Ensure that your system has installed the .NET Core SDK. If not installed, you can download and install the version suitable for your system from the official website.

After successful compilation, use the following command to run the project:

dotnet runAfter the project starts, you will see relevant information output to the console. Follow the prompts to start chatting with XiaoZhi AI.

Ensure that your network connection is stable to use XiaoZhi AI smoothly.

If you encounter any issues during the process, first check the error messages output to the console or verify if the project configuration is correct, such as whether the global variable MAC_ADDR has been modified as required.

If you find any issues with the project or have suggestions for improvement, feel free to submit an Issue or Pull Request. Your feedback and contributions are essential for the development and improvement of the project.

Welcome to join our community to share experiences, propose suggestions, or get help!

xiaozhi-sharp 是一个用 C# 精心打造的小智客户端,它不仅可以作为代码学习的优质示例,还能让你在没有相关硬件条件的情况下,轻松体验到小智 AI 带来的智能交互乐趣。

本客户端默认接入 xiaozhi.me 官方服务器,为你提供稳定可靠的服务。

要运行本项目,你需要按照以下步骤操作:

确保你的系统已经安装了 .NET Core SDK。如果尚未安装,可以从 官方网站 下载并安装适合你系统的版本。

编译成功后,使用以下命令运行项目:

dotnet run项目启动后,你将看到控制台输出相关信息,按照提示进行操作,即可开始与小智 AI 进行畅快的聊天互动。

请确保你的网络连接正常,这样才能顺利使用小智AI。

在运行过程中,如果遇到任何问题,可以先查看控制台输出的错误信息,或者检查项目的配置是否正确,例如全局变量 MAC_ADDR 是否已经按照要求进行修改。

如果你在使用过程中发现了项目中的问题,或者有任何改进的建议,欢迎随时提交 Issue 或者 Pull Request。你的反馈和贡献将对项目的发展和完善起到重要的作用。

欢迎加入我们的社区,分享经验、提出建议或获取帮助!

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for xiaozhi-sharp

Similar Open Source Tools

xiaozhi-sharp

xiaozhi-sharp is a meticulously crafted XiaoZhi client in C#, serving as a code learning example and enabling intelligent interaction with XiaoZhi AI without related hardware. It connects to xiaozhi.me server for stable services. The tool includes a debugging feature to understand XiaoZhi's commands and a console client for interaction. Users need .NET Core SDK to run the project smoothly, ensuring stable network connection for optimal usage. Contributions and feedback are welcome for project improvement and community engagement.

RapidRAG

RapidRAG is a project focused on Knowledge QA with LLM, combining Questions & Answers based on local knowledge base with a large language model. The project aims to provide a flexible and deployment-friendly solution for building a knowledge question answering system. It is modularized, allowing easy replacement of parts and simple code understanding. The tool supports various document formats and can utilize CPU for most parts, with the large language model interface requiring separate deployment.

Second-Me

Second Me is an open-source prototype that allows users to craft their own AI self, preserving their identity, context, and interests. It is locally trained and hosted, yet globally connected, scaling intelligence across an AI network. It serves as an AI identity interface, fostering collaboration among AI selves and enabling the development of native AI apps. The tool prioritizes individuality and privacy, ensuring that user information and intelligence remain local and completely private.

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

Transtation-KMP

Transtation is an easy-to-use and powerful translation software for Android/Desktop based on Kotlin Multiplatform + Compose Multiplatform. It allows users to translate one item using multiple engines simultaneously, utilize advanced Large Language Models for translation, chat with LLMs for translation, translate long text, support plugin development, image translation, and screen translation. The application is designed for Chinese users and serves as a reference for learning Jetpack Compose or Compose Multiplatform. It features Kotlin Multiplatform, Compose Multiplatform, MVVM, Kotlin Coroutine, Flow, SqlDelight, synchronized translation with multiple engines, plugin development, and makes use of Kotlin language features like lazy loading, Coroutine, sealed classes, and reflection. The application gradually adapts to Android13 with features like setting application language separately and supporting Monet icon.

pyqt-openai

VividNode is a cross-platform AI desktop chatbot application for LLM such as GPT, Claude, Gemini, Llama chatbot interaction and image generation. It offers customizable features, local chat history, and enhanced performance without requiring a browser. The application is powered by GPT4Free and allows users to interact with chatbots and generate images seamlessly. VividNode supports Windows, Mac, and Linux, securely stores chat history locally, and provides features like chat interface customization, image generation, focus and accessibility modes, and extensive customization options with keyboard shortcuts for efficient operations.

openroleplay.ai

Open Roleplay is an open-source alternative to Character.ai. It allows users to create their own AI characters, customize them, and generate images and voices for them. Open Roleplay also supports group chat and automatic translation. The tool is built with Next.js, React.js, Tailwind CSS, Vercel, Convex, and Clerk.

singulatron

Singulatron is an AI Superplatform that runs on your computer(s) and server(s) without using third party APIs, providing complete control over data and privacy. It offers AI functionality, user management, supports different database backends, collaboration, and mini-apps. It aims to be a desktop app for local usage and a distributed daemon for servers, with a web app frontend client. The tool is stack-based on Electron, Angular, and Go, and currently dual-licensed under AGPL-3.0-or-later and a commercial license.

companion-vscode

Quack Companion is a VSCode extension that provides smart linting, code chat, and coding guideline curation for developers. It aims to enhance the coding experience by offering a new tab with features like curating software insights with the team, code chat similar to ChatGPT, smart linting, and upcoming code completion. The extension focuses on creating a smooth contribution experience for developers by turning contribution guidelines into a live pair coding experience, helping developers find starter contribution opportunities, and ensuring alignment between contribution goals and project priorities. Quack collects limited telemetry data to improve its services and products for developers, with options for anonymization and disabling telemetry available to users.

midjourney-bot

Discord Midjourney Bot is an open-source bot designed for AI enthusiasts, providing various AI art functionalities without any paywalls. Users can enjoy features like text to image conversion, image transformation, logo generation, face swap, image upscaling, and more. The bot aims to offer advanced customizable image generation capabilities, including access to language models and canvas size customization. Additionally, the project is open to partnerships and investments, with opportunities for bloggers to review the product. The bot requires Node v18+ to run and integrates with Replicate API for certain functionalities.

SunoApi

SunoAPI is an unofficial client for Suno AI, built on Python and Streamlit. It supports functions like generating music and obtaining music information. Users can set up multiple account information to be saved for use. The tool also features built-in maintenance and activation functions for tokens, eliminating concerns about token expiration. It supports multiple languages and allows users to upload pictures for generating songs based on image content analysis.

Ollama-SwiftUI

Ollama-SwiftUI is a user-friendly interface for Ollama.ai created in Swift. It allows seamless chatting with local Large Language Models on Mac. Users can change models mid-conversation, restart conversations, send system prompts, and use multimodal models with image + text. The app supports managing models, including downloading, deleting, and duplicating them. It offers light and dark mode, multiple conversation tabs, and a localized interface in English and Arabic.

Akagi

Akagi is a project designed to help users understand and improve their performance in Majsoul game matches in real-time. It provides educational insights and tools for analyzing gameplay. Users can install Akagi on Windows or Mac systems and follow the setup instructions to enhance their gaming experience. The project aims to offer features like Autoplay, Auto Ron, and integration with MajsoulUnlocker. It also focuses on enhancing user safety by providing guidelines to minimize the risk of account suspension. Akagi is a tool that combines MITM interception, AI decision-making, and user interaction to optimize gameplay strategies and performance.

supallm

Supallm is a Python library for super resolution of images using deep learning techniques. It provides pre-trained models for enhancing image quality by increasing resolution. The library is easy to use and allows users to upscale images with high fidelity and detail. Supallm is suitable for tasks such as enhancing image quality, improving visual appearance, and increasing the resolution of low-quality images. It is a valuable tool for researchers, photographers, graphic designers, and anyone looking to enhance image quality using AI technology.

OpenHands

OpenDevin is a platform for autonomous software engineers powered by AI and LLMs. It allows human developers to collaborate with agents to write code, fix bugs, and ship features. The tool operates in a secured docker sandbox and provides access to different LLM providers for advanced configuration options. Users can contribute to the project through code contributions, research and evaluation of LLMs in software engineering, and providing feedback and testing. OpenDevin is community-driven and welcomes contributions from developers, researchers, and enthusiasts looking to advance software engineering with AI.

wingman-ai

Wingman-AI is a free and open-source AI coding assistant that brings high-quality AI-assisted coding right to your computer. It offers features such as code completion, interactive chat, and support for multiple AI providers, including Ollama, Hugging Face, and OpenAI. Wingman-AI is designed to enhance your coding workflow by providing real-time assistance and suggestions, making it an ideal tool for developers of all levels.

For similar tasks

h2ogpt

h2oGPT is an Apache V2 open-source project that allows users to query and summarize documents or chat with local private GPT LLMs. It features a private offline database of any documents (PDFs, Excel, Word, Images, Video Frames, Youtube, Audio, Code, Text, MarkDown, etc.), a persistent database (Chroma, Weaviate, or in-memory FAISS) using accurate embeddings (instructor-large, all-MiniLM-L6-v2, etc.), and efficient use of context using instruct-tuned LLMs (no need for LangChain's few-shot approach). h2oGPT also offers parallel summarization and extraction, reaching an output of 80 tokens per second with the 13B LLaMa2 model, HYDE (Hypothetical Document Embeddings) for enhanced retrieval based upon LLM responses, a variety of models supported (LLaMa2, Mistral, Falcon, Vicuna, WizardLM. With AutoGPTQ, 4-bit/8-bit, LORA, etc.), GPU support from HF and LLaMa.cpp GGML models, and CPU support using HF, LLaMa.cpp, and GPT4ALL models. Additionally, h2oGPT provides Attention Sinks for arbitrarily long generation (LLaMa-2, Mistral, MPT, Pythia, Falcon, etc.), a UI or CLI with streaming of all models, the ability to upload and view documents through the UI (control multiple collaborative or personal collections), Vision Models LLaVa, Claude-3, Gemini-Pro-Vision, GPT-4-Vision, Image Generation Stable Diffusion (sdxl-turbo, sdxl) and PlaygroundAI (playv2), Voice STT using Whisper with streaming audio conversion, Voice TTS using MIT-Licensed Microsoft Speech T5 with multiple voices and Streaming audio conversion, Voice TTS using MPL2-Licensed TTS including Voice Cloning and Streaming audio conversion, AI Assistant Voice Control Mode for hands-free control of h2oGPT chat, Bake-off UI mode against many models at the same time, Easy Download of model artifacts and control over models like LLaMa.cpp through the UI, Authentication in the UI by user/password via Native or Google OAuth, State Preservation in the UI by user/password, Linux, Docker, macOS, and Windows support, Easy Windows Installer for Windows 10 64-bit (CPU/CUDA), Easy macOS Installer for macOS (CPU/M1/M2), Inference Servers support (oLLaMa, HF TGI server, vLLM, Gradio, ExLLaMa, Replicate, OpenAI, Azure OpenAI, Anthropic), OpenAI-compliant, Server Proxy API (h2oGPT acts as drop-in-replacement to OpenAI server), Python client API (to talk to Gradio server), JSON Mode with any model via code block extraction. Also supports MistralAI JSON mode, Claude-3 via function calling with strict Schema, OpenAI via JSON mode, and vLLM via guided_json with strict Schema, Web-Search integration with Chat and Document Q/A, Agents for Search, Document Q/A, Python Code, CSV frames (Experimental, best with OpenAI currently), Evaluate performance using reward models, and Quality maintained with over 1000 unit and integration tests taking over 4 GPU-hours.

serverless-chat-langchainjs

This sample shows how to build a serverless chat experience with Retrieval-Augmented Generation using LangChain.js and Azure. The application is hosted on Azure Static Web Apps and Azure Functions, with Azure Cosmos DB for MongoDB vCore as the vector database. You can use it as a starting point for building more complex AI applications.

react-native-vercel-ai

Run Vercel AI package on React Native, Expo, Web and Universal apps. Currently React Native fetch API does not support streaming which is used as a default on Vercel AI. This package enables you to use AI library on React Native but the best usage is when used on Expo universal native apps. On mobile you get back responses without streaming with the same API of `useChat` and `useCompletion` and on web it will fallback to `ai/react`

LLamaSharp

LLamaSharp is a cross-platform library to run 🦙LLaMA/LLaVA model (and others) on your local device. Based on llama.cpp, inference with LLamaSharp is efficient on both CPU and GPU. With the higher-level APIs and RAG support, it's convenient to deploy LLM (Large Language Model) in your application with LLamaSharp.

gpt4all

GPT4All is an ecosystem to run powerful and customized large language models that work locally on consumer grade CPUs and any GPU. Note that your CPU needs to support AVX or AVX2 instructions. Learn more in the documentation. A GPT4All model is a 3GB - 8GB file that you can download and plug into the GPT4All open-source ecosystem software. Nomic AI supports and maintains this software ecosystem to enforce quality and security alongside spearheading the effort to allow any person or enterprise to easily train and deploy their own on-edge large language models.

ChatGPT-Telegram-Bot

ChatGPT Telegram Bot is a Telegram bot that provides a smooth AI experience. It supports both Azure OpenAI and native OpenAI, and offers real-time (streaming) response to AI, with a faster and smoother experience. The bot also has 15 preset bot identities that can be quickly switched, and supports custom bot identities to meet personalized needs. Additionally, it supports clearing the contents of the chat with a single click, and restarting the conversation at any time. The bot also supports native Telegram bot button support, making it easy and intuitive to implement required functions. User level division is also supported, with different levels enjoying different single session token numbers, context numbers, and session frequencies. The bot supports English and Chinese on UI, and is containerized for easy deployment.

twinny

Twinny is a free and open-source AI code completion plugin for Visual Studio Code and compatible editors. It integrates with various tools and frameworks, including Ollama, llama.cpp, oobabooga/text-generation-webui, LM Studio, LiteLLM, and Open WebUI. Twinny offers features such as fill-in-the-middle code completion, chat with AI about your code, customizable API endpoints, and support for single or multiline fill-in-middle completions. It is easy to install via the Visual Studio Code extensions marketplace and provides a range of customization options. Twinny supports both online and offline operation and conforms to the OpenAI API standard.

agnai

Agnaistic is an AI roleplay chat tool that allows users to interact with personalized characters using their favorite AI services. It supports multiple AI services, persona schema formats, and features such as group conversations, user authentication, and memory/lore books. Agnaistic can be self-hosted or run using Docker, and it provides a range of customization options through its settings.json file. The tool is designed to be user-friendly and accessible, making it suitable for both casual users and developers.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM: * Set LLM usage limits for users on different pricing tiers * Track LLM usage on a per user and per organization basis * Block or redact requests containing PIIs * Improve LLM reliability with failovers, retries and caching * Distribute API keys with rate limits and cost limits for internal development/production use cases * Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.