frigate

NVR with realtime local object detection for IP cameras

Stars: 26149

Frigate is a complete and local NVR designed for Home Assistant with AI object detection. It uses OpenCV and Tensorflow to perform realtime object detection locally for IP cameras. Use of a Google Coral Accelerator is optional, but highly recommended. The Coral will outperform even the best CPUs and can process 100+ FPS with very little overhead.

README:

[English] | 简体中文

A complete and local NVR designed for Home Assistant with AI object detection. Uses OpenCV and Tensorflow to perform realtime object detection locally for IP cameras.

Use of a GPU or AI accelerator such as a Google Coral or Hailo is highly recommended. AI accelerators will outperform even the best CPUs with very little overhead.

- Tight integration with Home Assistant via a custom component

- Designed to minimize resource use and maximize performance by only looking for objects when and where it is necessary

- Leverages multiprocessing heavily with an emphasis on realtime over processing every frame

- Uses a very low overhead motion detection to determine where to run object detection

- Object detection with TensorFlow runs in separate processes for maximum FPS

- Communicates over MQTT for easy integration into other systems

- Records video with retention settings based on detected objects

- 24/7 recording

- Re-streaming via RTSP to reduce the number of connections to your camera

- WebRTC & MSE support for low-latency live view

View the documentation at https://docs.frigate.video

If you would like to make a donation to support development, please use Github Sponsors.

We use Weblate to support language translations. Contributions are always welcome.

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for frigate

Similar Open Source Tools

frigate

Frigate is a complete and local NVR designed for Home Assistant with AI object detection. It uses OpenCV and Tensorflow to perform realtime object detection locally for IP cameras. Use of a Google Coral Accelerator is optional, but highly recommended. The Coral will outperform even the best CPUs and can process 100+ FPS with very little overhead.

frigate-hass-integration

Frigate Home Assistant Integration provides a rich media browser with thumbnails and navigation, sensor entities for camera FPS, detection FPS, process FPS, skipped FPS, and objects detected, binary sensor entities for object motion, camera entities for live view and object detected snapshot, switch entities for clips, detection, snapshots, and improve contrast, and support for multiple Frigate instances. It offers easy installation via HACS and manual installation options for advanced users. Users need to configure the `mqtt` integration for Frigate to work. Additionally, media browsing and a companion Lovelace card are available for enhanced user experience. Refer to the main Frigate documentation for detailed installation instructions and usage guidance.

StratosphereLinuxIPS

Slips is a powerful endpoint behavioral intrusion prevention and detection system that uses machine learning to detect malicious behaviors in network traffic. It can work with network traffic in real-time, PCAP files, and network flows from tools like Suricata, Zeek/Bro, and Argus. Slips threat detection is based on machine learning models, threat intelligence feeds, and expert heuristics. It gathers evidence of malicious behavior and triggers alerts when enough evidence is accumulated. The tool is Python-based and supported on Linux and MacOS, with blocking features only on Linux. Slips relies on Zeek network analysis framework and Redis for interprocess communication. It offers a graphical user interface for easy monitoring and analysis.

toolhive-studio

ToolHive Studio is an experimental project under active development and testing, providing an easy way to discover, deploy, and manage Model Context Protocol (MCP) servers securely. Users can launch any MCP server in a locked-down container with just a few clicks, eliminating manual setup, security concerns, and runtime issues. The tool ensures instant deployment, default security measures, cross-platform compatibility, and seamless integration with popular clients like GitHub Copilot, Cursor, and Claude Code.

Vento

Vento is an AI-driven machine automation platform that utilizes a Large Language Model (LLM) to automate the control of physical devices and machines. It features a natural language autopilot system for smart and industrial devices, providing a continuous decision loop for sensor states evaluation and actuator triggering. The platform offers a user-friendly UI for device onboarding, rule configuration, and real-time monitoring. Vento supports connected devices (IoT) based on ESP32 with ESPHome, allowing users to program, deploy, and manage IoT networks visually. Additionally, it provides AI assistance for creating rules and system management through automatic context transfer and prompt cascading.

HAMi

HAMi is a Heterogeneous AI Computing Virtualization Middleware designed to manage Heterogeneous AI Computing Devices in a Kubernetes cluster. It allows for device sharing, device memory control, device type specification, and device UUID specification. The tool is easy to use and does not require modifying task YAML files. It includes features like hard limits on device memory, partial device allocation, streaming multiprocessor limits, and core usage specification. HAMi consists of components like a mutating webhook, scheduler extender, device plugins, and in-container virtualization techniques. It is suitable for scenarios requiring device sharing, specific device memory allocation, GPU balancing, low utilization optimization, and scenarios needing multiple small GPUs. The tool requires prerequisites like NVIDIA drivers, CUDA version, nvidia-docker, Kubernetes version, glibc version, and helm. Users can install, upgrade, and uninstall HAMi, submit tasks, and monitor cluster information. The tool's roadmap includes supporting additional AI computing devices, video codec processing, and Multi-Instance GPUs (MIG).

dify

Dify is an open-source LLM app development platform that combines AI workflow, RAG pipeline, agent capabilities, model management, observability features, and more. It allows users to quickly go from prototype to production. Key features include: 1. Workflow: Build and test powerful AI workflows on a visual canvas. 2. Comprehensive model support: Seamless integration with hundreds of proprietary / open-source LLMs from dozens of inference providers and self-hosted solutions. 3. Prompt IDE: Intuitive interface for crafting prompts, comparing model performance, and adding additional features. 4. RAG Pipeline: Extensive RAG capabilities that cover everything from document ingestion to retrieval. 5. Agent capabilities: Define agents based on LLM Function Calling or ReAct, and add pre-built or custom tools. 6. LLMOps: Monitor and analyze application logs and performance over time. 7. Backend-as-a-Service: All of Dify's offerings come with corresponding APIs for easy integration into your own business logic.

buildel

Buildel is an AI automation platform that empowers users to create versatile workflows without writing code. It supports multiple providers and interfaces, offers pre-built use cases, and allows users to bring their own API keys. Ideal for AI-powered document retrieval, conversational interfaces, and data integration. Users can get started at app.buildel.ai or run Buildel locally with Node.js, Elixir/Erlang, Docker, Git, and JQ installed. Join the community on Discord for support and discussions.

Protofy

Protofy is a full-stack, batteries-included low-code enabled web/app and IoT system with an API system and real-time messaging. It is based on Protofy (protoflow + visualui + protolib + protodevices) + Expo + Next.js + Tamagui + Solito + Express + Aedes + Redbird + Many other amazing packages. Protofy can be used to fast prototype Apps, webs, IoT systems, automations, or APIs. It is a ultra-extensible CMS with supercharged capabilities, mobile support, and IoT support (esp32 thanks to esphome).

kalavai-client

Kalavai is an open-source platform that transforms everyday devices into an AI supercomputer by aggregating resources from multiple machines. It facilitates matchmaking of resources for large AI projects, making AI hardware accessible and affordable. Users can create local and public pools, connect with the community's resources, and share computing power. The platform aims to be a management layer for research groups and organizations, enabling users to unlock the power of existing hardware without needing a devops team. Kalavai CLI tool helps manage both versions of the platform.

tuff

Tuff is a local-first, AI-native, and infinitely extensible desktop command center designed to enhance workflow efficiency. It offers a seamless integration of core utilities, AI-powered search, contextual intelligence, and extensibility through custom plugins. With a beautiful UI design, rich functionality, simple operations, and a focus on security and reliability, Tuff provides users with a cross-platform desktop software that is easy to use and offers a good user experience.

nexent

Nexent is a powerful tool for analyzing and visualizing network traffic data. It provides comprehensive insights into network behavior, helping users to identify patterns, anomalies, and potential security threats. With its user-friendly interface and advanced features, Nexent is suitable for network administrators, cybersecurity professionals, and anyone looking to gain a deeper understanding of their network infrastructure.

agentgateway

Agentgateway is an open source data plane optimized for agentic AI connectivity within or across any agent framework or environment. It provides drop-in security, observability, and governance for agent-to-agent and agent-to-tool communication, supporting leading interoperable protocols like Agent2Agent (A2A) and Model Context Protocol (MCP). Highly performant, security-first, multi-tenant, dynamic, and supporting legacy API transformation, agentgateway is designed to handle any scale and run anywhere with any agent framework.

colors_ai

Colors AI is a cross-platform color scheme generator that uses deep learning from public API providers. It is available for all mainstream operating systems, including mobile. Features: - Choose from open APIs, with the ability to set up custom settings - Export section with many export formats to save or clipboard copy - URL providers to other static color generators - Localized to several languages - Dark and light theme - Material Design 3 - Data encryption - Accessibility - And much more

Memoh

Memoh is a multi-member, structured long-memory, containerized AI agent system platform that allows users to create AI bots for communication via platforms like Telegram, Discord, and Lark. Each bot operates in its own isolated container with a memory system for file editing, command execution, and self-building. Memoh offers a secure, flexible, and scalable solution for multi-bot management, distinguishing and remembering requests from multiple users and bots.

miyagi

Project Miyagi showcases Microsoft's Copilot Stack in an envisioning workshop aimed at designing, developing, and deploying enterprise-grade intelligent apps. By exploring both generative and traditional ML use cases, Miyagi offers an experiential approach to developing AI-infused product experiences that enhance productivity and enable hyper-personalization. Additionally, the workshop introduces traditional software engineers to emerging design patterns in prompt engineering, such as chain-of-thought and retrieval-augmentation, as well as to techniques like vectorization for long-term memory, fine-tuning of OSS models, agent-like orchestration, and plugins or tools for augmenting and grounding LLMs.

For similar tasks

frigate

Frigate is a complete and local NVR designed for Home Assistant with AI object detection. It uses OpenCV and Tensorflow to perform realtime object detection locally for IP cameras. Use of a Google Coral Accelerator is optional, but highly recommended. The Coral will outperform even the best CPUs and can process 100+ FPS with very little overhead.

aiohomekit

aiohomekit is a Python library that implements the HomeKit protocol for controlling HomeKit accessories using asyncio. It is primarily used with Home Assistant, targeting the same versions of Python and following their code standards. The library is still under development and does not offer API guarantees yet. It aims to match the behavior of real HAP controllers, even when not strictly specified, and works around issues like JSON formatting, boolean encoding, header sensitivity, and TCP packet splitting. aiohomekit is primarily tested with Phillips Hue and Eve Extend bridges via Home Assistant, but is known to work with many more devices. It does not support BLE accessories and is intended for client-side use only.

ha-llmvision

LLM Vision is a Home Assistant integration that allows users to analyze images, videos, and camera feeds using multimodal LLMs. It supports providers such as OpenAI, Anthropic, Google Gemini, LocalAI, and Ollama. Users can input images and videos from camera entities or local files, with the option to downscale images for faster processing. The tool provides detailed instructions on setting up LLM Vision and each supported provider, along with usage examples and service call parameters.

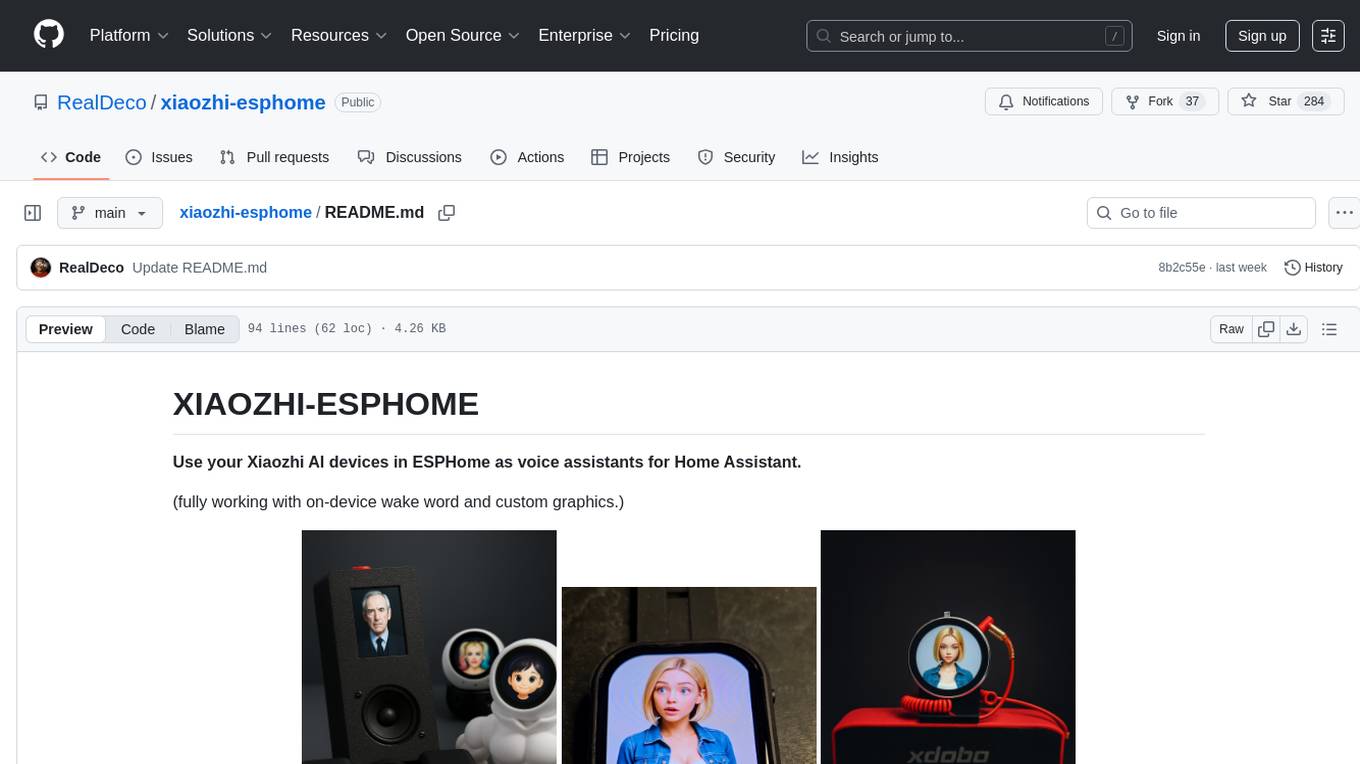

xiaozhi-esphome

This GitHub project provides a simple way to use Xiaozhi-based devices with ESPHome, allowing them to serve as voice assistants integrated with Home Assistant. Users can follow a step-by-step installation guide to connect their devices, edit configurations, and set up the voice assistant. The project supports various devices such as Spotpear Ball, Muma Box, Puck, Guition Taichi pi, Xingzhi Cube, and more. Additionally, it offers links to purchase supported devices and accessories, including 3D files for holders and wireless chargers.

aiohomematic

AIO Homematic (hahomematic) is a lightweight Python 3 library for controlling and monitoring HomeMatic and HomematicIP devices, with support for third-party devices/gateways. It automatically creates entities for device parameters, offers custom entity classes for complex behavior, and includes features like caching paramsets for faster restarts. Designed to integrate with Home Assistant, it requires specific firmware versions for HomematicIP devices. The public API is defined in modules like central, client, model, exceptions, and const, with example usage provided. Useful links include changelog, data point definitions, troubleshooting, and developer resources for architecture, data flow, model extension, and Home Assistant lifecycle.

toshiba_air_cond

The toshiba_air_cond project implements functions to decode Toshiba AB protocol from indoor units to wired controllers and provides a hardware design for communication. It is developed for ESP8266 using PlatformIO and Wemos D1 mini board. The project offers features such as WebServer, WebSockets communication, Home Assistant integration, OTA updates, file uploading, and WiFiManager. It has been tested with specific Toshiba units and provides a web interface for controlling functions like power ON/OFF, setting target temperature, changing modes, adjusting fan speed, setting timers, and displaying current temperature and system information.

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

agentcloud

AgentCloud is an open-source platform that enables companies to build and deploy private LLM chat apps, empowering teams to securely interact with their data. It comprises three main components: Agent Backend, Webapp, and Vector Proxy. To run this project locally, clone the repository, install Docker, and start the services. The project is licensed under the GNU Affero General Public License, version 3 only. Contributions and feedback are welcome from the community.

oss-fuzz-gen

This framework generates fuzz targets for real-world `C`/`C++` projects with various Large Language Models (LLM) and benchmarks them via the `OSS-Fuzz` platform. It manages to successfully leverage LLMs to generate valid fuzz targets (which generate non-zero coverage increase) for 160 C/C++ projects. The maximum line coverage increase is 29% from the existing human-written targets.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models. From images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking), and privacy harms (e.g., identity theft). The goal is to allow researchers to have a baseline of how well their model and entire inference pipeline is doing against different harm categories and to be able to compare that baseline to future iterations of their model. This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements.

Azure-Analytics-and-AI-Engagement

The Azure-Analytics-and-AI-Engagement repository provides packaged Industry Scenario DREAM Demos with ARM templates (Containing a demo web application, Power BI reports, Synapse resources, AML Notebooks etc.) that can be deployed in a customer’s subscription using the CAPE tool within a matter of few hours. Partners can also deploy DREAM Demos in their own subscriptions using DPoC.