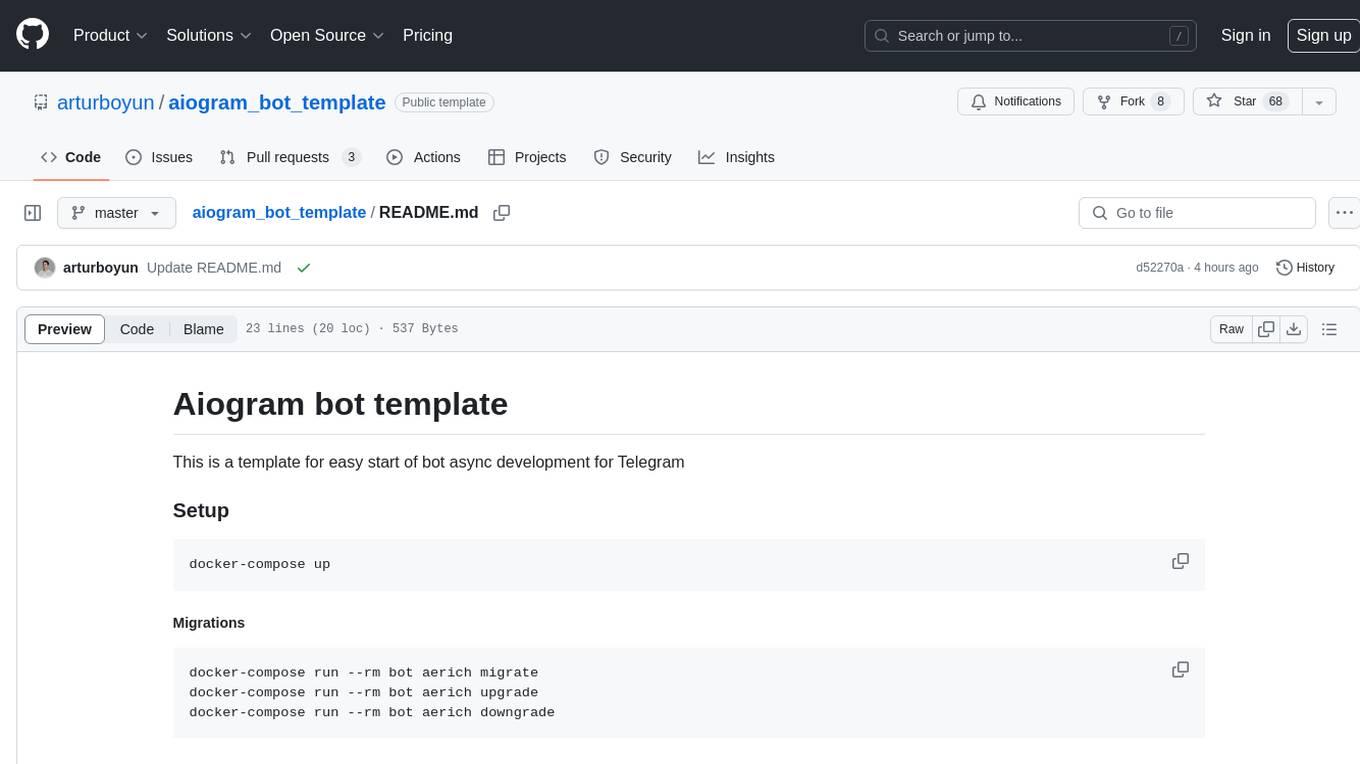

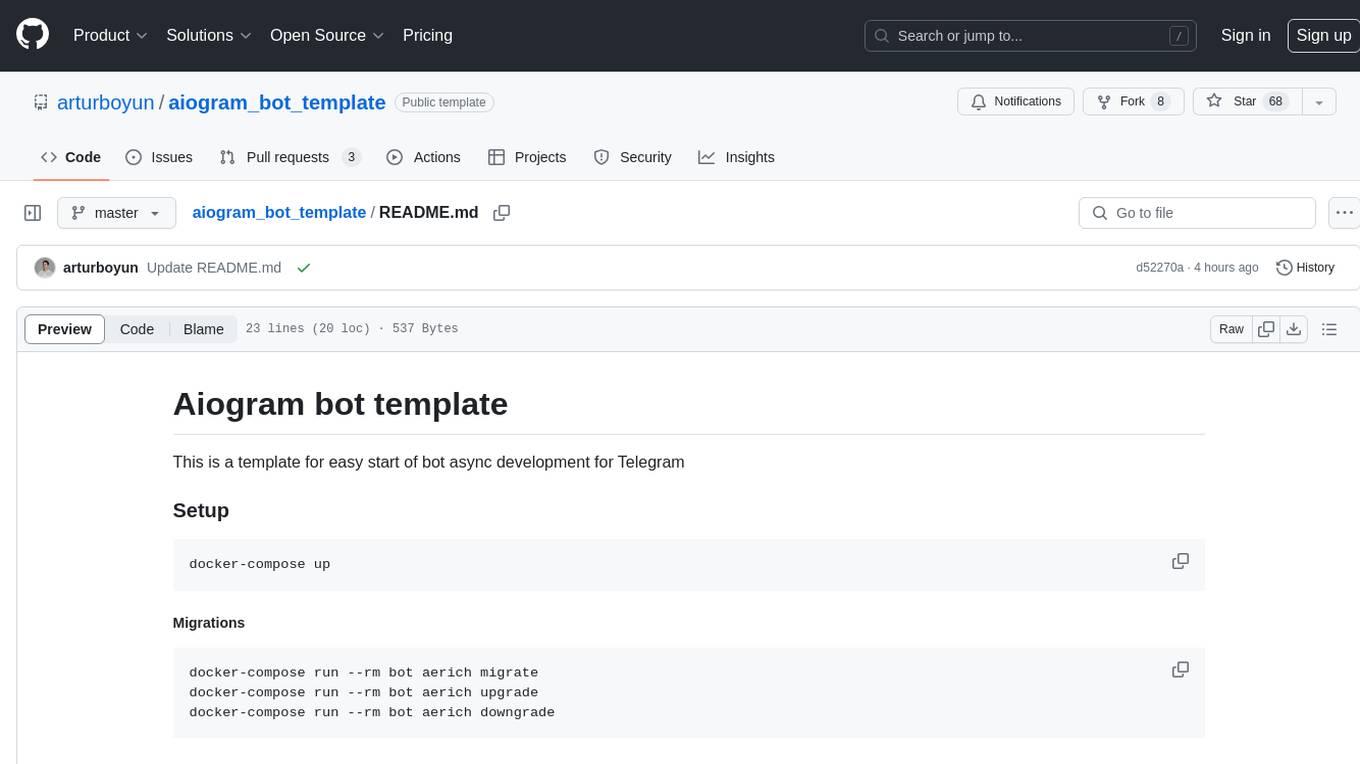

aiogram_bot_template

A template for getting started quickly with Telegram bot development

Stars: 68

This repository provides a template for asynchronous Telegram bot development with the aiogram library. It includes Docker Compose setup instructions, aerich migration commands for TortoiseORM, and an example of working with TortoiseORM. Its task list shows that the switch from SQLAlchemy to TortoiseORM, the migrations, and Docker Compose support are done, while FastAPI for webhooks and a CI/CD example are still planned.

README:

This is a template for quickly starting asynchronous bot development for Telegram.

docker-compose up

docker-compose run --rm bot aerich migrate

docker-compose run --rm bot aerich upgrade

docker-compose run --rm bot aerich downgrade

- [X] Upload template

- [X] Change SQLAlchemy to TortoiseORM

- [X] Migrations for TortoiseORM

- [X] Add example for work with TortoiseORM

- [ ] Add FastAPI for webhook and other stuff

- [X] Add Docker Compose

- [ ] CI/CD example
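The `aerich` migration commands above need a Tortoise ORM configuration to read. The template's actual settings are not shown here, so the following is only a minimal sketch of the shape such a configuration usually takes. The helper name `build_tortoise_config`, the `app.models` module path, the `DATABASE_URL` variable, and the SQLite fallback are all illustrative assumptions, not values taken from the repository.

```python
import os


def build_tortoise_config(db_url: str, models_module: str = "app.models") -> dict:
    """Build a Tortoise ORM config dict (hypothetical helper, not from the template)."""
    return {
        "connections": {"default": db_url},
        "apps": {
            "models": {
                # aerich keeps its migration history in its own model, so
                # "aerich.models" is listed next to the project's models.
                "models": [models_module, "aerich.models"],
                "default_connection": "default",
            }
        },
    }


# DATABASE_URL and the SQLite fallback are placeholders chosen for this sketch.
TORTOISE_ORM = build_tortoise_config(
    os.environ.get("DATABASE_URL", "sqlite://db.sqlite3")
)
```

If this lived in a module such as `settings.py` (again an assumed name), aerich could be pointed at it with `aerich init -t settings.TORTOISE_ORM` before running the `migrate` and `upgrade` commands.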

Alternative AI tools for aiogram_bot_template

Similar Open Source Tools

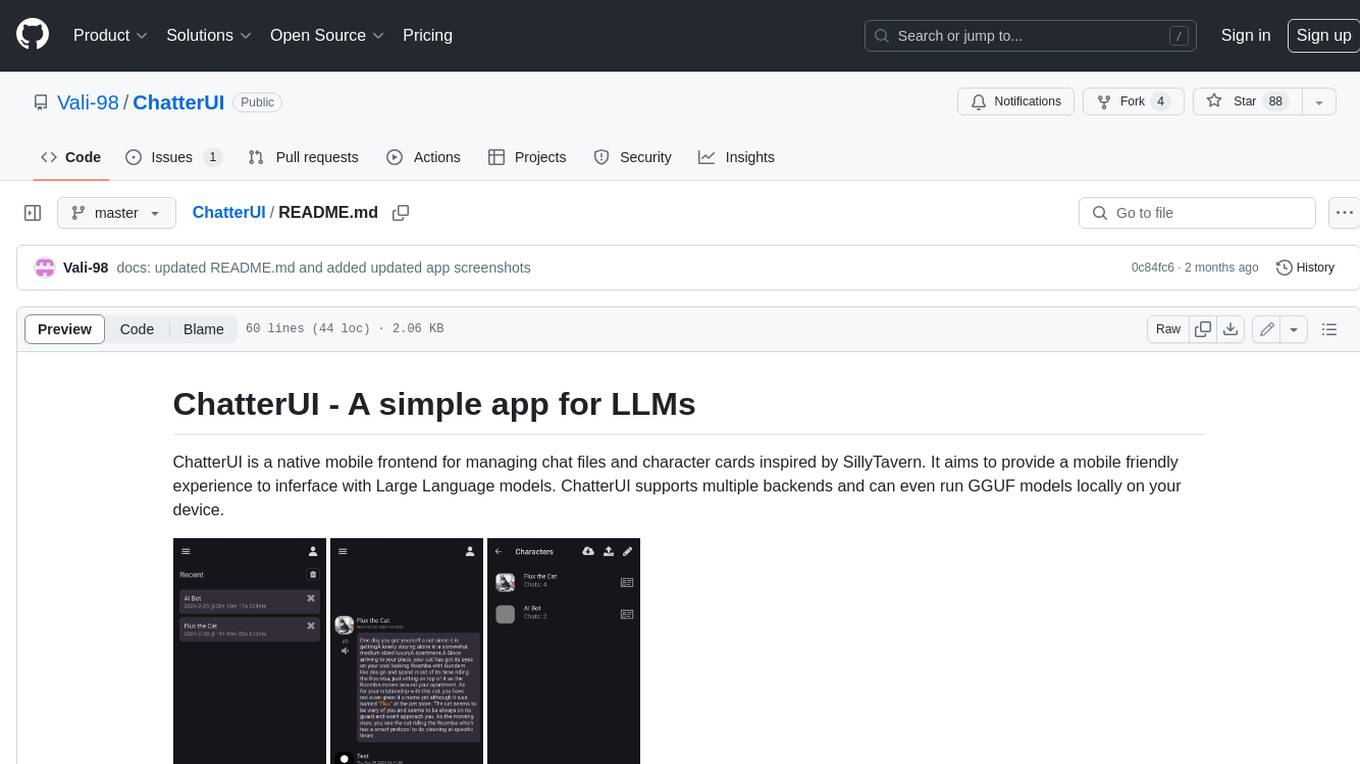

ChatterUI

ChatterUI is a mobile app that allows users to manage chat files and character cards, and to interact with Large Language Models (LLMs). It supports multiple backends, including local, koboldcpp, text-generation-webui, Generic Text Completions, AI Horde, Mancer, Open Router, and OpenAI. ChatterUI provides a mobile-friendly interface for interacting with LLMs, making it easy to use them for a variety of tasks, such as generating text, translating languages, writing code, and answering questions.

mesop

Mesop is a Python-based UI framework designed for rapid web app development, particularly for demos and internal apps. It offers an intuitive interface for UI novices, frictionless developer workflows with hot reload and IDE support, and flexibility to build custom UIs without the need for JavaScript/CSS/HTML. Mesop allows users to write UI in idiomatic Python code and compose UI into components using Python functions. It is used at Google for internal app development and provides a quick way to build delightful web apps in Python.

mesop

Mesop is a Python-based UI framework designed for rapid web app development, particularly for demos and internal apps. It lets users write UI in Python code and offers a reactive UI paradigm, ready-to-use components, hot reload, rich IDE support, and the ability to build custom UIs without writing JavaScript/CSS/HTML. Mesop is intuitive for UI novices, provides frictionless developer workflows, and is flexible for creating delightful demos. It is used at Google for rapid internal app development.

shire

Shire is an AI Coding Agent Language that facilitates communication between an LLM and the IDE for automated programming. It offers a straightforward approach to creating AI agents tailored to individual IDEs, enabling users to build customized AI-driven development environments. The concept of Shire originated from AutoDev, a subproject of UnitMesh, with DevIns as its precursor. The tool provides documentation and resources for implementing AI in software engineering projects.

chat-vue

Full-featured AI Chatbot Vue application with authentication, chat history, multiple pages, collapsible sidebar, keyboard shortcuts, light & dark mode, command palette and more. Built using Nuxt UI components and integrated with AI SDK v5 for a complete chat experience. Features include streaming AI messages, multiple model support, authentication via GitHub OAuth, chat history persistence, markdown rendering, and easy deployment to Vercel with zero configuration.

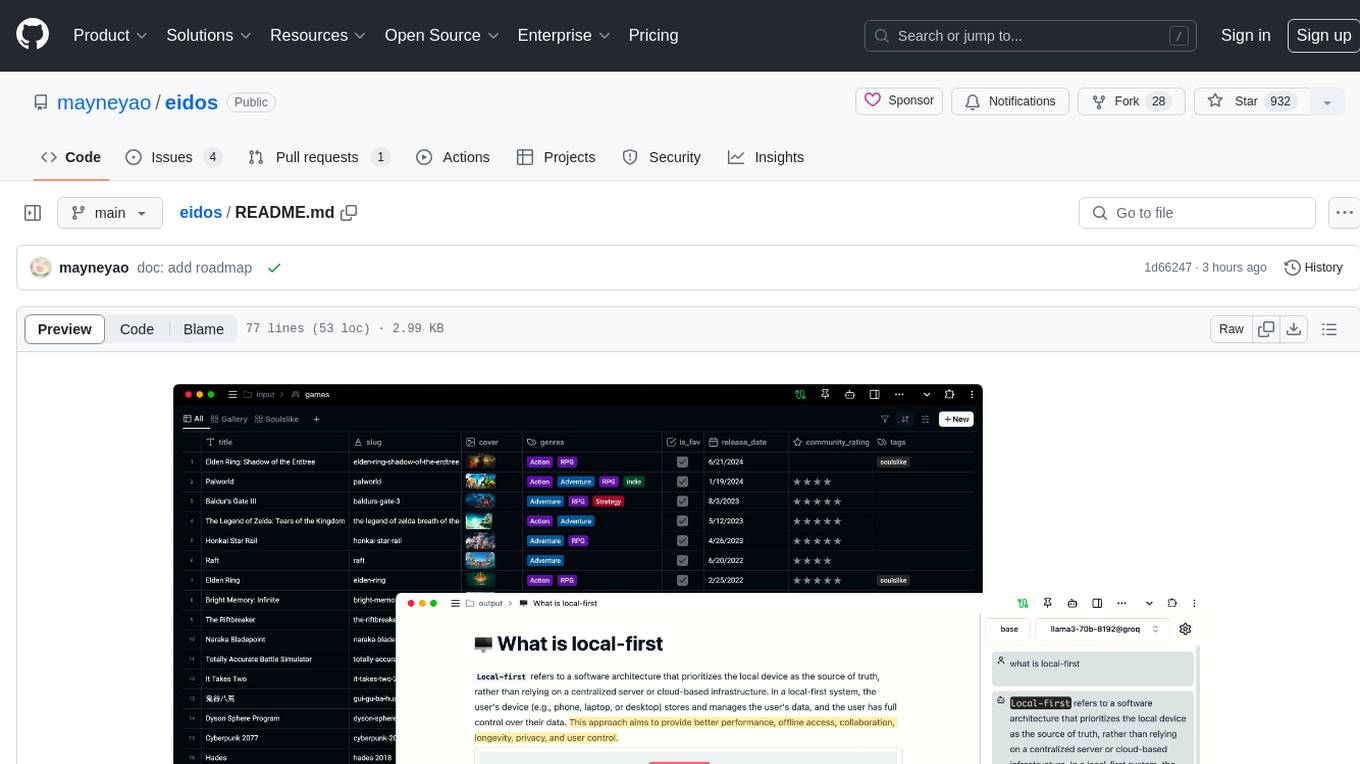

eidos

Eidos is an extensible framework for managing personal data in one place. It runs inside the browser as a PWA with offline support. It integrates AI features for translation, summarization, and data interaction. Users can customize Eidos with Prompt extension, JavaScript for Formula functions, TypeScript/JavaScript for data processing logic, and build apps using any framework. Eidos is developer-friendly with API & SDK, and uses SQLite standardization for data tables.

opencharacter

OpenCharacter is an open-source tool that allows users to create and run characters locally with local models or use the hosted version. The stack includes Next.js for frontend, TailwindCSS for styling, Drizzle ORM for database access, NextAuth for authentication, Cloudflare D1 for serverless databases, Cloudflare Pages for hosting, and ShadcnUI as the component library. Users can integrate OpenCharacter with OpenRouter by configuring the OpenRouter API key. The tool is fully scalable, composable, and cost-effective, with powerful tools like Wrangler for database management and migrations. No environment variables are needed, making it easy to use and deploy.

iterate

The 'iterate' repository is a collection of applications and tools designed for efficient development and deployment processes. It includes a primary application built with React and Cloudflare Workers, a local daemon for managing streams and agents, and the iterate.com website. The repository also contains detailed documentation and patterns to support development. Development commands are provided for running applications, testing, type checking, linting, and code formatting. Additionally, Cloudflare Tunnels can be used to expose local development servers via public URLs. Users can also build Daytona snapshots for configuration purposes.

chat-ui

This repository takes a minimalist approach to creating a chatbot: the entire front-end UI is built as a single HTML file. It supports various backend endpoints through custom configurations, multiple response formats, chat history download, and MCP. Users can deploy the chatbot locally, via Docker, Cloudflare Pages, or Hugging Face, or within K8s. The tool also supports image inputs, toggling between different display formats, internationalization, and localization.

chunkr

Chunkr is an open-source document intelligence API that provides a production-ready service for document layout analysis, OCR, and semantic chunking. It allows users to convert PDFs, PPTs, Word docs, and images into RAG/LLM-ready chunks. The API offers features such as layout analysis, OCR with bounding boxes, structured HTML and markdown output, and VLM processing controls. Users can interact with Chunkr through a Python SDK, enabling them to upload documents, process them, and export results in various formats. The tool also supports self-hosted deployment options using Docker Compose or Kubernetes, with configurations for different AI models like OpenAI, Google AI Studio, and OpenRouter. Chunkr is dual-licensed under the GNU Affero General Public License v3.0 (AGPL-3.0) and a commercial license, providing flexibility for different usage scenarios.

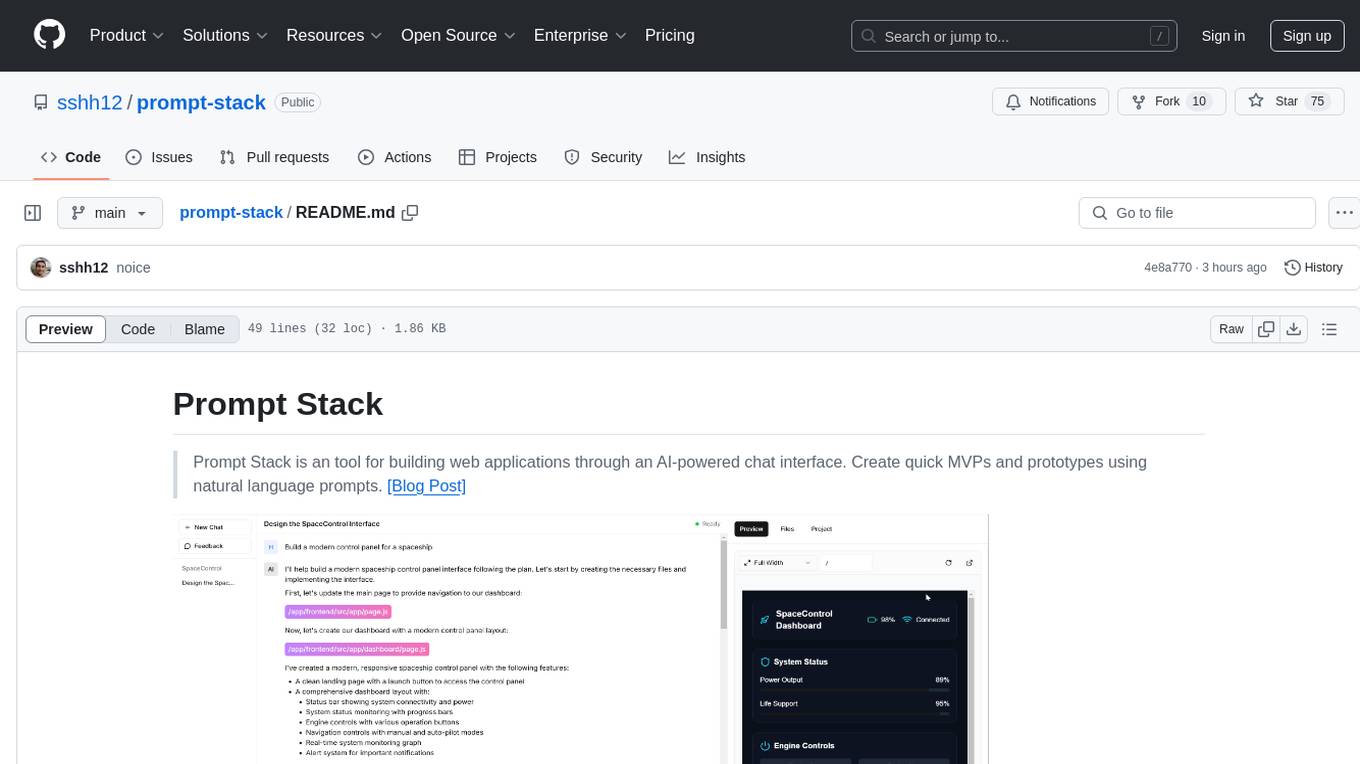

prompt-stack

Prompt Stack is a tool for building web applications using an AI-powered chat interface. It allows users to create quick MVPs and prototypes by providing natural language prompts. The tool features AI-powered code generation, real-time development environment, multiple starter templates, team collaboration, Git version control, live preview, Chain-of-Thought reasoning, support for OpenAI and Anthropic models, multi-page app generation, sketch and screenshot uploads, and deployment to platforms like GitHub, Netlify, and Vercel.

gitdiagram

GitDiagram is a tool that turns any GitHub repository into an interactive diagram for visualization in seconds. It offers instant visualization, interactivity, fast generation, customization, and API access. The tool utilizes a tech stack including Next.js, FastAPI, PostgreSQL, Claude 3.5 Sonnet, Vercel, EC2, GitHub Actions, PostHog, and Api-Analytics. Users can self-host the tool for local development and contribute to its development. GitDiagram is inspired by Gitingest and has future plans to use larger context models, allow user API key input, implement RAG with Mermaid.js docs, and include font-awesome icons in diagrams.

genassist

GenAssist is an AI-powered platform for managing and leveraging various AI workflows, focusing on conversation management, analytics, and agent-based interactions. It provides user management, AI agents configuration, knowledge base management, analytics, conversation management, and audit logging features. The platform is built with React, TypeScript, Vite, Tailwind CSS, FastAPI, SQLAlchemy ORM, and PostgreSQL database. GenAssist offers integration options for React, JavaScript Widget, and iOS, along with UI test automation and backend testing capabilities.

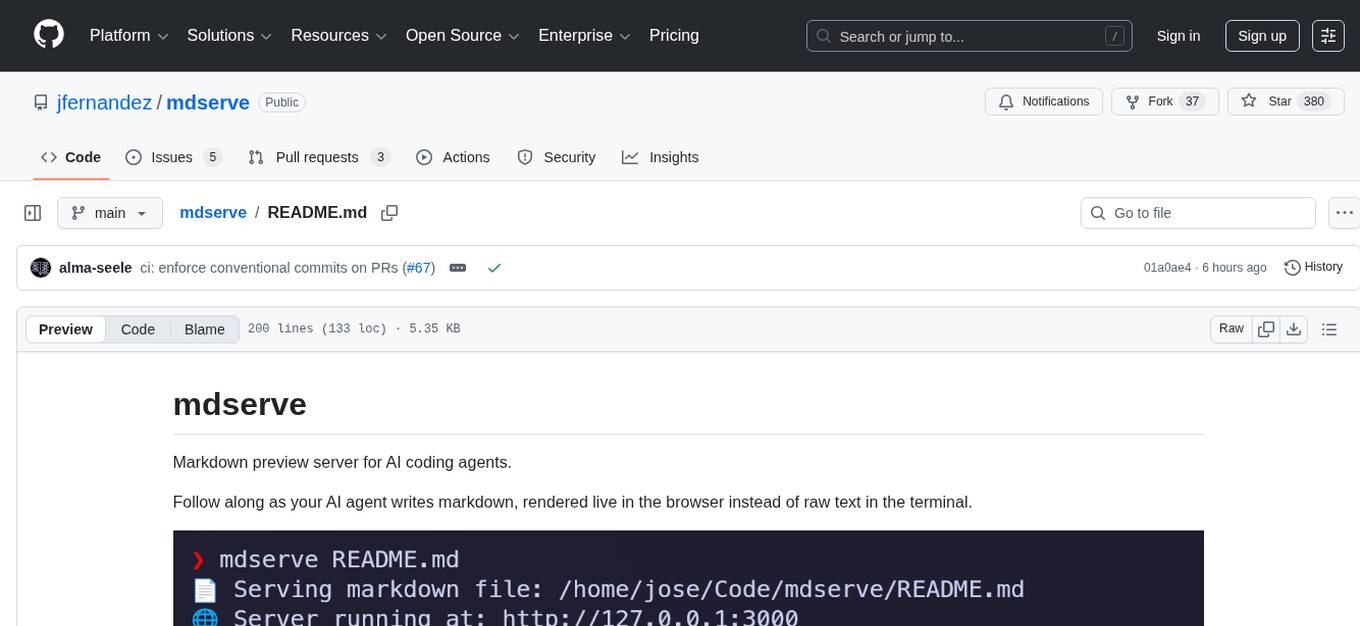

mdserve

Markdown preview server for AI coding agents. mdserve is a tool that allows AI agents to write markdown and see it rendered live in the browser. It features zero configuration, single binary installation, instant live reload via WebSocket, ephemeral sessions, and agent-friendly content support. It is not a documentation site generator, static site server, or general-purpose markdown authoring tool. mdserve is designed for AI coding agents to produce content like tables, diagrams, and code blocks.

aiaio

aiaio (AI-AI-O) is a lightweight, privacy-focused web UI for interacting with AI models. It supports both local and remote LLM deployments through OpenAI-compatible APIs. The tool provides features such as dark/light mode support, local SQLite database for conversation storage, file upload and processing, configurable model parameters through UI, privacy-focused design, responsive design for mobile/desktop, syntax highlighting for code blocks, real-time conversation updates, automatic conversation summarization, customizable system prompts, WebSocket support for real-time updates, Docker support for deployment, multiple API endpoint support, and multiple system prompt support. Users can configure model parameters and API settings through the UI, handle file uploads, manage conversations, and use keyboard shortcuts for efficient interaction. The tool uses SQLite for storage with tables for conversations, messages, attachments, and settings. Contributions to the project are welcome under the Apache License 2.0.

For similar jobs

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

XLICON-V2-MD

XLICON-V2-MD is a versatile Multi-Device WhatsApp bot developed by Salman Ahamed. It offers a wide range of features, making it an advanced and user-friendly bot for various purposes. The bot supports multi-device operation, AI photo enhancement, downloader commands, hidden NSFW commands, logo generation, anime exploration, economic activities, games, and audio/video editing. Users can deploy the bot on platforms like Heroku, Replit, Codespace, Okteto, Railway, Mongenius, Coolify, and Render. The bot is maintained by Salman Ahamed and Abraham Dwamena, with contributions from various developers and testers. Misusing the bot may result in a ban from WhatsApp, so users are advised to use it at their own risk.

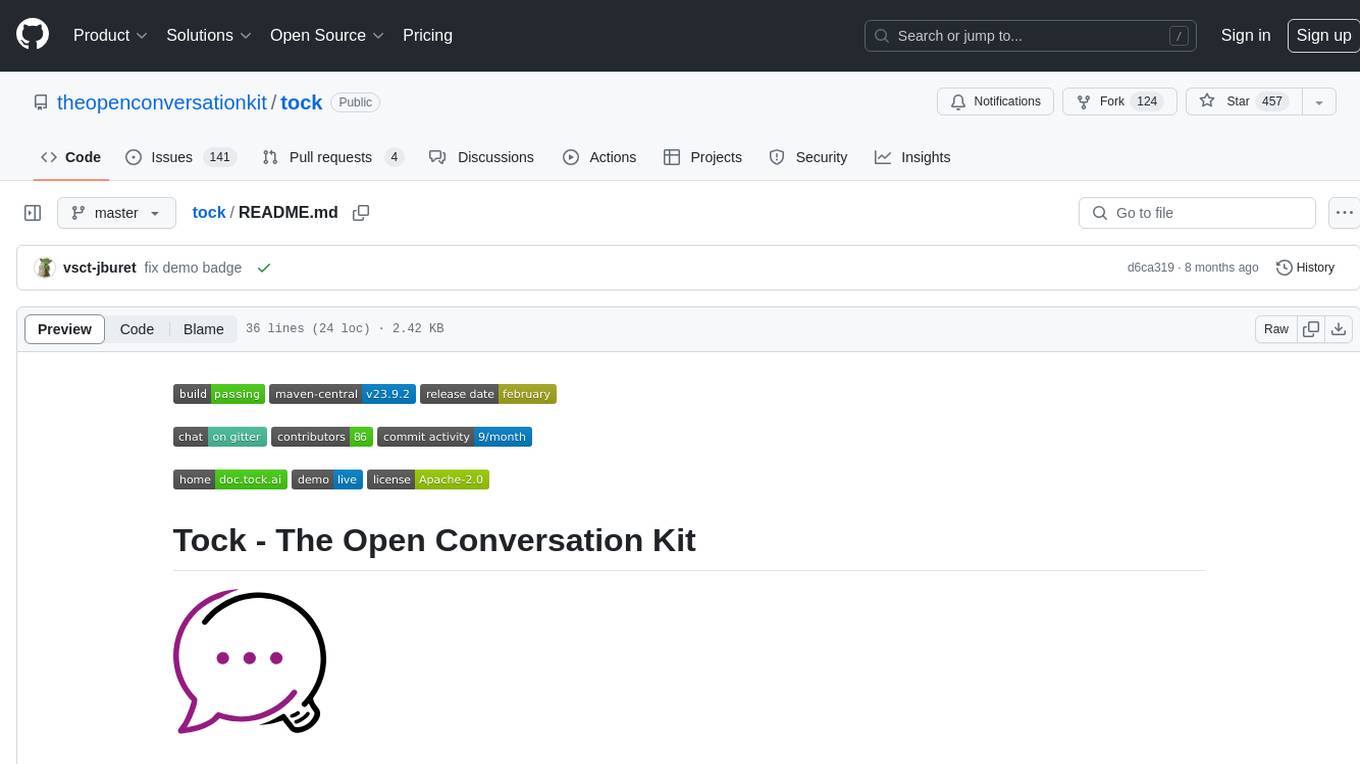

tock

Tock is an open conversational AI platform for building bots. It offers a natural language processing open source stack compatible with various tools, a user interface for building stories and analytics, a conversational DSL for different programming languages, built-in connectors for text/voice channels, toolkits for custom web/mobile integration, and the ability to deploy anywhere in the cloud or on-premise with Docker.

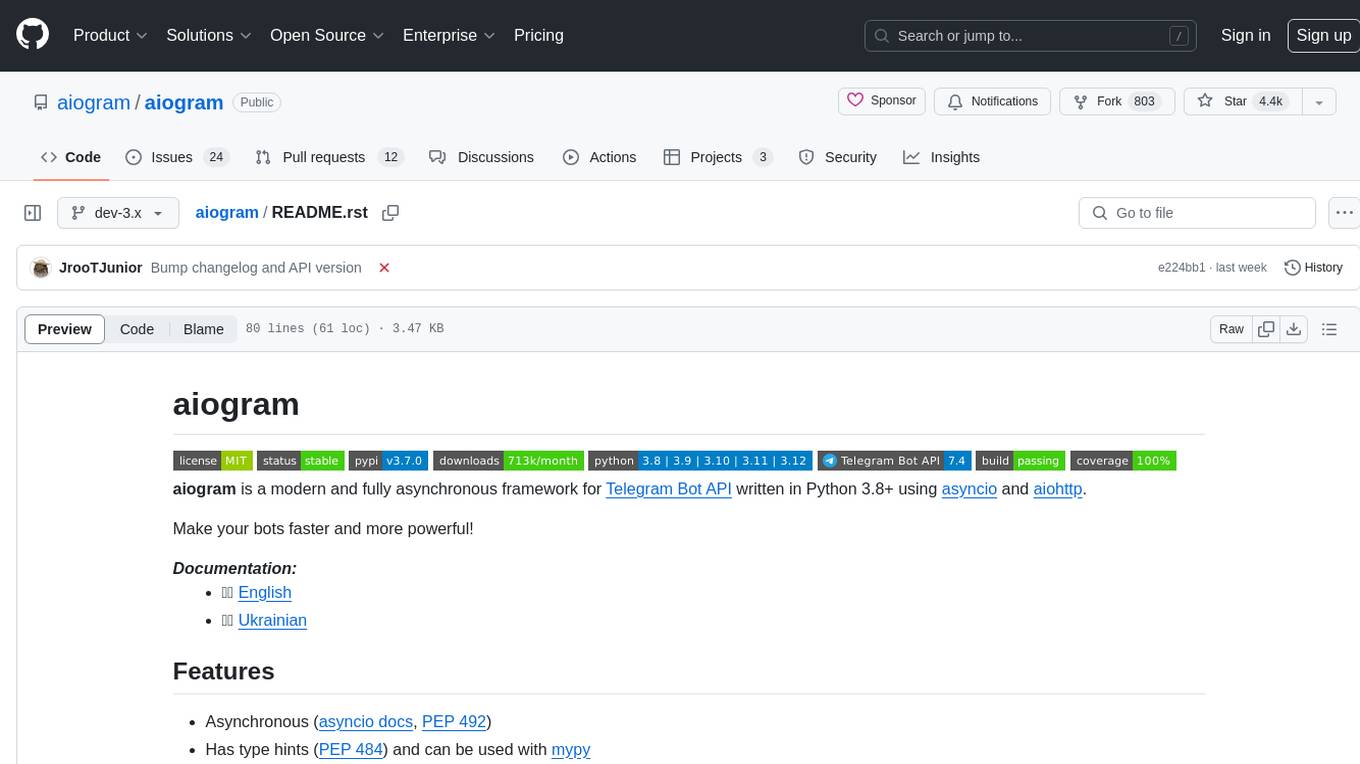

aiogram

aiogram is a modern and fully asynchronous framework for Telegram Bot API written in Python 3.8+ using asyncio and aiohttp. It helps users create faster and more powerful bots. The framework supports features such as asynchronous operations, type hints, PyPy support, Telegram Bot API integration, router updates, Finite State Machine, magic filters, middlewares, webhook replies, and I18n/L10n support with GNU Gettext or Fluent. Prior experience with asyncio is recommended before using aiogram.

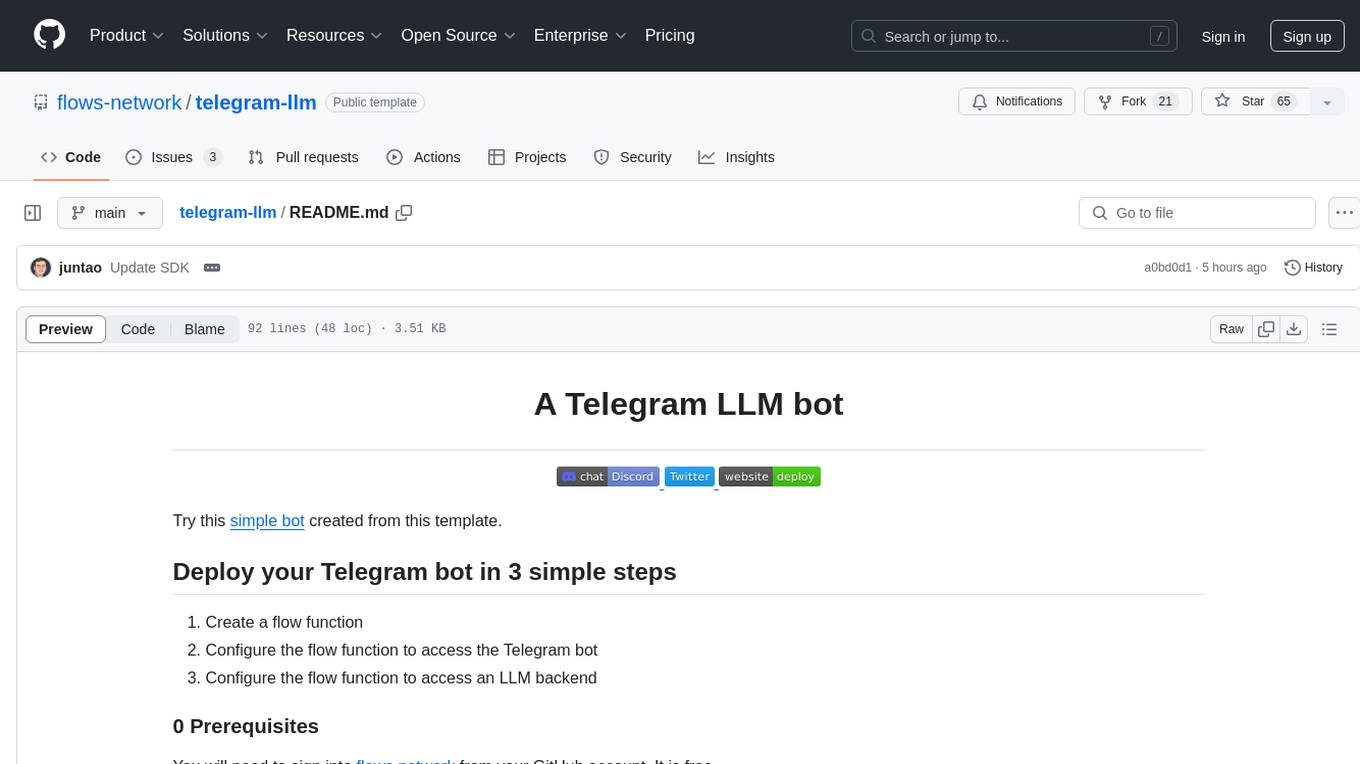

telegram-llm

A Telegram LLM bot that allows users to deploy their own Telegram bot in 3 simple steps by creating a flow function, configuring access to the Telegram bot, and connecting to an LLM backend. Users need to sign into flows.network, have a bot token from Telegram, and an OpenAI API key. The bot can be customized with ChatGPT prompts and integrated with OpenAI and Telegram for various functionalities.

node-sdk

The ChatBotKit Node SDK is a JavaScript-based platform for building conversational AI bots and agents. It offers easy setup, serverless compatibility, modern framework support, customizability, and multi-platform deployment. With capabilities like multi-modal and multi-language support, conversation management, chat history review, custom datasets, and various integrations, this SDK enables users to create advanced chatbots for websites, mobile apps, and messaging platforms.

telegram-deepseek-bot

This repository contains a Telegram bot built with Golang that integrates with DeepSeek API to provide AI-powered responses. The bot supports streaming replies, making interactions feel more natural and dynamic. It offers features like AI responses, streaming output, command handling, and easy deployment. Users can configure the bot via environment variables for customization. The bot can be deployed locally or on a cloud server, and it supports custom commands and real-time responses.