stability-sdk

SDK for interacting with stability.ai APIs (e.g. Stable Diffusion inference)

Stars: 2413

The stability-sdk is a Python package that provides a client implementation for interacting with the Stability API. This API allows users to generate images, upscale images, and animate images using a variety of different models and settings. The stability-sdk makes it easy to use the Stability API from Python code, and it provides a number of helpful features such as command line usage, support for multiple models, and the ability to filter artifacts by type.

README:

Client implementations that interact with the Stability API.

Follow the instructions on Platform to obtain an API key.

Install the PyPI package via:

pip install stability-sdk

client.py is both a command line client and an API class that wraps the gRPC based API. To try the client:

- Create a Python venv: python3 -m venv pyenv

- Install the dependencies into the venv: pyenv/bin/pip3 install -e .

- Source pyenv/bin/activate to use the venv.

- Set the STABILITY_HOST environment variable. By default it is set to the production endpoint grpc.stability.ai:443.

- Set the STABILITY_KEY environment variable.
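As a rough illustration of the last two steps, the sketch below resolves the host and key the way a script might before creating a client. The helper name resolve_connection is hypothetical and not part of the SDK; only the variable names and the default endpoint come from the text above.

```python
import os

# Production endpoint named in the setup steps above.
DEFAULT_HOST = "grpc.stability.ai:443"


def resolve_connection(env=None):
    """Return (host, key) read from the environment.

    Hypothetical helper for illustration; the SDK does its own lookup.
    """
    env = os.environ if env is None else env
    # STABILITY_HOST is optional and falls back to the production endpoint.
    host = env.get("STABILITY_HOST", DEFAULT_HOST)
    # STABILITY_KEY has no default, so fail early with a clear message.
    key = env.get("STABILITY_KEY")
    if not key:
        raise RuntimeError("STABILITY_KEY is not set; obtain an API key from Platform")
    return host, key
```

Calling resolve_connection() with no arguments reads the real process environment; passing a dict makes the lookup easy to try without touching your shell.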

Then to invoke:

python3 -m stability_sdk generate -W 1024 -H 1024 "A stunning house."

This generates PNG files and writes them to your current directory.
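If you prefer to drive the command line client from a script, the invocation above can be assembled as an argument list. This is a minimal sketch: build_generate_command is a hypothetical helper, and it uses only the -W, -H, and prompt arguments shown in the example.

```python
import shlex
import sys


def build_generate_command(prompt, width=1024, height=1024):
    """Build the argv list for the generate invocation (hypothetical helper)."""
    return [
        sys.executable, "-m", "stability_sdk", "generate",
        "-W", str(width),
        "-H", str(height),
        prompt,  # passed as one argument, so no manual shell quoting is needed
    ]


cmd = build_generate_command("A stunning house.")
# Print a shell-safe rendering of the command for inspection.
print(shlex.join(cmd))

# To actually run it (needs the package installed, STABILITY_KEY set, and
# network access), pass the list to subprocess.run(cmd, check=True).
```

Passing a list rather than a single string avoids quoting problems when the prompt contains spaces or punctuation.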

To upscale:

python3 -m stability_sdk upscale -i "/path/to/image.png"

To use the animation UI, install the anim_ui extra with

pip install stability-sdk[anim_ui]

Then run with

python3 -m stability_sdk animate --gui

Be sure to check out Platform for comprehensive documentation on how to interact with our API.

usage: python -m stability_sdk generate [-h] [--height HEIGHT] [--width WIDTH] [--start_schedule START_SCHEDULE] [--end_schedule END_SCHEDULE] [--cfg_scale CFG_SCALE] [--sampler SAMPLER] [--steps STEPS] [--style_preset STYLE_PRESET] [--seed SEED] [--prefix PREFIX] [--engine ENGINE] [--num_samples NUM_SAMPLES] [--artifact_types ARTIFACT_TYPES] [--no-store] [--show] [--init_image INIT_IMAGE] [--mask_image MASK_IMAGE] [prompt ...]

positional arguments:

prompt

options:

-h, --help    show this help message and exit

--height HEIGHT, -H HEIGHT    [1024] height of image

--width WIDTH, -W WIDTH    [1024] width of image

--start_schedule START_SCHEDULE    [0.5] start schedule for init image (must be greater than 0; 1 is full strength text prompt, no trace of image)

--end_schedule END_SCHEDULE    [0.01] end schedule for init image

--cfg_scale CFG_SCALE, -C CFG_SCALE    [7.0] CFG scale factor

--sampler SAMPLER, -A SAMPLER    [auto-select] (ddim, plms, k_euler, k_euler_ancestral, k_heun, k_dpm_2, k_dpm_2_ancestral, k_lms, k_dpmpp_2m, k_dpmpp_2s_ancestral)

--steps STEPS, -s STEPS    [auto] number of steps

--style_preset STYLE_PRESET    [none] (3d-model, analog-film, anime, cinematic, comic-book, digital-art, enhance, fantasy-art, isometric, line-art, low-poly, modeling-compound, neon-punk, origami, photographic, pixel-art, tile-texture)

--seed SEED, -S SEED    random seed to use

--prefix PREFIX, -p PREFIX    output prefixes for artifacts

--artifact_types ARTIFACT_TYPES, -t ARTIFACT_TYPES    filter artifacts by type (ARTIFACT_IMAGE, ARTIFACT_TEXT, ARTIFACT_CLASSIFICATIONS, etc)

--no-store    do not write out artifacts

--num_samples NUM_SAMPLES, -n NUM_SAMPLES    number of samples to generate

--show    open artifacts using PIL

--engine ENGINE, -e ENGINE    engine to use for inference

--init_image INIT_IMAGE, -i INIT_IMAGE    Init image

--mask_image MASK_IMAGE, -m MASK_IMAGE    Mask image
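The constraints listed in the help text can be checked before a request is sent. The sketch below is one way to do that, under the assumption that the sampler and style preset names printed in the help are the accepted values; check_generate_options is a hypothetical helper, and the CLI or API may validate differently or accept other values.

```python
# Values copied from the generate help text; treat them as a snapshot,
# not an authoritative list.
SAMPLERS = {
    "ddim", "plms", "k_euler", "k_euler_ancestral", "k_heun", "k_dpm_2",
    "k_dpm_2_ancestral", "k_lms", "k_dpmpp_2m", "k_dpmpp_2s_ancestral",
}
STYLE_PRESETS = {
    "3d-model", "analog-film", "anime", "cinematic", "comic-book",
    "digital-art", "enhance", "fantasy-art", "isometric", "line-art",
    "low-poly", "modeling-compound", "neon-punk", "origami",
    "photographic", "pixel-art", "tile-texture",
}


def check_generate_options(sampler=None, style_preset=None, start_schedule=0.5):
    """Return a list of problems found (hypothetical helper, not part of the SDK)."""
    problems = []
    # None means "let the client auto-select", which the help text allows.
    if sampler is not None and sampler not in SAMPLERS:
        problems.append(f"unknown sampler: {sampler}")
    if style_preset is not None and style_preset not in STYLE_PRESETS:
        problems.append(f"unknown style preset: {style_preset}")
    # The help text states that start_schedule must be greater than 0.
    if not start_schedule > 0:
        problems.append("start_schedule must be greater than 0")
    return problems


print(check_generate_options(sampler="k_euler", style_preset="anime"))
print(check_generate_options(sampler="euler", start_schedule=0))
```

Returning a list of problems rather than raising on the first one lets a caller report every invalid option at once.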

For upscale:

usage: client.py upscale [-h] --init_image INIT_IMAGE [--height HEIGHT] [--width WIDTH] [--prefix PREFIX] [--artifact_types ARTIFACT_TYPES] [--no-store] [--show] [--engine ENGINE]

positional arguments:

prompt (ignored in esrgan engines)

options:

-h, --help    show this help message and exit

--init_image INIT_IMAGE, -i INIT_IMAGE    Init image

--height HEIGHT, -H HEIGHT    height of upscaled image in pixels

--width WIDTH, -W WIDTH    width of upscaled image in pixels

--steps STEPS, -s STEPS    [auto] number of steps (ignored in esrgan engines)

--seed SEED, -S SEED    random seed to use (ignored in esrgan engines)

--cfg_scale CFG_SCALE, -C CFG_SCALE    [7.0] CFG scale factor (ignored in esrgan engines)

--prefix PREFIX, -p PREFIX    output prefixes for artifacts

--artifact_types ARTIFACT_TYPES, -t ARTIFACT_TYPES    filter artifacts by type (ARTIFACT_IMAGE, ARTIFACT_TEXT, ARTIFACT_CLASSIFICATIONS, etc)

--no-store    do not write out artifacts

--show    open artifacts using PIL

--engine ENGINE, -e ENGINE    engine to use for upscale
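Several upscale options are documented as ignored in esrgan engines. A small check like the one below could flag them before a call. It is only a sketch: ignored_options is a hypothetical helper, and detecting an esrgan engine by looking for "esrgan" in the engine name is an assumption, not something the help text specifies.

```python
# Options the upscale help text marks as "ignored in esrgan engines".
IGNORED_BY_ESRGAN = ("prompt", "steps", "seed", "cfg_scale")


def ignored_options(engine, supplied):
    """Return the supplied option names that an esrgan engine would ignore.

    Hypothetical helper. Assumption: esrgan engines can be recognised by
    the substring "esrgan" in the engine name.
    """
    if "esrgan" not in engine.lower():
        return []
    return [name for name in IGNORED_BY_ESRGAN if name in supplied]


# The engine name here is only an example value for the sketch.
print(ignored_options("esrgan-v1-x2plus", {"seed": 42, "width": 2048}))
```

A caller could print the returned names as a warning so that users do not expect a seed or CFG scale to change the upscaled result.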

If the language you want to use to connect to the API is not currently documented on Platform, you can compile stubs for it from the API's protobuf definition.

Community-contributed clients and guides:

- TypeScript client: https://github.com/jakiestfu/stability-ts

- Guide to building for Ruby: https://github.com/kmcphillips/stability-sdk/blob/main/src/ruby/README.md

- C# client: https://github.com/Katarzyna-Kadziolka/StabilityClient.Net

Usage of the Stability API falls under the STABILITY AI API Terms of Service.


Alternative AI tools for stability-sdk

Similar Open Source Tools

stability-sdk

The stability-sdk is a Python package that provides a client implementation for interacting with the Stability API. This API allows users to generate images, upscale images, and animate images using a variety of different models and settings. The stability-sdk makes it easy to use the Stability API from Python code, and it provides a number of helpful features such as command line usage, support for multiple models, and the ability to filter artifacts by type.

instructor_ex

Instructor is a tool designed to structure outputs from OpenAI and other OSS LLMs by coaxing them to return JSON that maps to a provided Ecto schema. It allows for defining validation logic to guide LLMs in making corrections, and supports automatic retries. Instructor is primarily used with the OpenAI API but can be extended to work with other platforms. The tool simplifies usage by creating an ecto schema, defining a validation function, and making calls to chat_completion with instructions for the LLM. It also offers features like max_retries to fix validation errors iteratively.

LLMsKnow

LLMs Know More Than They Show is a repository containing code to reproduce the results in the paper. It includes scripts to generate model answers, extract exact answers, probe all layers and tokens, probe specific layers and tokens, conduct generalization experiments, perform resampling for error type probing and answer selection experiments, and run other baselines like logprob detection and p_true detection. The repository supports various datasets such as TriviaQA, Movies, HotpotQA, Winobias, Winogrande, NLI, IMDB, Math, and Natural questions. It also provides supported models like Mistral-7B-Instruct-v0.2, Mistral-7B-v0.3, Meta-Llama-3-8B, and Meta-Llama-3-8B-Instruct.

openai-kit

OpenAIKit is a Swift package designed to facilitate communication with the OpenAI API. It provides methods to interact with various OpenAI services such as chat, models, completions, edits, images, embeddings, files, moderations, and speech to text. The package encourages the use of environment variables to securely inject the OpenAI API key and organization details. It also offers error handling for API requests through the `OpenAIKit.APIErrorResponse`.

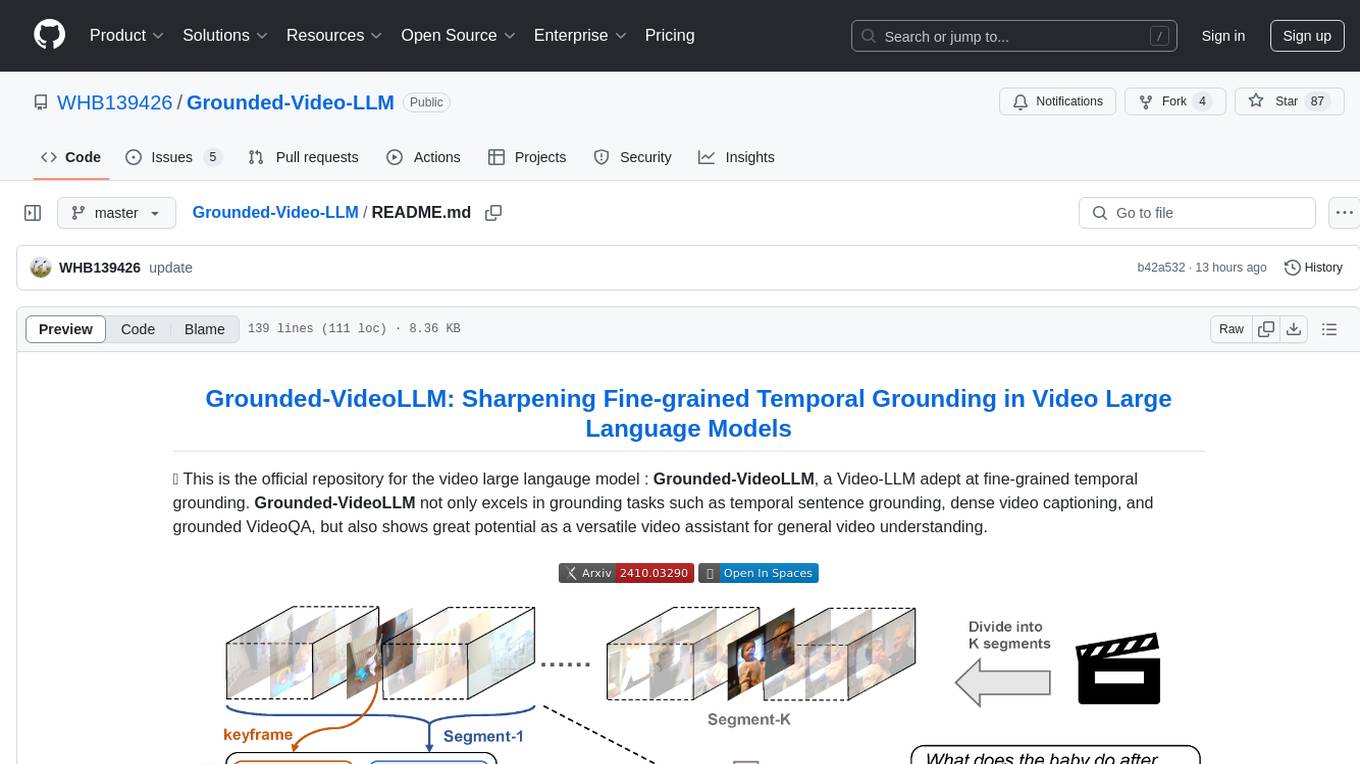

Grounded-Video-LLM

Grounded-VideoLLM is a Video Large Language Model specialized in fine-grained temporal grounding. It excels in tasks such as temporal sentence grounding, dense video captioning, and grounded VideoQA. The model incorporates an additional temporal stream, discrete temporal tokens with specific time knowledge, and a multi-stage training scheme. It shows potential as a versatile video assistant for general video understanding. The repository provides pretrained weights, inference scripts, and datasets for training. Users can run inference queries to get temporal information from videos and train the model from scratch.

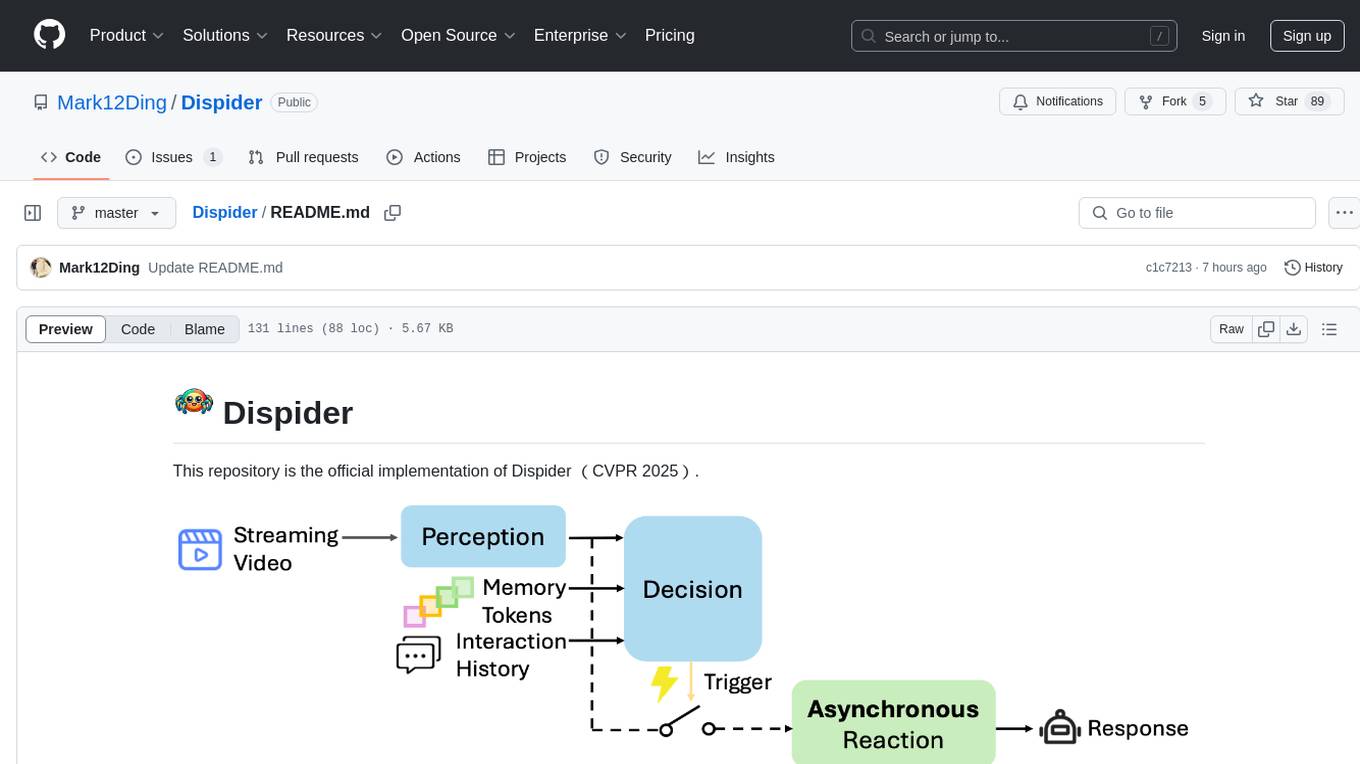

Dispider

Dispider is an implementation enabling real-time interactions with streaming videos, providing continuous feedback in live scenarios. It separates perception, decision-making, and reaction into asynchronous modules, ensuring timely interactions. Dispider outperforms VideoLLM-online on benchmarks like StreamingBench and excels in temporal reasoning. The tool requires CUDA 11.8 and specific library versions for optimal performance.

DemoGPT

DemoGPT is an all-in-one agent library that provides tools, prompts, frameworks, and LLM models for streamlined agent development. It leverages GPT-3.5-turbo to generate LangChain code, creating interactive Streamlit applications. The tool is designed for creating intelligent, interactive, and inclusive solutions in LLM-based application development. It offers model flexibility, iterative development, and a commitment to user engagement. Future enhancements include integrating Gorilla for autonomous API usage and adding a publicly available database for refining the generation process.

oasis

OASIS is a scalable, open-source social media simulator that integrates large language models with rule-based agents to realistically mimic the behavior of up to one million users on platforms like Twitter and Reddit. It facilitates the study of complex social phenomena such as information spread, group polarization, and herd behavior, offering a versatile tool for exploring diverse social dynamics and user interactions in digital environments. With features like scalability, dynamic environments, diverse action spaces, and integrated recommendation systems, OASIS provides a comprehensive platform for simulating social media interactions at a large scale.

KIVI

KIVI is a plug-and-play 2bit KV cache quantization algorithm optimizing memory usage by quantizing key cache per-channel and value cache per-token to 2bit. It enables LLMs to maintain quality while reducing memory usage, allowing larger batch sizes and increasing throughput in real LLM inference workloads.

aiogram_bot_template

This repository provides a template for easy asynchronous bot development for Telegram using Aiogram library. It includes setup instructions using Docker Compose, migration commands for TortoiseORM, and examples for working with TortoiseORM. The template aims to simplify the process of creating Telegram bots with features like FastAPI integration and Docker Compose setup. The repository also includes a list of tasks to be completed, such as changing SQLAlchemy to TortoiseORM, adding FastAPI for webhook, and setting up CI/CD examples.

docs-ai

Docs AI is a platform that allows users to train their documents, chat with their documents, and create chatbots to solve queries. It is built using NextJS, Tailwind, tRPC, ShadcnUI, Prisma, Postgres, NextAuth, Pinecone, and Cloudflare R2. The platform requires Node.js (Version: >=18.x), PostgreSQL, and Redis for setup. Users can utilize Docker for development by using the provided `docker-compose.yml` file in the `/app` directory.

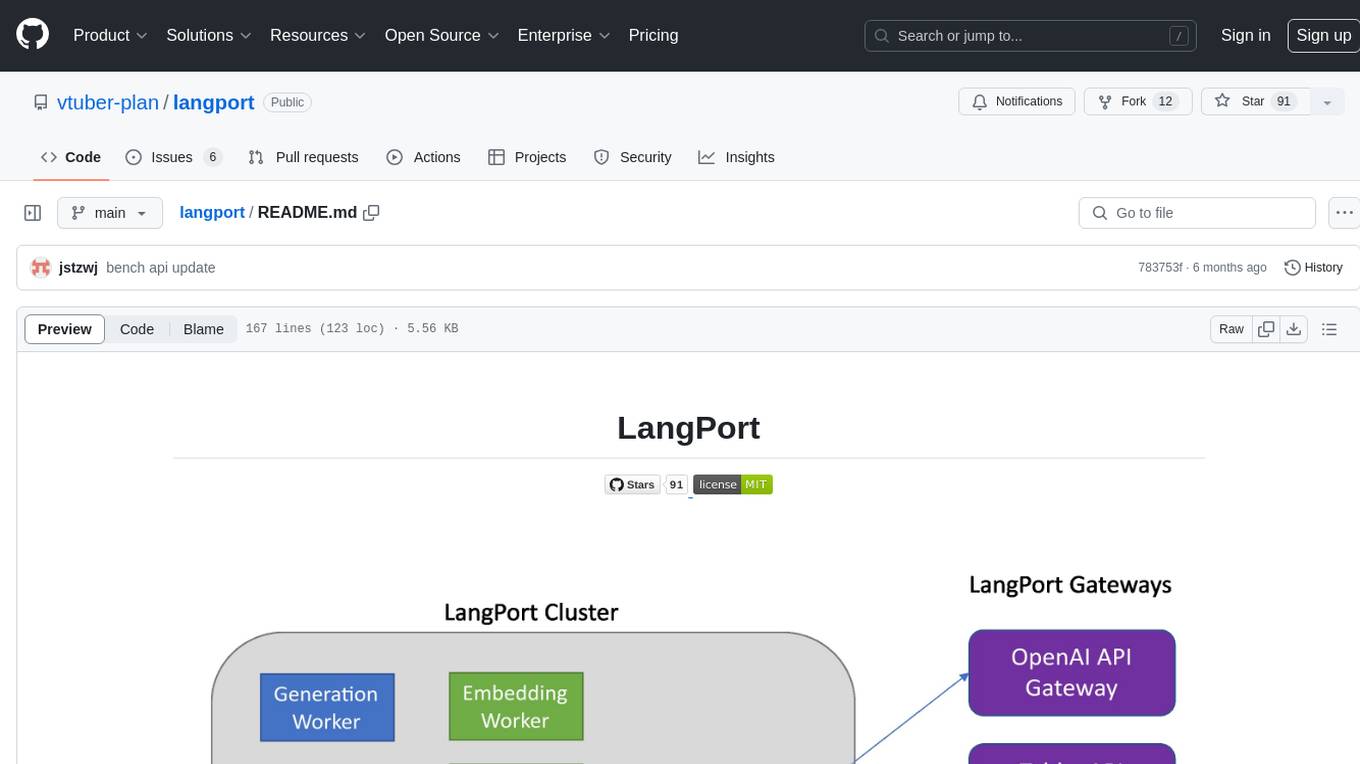

langport

LangPort is an open-source platform for serving large language models. It aims to provide a super fast LLM inference service with core features including Huggingface transformers support, distributed serving system, streaming generation, batch inference, and support for various model architectures. It offers compatibility with OpenAI, FauxPilot, HuggingFace, and Tabby APIs. The project supports model architectures like LLaMa, GLM, GPT2, and GPT Neo, and has been tested with models such as NingYu, Vicuna, ChatGLM, and WizardLM. LangPort also provides features like dynamic batch inference, int4 quantization, and generation logprobs parameter.

MemoryLLM

MemoryLLM is a large language model designed for self-updating capabilities. It offers pretrained models with different memory capacities and features, such as chat models. The repository provides training code, evaluation scripts, and datasets for custom experiments. MemoryLLM aims to enhance knowledge retention and performance on various natural language processing tasks.

skyrim

Skyrim is a weather forecasting tool that enables users to run large weather models using consumer-grade GPUs. It provides access to state-of-the-art foundational weather models through a well-maintained infrastructure. Users can forecast weather conditions, such as wind speed and direction, by running simulations on their own GPUs or using modal volume or cloud services like s3 buckets. Skyrim supports various large weather models like Graphcast, Pangu, Fourcastnet, and DLWP, with plans for future enhancements like ensemble prediction and model quantization.

ai-renamer

ai-renamer is a Node.js CLI tool that intelligently renames files in a specified directory using Ollama models like Llama, Gemma, Phi, etc. It allows users to set case style, model, maximum characters in the filename, and output language. The tool utilizes the change-case library for case styling and requires Ollama and at least one LLM to be installed on the system. Users can contribute by opening new issues or making pull requests. Licensed under GPL-3.0.

rosa

ROSA is an AI Agent designed to interact with ROS-based robotics systems using natural language queries. It can generate system reports, read and parse ROS log files, adapt to new robots, and run various ROS commands using natural language. The tool is versatile for robotics research and development, providing an easy way to interact with robots and the ROS environment.

For similar tasks

stability-sdk

The stability-sdk is a Python package that provides a client implementation for interacting with the Stability API. This API allows users to generate images, upscale images, and animate images using a variety of different models and settings. The stability-sdk makes it easy to use the Stability API from Python code, and it provides a number of helpful features such as command line usage, support for multiple models, and the ability to filter artifacts by type.

ap-plugin

AP-PLUGIN is an AI drawing plugin for the Yunzai series robot framework, allowing you to have a convenient AI drawing experience in the input box. It uses the open source Stable Diffusion web UI as the backend, deploys it for free, and generates a variety of images with richer functions.

fabric

Fabric is an open-source framework for augmenting humans using AI. It provides a structured approach to breaking down problems into individual components and applying AI to them one at a time. Fabric includes a collection of pre-defined Patterns (prompts) that can be used for a variety of tasks, such as extracting the most interesting parts of YouTube videos and podcasts, writing essays, summarizing academic papers, creating AI art prompts, and more. Users can also create their own custom Patterns. Fabric is designed to be easy to use, with a command-line interface and a variety of helper apps. It is also extensible, allowing users to integrate it with their own AI applications and infrastructure.

comflowy

Comflowy is a community dedicated to providing comprehensive tutorials, fostering discussions, and building a database of workflows and models for ComfyUI and Stable Diffusion. Our mission is to lower the entry barrier for ComfyUI users, promote its mainstream adoption, and contribute to the growth of the AI generative graphics community.

Building-AI-Applications-with-ChatGPT-APIs

This repository is for the book 'Building AI Applications with ChatGPT APIs' published by Packt. It provides code examples and instructions for mastering ChatGPT, Whisper, and DALL-E APIs through building innovative AI projects. Readers will learn to develop AI applications using ChatGPT APIs, integrate them with frameworks like Flask and Django, create AI-generated art with DALL-E APIs, and optimize ChatGPT models through fine-tuning.

comfyui-photoshop

ComfyUI for Photoshop is a plugin that integrates with an AI-powered image generation system to enhance the Photoshop experience with features like unlimited generative fill, customizable back-end, AI-powered artistry, and one-click transformation. The plugin requires a minimum of 6GB graphics memory and 12GB RAM. Users can install the plugin and set up the ComfyUI workflow using provided links and files. Additionally, specific files like Check points, Loras, and Detailer Lora are required for different functionalities. Support and contributions are encouraged through GitHub.

awesome-generative-ai

Awesome Generative AI is a curated list of modern Generative Artificial Intelligence projects and services. Generative AI technology creates original content like images, sounds, and texts using machine learning algorithms trained on large data sets. It can produce unique and realistic outputs such as photorealistic images, digital art, music, and writing. The repo covers a wide range of applications in art, entertainment, marketing, academia, and computer science.

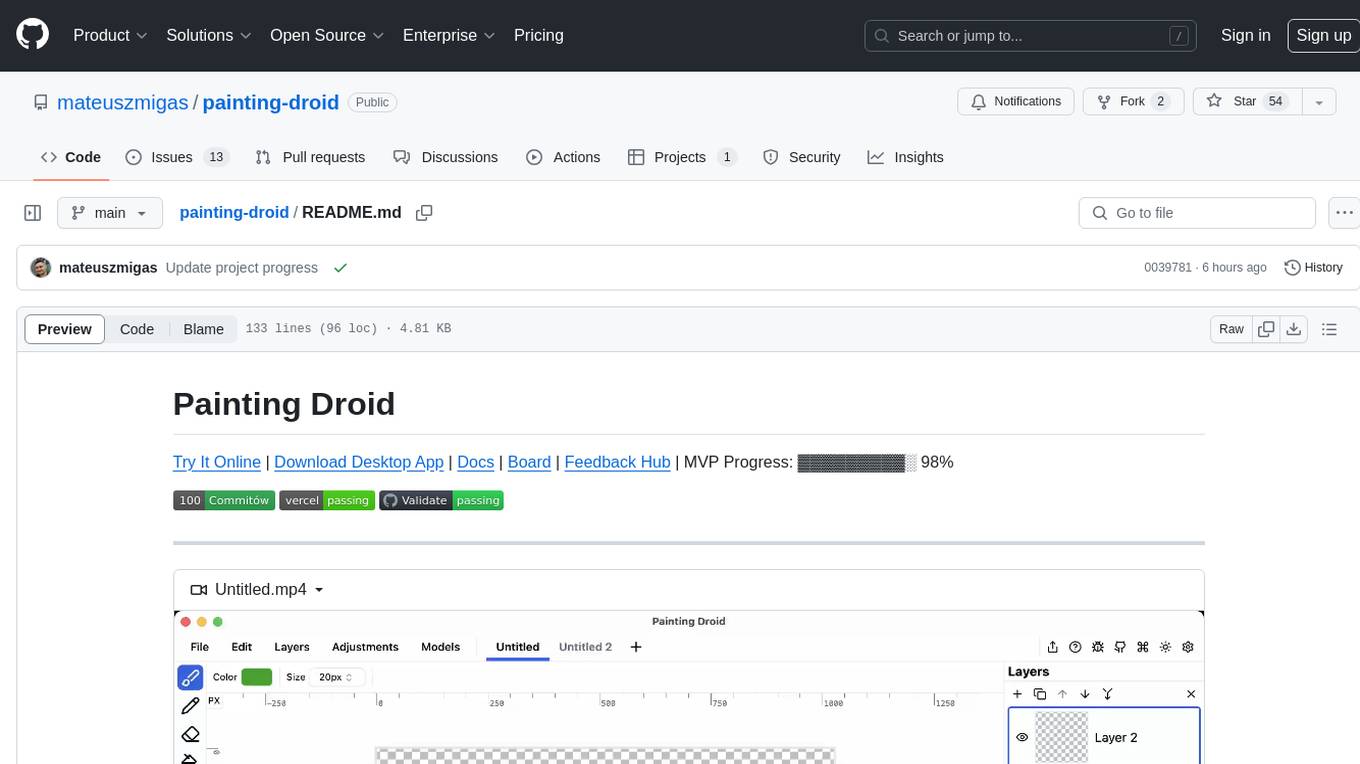

painting-droid

Painting Droid is an AI-powered cross-platform painting app inspired by MS Paint, expandable with plugins and open. It utilizes various AI models, from paid providers to self-hosted open-source models, as well as some lightweight ones built into the app. Features include regular painting app features, AI-generated content filling and augmentation, filters and effects, image manipulation, plugin support, and cross-platform compatibility.

For similar jobs

ap-plugin

AP-PLUGIN is an AI drawing plugin for the Yunzai series robot framework, allowing you to have a convenient AI drawing experience in the input box. It uses the open source Stable Diffusion web UI as the backend, deploys it for free, and generates a variety of images with richer functions.

99AI

99AI is a commercializable AI web application based on NineAI 2.4.2 (no authorization, no backdoors, no piracy, integrated front-end and back-end integration packages, supports Docker rapid deployment). The uncompiled source code is temporarily closed. Compared with the stable version, the development version is faster.

midjourney-proxy

Midjourney-proxy is a proxy for the Discord channel of MidJourney, enabling API-based calls for AI drawing. It supports Imagine instructions, adding image base64 as a placeholder, Blend and Describe commands, real-time progress tracking, Chinese prompt translation, prompt sensitive word pre-detection, user-token connection to WSS, multi-account configuration, and more. For more advanced features, consider using midjourney-proxy-plus, which includes Shorten, focus shifting, image zooming, local redrawing, nearly all associated button actions, Remix mode, seed value retrieval, account pool persistence, dynamic maintenance, /info and /settings retrieval, account settings configuration, Niji bot robot, InsightFace face replacement robot, and an embedded management dashboard.

comflowyspace

Comflowyspace is an open-source AI image and video generation tool that aims to provide a more user-friendly and accessible experience than existing tools like SDWebUI and ComfyUI. It simplifies the installation, usage, and workflow management of AI image and video generation, making it easier for users to create and explore AI-generated content. Comflowyspace offers features such as one-click installation, workflow management, multi-tab functionality, workflow templates, and an improved user interface. It also provides tutorials and documentation to lower the learning curve for users. The tool is designed to make AI image and video generation more accessible and enjoyable for a wider range of users.

comflowy

Comflowy is a community dedicated to providing comprehensive tutorials, fostering discussions, and building a database of workflows and models for ComfyUI and Stable Diffusion. Our mission is to lower the entry barrier for ComfyUI users, promote its mainstream adoption, and contribute to the growth of the AI generative graphics community.

stability-sdk

The stability-sdk is a Python package that provides a client implementation for interacting with the Stability API. This API allows users to generate images, upscale images, and animate images using a variety of different models and settings. The stability-sdk makes it easy to use the Stability API from Python code, and it provides a number of helpful features such as command line usage, support for multiple models, and the ability to filter artifacts by type.

awesome-generative-ai

A curated list of Generative AI projects, tools, artworks, and models

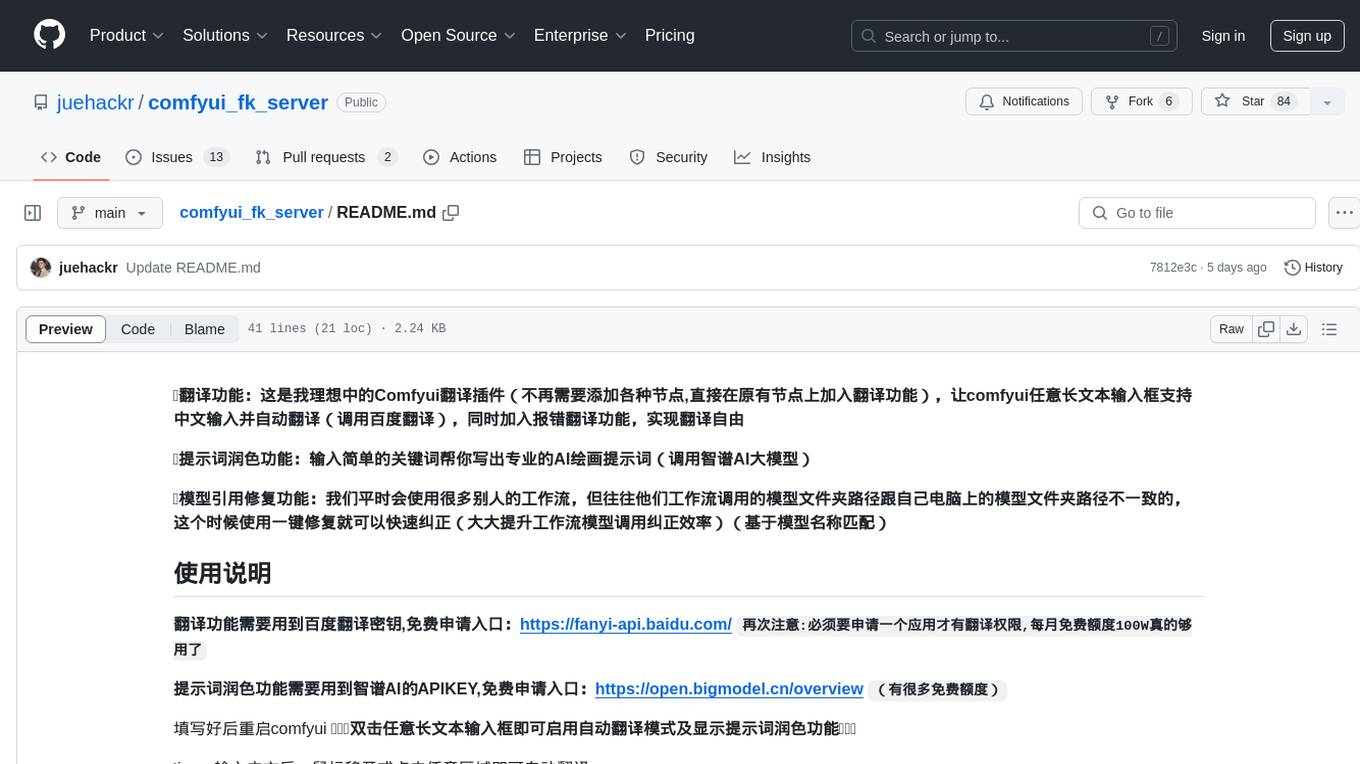

comfyui_fk_server

This is an ideal Comfyui translation plugin that allows any long text input box in Comfyui to support Chinese input and automatic translation (using Baidu translation). It also includes error correction translation feature and keyword polishing feature for generating professional AI drawing prompts (using Zhipu AI big model). Additionally, it provides a one-click fix feature for correcting model references in workflows, greatly improving workflow model call correction efficiency (based on model name matching). The plugin requires Baidu translation API key for translation functionality and Zhipu AI API key for keyword polishing functionality. After installation, users can enable automatic translation mode and keyword polishing feature by double-clicking any long text input box in Comfyui.