free-threaded-compatibility

A central repository to keep track of the status of work on and support for free-threaded CPython (see PEP 703), with a focus on the scientific and ML/AI ecosystem

Stars: 237

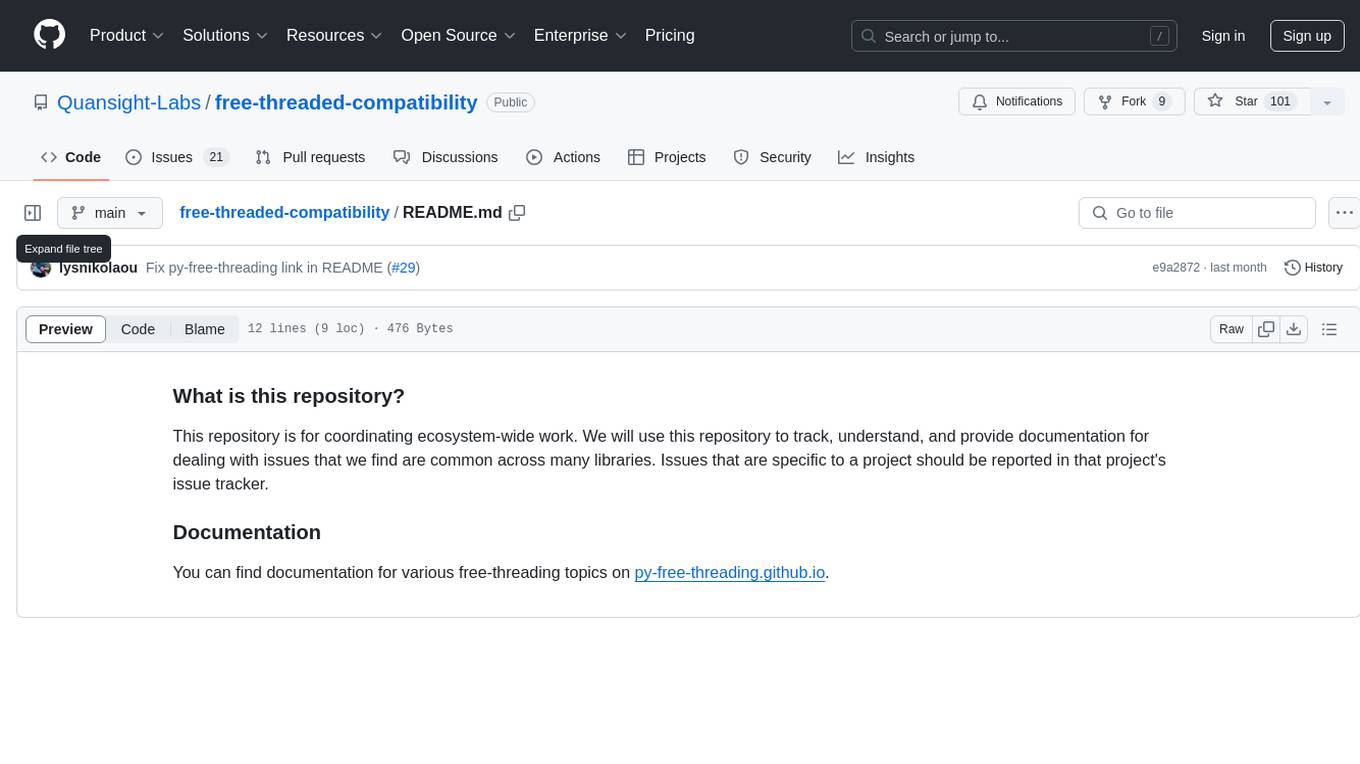

This repository serves as a platform for coordinating ecosystem-wide work related to free-threading topics in Python. It aims to track, understand, and provide documentation for common issues across multiple libraries. Specific project-related issues should be reported in the respective project's issue tracker. For detailed documentation on free-threading topics, visit py-free-threading.github.io.

README:

This repository is for coordinating ecosystem-wide work. We will use this repository to track, understand, and provide documentation for dealing with issues that we find are common across many libraries. Issues that are specific to a project should be reported in that project's issue tracker.

You can find documentation for various free-threading topics on py-free-threading.github.io.

You can find contribution instructions in the documentation.

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for free-threaded-compatibility

Similar Open Source Tools

free-threaded-compatibility

This repository serves as a platform for coordinating ecosystem-wide work related to free-threading topics in Python. It aims to track, understand, and provide documentation for common issues across multiple libraries. Specific project-related issues should be reported in the respective project's issue tracker. For detailed documentation on free-threading topics, visit py-free-threading.github.io.

paig

PAIG is an open-source project focused on protecting Generative AI applications by ensuring security, safety, and observability. It offers a versatile framework to address the latest security challenges and integrate point security solutions without rewriting applications. The project aims to provide a secure environment for developing and deploying GenAI applications.

dewhale

Dewhale is a GitHub-Powered AI tool designed for effortless development. It utilizes prompt engineering techniques under the GPT-4 model to issue commands, allowing users to generate code with lower usage costs and easy customization. The tool seamlessly integrates with GitHub, providing version control, code review, and collaborative features. Users can join discussions on the design philosophy of Dewhale and explore detailed instructions and examples for setting up and using the tool.

google.aip.dev

API Improvement Proposals (AIPs) are design documents that provide high-level, concise documentation for API development at Google. The goal of AIPs is to serve as the source of truth for API-related documentation and to facilitate discussion and consensus among API teams. AIPs are similar to Python's enhancement proposals (PEPs) and are organized into different areas within Google to accommodate historical differences in customs, styles, and guidance.

graphrag

The GraphRAG project is a data pipeline and transformation suite designed to extract meaningful, structured data from unstructured text using LLMs. It enhances LLMs' ability to reason about private data. The repository provides guidance on using knowledge graph memory structures to enhance LLM outputs, with a warning about the potential costs of GraphRAG indexing. It offers contribution guidelines, development resources, and encourages prompt tuning for optimal results. The Responsible AI FAQ addresses GraphRAG's capabilities, intended uses, evaluation metrics, limitations, and operational factors for effective and responsible use.

jentic-public-apis

The Jentic Public APIs repository aims to collate all knowledge about the world's APIs into a detailed, comprehensive, structured documentation catalog designed for use by AI. It focuses on standardized OpenAPI specifications, Arazzo workflows, associated tooling, evaluations, and RFCs for extensions to open formats. The project is in ALPHA stage and welcomes contributions to accelerate the effort of building an open knowledge foundation for AI agents.

examples

This repository contains a collection of sample applications and Jupyter Notebooks for hands-on experience with Pinecone vector databases and common AI patterns, tools, and algorithms. It includes production-ready examples for review and support, as well as learning-optimized examples for exploring AI techniques and building applications. Users can contribute, provide feedback, and collaborate to improve the resource.

aily-blockly

Aily Blockly is a blockly IDE under the Aily Project, providing AI-assisted programming capabilities for non-professional users. It aims to integrate numerous AI capabilities to help hardware developers develop more smoothly, ultimately achieving natural language programming. The software offers features like Engineering Project Management, Library Manager, Serial Debug Tool, AI Project Generation, AI Code Generation, AI Library Conversion, Development Board Configuration Generation, and Lightning Compilation Tool. It is currently in the alpha stage, suitable for prototype verification and educational teaching.

GenAI-Showcase

The Generative AI Use Cases Repository showcases a wide range of applications in generative AI, including Retrieval-Augmented Generation (RAG), AI Agents, and industry-specific use cases. It provides practical notebooks and guidance on utilizing frameworks such as LlamaIndex and LangChain, and demonstrates how to integrate models from leading AI research companies like Anthropic and OpenAI.

pearai-master

PearAI is an inventory that curates cutting-edge AI tools in one place, offering a unified interface for seamless tool integration. The repository serves as the conglomeration of all PearAI project repositories, including VSCode fork, AI chat functionalities, landing page, documentation, and server. Contributions are welcome through quests and issue tackling, with the project stack including TypeScript/Electron.js, Next.js/React, Python FastAPI, and Axiom for logging/telemetry.

atomic_agents

Atomic Agents is a modular and extensible framework designed for creating powerful applications. It follows the principles of Atomic Design, emphasizing small and single-purpose components. Leveraging Pydantic for data validation and serialization, the framework offers a set of tools and agents that can be combined to build AI applications. It depends on the Instructor package and supports various APIs like OpenAI, Cohere, Anthropic, and Gemini. Atomic Agents is suitable for developers looking to create AI agents with a focus on modularity and flexibility.

bpf-developer-tutorial

This is a development tutorial for eBPF based on CO-RE (Compile Once, Run Everywhere). It provides practical eBPF development practices from beginner to advanced, including basic concepts, code examples, and real-world applications. The tutorial focuses on eBPF examples in observability, networking, security, and more. It aims to help eBPF application developers quickly grasp eBPF development methods and techniques through examples in languages such as C, Go, and Rust. The tutorial is structured with independent eBPF tool examples in each directory, covering topics like kprobes, fentry, opensnoop, uprobe, sigsnoop, execsnoop, exitsnoop, runqlat, hardirqs, and more. The project is based on libbpf and frameworks like libbpf, Cilium, libbpf-rs, and eunomia-bpf for development.

autoMate

autoMate is an AI-powered local automation tool designed to help users automate repetitive tasks and reclaim their time. It leverages AI and RPA technology to operate computer interfaces, understand screen content, make autonomous decisions, and support local deployment for data security. With natural language task descriptions, users can easily automate complex workflows without the need for programming knowledge. The tool aims to transform work by freeing users from mundane activities and allowing them to focus on tasks that truly create value, enhancing efficiency and liberating creativity.

xef

xef.ai is a one-stop library designed to bring the power of modern AI to applications and services. It offers integration with Large Language Models (LLM), image generation, and other AI services. The library is packaged in two layers: core libraries for basic AI services integration and integrations with other libraries. xef.ai aims to simplify the transition to modern AI for developers by providing an idiomatic interface, currently supporting Kotlin. Inspired by LangChain and Hugging Face, xef.ai may transmit source code and user input data to third-party services, so users should review privacy policies and take precautions. Libraries are available in Maven Central under the `com.xebia` group, with `xef-core` as the core library. Developers can add these libraries to their projects and explore examples to understand usage.

partykit

PartyServer is a repository containing libraries, examples, and documentation for building real-time apps with Cloudflare Workers. It includes core libraries for working with Durable Objects, WebSockets, Yjs support for real-time collaborative editing, pubsub at scale, state synchronization, and task scheduling. The repository also offers small examples in the `fixtures` directory to demonstrate usage. PartyServer aims to simplify the development of real-time applications by providing enhanced features and utilities for working with various technologies.

DevOpsGPT

DevOpsGPT is an AI-driven software development automation solution that combines Large Language Models (LLM) with DevOps tools to convert natural language requirements into working software. It improves development efficiency by eliminating the need for tedious requirement documentation, shortens development cycles, reduces communication costs, and ensures high-quality deliverables. The Enterprise Edition offers features like existing project analysis, professional model selection, and support for more DevOps platforms. The tool automates requirement development, generates interface documentation, provides pseudocode based on existing projects, facilitates code refinement, enables continuous integration, and supports software version release. Users can run DevOpsGPT with source code or Docker, and the tool comes with limitations in precise documentation generation and understanding existing project code. The product roadmap includes accurate requirement decomposition, rapid import of development requirements, and integration of more software engineering and professional tools for efficient software development tasks under AI planning and execution.

For similar tasks

free-threaded-compatibility

This repository serves as a platform for coordinating ecosystem-wide work related to free-threading topics in Python. It aims to track, understand, and provide documentation for common issues across multiple libraries. Specific project-related issues should be reported in the respective project's issue tracker. For detailed documentation on free-threading topics, visit py-free-threading.github.io.

For similar jobs

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

ai-on-gke

This repository contains assets related to AI/ML workloads on Google Kubernetes Engine (GKE). Run optimized AI/ML workloads with Google Kubernetes Engine (GKE) platform orchestration capabilities. A robust AI/ML platform considers the following layers: Infrastructure orchestration that support GPUs and TPUs for training and serving workloads at scale Flexible integration with distributed computing and data processing frameworks Support for multiple teams on the same infrastructure to maximize utilization of resources

tidb

TiDB is an open-source distributed SQL database that supports Hybrid Transactional and Analytical Processing (HTAP) workloads. It is MySQL compatible and features horizontal scalability, strong consistency, and high availability.

nvidia_gpu_exporter

Nvidia GPU exporter for prometheus, using `nvidia-smi` binary to gather metrics.

tracecat

Tracecat is an open-source automation platform for security teams. It's designed to be simple but powerful, with a focus on AI features and a practitioner-obsessed UI/UX. Tracecat can be used to automate a variety of tasks, including phishing email investigation, evidence collection, and remediation plan generation.

openinference

OpenInference is a set of conventions and plugins that complement OpenTelemetry to enable tracing of AI applications. It provides a way to capture and analyze the performance and behavior of AI models, including their interactions with other components of the application. OpenInference is designed to be language-agnostic and can be used with any OpenTelemetry-compatible backend. It includes a set of instrumentations for popular machine learning SDKs and frameworks, making it easy to add tracing to your AI applications.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM: * Set LLM usage limits for users on different pricing tiers * Track LLM usage on a per user and per organization basis * Block or redact requests containing PIIs * Improve LLM reliability with failovers, retries and caching * Distribute API keys with rate limits and cost limits for internal development/production use cases * Distribute API keys with rate limits and cost limits for students

kong

Kong, or Kong API Gateway, is a cloud-native, platform-agnostic, scalable API Gateway distinguished for its high performance and extensibility via plugins. It also provides advanced AI capabilities with multi-LLM support. By providing functionality for proxying, routing, load balancing, health checking, authentication (and more), Kong serves as the central layer for orchestrating microservices or conventional API traffic with ease. Kong runs natively on Kubernetes thanks to its official Kubernetes Ingress Controller.