chief

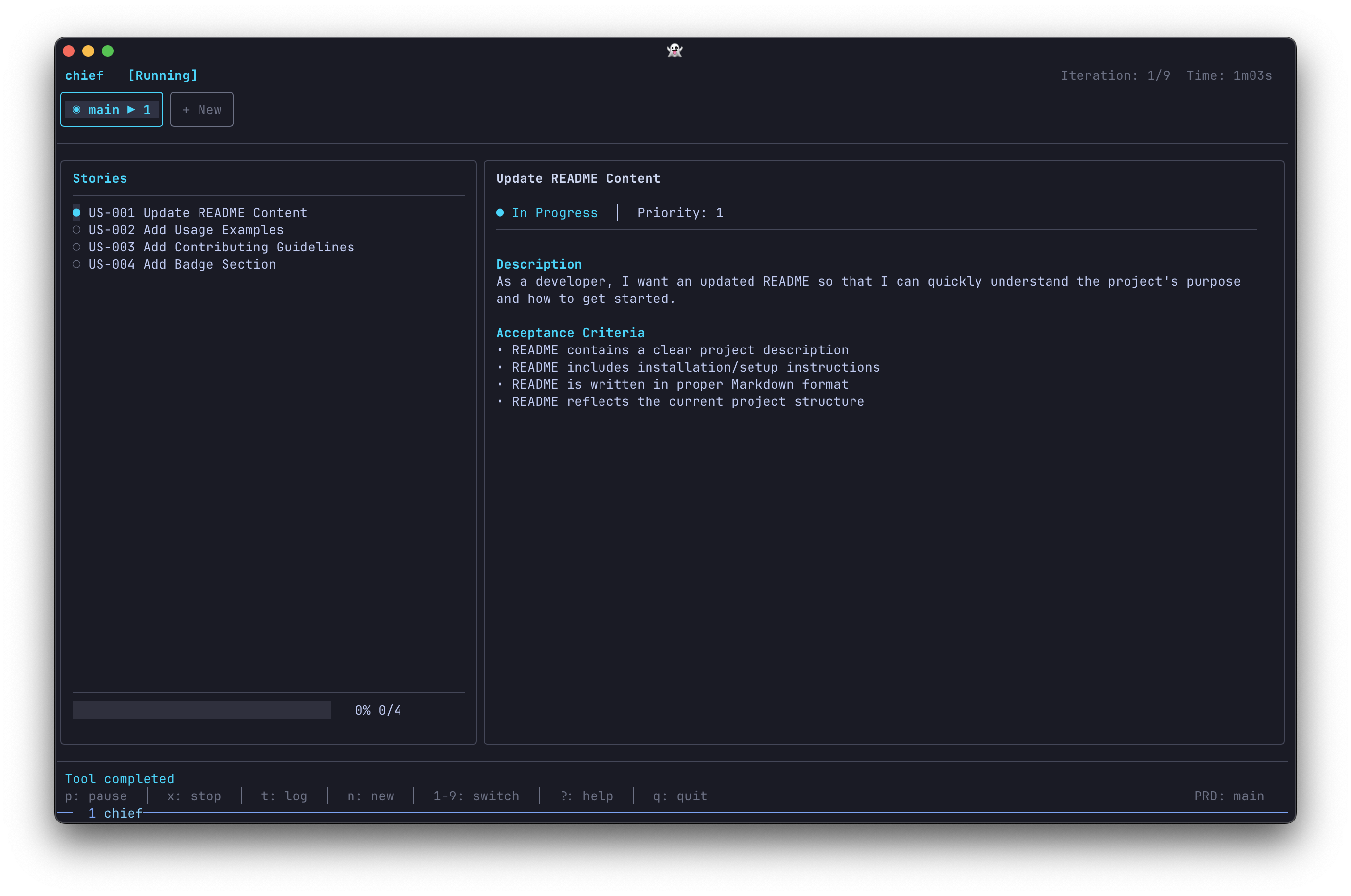

Build big projects with Claude. Chief breaks your work into tasks and runs Claude Code in a loop until they're done.

Stars: 92

Chief is a tool designed to help users build big projects by breaking work into tasks and running Claude Code in a loop until they're done. It allows users to describe their projects as a series of tasks, with Chief running Claude one task at a time and committing changes per task to maintain a clean git history. Chief is ideal for managing large projects efficiently and collaboratively.

README:

Build big projects with Claude. Chief breaks your work into tasks and runs Claude Code in a loop until they're done.

brew install minicodemonkey/chief/chiefOr via install script:

curl -fsSL https://raw.githubusercontent.com/MiniCodeMonkey/chief/refs/heads/main/install.sh | sh# Create a new project

chief new

# Launch the TUI and press 's' to start

chiefChief runs Claude in a Ralph Wiggum loop: each iteration starts with a fresh context window, but progress is persisted between runs. This lets Claude work through large projects without hitting context limits.

- Describe your project as a series of tasks

- Chief runs Claude in a loop, one task at a time

- One commit per task — clean git history, easy to review

See the documentation for details.

- Claude Code CLI installed and authenticated

MIT

- snarktank/ralph — The original Ralph implementation that inspired this project

- Geoffrey Huntley — For coining the "Ralph Wiggum loop" pattern

- Bubble Tea — TUI framework

- Lip Gloss — Terminal styling

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for chief

Similar Open Source Tools

For similar tasks

chief

Chief is a tool designed to help users build big projects by breaking work into tasks and running Claude Code in a loop until they're done. It allows users to describe their projects as a series of tasks, with Chief running Claude one task at a time and committing changes per task to maintain a clean git history. Chief is ideal for managing large projects efficiently and collaboratively.

geti-sdk

The Intel® Geti™ SDK is a python package that enables teams to rapidly develop AI models by easing the complexities of model development and enhancing collaboration between teams. It provides tools to interact with an Intel® Geti™ server via the REST API, allowing for project creation, downloading, uploading, deploying for local inference with OpenVINO, setting project and model configuration, launching and monitoring training jobs, and media upload and prediction. The SDK also includes tutorial-style Jupyter notebooks demonstrating its usage.

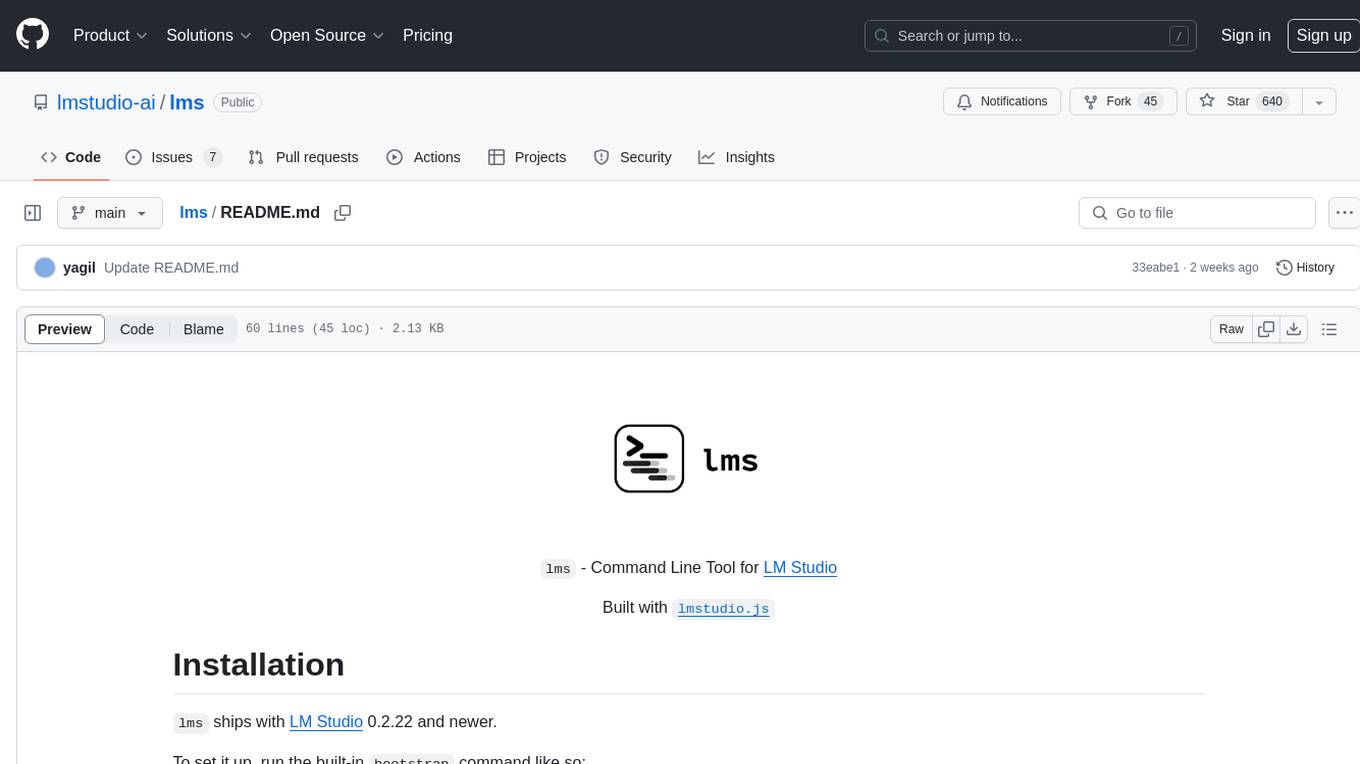

lms

The `lms` Command Line Tool for LM Studio is a powerful tool built with `lmstudio.js` that allows users to interact with LM Studio functionalities through the command line interface. It provides a wide range of commands for managing models, starting and stopping servers, creating projects, and streaming logs. Users can easily bootstrap the tool and access detailed information about each subcommand. The tool is designed to enhance the user experience and streamline workflows when working with LM Studio.

geti-sdk

The Intel® Geti™ SDK is a python package that enables teams to rapidly develop AI models by easing the complexities of model development and fostering collaboration. It provides tools to interact with an Intel® Geti™ server via the REST API, allowing for project creation, downloading, uploading, deploying for local inference with OpenVINO, configuration management, training job monitoring, media upload, and prediction. The repository also includes tutorial-style Jupyter notebooks demonstrating SDK usage.

modelence

Modelence is an all-in-one TypeScript framework for startups shipping production apps, aiming to eliminate boilerplate for standard web app features. It provides authentication, database setup, cron jobs, AI observability, and email functionalities. Modelence requires Node.js 20.20 or higher. Developers can create projects, install dependencies, and start the development server quickly. For local development, contributors can clone the repository, install dependencies, build the package, and test changes in a real application. Modelence offers examples for further guidance.

For similar jobs

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

ai-on-gke

This repository contains assets related to AI/ML workloads on Google Kubernetes Engine (GKE). Run optimized AI/ML workloads with Google Kubernetes Engine (GKE) platform orchestration capabilities. A robust AI/ML platform considers the following layers: Infrastructure orchestration that support GPUs and TPUs for training and serving workloads at scale Flexible integration with distributed computing and data processing frameworks Support for multiple teams on the same infrastructure to maximize utilization of resources

tidb

TiDB is an open-source distributed SQL database that supports Hybrid Transactional and Analytical Processing (HTAP) workloads. It is MySQL compatible and features horizontal scalability, strong consistency, and high availability.

nvidia_gpu_exporter

Nvidia GPU exporter for prometheus, using `nvidia-smi` binary to gather metrics.

tracecat

Tracecat is an open-source automation platform for security teams. It's designed to be simple but powerful, with a focus on AI features and a practitioner-obsessed UI/UX. Tracecat can be used to automate a variety of tasks, including phishing email investigation, evidence collection, and remediation plan generation.

openinference

OpenInference is a set of conventions and plugins that complement OpenTelemetry to enable tracing of AI applications. It provides a way to capture and analyze the performance and behavior of AI models, including their interactions with other components of the application. OpenInference is designed to be language-agnostic and can be used with any OpenTelemetry-compatible backend. It includes a set of instrumentations for popular machine learning SDKs and frameworks, making it easy to add tracing to your AI applications.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM: * Set LLM usage limits for users on different pricing tiers * Track LLM usage on a per user and per organization basis * Block or redact requests containing PIIs * Improve LLM reliability with failovers, retries and caching * Distribute API keys with rate limits and cost limits for internal development/production use cases * Distribute API keys with rate limits and cost limits for students

kong

Kong, or Kong API Gateway, is a cloud-native, platform-agnostic, scalable API Gateway distinguished for its high performance and extensibility via plugins. It also provides advanced AI capabilities with multi-LLM support. By providing functionality for proxying, routing, load balancing, health checking, authentication (and more), Kong serves as the central layer for orchestrating microservices or conventional API traffic with ease. Kong runs natively on Kubernetes thanks to its official Kubernetes Ingress Controller.