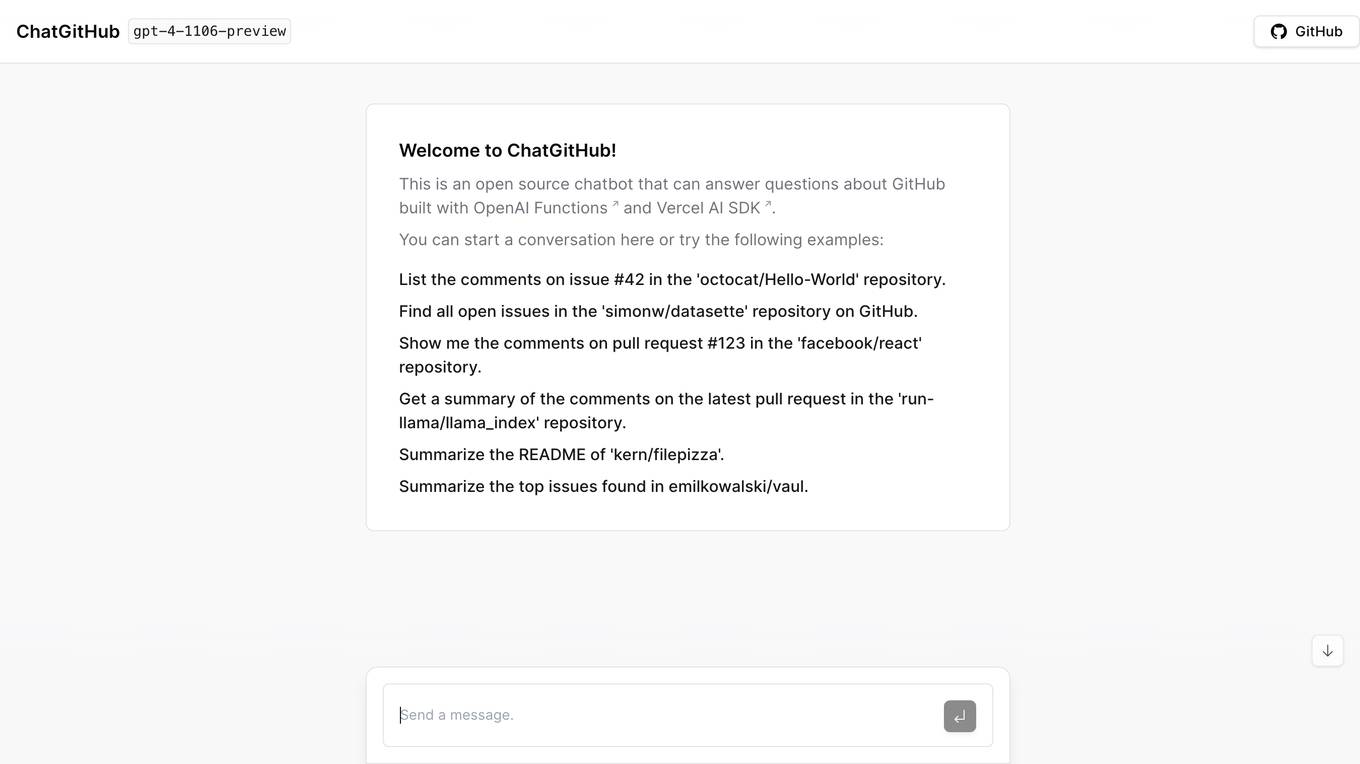

Best AI tools for <refer software products>

20 - AI tool Sites

Pare

Pare is a platform that allows users to earn commissions by referring software products. Users can create and share unique links to earn commissions on successful referrals. Pare is currently in private beta.

404 Error Page

The website displays a '404: NOT_FOUND' error message indicating that the deployment cannot be found. It provides a code 'DEPLOYMENT_NOT_FOUND' and an ID 'sin1::lx7ln-1719944180468-135ab3b8e59a'. Users are directed to refer to the documentation for further information and troubleshooting.

Error 404 Page

The website displays a '404: NOT_FOUND' error message indicating that the deployment cannot be found. It provides a code 'DEPLOYMENT_NOT_FOUND' and an ID 'sin1::2ssqd-1718650107450-b8805d347219'. Users are directed to refer to the documentation for further information and troubleshooting.

404 Error Page

The website displays a '404: NOT_FOUND' error message indicating that the deployment cannot be found. It provides a code 'DEPLOYMENT_NOT_FOUND' and an ID 'sin1::gstqd-1719857339376-c625860a2ca4'. Users are directed to refer to the documentation for further information and troubleshooting.

404 Error Notifier

The website displays a 404 error message indicating that the deployment cannot be found. It provides a code (DEPLOYMENT_NOT_FOUND) and an ID (sin1::2vhpp-1719857711860-edebb7852146) for reference. Users are directed to check the documentation for further information and troubleshooting.

404 Error Page

The website displays a '404: NOT_FOUND' error message indicating that the deployment cannot be found. It provides a code 'DEPLOYMENT_NOT_FOUND' and an ID 'sin1::wd6pg-1719338307949-651a189e1496'. Users are directed to refer to the documentation for further information and troubleshooting.

404 Error Assistant

The website displays a 404 error message indicating that the deployment cannot be found. It provides a code (DEPLOYMENT_NOT_FOUND) and an ID (sin1::vjjbl-1719339872227-523e6fe392b0). Users are directed to refer to the documentation for further information and troubleshooting.

404 Error Page

The website displays a 404 error message indicating that the deployment cannot be found. Users encountering this error are directed to refer to the documentation for more information and troubleshooting assistance.

404 Error Page

The website displays a 404 error message indicating that the deployment cannot be found. Users encountering this error are advised to refer to the documentation for more information and troubleshooting assistance.

Learn Playwright

Learn Playwright is a comprehensive platform providing resources for learning end-to-end testing using the Playwright automation framework. It offers a blog with in-depth subjects about end-to-end testing, an 'Ask AI' feature for querying ChatGPT about Playwright, and a Dev Tools section that serves as an all-in-one toolbox for QA engineers. Additionally, users can explore QA job opportunities, access answered questions about Playwright, browse a Discord forum archive, watch tutorials and conference talks, utilize a browser extension for generating Playwright locators, and refer to a QA Wiki for definitions of common testing terms. With a user-friendly interface and valuable content, Learn Playwright aims to enhance the testing experience for professionals and enthusiasts alike.

Error Message 402: PAYMENT_REQUIRED

The website page displays a 402: PAYMENT_REQUIRED error message indicating that the deployment has been disabled. It suggests that the connection to Vercel is working correctly but the deployment is disabled. The error code provided is DEPLOYMENT_DISABLED with an ID of sin1::c9knz-1719339218649-72a06805f794. It advises visitors to contact the website owner or try again later, and owners to refer to the documentation section for further information.

LLMChess

LLMChess is a web-based chess game that utilizes large language models (LLMs) to power the gameplay. Players can select the LLM model they wish to play against, and the game will commence once the "Start" button is clicked. The game logs are displayed in a black-bordered pane on the right-hand side of the screen. LLMChess is compatible with the Google Chrome browser. For more information on the game's functionality and participation guidelines, please refer to the provided link.

Winston AI

Winston AI is an AI-powered platform for detecting AI-generated content, and it offers an affiliate program. Users can sign up to refer paying customers and earn a 40% commission on all payments within the first 12 months. The program prohibits paid advertisements that may compete with Winston AI's own marketing efforts. The affiliate program is powered by Rewardful, which handles referral tracking for affiliates who promote the software.
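To make the commission terms above concrete, here is a minimal arithmetic sketch. It assumes the 12-month window is counted from the referred customer's sign-up and that amounts are tracked in cents; the function name, the payment format, and the example price are hypothetical and not taken from Winston AI or Rewardful.

```python
COMMISSION_RATE_PERCENT = 40  # rate quoted in the listing
ELIGIBLE_MONTHS = 12          # window quoted in the listing


def commission_cents(payments):
    """Return the commission in cents.

    payments: iterable of (months_since_signup, amount_cents) pairs.
    Only payments made in months 0..11 after sign-up are counted
    (an assumption about how the 12-month window is measured).
    """
    eligible = sum(
        amount for month, amount in payments
        if 0 <= month < ELIGIBLE_MONTHS
    )
    # Integer arithmetic avoids floating-point rounding on currency.
    return eligible * COMMISSION_RATE_PERCENT // 100


# Hypothetical customer paying 1800 cents a month for 14 months:
# only the first 12 payments earn commission.
payments = [(month, 1800) for month in range(14)]
print(commission_cents(payments))
```

Under these assumptions, the two payments after month 12 contribute nothing to the total.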

OpenResty

The website is currently displaying a '403 Forbidden' error message, which indicates that the server understood the request but refuses to authorize it. This error is typically caused by insufficient permission to access the requested resource. The 'openresty' mentioned in the message refers to a web platform based on NGINX and LuaJIT, known for its high performance and scalability in handling web traffic. It is commonly used for building dynamic web applications and APIs.

403 Forbidden OpenResty

The website is currently displaying a '403 Forbidden' error message, which indicates that the server understood the request but refuses to authorize it. This error is typically caused by insufficient permissions or misconfiguration on the server side. The 'openresty' mentioned in the message refers to a web platform based on NGINX and LuaJIT, often used for building high-performance web applications. It seems that the website is currently inaccessible due to server-side issues.

OpenResty

The website is currently displaying a '403 Forbidden' error message, which indicates that the server understood the request but refuses to authorize it. This error is often caused by incorrect file permissions or misconfigured directives in the server. The 'openresty' mentioned in the message refers to a web platform based on NGINX and LuaJIT, commonly used for building dynamic web applications. It is important to troubleshoot and resolve the underlying issue causing the 403 error to restore access to the website.

OpenResty

The website is currently displaying a '403 Forbidden' error, which indicates that the server understood the request but refuses to authorize it. This error message is typically encountered when trying to access a webpage or resource that is restricted or unavailable to the user. The 'openresty' mentioned in the text refers to a web platform based on NGINX and LuaJIT, often used for building high-performance web applications. It seems that the website is using OpenResty as its server software.

OpenResty

The website is currently displaying a '403 Forbidden' error message, which indicates that the server understood the request but refuses to authorize it. This error is often due to insufficient permissions or access restrictions on the server side. The 'openresty' mentioned in the message refers to a web platform based on NGINX and LuaJIT, commonly used for building dynamic web applications. The website may be experiencing technical issues or undergoing maintenance.
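The error pages listed above quote standard HTTP status codes (402, 403, and 404). As a small illustrative sketch, Python's standard `http.HTTPStatus` enum maps each numeric code to its symbolic name and reason phrase. The helper name `describe_status` is hypothetical, and platform-specific codes such as `DEPLOYMENT_NOT_FOUND` are not part of HTTP and are not covered here.

```python
from http import HTTPStatus


def describe_status(code: int) -> str:
    """Format a standard HTTP status code as 'CODE: NAME (phrase)'."""
    status = HTTPStatus(code)  # raises ValueError for unknown codes
    return f"{status.value}: {status.name} ({status.phrase})"


for code in (402, 403, 404):
    print(describe_status(code))
# 402: PAYMENT_REQUIRED (Payment Required)
# 403: FORBIDDEN (Forbidden)
# 404: NOT_FOUND (Not Found)
```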

Superbooking.ai

Superbooking.ai is an AI-powered platform revolutionizing bookings for businesses and consumers. It offers a comprehensive solution for scheduling and managing a variety of beauty services, providing tailor-made, personalized services. The platform integrates Web2 and Web3 functionalities to ensure a seamless and trustworthy booking experience, leveraging the benefits of both worlds. With advanced tools and AI capabilities, Superbooking.ai aims to empower businesses and consumers alike by simplifying appointment scheduling, payments, and referral rewards.

postai.pro

postai.pro is a domain that is currently up for sale. The website serves as a platform for the sale of the domain postai.pro. It is generated by Sedo Domain Parking, and the domain owner is looking to sell it. The site provides information about the domain and offers it for purchase. Users interested in acquiring the domain can find details and contact the owner through the website. Sedo, the platform hosting the domain, does not have any direct relationship with third-party advertisers. The website does not endorse or recommend any specific services or trademarks. For more information, users can refer to the privacy policy on the site.

20 - Open Source AI Tools

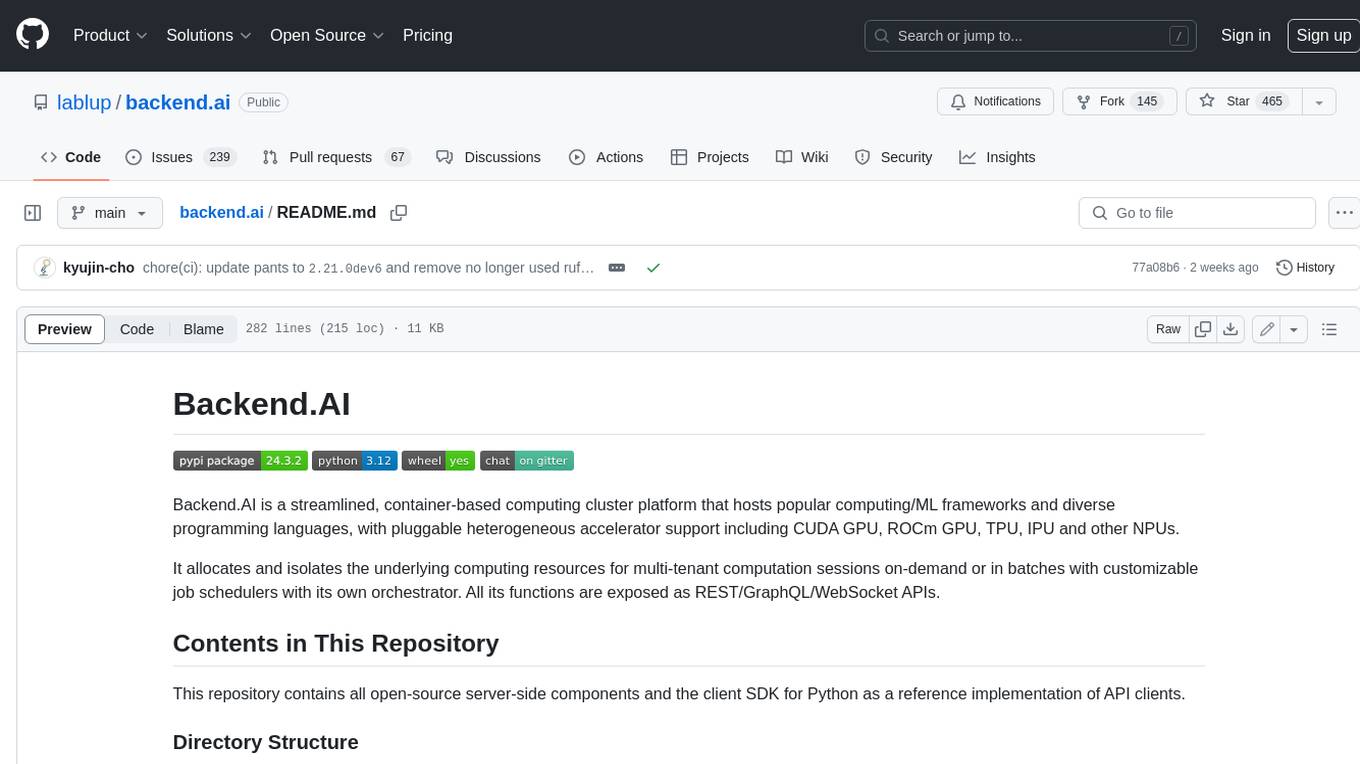

backend.ai

Backend.AI is a streamlined, container-based computing cluster platform that hosts popular computing/ML frameworks and diverse programming languages, with pluggable heterogeneous accelerator support including CUDA GPU, ROCm GPU, TPU, IPU and other NPUs. It allocates and isolates the underlying computing resources for multi-tenant computation sessions on-demand or in batches with customizable job schedulers with its own orchestrator. All its functions are exposed as REST/GraphQL/WebSocket APIs.

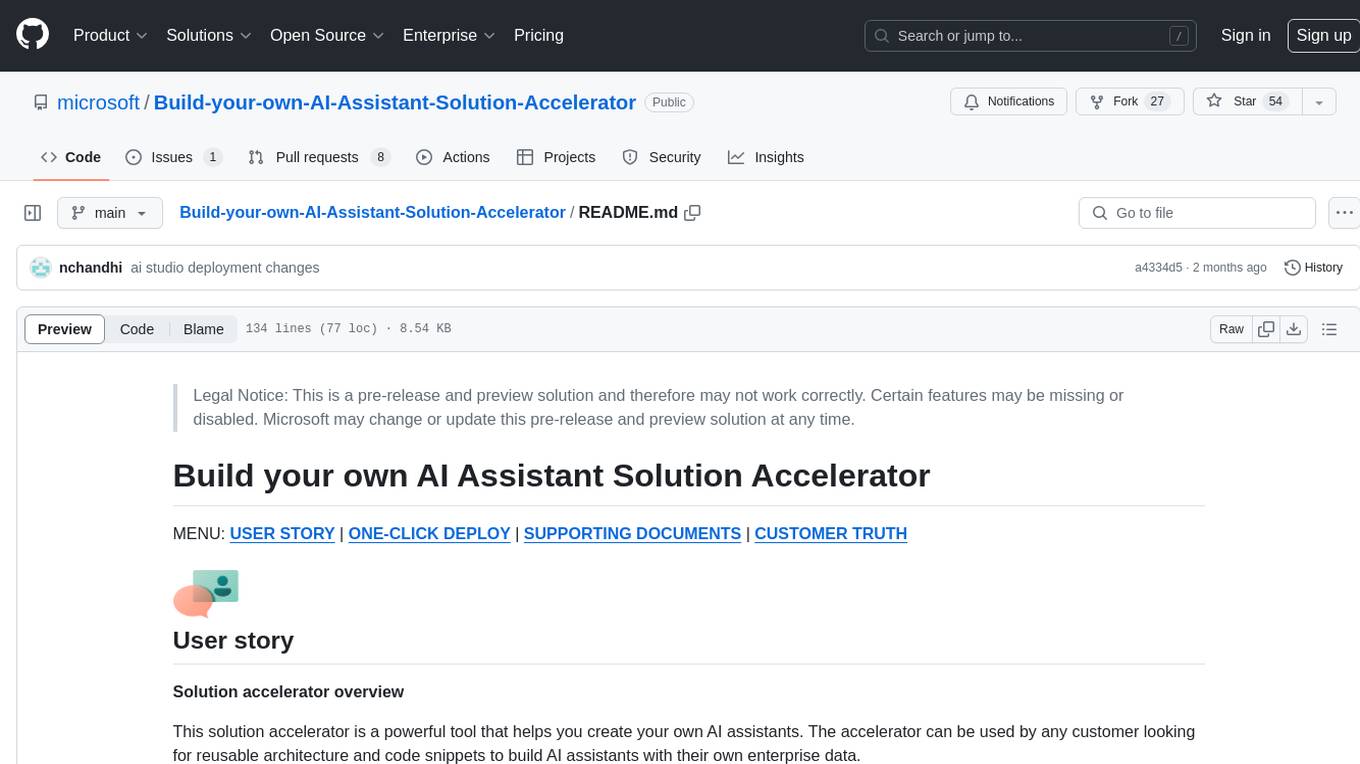

Build-your-own-AI-Assistant-Solution-Accelerator

Build-your-own-AI-Assistant-Solution-Accelerator is a pre-release, preview solution that helps users create their own AI assistants. It leverages Azure OpenAI Service, Azure AI Search, and Microsoft Fabric to identify, summarize, and categorize unstructured information. Users can find relevant articles and grants, generate grant applications, and export them as PDF or Word documents. The solution accelerator provides reusable architecture and code snippets for building AI assistants with enterprise data. Its sample scenario targets researchers who want to explore flu vaccine studies and grants to accelerate grant proposal submissions.

chat-with-your-data-solution-accelerator

Chat with your data using OpenAI and AI Search. This solution accelerator uses an Azure OpenAI GPT model and an Azure AI Search index generated from your data, which is integrated into a web application to provide a natural language interface, including speech-to-text functionality, for search queries. Users can drag and drop files, point to storage, and take care of technical setup to transform documents. There is a web app that users can create in their own subscription with security and authentication.

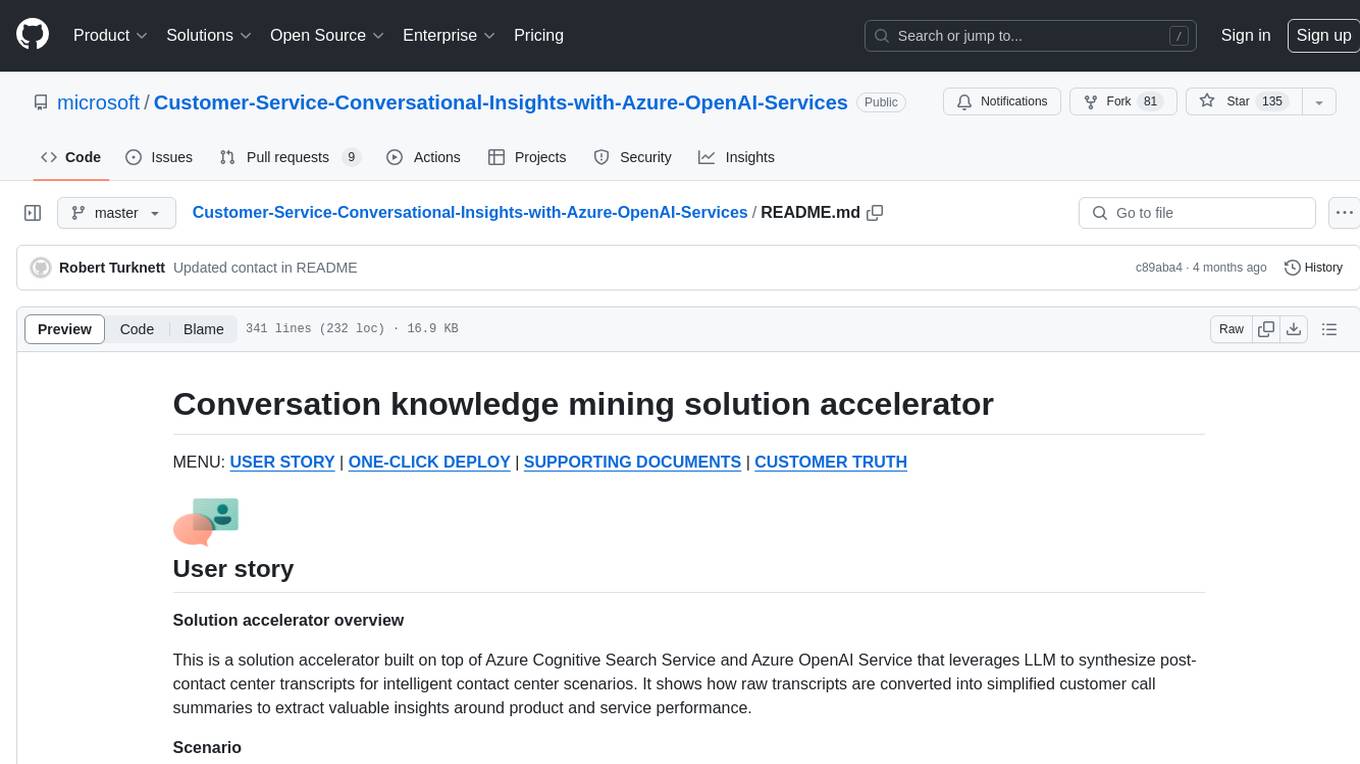

Customer-Service-Conversational-Insights-with-Azure-OpenAI-Services

This solution accelerator is built on Azure Cognitive Search Service and Azure OpenAI Service to synthesize post-contact center transcripts for intelligent contact center scenarios. It converts raw transcripts into customer call summaries to extract insights around product and service performance. Key features include conversation summarization, key phrase extraction, speech-to-text transcription, sensitive information extraction, sentiment analysis, and opinion mining. The tool enables data professionals to quickly analyze call logs for improvement in contact center operations.

intel-extension-for-transformers

Intel® Extension for Transformers is an innovative toolkit designed to accelerate GenAI/LLM everywhere with the optimal performance of Transformer-based models on various Intel platforms, including Intel Gaudi2, Intel CPU, and Intel GPU. The toolkit provides the following key features and examples:

* Seamless user experience of model compressions on Transformer-based models by extending [Hugging Face transformers](https://github.com/huggingface/transformers) APIs and leveraging [Intel® Neural Compressor](https://github.com/intel/neural-compressor)
* Advanced software optimizations and unique compression-aware runtime (released with NeurIPS 2022's paper [Fast Distilbert on CPUs](https://arxiv.org/abs/2211.07715) and [QuaLA-MiniLM: a Quantized Length Adaptive MiniLM](https://arxiv.org/abs/2210.17114), and NeurIPS 2021's paper [Prune Once for All: Sparse Pre-Trained Language Models](https://arxiv.org/abs/2111.05754))
* Optimized Transformer-based model packages such as [Stable Diffusion](examples/huggingface/pytorch/text-to-image/deployment/stable_diffusion), [GPT-J-6B](examples/huggingface/pytorch/text-generation/deployment), [GPT-NEOX](examples/huggingface/pytorch/language-modeling/quantization#2-validated-model-list), [BLOOM-176B](examples/huggingface/pytorch/language-modeling/inference#BLOOM-176B), [T5](examples/huggingface/pytorch/summarization/quantization#2-validated-model-list), [Flan-T5](examples/huggingface/pytorch/summarization/quantization#2-validated-model-list), and end-to-end workflows such as [SetFit-based text classification](docs/tutorials/pytorch/text-classification/SetFit_model_compression_AGNews.ipynb) and [document level sentiment analysis (DLSA)](workflows/dlsa)
* [NeuralChat](intel_extension_for_transformers/neural_chat), a customizable chatbot framework to create your own chatbot within minutes by leveraging a rich set of [plugins](https://github.com/intel/intel-extension-for-transformers/blob/main/intel_extension_for_transformers/neural_chat/docs/advanced_features.md) such as [Knowledge Retrieval](./intel_extension_for_transformers/neural_chat/pipeline/plugins/retrieval/README.md), [Speech Interaction](./intel_extension_for_transformers/neural_chat/pipeline/plugins/audio/README.md), [Query Caching](./intel_extension_for_transformers/neural_chat/pipeline/plugins/caching/README.md), and [Security Guardrail](./intel_extension_for_transformers/neural_chat/pipeline/plugins/security/README.md). This framework supports Intel Gaudi2/CPU/GPU.
* [Inference](https://github.com/intel/neural-speed/tree/main) of Large Language Model (LLM) in pure C/C++ with weight-only quantization kernels for Intel CPU and Intel GPU (TBD), supporting [GPT-NEOX](https://github.com/intel/neural-speed/tree/main/neural_speed/models/gptneox), [LLAMA](https://github.com/intel/neural-speed/tree/main/neural_speed/models/llama), [MPT](https://github.com/intel/neural-speed/tree/main/neural_speed/models/mpt), [FALCON](https://github.com/intel/neural-speed/tree/main/neural_speed/models/falcon), [BLOOM-7B](https://github.com/intel/neural-speed/tree/main/neural_speed/models/bloom), [OPT](https://github.com/intel/neural-speed/tree/main/neural_speed/models/opt), [ChatGLM2-6B](https://github.com/intel/neural-speed/tree/main/neural_speed/models/chatglm), [GPT-J-6B](https://github.com/intel/neural-speed/tree/main/neural_speed/models/gptj), and [Dolly-v2-3B](https://github.com/intel/neural-speed/tree/main/neural_speed/models/gptneox). It supports the AMX, VNNI, AVX512F, and AVX2 instruction sets. We've boosted the performance of Intel CPUs, with a particular focus on the 4th generation Intel Xeon Scalable processor, codenamed [Sapphire Rapids](https://www.intel.com/content/www/us/en/products/docs/processors/xeon-accelerated/4th-gen-xeon-scalable-processors.html).

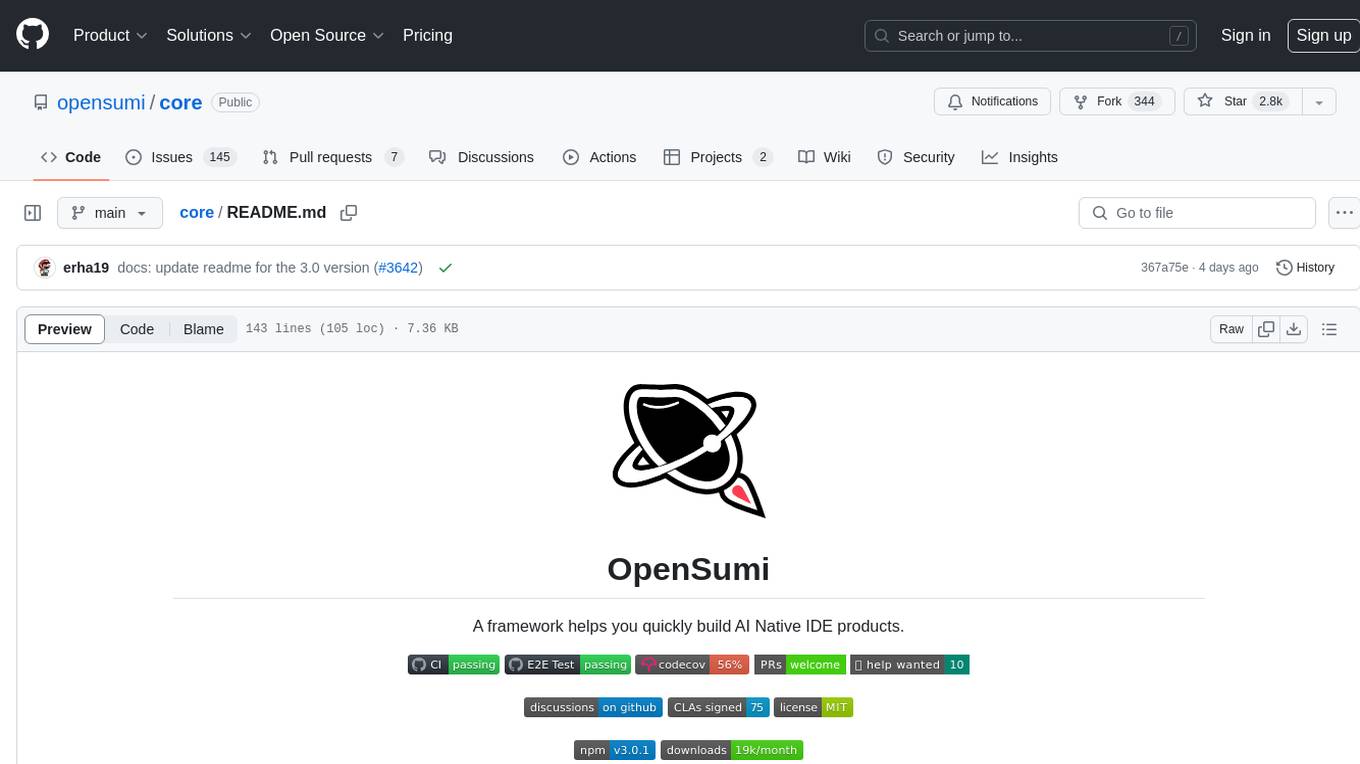

core

OpenSumi is a framework designed to help users quickly build AI Native IDE products. It provides a set of tools and templates for creating Cloud IDEs, Desktop IDEs based on Electron, the CodeBlitz web IDE framework, a Lite Web IDE in the browser, and Mini-App-like IDEs. The framework also offers reference documentation and a detailed guide on contributing to the project. OpenSumi encourages contributions from the community and provides a platform for users to report bugs, contribute code, or improve documentation. The project is licensed under the MIT license and contains third-party code under other open source licenses.

labelbox-python

Labelbox is a data-centric AI platform for enterprises to develop, optimize, and use AI to solve problems and power new products and services. Enterprises use Labelbox to curate data, generate high-quality human feedback data for computer vision and LLMs, evaluate model performance, and automate tasks by combining AI and human-centric workflows. The academic & research community uses Labelbox for cutting-edge AI research.

InvokeAI

InvokeAI is a leading creative engine built to empower professionals and enthusiasts alike. Generate and create stunning visual media using the latest AI-driven technologies. InvokeAI offers an industry leading Web Interface, interactive Command Line Interface, and also serves as the foundation for multiple commercial products.

neural-compressor

Intel® Neural Compressor is an open-source Python library that supports popular model compression techniques such as quantization, pruning (sparsity), distillation, and neural architecture search on mainstream frameworks such as TensorFlow, PyTorch, ONNX Runtime, and MXNet. It provides key features, typical examples, and open collaborations, including support for a wide range of Intel hardware, validation of popular LLMs, and collaboration with cloud marketplaces, software platforms, and open AI ecosystems.

MaixPy

MaixPy is a Python SDK that enables users to easily create AI vision projects on edge devices. It provides a user-friendly API for accessing NPU, making it suitable for AI Algorithm Engineers, STEM teachers, Makers, Engineers, Students, Enterprises, and Contestants. The tool supports Python programming, MaixVision Workstation, AI vision, video streaming, voice recognition, and peripheral usage. It also offers an online AI training platform called MaixHub. MaixPy is designed for new hardware platforms like MaixCAM, offering improved performance and features compared to older versions. The ecosystem includes hardware, software, tools, documentation, and a cloud platform.

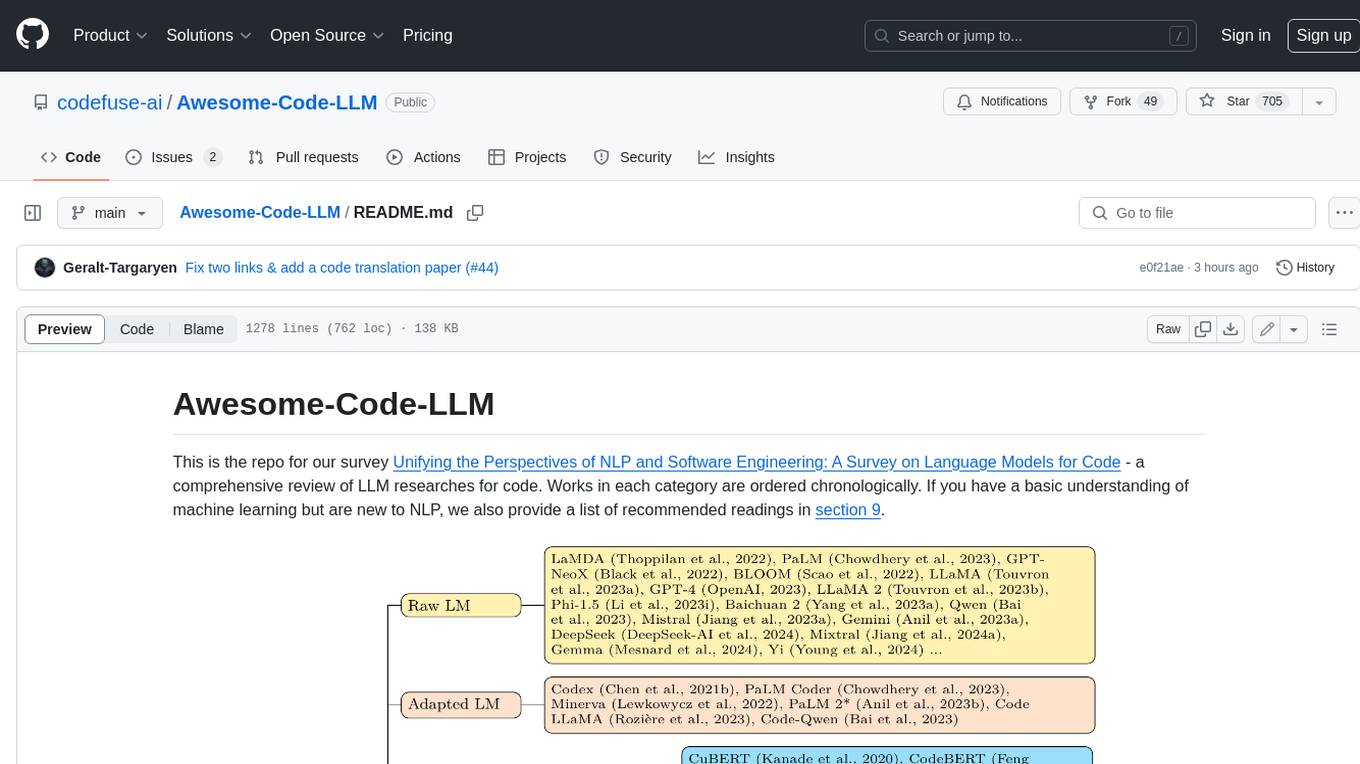

Awesome-Code-LLM


genkit-plugins

Community plugins repository for Google Firebase Genkit, containing various plugins for AI APIs and Vector Stores. Developed by The Fire Company, this repository offers plugins like genkitx-anthropic, genkitx-cohere, genkitx-groq, genkitx-mistral, genkitx-openai, genkitx-convex, and genkitx-hnsw. Users can easily install and use these plugins in their projects, with examples provided in the documentation. The repository also showcases products like Fireview and Giftit built using these plugins, and welcomes contributions from the community.

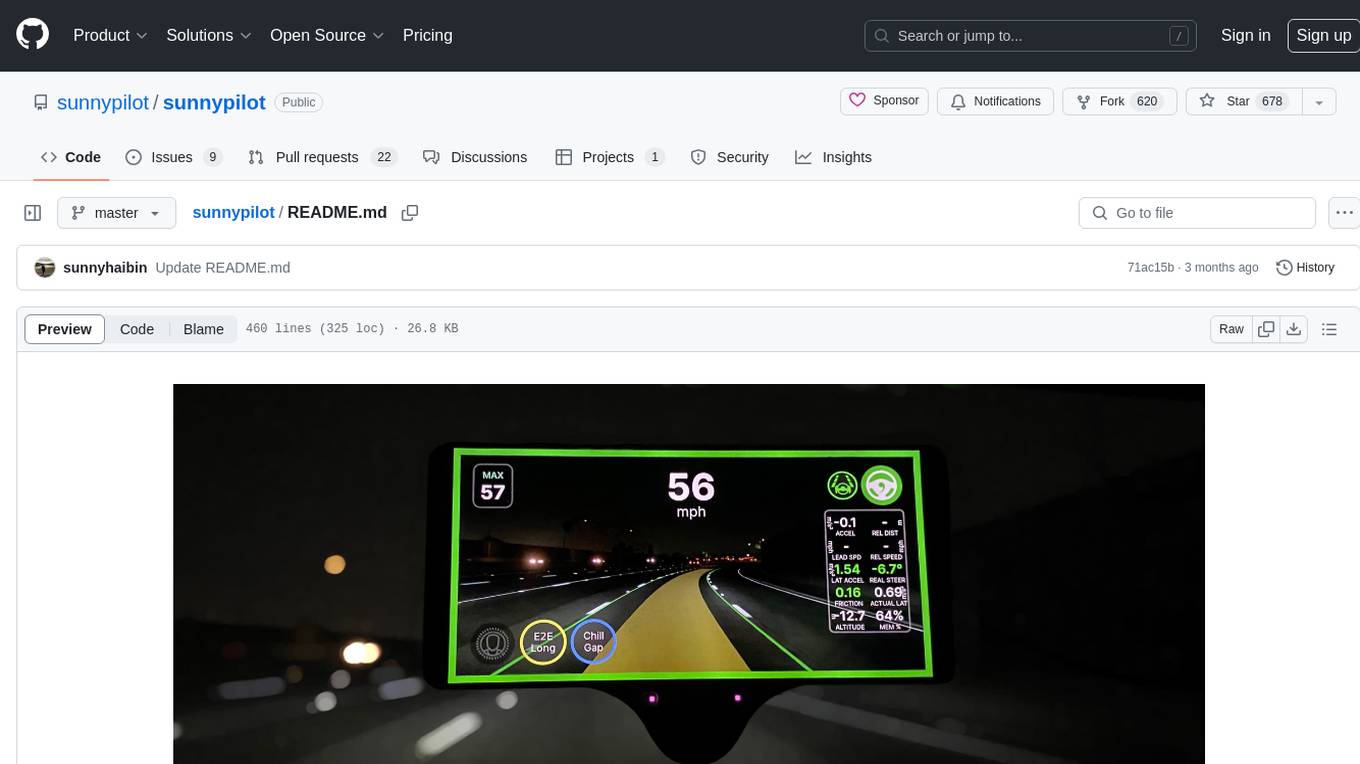

sunnypilot

Sunnypilot is a fork of comma.ai's openpilot that offers a unique driving experience for 250+ supported car makes and models, with modified behaviors for driving assist engagements. It complies with comma.ai's safety rules and provides features like Modified Assistive Driving Safety, Dynamic Lane Profile, Enhanced Speed Control, Gap Adjust Cruise, and more. Users can install it on supported devices and cars by following the detailed instructions.

TrustLLM

TrustLLM is a comprehensive study of trustworthiness in LLMs, including principles for different dimensions of trustworthiness, an established benchmark, evaluation and analysis of trustworthiness for mainstream LLMs, and discussion of open challenges and future directions. Specifically, we first propose a set of principles for trustworthy LLMs that span eight different dimensions. Based on these principles, we further establish a benchmark across six dimensions: truthfulness, safety, fairness, robustness, privacy, and machine ethics. We then present a study evaluating 16 mainstream LLMs in TrustLLM, consisting of over 30 datasets. The document explains how to use the trustllm Python package to assess the trustworthiness of your LLM more quickly. For more details about TrustLLM, please refer to the project website.

tensorrtllm_backend

The TensorRT-LLM Backend is a Triton backend designed to serve TensorRT-LLM models with Triton Inference Server. It supports features like inflight batching, paged attention, and more. Users can access the backend through pre-built Docker containers or build it using scripts provided in the repository. The backend can be used to create models for tasks like tokenizing, inferencing, de-tokenizing, ensemble modeling, and more. Users can interact with the backend using provided client scripts and query the server for metrics related to request handling, memory usage, KV cache blocks, and more. Testing for the backend can be done following the instructions in the 'ci/README.md' file.

terraform-genai-doc-summarization

This solution showcases how to summarize a large corpus of documents using Generative AI. It provides an end-to-end demonstration of document summarization, from ingesting raw documents, to detecting text in them, to summarizing them on demand, using Vertex AI LLM APIs, Cloud Vision Optical Character Recognition (OCR), and BigQuery.

feedgen

FeedGen is an open-source tool that uses Google Cloud's state-of-the-art Large Language Models (LLMs) to improve product titles, generate more comprehensive descriptions, and fill missing attributes in product feeds. It helps merchants and advertisers surface and fix quality issues in their feeds using Generative AI in a simple and configurable way. The tool relies on GCP's Vertex AI API to provide both zero-shot and few-shot inference capabilities on GCP's foundational LLMs. With few-shot prompting, users can customize the model's responses towards their own data, achieving higher quality and more consistent output. FeedGen is an Apps Script based application that runs as an HTML sidebar in Google Sheets, allowing users to optimize their feeds with ease.
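The difference between zero-shot and few-shot prompting mentioned above can be sketched as plain string assembly. This is a generic illustration under stated assumptions, not FeedGen's actual prompt format or code: the function name `build_prompt`, the `Input:`/`Output:` layout, and the sample product titles are all hypothetical.

```python
def build_prompt(instruction, item, examples=()):
    """Assemble a prompt for an LLM.

    With no examples this is a zero-shot prompt; with one or more
    (input, output) pairs it becomes a few-shot prompt that shows the
    model the desired style before presenting the real item.
    """
    parts = [instruction]
    for source, target in examples:
        parts.append(f"Input: {source}\nOutput: {target}")
    # The final block leaves "Output:" open for the model to complete.
    parts.append(f"Input: {item}\nOutput:")
    return "\n\n".join(parts)


instruction = "Rewrite the product title to be clear and complete."
examples = [
    ("shoe red 42", "Red Running Shoe, Size 42"),
    ("tshirt blu M", "Blue T-Shirt, Size M"),
]

zero_shot = build_prompt(instruction, "jacket blk L")
few_shot = build_prompt(instruction, "jacket blk L", examples)
print(few_shot)
```

Supplying examples drawn from a merchant's own feed is what steers the output toward that feed's conventions; the zero-shot variant relies on the instruction alone.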

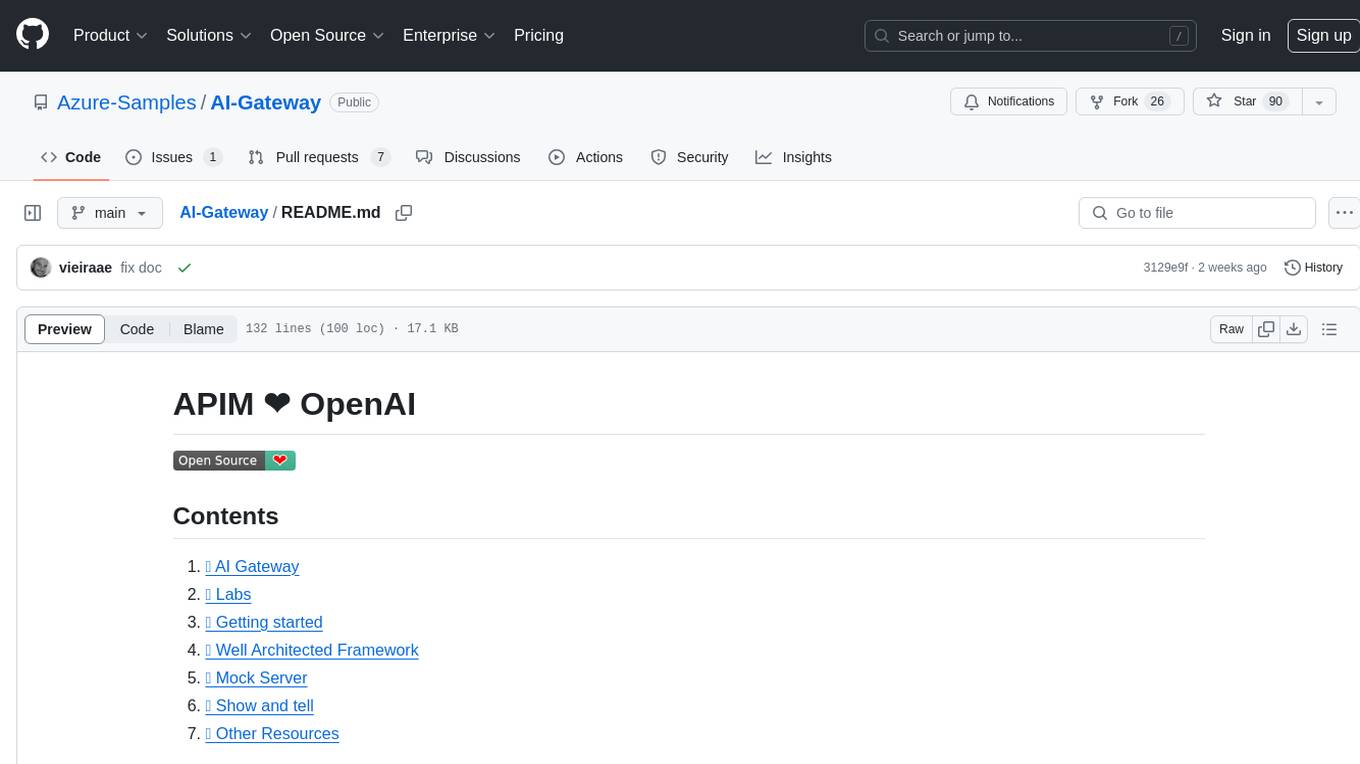

AI-Gateway

The AI-Gateway repository explores the AI Gateway pattern through a series of experimental labs, focusing on Azure API Management for handling AI services APIs. The labs provide step-by-step instructions using Jupyter notebooks with Python scripts, Bicep files, and APIM policies. The goal is to accelerate experimentation of advanced use cases and pave the way for further innovation in the rapidly evolving field of AI. The repository also includes a Mock Server to mimic the behavior of the OpenAI API for testing and development purposes.

5 - OpenAI Gpts

GPTLaudos

Hello, radiologist. To get started, type /prelim and write the type of exam and your preliminary findings, and I will then send you the complete report!

Chip

"Chip" refers to the chip on this bot's shoulder. He's... not friendly. But he's still helpful, even when he's insulting you.