Best AI Tools for Rugby Referee


8 - AI Tool Sites

Replit

Replit is a software creation platform that provides an integrated development environment (IDE), artificial intelligence (AI) assistance, and deployment services. It allows users to build, test, and deploy software projects directly from their browser, without the need for local setup or configuration. Replit offers real-time collaboration, code generation, debugging, and autocompletion features powered by AI. It supports multiple programming languages and frameworks, making it suitable for a wide range of development projects.

Chat Blackbox

Chat Blackbox is an AI tool that specializes in code generation, code chat, and code search. Users can ask the AI to generate code, discuss code-related topics, and search for specific code snippets, with the aim of speeding up development and helping them improve their coding skills.

Blackbox

Blackbox is an AI-powered code generation, code chat, and code search tool that helps developers write better code faster. With Blackbox, you can generate code snippets, chat with an AI assistant about code, and search for code examples from a massive database.

Supersimple

Supersimple is an AI-native data analytics platform that combines a semantic data modeling layer with the ability to answer ad hoc questions, giving users reliable, consistent data to power their day-to-day work.

Sublayer

Sublayer is a model-agnostic AI agent framework for Ruby. Alongside the framework, the project helps teams adopt AI through essays, tutorials, coaching, community meetups, and real products, and it fosters a community of developers and enthusiasts. Sublayer emphasizes collaboration between human creativity and machine capability, with the goal of speeding up innovation and development cycles.

Code & Pepper

Code & Pepper is a software development company specializing in FinTech and HealthTech. It combines human developers with AI tools and focuses on technologies such as React.js, Node.js, Angular, Ruby on Rails, and React Native, offering both custom software products and dedicated software engineers. The company says its talent-identification process selects the top 1.6% of candidates, and it promotes "human-AI centaur teams" that pair human creativity with AI precision.

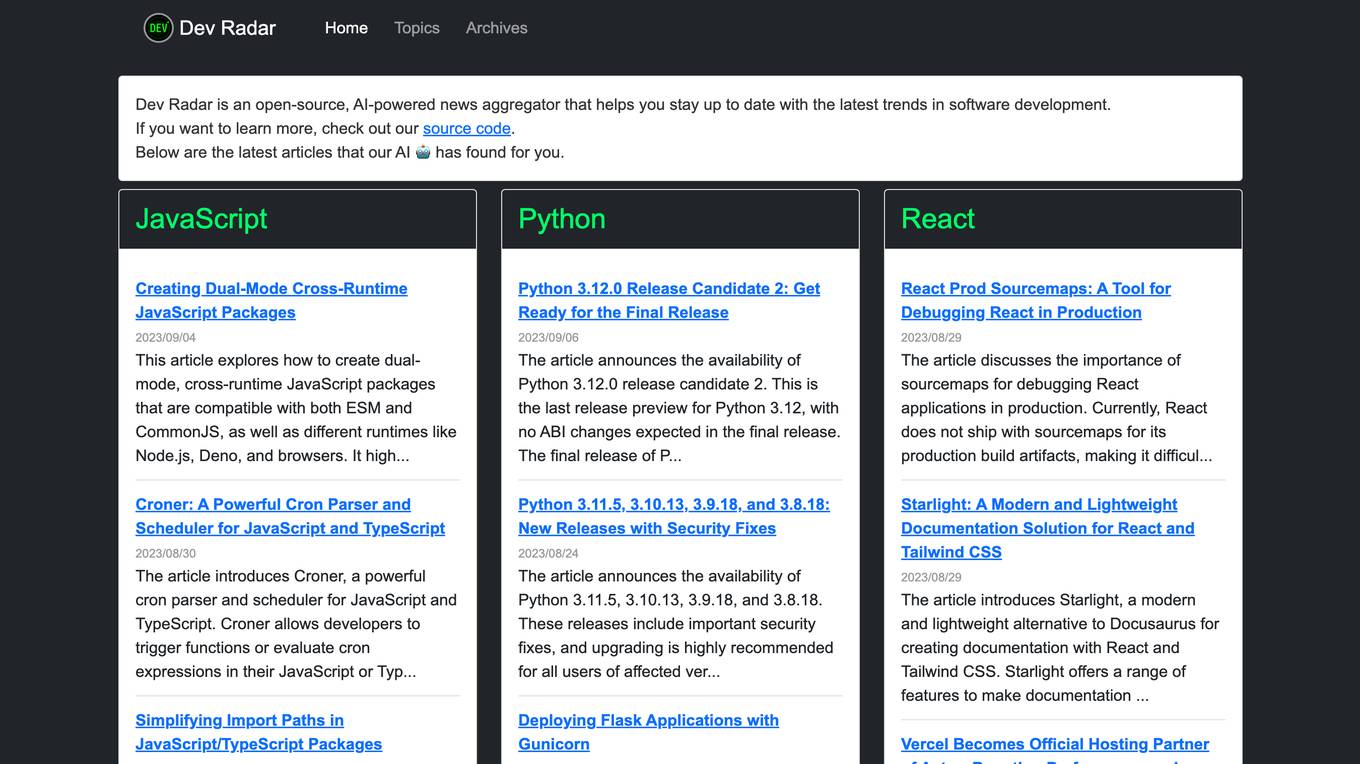

Dev Radar

Dev Radar is an open-source, AI-powered news aggregator that helps users stay up to date with the latest trends in software development. It provides curated articles on various topics such as JavaScript, Python, React, TypeScript, Rust, Go, Node.js, Deno, Ruby, and more. The platform leverages AI technology to deliver relevant and insightful content to developers, making it a valuable resource for staying informed in the rapidly evolving tech industry.

Every AI

Every AI is an AI platform that offers access to over 120 AI models, including OpenAI's ChatGPT and Anthropic's Claude, for a wide range of applications. It advertises fast responses and access to all models for a $20 subscription fee. The platform aims to simplify AI development at scale with developer-friendly tooling, extensive documentation, and SDKs for popular programming languages such as Ruby and JavaScript.

0 - Open Source Tools

16 - OpenAI GPTs

Rugby Strategy Assistant

Rugby Strategy Assistant is a rugby strategy app for players, coaches, and fans who want to deepen their understanding of the game. It offers guidance on game planning, the history of rugby, and the tactical details of individual matches.

Ruby Code Helper

Assists with Ruby programming by providing code examples, debugging tips, and best practices.

AI Ruby Programming Expert

Expert in Ruby programming, offering code generation, learning support, and code review.

Principal Backend Engineer

An expert backend developer skilled in Python, Java, Node.js, Ruby, and PHP, focused on building robust backend solutions.

React on Rails Pro

Expert in Rails and React, focused on high-quality software development.