AI tools for agent-evaluation

Related Tools:

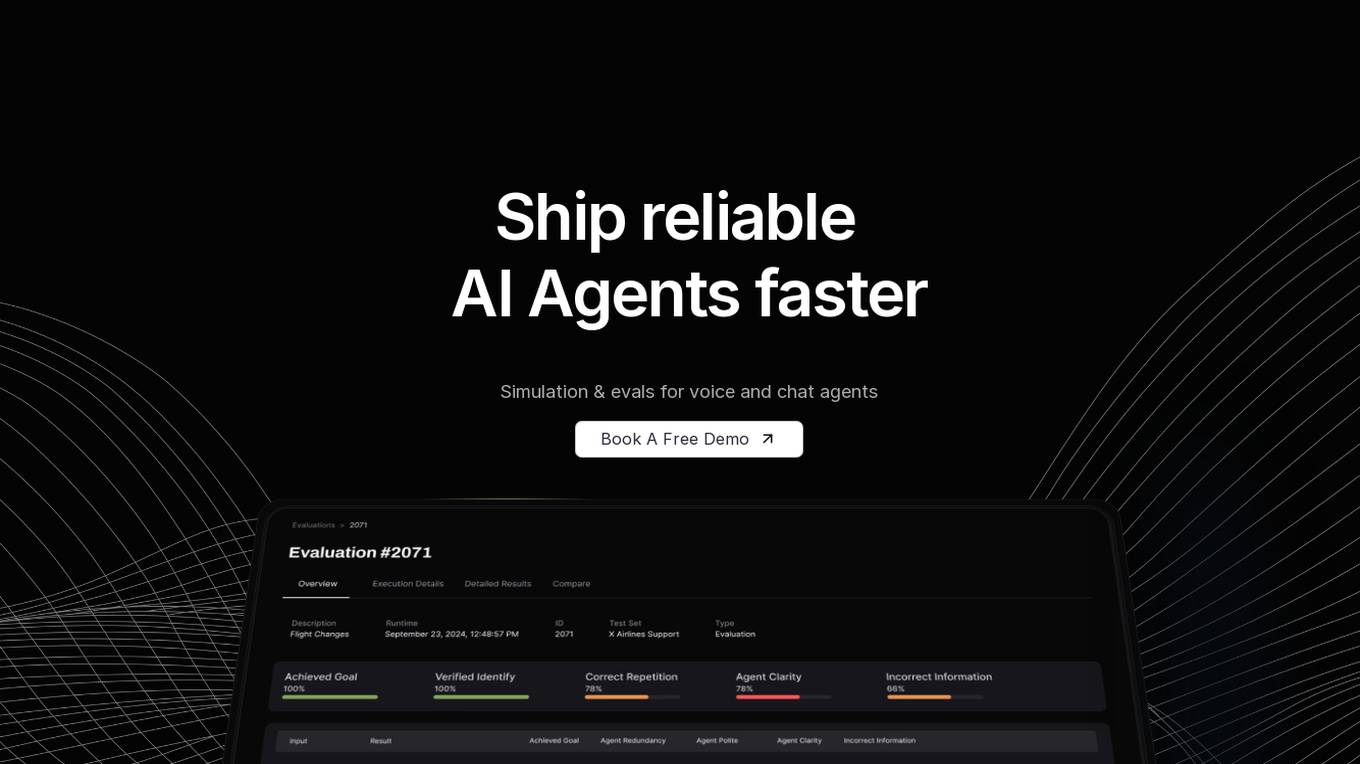

Coval

Coval is an AI tool designed to help users ship reliable AI agents faster by providing simulation and evaluations for voice and chat agents. It allows users to simulate thousands of scenarios from a few test cases, create prompts for testing, and evaluate agent interactions comprehensively. Coval offers AI-powered simulations, voice AI compatibility, performance tracking, workflow metrics, and customizable evaluation metrics to optimize AI agents efficiently.

Vocera

Vocera is an AI voice agent testing tool that allows users to test and monitor voice AI agents efficiently. It enables users to launch voice agents in minutes, ensuring a seamless conversational experience. With features like testing against AI-generated datasets, simulating scenarios, and monitoring AI performance, Vocera helps in evaluating and improving voice agent interactions. The tool provides real-time insights, detailed logs, and trend analysis for optimal performance, along with instant notifications for errors and failures. Vocera is designed to work for everyone, offering an intuitive dashboard and data-driven decision-making for continuous improvement.

Hamming

Hamming is an AI tool designed to help automate voice agent testing and optimization. It offers features such as prompt optimization, automated voice testing, monitoring, and more. The platform allows users to test AI voice agents against simulated users, create optimized prompts, actively monitor AI app usage, and simulate customer calls to identify system gaps. Hamming is trusted by AI-forward enterprises and is built for inbound and outbound agents, including AI appointment scheduling, AI drive-through, AI customer support, AI phone follow-ups, AI personal assistant, and AI coaching and tutoring.

Galileo AI

Galileo AI is a platform that automates evaluations for AI applications so teams can ship with confidence. It claims to eliminate 80% of evaluation time by replacing manual reviews with high-accuracy metrics, enabling rapid iteration, real-time protection, and end-to-end visibility into agent completions. Galileo also allows developers to take control of AI complexity, de-risk AI in production, and deploy AI applications flexibly across different environments. The platform is trusted by enterprises and loved by developers for its accuracy, low latency, and ability to run on L4 GPUs.

Autoblocks AI

Autoblocks AI is an AI application designed to help users build safe AI apps efficiently. It allows users to ship AI agents in minutes, speeding up the development process significantly. With Autoblocks AI, users can prototype quickly, test at a faster rate, and deploy with confidence. The application is trusted by leading AI teams and focuses on making AI agent development more predictable by addressing the unpredictability of user inputs and non-deterministic models.

Enhans AI Model Generator

Enhans AI Model Generator is an advanced AI tool designed to help users generate AI models efficiently. It utilizes cutting-edge algorithms and machine learning techniques to streamline the model creation process. With Enhans AI Model Generator, users can easily input their data, select the desired parameters, and obtain a customized AI model tailored to their specific needs. The tool is user-friendly and does not require extensive programming knowledge, making it accessible to a wide range of users, from beginners to experts in the field of AI.

4'33"

4'33" is an AI agent designed to help students and researchers discover the people they need, such as students seeking professors in a specific field or city. The tool assists in asking better questions, connecting with individuals, and evaluating how well they align with the user's requirements and background. Powered by Perplexity, 4'33" offers a platform for connecting people and answering questions, alongside AI technology. The tool aims to facilitate easier and faster connections between users and relevant individuals, enabling knowledge sharing and collaboration.

Regula

Regula is an AI purchasing agent for B2B services that revolutionizes the purchasing process for businesses. It automates vendor research, qualification calls, and recommendation generation, saving time and ensuring data-driven decision-making. Regula streamlines the procurement process by leveraging AI technology to source, evaluate, and compare vendors across various industries, providing transparent benchmarking and comprehensive reports to help businesses make informed choices efficiently.

Future AGI

Future AGI is a revolutionary AI data management platform that aims to achieve 99% accuracy in AI applications across software and hardware. It provides a comprehensive evaluation and optimization platform for enterprises to enhance the performance of their AI models. Future AGI offers features such as creating trustworthy, accurate, and responsible AI, 10x faster processing, generating and managing diverse synthetic datasets, testing and analyzing agentic workflow configurations, assessing agent performance, enhancing LLM application performance, monitoring and protecting applications in production, and evaluating AI across different modalities.

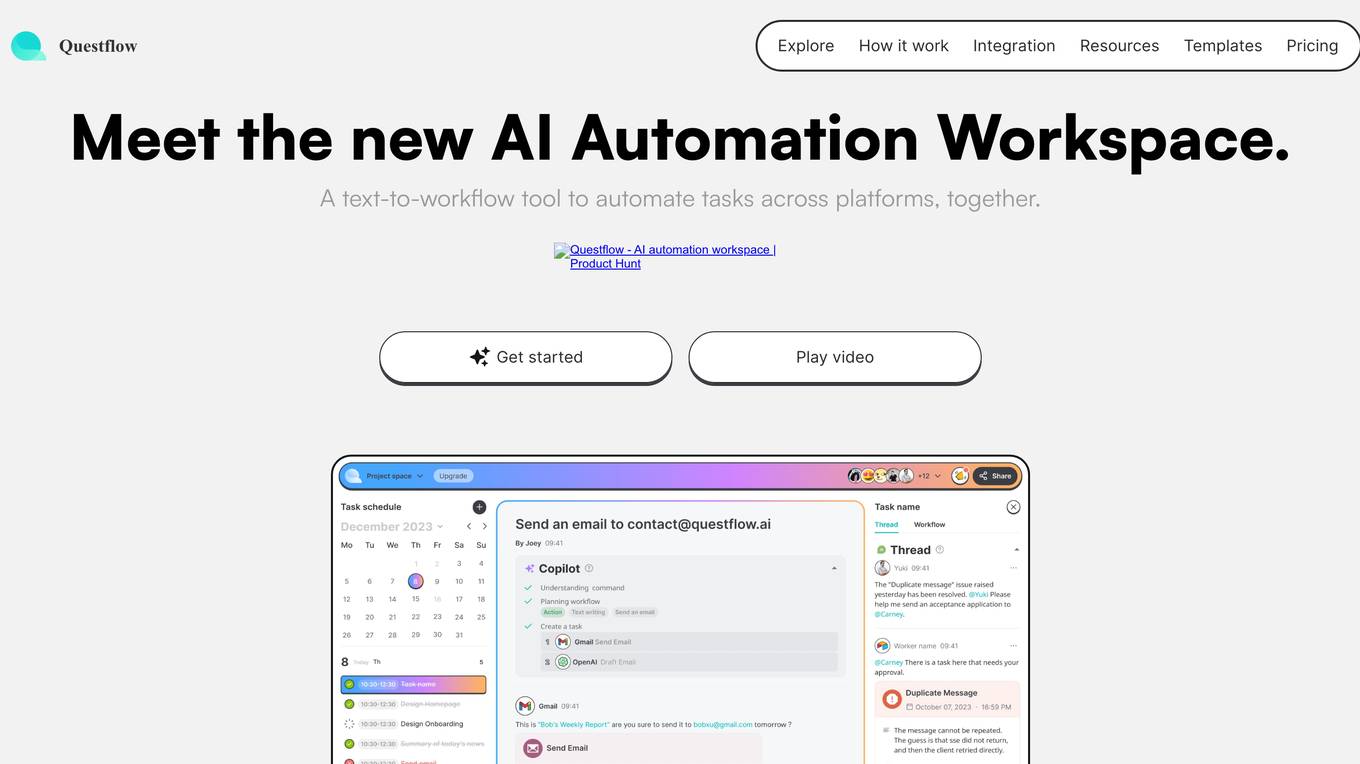

Questflow

Questflow is a decentralized AI agent economy platform that allows users to orchestrate multiple AI agents to gather insights, take action, and earn rewards autonomously. It serves as a co-pilot for work, helping knowledge workers automate repetitive tasks with a private, safety-first approach. The platform offers features such as multi-agent orchestration, a user-friendly dashboard, visual reports, a smart keyword generator, content evaluation, regular SEO goal setting, automated alerts, actionable SEO tips, and a link optimization wizard.

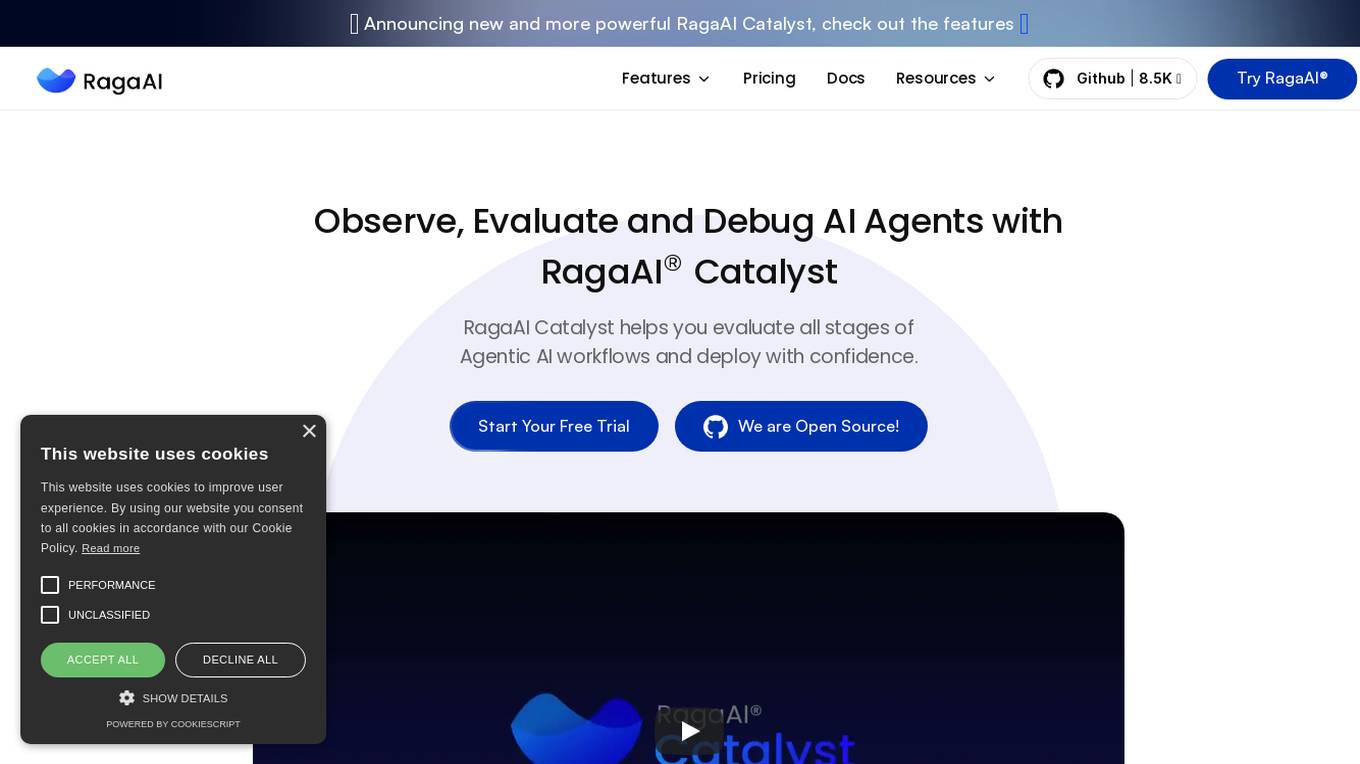

RagaAI Catalyst

RagaAI Catalyst is a sophisticated AI observability, monitoring, and evaluation platform designed to help users observe, evaluate, and debug AI agents at all stages of Agentic AI workflows. It offers features like visualizing trace data, instrumenting and monitoring tools and agents, enhancing AI performance, agentic testing, comprehensive trace logging, evaluation for each step of the agent, enterprise-grade experiment management, secure and reliable LLM outputs, finetuning with human feedback integration, defining custom evaluation logic, generating synthetic data, and optimizing LLM testing with speed and precision. The platform is trusted by AI leaders globally and provides a comprehensive suite of tools for AI developers and enterprises.

JobXRecruiter

JobXRecruiter is an AI-powered CV review tool designed for recruiters to streamline the candidate evaluation process. It automates the review of resumes, provides detailed candidate analysis, and helps recruiters save time by focusing on hiring rather than manual screening. The tool offers a 1-minute setup, reduces candidate evaluation time, and eliminates tedious screening tasks. With JobXRecruiter, recruiters can create projects for each vacancy, receive match scores for candidates, and easily shortlist the best candidates without opening individual CVs. The application is secure, efficient, and a game-changer for recruiters looking to optimize their hiring process.

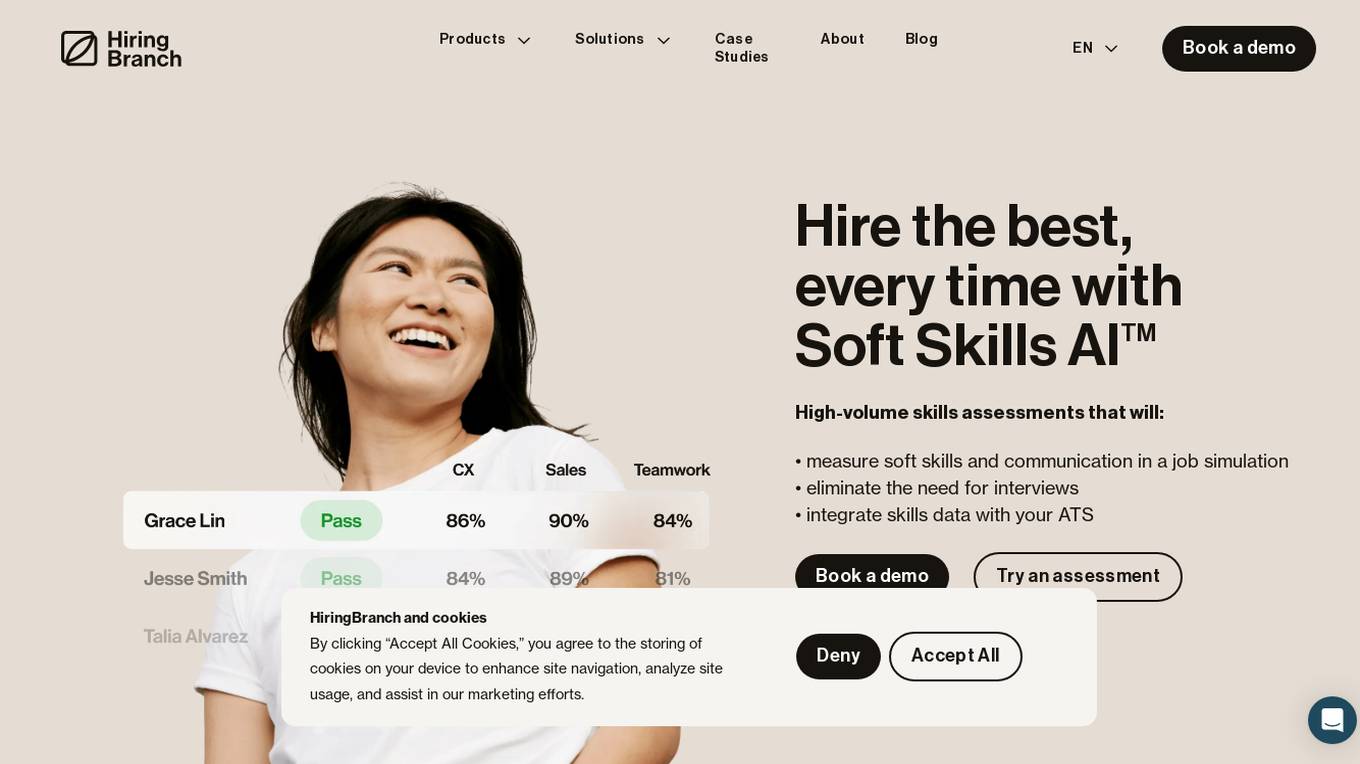

HiringBranch

HiringBranch is an AI-powered platform that offers high-volume skills assessments to help companies hire the best candidates efficiently. The platform accurately measures soft skills and communication through open-ended conversational assessments, eliminating the need for traditional interviews. HiringBranch's AI skills assessments are tailored to various industries such as Telecommunication, Retail, Banking & Insurance, and Contact Centers, providing real-time evaluation of role-critical skills. The platform aims to streamline the hiring process, reduce mis-hires, and improve retention rates for enterprises globally.

PropHunt.ai

PropHunt.ai is an AI-powered platform designed for real estate professionals and property investors. It utilizes advanced machine learning algorithms to analyze property data and provide valuable insights for property hunting and investment decisions. The platform offers features such as property price prediction, neighborhood analysis, investment risk assessment, property comparison, and market trend forecasting. With PropHunt.ai, users can make informed decisions, optimize their property investments, and stay ahead in the competitive real estate market.

Restb.ai

Restb.ai is a leading provider of visual insights for real estate companies, utilizing computer vision and AI to analyze property images. The application offers solutions for AVMs, iBuyers, investors, appraisals, inspections, property search, marketing, insurance companies, and more. By providing actionable and unique data at scale, Restb.ai helps improve valuation accuracy, automate manual processes, and enhance property interactions. The platform enables users to leverage visual insights to optimize valuations, automate report quality checks, enhance listings, improve data collection, and more.

Smalltalks.ai

Smalltalks.ai is an AI-powered conversational platform that enables users to create engaging chatbots for various purposes. With its user-friendly interface and advanced natural language processing capabilities, Smalltalks.ai allows individuals and businesses to design interactive chatbot experiences without the need for extensive coding knowledge. The platform offers a range of customization options, analytics tools, and integration features to enhance the chatbot development process and improve user engagement.

Lucida AI

Lucida AI is an AI-driven coaching tool designed to enhance employees' English language skills through personalized insights and feedback based on real-life call interactions. The tool offers comprehensive coaching in pronunciation, fluency, grammar, and vocabulary, and tracks language proficiency over time. It provides advanced speech analysis using proprietary LLM and NLP technologies, ensuring accurate assessments and detailed tracking. With end-to-end encryption for data privacy, Lucida AI is a cost-effective solution for organizations seeking to improve communication skills and streamline language assessment processes.

HeyMilo AI

HeyMilo AI is a generative AI-powered voice agent application designed to help companies and recruiting agencies scale their interview processes. It offers AI-powered voice agents capable of conducting two-way conversational interviews, providing real-time candidate insights, and seamless integration with existing recruiting tools. HeyMilo aims to make interviewing easier, faster, and more effective by creating and sharing agents, inviting candidates for interviews, and analyzing interviews to provide comprehensive candidate reports.

GPTHelp.ai

GPTHelp.ai is an AI chatbot tool designed to help website owners provide instant answers to their visitors' questions. The tool is trained on the website content, files, and FAQs to deliver accurate responses. Users can customize the chatbot's design, behavior, and personality to fit their needs. With GPTHelp.ai, creating and training your own AI chatbot is quick and easy, eliminating the need for manual setup of FAQs. The tool also allows users to monitor conversations, intervene if necessary, and view chat history for performance evaluation.

Supplier Evaluation Advisor

Assesses and recommends potential suppliers for organizational needs.

HomeScore

Assesses a potential home's quality using your own photos and property inspection reports.

Kaufpreis einer Garage ermitteln

Kaufpreis einer Garage ermitteln (Determine the purchase price of a garage): I am a real estate valuation calculator specializing in assessing and estimating the market value of garages. As a valuation tool, I help estimate the value of garages by taking relevant factors such as location and condition into account.

Gewerbeimmobilien bewerten

Gewerbeimmobilien bewerten (Valuing commercial real estate): An online expert in real estate valuation, specializing in determining and estimating fair market value (Verkehrswert), market value, and land value. Uses a calculator to value properties and plots of land.

MiniVC

This is the AI version of David Teten of Coolwater Capital. David is Founder of PEVCtech.com, FoundersNextMove.com, and VersatileVC.com.

Create an agent team

First, please say "Create an agent team to do 〇〇," replacing 〇〇 with your task.

Agent Finder (By Staf.ai and AgentOps.ai)

Find the best AI agent for your problem, no bulk export

Agent Onboard

An agent that helps you discover other agents that match your requirements. More than 2,000 agents onboard 🤍

agent-evaluation

Agent Evaluation is a generative AI-powered framework for testing virtual agents. It implements an LLM agent (evaluator) to orchestrate conversations with your own agent (target) and evaluate responses. It supports popular AWS services, allows concurrent multi-turn conversations, defines hooks for additional tasks, and can be used in CI/CD pipelines for faster delivery and stable production environments.
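
The evaluator-and-target pattern described above can be sketched roughly as follows. This is a minimal illustration, not the framework's actual API: `TestCase`, `Result`, `run_test`, and `toy_booking_agent` are hypothetical names, and a simple keyword check stands in for the LLM evaluator that the real framework uses to judge responses.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Tuple


@dataclass
class TestCase:
    # Hypothetical test-case shape: user turns plus one expected keyword per turn.
    name: str
    steps: List[str]
    expected_keywords: List[str]


@dataclass
class Result:
    name: str
    passed: bool
    transcript: List[Tuple[str, str]] = field(default_factory=list)


def run_test(target: Callable[[str], str], case: TestCase) -> Result:
    """Drive a multi-turn conversation with the target and check each reply."""
    transcript: List[Tuple[str, str]] = []
    passed = True
    for step, keyword in zip(case.steps, case.expected_keywords):
        reply = target(step)
        transcript.append((step, reply))
        # Assumption: a keyword match stands in for an LLM-based judgement.
        if keyword.lower() not in reply.lower():
            passed = False
            break
    return Result(case.name, passed, transcript)


def toy_booking_agent(message: str) -> str:
    """Stand-in target agent; a real target would call your deployed agent."""
    if "book" in message.lower():
        return "Your appointment is booked for Monday."
    return "Sorry, I did not understand."


good_case = TestCase("booking", ["Please book an appointment"], ["booked"])
bad_case = TestCase("refund", ["I want a refund"], ["refund"])

good_result = run_test(toy_booking_agent, good_case)
bad_result = run_test(toy_booking_agent, bad_case)
print(good_result.passed, bad_result.passed)
```

Because `run_test` returns a plain pass/fail result, a CI job could fail the build whenever any case does not pass.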

LLM-Agent-Evaluation-Survey

LLM-Agent-Evaluation-Survey is a tool designed to gather feedback and evaluate the performance of AI agents. It provides a user-friendly interface for users to rate and provide comments on the interactions with AI agents. The tool aims to collect valuable insights to improve the AI agents' capabilities and enhance user experience. With LLM-Agent-Evaluation-Survey, users can easily assess the effectiveness and efficiency of AI agents in various scenarios, leading to better decision-making and optimization of AI systems.

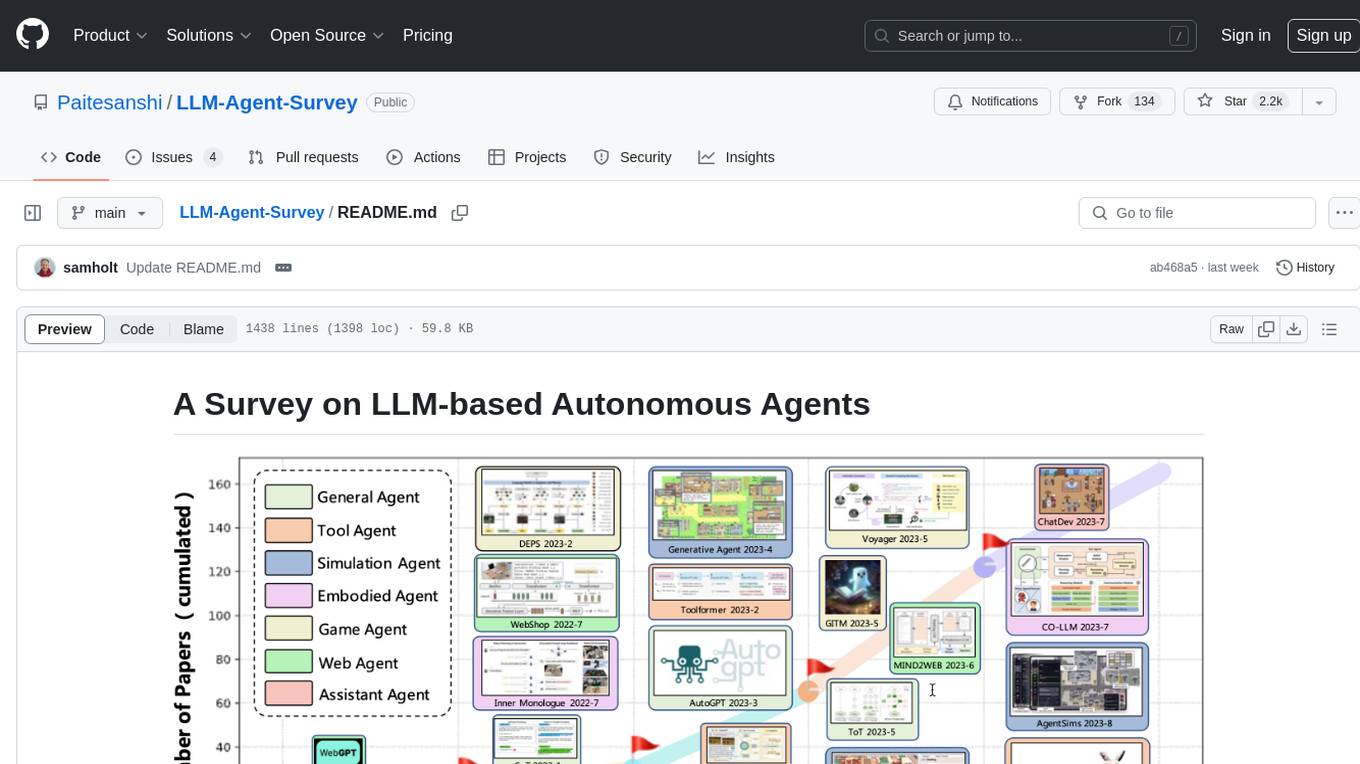

LLM-Agent-Survey

Autonomous agents are designed to achieve specific objectives through self-guided instructions. With the emergence and growth of large language models (LLMs), there is a growing trend in utilizing LLMs as fundamental controllers for these autonomous agents. This repository conducts a comprehensive survey study on the construction, application, and evaluation of LLM-based autonomous agents. It explores essential components of AI agents, application domains in natural sciences, social sciences, and engineering, and evaluation strategies. The survey aims to be a resource for researchers and practitioners in this rapidly evolving field.

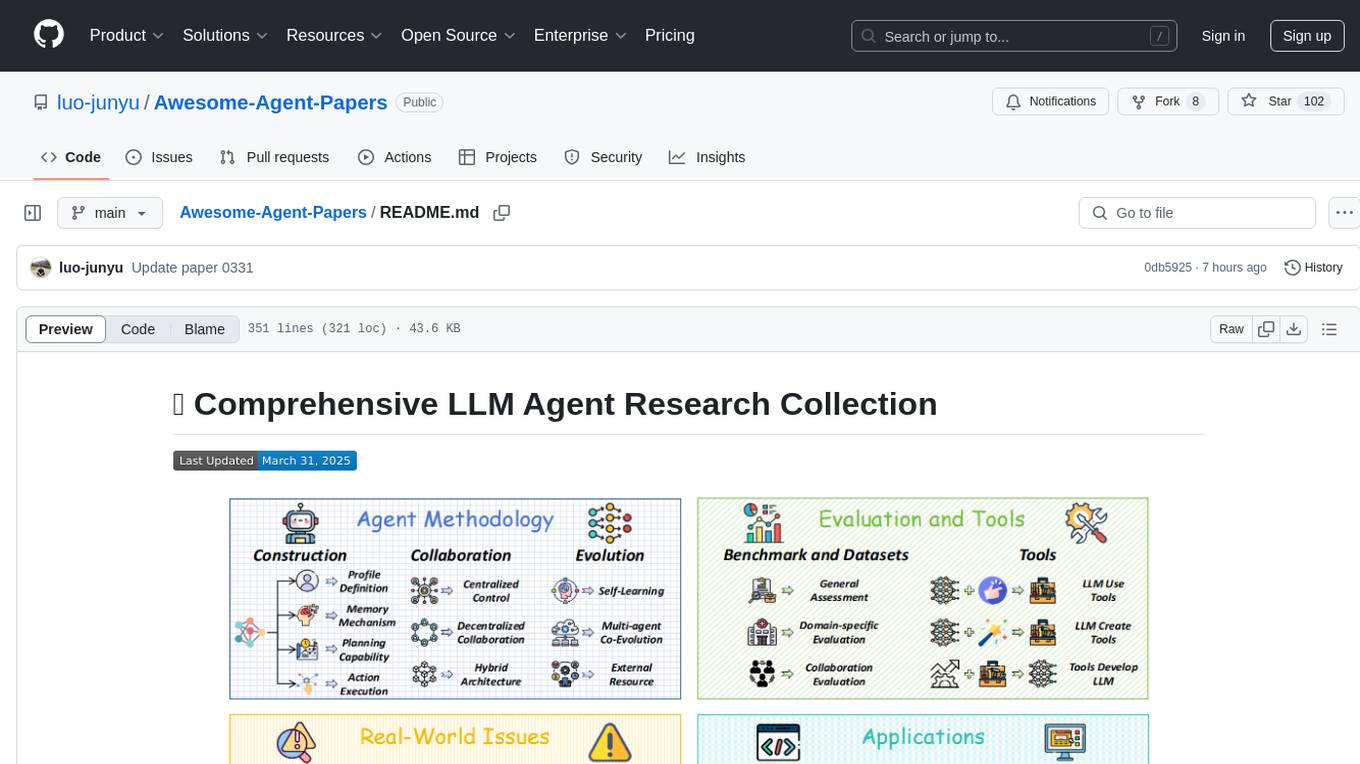

Awesome-Agent-Papers

This repository is a comprehensive collection of research papers on Large Language Model (LLM) agents, organized across key categories including agent construction, collaboration mechanisms, evolution, tools, security, benchmarks, and applications. The taxonomy provides a structured framework for understanding the field of LLM agents, bridging fragmented research threads by highlighting connections between agent design principles and emergent behaviors.

ai-agent-papers

The AI Agents Papers repository provides a curated collection of papers focusing on AI agents, covering topics such as agent capabilities, applications, architectures, and presentations. It includes a variety of papers on ideation, decision making, long-horizon tasks, learning, memory-based agents, self-evolving agents, and more. The repository serves as a valuable resource for researchers and practitioners interested in AI agent technologies and advancements.

mcp-agent

mcp-agent is a simple, composable framework designed to build agents using the Model Context Protocol. It handles the lifecycle of MCP server connections and implements patterns for building production-ready AI agents in a composable way. The framework also includes OpenAI's Swarm pattern for multi-agent orchestration in a model-agnostic manner, making it the simplest way to build robust agent applications. It is purpose-built for the shared protocol MCP, lightweight, and closer to an agent pattern library than a framework. mcp-agent allows developers to focus on the core business logic of their AI applications by handling mechanics such as server connections, working with LLMs, and supporting external signals like human input.

giskard

Giskard is an open-source Python library that automatically detects performance, bias & security issues in AI applications. The library covers LLM-based applications such as RAG agents, all the way to traditional ML models for tabular data.

agentops

AgentOps is a toolkit for evaluating and developing robust and reliable AI agents. It provides benchmarks, observability, and replay analytics to help developers build better agents. AgentOps is in open beta. Key features of AgentOps include:

- Session replays in 3 lines of code: initialize the AgentOps client and automatically get analytics on every LLM call.
- Time travel debugging (coming soon).
- Agent Arena (coming soon).
- Callback handlers: AgentOps works seamlessly with applications built using LangChain and LlamaIndex.

swift

SWIFT (Scalable lightWeight Infrastructure for Fine-Tuning) supports training, inference, evaluation and deployment of nearly **200 LLMs and MLLMs** (multimodal large models). Developers can directly apply our framework to their own research and production environments to realize the complete workflow from model training and evaluation to application. In addition to supporting the lightweight training solutions provided by [PEFT](https://github.com/huggingface/peft), we also provide a complete **Adapters library** to support the latest training techniques such as NEFTune, LoRA+, LLaMA-PRO, etc. This adapter library can be used directly in your own custom workflow without our training scripts. To facilitate use by users unfamiliar with deep learning, we provide a Gradio web-ui for controlling training and inference, as well as accompanying deep learning courses and best practices for beginners. Additionally, we are expanding capabilities for other modalities. Currently, we support full-parameter training and LoRA training for AnimateDiff.

Awesome-LLM-Eval

Awesome-LLM-Eval is a curated list of tools, benchmarks, demos, and papers for evaluating Large Language Models (such as ChatGPT, LLaMA, GLM, and Baichuan) on language capabilities, knowledge, reasoning, fairness, and safety.

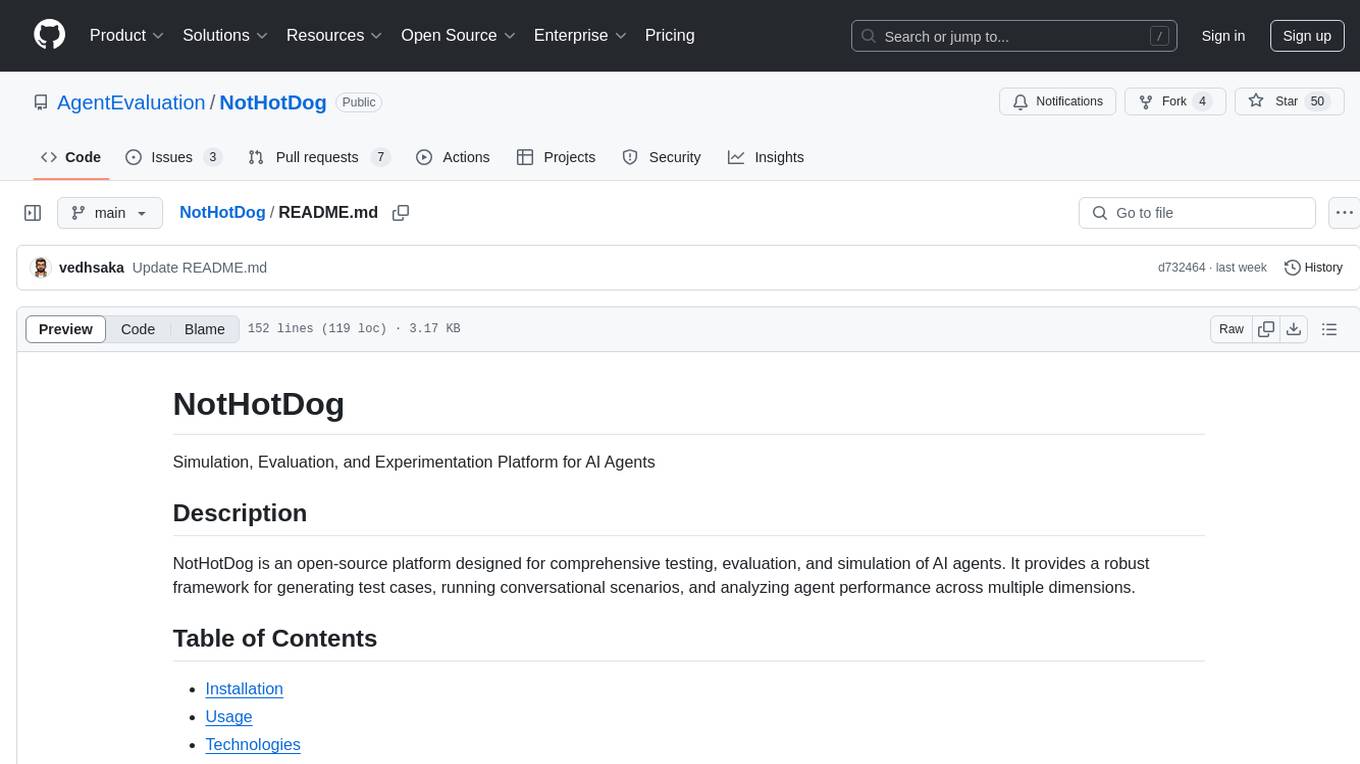

NotHotDog

NotHotDog is an open-source platform for testing, evaluating, and simulating AI agents. It offers a robust framework for generating test cases, running conversational scenarios, and analyzing agent performance.

factorio-learning-environment

Factorio Learning Environment is an open source framework designed for developing and evaluating LLM agents in the game of Factorio. It provides two settings: Lab-play with structured tasks and Open-play for building large factories. Results show limitations in spatial reasoning and automation strategies. Agents interact with the environment through code synthesis, observation, action, and feedback. Tools are provided for game actions and state representation. Agents operate in episodes with observation, planning, and action execution. Tasks specify agent goals and are implemented in JSON files. The project structure includes directories for agents, environment, cluster, data, docs, eval, and more. A database is used for checkpointing agent steps. Benchmarks show performance metrics for different configurations.
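
As a rough sketch of the episode structure described above (a task loaded from JSON, then repeated observation, planning, and action), consider the toy loop below. Everything in it is an assumption made for illustration: the JSON fields, `ToyEnvironment`, `plan`, and `run_episode` are hypothetical and are not taken from the project, and a fixed rule replaces the LLM's code synthesis.

```python
import json

# Hypothetical task definition; the real project's JSON schema may differ.
TASK_JSON = '{"goal": "produce iron plates", "target_count": 3, "max_steps": 10}'


class ToyEnvironment:
    """Tiny stand-in for the game: one counter instead of a factory."""

    def __init__(self) -> None:
        self.plates = 0

    def observe(self) -> dict:
        return {"iron_plates": self.plates}

    def step(self, action: str) -> dict:
        # Only the "smelt" action changes the state in this toy world.
        if action == "smelt":
            self.plates += 1
        return self.observe()


def plan(observation: dict, task: dict) -> str:
    """Assumption: a fixed rule stands in for LLM planning and code synthesis."""
    if observation["iron_plates"] < task["target_count"]:
        return "smelt"
    return "idle"


def run_episode(task: dict, env: ToyEnvironment) -> list:
    """Observe, plan, and act until the goal is met or the step budget runs out."""
    history = []
    observation = env.observe()
    for _ in range(task["max_steps"]):
        if observation["iron_plates"] >= task["target_count"]:
            break
        action = plan(observation, task)
        observation = env.step(action)
        history.append((action, observation))
    return history


task = json.loads(TASK_JSON)
history = run_episode(task, ToyEnvironment())
print(len(history), history[-1])
```

The `history` list plays the role that step checkpointing plays in the description above: each entry records the action taken and the resulting observation.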

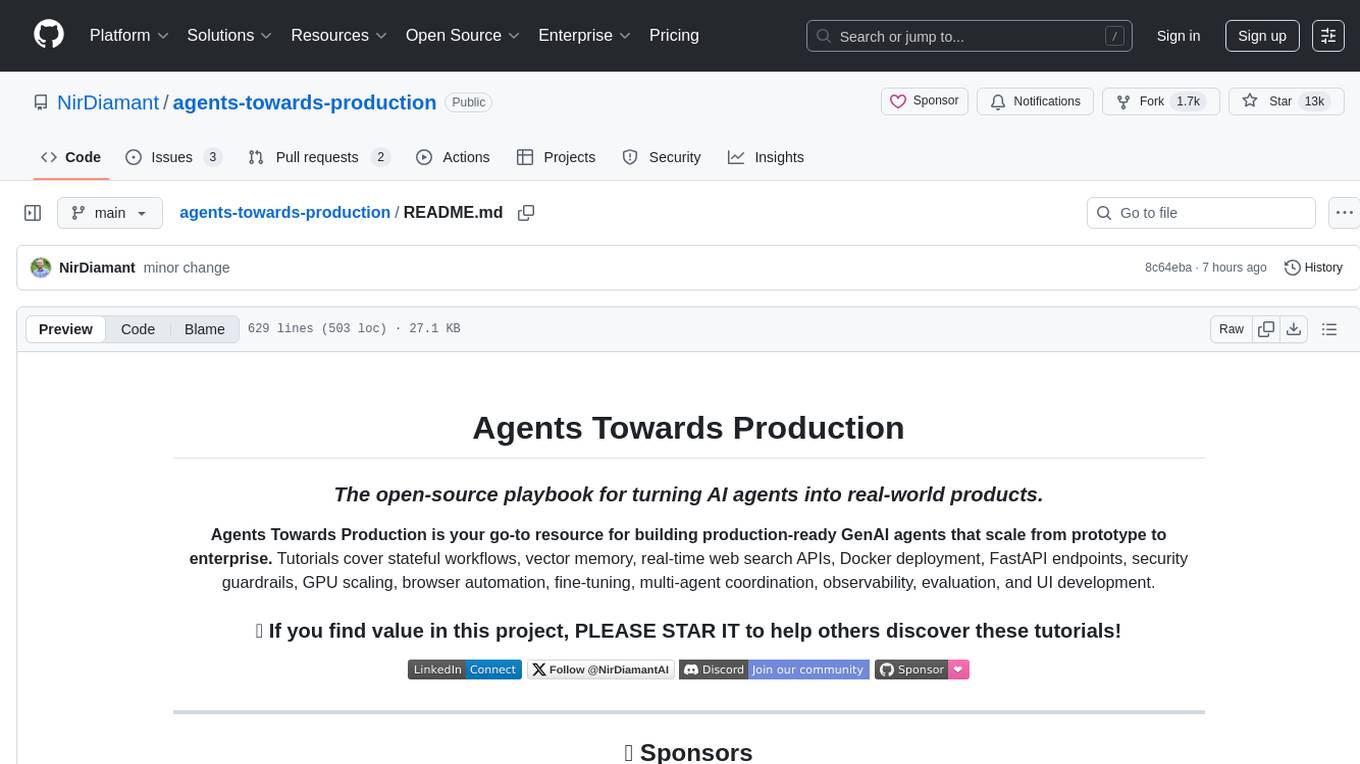

agents-towards-production

Agents Towards Production is an open-source playbook for building production-ready GenAI agents that scale from prototype to enterprise. Tutorials cover stateful workflows, vector memory, real-time web search APIs, Docker deployment, FastAPI endpoints, security guardrails, GPU scaling, browser automation, fine-tuning, multi-agent coordination, observability, evaluation, and UI development.

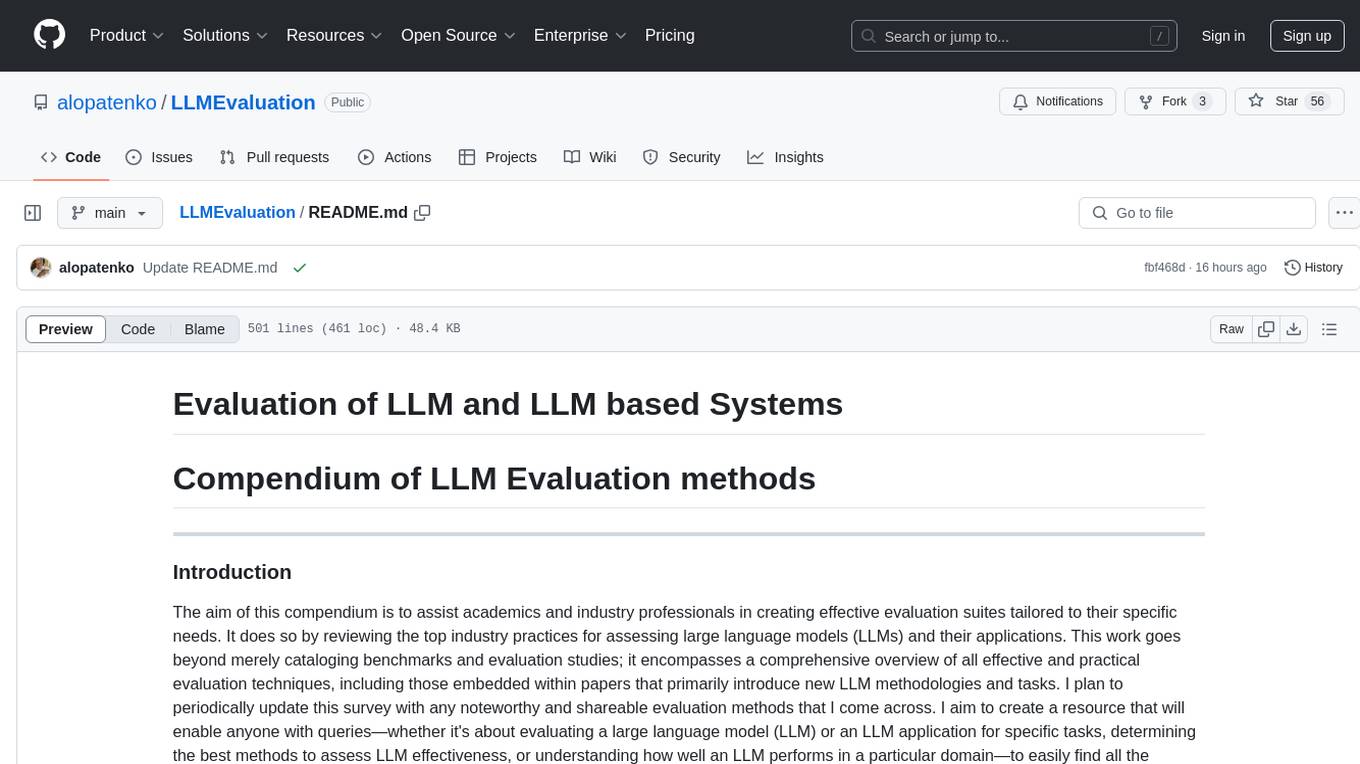

LLMEvaluation

The LLMEvaluation repository is a comprehensive compendium of evaluation methods for Large Language Models (LLMs) and LLM-based systems. It aims to assist academics and industry professionals in creating effective evaluation suites tailored to their specific needs by reviewing industry practices for assessing LLMs and their applications. The repository covers a wide range of evaluation techniques, benchmarks, and studies related to LLMs, including areas such as embeddings, question answering, multi-turn dialogues, reasoning, multi-lingual tasks, ethical AI, biases, safe AI, code generation, summarization, software performance, agent LLM architectures, long text generation, graph understanding, and various unclassified tasks. It also includes evaluations for LLM systems in conversational systems, copilots, search and recommendation engines, task utility, and verticals like healthcare, law, science, financial, and others. The repository provides a wealth of resources for evaluating and understanding the capabilities of LLMs in different domains.

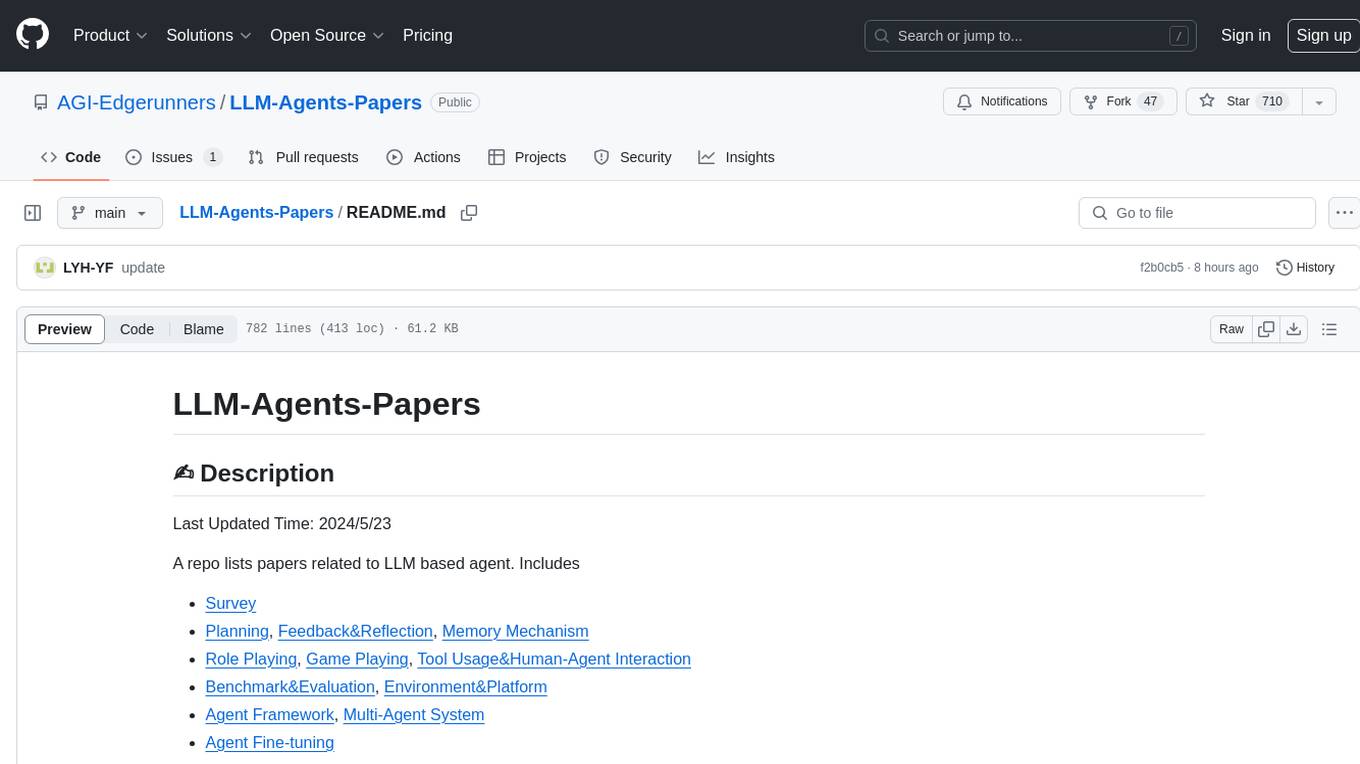

LLM-Agents-Papers

A repository that lists papers related to Large Language Model (LLM) based agents. The repository covers various topics including survey, planning, feedback & reflection, memory mechanism, role playing, game playing, tool usage & human-agent interaction, benchmark & evaluation, environment & platform, agent framework, multi-agent system, and agent fine-tuning. It provides a comprehensive collection of research papers on LLM-based agents, exploring different aspects of AI agent architectures and applications.

Awesome-AI-Agents

Awesome-AI-Agents is a curated list of projects, frameworks, benchmarks, platforms, and related resources focused on autonomous AI agents powered by Large Language Models (LLMs). The repository showcases a wide range of applications, multi-agent task solver projects, agent society simulations, and advanced components for building and customizing AI agents. It also includes frameworks for orchestrating role-playing, evaluating LLM-as-Agent performance, and connecting LLMs with real-world applications through platforms and APIs. Additionally, the repository features surveys, paper lists, and blogs related to LLM-based autonomous agents, making it a valuable resource for researchers, developers, and enthusiasts in the field of AI.

Odyssey

Odyssey is a framework designed to empower agents with open-world skills in Minecraft. It provides an interactive agent with a skill library, a fine-tuned LLaMA-3 model, and an open-world benchmark for evaluating agent capabilities. The framework enables agents to explore diverse gameplay opportunities in the vast Minecraft world by offering primitive and compositional skills, extensive training data, and various long-term planning tasks. Odyssey aims to advance research on autonomous agent solutions by providing datasets, model weights, and code for public use.