Best AI tools for Validate Data

20 - AI tool Sites

Lume AI

Lume AI is an AI-powered data mapping suite that automates the process of mapping, cleaning, and validating data in various workflows. It offers a comprehensive solution for building pipelines, onboarding customer data, and more. With AI-driven insights, users can streamline data analysis, mapper generation, deployment, and maintenance. Lume AI provides both a no-code platform and API integration options for seamless data mapping. Trusted by market leaders and startups, Lume AI ensures data security with enterprise-grade encryption and compliance standards.

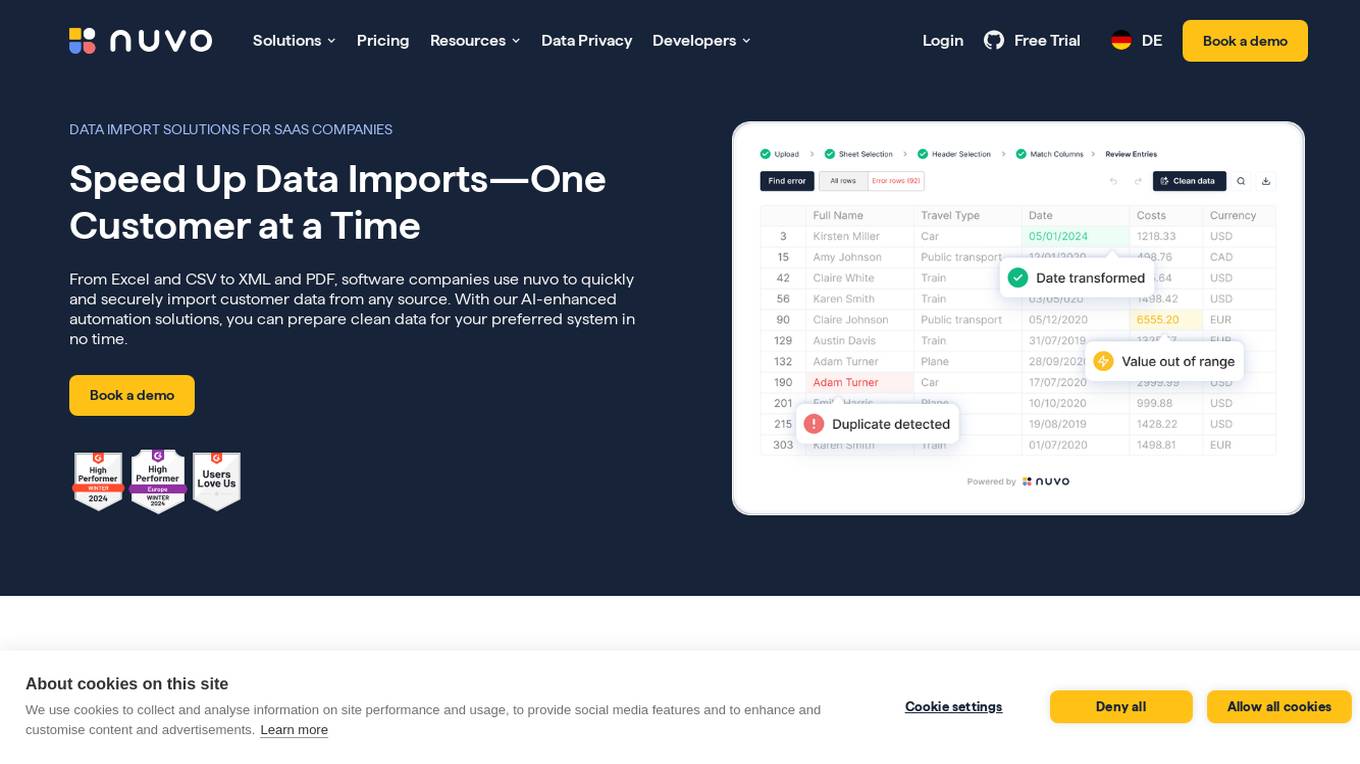

nuvo

nuvo is an AI-powered data import solution that offers fast, secure, and scalable data imports for software companies. It provides tools like nuvo Data Importer SDK and nuvo Data Pipeline to streamline manual and recurring ETL data imports, enabling users to manage data imports independently. With AI-enhanced automation, nuvo helps prepare clean data for preferred systems quickly and efficiently, reducing manual effort and improving data quality. The platform allows users to upload unlimited data in various formats, match imported data to system schemas, clean and validate data, and import clean data into target systems with just a click.

Canoe

Canoe is a cloud-based platform that leverages machine learning technology to automate document collection, data extraction, and data science initiatives for alternative investments. It transforms complex documents into actionable intelligence within seconds, empowering allocators with tools to unlock new efficiencies for their business. Canoe is trusted by thousands of alternative investors, allocators, wealth management, and asset servicers to improve efficiency, accuracy, and completeness of investment data.

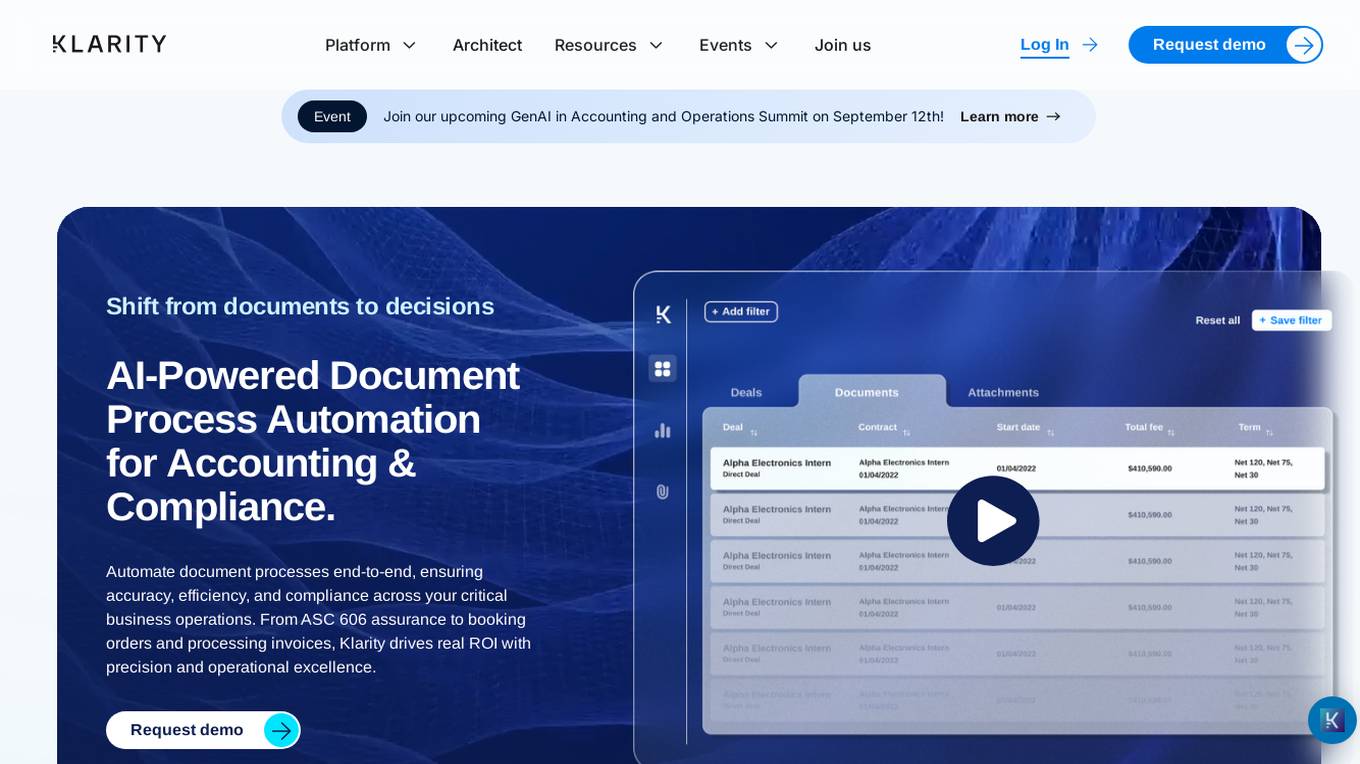

Klarity

Klarity is an AI-powered platform that automates accounting and compliance workflows traditionally offshored. It leverages AI to streamline documentation processes, enhance compliance, and drive real-world impact and sustainable scaling. Klarity helps businesses evolve into Exponential Organizations by optimizing functions, scaling efficiently, and driving innovation with AI-powered automation.

PDFMerse

PDFMerse is an AI-powered data extraction tool that revolutionizes how users handle document data. It allows users to effortlessly extract information from PDFs with precision, saving time and enhancing workflow. With cutting-edge AI technology, PDFMerse automates data extraction, ensures data accuracy, and offers versatile output formats like CSV, JSON, and Excel. The tool is designed to dramatically reduce processing time and operational costs, enabling users to focus on higher-value tasks.

Magic Regex Generator

Magic Regex Generator is an AI-powered tool that simplifies the process of generating, testing, and editing Regular Expression patterns. Users can describe what they want to match in English, and the AI generates the corresponding regex in the editor for testing and refining. The tool is designed to make working with regex easier and more efficient, allowing users to focus on meaningful tasks without getting bogged down in complex pattern matching.
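Whatever tool produces a pattern, it is worth checking it against sample text before relying on it. The sketch below uses Python's standard `re` module; the pattern and the `find_dates` helper are hypothetical illustrations of what might come back for a prompt like "match a date such as 2024-03-15", and are not taken from Magic Regex Generator.

```python
import re

# Hypothetical generated pattern: four-digit year, month 01-12, day 01-31.
# It checks the shape only, so an impossible date such as 2024-02-31 passes.
ISO_DATE = re.compile(r"\b(\d{4})-(0[1-9]|1[0-2])-(0[1-9]|[12]\d|3[01])\b")


def find_dates(text: str) -> list[str]:
    """Return every substring of text that the pattern accepts."""
    return [match.group(0) for match in ISO_DATE.finditer(text)]


sample = "Shipped 2024-03-15, returned 2024-13-01, refunded 2024-04-02."
print(find_dates(sample))  # ['2024-03-15', '2024-04-02']
```

Month 13 is rejected because the month group only allows 01 through 12. Calendar validity would need a real date parser on top of the regex.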

Formula Wizard

Formula Wizard is an AI-powered software designed to assist users in writing Excel, Airtable, and Notion formulas effortlessly. By leveraging artificial intelligence, the application automates the process of formula creation, allowing users to save time and focus on more critical tasks. With features like automating tedious tasks, unlocking insights from data, and customizing templates, Formula Wizard streamlines the formula-writing process for various spreadsheet applications.

Skann AI

Skann AI is an advanced artificial intelligence tool designed to revolutionize document management and data extraction processes. The application leverages cutting-edge AI technology to automate the extraction of data from various documents, such as invoices, receipts, and contracts. Skann AI streamlines workflows, increases efficiency, and reduces manual errors by accurately extracting and organizing data in a fraction of the time it would take a human. With its intuitive interface and powerful features, Skann AI is the go-to solution for businesses looking to optimize their document processing workflows.

Automaited

Automaited is an AI application that offers Ada - an AI Agent for automating order processing. Ada handles orders from receipt to ERP entry, extracting, validating, and transferring data to ensure accuracy and efficiency. The application utilizes state-of-the-art AI technology to streamline order processing, saving time, reducing errors, and enabling users to focus on customer satisfaction. With seamless automation, Ada integrates into ERP systems, making order processing effortless, quick, and cost-efficient. Automaited provides tailored automations to make operational processes up to 70% more efficient, enhancing performance and reducing error rates.

TalkForm AI

TalkForm AI is an AI-powered form creation and filling tool that revolutionizes the traditional form-building process. With the ability to chat to create and chat to fill forms, TalkForm AI offers a seamless and efficient solution for creating and managing forms. The application leverages AI technology to automatically infer field types, validate, clean, structure, and fill form responses, ensuring data remains structured for easy analysis. TalkForm AI also provides custom validations, complicated conditional logic, and unlimited power to cater to diverse form creation needs.

UPTO3

UPTO3 is a decentralized event knowledge graph protocol that aims to provide consensus verification for Web3 events by turning them into NFTs. It allows users to mint and verify events, with rewards based on the results. The platform promotes transparency, open data, and unbiased analysis through economic incentives. UPTO3 will be built on Blast (L2) and offers features such as event minting as NFTs, permissionless access, and decentralized validation tasks.

Retraced

Retraced is a compliance platform designed for fashion and textile supply chains. It offers a comprehensive 360° solution to empower CSR teams in streamlining sustainability strategies, collaborating with suppliers in real-time, and meeting compliance requirements effectively. The platform enables digital connection with suppliers for efficient communication, traceability of products and materials, and fostering transparency for both internal and external stakeholders. Retraced aims to make the fashion industry more transparent and sustainable by providing innovative solutions for market leaders in the industry.

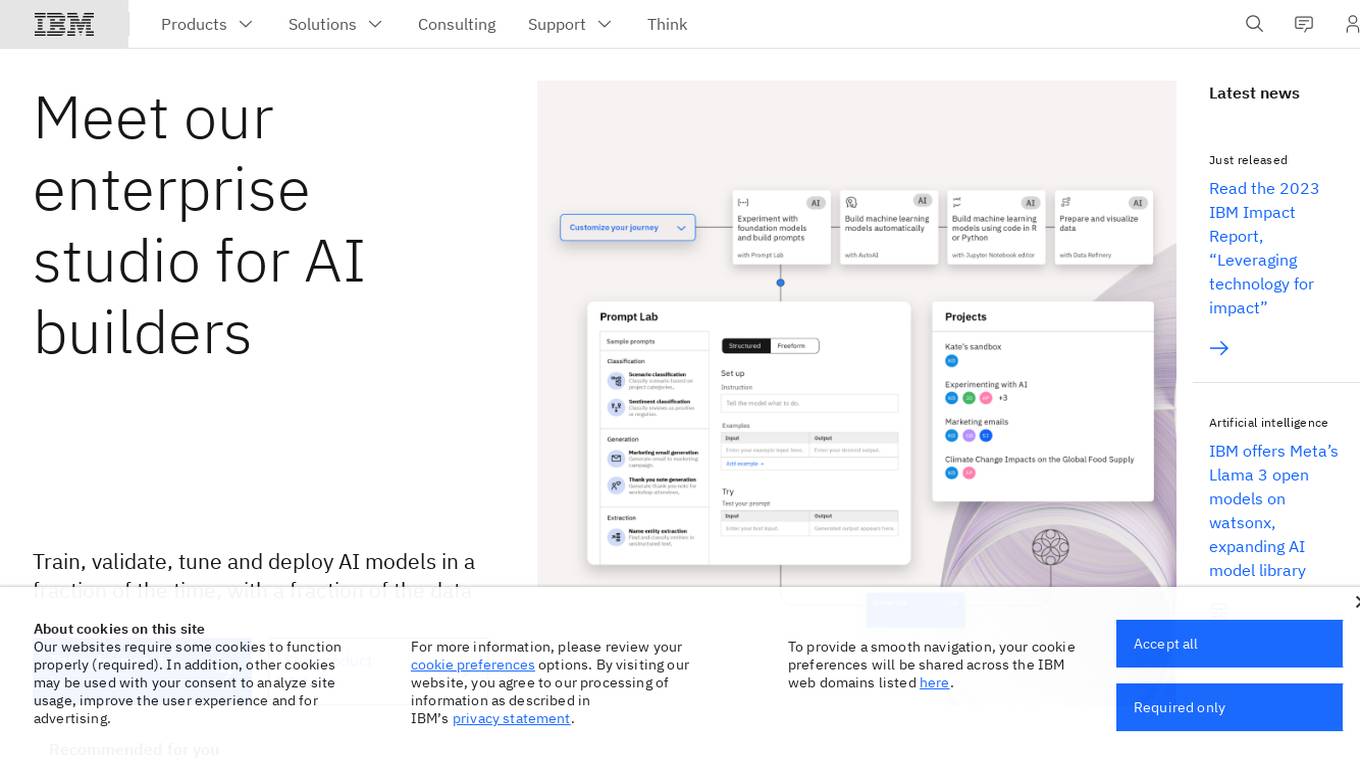

IBM Watsonx

IBM Watsonx is an enterprise studio for AI builders. It provides a platform to train, validate, tune, and deploy AI models quickly and efficiently. With Watsonx, users can access a library of pre-trained AI models, build their own models, and deploy them to the cloud or on-premises. Watsonx also offers a range of tools and services to help users manage and monitor their AI models.

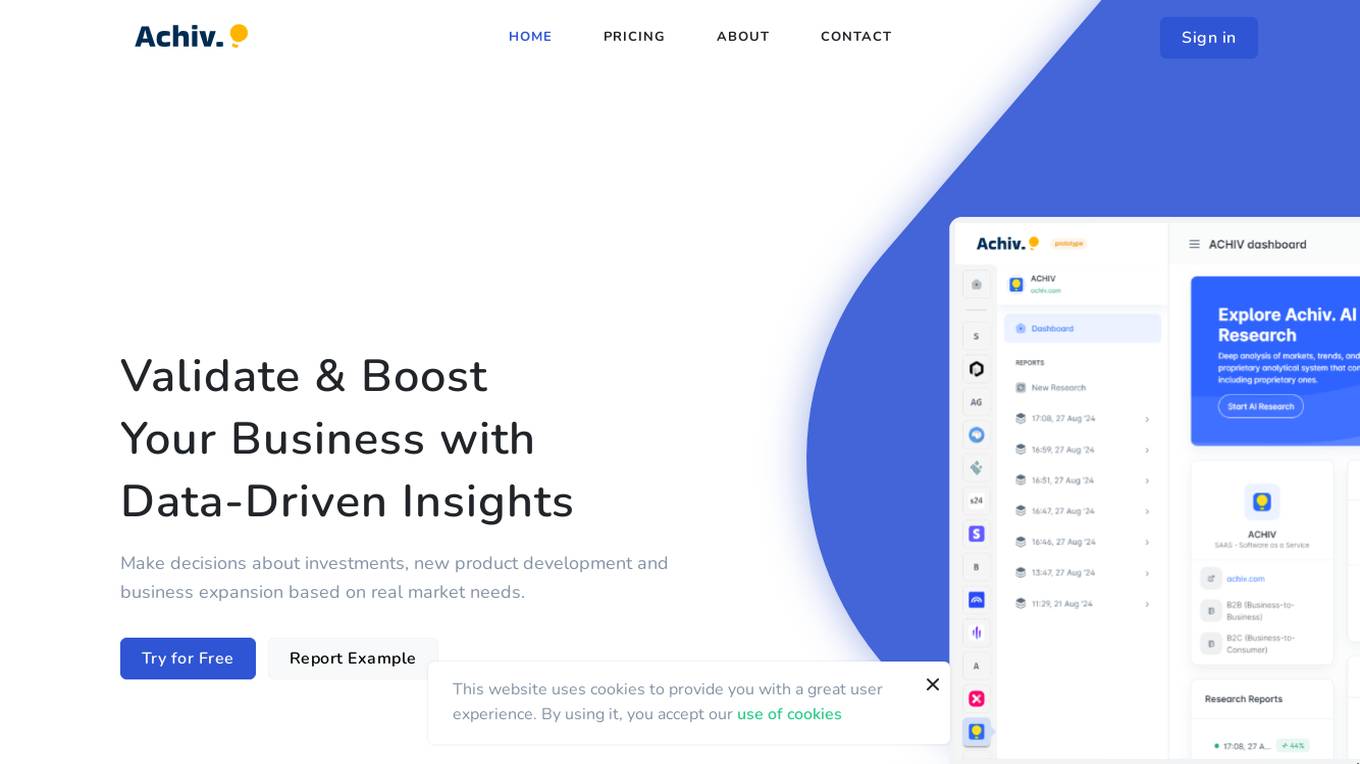

ACHIV

ACHIV is an AI tool for idea validation and market research. It helps businesses make informed decisions based on real market needs by providing data-driven insights. The tool streamlines the market validation process, allowing quick adaptation and refinement of product development strategies. ACHIV offers a revolutionary approach to data collection and preprocessing, along with proprietary AI models for smart analysis and predictive forecasting. It is designed to assist entrepreneurs in understanding market gaps, exploring competitors, and enhancing investment decisions with real-time data.

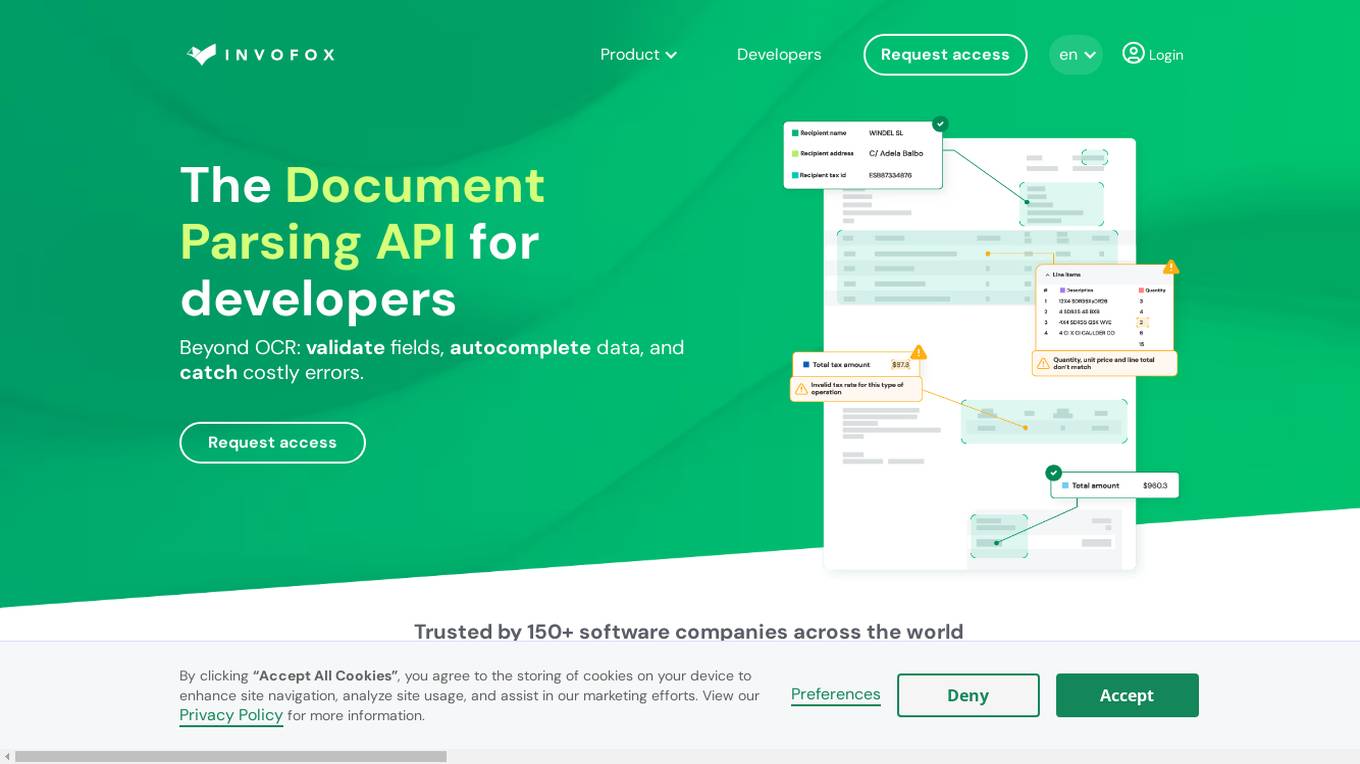

Invofox API

Invofox API is a Document Parsing API designed for developers to validate fields, autocomplete data, and catch errors beyond OCR. It turns unstructured documents into clean JSON using advanced AI models and proprietary algorithms. The API provides built-in schemas for major documents and supports custom formats, allowing users to parse any document with a single API call without templates or post-processing. Invofox is used for expense management, accounts payable, logistics & supply chain, HR automation, sustainability & consumption tracking, and custom document parsing.

Bifrost AI

Bifrost AI is a data generation engine designed for AI and robotics applications. It enables users to train and validate AI models faster by generating physically accurate synthetic datasets in 3D simulations, eliminating the need for real-world data. The platform offers pixel-perfect labels, scenario metadata, and a simulated 3D world to enhance AI understanding. Bifrost AI empowers users to create new scenarios and datasets rapidly, stress test AI perception, and improve model performance. It is built for teams at every stage of AI development, offering features like automated labeling, class imbalance correction, and performance enhancement.

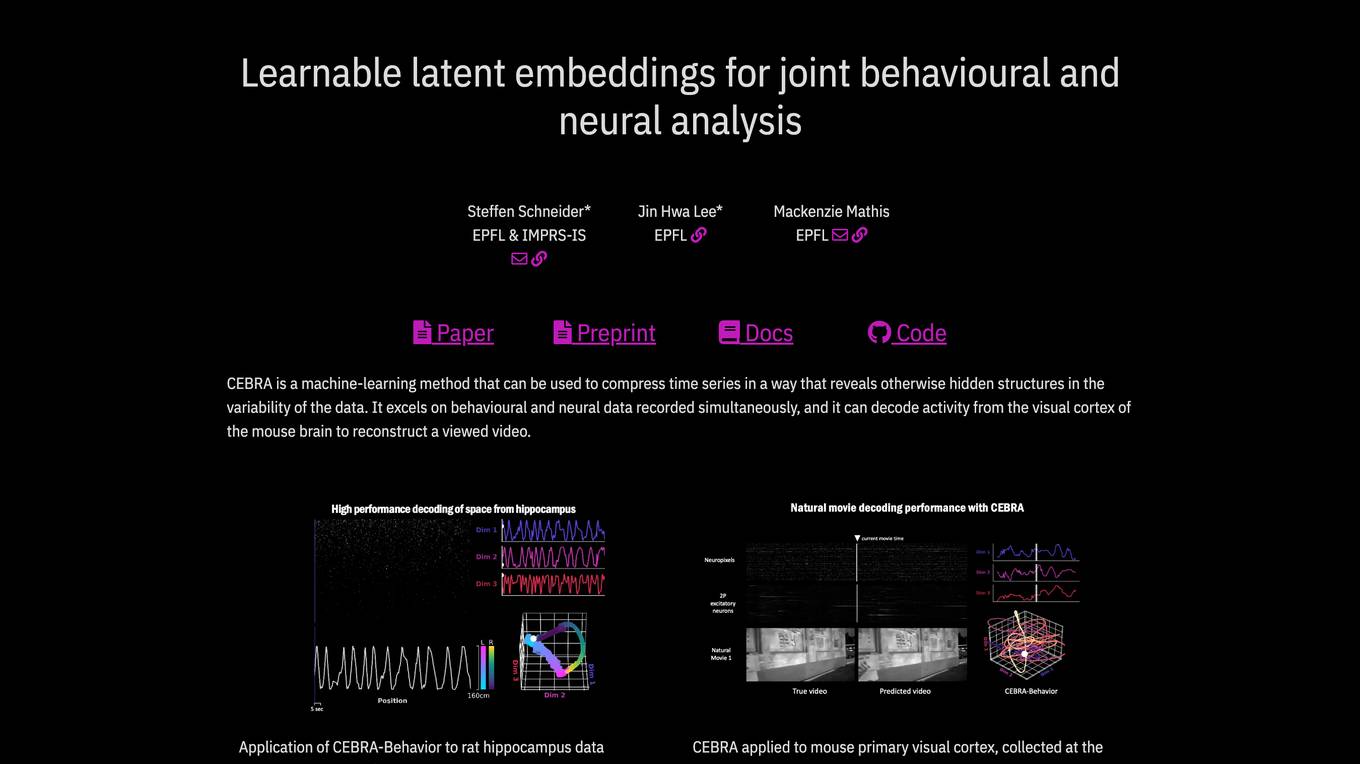

CEBRA

CEBRA is a self-supervised learning algorithm designed for obtaining interpretable embeddings of high-dimensional recordings using auxiliary variables. It excels in compressing time series data to reveal hidden structures, particularly in behavioral and neural data. The algorithm can decode neural activity, reconstruct viewed videos, decode trajectories, and determine position during navigation. CEBRA is a valuable tool for joint behavioral and neural analysis, providing consistent and high-performance latent spaces for hypothesis testing and label-free applications across various datasets and species.

Tonic.ai

Tonic.ai is a platform that allows users to build AI models on their unstructured data. It offers various products for software development and LLM development, including tools for de-identifying and subsetting structured data, scaling down data, handling semi-structured data, and managing ephemeral data environments. Tonic.ai focuses on standardizing, enriching, and protecting unstructured data, as well as validating RAG systems. The platform also provides integrations with relational databases, data lakes, NoSQL databases, flat files, and SaaS applications, ensuring secure data transformation for software and AI developers.

Gretel.ai

Gretel.ai is a synthetic data platform purpose-built for AI applications. It allows users to generate artificial, synthetic datasets with the same characteristics as real data, enabling the improvement of AI models without compromising privacy. The platform offers APIs for generating anonymized and safe synthetic data, training generative AI models, and validating models with quality and privacy scores. Users can deploy Gretel for enterprise use cases and run it on various cloud platforms or in their own environment.

NPI Lookup

NPI Lookup is an AI-powered platform that offers advanced search and validation services for National Provider Identifier (NPI) numbers of healthcare providers in the United States. The tool uses cutting-edge artificial intelligence technology, including Natural Language Processing (NLP) algorithms and GPT models, to provide comprehensive insights and answers related to NPI profiles. It allows users to search and validate NPI records of doctors, hospitals, and other healthcare providers using everyday language queries, ensuring accurate and up-to-date information from the NPPES NPI database.
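Independently of any lookup service, an NPI can be sanity-checked offline, because its tenth digit is a Luhn check digit computed over the number prefixed with 80840. The sketch below shows that check with the standard library only; the function name `is_valid_npi` is an illustrative choice, and passing the check only means the number is well formed, not that it exists in the NPPES registry.

```python
def is_valid_npi(npi: str) -> bool:
    """Check the 10-digit format and the Luhn check digit of an NPI."""
    if len(npi) != 10 or not (npi.isascii() and npi.isdigit()):
        return False
    # The checksum is computed as if the NPI carried the 80840 prefix.
    digits = [int(ch) for ch in "80840" + npi]
    total = 0
    # Luhn: walking right to left, double every second digit and
    # subtract 9 from any doubled value above 9.
    for index, digit in enumerate(reversed(digits)):
        if index % 2 == 1:
            digit *= 2
            if digit > 9:
                digit -= 9
        total += digit
    return total % 10 == 0


print(is_valid_npi("1234567893"))  # True
print(is_valid_npi("1234567890"))  # False
```

A check like this is a cheap first filter for typos before a registry query is made.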

11 - Open Source AI Tools

opendataeditor

The Open Data Editor (ODE) is a no-code application to explore, validate and publish data in a simple way. It is an open source project powered by the Frictionless Framework. The ODE is currently available for download and testing in beta.

instructor-js

Instructor is a TypeScript library for structured extraction, powered by LLMs and designed for simplicity, transparency, and control, with a user-centric design. Whether you're a seasoned developer or just starting out, you'll find Instructor's approach intuitive and steerable.

aiohttp-pydantic

aiohttp-pydantic provides an aiohttp view that parses and validates requests. You use function annotations to declare what your HTTP verb handlers expect, and aiohttp-pydantic parses the HTTP request, validates the data, and injects the parameters you want. It validates the query string, request body, URL path, and HTTP headers, and can generate an OpenAPI Specification.

island-ai

island-ai is a TypeScript toolkit tailored for developers engaging with structured outputs from Large Language Models. It offers streamlined processes for handling, parsing, streaming, and leveraging AI-generated data across various applications. The toolkit includes packages like zod-stream for interfacing with LLM streams, stream-hooks for integrating streaming JSON data into React applications, and schema-stream for JSON streaming parsing based on Zod schemas. Additionally, related packages like @instructor-ai/instructor-js focus on data validation and retry mechanisms, enhancing the reliability of data processing workflows.

instructor_ex

Instructor is a tool designed to structure outputs from OpenAI and other OSS LLMs by coaxing them to return JSON that maps to a provided Ecto schema. It allows for defining validation logic to guide LLMs in making corrections, and supports automatic retries. Instructor is primarily used with the OpenAI API but can be extended to work with other platforms. Usage comes down to creating an Ecto schema, defining a validation function, and calling chat_completion with instructions for the LLM. It also offers features like max_retries to fix validation errors iteratively.
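The validate-and-retry idea can be sketched in a few lines. This is a generic Python illustration, not instructor_ex's Elixir API: `validate_person`, `extract_with_retries`, and `fake_llm` are hypothetical names, and the model is replaced by a stub that returns canned replies so the example runs without network access.

```python
import json
from typing import Callable


def validate_person(data: dict) -> list[str]:
    """Return human-readable problems; an empty list means the data is valid."""
    errors = []
    if not isinstance(data.get("name"), str) or not data["name"].strip():
        errors.append("name must be a non-empty string")
    age = data.get("age")
    if not isinstance(age, int) or isinstance(age, bool) or not 0 <= age <= 150:
        errors.append("age must be an integer between 0 and 150")
    return errors


def extract_with_retries(ask: Callable[[str], str], prompt: str,
                         max_retries: int = 2) -> dict:
    """Ask for JSON, validate it, and re-ask with the errors on failure."""
    message = prompt
    errors: list[str] = []
    for _ in range(max_retries + 1):
        reply = ask(message)
        try:
            data = json.loads(reply)
        except json.JSONDecodeError as exc:
            errors = [f"invalid JSON: {exc.msg}"]
        else:
            if isinstance(data, dict):
                errors = validate_person(data)
            else:
                errors = ["reply must be a JSON object"]
        if not errors:
            return data
        # Feed the validation errors back so the next attempt can correct them.
        message = (f"{prompt}\nYour previous reply was rejected: "
                   f"{'; '.join(errors)}. Please correct it.")
    raise ValueError(f"no valid reply after {max_retries} retries: {errors}")


def fake_llm(replies: list[str]) -> Callable[[str], str]:
    """Stand-in for a model call: returns the canned replies in order."""
    remaining = iter(replies)
    return lambda message: next(remaining)


ask = fake_llm(['{"name": "Ada", "age": "36"}', '{"name": "Ada", "age": 36}'])
print(extract_with_retries(ask, "Extract the person as JSON."))
```

The first canned reply fails because the age is a string; the second passes, so the call succeeds on its first retry.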

atomic_agents

Atomic Agents is a modular and extensible framework designed for creating powerful applications. It follows the principles of Atomic Design, emphasizing small and single-purpose components. Leveraging Pydantic for data validation and serialization, the framework offers a set of tools and agents that can be combined to build AI applications. It depends on the Instructor package and supports various APIs like OpenAI, Cohere, Anthropic, and Gemini. Atomic Agents is suitable for developers looking to create AI agents with a focus on modularity and flexibility.

atomic-agents

The Atomic Agents framework is a modular and extensible tool designed for creating powerful applications. It leverages Pydantic for data validation and serialization. The framework follows the principles of Atomic Design, providing small and single-purpose components that can be combined. It integrates with Instructor for AI agent architecture and supports various APIs like Cohere, Anthropic, and Gemini. The tool includes documentation, examples, and testing features to ensure smooth development and usage.

docetl

DocETL is a tool for creating and executing data processing pipelines, especially suited for complex document processing tasks. It offers a low-code, declarative YAML interface to define LLM-powered operations on complex data. It is well suited to maximizing correctness and output quality in semantic processing over a collection of data: representing complex tasks via map-reduce, maximizing LLM accuracy, handling long documents, and automating task retries based on validation criteria.

airflow-provider-great-expectations

The 'airflow-provider-great-expectations' repository contains a set of Airflow operators for Great Expectations, a Python library used for testing and validating data. The operators enable users to run Great Expectations validations and checks within Apache Airflow workflows. The package requires Airflow 2.1.0+ and Great Expectations >=v0.13.9. It provides functionalities to work with Great Expectations V3 Batch Request API, Checkpoints, and allows passing kwargs to Checkpoints at runtime. The repository includes modules for a base operator and examples of DAGs with sample tasks demonstrating the operator's functionality.

aiscript

AIScript is a unique programming language and web framework written in Rust, designed to help developers effortlessly build AI applications. It combines the strengths of Python, JavaScript, and Rust to create an intuitive, powerful, and easy-to-use tool. The language features first-class functions, built-in AI primitives, dynamic typing with static type checking, data validation, error handling inspired by Rust, a rich standard library, and automatic garbage collection. The web framework offers an elegant route DSL, automatic parameter validation, OpenAPI schema generation, database modules, authentication capabilities, and more. AIScript excels in AI-powered APIs, prototyping, microservices, data validation, and building internal tools.

jambo

Jambo is a Python package that automatically converts JSON Schema definitions into Pydantic models. It streamlines schema validation and enforces type safety using Pydantic's validation features. The tool supports various JSON Schema features like strings, integers, floats, booleans, arrays, nested objects, and more. It enforces constraints such as minLength, maxLength, pattern, minimum, maximum, uniqueItems, and provides a zero-config approach for generating models. Jambo is designed to simplify the process of dynamically generating Pydantic models for AI frameworks.
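To make the listed constraint keywords concrete, here is a hand-rolled sketch of what minLength, maxLength, pattern, minimum, maximum, and uniqueItems each require of a value. It uses only the standard library and does not use Jambo or Pydantic; the `check` function is a hypothetical helper, and its uniqueness test is a simplification that compares serialized items.

```python
import json
import re


def check(value, schema: dict) -> list[str]:
    """Return violations of a few JSON Schema keywords for a single value."""
    errors = []
    if isinstance(value, str):
        if "minLength" in schema and len(value) < schema["minLength"]:
            errors.append(f"shorter than minLength {schema['minLength']}")
        if "maxLength" in schema and len(value) > schema["maxLength"]:
            errors.append(f"longer than maxLength {schema['maxLength']}")
        # JSON Schema patterns are unanchored, hence search rather than fullmatch.
        if "pattern" in schema and re.search(schema["pattern"], value) is None:
            errors.append(f"does not match pattern {schema['pattern']}")
    elif isinstance(value, (int, float)) and not isinstance(value, bool):
        if "minimum" in schema and value < schema["minimum"]:
            errors.append(f"less than minimum {schema['minimum']}")
        if "maximum" in schema and value > schema["maximum"]:
            errors.append(f"greater than maximum {schema['maximum']}")
    elif isinstance(value, list):
        if schema.get("uniqueItems"):
            # Simplification: items are compared by their serialized form.
            seen = {json.dumps(item, sort_keys=True) for item in value}
            if len(seen) != len(value):
                errors.append("items are not unique")
    return errors


username = {"type": "string", "minLength": 3, "maxLength": 12,
            "pattern": "^[a-z0-9_]+$"}
print(check("ab", username))        # ['shorter than minLength 3']
print(check("ada_lovelace", username))  # []
print(check(200, {"minimum": 0, "maximum": 150}))  # ['greater than maximum 150']
print(check([1, 2, 2], {"uniqueItems": True}))     # ['items are not unique']
```

A schema-to-model generator saves writing checks like these by hand for every field.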

20 - OpenAI Gpts

DataQualityGuardian

A GPT-powered assistant specializing in data validation and quality checks for various datasets.

Regex Wizard

Generates and explains regex patterns from your description; it supports English and Chinese.

RegExp Builder

This GPT lets you build PCRE regular expressions (for use with the RegExp constructor).

JSON Outputter

Takes all input into consideration and creates a JSON-appropriate response. Also useful for creating templates.